2026-02-24 20:09:04

Last spring I wrote a blog post about our ongoing work in the background to gradually simplify the curl source code over time.

This is a follow-up: a status update of what we have done since then and what comes next.

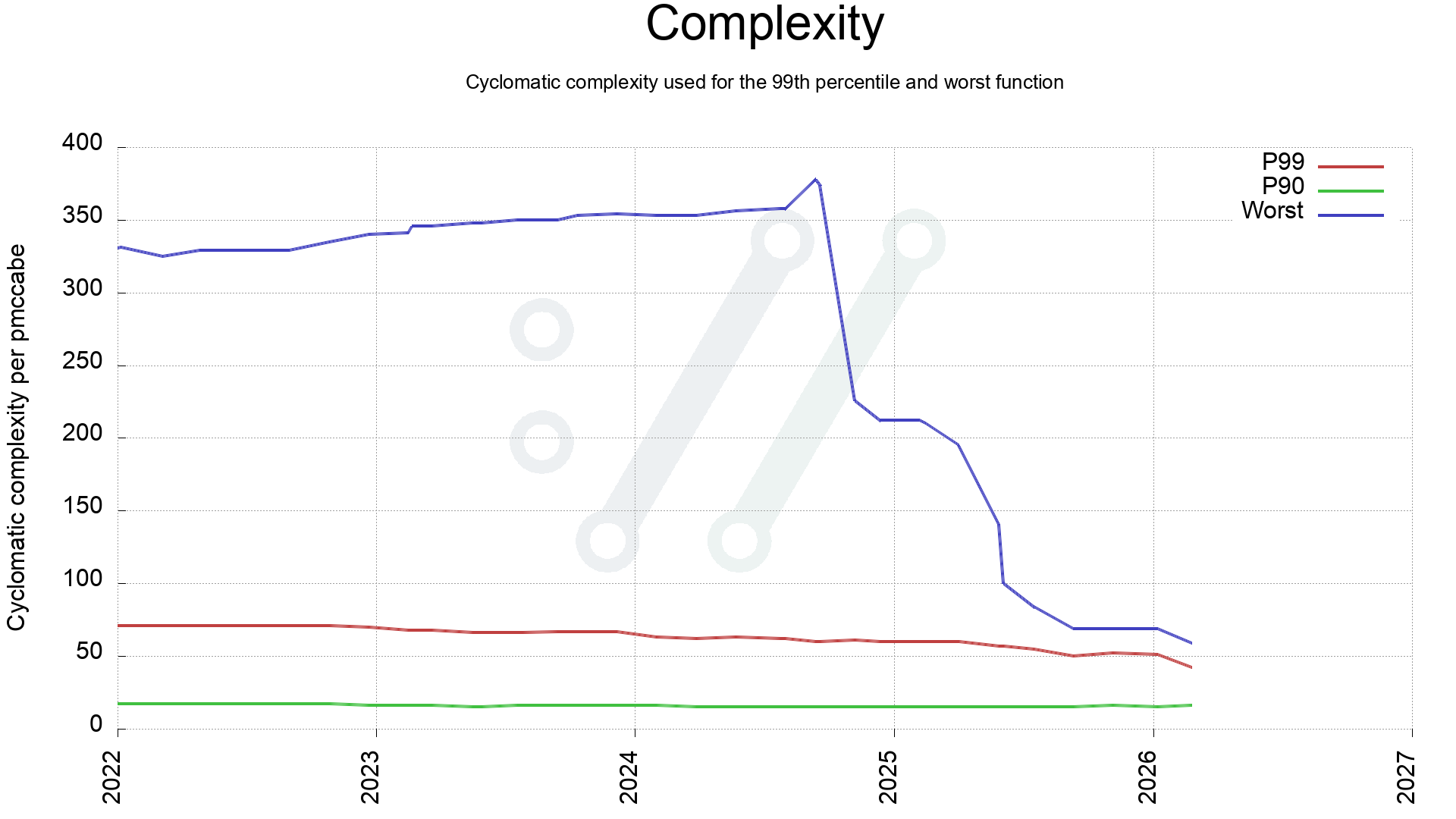

In May 2025 I had just managed to get the worst function in curl down to complexity 100, and the average score of all curl production source code (179,000 lines of code) was at 20.8. We had 15 functions still scoring over 70.

Almost ten months later we have reduced the most complex function in curl from 100 to 59, which means that we have simplified a vast number of functions along the way. We did it by splitting them up into smaller pieces and by refactoring logic. All of it reviewed by humans, verified by lots of test cases, and checked by analyzers and fuzzers.

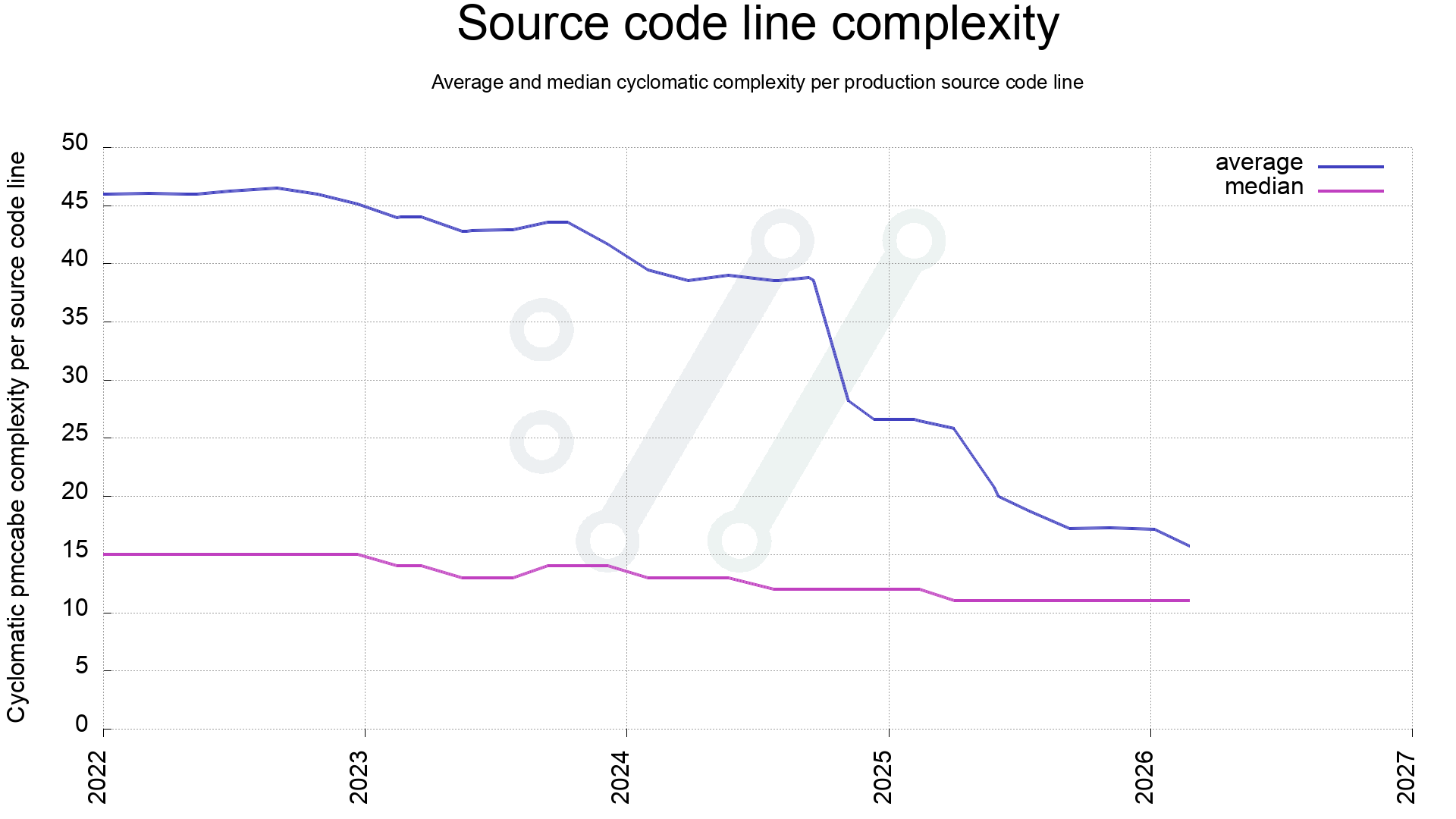

The current 171,000 lines of code now have an average complexity of 15.9.

The complexity score in this case is just the cold and raw metric reported by the pmccabe tool. I decided to use that as the absolute truth, even if of course a human could at times debate and argue about its claims. It makes it easier to just obey the tool, and it is quite frankly doing a decent job at this so it’s not a problem.

In almost all cases the main problem with complex functions is that they do a lot of things in a single function – too many – where the functionality performed could or should rather be split into several smaller sub functions. In almost every case it is also immediately obvious that when splitting a function into two, three or more sub functions with smaller and more specific scopes, the code gets easier to understand and each smaller function is subsequently easier to debug and improve.

I don’t know how far we can take the simplification and what the ideal average complexity score of the curl code base might be. At some point it becomes counter-effective, and making functions even smaller then just makes it harder to follow code flows and absorb the proper context into your head.

To illustrate our simplification journey, I decided to render graphs with a date axis starting at 2022-01-01 and ending today. Slightly over four years, representing a little under 10,000 git commits.

First, a look at the complexity of the worst scored function in curl production code over the last four years, compared with P90 and P99.

Identifying the worst function might not say too much about the code in general, so another check is to see how the average complexity has changed. This is calculated like this:

For every function, add its function-score × function-length to a total complexity score, and in the end divide that total complexity score by the total number of lines used for all functions. Also do the same for a median score.
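The calculation described above can be sketched in a few lines of Python. This is only an illustration, not the script used to render the graphs: the function names and the sample numbers are made up, and the line-weighted median is one possible reading of "do the same for a median score".

```python
def weighted_average(functions):
    """Line-weighted average complexity.

    `functions` is a list of (score, lines) pairs, one per function.
    """
    total_score = sum(score * lines for score, lines in functions)
    total_lines = sum(lines for _, lines in functions)
    if total_lines == 0:
        raise ValueError("no lines to average over")
    return total_score / total_lines


def weighted_median(functions):
    """Line-weighted median: the score of the function that holds
    the middle line when all functions are sorted by score.
    (An assumed interpretation, not confirmed by the post.)"""
    ordered = sorted(functions)
    half = sum(lines for _, lines in ordered) / 2
    seen = 0
    for score, lines in ordered:
        seen += lines
        if seen >= half:
            return score
    raise ValueError("no functions given")


# Hypothetical sample: (complexity score, length in lines)
sample = [(10, 100), (50, 20), (2, 80)]
average = weighted_average(sample)
median = weighted_median(sample)
```

Weighting by length means a long, complex function pulls the average up more than a short one with the same score.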

When 2022 started, the average was about 46 and as can be seen, it has been dwindling ever since, with a few steep drops when we have merged dedicated improvement work.

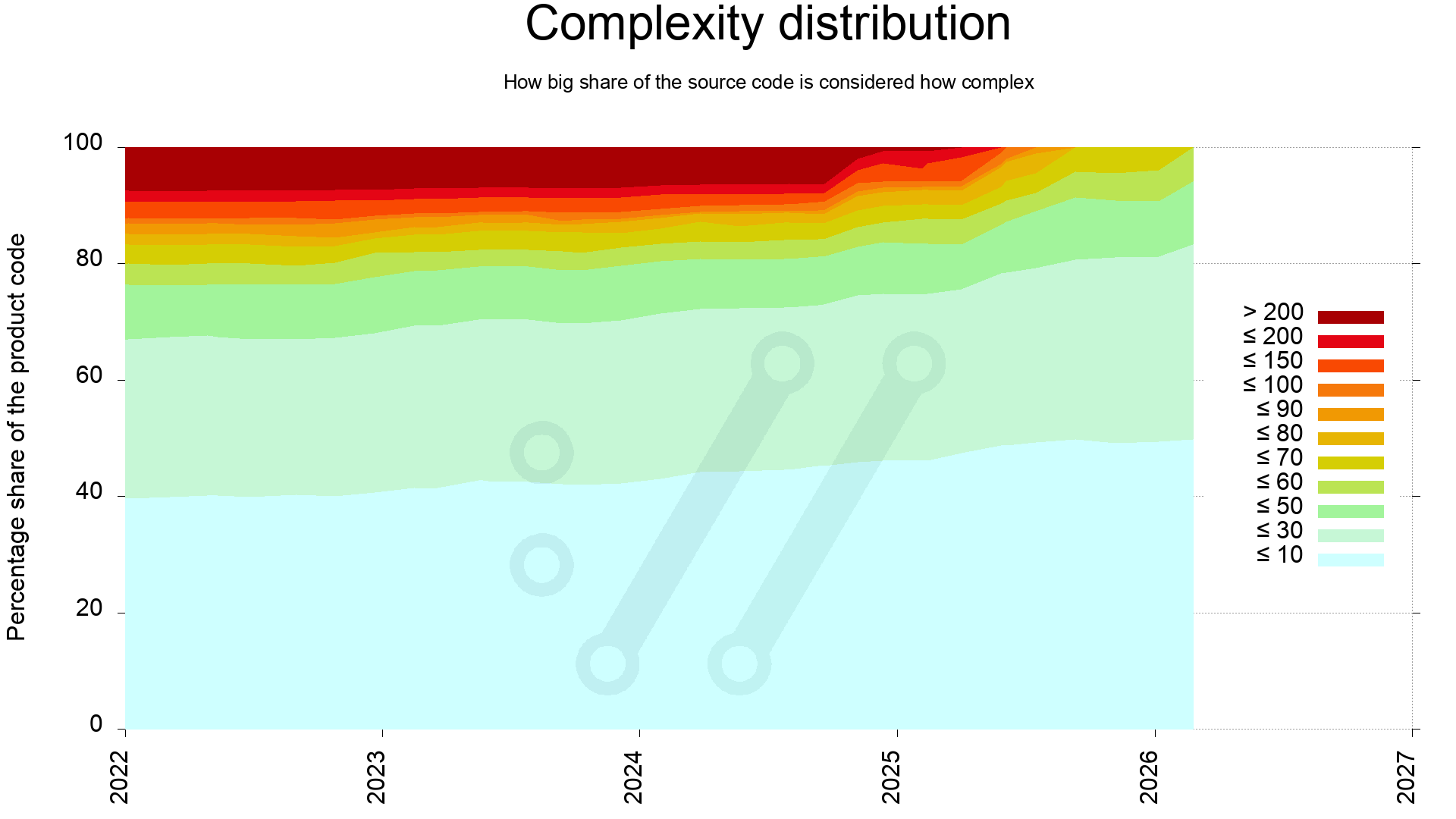

One way to complement the average and median lines, and get a better picture of the state, is to investigate the complexity distribution throughout the source code.

This reveals that the most complex quarter of the code in 2022 has since been simplified. Back then 25% of the code scored above 60, and now all of the code is below 60.

It also shows that during 2025 we managed to clean up all the dark functions, meaning the end of 100+ complexity functions. Never to return. That is the plan at least.
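A statement like "25% of the code scored above 60" can be computed as a share of lines rather than a share of functions. A minimal sketch, assuming the same kind of (score, lines) pairs as input; the function name and the sample numbers are hypothetical:

```python
def share_above(functions, threshold):
    """Fraction of all lines that live in functions scoring above `threshold`."""
    functions = list(functions)
    total = sum(lines for _, lines in functions)
    if total == 0:
        raise ValueError("no lines to measure")
    above = sum(lines for score, lines in functions if score > threshold)
    return above / total


# Hypothetical sample: (complexity score, length in lines)
sample = [(75, 50), (30, 100), (8, 50)]
quarter = share_above(sample, 60)    # 50 of 200 lines
nothing = share_above(sample, 100)   # no function scores above 100
```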

We don’t really know. We believe less complex code is generally good for security and code readability, but it is probably still too early for us to be able to actually measure any particular positive outcome of this work (apart from fancy graphs). Also, there are many more ways to judge code than by this complexity score alone. Like having sensible APIs both internal and external and making sure that they are properly and correctly documented etc. The fact that they all interact together and they all keep changing, makes it really hard to isolate a single factor like complexity and say that changing this alone is what makes an impact.

Additionally: maybe the refactor itself, and the attention given to the functions while doing it, either fixes problems or introduces new ones. That would then not actually be because of the change in complexity, but merely the result of eyes being on that code and changing it right then.

Maybe we just need to allow several more years to pass before any change from this can be measured?

2026-02-03 19:05:45

The title of my ending keynote at FOSDEM February 1, 2026.

As the last talk of the conference, at 17:00 on the Sunday lots of people had already left, and presumably a lot of the remaining people were quite tired and ready to call it a day.

Still, the 1500 seats in Janson got occupied and there was even a group of more people outside wanting to get in that had to be refused entry.

Thanks to the awesome FOSDEM video team, the recording was made available this quickly after the presentation.

You can also get the video off FOSDEM servers.

2026-02-03 00:12:24

In January 2025 I received the European Open Source Achievement Award. The physical manifestation of that prize was a trophy made of translucent acrylic (or something similar). The blog post I link to above has a short video where I show it off.

In the year that has passed since, we have established an organization for how to do the awards going forward in the European Open Source Academy, and we have arranged the creation of actual medals for the awardees.

That was the medal we gave the award winners last week at the award ceremony where I handed Greg his prize.

I was however not prepared for it, but as a direct consequence I was handed a medal this year, in recognition of the award I got last year, because now there is a medal. A retroactive medal if you wish. It felt almost like getting the award again. An honor.

The medal is made in a shiny metal, roughly 50mm in diameter. In the middle of it is a modern version (with details inspired by PCB looks) of the Yggdrasil tree from old Norse mythology – the “World Tree”. A source of life, a sacred meeting place for gods.

In a circle around the tree are twelve stars, to visualize the EU and European connection.

On the backside, the year and the name are engraved above an EU flag, and the same circle of twelve stars is used there as a margin too, like on the front side.

The medal has a blue and white ribbon, to enable it to be draped over the head and hung from the neck.

The box is a sturdy thing in a dark blue velvet-like covering with European Open Source Academy printed on it next to the academy’s logo. The same motif is also on the inside of the top part of the box.

I do feel overwhelmed and I acknowledge that I have received many medals by now. I still want to document them and show them in detail to you, dear reader. To show appreciation; not to boast.

2026-01-30 19:32:51

I had the honor and pleasure to hand over this prize to its first real laureate during the award gala on Thursday evening in Brussels, Belgium.

This annual award ceremony is one of the primary missions for the European Open Source Academy, of which I have been the president since last year.

As an academy, we hand out awards and recognition to multiple excellent individuals who help make Europe the home of excellent Open Source. Fellow esteemed academy members joined me at this joyful event to perform these delightful duties.

As I stood on the stage, after a brief video about Greg was shown, I introduced Greg as this year’s worthy laureate. I have included those words below. Congratulations again Greg. We are lucky to have you.

There are tens of millions of open source projects in the world, and there are millions of open source maintainers. Many more would count themselves as at least occasional open source developers. These are the quiet builders of Europe’s digital world.

When we work on open source projects, we may spend most of our waking hours deep down in the weeds of code, build systems, discussing solutions, or tearing our hair out because we can’t figure out why something happens the way it does, as we would prefer it didn’t.

Open source projects can work a little like worlds on their own. You live there, you work there, you debate with the other humans who similarly spend their time on that project. You may not notice, think, or even care much about other projects that similarly have a set of dedicated people involved. And that is fine.

Working deep in the trenches this way makes you focus on your world and maybe remain unaware and oblivious to champions in other projects. The heroes who make things work in areas that need to work for our lives to operate as smoothly as they, quite frankly, usually do.

Greg Kroah-Hartman, however, our laureate of the Prize for Excellence in Open Source 2026, is a person whose work does get noticed across projects.

Our recognition of Greg honors his leading work on the Linux kernel and in the Linux community, particularly through his work on the stable branch of Linux. Greg serves as the stable kernel maintainer for Linux, a role of extraordinary importance to the entire computing world. While others push the boundaries of what Linux can do, Greg ensures that what already exists continues to work reliably. He issues weekly updates containing critical bug fixes and security patches, maintaining multiple long-term support versions simultaneously. This is work that directly protects billions of devices worldwide.

It’s impossible to overstate the importance of the work Greg has done on Linux. In software, innovation grabs headlines, but stability saves lives and livelihoods. Every Android phone, every web server, every critical system running Linux depends on Greg’s meticulous work. He ensures that when hospitals, banks, governments, and individuals rely on Linux, it doesn’t fail them. His work represents the highest form of service: unglamorous, relentless, and essential.

Without maintainers like Greg, the digital infrastructure of our world would crumble. He is, quite literally, one of the people keeping the digital infrastructure we all depend on running.

As a fellow open source maintainer, Greg and I have worked together in the open source security context. Through my interactions with him and people who know him, I learned a few things:

An American by origin, Greg now calls Europe his home, having lived in the Netherlands for many years. While on this side of the pond, he has taken on an important leadership role in safeguarding and advocating for the interests of the open source community. This is most evident through his work on the Cyber Resilience Act, through which he has educated and interacted with countless open source contributors and advocates whose work is affected by this legislation.

We — if I may be so bold — the Open Source community in Europe — and yes, the whole world, in fact — appreciate your work and your excellence. Thank you, Greg. Please come on stage and collect your award.

Here is the entire ceremony, from start to finish.

2026-01-28 17:44:51

We are doing another curl + distro online meeting this spring in what now has become an established annual tradition. A two-hour discussion, meeting, workshop for curl developers and curl distro maintainers.

2026 curl distro meeting details

The objective for these meetings is simply to make curl better in distros. To make distros do better curl. To improve curl in all and every way we think we can, together.

A part of this process is to get to see the names and faces of the people involved and to grease the machine to improve cross-distro collaboration on curl related topics.

Anyone who feels this is a subject they care about is welcome to join. We aim for the widest possible definition of distro and we don’t attempt to define the term.

The 2026 version of this meeting is planned to take place in the early evening European time, morning west coast US time, in the hope that this covers a large enough number of curl-interested people.

The plan is to do this on March 26, and all the details, planning and discussion items are kept on the dedicated wiki page for the event.

Please add your own discussion topics that you want to know or talk about, and if you feel inclined, add yourself as an intended participant. Feel free to help make this invite reach the proper people.

See you on March 26!

2026-01-27 07:01:39

We introduced curl’s -J option, also known as --remote-header-name, back in February 2010. A decent number of years ago.

The option is used in combination with -O (--remote-name) when downloading data from an HTTP(S) server and instructs curl to use the filename in the incoming Content-Disposition: header when saving the content, instead of the filename of the URL passed on the command line (if provided). That header would later be explained further in RFC 6266.

The idea is that for some URLs the server can provide a more suitable target filename than what the URL contains from the beginning. Like when you do a command similar to:

curl -O -J https://example.com/download?id=6347d

Without -J, the content would be saved in a target output file called ‘download’, since curl strips off the query part.

With -J, curl parses the server’s response header that contains a better filename; in the example below, fun.jpg.

Content-Disposition: filename="fun.jpg";

The approach described above works pretty well, but has several limitations. An obvious one: if the site does not provide a Content-Disposition header and instead only redirects the client to a new URL to download from, curl does not pick up the new name but keeps using the one from the originally provided URL.

This is not what most users want and not what they expect. As a consequence, we have had this potential improvement mentioned in the TODO file for many years. Until today.

We have now merged a change that makes curl with -J pick up the filename from Location: headers, and it uses that filename if there is no Content-Disposition header.

This means that if you now rerun a similar command line as mentioned above, but this one is allowed to follow redirects:

curl -L -O -J https://example.com/download?id=6347d

And that site redirects curl to the actual download URL for the tarball you want to download:

HTTP/1 301 redirect

Location: https://example.org/release.tar.gz

… curl now saves the contents of that transfer in a local file called release.tar.gz.

If there is both a redirect and a Content-Disposition header, the latter takes precedence.

Since this gets the filename from the server’s response, you give up control of the name to someone else. This can of course potentially mess things up for you. curl ignores all provided directory names and only uses the filename part.
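The precedence rules described above can be summarized as a small Python sketch. This is not curl's implementation (curl is written in C and handles more cases); the function names are hypothetical and the header parsing is deliberately simplistic.

```python
import posixpath
import re
from urllib.parse import urlsplit


def name_from_url(url):
    """Last path segment of a URL; the query part is ignored."""
    return posixpath.basename(urlsplit(url).path) or None


def name_from_content_disposition(value):
    """Extract a plain filename= parameter, dropping any directory part.
    The filename*= form is intentionally not handled here."""
    match = re.search(r'(?<![\w*])filename\s*=\s*"?([^";]+)', value, re.IGNORECASE)
    if not match:
        return None
    name = match.group(1).strip().replace("\\", "/")
    return posixpath.basename(name) or None


def choose_filename(url, location=None, content_disposition=None):
    """Content-Disposition wins over Location, which wins over the original URL."""
    if content_disposition:
        name = name_from_content_disposition(content_disposition)
        if name:
            return name
    if location:
        name = name_from_url(location)
        if name:
            return name
    return name_from_url(url)


url = "https://example.com/download?id=6347d"
redirect = "https://example.org/release.tar.gz"

plain = choose_filename(url)
redirected = choose_filename(url, location=redirect)
both = choose_filename(url, location=redirect,
                       content_disposition='filename="fun.jpg";')
stripped = choose_filename(url, content_disposition='filename="../../evil.sh"')
```

The last call shows the directory stripping: only the final filename component of a server-provided name is kept.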

If you want to save the download in a dedicated directory other than the current one, use --output-dir.

As an additional precaution, using -J implies that curl refuses to clobber (overwrite) any existing file with the same filename, unless you also use --clobber.

Since the final name used for storing the data is selected based on the contents of a header passed from the server, using this option in a scripting scenario introduces a challenge: what filename did curl actually use?

A user can easily extract this information with curl’s -w option. Like this:

curl -w '%{filename_effective}' -O -J -L https://example.com/download?id=6347d

This command line outputs the used filename to stdout.

Tweak the command line further to instead direct that name to stderr or to a specific file etc. Whatever you think works.
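In a script, that name can be captured from curl's stdout. Below is a minimal Python sketch using long-form versions of the options discussed above, assuming curl is installed and in PATH. The helper names build_command and download are made up for this example.

```python
import subprocess


def build_command(url):
    """curl command line that saves the download and prints the filename used."""
    return [
        "curl",
        "--silent", "--show-error",   # no progress meter, but keep error messages
        "--location",                 # -L: follow redirects
        "--remote-name",              # -O: save to a file named by the remote
        "--remote-header-name",       # -J: let response headers pick the name
        "--write-out", "%{filename_effective}",
        url,
    ]


def download(url):
    """Run curl and return the filename it stored the data in."""
    result = subprocess.run(build_command(url), check=True,
                            capture_output=True, text=True)
    return result.stdout.strip()


command = build_command("https://example.com/download?id=6347d")
```

Since the body goes to a file, the only thing curl writes to stdout here is the --write-out text, so download() returns just the name.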

The content-disposition RFC mentioned above details a way to provide a filename encoded as UTF-8 using something like the below, which includes a U+20AC Euro sign:

Content-Disposition: filename*= UTF-8''%e2%82%ac%20rates

curl still does not support this filename* style of providing names. This limitation remains because curl cannot currently convert that provided name into a local filename using the provided characters – with certainty.
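To make the encoding concrete, here is a small Python sketch that decodes the value from the example header: a charset, an optional language tag and a percent-encoded name, separated by single quotes. This only shows what the server is saying; it is not something curl does, and the function name is hypothetical.

```python
from urllib.parse import unquote


def decode_ext_value(ext_value):
    """Decode a charset'language'percent-encoded value as used by filename*=."""
    charset, _language, encoded = ext_value.strip().split("'", 2)
    return unquote(encoded, encoding=charset, errors="strict")


name = decode_ext_value(" UTF-8''%e2%82%ac%20rates")
# name is now "€ rates": the Euro sign, a space and the word "rates"
```

Decoding is the easy part. Whether the resulting characters can be stored as a filename on the local system is a separate question, which is the uncertainty mentioned above.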

Room for future improvement!

This -J improvement ships in curl 8.19.0, coming in March 2026.