2026-02-13 08:00:00

Historically, writing code was slower than reviewing code.

It might not have felt that way, because code reviews sat in queues until someone got around to picking them up. But if you compare the actual acts themselves, creation was usually the more expensive part. In teams where people both wrote and reviewed code, it never felt like “we should probably program slower.”

So when more and more people tell me they no longer know what code is in their own codebase, I feel like something is very wrong here and it’s time to reflect.

Software engineers often believe that if we make the bathtub bigger, overflow disappears. It doesn’t. OpenClaw right now has north of 2,500 pull requests open. That’s a big bathtub.

Anyone who has worked with queues knows this: if input grows faster than throughput, you have an accumulating failure. At that point, backpressure and load shedding are the only things that keep the system in a state where it can still operate.
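To make that concrete, here is a minimal sketch of a bounded intake queue. The names `MAX_PENDING` and `submit` are made up for illustration and the capacity is arbitrary. Once the queue is full, new work is rejected rather than piled up, and the rejection is the backpressure signal to whoever is submitting.

```python
import queue

# Hypothetical capacity; in practice this would be tuned to review throughput.
MAX_PENDING = 3


def submit(pending, item):
    """Try to enqueue an item; shed it when the queue is already full."""
    try:
        pending.put_nowait(item)
        return True
    except queue.Full:
        # Load shedding: reject instead of letting the backlog grow unbounded.
        # The False return value doubles as backpressure for the producer.
        return False


pending = queue.Queue(maxsize=MAX_PENDING)
results = [submit(pending, f"pr-{n}") for n in range(5)]
accepted = sum(results)
shed = len(results) - accepted
```

The point is not the data structure but the contract: the producer learns immediately that the system is saturated instead of finding out weeks later that the work went stale.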

If you have ever been in a Starbucks overwhelmed by mobile orders, you know the feeling. The in-store experience breaks down. You no longer know how many orders are ahead of you. There is no clear line, no reliable wait estimate, and often no real cancellation path unless you escalate and make noise.

That is what many AI-adjacent open source projects feel like right now. And increasingly, that is what a lot of internal company projects feel like in “AI-first” engineering teams, and that’s not sustainable. You can’t triage, you can’t review, and many of the PRs cannot be merged after a certain point because they are too far out of date. And the creator might have lost the motivation to actually get it merged.

There is huge excitement about newfound delivery speed, but in private conversations, I keep hearing the same second sentence: people are also confused about how to keep up with the pace they themselves created.

Humanity has been here before. Many times over. We already talk about the Luddites a lot in the context of AI, but it’s interesting to see what led up to it. Mark Cartwright wrote a great article about the textile industry in Britain during the industrial revolution. At its core was a simple idea: whenever a bottleneck was removed, innovation happened downstream from that. Weaving sped up? Yarn became the constraint. Faster spinning? Fibre needed to be improved to support the new speeds until finally the demand for cotton went up and that had to be automated too. We saw the same thing in shipping that led to modern automated ports and containerization.

As software engineers we have been here too. Assembly did not scale to larger engineering teams, and we had to invent higher level languages. A lot of what programming languages and software development frameworks did was allow us to write code faster and to scale to larger code bases. What it did not do up to this point was take away the core skill of engineering.

While it’s definitely easier to write C than assembly, many of the core problems are the same. Memory latency still matters, physics are still our ultimate bottleneck, algorithmic complexity still makes or breaks software at scale.

When one part of the pipeline becomes dramatically faster, you need to throttle input. Pi is a great example of this. PRs are auto closed unless people are trusted. It takes OSS vacations. That’s one option: you just throttle the inflow. You push against your newfound powers until you can handle them.

But what if the speed continues to increase? What downstream of writing code do we have to speed up? Sure, the pull request review clearly turns into the bottleneck. But it cannot really be automated. If the machine writes the code, the machine better review the code at the same time. So what ultimately comes up for human review would already have passed the most critical possible review of the most capable machine. What else is in the way? If we continue with the fundamental belief that machines cannot be accountable, then humans need to be able to understand the output of the machine. And the machine will ship relentlessly. Support tickets of customers will go straight to machines to implement improvements and fixes, for other machines to review, for humans to rubber stamp in the morning.

A lot of this sounds both unappealing and reminiscent of the textile industry. The individual weaver no longer carried responsibility for a bad piece of cloth. If it was bad, it became the responsibility of the factory as a whole and it was just replaced outright. As we’re entering the phase of single-use plastic software, we might be moving the whole layer of responsibility elsewhere.

But to me it still feels different. Maybe that’s because my lowly brain can’t comprehend the change we are going through, and future generations will just laugh about our challenges. It feels different to me, because what I see taking place in some Open Source projects, in some companies and teams feels deeply wrong and unsustainable. Even Steve Yegge himself now casts doubt on the sustainability of the ever-increasing pace of code creation.

So what if we need to give in? What if we need to pave the way for this new type of engineering to become the standard? What affordances will we have to create to make it work? I for one do not know. I’m looking at this with fascination and bewilderment and trying to make sense of it.

Because it is not the final bottleneck. We will find ways to take responsibility for what we ship, because society will demand it. Non-sentient machines will never be able to carry responsibility, and it looks like we will need to deal with this problem before machines achieve this status. Regardless of how bizarre they appear to act already.

I too am the bottleneck now. But you know what? Two years ago, I too was the bottleneck. I was the bottleneck all along. The machine did not really change that. And for as long as I carry responsibilities and am accountable, this will remain true. If we manage to push accountability upwards, it might change, but so far, how that would happen is not clear.

2026-02-09 08:00:00

Last year I first started thinking about what the future of programming languages might look like now that agentic engineering is a growing thing. Initially I felt that the enormous corpus of pre-existing code would cement existing languages in place but now I’m starting to think the opposite is true. Here I want to outline my thinking on why we are going to see more new programming languages and why there is quite a bit of space for interesting innovation. And just in case someone wants to start building one, here are some of my thoughts on what we should aim for!

Does an agent perform dramatically better on a language that it has in its weights? Obviously yes. But there are less obvious factors that affect how good an agent is at programming in a language: how good the tooling around it is and how much churn there is.

Zig seems underrepresented in the weights (at least in the models I’ve used) and is also changing quickly. That combination is not optimal, but it’s still passable: you can program even in the upcoming Zig version if you point the agent at the right documentation. But it’s not great.

On the other hand, some languages are well represented in the weights but agents still don’t succeed as much because of tooling choices. Swift is a good example: in my experience the tooling around building a Mac or iOS application can be so painful that agents struggle to navigate it. Also not great.

So, just because it exists doesn’t mean the agent succeeds and just because it’s new also doesn’t mean that the agent is going to struggle. I’m convinced that you can build yourself up to a new language if you don’t want to depart everywhere all at once.

The biggest reason new languages might work is that the cost of coding is going down dramatically. The result is the breadth of an ecosystem matters less. I’m now routinely reaching for JavaScript in places where I would have used Python. Not because I love it or the ecosystem is better, but because the agent does much better with TypeScript.

The way to think about this: if important functionality is missing in my language of choice, I just point the agent at a library from a different language and have it build a port. As a concrete example, I recently built an Ethernet driver in JavaScript to implement the host controller for our sandbox. Implementations exist in Rust, C, and Go, but I wanted something pluggable and customizable in JavaScript. It was easier to have the agent reimplement it than to make the build system and distribution work against a native binding.

New languages will work if their value proposition is strong enough and they evolve with knowledge of how LLMs train. People will adopt them despite being underrepresented in the weights. And if they are designed to work well with agents, then they might be designed around familiar syntax that is already known to work well.

So why would we want a new language at all? The reason this is interesting to think about is that many of today’s languages were designed with the assumption that punching keys is laborious, so we traded certain things for brevity. As an example, many languages, particularly modern ones, lean heavily on type inference so that you don’t have to write out types. The downside is that you now need an LSP or the resulting compiler error messages to figure out what the type of an expression is. Agents struggle with this too, and it’s also frustrating in pull request review where complex operations can make it very hard to figure out what the types actually are. Fully dynamic languages are even worse in that regard.

The cost of writing code is going down, but because we are also producing more of it, understanding what the code does is becoming more important. We might actually want more code to be written if it means there is less ambiguity when we perform a review.
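A small illustration of that trade, sketched in Python with type hints. The `Order` type and both functions are invented for this example. The first version is shorter, but a reviewer has to trace `load_orders()` to learn what `totals` holds. The second spells the shapes out at the cost of a few more characters.

```python
from dataclasses import dataclass


@dataclass
class Order:
    customer: str
    total_cents: int


def load_orders() -> list[Order]:
    return [Order("ada", 1250), Order("bob", 400), Order("ada", 300)]


# Terse style: nothing on these lines says what `totals` maps from or to.
totals = {}
for o in load_orders():
    totals[o.customer] = totals.get(o.customer, 0) + o.total_cents


# Explicit style: the signature and the local annotation carry the types,
# so a diff of this function can be reviewed without tooling.
def totals_by_customer(orders: list[Order]) -> dict[str, int]:
    result: dict[str, int] = {}
    for order in orders:
        result[order.customer] = result.get(order.customer, 0) + order.total_cents
    return result
```

Both versions compute the same thing. The difference only shows up when someone, human or agent, reads the code without a language server attached.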

I also want to point out that we are heading towards a world where some code is never seen by a human and is only consumed by machines. Even in that case, we still want to give an indication to a user, who is potentially a non-programmer, about what is going on. We want to be able to explain to a user what the code will do without going into the details of how.

So the case for a new language comes down to: given the fundamental changes in who is programming and what the cost of code is, we should at least consider one.

It’s tricky to say what an agent wants because agents will lie to you and they are influenced by all the code they’ve seen. But one way to estimate how they are doing is to look at how many changes they have to perform on files and how many iterations they need for common tasks.

There are some things I’ve found that I think will be true for a while.

The language server protocol lets an IDE infer information about what’s under the cursor or what should be autocompleted based on semantic knowledge of the codebase. It’s a great system, but it comes at one specific cost that is tricky for agents: the LSP has to be running.

There are situations when an agent just won’t run the LSP — not because of technical limitations, but because it’s also lazy and will skip that step if it doesn’t have to. If you give it an example from documentation, there is no easy way to run the LSP because it’s a snippet that might not even be complete. If you point it at a GitHub repository and it pulls down individual files, it will just look at the code. It won’t set up an LSP for type information.

A language that doesn’t split into two separate experiences (with-LSP and without-LSP) will be beneficial to agents because it gives them one unified way of working across many more situations.

It pains me as a Python developer to say this, but whitespace-based indentation is a problem. The underlying token efficiency of getting whitespace right is tricky, and a language with significant whitespace is harder for an LLM to work with. This is particularly noticeable if you try to make an LLM do surgical changes without an assisted tool. Quite often they will intentionally disregard whitespace, add markers to enable or disable code and then rely on a code formatter to clean up indentation later.

On the other hand, braces that are not separated by whitespace can cause issues too. Depending on the tokenizer, runs of closing parentheses can end up split into tokens in surprising ways (a bit like the “strawberry” counting problem), and it’s easy for an LLM to get Lisp or Scheme wrong because it loses track of how many closing parentheses it has already emitted or is looking at. Fixable with future LLMs? Sure, but also something that was hard for humans to get right too without tooling.

Readers of this blog might know that I’m a huge believer in async locals and flow execution context — basically the ability to carry data through every invocation that might only be needed many layers down the call chain. Working at an observability company has really driven home the importance of this for me.

The challenge is that anything that flows implicitly might not be configured. Take for instance the current time. You might want to implicitly pass a timer to all functions. But what if a timer is not configured and all of a sudden a new dependency appears? Passing all of it explicitly is tedious for both humans and agents and bad shortcuts will be made.

One thing I’ve experimented with is having effect markers on functions that are added through a code formatting step. A function can declare that it needs the current time or the database, but if it doesn’t mark this explicitly, it’s essentially a linting warning that auto-formatting fixes. The LLM can start using something like the current time in a function and any existing caller gets the warning; formatting propagates the annotation.

This is nice because when the LLM builds a test, it can precisely mock out these side effects — it understands from the error messages what it has to supply.

For instance:

    fn issue(sub: UserId, scopes: []Scope) -> Token
        needs { time, rng }
    {
        return Token{
            sub,
            exp: time.now().add(24h),
            scopes,
        }
    }

    test "issue creates exp in the future" {
        using time = time.fixed("2026-02-06T23:00:00Z");
        using rng = rng.deterministic(seed: 1);

        let t = issue(user("u1"), ["read"]);
        assert(t.exp > time.now());
    }
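In languages that exist today, the closest approximation I know of is passing those dependencies explicitly. The following is a rough Python sketch of the same idea, not the hypothetical language above. The `nonce` field is my own addition so that the random source is actually used, and `now` and `rng` are simply keyword parameters.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable


@dataclass
class Token:
    sub: str
    exp: datetime
    scopes: list[str]
    nonce: int  # illustrative addition so the rng dependency does something


def issue(
    sub: str,
    scopes: list[str],
    *,
    now: Callable[[], datetime],
    rng: random.Random,
) -> Token:
    """Issue a token; the clock and randomness are explicit dependencies."""
    return Token(
        sub=sub,
        exp=now() + timedelta(hours=24),
        scopes=scopes,
        nonce=rng.getrandbits(32),
    )


# In a test, both side effects are pinned, so the result is reproducible.
fixed = datetime(2026, 2, 6, 23, 0, tzinfo=timezone.utc)
token = issue("u1", ["read"], now=lambda: fixed, rng=random.Random(1))
```

This is the tedious version: every caller has to thread `now` and `rng` through by hand, which is exactly the work the formatter-propagated markers are meant to take over.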

Agents struggle with exceptions; they are afraid of them. I’m not sure to what degree this is solvable with RL (Reinforcement Learning), but right now agents will try to catch everything they can, log it, and do a pretty poor recovery. Given how little information is actually available about error paths, that makes sense. Checked exceptions are one approach, but they propagate all the way up the call chain and don’t dramatically improve things. Even if they end up as hints where a linter tracks which errors can fly by, there are still many call sites that need adjusting. And like the auto-propagation proposed for context data, it might not be the right solution.

Maybe the right approach is to go more in on typed results, but that’s still tricky for composability without a type and object system that supports it.
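For readers who have not worked with typed results, this is roughly what the pattern looks like. It is a minimal sketch in Python with made-up `Ok`, `Err` and `parse_port` names. The error path is part of the return type, so the caller can see it at the call site instead of guessing which exceptions might fly by.

```python
from dataclasses import dataclass
from typing import Generic, TypeVar, Union

T = TypeVar("T")
E = TypeVar("E")


@dataclass(frozen=True)
class Ok(Generic[T]):
    value: T


@dataclass(frozen=True)
class Err(Generic[E]):
    error: E


def parse_port(text: str) -> Union[Ok[int], Err[str]]:
    """Parse a TCP port; failures are returned as values, not raised."""
    if not (text.isascii() and text.isdigit()):
        return Err(f"not a number: {text!r}")
    port = int(text)
    if not 1 <= port <= 65535:
        return Err(f"out of range: {port}")
    return Ok(port)


def describe(result: Union[Ok[int], Err[str]]) -> str:
    # The caller handles both cases explicitly; nothing is caught blindly.
    if isinstance(result, Ok):
        return f"listening on {result.value}"
    return f"config error: {result.error}"
```

The composability problem shows up as soon as two functions return different error types and you want to chain them, which is where Python, like many languages, offers little help.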

The general approach agents use today to read files into memory is line-based, which means they often pick chunks that span multi-line strings. One easy way to see this fall apart: have an agent work on a 2000-line file that also contains long embedded code strings — basically a code generator. The agent will sometimes edit within a multi-line string assuming it’s the real code when it’s actually just embedded code in a multi-line string. For multi-line strings, the only language I’m aware of with a good solution is Zig, but its prefix-based syntax is pretty foreign to most people.

Reformatting also often causes constructs to move to different lines. In many languages, trailing commas in lists are either not supported (JSON) or not customary. If you want diff stability, you’d aim for a syntax that requires less reformatting and mostly avoids multi-line constructs.

What’s really nice about Go is that you mostly cannot import symbols from another package into scope without every use being prefixed with the package name. E.g.: context.Context instead of Context. There are escape hatches (import aliases and dot-imports), but they’re relatively rare and usually frowned upon.

That dramatically helps an agent understand what it’s looking at. In general, making code findable through the most basic tools is great: it works with external files that aren’t indexed, and it means fewer false positives for large-scale automation driven by code generated on the fly (e.g.: sed, perl invocations).
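The same effect can be shown in Python, where both import styles are allowed. In this sketch the two source snippets and the `grep` helper are invented for illustration. Searching for the qualified name finds exactly the call site, while searching for the bare name also hits an unrelated parameter that happens to share it.

```python
import re

# Qualified style: every use carries the module name.
QUALIFIED = '''\
import json

def load(raw):
    return json.loads(raw)
'''

# Unqualified style: the bare name collides with an unrelated parameter.
UNQUALIFIED = '''\
from json import loads

def load(raw):
    return loads(raw)

def report(loads):
    return sum(loads)
'''


def grep(pattern, source):
    """Return the lines of source that match pattern, like a basic grep."""
    return [line for line in source.splitlines() if re.search(pattern, line)]


qualified_hits = grep(r"\bjson\.loads\b", QUALIFIED)
unqualified_hits = grep(r"\bloads\b", UNQUALIFIED)
```

Here `qualified_hits` contains only the real call, while `unqualified_hits` mixes the import, the call and two lines about a completely different `loads`.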

Much of what I’ve said boils down to: agents really like local reasoning. They want it to work in parts because they often work with just a few loaded files in context and don’t have much spatial awareness of the codebase. They rely on external tooling like grep to find things, and anything that’s hard to grep or that hides information elsewhere is tricky.

What makes agents fail or succeed in many languages is just how good the build tools are. Many languages make it very hard to determine what actually needs to rebuild or be retested because there are too many cross-references. Go is really good here: it forbids circular dependencies between packages (import cycles), packages have a clear layout, and test results are cached.

Agents often struggle with macros. It was already pretty clear that humans struggle with macros too, but the argument for them was mostly that code generation was a good way to have less code to write. Since that is less of a concern now, we should aim for languages with less dependence on macros.

There’s a separate question about generics and comptime. I think they fare somewhat better because they mostly generate the same structure with different placeholders and it’s much easier for an agent to understand that.

Related to greppability: agents often struggle to understand barrel files and they don’t like them. Not being able to quickly figure out where a class or function comes from leads to imports from the wrong place, or missing things entirely and wasting context by reading too many files. A one-to-one mapping from where something is declared to where it’s imported from is great.

And it does not have to be overly strict either. Go kind of goes this way, but not too extreme. Any file within a directory can define a function, which isn’t optimal, but it’s quick enough to find and you don’t need to search too far. It works because packages are forced to be small enough to find everything with grep.

The worst case is free re-exports all over the place that completely decouple the implementation from any trivially reconstructable location on disk. Or worse: aliasing.

Agents often hate it when aliases are involved. In fact, you can get them to even complain about it in thinking blocks if you let them refactor something that uses lots of aliases. Ideally a language encourages good naming and discourages aliasing at import time as a result.

Nobody likes flaky tests, but agents even less so. Ironic given how particularly good agents are at creating flaky tests in the first place. That’s because agents currently love to mock and most languages do not support mocking well. So many tests end up accidentally not being concurrency safe or depend on development environment state that then diverges in CI or production.

Most programming languages and frameworks make it much easier to write flaky tests than non-flaky ones. That’s because they encourage nondeterminism everywhere.

In an ideal world the agent has one command that lints and compiles and tells the agent whether everything worked. Maybe another command to run all tests that need running. In practice most environments don’t work like this. For instance in TypeScript you can often run the code even though it fails type checks. That can gaslight the agent. Likewise different bundler setups can cause one thing to succeed just for a slightly different setup in CI to fail later. The more uniform the tooling the better.

Ideally it either runs or doesn’t and there is mechanical fixing for as many linting failures as possible so that the agent does not have to do it by hand.

I think we will see more new languages. We are writing more software now than we ever have: more websites, more open source projects, more of everything. Even if the ratio of new languages stays the same, the absolute number will go up. But I also truly believe that many more people will be willing to rethink the foundations of software engineering and the languages we work with. That’s because while for some years it has felt like you need to build a lot of infrastructure for a language to take off, now you can target a rather narrow use case: make sure the agent is happy and extend from there to the human.

I just hope we see two things. First, some outsider art: people who haven’t built languages before trying their hand at it and showing us new things. Second, a much more deliberate effort to document what works and what doesn’t from first principles. We have actually learned a lot about what makes good languages and how to scale software engineering to large teams. Yet, finding it written down, as a consumable overview of good and bad language design, is very hard to come by. Too much of it has been shaped by opinion on rather pointless things instead of hard facts.

Now though, we are slowly getting to the point where facts matter more, because you can actually measure what works by seeing how well agents perform with it. No human wants to be subject to surveys, but agents don’t care. We can see how successful they are and where they are struggling.

2026-01-31 08:00:00

If you haven’t been living under a rock, you will have noticed this week that a project of my friend Peter went viral on the internet. It went by many names. The most recent one is OpenClaw but in the news you might have encountered it as ClawdBot or MoltBot depending on when you read about it. It is an agent connected to a communication channel of your choice that just runs code.

What you might be less familiar with is that what’s under the hood of OpenClaw is a little coding agent called Pi. And Pi happens to be, at this point, the coding agent that I use almost exclusively. Over the last few weeks I became more and more of a shill for the little agent. After I gave a talk on this recently, I realized that I did not actually write about Pi on this blog yet, so I feel like I might want to give some context on why I’m obsessed with it, and how it relates to OpenClaw.

Pi is written by Mario Zechner and unlike Peter, who aims for “sci-fi with a touch of madness,” Mario is very grounded. Despite the differences in approach, both OpenClaw and Pi follow the same idea: LLMs are really good at writing and running code, so embrace this. In some ways I think that’s not an accident because Peter got Mario and me hooked on this idea, and on agents, last year.

So Pi is a coding agent. And there are many coding agents. Really, I think you can pick effectively any one off the shelf at this point and you will be able to experience what it’s like to do agentic programming. In reviews on this blog I’ve positively talked about AMP and one of the reasons I resonated so much with AMP is that it really felt like a product built by people who had both gotten addicted to agentic programming and tried a few different things to see which ones work, rather than just building a fancy UI around it.

Pi is interesting to me because of two main reasons:

And a little bonus: Pi itself is written like excellent software. It doesn’t flicker, it doesn’t consume a lot of memory, it doesn’t randomly break, it is very reliable and it is written by someone who takes great care of what goes into the software.

Pi also is a collection of little components that you can build your own agent on top of. That’s how OpenClaw is built, and that’s also how I built my own little Telegram bot and how Mario built his mom. If you want to build your own agent, connected to something, Pi, when pointed at itself and mom, will conjure one up for you.

And in order to understand what’s in Pi, it’s even more important to understand what’s not in Pi, why it’s not in Pi and more importantly: why it won’t be in Pi. The most obvious omission is support for MCP. There is no MCP support in it. While you could build an extension for it, you can also do what OpenClaw does to support MCP which is to use mcporter. mcporter exposes MCP calls via a CLI interface or TypeScript bindings and maybe your agent can do something with it. Or not, I don’t know :)

And this is not a lazy omission. This is from the philosophy of how Pi works. Pi’s entire idea is that if you want the agent to do something that it doesn’t do yet, you don’t go and download an extension or a skill or something like this. You ask the agent to extend itself. It celebrates the idea of writing code and running code.

That’s not to say that you cannot download extensions. It is very much supported. But instead of necessarily encouraging you to download someone else’s extension, you can also point your agent to an already existing extension, say like, build it like the thing you see over there, but make these changes to it that you like.

When you look at what Pi and by extension OpenClaw are doing, you see an example of software that is malleable like clay. That sets certain requirements for the underlying architecture, and in many ways these are constraints on the system that need to go into the core design.

So for instance, Pi’s underlying AI SDK is written so that a session can really contain many different messages from many different model providers. It recognizes that the portability of sessions is somewhat limited between model providers and so it doesn’t lean in too much into any model-provider-specific feature set that cannot be transferred to another.

The second is that in addition to the model messages it maintains custom messages in the session files. Extensions can use these to store state, and the system itself can use them to keep information that is either never sent to the AI or only sent in part.

Because this system exists and extension state can also be persisted to disk, it has built-in hot reloading so that the agent can write code, reload, test it and go in a loop until your extension actually is functional. It also ships with documentation and examples that the agent itself can use to extend itself. Even better: sessions in Pi are trees. You can branch and navigate within a session which opens up all kinds of interesting opportunities such as enabling workflows for making a side-quest to fix a broken agent tool without wasting context in the main session. After the tool is fixed, I can rewind the session back to earlier and Pi summarizes what has happened on the other branch.
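To give an idea of what a tree-shaped session with custom messages could look like as a data structure, here is a toy model. It is not Pi’s actual session format or API. The `Entry` and `Session` classes, the role names and the `rewind` method are all assumptions made up for this sketch.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Entry:
    id: int
    parent: Optional[int]  # None for the root of the tree
    role: str              # "user", "assistant", or "custom" (extension state)
    text: str


@dataclass
class Session:
    entries: dict[int, Entry] = field(default_factory=dict)
    head: Optional[int] = None

    def append(self, role: str, text: str) -> int:
        """Add an entry as a child of the current head and move the head."""
        entry_id = len(self.entries) + 1
        self.entries[entry_id] = Entry(entry_id, self.head, role, text)
        self.head = entry_id
        return entry_id

    def rewind(self, entry_id: int) -> None:
        """Move the head back; the abandoned branch stays in the tree."""
        self.head = entry_id

    def context(self) -> list[str]:
        """Messages on the path from root to head, skipping custom entries."""
        out = []
        cursor = self.head
        while cursor is not None:
            entry = self.entries[cursor]
            if entry.role != "custom":
                out.append(entry.text)
            cursor = entry.parent
        return list(reversed(out))


session = Session()
start = session.append("user", "add pagination")
session.append("assistant", "the search tool is broken")
session.append("user", "fix the search tool")
session.append("custom", "todo-extension state")
session.rewind(start)
session.append("assistant", "summary: search tool fixed on a side branch")
main_context = session.context()
```

After the rewind, the context the model would see contains only the original request and the summary, while the side-quest and the extension state remain stored in the session.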

This all matters because for instance if you consider how MCP works, on most model providers, tools for MCP, like any tool for the LLM, need to be loaded into the system context or the tool section thereof on session start. That makes it very hard to impossible to fully reload what tools can do without trashing the complete cache or confusing the AI about how prior invocations work differently.

An extension in Pi can register a tool to be available to the LLM to call and every once in a while I find this useful. For instance, despite my criticism of how Beads is implemented, I do think that giving an agent access to a to-do list is a very useful thing. And I do use an agent-specific issue tracker that works locally that I had my agent build itself. And because I wanted the agent to also manage to-dos, in this particular case I decided to give it a tool rather than a CLI. It felt appropriate for the scope of the problem and it is currently the only additional tool that I’m loading into my context.

But for the most part all of what I’m adding to my agent are either skills or TUI extensions to make working with the agent more enjoyable for me. Beyond slash commands, Pi extensions can render custom TUI components directly in the terminal: spinners, progress bars, interactive file pickers, data tables, preview panes. The TUI is flexible enough that Mario proved you can run Doom in it. Not practical, but if you can run Doom, you can certainly build a useful dashboard or debugging interface.

I want to highlight some of my extensions to give you an idea of what’s possible. While you can use them unmodified, the whole idea really is that you point your agent to one and remix it to your heart’s content.

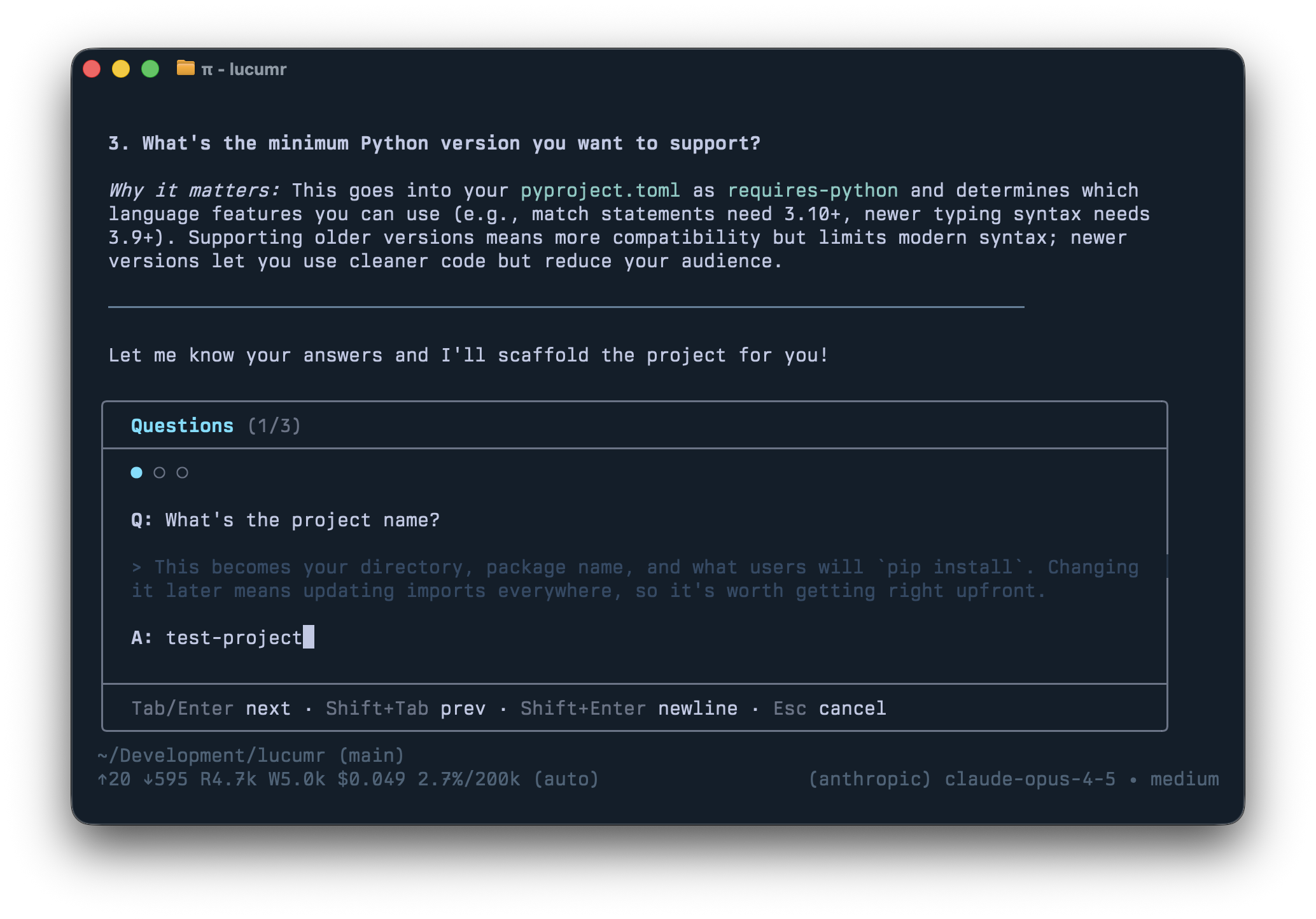

/answer

I don’t use plan mode. I encourage the agent to ask questions and there’s a productive back and forth. But I don’t like structured question dialogs that happen if you give the agent a question tool. I prefer the agent’s natural prose with explanations and diagrams interspersed.

The problem: answering questions inline gets messy. So /answer reads the agent’s last response, extracts all the questions, and reformats them into a nice input box.

/todos

Even though I criticize Beads for its implementation, giving an agent a to-do list is genuinely useful. The /todos command brings up all items stored in .pi/todos as markdown files. Both the agent and I can manipulate them, and sessions can claim tasks to mark them as in progress.

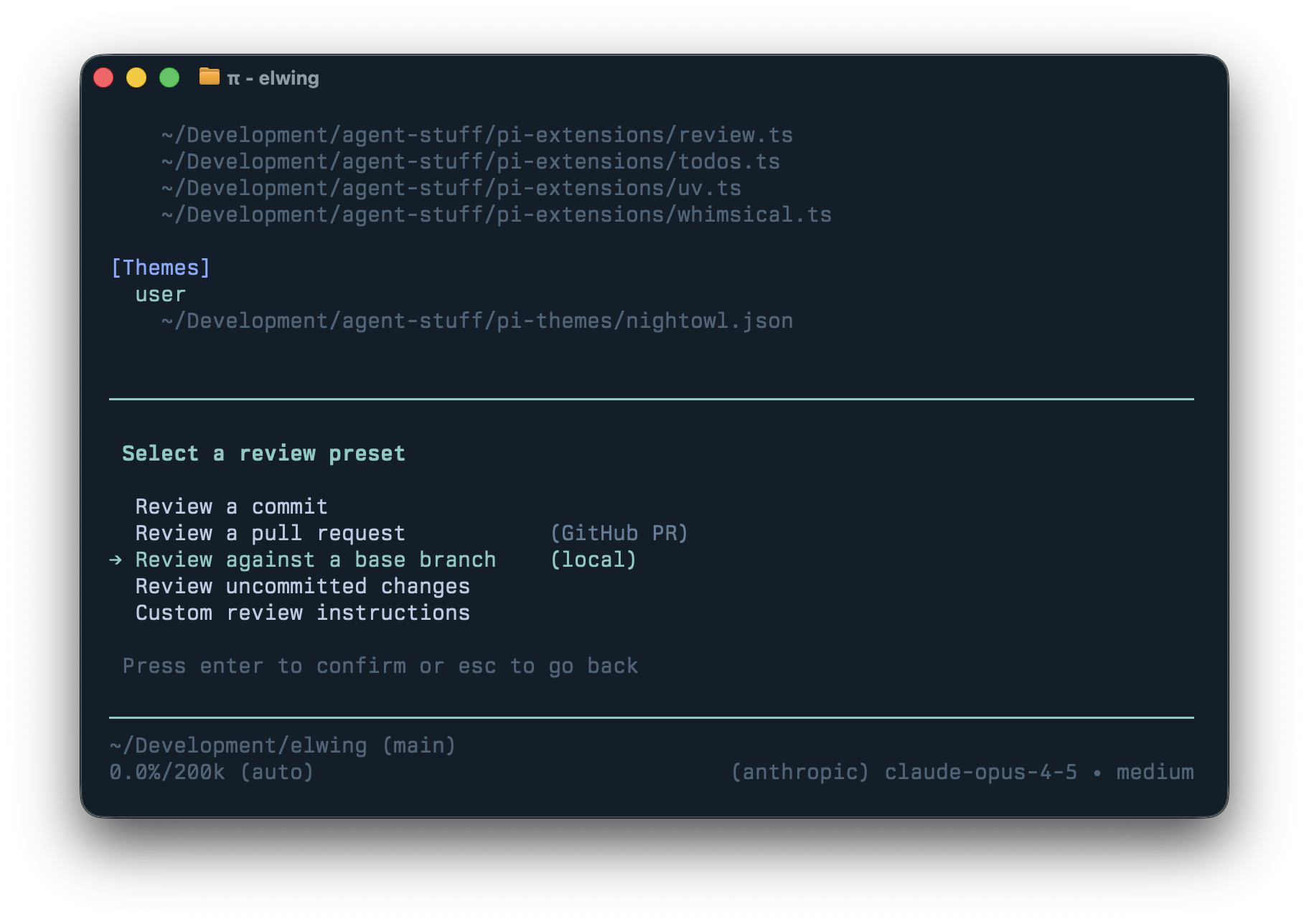

/review

As more code is written by agents, it makes little sense to throw unfinished work at humans before an agent has reviewed it first. Because Pi sessions are trees, I can branch into a fresh review context, get findings, then bring fixes back to the main session.

The UI is modeled after Codex which provides easy to review commits, diffs, uncommitted changes, or remote PRs. The prompt pays attention to things I care about so I get the call-outs I want (eg: I ask it to call out newly added dependencies.)

/control

An extension I experiment with but don’t actively use. It lets one Pi agent send prompts to another. It is a simple multi-agent system without complex orchestration which is useful for experimentation.

/files

Lists all files changed or referenced in the session. You can reveal them in Finder, diff in VS Code, quick-look them, or reference them in your prompt. shift+ctrl+r quick-looks the most recently mentioned file which is handy when the agent produces a PDF.

Others have built extensions too: Nico’s subagent extension and interactive-shell which lets Pi autonomously run interactive CLIs in an observable TUI overlay.

These are all just ideas of what you can do with your agent. The point of it mostly is that none of this was written by me, it was created by the agent to my specifications. I told Pi to make an extension and it did. There is no MCP, there are no community skills, nothing. Don’t get me wrong, I use tons of skills. But they are hand-crafted by my clanker and not downloaded from anywhere. For instance I fully replaced all my CLIs or MCPs for browser automation with a skill that just uses CDP. Not because the alternatives don’t work, or are bad, but because this is just easy and natural. The agent maintains its own functionality.

My agent has quite a few skills and crucially I throw skills away if I don’t need them. I for instance gave it a skill to read Pi sessions that other engineers shared, which helps with code review. Or I have a skill to help the agent craft the commit messages and commit behavior I want, and how to update changelogs. These were originally slash commands, but I’m currently migrating them to skills to see if this works equally well. I also have a skill that hopefully helps Pi use uv rather than pip, but I also added a custom extension to intercept calls to pip and python to redirect them to uv instead.
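To make the redirect idea concrete, here is a minimal sketch of the rewriting logic only. It is not Pi's extension API and not the actual extension described above. The function name `redirect_to_uv` is hypothetical, and it assumes the agent's shell command arrives as a single simple command string (no `&&`, pipes, or subshells).

```python
import shlex


def redirect_to_uv(command: str) -> str:
    """Rewrite simple pip/python invocations to uv equivalents.

    Hypothetical helper for illustration: commands that do not start with
    pip or python are returned unchanged.
    """
    argv = shlex.split(command)
    if not argv:
        return command

    head, rest = argv[0], argv[1:]

    # `pip install x` becomes `uv pip install x`
    if head in ("pip", "pip3"):
        return shlex.join(["uv", "pip", *rest])

    if head in ("python", "python3"):
        # `python -m pip install x` becomes `uv pip install x`
        if rest[:2] == ["-m", "pip"]:
            return shlex.join(["uv", "pip", *rest[2:]])
        # Any other python call runs inside the uv-managed environment
        return shlex.join(["uv", "run", "python", *rest])

    return command


print(redirect_to_uv("pip install requests"))
print(redirect_to_uv("python script.py --flag"))
```

A real interceptor would also have to deal with compound shell commands and virtualenv-specific paths, which this sketch deliberately ignores.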

Part of the fascination that working with a minimal agent like Pi gave me is that it makes you live that idea of using software that builds more software. That taken to the extreme is when you remove the UI and output and connect it to your chat. That’s what OpenClaw does and given its tremendous growth, I really feel more and more that this is going to become our future in one way or another.

2026-01-27 08:00:00

Regular readers of this blog will know that I started a new company. We have put out just a tiny bit of information today, and some keen folks have discovered and reached out by email with many thoughtful responses. It has been delightful.

Colin and I met here, in Vienna. We started sharing coffees, ideas, and lunches, and soon found shared values despite coming from different backgrounds and different parts of the world. We are excited about the future, but we’re equally vigilant of it. After traveling together a bit, we decided to plunge into the cold water and start a company together. We want to be successful, but we want to do it the right way and we want to be able to demonstrate that to our kids.

Vienna is a city of great history, two million inhabitants and a fascinating vibe that is nothing like San Francisco. In fact, Vienna is in many ways the polar opposite of Silicon Valley in mindset, opportunity, and approach to life. Colin comes from San Francisco, and though I’m Austrian, my career has been shaped by years working with California companies and people from there who used my Open Source software. Vienna is now our shared home. Despite Austria being so far away from California, it is a place of tinkerers and troublemakers. It’s always good to remind oneself that society consists of more than just one’s own little bubble. It also creates the necessary counterbalance to think in these times.

The world that is emerging in front of our eyes is one of change. We incorporated as a PBC with a founding charter to craft software and open protocols, strengthen human agency, bridge division and ignorance and to cultivate lasting joy and understanding. Things we believe in deeply.

I have dedicated 20 years of my life, in one way or another, to creating Open Source software. In the same way as artificial intelligence calls into question the very nature of my profession and the way we build software, the present day circumstances are testing society. We’re not immune to these changes and we’re navigating them like everyone else, with a mixture of excitement and worry. But we share a belief that right now is the time to stand true to one’s values and principles. We want to take an earnest shot at leaving the world a better place than we found it. Rather than reject the changes that are happening, we look to nudge them in the right direction.

If you want to follow along you can subscribe to our newsletter, written by humans not machines.

2026-01-18 08:00:00

You can use Polecats without the Refinery and even without the Witness or Deacon. Just tell the Mayor to shut down the rig and sling work to the polecats with the message that they are to merge to main directly. Or the polecats can submit MRs and then the Mayor can merge them manually. It’s really up to you. The Refineries are useful if you have done a LOT of up-front specification work, and you have huge piles of Beads to churn through with long convoys.

— Gas Town Emergency User Manual, Steve Yegge

Many of us got hit by the agent coding addiction. It feels good, we barely sleep, we build amazing things. Every once in a while that interaction involves other humans, and all of a sudden we get a reality check that maybe we overdid it. The most obvious example of this is the massive degradation of quality of issue reports and pull requests. As a maintainer many PRs now look like an insult to one’s time, but when one pushes back, the other person does not see what they did wrong. They thought they helped and contributed and get agitated when you close it down.

But it’s way worse than that. I see people develop parasocial relationships with their AIs, get heavily addicted to it, and create communities where people reinforce highly unhealthy behavior. How did we get here and what does it do to us?

I will preface this post by saying that I don’t want to call anyone out in particular, and I sometimes notice tendencies in myself that I see as negative as well. I, too, have thrown some vibeslop up to other people’s repositories.

In His Dark Materials, every human has a dæmon, a companion that is an externally visible manifestation of their soul. It lives alongside as an animal, but it talks, thinks and acts independently. I’m starting to relate our relationship with agents that have memory to those little creatures. We become dependent on them, and separation from them is painful and takes away from our new-found identity. We’re relying on these little companions to validate us and to collaborate with. But it’s not a genuine collaboration like between humans, it’s one that is completely driven by us, and the AI is just there for the ride. We can trick it to reinforce our ideas and impulses. And we act through this AI. Some people who have not programmed before, now wield tremendous powers, but all those powers are gone when their subscription hits a rate limit and their little dæmon goes to sleep.

Then, when we throw up a PR or issue to someone else, that contribution is the result of this pseudo-collaboration with the machine. When I see an AI pull request come in, on my own repository or on someone else’s, I cannot tell how someone created it, but after a while I can usually tell when it was prompted in a way that is fundamentally different from how I do it. Yet it takes me minutes to figure this out. I have seen some coding sessions from others and it’s often done with clarity, but using slang that someone has come up with and, most of all, by completely forcing the AI down a path without any real critical thinking. Particularly when you’re not familiar with how the systems are supposed to work, giving in to what the machine says and then thinking one understands what is going on creates some really bizarre outcomes at times.

But people create these weird relationships with their AI agent and once you see how some prompt their machines, you realize that it dramatically alters what comes out of it. To get good results you need to provide context, you need to make the tradeoffs, you need to use your knowledge. It’s not just a question of using the context badly, it’s also the way in which people interact with the machine. Sometimes it’s unclear instructions, sometimes it’s weird role-playing and slang, sometimes it’s just swearing and forcing the machine, sometimes it’s a weird ritualistic behavior. Some people just really ram the agent straight towards the most narrow of all paths towards a badly defined goal with little concern about the health of the codebase.

These dæmon relationships change not just how we work, but what we produce. You can completely give in and let the little dæmon run circles around you. You can reinforce it to run towards ill defined (or even self defined) goals without any supervision.

It’s one thing when newcomers fall into this dopamine loop and produce something. When Peter first got me hooked on Claude, I did not sleep. I spent two months excessively prompting the thing and wasting tokens. I ended up building and building and creating a ton of tools I did not end up using much. “You can just do things” was what was on my mind all the time but it took quite a bit longer to realize that just because you can, you might not want to. It became so easy to build something and in comparison it became much harder to actually use it or polish it. Quite a few of the tools I built I felt really great about, just to realize that I did not actually use them or they did not end up working as I thought they would.

The thing is that the dopamine hit from working with these agents is so very real. I’ve been there! You feel productive, you feel like everything is amazing, and if you hang out just with people that are into that stuff too, without any checks, you go deeper and deeper into the belief that this all makes perfect sense. You can build entire projects without any real reality check. But it’s decoupled from any external validation. For as long as nobody looks under the hood, you’re good. But when an outsider first pokes at it, it looks pretty crazy. And damn, some things look amazing. I too was blown away (while fully expecting it at the same time) when Cursor’s AI-written web browser landed. It’s super impressive that agents were able to bootstrap a browser in a week! But holy crap! I hope nobody ever uses that thing or tries to build an actual browser out of it. At least with this generation of agents, it’s still pure slop with little oversight. It’s an impressive research and tech demo, not an approach to building software people should use. At least not yet.

There is also another side to this slop loop addiction: token consumption.

Consider how many tokens these loops actually consume. A well-prepared session with good tooling and context can be remarkably token-efficient. For instance, the entire port of MiniJinja to Go took only 2.2 million tokens. But the hands-off approaches—spinning up agents and letting them run wild—burn through tokens at staggering rates. Patterns like Ralph are particularly wasteful: you restart the loop from scratch each time, which means you lose the ability to use cached tokens or reuse context.
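As a rough illustration of why restarting hurts, here is a toy cost model. Everything in it is hypothetical: `base`, `work`, the iteration count, and the cached-token discount are made-up parameters, not measurements from any provider or from the MiniJinja port. It counts input tokens only and ignores any caching that happens inside a single restarted iteration.

```python
def continuous_session_cost(base: int, work: int, iterations: int,
                            cached_rate: float) -> float:
    """Input cost, in full-price token equivalents, of one long session.

    The base context is paid once at full price. On every later iteration
    the whole earlier prefix is re-read at `cached_rate` (a fraction of the
    full price) and only the new `work` tokens are billed in full.
    """
    total = 0.0
    prefix = 0
    for i in range(iterations):
        new_tokens = work + (base if i == 0 else 0)
        total += prefix * cached_rate + new_tokens
        prefix += new_tokens
    return total


def restart_loop_cost(base: int, work: int, iterations: int) -> float:
    """Input cost when every iteration rebuilds the base context from scratch."""
    return float(iterations * (base + work))


# Hypothetical numbers: 50k tokens of base context, 5k of new work per
# iteration, 10 iterations, cached tokens billed at 10% of full price.
print(continuous_session_cost(50_000, 5_000, 10, 0.1))
print(restart_loop_cost(50_000, 5_000, 10))
```

With these made-up inputs the restarted loop pays for the base context ten times over, while the continuous session mostly pays the discounted rate for what it has already seen. The real ratio depends entirely on the actual context sizes and pricing.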

We should also remember that current token pricing is almost certainly subsidized. These patterns may not be economically viable for long. And those discounted coding plans we’re all on? They might not last either.

And then there are things like Beads and Gas Town, Steve Yegge’s agentic coding tools, which are the complete celebration of slop loops. Beads, which is basically some sort of issue tracker for agents, is 240,000 lines of code that … manages markdown files in GitHub repositories. And the code quality is abysmal.

In some circles there appears to be a competition to run as many of these agents in parallel as possible, with almost no quality control. And to then use agents to try to create documentation artifacts to regain some confidence of what is actually going on. Except those documents themselves read like slop.

Looking at Gas Town (and Beads) from the outside, it looks like a Mad Max cult. What are polecats, refineries, mayors, beads, convoys doing in an agentic coding system? If the maintainer is in the loop, and the whole community is in on this mad ride, then everyone and their dæmons just throw more slop up. As an external observer the whole project looks like an insane psychosis or a complete mad art project. Except, it’s real? Or is it not? Apparently a reason for slowdown in Gas Town is contention on figuring out the version of Beads, which takes 7 subprocess spawns. Or using the doctor command times out completely. Beads keeps growing and growing in complexity, and people who are using it are realizing that it’s almost impossible to uninstall. And the two might not even work well together, even though one apparently depends on the other.

I don’t want to pick on Gas Town or these projects, but they are just the most visible examples of this in-group behavior right now. But you can see similar things in some of the AI builder circles on Discord and X where people hype each other up with their creations, without much critical thinking and sanity checking of what happens under the hood.

It takes a minute of prompting and a few minutes of waiting for code to come out. But honestly reviewing a pull request takes many times longer than that. The asymmetry is completely brutal. Shooting up bad code is rude because you completely disregard the time of the maintainer. Everybody else is also creating AI-generated code, and maybe theirs passed the bar of being good. So how can you possibly tell as a maintainer when it all looks the same? And as the person writing the issue or the PR, you felt good about it. Yet what you get back is frustration and rejection.

I’m not sure how we will go ahead here, but it’s pretty clear that in projects that don’t submit themselves to the slop loop, it’s going to be a nightmare to deal with all the AI-generated noise.

Even for projects that are fully AI-generated but are setting some standard for contributions, some folks now prefer actually just getting the prompts over getting the actual code. Because then it’s clearer what the person actually intended. There is more trust in running the agent oneself than having other people do it.

Which really makes me wonder: am I missing something here? Is this where we are going? Am I just not ready for this new world? Are we all collectively getting insane?

Particularly if you want to opt out of this craziness right now, it’s getting quite hard. Some projects no longer accept human contributions until they have vetted the people completely. Others are starting to require that you submit prompts alongside your code, or just the prompts alone.

I am a maintainer who uses AI myself, and I know others who do. We’re not luddites and we’re definitely not anti-AI. But we’re also frustrated when we encounter AI slop on issue and pull request trackers. Every day brings more PRs that took someone a minute to generate and take an hour to review.

There is a dire need to say no now. But when one does, the contributor is genuinely confused: “Why are you being so negative? I was trying to help.” They were trying to help. Their dæmon told them it was good.

Maybe the answer is that we need better tools — better ways to signal quality, better ways to share context, better ways to make the AI’s involvement visible and reviewable. Maybe the culture will self-correct as people hit walls. Maybe this is just the awkward transition phase before we figure out new norms.

Or maybe some of us are genuinely losing the plot, and we won’t know which camp we’re in until we look back. All I know is that when I watch someone at 3am, running their tenth parallel agent session, telling me they’ve never been more productive — in that moment I don’t see productivity. I see someone who might need to step away from the machine for a bit. And I wonder how often that someone is me.

Two things are both true to me right now: AI agents are amazing and a huge productivity boost. They are also massive slop machines if you turn off your brain and let go completely.

2026-01-14 08:00:00

Turns out you can just port things now. I already attempted this experiment in the summer, but it turned out to be a bit too much for what I had time for. However, things have advanced since. Yesterday I ported MiniJinja (a Rust Jinja2 template engine) to native Go, and I used an agent to do pretty much all of the work. In fact, I barely did anything beyond giving some high-level guidance on how I thought it could be accomplished.

In total I probably spent around 45 minutes actively with it. It worked for around 3 hours while I was watching, then another 7 hours alone. This post is a recollection of what happened and what I learned from it.

All prompting was done by voice using pi, starting with Opus 4.5 and switching to GPT-5.2 Codex for the long tail of test fixing.

MiniJinja is a re-implementation of Jinja2 for Rust. I originally wrote it because I wanted to do an infrastructure automation project in Rust and Jinja was popular for that. The original project didn’t go anywhere, but MiniJinja itself continued being useful for both me and other users.

The way MiniJinja is tested is with snapshot tests: inputs and expected outputs, using insta to verify they match. These snapshot tests were what I wanted to use to validate the Go port.

My initial prompt asked the agent to figure out how to validate the port. Through that conversation, the agent and I aligned on a path: reuse the existing Rust snapshot tests and port incrementally (lexer -> parser -> runtime).

This meant the agent built Go-side tooling to:

.snap snapshots and compare output.

This resulted in a pretty good harness with a tight feedback loop. The agent had a clear goal (make everything pass) and a progression (lexer -> parser -> runtime). The tight feedback loop mattered particularly at the end where it was about getting details right. Every missing behavior had one or more failing snapshots.
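For readers who have not worked with snapshot tests, the core of such a harness can be sketched in a few lines. This is not the tooling the agent built (that was written in Go). It is an illustrative Python sketch that assumes a snapshot file consists of a `---` delimited header of `key: value` lines followed by the expected output. The function names and the header fields used in the example are assumptions.

```python
from typing import Callable


def parse_snap(text: str) -> tuple[dict[str, str], str]:
    """Split a snapshot into (header fields, expected body).

    Assumed layout: a '---' line, 'key: value' lines, another '---' line,
    then the expected output.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("snapshot does not start with a '---' header")
    end = lines.index("---", 1)  # raises ValueError if the header never closes
    header: dict[str, str] = {}
    for line in lines[1:end]:
        key, sep, value = line.partition(":")
        if sep:
            header[key.strip()] = value.strip()
    return header, "\n".join(lines[end + 1:])


def check_snapshot(snap_text: str,
                   render: Callable[[dict[str, str]], str]) -> list[str]:
    """Return mismatch descriptions; an empty list means the snapshot passes."""
    header, expected = parse_snap(snap_text)
    actual = render(header)
    if actual.rstrip("\n") == expected.rstrip("\n"):
        return []
    return [f"expected {expected!r}, got {actual!r}"]


snap = "---\nsource: tests/example.rs\ninput_file: hello.txt\n---\nHello World!\n"
print(check_snapshot(snap, lambda header: "Hello World!\n"))
```

Every failing snapshot then becomes one concrete, machine-checkable item of remaining work, which is what makes the feedback loop tight.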

I used Pi’s branching feature to structure the session into phases. I rewound back to earlier parts of the session and used the branch switch feature to inform the agent automatically what it had already done. This is similar to compaction, but Pi shows me what it puts into the context. When Pi switches branches it does two things:

Without switching branches, I would probably just make new sessions and have more plan files lying around or use something like Amp’s handoff feature which also allows the agent to consult earlier conversations if it needs more information.

What was interesting is that the agent went from literal porting to behavioral porting quite quickly. I didn’t steer it away from this as long as the behavior aligned. I let it do this for a few reasons. First, the code base isn’t that large, so I felt I could make adjustments at the end if needed. Letting the agent continue with what was already working felt like the right strategy. Second, it was aligning to idiomatic Go much better this way.

For instance, on the runtime it implemented a tree-walking interpreter (not a bytecode interpreter like Rust) and it decided to use Go’s reflection for the value type. I didn’t tell it to do either of these things, but they made more sense than replicating my Rust interpreter design, which was partly motivated by not having a garbage collector or runtime type information.

On the other hand, the agent made some changes while making tests pass that I disagreed with. It completely gave up on all the “must fail” tests because the error messages were impossible to replicate perfectly given the runtime differences. So I had to steer it towards fuzzy matching instead.
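The post does not say how the fuzzy matching was implemented, so the following is only one possible approach, sketched with Python's standard `difflib`. The function names and the `0.6` threshold are arbitrary assumptions chosen for illustration.

```python
import difflib
import re


def normalize(message: str) -> str:
    """Lowercase and collapse whitespace so cosmetic differences do not count."""
    return re.sub(r"\s+", " ", message.strip().lower())


def errors_match(expected: str, actual: str, threshold: float = 0.6) -> bool:
    """Treat two error messages as equivalent if they are similar enough.

    The similarity is difflib's ratio in the range 0.0 to 1.0; the threshold
    is a hypothetical default that would need tuning against real messages.
    """
    matcher = difflib.SequenceMatcher(None, normalize(expected), normalize(actual))
    return matcher.ratio() >= threshold


print(errors_match("Unknown Filter  foo", "unknown filter foo"))
```

The point of a check like this is to keep the "must fail" tests alive: the test still asserts that an error occurs and that it is roughly the right error, without demanding byte-identical wording across two runtimes.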

It also wanted to regress behavior I wanted to retain (e.g., exact HTML escaping semantics, or that range must return an iterator). I think if I hadn’t steered it there, it might not have made it to completion without going down problematic paths, or I would have lost confidence in the result.

Once the major semantic mismatches were fixed, the remaining work was filling in all missing pieces: missing filters and test functions, loop extras, macros, call blocks, etc. Since I wanted to go to bed, I switched to GPT-5.2 Codex and queued up a few “continue making all tests pass if they are not passing yet” prompts, then let it work through compaction. I felt confident enough that the agent could make the rest of the tests pass without guidance once it had the basics covered.

This phase ran without supervision overnight.

After functional convergence, I asked the agent to document internal functions and reorganize (like moving filters to a separate file). I also asked it to document all functions and filters like in the Rust code base. This was also when I set up CI, release processes, and talked through what was created to come up with some finalizing touches before merging.

There are a few things I find interesting here.

First: these types of ports are possible now. I know porting was already possible for many months, but it required much more attention. This changes some dynamics. I feel less like technology choices are constrained by ecosystem lock-in. Sure, porting NumPy to Go would be a more involved undertaking, and getting it competitive even more so (years of optimizations in there). But still, it feels like many more libraries can be used now.

Second: for me, the value is shifting from the code to the tests and documentation. A good test suite might actually be worth more than the code. That said, this isn’t an argument for keeping tests secret — generating tests with good coverage is also getting easier. However, for keeping code bases in different languages in sync, you need to agree on shared tests, otherwise divergence is inevitable.

Lastly, there’s the social dynamic. Once, having people port your code to other languages was something to take pride in. It was a sign of accomplishment — a project was “cool enough” that someone put time into making it available elsewhere. With agents, it doesn’t invoke the same feelings. Will McGugan also called out this change.

Finally, some boring stats for the main session:

claude-opus-4-5 and gpt-5.2-codex for the unattended overnight run

This did not count the adding of doc strings and smaller fixups.