2025-06-27 21:33:12

Back when I started programming, project teams were large. Organizations had dozens of programmers on sprawling projects. Reusing code was not trivial. Sharing code required real effort. Sometimes you had to hand over a disk or recopy code from a printout.

Better networking eased code reuse. Open source took over. We no longer have someone writing a red-black tree from scratch each time we need one.

What happened to those writing red-black trees when you could just download one from the internet?

They moved on to other work.

Generative AI (Copilot and friends) is basically just that: easier code reuse. If you’re trying to solve a problem that 20 engineers have solved and posted online, AI will solve it for you.

What happens to you? You move on to other, better things.

Software is supposed to be R&D. You’re supposed to solve problems no one has quite solved before.

But aren’t people predicting mass unemployment due to AI?

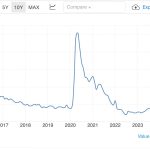

I suggest not making claims about the future without looking at hard data first The unemployment rate in Canada is relatively high right now, but historical data shows no ChatGPT discontinuity (before 2022, after 2022).

Maybe unemployment due to AI will hit computer science graduates hardest? Compared to what? Biology or business graduates? Where’s the data? Whether you should go to college at all is another question, but if you recommend opting out of computer science while still going to college, please provide the ChatGPT-safe alternative.

Can anyone become a programmer today? Let’s look at what happens on campus. Over 90% of students use AI for coursework. I estimate the percentage is higher in computer science. Let’s say all computer science students use AI.

A couple of years ago, I added a chatbot to my intro-to-programming class (now using RAG + GPT-4). With over 300 students a year, it’s a good test case.

I have grade data, and there’s no visible before/after ChatGPT effect. Last term, the failure rate was about the same as five years ago.

I don’t have data from other universities, but I haven’t heard anyone complain that students breeze through programming classes. This is despite AI being able to handle most assignments in an intro-to-computing course.

The challenge in an intro-to-programming class was never finding answers. Before ChatGPT, you could find solutions on Google or StackOverflow. Maybe it took longer, but it’s a quantitative difference, not a qualitative one, for elementary problems.

The skill you need to develop early on is reading code and instantly understanding it. If you’re a programmer, you might forget you have this skill. ChatGPT can give you code, but can your brain process it?

If you give Donald Trump ChatGPT, he still won’t code your web app. He’ll get code but won’t understand it.

More broadly, OECD economists predict worker productivity could grow by up to 0.9% per year thanks to AI over the next ten years. I’m not sure I trust economists to predict a decade ahead, but I trust they’ve studied recent trends carefully. And economist will tell you that a 0.9% rise in worker productivity per year is not at all a break from historical trends.

In fact, by historical standards, productivity growth is low. We are not at all in a technological surge like our grand-parents who lived through the electrification of their country. Going from no refrigerator to a refrigerator and a TV, that’s a drastic change. Going from Google to ChatGPT is nothing extraordinary.

Can generative AI bring about more drastic changes? Maybe. I hope so. But we don’t have hard evidence right now that it does.

2025-06-22 09:55:37

Herb Sutter just announced that the verdict is in: C++26, the next version of C++, will include compile-time reflection.

Reflection in programming languages means that you have access the code’s own structure. For example, you can take a class, and enumerate its methods. For example, you could receive a class, check whether it contains a method that returns a string, call this method and get the string. Most programming languages have some form of reflection. For example, the good old Java does have complete reflection support.

However, C++ is getting compile-time reflection. It is an important development.

I announced a few months ago that thanks to joint work with Francisco Geiman Thiesen, the performance-oriented JSON library simdjson would support compile-time reflection as soon as mainstream compilers support it.

This allows you to take your own data structure and convert it to a JSON string without any effort, and at high speed:

kid k{12, "John", {"car", "ball"}};

simdjson::to_json(k);

// would generate {"age": 12, "name": "John", "toys": ["car", "ball"]}

And you can also go back, given a JSON document, you can get back an instance of your custom type:

kid k = doc.get<kid>();

The code can be highly optimized and it can be thoroughly tested, in the main library. Removing the need for boilerplate code has multiple benefits.

To illustrate the idea further, let me consider the case of object-to-SQL mapping. Suppose you have your own custom type:

struct User { int id; std::string name; double balance; private: int secret; // Ignored in SQL generation };

You want to insert an instance of this user into your database. You somehow need to convert it to a string such as

INSERT INTO tbl (id, name, balance) VALUES (0, '', 0.000000);

How easy can it be? With compile-time reflection, we can make highly efficient and as simple as single function call:

generate_sql_insert(u, "tbl");

Of course, the heavy lifting still needs to be done. But it only needs to be done once.

What might it look like? First, we want to generate the column string (e.g., id, name, balance).

I do not have access yet to a true C++26 compiler. When C++26 arrive, we will have features such as ‘template for’ which are like ‘for’ loops, but for template metaprogramming. Meanwhile, I use a somewhat obscure ‘expand’ syntax.

Still the code is reasonable:

template<typename T> consteval std::string generate_sql_columns() { std::string columns; bool first = true; constexpr auto ctx = std::meta::access_context::current(); [:expand(std::meta::nonstatic_data_members_of(^^T, ctx)):] >> [&]<auto member>{ using member_type = typename[:type_of(member):]; if (!first) { columns += ", "; } first = false; // Get member name auto name = std::meta::identifier_of(member); columns += name; }; return columns; }

This function is ‘consteval’ which means that you should expect it to get evaluated at compile time. So it is very efficient: the string is computed while you are compiling your code. Thus the following function might just return a precomputed string:

std::string g() { return generate_sql_columns<User>(); }

Next we need to compute the string for the values (e.g., (0, ”, 0.000000)). That’s a bit trickier. You need to escape strings, and handle different value types. Here is a decent sketch:

template<typename T> constexpr std::string generate_sql_valuess(const T& obj) { std::string values; bool first = true; constexpr auto ctx = std::meta::access_context::current(); [:expand(std::meta::nonstatic_data_members_of(^^T, ctx)):] >> [&]<auto member>{ using member_type = typename[:type_of(member):]; if (!first) { values += ", "; } first = false; // Get member value auto value = obj.[:member:]; // Format value based on type if constexpr (std::same_as<member_type, std::string>) { // Escape single quotes in strings std::string escaped = value; size_t pos = 0; while ((pos = escaped.find('\'', pos)) != std::string::npos) { escaped.replace(pos, 1, "''"); pos += 2; } values += "'" + escaped + "'"; } else if constexpr (std::is_arithmetic_v<member_type>) { values += std::to_string(value); } }; return values; }

You can now put it all together:

template<typename T> constexpr std::string generate_sql_insert(const T& obj, const std::string& table_name) { constexpr std::string columns = generate_sql_columns<T>(); std::string values = generate_sql_valuess(obj); return "INSERT INTO " + table_name + " (" + columns + ") VALUES (" + values + ");"; }

It is just one of many applications. The important idea is that you can craft highly optimized and very safe code, that will get reused in many instances. The code looks a bit scary, as C++ tends to, but it is quite reasonable.

In the coming years, many projects will be simplified and optimized thanks to compile-time reflection.

Code: I have posted a complete implementation in the code repository of my blog. I am sure it can be significantly improved.

2025-06-21 08:32:39

In North America, my home province of Quebec has a slightly higher life expectancy than the rest of the country. It is also a poorer-than-average province, so that is maybe surprising. For 2023, the life expectancy at birth in Ontario was 82.33 years, whereas it was 82.55 years for Quebec.

However, if drill down in the data, you find that the differences has to do with men: men in Quebec live on average to be 80.77 years, the highest among Canadian provinces. Females in Ontario and British Columbia live longer than in Quebec.

What could explain Quebec’s men longevity?

People in Quebec smoke more then the Canadian average. It is probably not why Quebec men live longer..

Alcohol consumption patterns are worth examining. Unfortunately, data on alcohol use by sex for Canadian provinces were unavailable. Focusing on overall alcohol consumption, Quebec stands out with significantly higher levels. The correlation between total alcohol consumption (across men and women) and male longevity is positive but modest, with an R-squared value of 0.27.

One hypothesis suggests that variations in obesity rates may be a key factor. Quebec differs from other Canadian provinces in several ways. Notably, men in British Columbia and Quebec tend to have lower body mass indices compared to those in other regions. The correlation between obesity and longevity is more obvious (R square of 0.59).

In conclusion, I do not know why men in Quebec tend to live a bit longer than men in other provinces, but they tend to drink a lot more alcohol and they are leaner.

2025-06-16 22:42:38

Prescientific and preindustrial thought tied truth to authority and tradition. “We’ve always done it this way.” “The king decrees it.” “We know this is how it’s done.”

The scientific and industrial revolutions shattered this mindset. Suddenly, outsiders could challenge entrenched norms. Two brothers in a rundown workshop could build empires to rival the wealthiest lords. Ordinary people could question the highest authorities.

What made this possible? A belief in objective truth. Reality exists independently of our perceptions. For Christians, this meant God created a discoverable world, and humanity’s role was to uncover its workings and harness them.

How could an individual stand against tradition or authority? Through facts. A new method is cheaper—or it isn’t. It’s faster—or it isn’t. It yields better results—or it doesn’t.

This is the essence of progress: you can improve, and you can prove it.

The British Empire dominated the seas, waged wars, and abolished slavery not because of superior manpower or land, but through better governance, business practices, and science.

Not every question is empirical, but the critical ones often are. To make your case, build it and demonstrate its value. That is the heart of the scientific revolution

2025-06-15 22:32:49

Guido van Rossum, Python’s creator, recently said: “We have a huge community, but relatively few people, relatively speaking, are contributing meaningfully.”

This highlights a paradox.

Software thrives on the network effect, or Metcalfe’s Law, where a system’s value scales with the square of its users. Linux excels because its vast user base fuels adoption, documentation, and compatibility everywhere.

But larger teams don’t build better software—often the reverse. Brooks’ Law, from Fred Brooks’ The Mythical Man-Month, shows that adding people increases communication overhead, slowing progress. The Pareto Principle (80/20 rule) also applies: a small minority drives most meaningful contributions. Great software often stems from a single visionary or a small, cohesive team, not a crowd.

The network effect applies primarily to users, not necessarily to creators.

2025-06-12 22:33:00

There is an extremely naive model of science and innovation called the linear model: The model postulated that innovation starts with basic research, is followed by applied research and development, and ends with production and diffusion. According to Godin (2006), the model has been falsely attributed to Bush and its dominance is derived rather from its remarkable simplicity. It aligns with a technocratic worldview where bureaucrats get to steer science and innovation.

Yet it has been long documented that innovations arise from practical experience and are driven by user demand. My favorite example is the industrial revolution which was driven by textile which, in turn, served to provide comfortable underwear for women. We invented modern-day keyboards after musicians perfected the keyboard for musical instruments.

Kline and Rosenberg are eloquent on this topic:

« Even more important, from the viewpoint of understanding innovation, is the recognition that when the science is inadequate, or even totally lacking, we still can, do, and often have created important innovations, and innumerable smaller, but cumulatively important evolutionary changes. Recently, a member of the National Academy of Engineering, highly versed in dynamics and control, attempted to analyze the stability of an ordinary bicycle with a rider-and failed. No acceptable analysis is known. But this lack of theory did not prevent the invention of the bicycle a century ago, nor did it prevent a multitude of beta-phase improvements in bicycle design that have cumulatively created a reliable, relatively inexpensive, and useful machine. Nor does the absence of understanding of the theory of stability prevent a 5-year-old child from mounting a bicycle and with a few tries learning to stabilize the human-machine system. Had the idea been true that science is the initiating step in innovation, we would never have invented the bicycle. »

There are countless examples of important innovations that we can consider:

The development of the steam engine, a cornerstone of the Industrial Revolution, did not begin with basic scientific research but with practical needs in mining. Miners faced the challenge of pumping water out of deep coal mines, and early inventors like Thomas Savery and Thomas Newcomen developed steam-powered pumps to address this issue. James Watt’s later improvements, often seen as a scientific leap, were driven by practical experimentation and feedback from users, such as mine operators, rather than purely theoretical research.

The Wright brothers’ invention of powered flight is a classic case of innovation rooted in practical experience. Neither Wilbur nor Orville Wright had formal scientific training; their breakthroughs came from hands-on experimentation with gliders, kites, and wind tunnels they built themselves. Their motivation stemmed from the growing public and commercial interest in aviation, coupled with their own observations as bicycle mechanics, where they learned about balance and control. The iterative process of testing and refining their designs was driven by practical problem-solving, not a linear progression from basic research to application.

Fleming’s initial observation of penicillin’s antibacterial properties in 1928 was not immediately pursued due to a lack of practical application. It was only when the urgent demand for effective treatments for wounded soldiers emerged that scientists like Howard Florey and Ernst Chain, working with industrial partners, developed methods to produce penicillin at scale. This process was driven by the immediate needs of medical practitioners and the military, not a linear path from basic science.

The rise of personal computers, exemplified by companies like Apple and IBM, showcases innovation driven by user demand and practical experimentation. Early computers were developed not by academic researchers but by hobbyists and entrepreneurs, such as Steve Wozniak, who built the Apple I to meet the needs of tech enthusiasts in the Homebrew Computer Club. The rapid diffusion of PCs was fueled by user demand for accessible computing in homes and businesses, with iterative improvements based on feedback from users rather than a top-down research pipeline. Software development, like Microsoft’s operating systems, similarly responded to practical needs for user-friendly interfaces.

Early mobile phones, like Motorola’s DynaTAC, were developed to meet the practical needs of business professionals for portable communication. The transition to smartphones, particularly with the iPhone in 2007, was driven by consumer demand for multifunctional devices that combined communication, entertainment, and productivity. Apple’s success came from observing user behaviors and integrating existing technologies (touchscreens, internet connectivity) into a user-friendly package, rather than groundbreaking basic research.

The rapid development and adoption of generative AI chatbots, such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude, showcase innovation propelled by user demand and practical applications. ChatGPT would not exist without the massive amount of content produced online by users and the powerful graphics processors developed for gamers.

It is high time we bury the linear model of innovation. Researchers are not in the driving seat of technological innovation, nor are government bureaucrats.