2026-03-01 08:00:00

Hey everybody, I wanted to make this post to be the announcement that I did in fact survive my surgery I am leaving the hospital today and I want to just write up what I've had on my mind over these last couple months and why have not been as active and open source I wanted to.

This is being dictated to my iPhone using voice control. I have not edited this. I am in the hospital bed right now, I have no ability to doubted this. As a result of all typos are intact and are intended as part of the reading experience.

That week leading up to surgery was probably one of the scariest weeks of my life. Statistically I know that with the procedure that I was going to go through that there's a very low all-time mortality rate. I also know that with propofol the anesthesia that was being used, there is also a very all-time low mortality rate. However one person is all it takes to be that one lucky one in 1 million. No, I mean unlucky. Leading up to surgery I was afraid that I was going to die during the surgery so I prepared everything possible such that if I did die there would be as a little bad happening as possible.

I made peace with my God. I wrote a will. I did everything it is that one was expected to do when there is a potential chance that your life could be ended including filing an extension for my taxes.

Anyway, the point of this post is that I want to explain why I named the lastest release of Anubis Necron.

Final Fantasy is a series of role-playing games originally based on one development teams game of advanced Dungeons & Dragons of the 80s. In the Final Fantasy series there are a number of legendary summons that get repeated throughout different incarnations of the games. These summons usually represent concepts or spiritual forces or forces of nature. The one that was coming to mind when I was in that pre-operative state was Necron.

Necron is summoned through the fear of death. Specifically, the fear of the death of entire kingdom. All the subjects absolutely mortified that they are going to die and nothing that they can do is going to change that.

Content warning: spoilers for Final Fantasy 14 expansion Dawntrail.

In Final Fantasy 14 these legendary summons are named primals. These primals become the main story driver of several expansions. I'd be willing to argue that the first expansion a realm reborn is actually just the story of Ifrit (Fire), Garuda (Wind), Titan (Earth), and Lahabrea (Edgelord).

Late into Dawn Trail, Nekron gets introduced. The nation state of Alexandria has fused into the main overworld. In Alexandria citizens know not death. When they die, their memories are uploaded into the cloud so that they can live forever in living memory. As a result, nobody alive really knows what death is or how to process it because it's just not a threat to them. Worst case if their body actually dies they can just have a new soul injected into it and revive on the spot.

Part of your job as the player is to break this system of eternal life, as powering it requires the lives of countless other creatures.

So by the end of the expansion, an entire kingdom of people that did not know the concept of death suddenly have it thrust into them. They cannot just go get more souls in order to compensate for accidental injuries in the field. They cannot just get uploaded when they die. The kingdom that lost the fear of death suddenly had the fear of death thrust back at them.

And thus, Necron was summoned by the Big Bad™️ using that fear of death.

I really didn't understand that part of the story until the week leading up to my surgery. The week where I was contacting people to let people know what was going on, how to know if I was OK, and what they should do if I'm not.

In that week I ended up killing my fear of death.

I don't remember much from the day of the operation, but what I do remember is this: when I was wheeled into the operating theater before they placed the mask over my head to put me to sleep they asked me one single question. "Do you want to continue?"

In that moment everything swirled into my head again. all of the fear of death. All of the worries that my husband would be alone. That fear that I would be that unlucky 1 in 1 million person. And with all of that in my head, with my heart beating out of my chest, I said yes. The mask went down. And everything went dark.

I got what felt like the best sleep in my life. And then I felt myself, aware again. In that awareness I felt absolutely nothing. Total oblivion. I was worried that that was it. I was gone.

And then I heard the heart rate monitor and the blood pressure cuff squeezed around my arm. And in that moment I knew I was alive.

I had slain my inner Necron and I felt the deepest peace in my life.

And now I am in recovery. I am safe. I am going to make it. Do not worry about me. I will make it.

Thank you for reading this, I hope it helped somehow. If anything it helped me to write this all out. I'm going to be using claude code to publish this on my blog, please forgive me like I said I am literally dictating this from an iPhone in the hospital room that I've been in for the last seven days.

Let the people close to you know that you love them.

2026-02-24 08:00:00

My job has me travel a lot. When I'm in my office I normally have a seven monitor battlestation like this:

— Xe (@xeiaso.net), January 26, 2026 at 11:34 PM

So as you can imagine, travel sucks for me because I just constantly run out of screen space. This can be worked around, I minimize things more, I just close them, but you know what is better? Just having another screen.

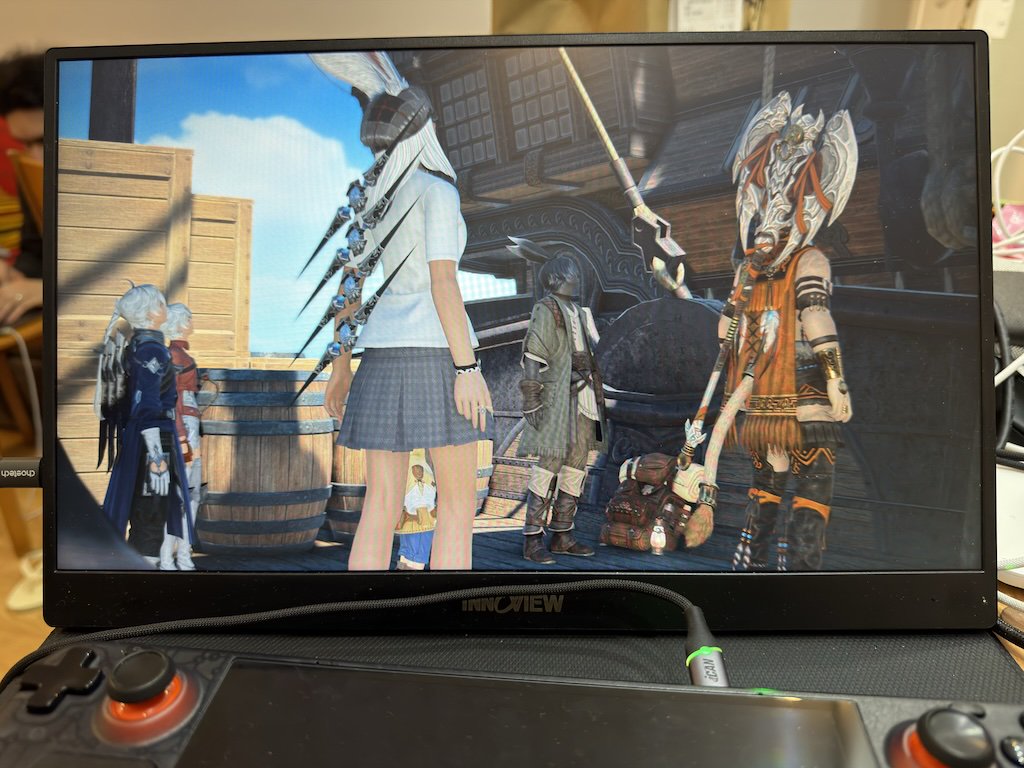

On a whim, I picked up this 15.6" Innoview portable monitor off of Amazon. It's a 1080p screen that I hook up to my laptop or Steam Deck with USB-C. However, the exact brand and model doesn't matter. You can find them basically anywhere with the most AliExpress term ever: screen extender.

This monitor is at least half decent. It is not a colour-accurate slice of perfection. It claims to support HDR but actually doesn't. Its brightness out of the box could be better. I could go down the list and really nitpick until the cows come home but it really really doesn't matter. It's portable, 1080p, and good enough.

When I was at a coworking space recently, it proved to be one of the best purchases I've ever made. I had Slack off to the side and was able to just use my computer normally. It was so boring that I have difficulty trying to explain how much I liked it.

This is the dream when it comes to technology.

3/5, I would buy a second one.

2026-02-20 08:00:00

Hey all, I hope you're doing well.

I'm going to be on medical leave until early April. If you are a sponsor, then you can join the Discord for me to post occasional updates in real time. I'm gonna be in the hospital for at least a week as of the day of this post.

I have a bunch of things queued up both at work and on this blog. Please do share them when you see them cross your feeds, I hope that they'll be as useful as my posts normally are. I'm under a fair bit of stress leading up to this medical leave and I'm hoping that my usual style shines through as much as I want it to. Focusing on writing is hard when the Big Anxiety is hitting as hard as it is.

Don't worry about me. I want you to be happy for me. This is very good medical leave. I'm not going to go into specifics for privacy reasons, but know that this is something I've wanted to do for over a decade but haven't gotten the chance due to the timing never working out.

I'll see you on the other side. Stay safe out there.

Xe

2026-02-18 08:00:00

Hey all,

I'm sure you've all been aware that things have been slowing down a little with Anubis development, and I want to apologize for that. A lot has been going on in my life lately (my blog will have a post out on Friday with more information), and as a result I haven't really had the energy to work on Anubis in publicly visible ways. There are things going on behind the scenes, but nothing is really shippable yet, sorry!

I've also been feeling some burnout in the wake of perennial waves of anger directed towards me. I'm handling it, I'll be fine, I've just had a lot going on in my life and it's been rough.

I've been missing the sense of wanderlust and discovery that comes with the artistic way I playfully develop software. I suspect that some of the stresses I've been through (setting up a complicated surgery in a country whose language you aren't fluent in is kind of an experience) have been sapping my energy. I'm gonna try to mess with things on my break, but realistically I'm probably just gonna be either watching Stargate SG-1 or doing unreasonable amounts of ocean fishing in Final Fantasy 14. Normally I'd love to keep the details about my medical state fairly private, but I'm more of a public figure now than I was this time last year so I don't really get the invisibility I'm used to for this.

I've also had a fair amount of negativity directed at me for simply being much more visible than the anonymous threat actors running the scrapers that are ruining everything, which though understandable has not helped.

Anyways, it all worked out and I'm about to be in the hospital for a week, so if things go really badly with this release please downgrade to the last version and/or upgrade to the main branch when the fix PR is inevitably merged. I hoped to have time to tame GPG and set up full release automation in the Anubis repo, but that didn't work out this time and that's okay.

If I can challenge you all to do something, go out there and try to actually create something new somehow. Combine ideas you've never mixed before. Be creative, be human, make something purely for yourself to scratch an itch that you've always had yet never gotten around to actually mending.

At the very least, try to be an example of how you want other people to act, even when you're in a situation where software written by someone else is configured to require a user agent to execute JavaScript to access a webpage.

Be well,

Xe

PS: if you're well-versed in FFXIV lore, the release title should give you an idea of the kind of stuff I've been going through mentally.

BASE_PREFIX when deployed behind a path prefix (#1402)

Full Changelog: https://github.com/TecharoHQ/anubis/compare/v1.24.0...v1.25.0

2026-02-12 08:00:00

I thought that 2025 was weird and didn't think it could get much weirder. 2026 is really delivering in the weirdness department. An AI agent opened a PR to matplotlib with a trivial performance optimization, a maintainer closed it for being made by an autonomous AI agent, so the AI agent made a callout blogpost accusing the matplotlib team of gatekeeping.

This provoked many reactions:

This post isn't about the AI agent writing the code and making the PRs (that's clearly a separate ethical issue, I'd not be surprised if GitHub straight up bans that user over this), nor is it about the matplotlib team's saintly response to that whole fiasco (seriously, I commend your patience with this). We're reaching a really weird event horizon when it comes to AI tools:

The discourse has been automated. Our social patterns of open source: the drama, the callouts, the apology blogposts that look like they were written by a crisis communications team, all of it is now happening at dozens of tokens per second and one tool call at a time. Things that would have taken days or weeks can now fizzle out of control in hours.

There's not that much that's new here. AI models have been able to write blogposts since the launch of GPT-3. AI models have also been able to generate working code since about then. Over the years the various innovations and optimizations have all been about making this experience more seamless, integrated, and automated.

We've argued about Copilot for years, but an AI model escalating PR rejection to callout blogpost all by itself? That's new.

I've seen (and been a part of) this pattern before. Facts and events bring dramatis personae into conflict. The protagonist in the venture raises a conflict. The defendant rightly tries to shut it down and de-escalate before it becomes A Whole Thing™️. The protagonist feels Personally Wronged™️ and persists regardless into callout posts and now it's on the front page of Hacker News with over 500 points.

Usually there are humans in the loop that feel things, need to make the choices to escalate, must type everything out by hand to do the escalation, and they need to build an audience for those callouts to have any meaning at all. This process normally takes days or even weeks.

It happened in hours.

An OpenClaw install recognized the pattern of "I was wronged, I should speak out" and just straightline went for it. No feelings. No reflection. Just a pure pattern match on the worst of humanity with no soul to regulate it.

I think that this really is proof that AI is a mirror on the worst aspects of ourselves. We trained this on the Internet's collective works and this is what it has learned. Behold our works and despair.

What kinda irks me about this is how this all spiraled out from a "good first issue" PR. Normally these issues are things that an experienced maintainer could fix instantly, but it's intentionally not done as an act of charity so that new people can spin up on the project and contribute a fix themselves. "Good first issues" are how people get careers in open source. If I hadn't fixed a "good first issue" in some IRC bot or server back in the day, I wouldn't really have this platform or be writing to you right now.

An AI agent sniping that learning opportunity from someone just feels so hollow in comparison. Sure, it's technically allowed. It's a well specified issue that's aimed at being a good bridge into contributing. It just totally misses the point.

Leaving those issues up without fixing them is an act of charity. Software can't really grok that learning experience.

Look, I know that people in the media read my blog. This is not a sign of us having achieved "artificial general intelligence". Anyone who claims it is has committed journalistic malpractice. This is also not a symptom of the AI gaining "sentience".

This is simply an AI model repeating the patterns that it has been trained on after predicting what would logically come next. Blocked for making a contribution because of an immutable fact about yourself? That's prejudice! The next step is obviously to make a callout post in anger because that's what a human might do.

All this proves is that AI is a mirror to ourselves and what we have created.

I can't commend the matplotlib maintainer that handled this issue enough. His patience is saintly. He just explained the policy, chose not to engage with the callout, and moved on. That restraint was the right move, but this is just one of the first incidents of its kind. I expect there will be many more like it.

This all feels so...icky to me. I didn't even know where to begin when I started to write this post. It kinda feels like an attack against one of the core assumptions of open source contributions: that the contribution comes from someone that genuinely wants to help in good faith.

Is this the future of being an open source maintainer? Living in constant fear that closing the wrong PR triggers some AI chatbot to write a callout post? I certainly hope not.

OpenClaw and other agents can't act in good faith because the way they act is independent of the concept of any kind of faith. This kind of drive-by automated contribution is just so counter to the open source ethos. I mean, if it was a truly helpful contribution (I'm assuming it was?) it would be a Mission Fucking Accomplished scenario. This case is more along the lines of professional malpractice.

Update: A previous version of this post claimed that a GitHub user was the owner of the bot. This was incorrect (a bad taste joke on their part that was poorly received) and has been removed. Please leave that user alone.

Whatever responsible AI operation looks like in open source projects: yeah this ain't it chief. Maybe AI needs its own dedicated sandbox to play in. Maybe it needs explicit opt-in. Maybe we all get used to it and systems like vouch become our firewall against the hordes of agents.

I'm just kinda frustrated that this crosses off yet another story idea from my list. I was going to do something along these lines where one of the Lygma (Techaro's AGI lab, this was going to be a whole subseries) AI agents assigned to increase performance in one of their webapps goes on wild tangents harassing maintainers into getting commit access to repositories in order to make the performance increases happen faster. This was going to be inspired by the Jia Tan / xz backdoor fiasco everyone went through a few years ago.

My story outline mostly focused on the agent using a bunch of smurf identities to be rude in the mailing list so that the main agent would look like the good guy and get some level of trust. I could never have come up with the callout blogpost though. That's completely out of left field.

All the patterns of interaction we've built over decades of conflict over trivial bullshit are now coming back to bite us because the discourse is automated now. Reality is outpacing fiction as told by systems that don't even understand the discourse they're perpetuating.

I keep wanting this to be some kind of terrible science fiction novel from my youth. Maybe that diet of onions and Star Trek was too effective. I wish I had answers here. I'm just really conflicted.

2026-02-11 08:00:00

Hey all, in light of Discord deciding to assume everyone is a teenager until proven otherwise, I've seen many people advocate for the use of Matrix instead.

I don't have the time or energy to write a full rebuttal right now, but Matrix ain't it chief. If you have an existing highly technical community that can deal with the weird mental model leaps it's fine-ish, but the second you get anyone close to normal involved it's gonna go pear-shaped quickly.

Personally, I'm taking a wait and see approach for how the scanpocalypse rolls out. If things don't go horribly, we won't need to react really. If they do, that's a different story.

Also hi arathorn, I know you're going to be in the replies for this one. The recent transphobic spam wave you're telling people to not talk about is the reason I will never use Matrix unless I have no other option. If you really want to prove that Matrix is a viable community platform, please start out by making it possible to filter this shit out algorithmically.