2026-02-18 08:00:00

Hello! After spending some time working on the Git man pages last year, I’ve been thinking a little more about what makes a good man page.

I’ve spent a lot of time writing cheat sheets for tools (tcpdump, git, dig, etc.) which have a man page as their primary documentation. This is because I often find it hard to navigate man pages to get the information I want.

Lately I’ve been wondering – could the man page itself have an amazing cheat sheet in it? What might make a man page easier to use? I’m still very early in thinking about this but I wanted to write down some quick notes.

I asked some people on Mastodon for their favourite man pages, and here are some examples of interesting things I saw on those man pages.

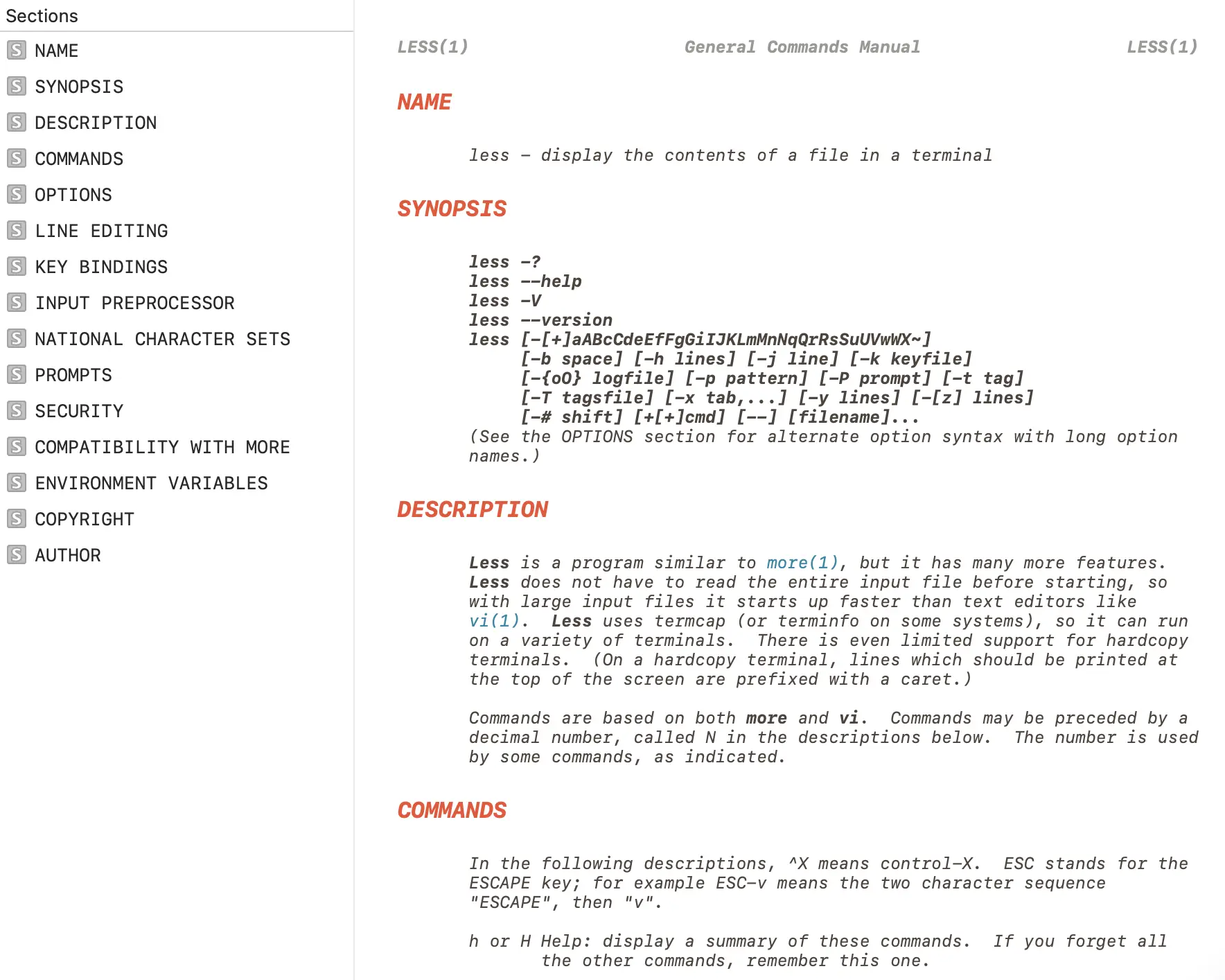

If you’ve read a lot of man pages you’ve probably seen something like this in the SYNOPSIS: once you’re listing almost the entire alphabet, it’s hard to read:

ls [-@ABCFGHILOPRSTUWabcdefghiklmnopqrstuvwxy1%,]

grep [-abcdDEFGHhIiJLlMmnOopqRSsUVvwXxZz]

The rsync man page has a solution I’ve never seen before: it keeps its SYNOPSIS very terse, like this:

Local:

rsync [OPTION...] SRC... [DEST]

and then has an “OPTIONS SUMMARY” section with a 1-line summary of each option, like this:

--verbose, -v increase verbosity

--info=FLAGS fine-grained informational verbosity

--debug=FLAGS fine-grained debug verbosity

--stderr=e|a|c change stderr output mode (default: errors)

--quiet, -q suppress non-error messages

--no-motd suppress daemon-mode MOTD

Then later there’s the usual OPTIONS section with a full description of each option.

The strace man page organizes its options by category (like “General”, “Startup”, “Tracing”, “Filtering”, and “Output Format”) instead of alphabetically.

As an experiment I tried to take the grep man page and make an “OPTIONS SUMMARY” section grouped by category; you can see the results here. I’m not sure what I think of the results, but it was a fun exercise. When I was writing that I was thinking about how I can never remember the name of the -l grep option. It always takes me what feels like forever to find it in the man page, and I was trying to think of what structure would make it easier for me to find.

Maybe categories?
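Here’s a rough sketch of what generating a category-grouped OPTIONS SUMMARY could look like, in Python. The category names are made up for illustration, the flags are real GNU grep options, and the one-line descriptions are my own paraphrases rather than grep’s wording. Everything is wrapped to 80 columns, since that’s what a man page in a terminal has to work with.

```python
import textwrap

# Hypothetical categories; the flags are real GNU grep options,
# the descriptions are paraphrased
GREP_OPTIONS = {
    "Pattern syntax": [
        ("-E, --extended-regexp", "patterns are extended regular expressions"),
        ("-F, --fixed-strings", "patterns are plain strings, not regexes"),
        ("-i, --ignore-case", "ignore case distinctions"),
    ],
    "Which lines match": [
        ("-v, --invert-match", "select lines that do NOT match"),
        ("-w, --word-regexp", "only match whole words"),
    ],
    "What gets printed": [
        ("-c, --count", "print a count of matching lines per file"),
        ("-l, --files-with-matches", "print only the names of files that match"),
        ("-n, --line-number", "prefix each line with its line number"),
        ("-o, --only-matching", "print only the matching part of each line"),
    ],
    "Context": [
        ("-A NUM", "print NUM lines after each match"),
        ("-B NUM", "print NUM lines before each match"),
        ("-C NUM", "print NUM lines before and after each match"),
    ],
}


def options_summary(categories, width=80, flag_col=26):
    """Render a man-page-style OPTIONS SUMMARY that fits in `width` columns."""
    lines = ["OPTIONS SUMMARY"]
    for category, options in categories.items():
        lines.append("")
        lines.append(f"   {category}")
        for flag, desc in options:
            # The flag gets its own padded column; long descriptions wrap
            # so that continuation lines line up under the description
            first = f"       {flag:<{flag_col}} "
            lines.append(textwrap.fill(desc, width=width,
                                       initial_indent=first,
                                       subsequent_indent=" " * len(first)))
    return "\n".join(lines)


if __name__ == "__main__":
    print(options_summary(GREP_OPTIONS))
```

With this layout, -l ends up under a “What gets printed” heading, which is the kind of grouping that might make it quicker to find.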

A couple of people pointed me to the suite of Perl man pages (perlfunc, perlre, etc.), and one thing I noticed was man perlcheat, which has cheat sheet sections like this:

SYNTAX

foreach (LIST) { } for (a;b;c) { }

while (e) { } until (e) { }

if (e) { } elsif (e) { } else { }

unless (e) { } elsif (e) { } else { }

given (e) { when (e) {} default {} }

I think this is so cool and it makes me wonder if there are other ways to write condensed ASCII 80-character-wide cheat sheets for use in man pages.

A common comment was something to the effect of “I like any man page that has examples”. Someone mentioned the OpenBSD man pages, and the openbsd tail man page has examples of the exact 2 ways I use tail at the end.

I think I’ve most often seen the EXAMPLES section at the end of the man page, but some man pages (like the rsync man page from earlier) start with the examples. When I was working on the git-add and git rebase man pages I put a short example at the beginning.

This isn’t a property of the man page itself, but one issue with man pages in the terminal is it’s hard to know what sections the man page has.
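One rough workaround is to pull the list of sections out of the plain-text man output yourself. This sketch assumes the usual rendering where section headings are unindented all-caps lines and body text is indented; that’s a heuristic, not something every man page guarantees. You could feed it the output of something like man tail | col -b, where col -b strips the backspace-based bold formatting.

```python
import re

# Heuristic: a heading is an unindented line made only of capitals,
# digits, spaces and a little punctuation (NAME, SYNOPSIS, SEE ALSO, ...)
HEADING = re.compile(r"^[A-Z][A-Z0-9 ,/&-]*$")


def man_sections(rendered):
    """Return the section headings found in plain-text man page output."""
    return [line.rstrip() for line in rendered.splitlines() if HEADING.match(line)]
```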

When working on the Git man pages, one thing Marie and I did was to add a table of contents to the sidebar of the HTML versions of the man pages hosted on the Git site.

I’d also like to add more hyperlinks to the HTML versions of the Git man pages at some point, so that you can click on “INCOMPATIBLE OPTIONS” to get to that section. It’s very easy to add links like this in the Git project since Git’s man pages are generated with AsciiDoc.

I think adding a table of contents and adding internal hyperlinks is kind of a nice middle ground where we can make some improvements to the man page format (in the HTML version of the man page at least) without maintaining a totally different form of documentation. Though for this to work you do need to set up a toolchain like Git’s AsciiDoc system.

It would be amazing if there were some kind of universal system to make it easy to look up a specific option in a man page (“what does -a do?”). The best trick I know is to use the man pager to search for something like ^ *-a, but I never remember to do it and instead just end up going through every instance of -a in the man page until I find what I’m looking for.
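The ^ *-a search can be written down as a small function, which makes the idea a bit more explicit: only match the option where it starts a line (after indentation), not every mention of it in the prose. This is a hypothetical helper, and it assumes the common layout where options are listed like -a, --all at the start of an indented line.

```python
import re


def option_definitions(manpage_text, option):
    """Line numbers where `option` starts a line, like `/^ *-a` in less.

    Also allows one short option in front of it, as in "-a, --all",
    so that long options can be found too.
    """
    pattern = re.compile(r"^\s*(?:-\w,\s*)?" + re.escape(option) + r"(?![\w-])")
    return [n for n, line in enumerate(manpage_text.splitlines(), start=1)
            if pattern.match(line)]
```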

The curl man page has examples for every option, and there’s also a table of contents on the HTML version so you can more easily jump to the option you’re interested in.

For instance the example for --cert makes it easy to see that you likely also want to pass the --key option, like this:

curl --cert certfile --key keyfile https://example.com

The way they implement this is that there’s [one file for each option](https://github.com/curl/curl/blob/dc08922a61efe546b318daf964514ffbf4158325/docs/cmdline-opts/append.md) and there’s an “Example” field in that file.

Quite a few people said that man ascii was their favourite man page, which looks like this:

Oct Dec Hex Char

───────────────────────────────────────────

000 0 00 NUL '\0' (null character)

001 1 01 SOH (start of heading)

002 2 02 STX (start of text)

003 3 03 ETX (end of text)

004 4 04 EOT (end of transmission)

005 5 05 ENQ (enquiry)

006 6 06 ACK (acknowledge)

007 7 07 BEL '\a' (bell)

010 8 08 BS '\b' (backspace)

011 9 09 HT '\t' (horizontal tab)

012 10 0A LF '\n' (new line)

Obviously man ascii is an unusual man page but I think what’s cool about this man page (other than the fact that it’s always useful to have an ASCII reference) is it’s very easy to scan to find the information you need because of the table format. It makes me wonder if there are more opportunities to display information in a “table” in a man page to make it easier to scan.
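As a tiny experiment in the “tables are easy to scan” direction, here’s a sketch that generates rows in a similar Oct/Dec/Hex/Char layout. The control-character abbreviations are the standard ASCII ones; labelling 32 as SPACE is my own choice, and the real man ascii has more columns and extra descriptions.

```python
# Standard abbreviations for the 32 ASCII control characters (0-31)
CONTROL_NAMES = (
    "NUL SOH STX ETX EOT ENQ ACK BEL BS HT LF VT FF CR SO SI "
    "DLE DC1 DC2 DC3 DC4 NAK SYN ETB CAN EM SUB ESC FS GS RS US"
).split()


def char_name(code):
    """Printable name for an ASCII code point (0-127)."""
    if code < 32:
        return CONTROL_NAMES[code]
    if code == 32:
        return "SPACE"
    if code == 127:
        return "DEL"
    return chr(code)


def ascii_table(start=0, stop=128):
    """Oct/Dec/Hex/Char rows with the columns lined up under the header."""
    rows = ["Oct   Dec   Hex   Char", "-" * 22]
    for code in range(start, stop):
        rows.append(f"{code:03o}   {code:3d}   {code:02X}    {char_name(code)}")
    return "\n".join(rows)
```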

When I talk about man pages it often comes up that the GNU coreutils man pages (for example man tail) don’t have examples, unlike the OpenBSD man pages, which do have examples.

I’m not going to get into this too much because it seems like a fairly political topic and I definitely can’t do it justice here, but here are some things I believe to be true:

info tool. I’ve heard from some Emacs users that they like the Emacs info browser. I don’t think I’ve ever talked to anyone who uses the standalone info tool.

After a certain level of complexity a man page gets really hard to navigate: while I’ve never used the coreutils info manual and probably won’t, I would almost certainly prefer to use the GNU Bash reference manual or The GNU C Library Reference Manual via their HTML documentation rather than through a man page.

Here are some tools I think are interesting:

tldr grep. Lots of people have told me they find it useful.

Man pages are such a constrained format and it’s fun to think about what you can do with such limited formatting options.

Even though I’m very into writing, I’ve always had a bad habit of never reading documentation, so it’s a little bit hard for me to think about what I actually find useful in man pages. I’m not sure whether I think most of the things in this post would improve my experience or not. (Except for examples. I LOVE examples.)

So I’d be interested to hear about other man pages that you think are well designed and what you like about them. The comments section is here.

2026-01-27 08:00:00

Hello! One of my favourite things is starting to learn an Old Boring Technology that I’ve never tried before but that has been around for 20+ years. It feels really good when every problem I’m ever going to have has been solved already 1000 times and I can just get stuff done easily.

I’ve thought it would be cool to learn a popular web framework like Rails or Django or Laravel for a long time, but I’d never really managed to make it happen. But I started learning Django to make a website a few months back, I’ve been liking it so far, and here are a few quick notes!

I spent some time trying to learn Rails in 2020, and while it was cool and I really wanted to like Rails (the Ruby community is great!), I found that if I left my Rails project alone for months, when I came back to it, it was hard for me to remember how to get anything done. For example, if it says resources :topics in your routes.rb, on its own that doesn’t tell you where the topics routes are configured; you need to remember or look up the convention.

Being able to abandon a project for months or years and then come back to it is really important to me (that’s how all my projects work!), and Django feels easier to me because things are more explicit.

In my small Django project it feels like I just have 5 main files (other than the settings files): urls.py, models.py, views.py, admin.py, and tests.py, and if I want to know where something else is (like an HTML template), then it’s usually explicitly referenced from one of those files.

For this project I wanted to have an admin interface to manually edit or view some of the data in the database. Django has a really nice built-in admin interface, and I can customize it with just a little bit of code.

For example, here’s part of one of my admin classes, which sets up which fields to display in the “list” view, which fields to search on, and how to order them by default.

from django.contrib import admin

from .models import Zine

@admin.register(Zine)
class ZineAdmin(admin.ModelAdmin):
    list_display = ["name", "publication_date", "free", "slug", "image_preview"]
    search_fields = ["name", "slug"]
    readonly_fields = ["image_preview"]
    ordering = ["-publication_date"]

In the past my attitude has been “ORMs? Who needs them? I can just write my own SQL queries!”.

I’ve been enjoying Django’s ORM so far though, and I think it’s cool how Django uses __ to represent a JOIN, like this:

Zine.objects.exclude(product__order__email_hash=email_hash)

This query involves 5 tables: zines, zine_products, products, order_products, and orders.

To make this work I just had to tell Django that there’s a ManyToManyField relating “orders” and “products”, and another ManyToManyField relating “zines” and “products”, so that it knows how to connect zines, orders, and products.

I definitely could write that query, but writing product__order__email_hash is a lot less typing, it feels a lot easier to read, and honestly I think it would take me a little while to figure out how to construct the query (which needs to do a few other things besides those joins).
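To get a feel for how much the __ syntax is saving, here’s a rough hand-written version of that exclude, run against SQLite from Python. The five table names come from the post, but the column names are my guesses, and this is only roughly equivalent: it is not the SQL Django actually generates (Django names its join tables and aliases differently), and it leaves out the other things the real query does.

```python
import sqlite3

# Assumed schema: table names from the post, column names are guesses
SCHEMA = """
CREATE TABLE zines    (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders   (id INTEGER PRIMARY KEY, email_hash TEXT NOT NULL);
-- one join table per many-to-many relationship
CREATE TABLE zine_products  (zine_id    INTEGER NOT NULL REFERENCES zines(id),
                             product_id INTEGER NOT NULL REFERENCES products(id));
CREATE TABLE order_products (order_id   INTEGER NOT NULL REFERENCES orders(id),
                             product_id INTEGER NOT NULL REFERENCES products(id));
"""

# Hand-written counterpart of
#   Zine.objects.exclude(product__order__email_hash=email_hash)
# i.e. every zine that is NOT part of a product ordered with this email hash
QUERY = """
SELECT z.name
FROM zines z
WHERE z.id NOT IN (
    SELECT zp.zine_id
    FROM zine_products zp
    JOIN products p        ON p.id = zp.product_id
    JOIN order_products op ON op.product_id = p.id
    JOIN orders o          ON o.id = op.order_id
    WHERE o.email_hash = ?
)
ORDER BY z.name
"""


def zines_not_ordered_by(conn, email_hash):
    """Names of zines that no order with `email_hash` included."""
    return [name for (name,) in conn.execute(QUERY, (email_hash,))]
```

That’s four JOINs and a subquery for what the ORM expresses as one keyword argument.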

I have zero concern about the performance of my ORM-generated queries so I’m pretty excited about ORMs for now, though I’m sure I’ll find things to be frustrated with eventually.

The other great thing about the ORM is migrations!

If I add, delete, or change a field in models.py, Django will automatically generate a migration script like migrations/0006_delete_imageblob.py.

I assume that I could edit those scripts if I wanted, but so far I’ve just been running the generated scripts with no change and it’s been going great. It really feels like magic.

I’m realizing that being able to do migrations easily is important for me right now because I’m changing my data model fairly often as I figure out how I want it to work.

I had a bad habit of never reading the documentation but I’ve been really enjoying the parts of Django’s docs that I’ve read so far. This isn’t by accident: Jacob Kaplan-Moss has a talk from PyCon 2011 on Django’s documentation culture.

For example the intro to models lists the most important common fields you might want to set when using the ORM.

After having a bad experience trying to operate Postgres and not being able to understand what was going on, I decided to run all of my small websites with SQLite instead. It’s been going way better, and I love being able to back up by just doing a VACUUM INTO and then copying the resulting single file.
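For reference, a VACUUM INTO backup is just one SQL statement. Here’s a minimal sketch using Python’s built-in sqlite3 module; backup_sqlite is a hypothetical helper name, and the existence check is there because SQLite refuses to VACUUM INTO a file that already exists and isn’t empty.

```python
import sqlite3
from pathlib import Path


def backup_sqlite(db_path, backup_path):
    """Write a consistent, compacted single-file copy of db_path."""
    if Path(backup_path).exists():
        # VACUUM INTO fails if the target already exists and isn't empty
        raise FileExistsError(backup_path)
    conn = sqlite3.connect(db_path)
    try:
        # The INTO argument is an SQL expression, so a bound parameter works
        conn.execute("VACUUM INTO ?", (str(backup_path),))
    finally:
        conn.close()
```

The resulting file is an ordinary SQLite database, so it can be copied anywhere as-is.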

I’ve been following these instructions for using SQLite with Django in production.

I think it should be fine because I’m expecting the site to have a few hundred writes per day at most, much less than Mess with DNS, which has a lot more writes and has been working well (though the writes are split across 3 different SQLite databases).

Django seems to be very “batteries-included”, which I love – if I want CSRF protection, or a Content-Security-Policy, or I want to send email, it’s all in there!

For example, I wanted to save the emails Django sends to a file in dev mode (so that it didn’t send real email to real people), which was just a little bit of configuration.

I just put this in settings/dev.py:

EMAIL_BACKEND = "django.core.mail.backends.filebased.EmailBackend"

EMAIL_FILE_PATH = BASE_DIR / "emails"

and then set up the production email like this in settings/production.py:

import os

EMAIL_BACKEND = "django.core.mail.backends.smtp.EmailBackend"

EMAIL_HOST = "smtp.whatever.com"

EMAIL_PORT = 587

EMAIL_USE_TLS = True

EMAIL_HOST_USER = "xxxx"

EMAIL_HOST_PASSWORD = os.getenv('EMAIL_API_KEY')

That made me feel like if I want some other basic website feature, there’s likely to be an easy way to do it built into Django already.

I’m still a bit intimidated by the settings.py file: Django’s settings system works by setting a bunch of global variables in a file, and I feel a bit stressed about… what if I make a typo in the name of one of those variables? How will I know? What if I type WSGI_APPLICATOIN = "config.wsgi.application" instead of WSGI_APPLICATION?

I guess I’ve gotten used to having a Python language server tell me when I’ve made a typo and so now it feels a bit disorienting when I can’t rely on the language server support.
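As far as I know, Django doesn’t complain about an unknown setting name: any UPPERCASE name in the settings module is just treated as a setting. One rough way to catch typos yourself is to compare your names against known ones with difflib from the standard library. This is a hypothetical helper, not a Django feature, and KNOWN_SETTINGS is a small hand-picked sample; a fuller list could probably be built from the UPPERCASE names in django.conf.global_settings, though that isn’t tested here.

```python
import difflib

# A small hand-picked sample of real Django setting names (assumption:
# a real check would want the full list)
KNOWN_SETTINGS = [
    "WSGI_APPLICATION", "ROOT_URLCONF", "INSTALLED_APPS", "MIDDLEWARE",
    "DATABASES", "EMAIL_BACKEND", "EMAIL_HOST", "EMAIL_PORT",
    "EMAIL_USE_TLS", "EMAIL_HOST_USER", "EMAIL_HOST_PASSWORD",
    "EMAIL_FILE_PATH",
]


def suspicious_settings(names, known=KNOWN_SETTINGS):
    """Map each unknown UPPERCASE name to its closest known setting (or None)."""
    known_set = set(known)
    report = {}
    for name in names:
        if name.isupper() and name not in known_set:
            close = difflib.get_close_matches(name, known, n=1, cutoff=0.8)
            report[name] = close[0] if close else None
    return report
```

You could run it over dir() of your settings module. Settings you define on purpose, like BASE_DIR, would also show up (with None as the suggestion), so you’d want to skip those.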

I haven’t really successfully used an actual web framework for a project before (right now almost all of my websites are either a single Go binary or static sites), so I’m interested in seeing how it goes!

There’s still lots for me to learn about: I still haven’t really gotten into Django’s form validation tooling or authentication systems.

Thanks to Marco Rogers for convincing me to give ORMs a chance.

(we’re still experimenting with the comments-on-Mastodon system! Here are the comments on Mastodon! tell me your favourite Django feature!)

2026-01-08 08:00:00

Hello! This past fall, I decided to take some time to work on Git’s documentation. I’ve been thinking about working on open source docs for a long time – usually if I think the documentation for something could be improved, I’ll write a blog post or a zine or something. But this time I wondered: could I instead make a few improvements to the official documentation?

So Marie and I made a few changes to the Git documentation!

After working on the documentation for a while, we noticed that Git uses the terms “object”, “reference”, and “index” a lot in its documentation, but that it didn’t have a great explanation of what those terms mean or how they relate to other core concepts like “commit” and “branch”. So we wrote a new “data model” document!

You can read the data model here for now. I assume at some point (after the next release?) it’ll also be on the Git website.

I’m excited about this because understanding how Git organizes its commit and branch data has really helped me reason about how Git works over the years, and I think it’s important to have a short (1600 words!) version of the data model that’s accurate.
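As a small taste of what a data model like this covers (this example isn’t taken from the new document): every file’s contents are stored as a “blob” object, and the object’s ID is the SHA-1 of a short header plus the contents. This sketch assumes a repository using the default SHA-1 object format (Git can also use SHA-256); you can compare its output with git hash-object on a file.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Object ID Git assigns to these file contents (SHA-1 object format)."""
    # Header is: object type, a space, the size in bytes, then a NUL byte
    header = b"blob " + str(len(content)).encode() + b"\0"
    return hashlib.sha1(header + content).hexdigest()


if __name__ == "__main__":
    print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391, the empty blob
```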

The “accurate” part turned out to not be that easy: I knew the basics of how Git’s data model worked, but during the review process I learned some new details and had to make quite a few changes (for example how merge conflicts are stored in the staging area).

git push, git pull, and more

I also worked on updating the introduction to some of Git’s core man pages. I quickly realized that “just try to improve it according to my best judgement” was not going to work: why should the maintainers believe me that my version is better?

I’ve seen a problem a lot when discussing open source documentation changes where 2 expert users of the software argue about whether an explanation is clear or not (“I think X would be a good way to explain it! Well, I think Y would be better!”)

I don’t think this is very productive (expert users of a piece of software are notoriously bad at being able to tell if an explanation will be clear to non-experts), so I needed to find a way to identify problems with the man pages that was a little more evidence-based.

I asked for test readers on Mastodon to read the current version of documentation and tell me what they find confusing or what questions they have. About 80 test readers left comments, and I learned so much!

People left a huge amount of great feedback, for example:

Most of the test readers had been using Git for at least 5-10 years, which I think worked well – if a group of test readers who have been using Git regularly for 5+ years find a sentence or term impossible to understand, it makes it easy to argue that the documentation should be updated to make it clearer.

I thought this “get users of the software to comment on the existing documentation and then fix the problems they find” pattern worked really well and I’m excited about potentially trying it again in the future.

We ended up updating these 4 man pages:

git add (before, after)

git checkout (before, after)

git push (before, after)

git pull (before, after)

The git push and git pull changes were the most interesting to me: in addition to updating the intro to those pages, we also ended up writing:

Making those changes really gave me an appreciation for how much work it is to maintain open source documentation: it’s not easy to write things that are both clear and true, and sometimes we had to make compromises. For example, the sentence “git push may fail if you haven’t set an upstream for the current branch, depending on what push.default is set to.” is a little vague, but the exact details of what “depending” means are really complicated and untangling that is a big project.

It took me a while to understand Git’s development process. I’m not going to try to describe it here (that could be a whole other post!), but a few quick notes:

I also found the mailing list archives on lore.kernel.org hard to navigate, so I hacked together my own git list viewer to make it easier to read the long mailing list threads.

Many people helped me navigate the contribution process and review the changes: thanks to Emily Shaffer, Johannes Schindelin (the author of GitGitGadget), Patrick Steinhardt, Ben Knoble, Junio Hamano, and more.

(I’m experimenting with comments on Mastodon, you can see the comments here)

2025-10-10 08:00:00

Hello! Earlier this summer I was talking to a friend about how much I love using fish, and how I love that I don’t have to configure it. They said that they feel the same way about the helix text editor, and so I decided to give it a try.

I’ve been using it for 3 months now and here are a few notes.

I think what motivated me to try Helix is that I’ve been trying to get a working language server setup (so I can do things like “go to definition”) and getting a setup that feels good in Vim or Neovim just felt like too much work.

After using Vim/Neovim for 20 years, I’ve tried both “build my own custom configuration from scratch” and “use someone else’s pre-built configuration system”, and even though I love Vim, I was excited about having things just work without having to work on my configuration at all.

Helix comes with built in language server support, and it feels nice to be able to do things like “rename this symbol” in any language.

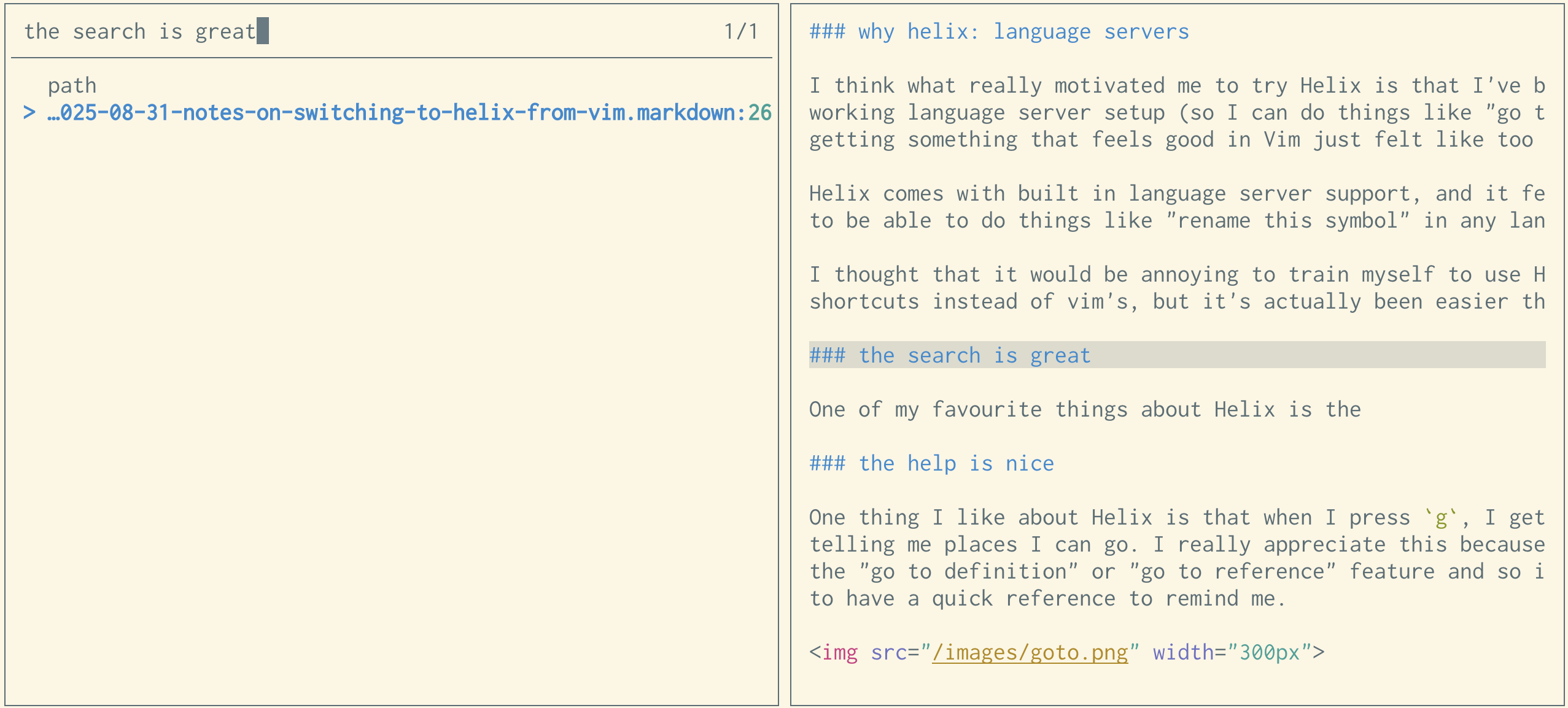

One of my favourite things about Helix is the search! If I’m searching all the files in my repository for a string, it lets me scroll through the potential matching files and see the full context of the match, like this:

For comparison, here’s what the vim ripgrep plugin I’ve been using looks like:

There’s no context for what else is around that line.

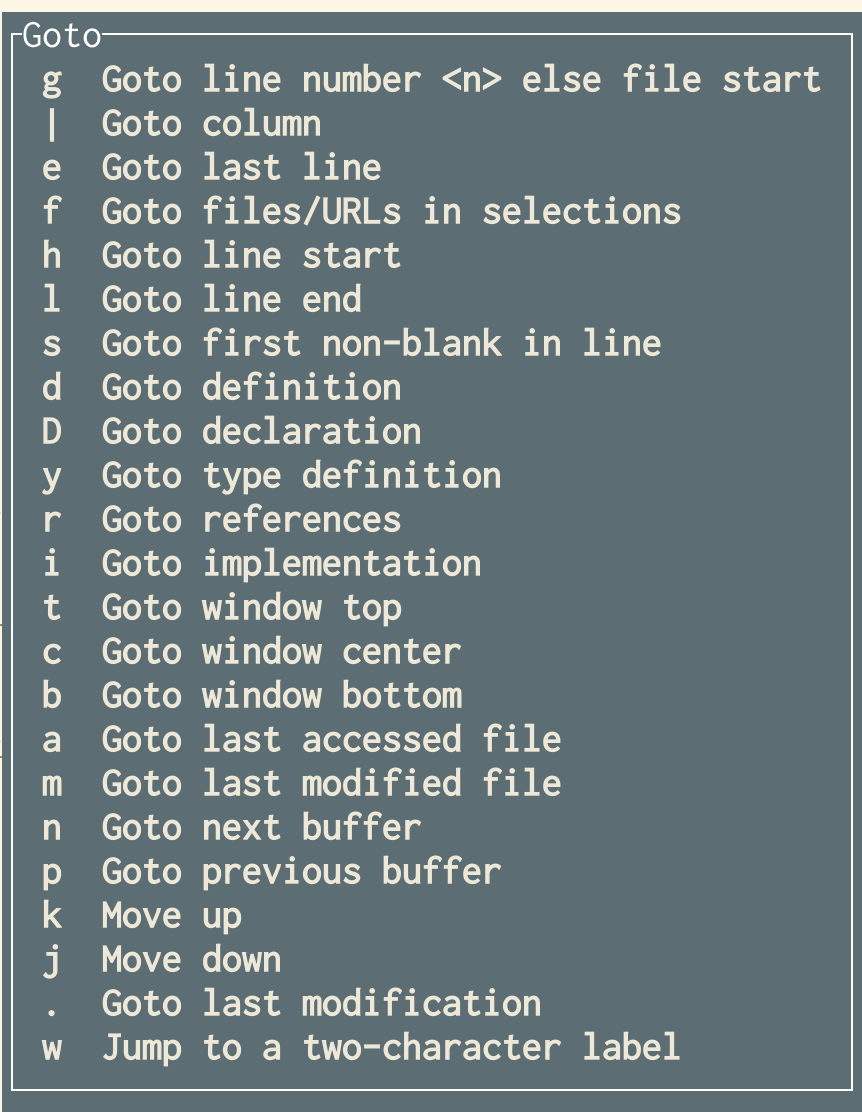

One thing I like about Helix is that when I press g, I get a little help popup telling me places I can go. I really appreciate this because I don’t often use the “go to definition” or “go to reference” feature and I often forget the keyboard shortcut.

ma, 'a, instead I’ve been using Ctrl+O and Ctrl+I to go back (or forward) to the last cursor location

% (to highlight everything), then s to select (with a regex) the things I want to change, then I can just edit all of them as needed.

<space>b) I can use to switch to the buffer I want. There’s a pull request here to implement neovim-style tabs.

There’s also a setting bufferline="multiple" which can act a bit like tabs with gp, gn for prev/next “tab” and :bc to close a “tab”.

Here’s everything that’s annoyed me about Helix so far.

:reflow works much less than how vim reflows text with gq. It doesn’t work as well with lists. (github issue)

:reload-all (:ra<tab>) to manually reload them. Not a big deal.

The “markdown list” and reflowing issues come up a lot for me because I spend a lot of time editing Markdown lists, but I keep using Helix anyway so I guess they can’t be making me that mad.
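For anyone who hasn’t hit the list problem: what you generally want from reflowing a Markdown list is to keep each item’s marker and wrap the continuation lines under the item’s text. Here’s a rough sketch of that behaviour using Python’s textwrap. It’s a hypothetical helper, not how Helix or Vim implement reflowing, and it drops blank lines and only knows about -, *, + and numbered markers.

```python
import re
import textwrap

# "-", "*", "+" or "1." / "1)" at the start of a line, after optional indentation
LIST_MARKER = re.compile(r"^(\s*)([-*+]|\d+[.)])\s+")


def reflow_markdown_list(text, width=80):
    """Rewrap each list item, keeping its marker and a hanging indent."""
    items = []  # (prefix, body text) pairs
    for line in text.splitlines():
        if not line.strip():
            continue
        m = LIST_MARKER.match(line)
        if m:
            # A marker starts a new item
            items.append((m.group(1) + m.group(2) + " ", line[m.end():]))
        elif items:
            # Any other line continues the current item
            prefix, body = items[-1]
            items[-1] = (prefix, body + " " + line.strip())
        else:
            items.append(("", line.strip()))
    return "\n".join(
        textwrap.fill(body, width=width, initial_indent=prefix,
                      subsequent_indent=" " * len(prefix))
        for prefix, body in items
    )
```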

I was worried that relearning 20 years of Vim muscle memory would be really hard.

It turned out to be easier than I expected: I started using Helix on a vacation for a little low-stakes coding project I was doing on the side, and after a week or two it didn’t feel so disorienting anymore. I think it might be hard to switch back and forth between Vim and Helix, but I haven’t needed to use Vim recently so I don’t know if that’ll ever become an issue for me.

The first time I tried Helix I tried to force it to use keybindings that were more similar to Vim and that did not work for me. Just learning the “Helix way” was a lot easier.

There are still some things that throw me off: for example w in vim and w in Helix don’t have the same idea of what a “word” is (the Helix one includes the space after the word, the Vim one doesn’t).

For many years I’d mostly been using a GUI version of vim/neovim, so switching to actually using an editor in the terminal was a bit of an adjustment.

I ended up deciding on:

It works pretty well, I might actually like it better than my previous workflow.

I appreciate that my configuration is really simple, compared to my neovim configuration which is hundreds of lines. It’s mostly just 4 keyboard shortcuts.

theme = "solarized_light"

[editor]

# Sync clipboard with system clipboard

default-yank-register = "+"

[keys.normal]

# I didn't like that Ctrl+C was the default "toggle comments" shortcut

"#" = "toggle_comments"

# I didn't feel like learning a different way

# to go to the beginning/end of a line so

# I remapped ^ and $

"^" = "goto_first_nonwhitespace"

"$" = "goto_line_end"

[keys.select]

"^" = "goto_first_nonwhitespace"

"$" = "goto_line_end"

[keys.normal.space]

# I write a lot of text so I need to constantly reflow,

# and missed vim's `gq` shortcut

l = ":reflow"

There’s a separate languages.toml configuration where I set some language preferences, like turning off autoformatting.

For example, here’s my Python configuration:

[[language]]

name = "python"

formatter = { command = "black", args = ["--stdin-filename", "%{buffer_name}", "-"] }

language-servers = ["pyright"]

auto-format = false

Three months is not that long, and it’s possible that I’ll decide to go back to Vim at some point. For example, I wrote a post about switching to nix a while back but after maybe 8 months I switched back to Homebrew (though I’m still using NixOS to manage one little server, and I’m still satisfied with that).

2025-06-26 08:00:00

Hello! After many months of writing deep dive blog posts about the terminal, on Tuesday I released a new zine called “The Secret Rules of the Terminal”!

You can get it for $12 here: https://wizardzines.com/zines/terminal, or get a 15-pack of all my zines here.

Here’s the cover:

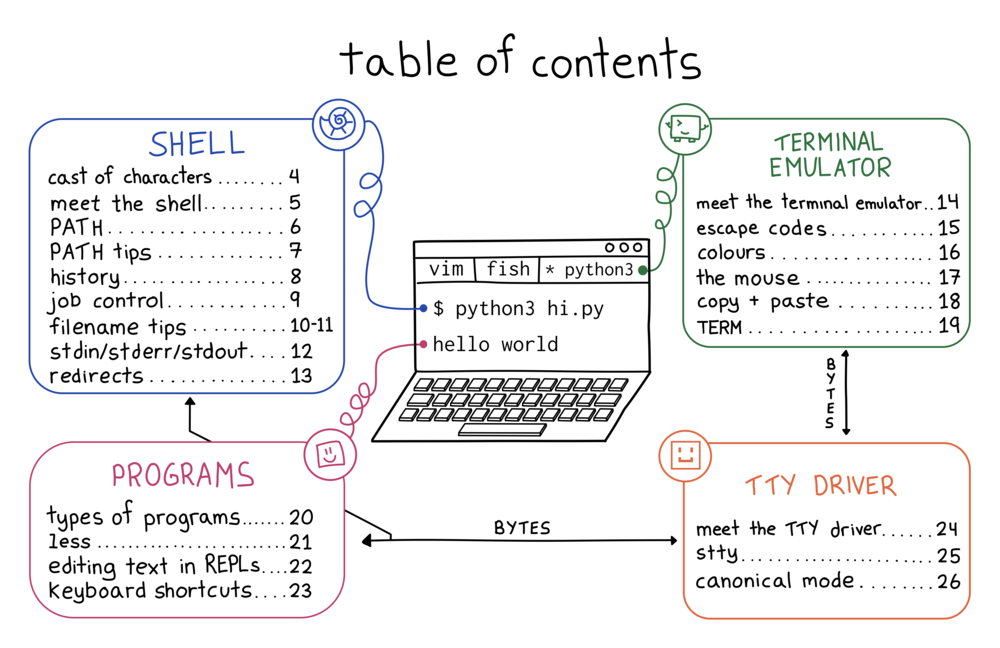

Here’s the table of contents:

I’ve been using the terminal every day for 20 years but even though I’m very confident in the terminal, I’ve always had a bit of an uneasy feeling about it. Usually things work fine, but sometimes something goes wrong and it just feels like investigating it is impossible, or at least like it would open up a huge can of worms.

So I started trying to write down a list of weird problems I’ve run into in the terminal and I realized that the terminal has a lot of tiny inconsistencies like:

^[[D

If you use the terminal daily for 10 or 20 years, even if you don’t understand exactly why these things happen, you’ll probably build an intuition for them.

But having an intuition for them isn’t the same as understanding why they happen. When writing this zine I actually had to do a lot of work to figure out exactly what was happening in the terminal to be able to talk about how to reason about it.

It turns out that the “rules” for how the terminal works (how do you edit a command you type in? how do you quit a program? how do you fix your colours?) are extremely hard to fully understand, because “the terminal” is actually made of many different pieces of software (your terminal emulator, your operating system, your shell, the core utilities like grep, and every other random terminal program you’ve installed) which are written by different people with different ideas about how things should work.
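One small example of these pieces interacting: lots of programs check whether their output is a terminal and behave differently (colours, buffering) depending on the answer, which is also why unbuffer works by running a program attached to a pseudo-terminal. Here’s a Unix-only Python sketch that runs the same one-liner once with its output going to a pipe and once with it going to a pseudo-terminal; run_with_pty is a hypothetical helper name.

```python
import os
import pty
import subprocess
import sys


def run_with_pty(argv):
    """Run argv with stdout/stderr attached to a pseudo-terminal; return its output."""
    master, slave = pty.openpty()
    proc = subprocess.Popen(argv, stdin=subprocess.DEVNULL, stdout=slave, stderr=slave)
    os.close(slave)  # only the child should hold the terminal side open now
    chunks = []
    while True:
        try:
            data = os.read(master, 1024)
        except OSError:  # Linux raises EIO once the child has closed its end
            break
        if not data:
            break
        chunks.append(data)
    proc.wait()
    os.close(master)
    return b"".join(chunks).decode()


# A one-liner that reports whether its stdout is a terminal
CHECK = [sys.executable, "-c", "import sys; print(sys.stdout.isatty())"]

if __name__ == "__main__":
    print(subprocess.run(CHECK, capture_output=True, text=True).stdout.strip())  # False
    print(run_with_pty(CHECK).strip())  # True
```

Same program, same code, different behaviour, purely because of what it’s connected to.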

So I wanted to write something that would explain:

Terminal internals are a mess. A lot of it is just the way it is because someone made a decision in the 80s and now it’s impossible to change, and honestly I don’t think learning everything about terminal internals is worth it.

But some parts are not that hard to understand and can really make your experience in the terminal better, like:

cating a binary to stdout messes up your terminal, you can just type reset and move on

When I wrote How Git Works, I thought I knew how Git worked, and I was right. But the terminal is different. Even though I feel totally confident in the terminal and even though I’ve used it every day for 20 years, I had a lot of misunderstandings about how the terminal works and (unless you’re the author of tmux or something) I think there’s a good chance you do too.

A few things I learned that are actually useful to me:

reset works under the hood (it does the equivalent of stty sane; sleep 1; tput reset) – basically I learned that I don’t ever need to worry about remembering stty sane or tput reset and I can just run reset instead

unbuffer program > out; less out)

sqlite3 are so annoying to use (they use libedit instead of readline)

As usual these days I wrote a bunch of blog posts about various side quests:

terminfo database is serving us well today

A long time ago I used to write zines mostly by myself but with every project I get more and more help. I met with Marie Claire LeBlanc Flanagan every weekday from September to June to work on this one.

The cover is by Vladimir Kašiković, Lesley Trites did copy editing, Simon Tatham (who wrote PuTTY) did technical review, our Operations Manager Lee did the transcription as well as a million other things, and Jesse Luehrs (who is one of the very few people I know who actually understands the terminal’s cursed inner workings) had so many incredibly helpful conversations with me about what is going on in the terminal.

Here are some links to get the zine again:

As always, you can get either a PDF version to print at home or a print version shipped to your house. The only caveat is print orders will ship in August – I need to wait for orders to come in to get an idea of how many I should print before sending it to the printer.

2025-06-10 08:00:00

I have never been a C programmer but every so often I need to compile a C/C++ program from source. This has been kind of a struggle for me: for a long time, my approach was basically “install the dependencies, run make, if it doesn’t work, either try to find a binary someone has compiled or give up”.

“Hope someone else has compiled it” worked pretty well when I was running Linux but since I’ve been using a Mac for the last couple of years I’ve been running into more situations where I have to actually compile programs myself.

So let’s talk about what you might have to do to compile a C program! I’ll use a couple of examples of specific C programs I’ve compiled and talk about a few things that can go wrong. Here are three programs we’ll be talking about compiling:

This is pretty simple: on an Ubuntu system if I don’t already have a C compiler I’ll install one with:

sudo apt-get install build-essential

This installs gcc, g++, and make. The situation on a Mac is more confusing but it’s something like “install the Xcode command line tools” (xcode-select --install).

Unlike some newer programming languages, C doesn’t have a dependency manager. So if a program has any dependencies, you need to hunt them down yourself. Thankfully because of this, C programmers usually keep their dependencies very minimal and often the dependencies will be available in whatever package manager you’re using.

There’s almost always a section explaining how to get the dependencies in the README, for example in paperjam’s README, it says:

To compile PaperJam, you need the headers for the libqpdf and libpaper libraries (usually available as libqpdf-dev and libpaper-dev packages).

You may need a2x (found in AsciiDoc) for building manual pages.

So on a Debian-based system you can install the dependencies like this:

sudo apt install -y libqpdf-dev libpaper-dev

If a README gives a name for a package (like libqpdf-dev), I’d basically always assume that they mean “in a Debian-based Linux distro”: if you’re on a Mac, brew install libqpdf-dev will not work. I still have not 100% gotten the hang of developing on a Mac, so I don’t have many tips there yet. I guess in this case it would be brew install qpdf if you’re using Homebrew.

./configure (if needed)

Some C programs come with a Makefile and some instead come with a script called ./configure. For example, if you download sqlite’s source code, it has a ./configure script in it instead of a Makefile.

My understanding of this ./configure script is:

Makefile or fails because you’re missing some dependency

The ./configure script is part of a system called autotools that I have never needed to learn anything about beyond “run it to generate a Makefile”.

I think there might be some options you can pass to get the ./configure script to produce a different Makefile but I have never done that.

make

The next step is to run make to try to build a program. Some notes about make:

make -j8 to parallelize the build and make it go faster

Here’s an error I got while compiling paperjam on my Mac:

/opt/homebrew/Cellar/qpdf/12.0.0/include/qpdf/InputSource.hh:85:19: error: function definition does not declare parameters

85 | qpdf_offset_t last_offset{0};

| ^

Over the years I’ve learned it’s usually best not to overthink problems like this: if it’s talking about qpdf, there’s a good chance it just means that I’ve done something wrong with how I’m including the qpdf dependency.

Now let’s talk about some ways to get the qpdf dependency included in the right way.

Before we talk about how to fix dependency problems: building C programs is split into 2 steps:

Compiling the source code into object files (with a compiler like gcc or clang)

Linking the object files together into the final program (with a linker like ld)

It’s important to know this when building a C program because sometimes you need to pass the right flags to the compiler and linker to tell them where to find the dependencies for the program you’re compiling.
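To make the two steps concrete, here’s a sketch of the command lines involved, written as a hypothetical Python helper that only builds the argument lists (you could pass each one to subprocess.run). The file names are made up; the flags (-c, -I, -L, -l) are the standard gcc/clang ones, and in practice the compiler driver (cc, or c++ in the error below) runs ld for you during the link step.

```python
from pathlib import Path


def build_commands(sources, output, include_dirs=(), lib_dirs=(), libs=(), cc="cc"):
    """Hypothetical helper: argv lists to compile each source, then link them."""
    commands, objects = [], []
    for src in sources:
        obj = str(Path(src).with_suffix(".o"))
        objects.append(obj)
        # Step 1 (compiler): -c means "compile only"; -I adds a header search path
        commands.append([cc, *[f"-I{d}" for d in include_dirs], "-c", src, "-o", obj])
    # Step 2 (linker): -L adds a library search path, -lNAME links libNAME
    commands.append([cc, "-o", output, *objects,
                     *[f"-L{d}" for d in lib_dirs], *[f"-l{lib}" for lib in libs]])
    return commands
```

If the -I path is missing, step 1 can’t find the headers; if the -L path is missing, step 2 fails with an error like the “library not found” one below.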

make uses environment variables to configure the compiler and linker

If I run make on my Mac to install paperjam, I get this error:

c++ -o paperjam paperjam.o pdf-tools.o parse.o cmds.o pdf.o -lqpdf -lpaper

ld: library 'qpdf' not found

This is not because qpdf is not installed on my system (it actually is!). But the compiler and linker don’t know how to find the qpdf library. To fix this, we need to:

pass "-I/opt/homebrew/include" to the compiler (to tell it where to find the header files)

pass "-L/opt/homebrew/lib -liconv" to the linker (to tell it where to find library files and to link in iconv)

And we can get make to pass those extra parameters to the compiler and linker using environment variables!

To see how this works: inside paperjam’s Makefile you can see a bunch of environment variables, like LDLIBS here:

```
paperjam: $(OBJS)
	$(LD) -o $@ $^ $(LDLIBS)
```

Everything you put into the LDLIBS environment variable gets passed to the linker (ld) as a command line argument.

CPPFLAGS

Makefiles sometimes define their own environment variables that they pass to the compiler/linker, but make also has a bunch of “implicit” environment variables which it will automatically pass to the C compiler and linker. There’s a full list of implicit environment variables here, but one of them is CPPFLAGS, which gets automatically passed to the C and C++ compilers.

(technically it would be more normal to use CXXFLAGS for this, but this particular Makefile hardcodes CXXFLAGS so setting CPPFLAGS was the only way I could find to set the compiler flags without editing the Makefile)

make

I learned thanks to @zwol that there are actually two ways to pass environment variables to make:

- CXXFLAGS=xyz make (the usual way)
- make CXXFLAGS=xyz

The difference between them is that make CXXFLAGS=xyz will override the value of CXXFLAGS set in the Makefile but CXXFLAGS=xyz make won’t.

I’m not sure which way is the norm but I’m going to use the first way in this post.

CPPFLAGS and LDLIBS to fix this compiler error

Now that we’ve talked about how CPPFLAGS and LDLIBS get passed to the compiler and linker, here’s the final incantation that I used to get the program to build successfully!

```
CPPFLAGS="-I/opt/homebrew/include" LDLIBS="-L/opt/homebrew/lib -liconv" make paperjam
```

This passes -I/opt/homebrew/include to the compiler and -L/opt/homebrew/lib -liconv to the linker.

Also I don’t want to pretend that I “magically” knew that those were the right arguments to pass: figuring them out involved a bunch of confused Googling that I skipped over in this post. I will say that:

- The -I compiler flag tells the compiler which directory to find header files in, like /opt/homebrew/include/qpdf/QPDF.hh
- The -L linker flag tells the linker which directory to find libraries in, like /opt/homebrew/lib/libqpdf.a
- The -l linker flag tells the linker which libraries to link in, like -liconv means “link in the iconv library”, or -lm means “link math”

make $FILENAME

Yesterday I discovered this cool tool called qf which you can use to quickly open files from the output of ripgrep.

qf is in a big directory of various tools, but I only wanted to compile qf.

So I just compiled qf, like this:

```
make qf
```

Basically if you know (or can guess) the output filename of the file you’re trying to build, you can tell make to just build that file by running make $FILENAME.

I sometimes write 5-line C programs with no dependencies, and I just learned that if I have a file called blah.c, I can just compile it like this without creating a Makefile:

```
make blah
```

It gets automatically expanded to cc -o blah blah.c, which saves a bit of typing. I have no idea if I’m going to remember this (I might just keep typing gcc -o blah blah.c anyway) but it seems like a fun trick.

If you’re having trouble building a C program, maybe other people had problems building it too! Every Linux distribution has build files for every package that they build, so even if you can’t install packages from that distribution directly, maybe you can get tips from that Linux distro for how to build the package. Realizing this (thanks to my friend Dave) was a huge ah-ha moment for me.

For example, this line from the nix package for paperjam says:

```
env.NIX_LDFLAGS = lib.optionalString stdenv.hostPlatform.isDarwin "-liconv";
```

This is basically saying “pass the linker flag -liconv to build this on a Mac”, so that’s a clue we could use to build it.
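For context on the -liconv part: iconv is a POSIX API for converting text between character encodings, declared in iconv.h. With glibc on Linux the iconv functions are part of the C library itself, but on macOS they live in a separate libiconv library, which as far as I can tell is why the Mac build needs an explicit -liconv. (I haven’t checked which part of the paperjam build actually calls iconv.) Here’s a minimal sketch with a hypothetical utf8_to_latin1 helper, assuming the system’s iconv supports the "UTF-8" and "ISO-8859-1" encoding names:

```c
#include <assert.h>
#include <iconv.h>   /* declares iconv_open(), iconv(), iconv_close() */
#include <string.h>

/* Hypothetical helper: converts a UTF-8 string to ISO-8859-1 (Latin-1).
   Returns the number of bytes written to `out`, or -1 on failure.
   On macOS, link with -liconv; with glibc on Linux, iconv is in libc. */
long utf8_to_latin1(const char *in, char *out, size_t out_size) {
    assert(in != NULL && out != NULL);

    /* iconv_open takes the target encoding first, then the source. */
    iconv_t cd = iconv_open("ISO-8859-1", "UTF-8");
    if (cd == (iconv_t)-1) {
        return -1;
    }

    char *inbuf = (char *)in;  /* iconv doesn't modify the input bytes */
    size_t in_left = strlen(in);
    char *outbuf = out;
    size_t out_left = out_size;

    /* iconv advances both buffer pointers and decrements both counters. */
    size_t result = iconv(cd, &inbuf, &in_left, &outbuf, &out_left);
    iconv_close(cd);

    if (result == (size_t)-1) {
        return -1;
    }
    return (long)(out_size - out_left);
}
```

On a Mac you’d add -liconv when linking a program that uses this; on Linux with glibc the same code links without any extra -l flag.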

That same file also says env.NIX_CFLAGS_COMPILE = "-DPOINTERHOLDER_TRANSITION=1";. I’m not sure what this means, but when I try to build the paperjam package I do get an error about something called a PointerHolder, so I guess that’s somehow related to the “PointerHolder transition”.

Once you’ve managed to compile the program, probably you want to install it somewhere!

Some Makefiles have an install target that lets you install the tool on your system with make install. I’m always a bit scared of this (where is it going to put the files? what if I want to uninstall them later?), so if I’m compiling a pretty simple program I’ll often just manually copy the binary to install it instead, like this:

```
cp qf ~/bin
```

Once I figured out how to do all of this, I realized that I could use my new make knowledge to contribute a paperjam package to Homebrew! Then I could just brew install paperjam on future systems.

The good thing is that even though the details differ between all of the different packaging systems, they fundamentally all use C compilers and linkers.

I think all of this is an interesting example of how it can be useful to understand some basics of how C programs work (like “they have header files”) even if you’re never planning to write a nontrivial C program in your life.

It feels good to have some ability to compile C/C++ programs myself, even though I’m still not totally confident about all of the compiler and linker flags and I still plan to never learn anything about how autotools works other than “you run ./configure to generate the Makefile”.

Two things I left out of this post:

- LD_LIBRARY_PATH / DYLD_LIBRARY_PATH (which you use to tell the dynamic linker at runtime where to find dynamically linked files) because I can’t remember the last time I ran into an LD_LIBRARY_PATH issue and couldn’t find an example.
- pkg-config, which I think is important but I don’t understand yet