2026-01-03 08:00:00

It’s time for a yearly review again. Time flies.

I read a few books this year.

The whole Dungeon Crawler Carl series was absolutely amazing and it quickly jumped up to one of my favorite series of all time.

I can’t be held accountable for everything I’ve ever said to a stripper.

Board games are great.

I still don’t play nearly as much as I’d like to but my kids are quickly growing up and to my eternal joy they’re all interested in board games! Isidor (8) has been loving Radlands, Loke (5) likes Chronicles of Avel, and Freja (3) loves Dragon’s Breath. My personal favorite is Luthier and I think it might be my absolute favorite game right now.

Computer games have made a small comeback.

As my kids have been gaming more I’ve regained a little bit of interest in gaming too. I’ve been playing a little Core Keeper and Beyond All Reason with Isidor and it felt really fun.

Migrated my Neovim config to Fennel.

Eh, it was fun to play with a new language.

I wrote 12 blog posts, not great, not terrible.

I managed to push back my fantasy writing ambitions to the future.

It’s important as I tend to get stuck on things, which prevents me from making progress in other areas. I really don’t have the time or energy to try to write a fantasy book right now, but making my brain see that wasn’t easy.

2025 was the first full year running my company.

I’m still consulting for the same company I started out with and I enjoy it. It feels good to say that I haven’t blown it completely just yet.

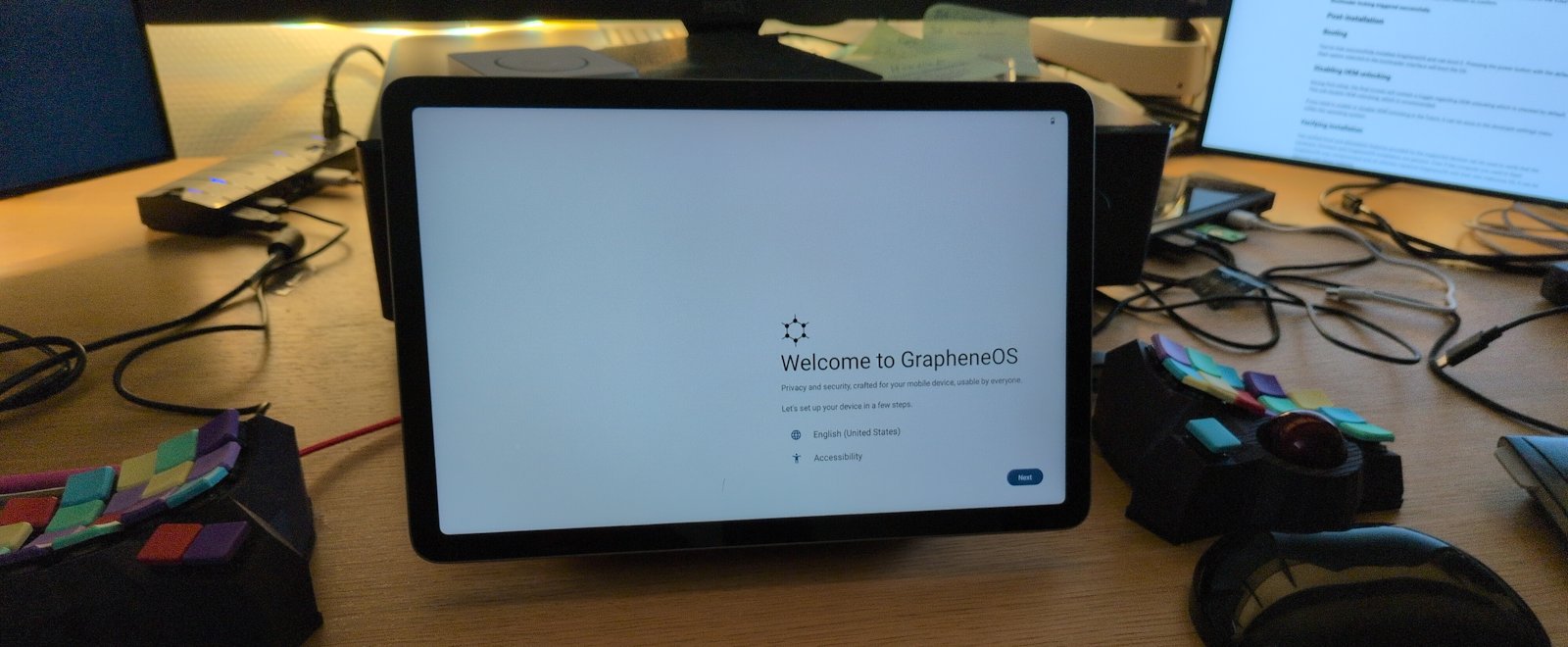

I took a big step forward in de-Googling and increasing my personal security by moving to GrapheneOS. I’m super happy about it.

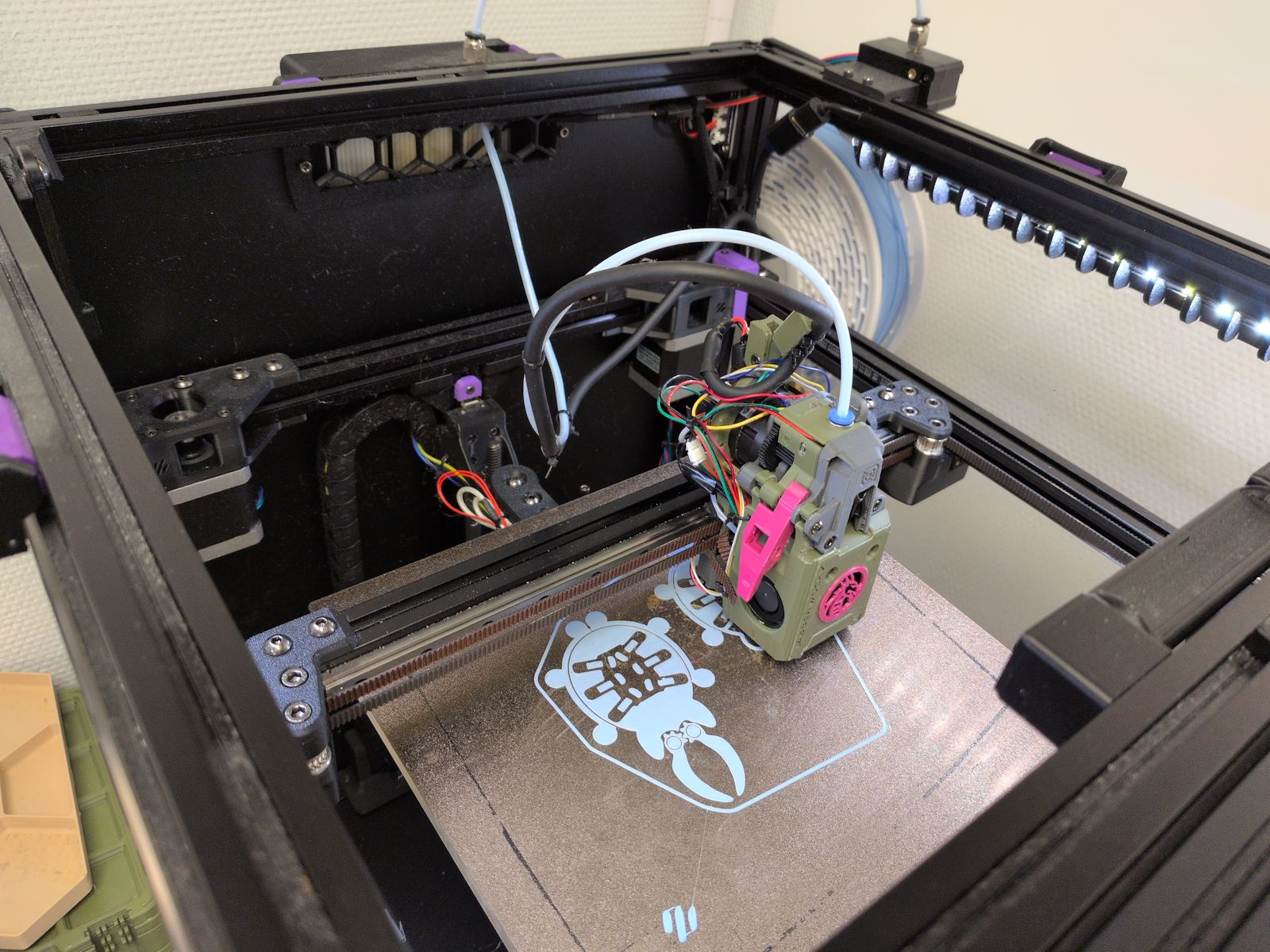

Built my second 3D-printer, a VORON 0.

It’s good! It’s fun! And I’m already planning my next printer…

We migrated away from Sonos in our kitchen.

We got tired of buggy vendor lock-in and now we use my own Music Assistant-based setup. If things break we can now blame me instead of Sonos.

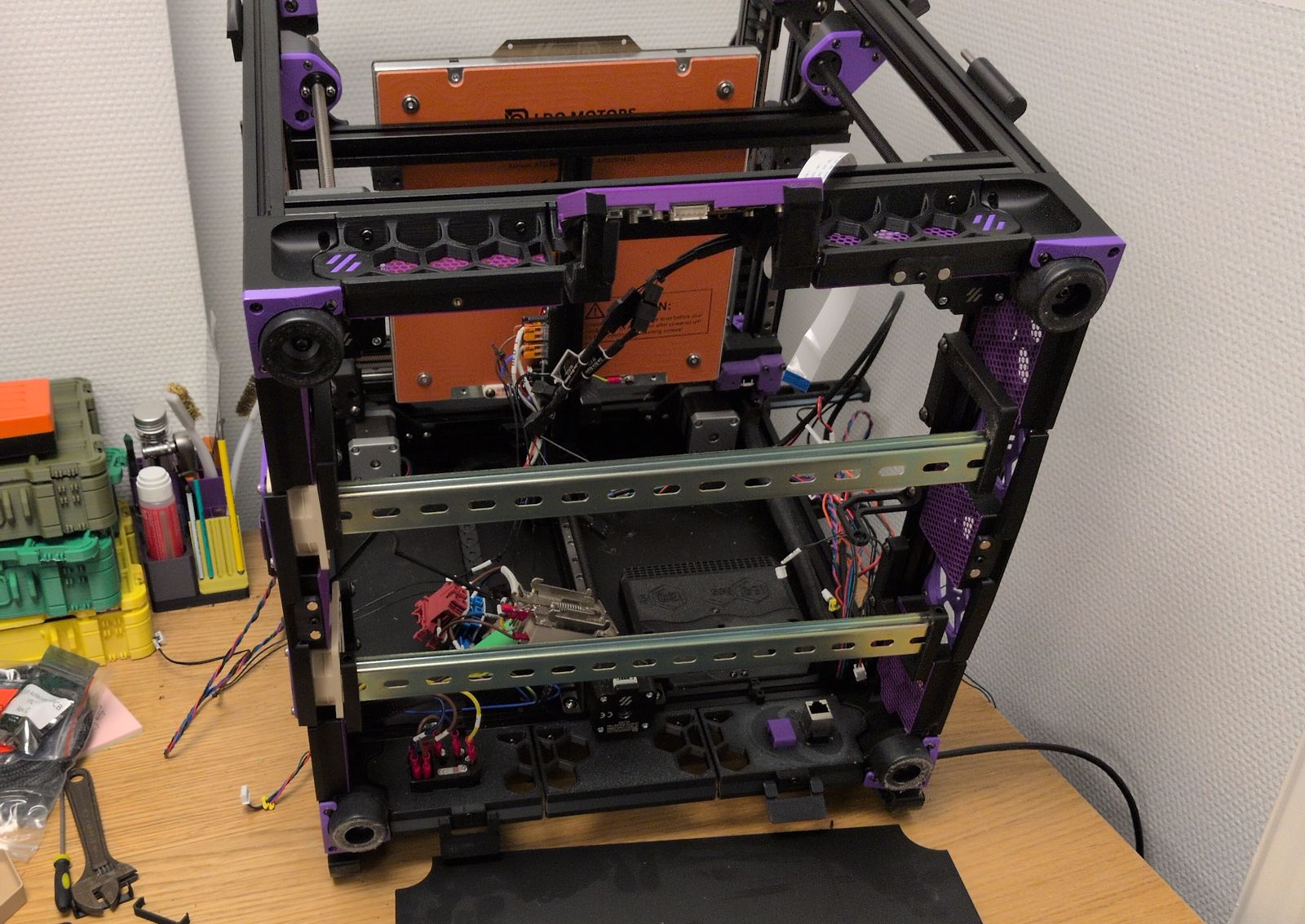

I built my first server rack to host a new homelab server.

I still need to clean up the cables and write a blog post about it.

Go on some roller coasters with the family.

We’re planning to go to Liseberg in Göteborg and I know the kids will love it.

Lose weight and build muscle.

I’ve been having a rough year training- and health-wise. Not being able to get a consistent strength training routine, inconsistent sleep, and bouts of depression have made me gain too much weight again.

Getting it sorted should (must) be a high priority this year.

Get our own training space for our martial arts club.

I’ve been wanting to get our own place for quite a while and I feel that we need to make it happen sooner rather than later.

Develop a company product MVP.

While I enjoy the consulting work I do at the moment, my goal is to one day have a project of my own that can earn me money. I have a couple of ideas I want to pursue. I was going to do it in 2025 but I didn’t get far enough for that.

Migrate my homelab to the recently built server and expand on it.

I have this newly built server that’s just sitting idle. I need to migrate my old services to it and I’ve got ideas for lots of stuff I want to put there.

2025-12-02 08:00:00

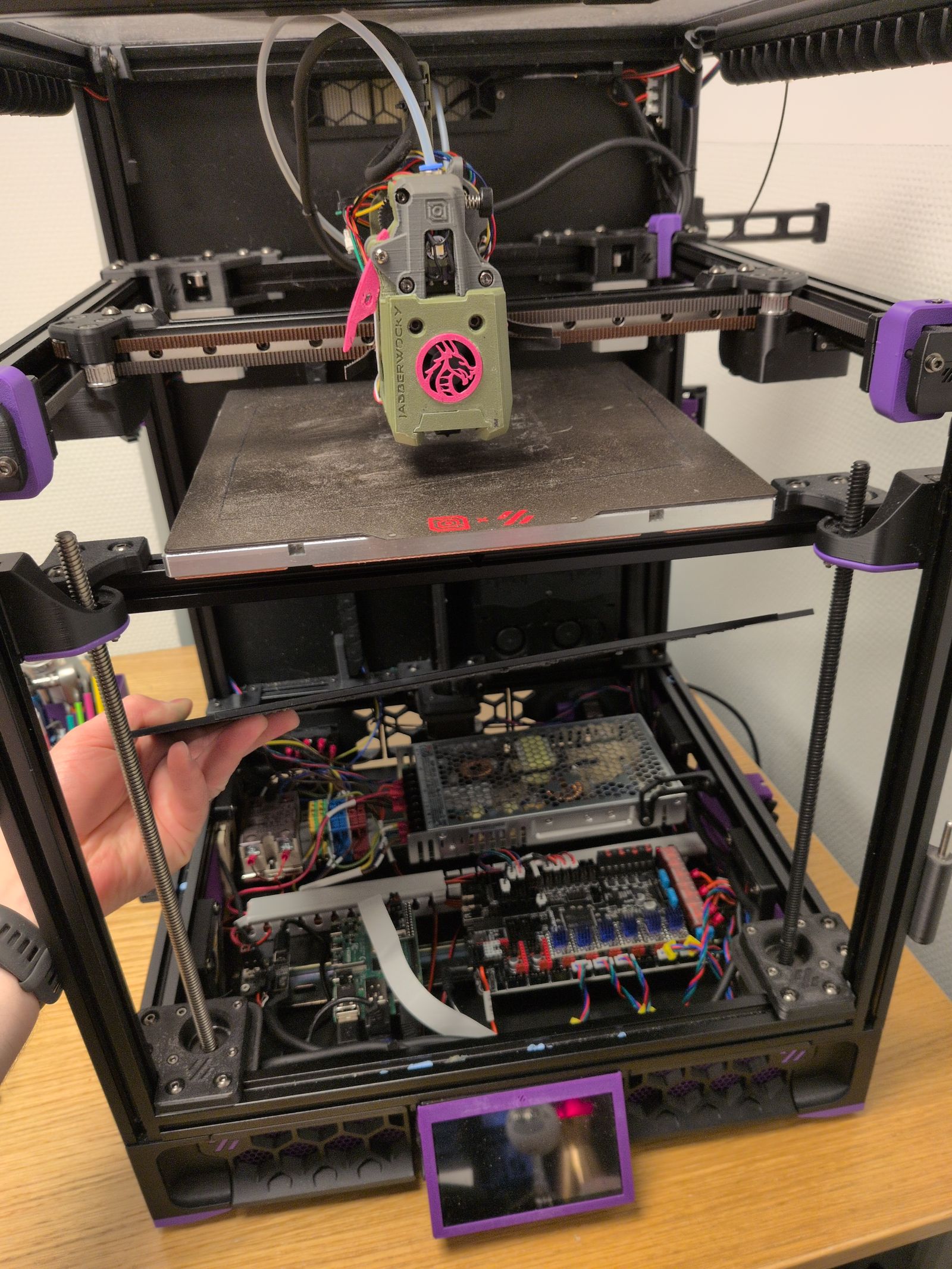

I’ve had my VORON Trident for 2 years and I’ve run it for 2600 hours. Overall I’m happy with the printer but I’ve been itching to make some more mods to it. Having finally finished the VORON 0 (with mods) I now have a backup printer I can use to rescue myself when I screw up.

The printer was starting to crap out: a leadscrew was grinding down again, the chamber thermistor had stopped working, and PLA kept clogging up the Rapido hotend, so it was time for a bit of a rebuild.

Besides fixing the printer I also wanted to prepare for a multi-color solution such as the Box Turtle and make some quality of life changes.

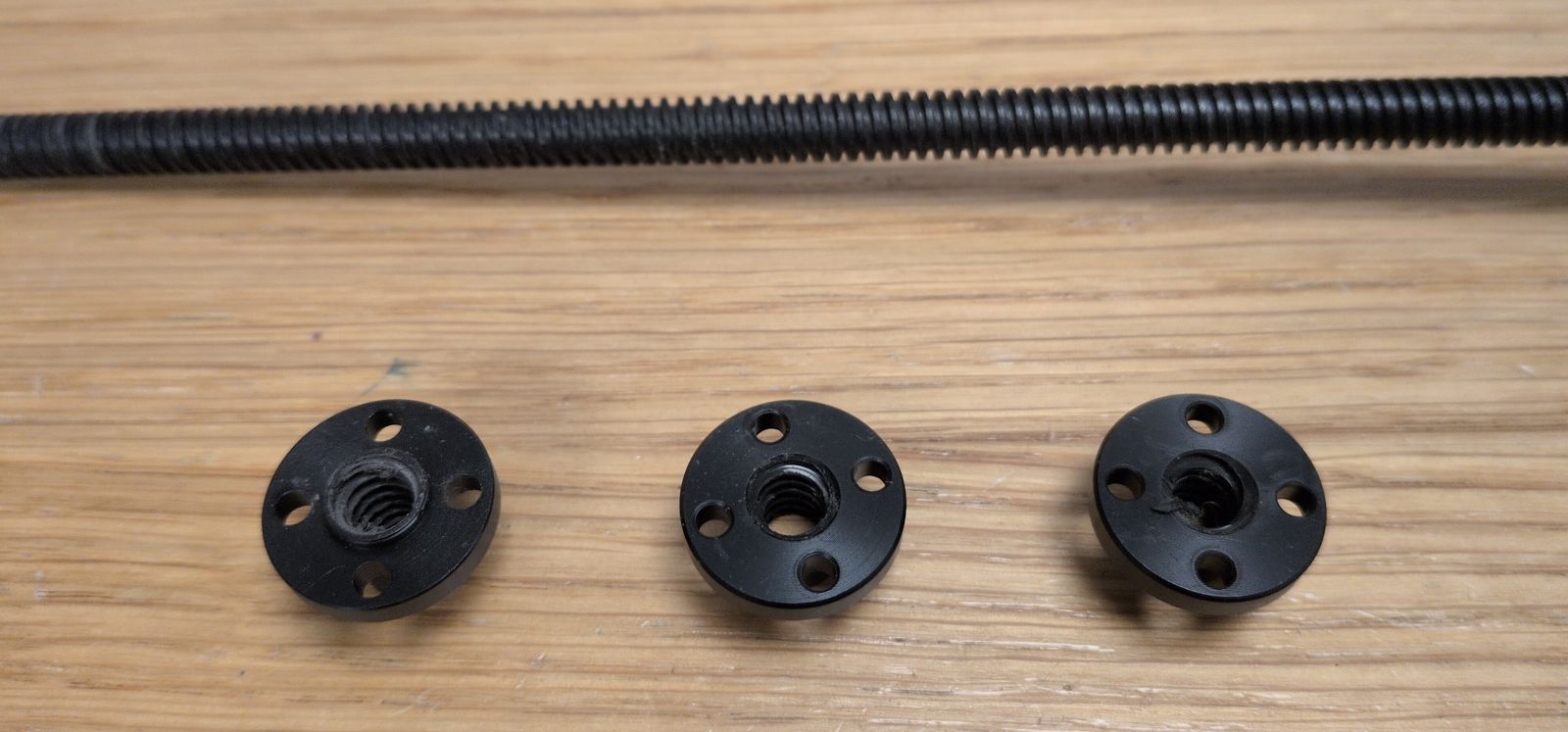

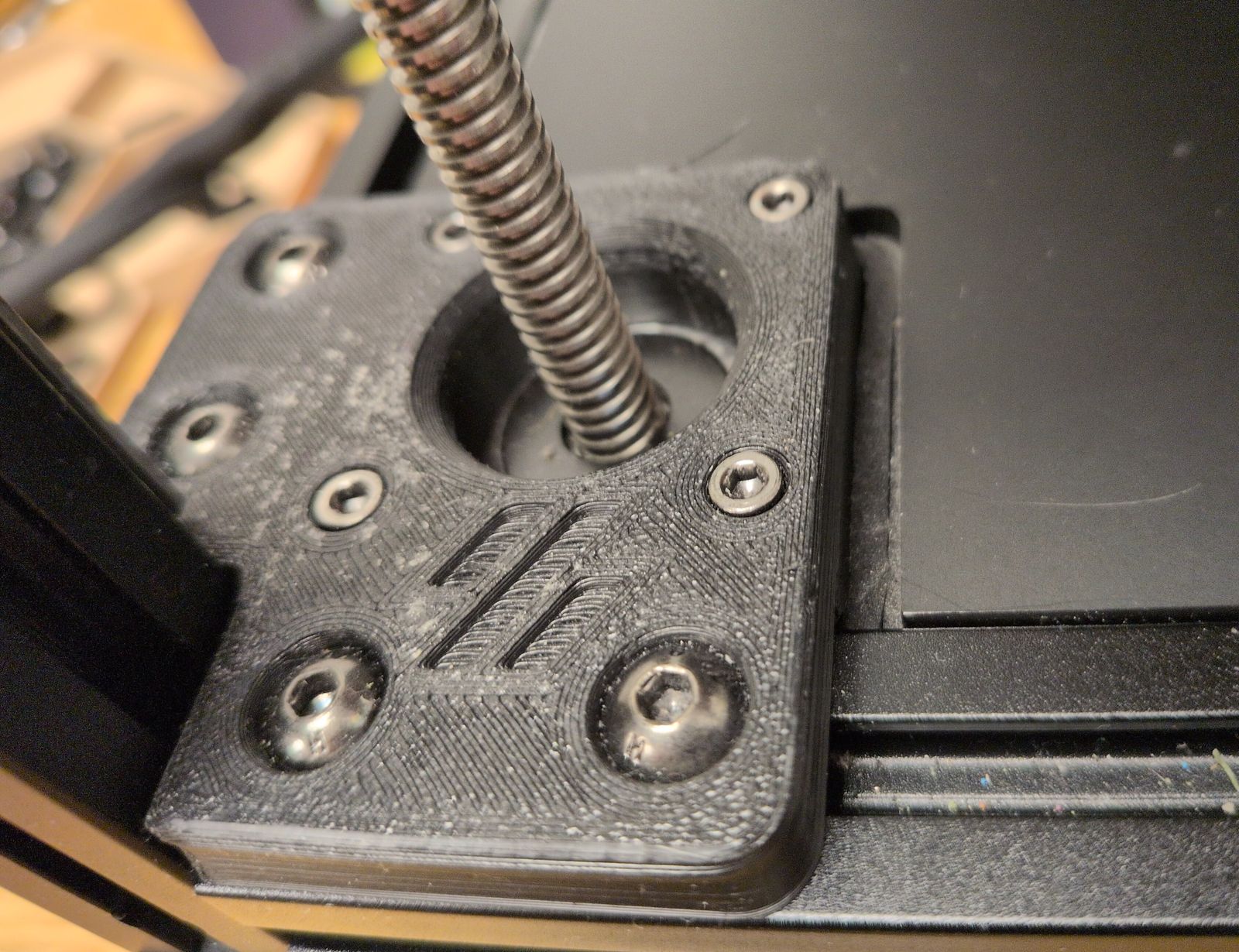

Replace the problematic leadscrew with a replacement part I received from LDO and replace the POM nuts on the other leadscrews.

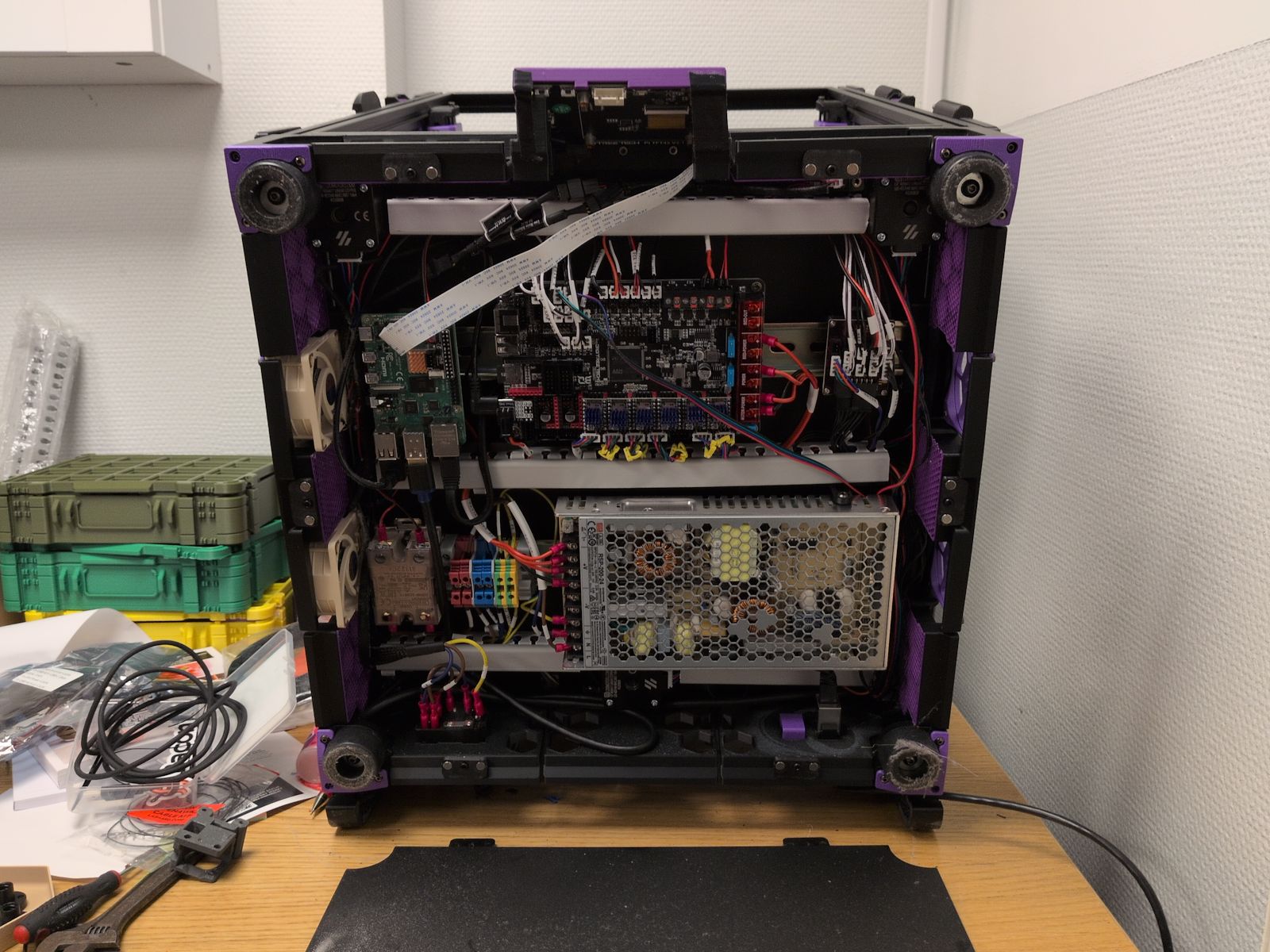

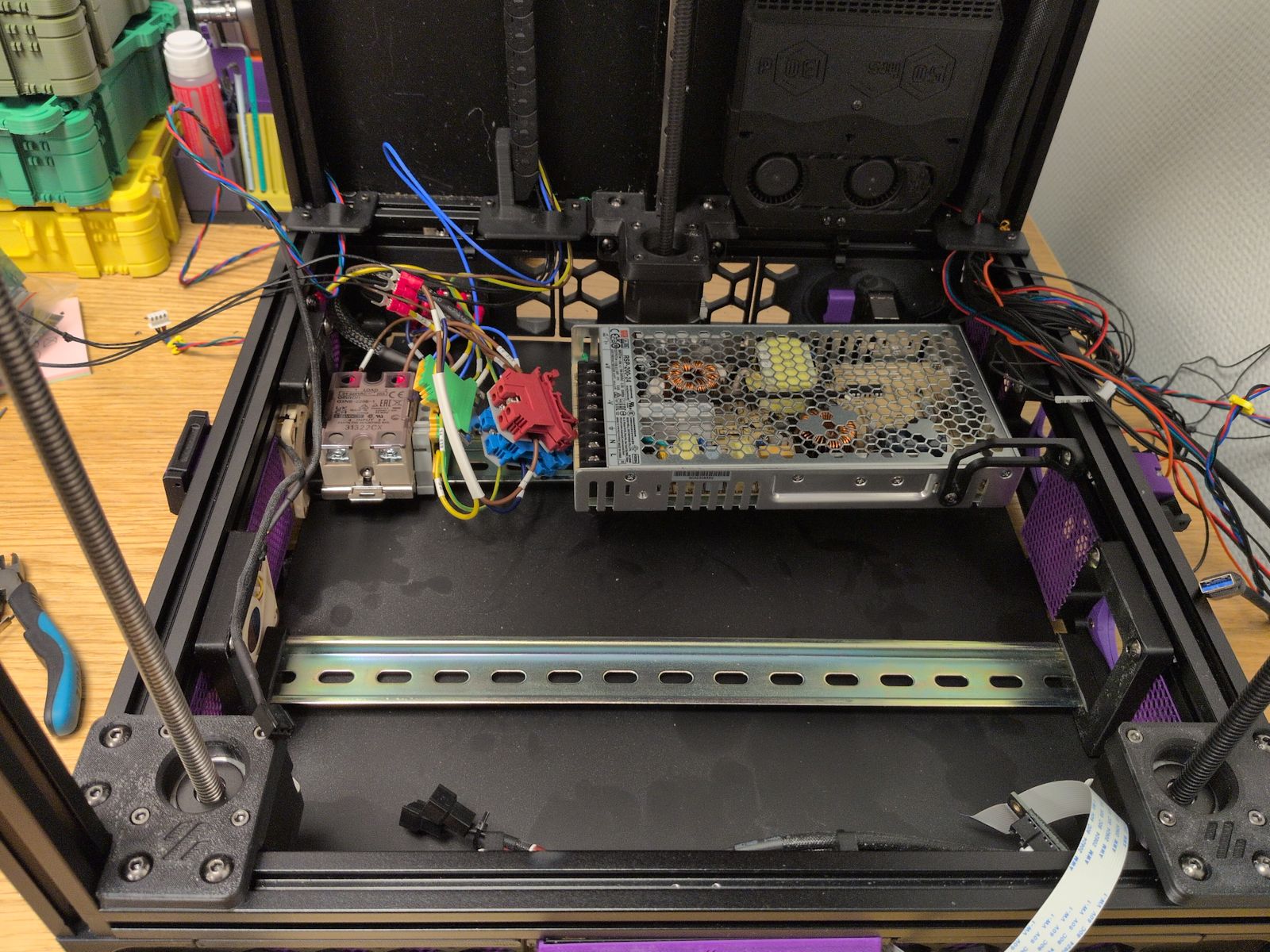

Install the Inverted electronics mod.

I’ve been using the RockNRoll mod to give access to the electronics compartment by tilting the printer backwards. The Inverted electronics mod would instead allow me to lift the bottom plate to access the electronics compartment and I want to do it before installing a Box Turtle on top of the printer.

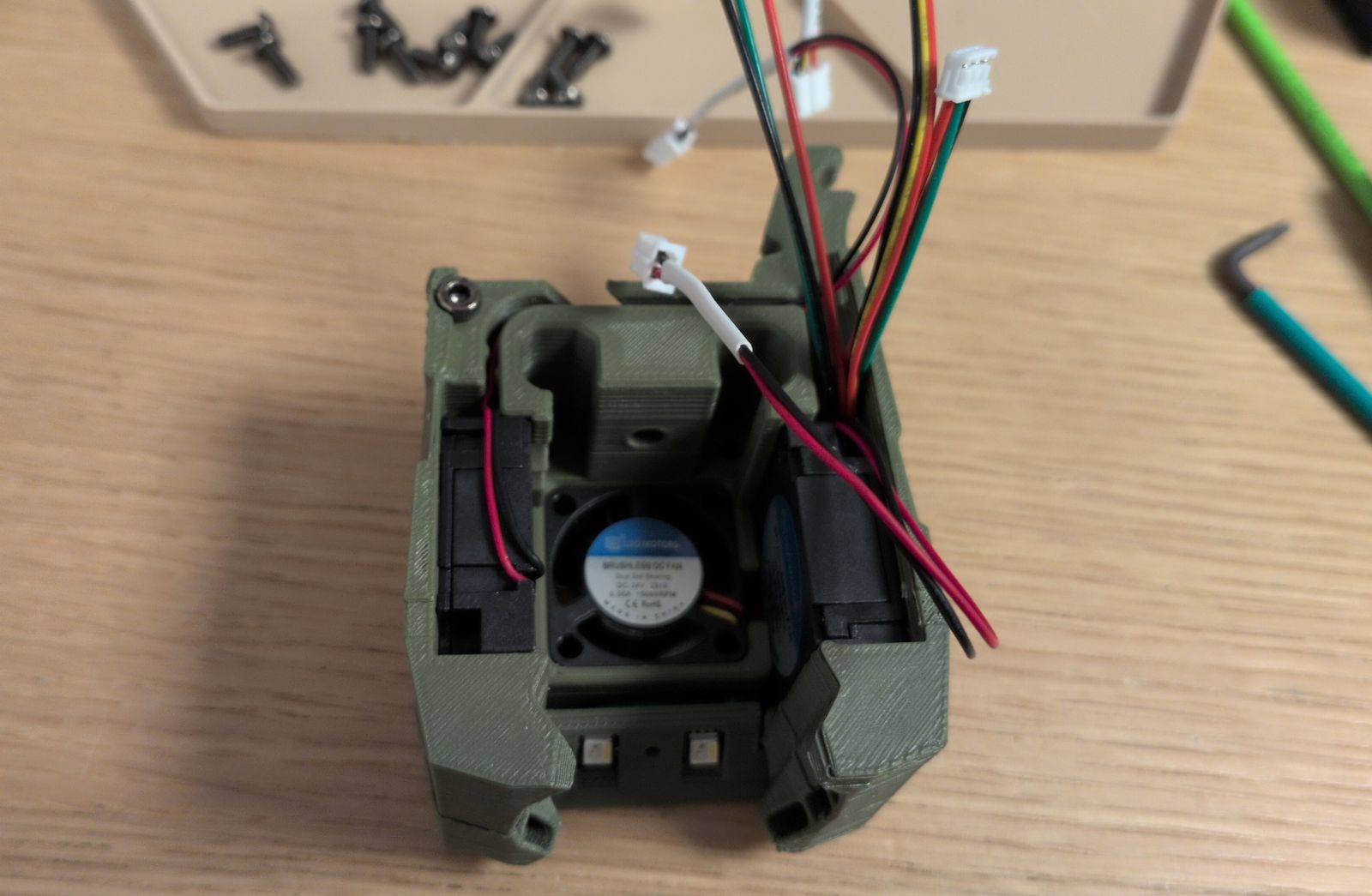

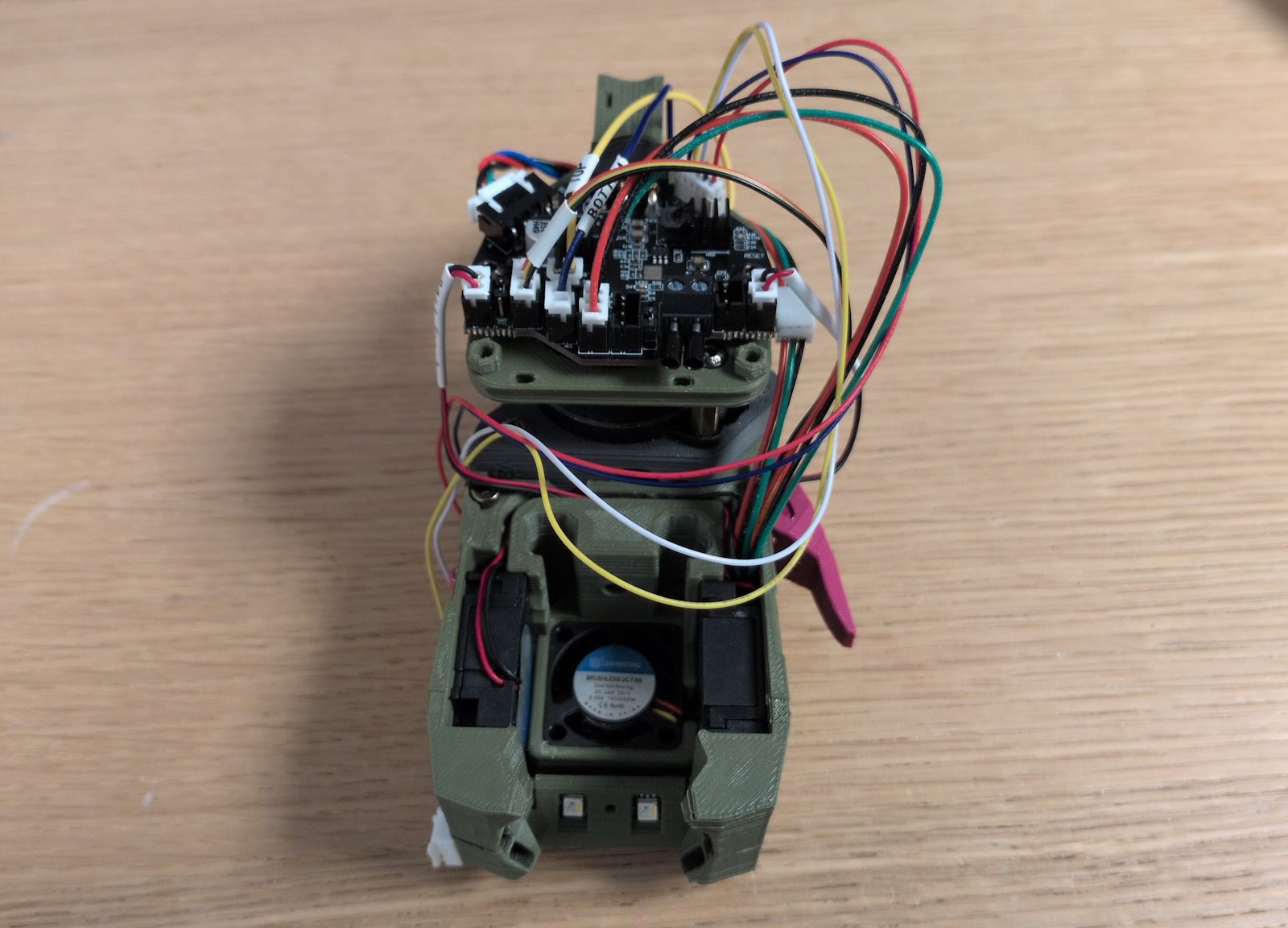

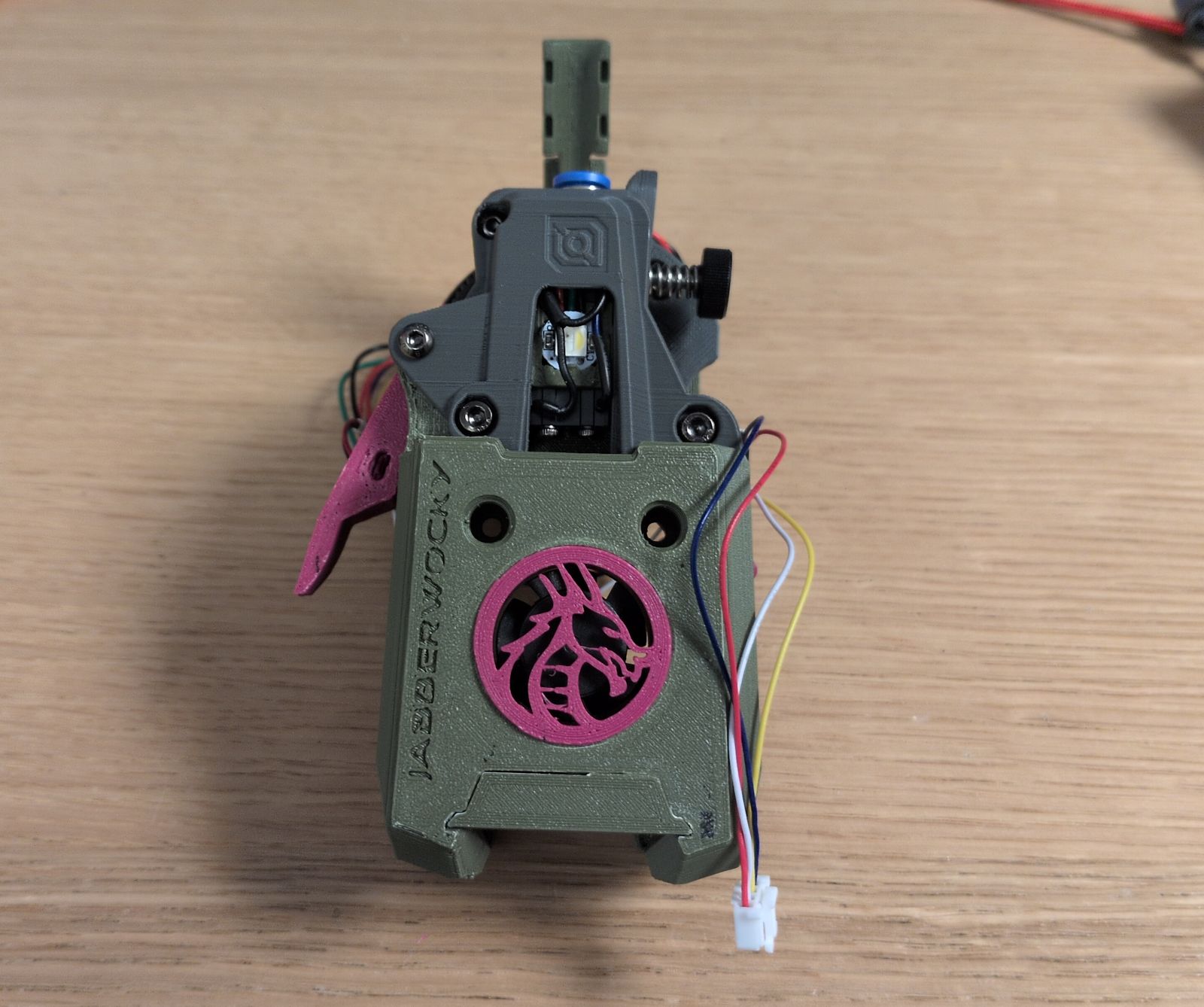

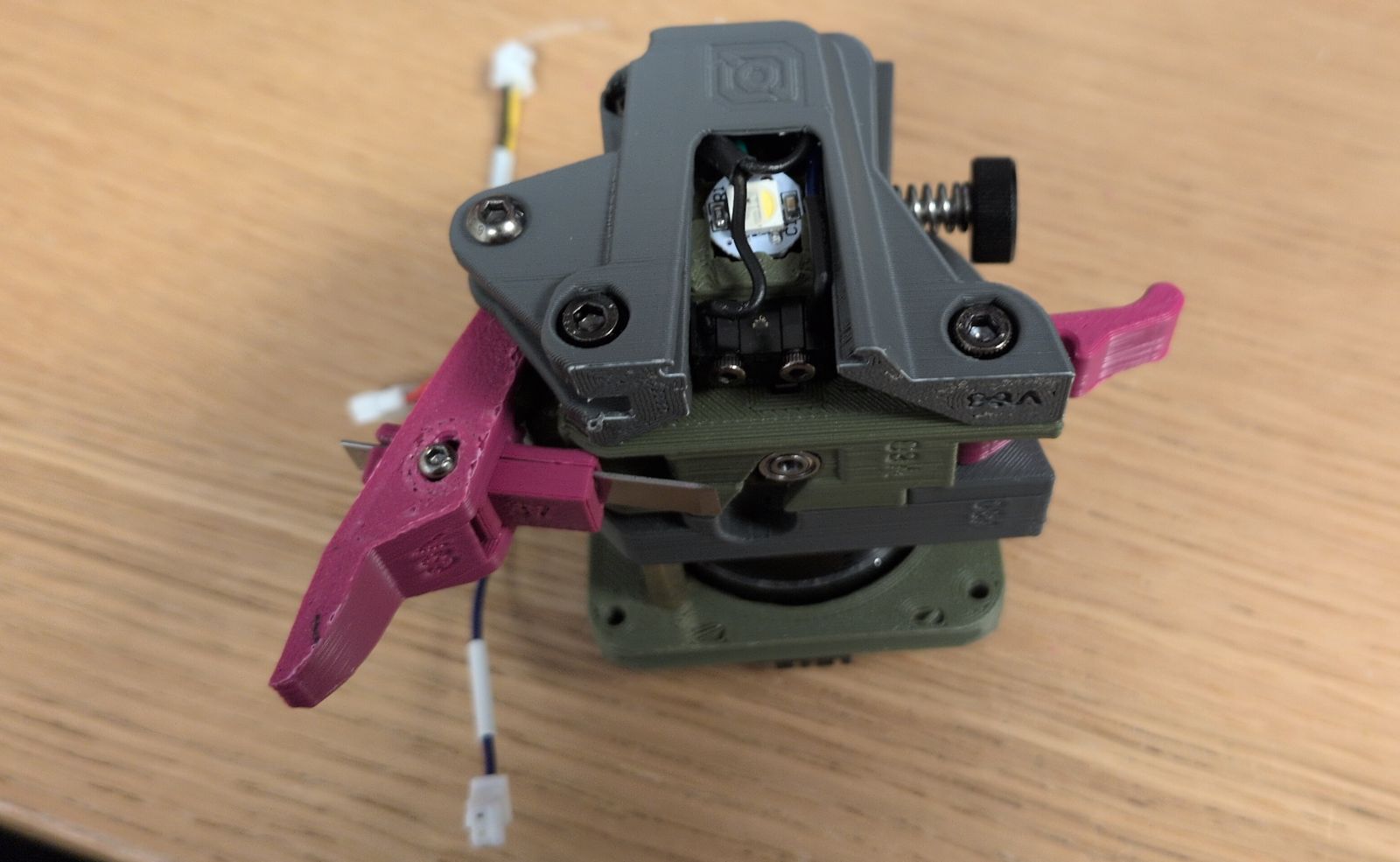

Replace the Stealthburner with the Jabberwocky toolhead.

This introduced a series of changes:

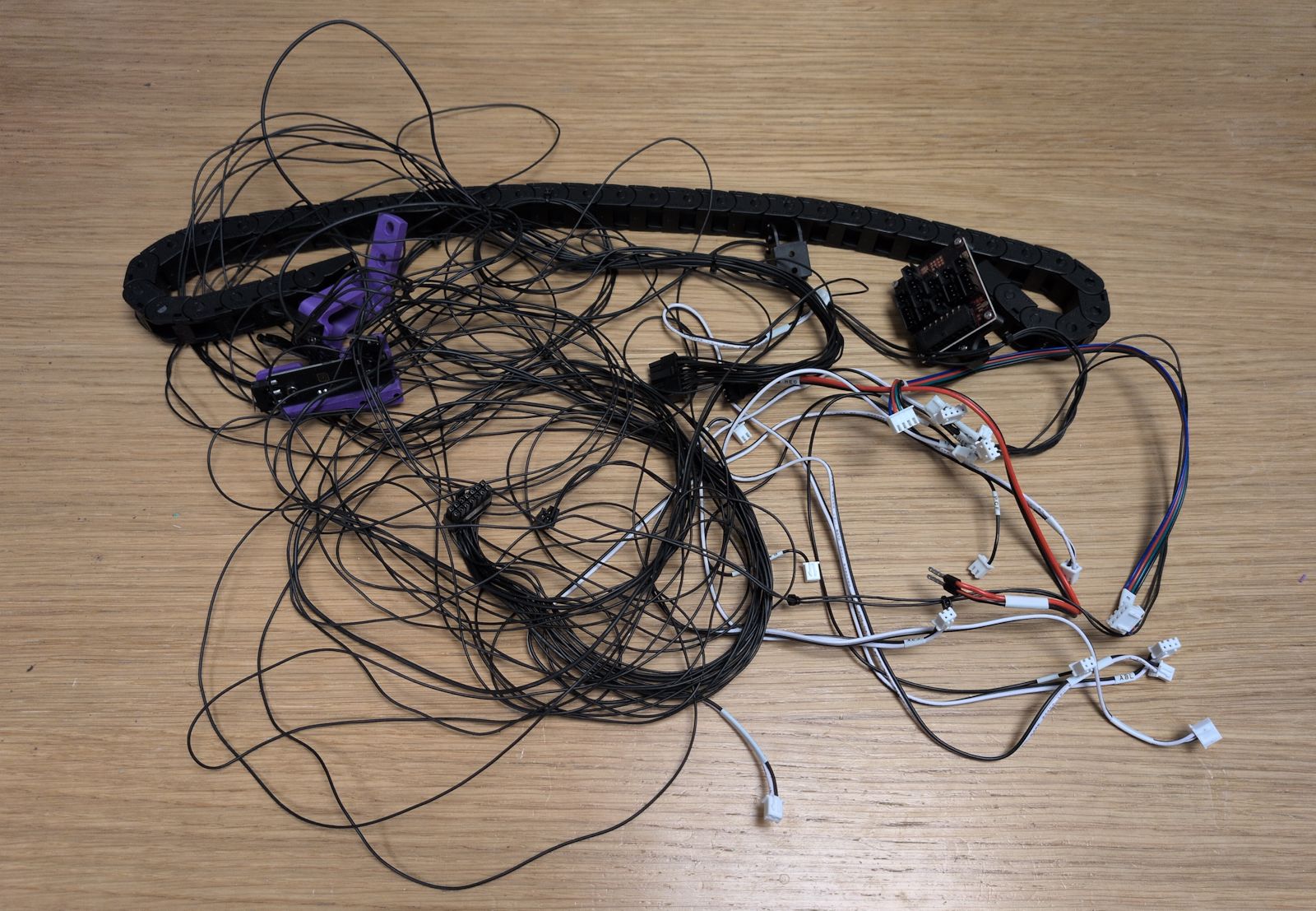

Move to an umbilical setup with the Nitehawk36 toolboard.

Use sensorless homing to get rid of the Y drag chain.

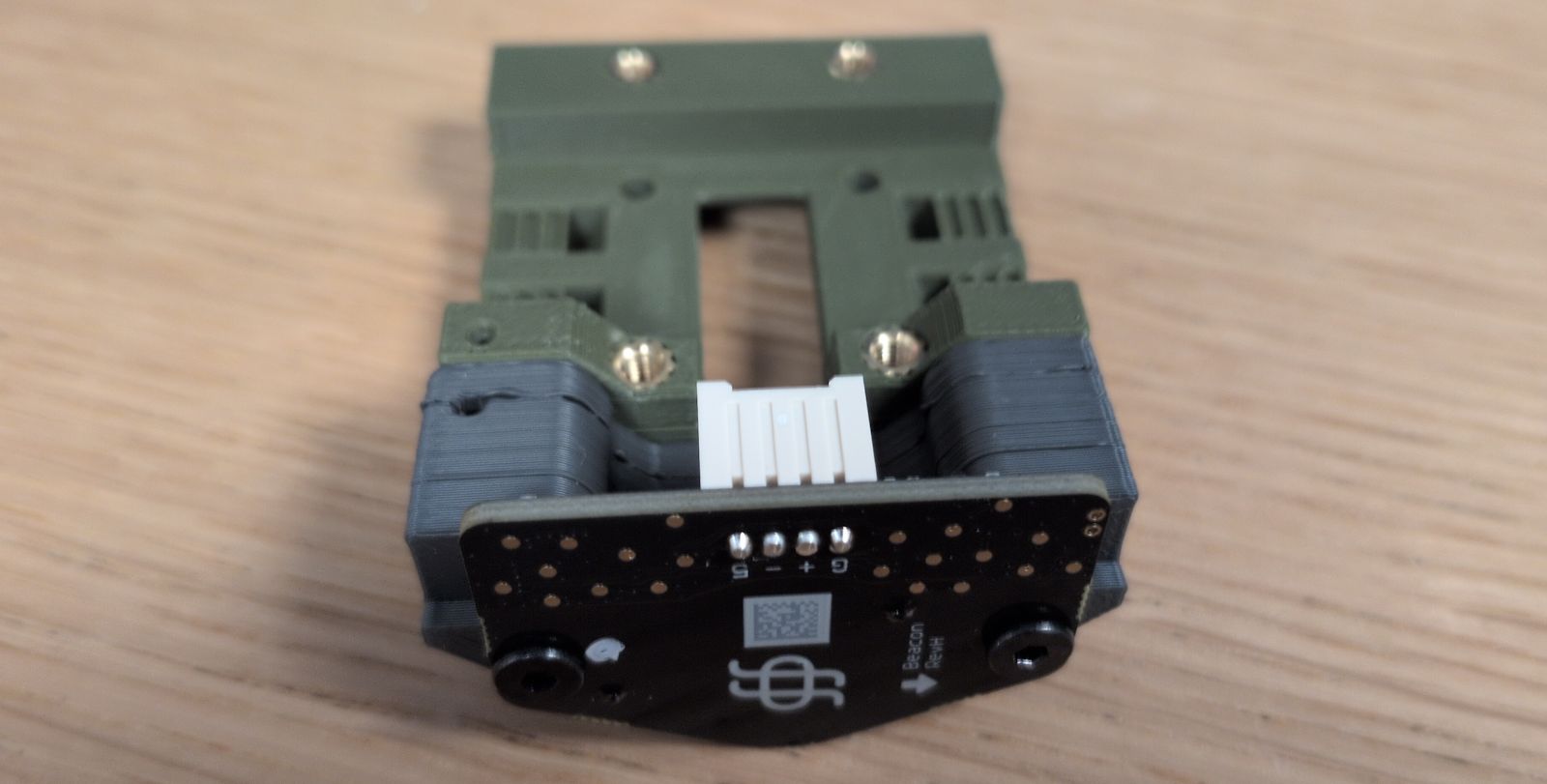

Replace TAP with the Beacon probe.

Finally, install the Jabberwocky.

I’ve had issues before where one of the POM nuts was ground down and I felt it was happening again. The printer didn’t completely fail like before but it was sometimes getting really bad first layers in that same corner and the Z probe was occasionally failing to configure Z tilt.

I replaced all three POM nuts together with the whole lead screw (I got a new one sent to me by LDO the first time it failed but I hadn’t installed it yet).

This is apparently a common problem with some LDO kits that have coated lead screws. I still have two of the old ones that I may have to replace in the future.

I’d been looking at the Inverted electronics mod since before I finished my Trident printer. But it wasn’t possible with the Print It Forward service I used to print parts for my first 3D printer, and after the printer was completed I didn’t feel the need to redo the wiring again.

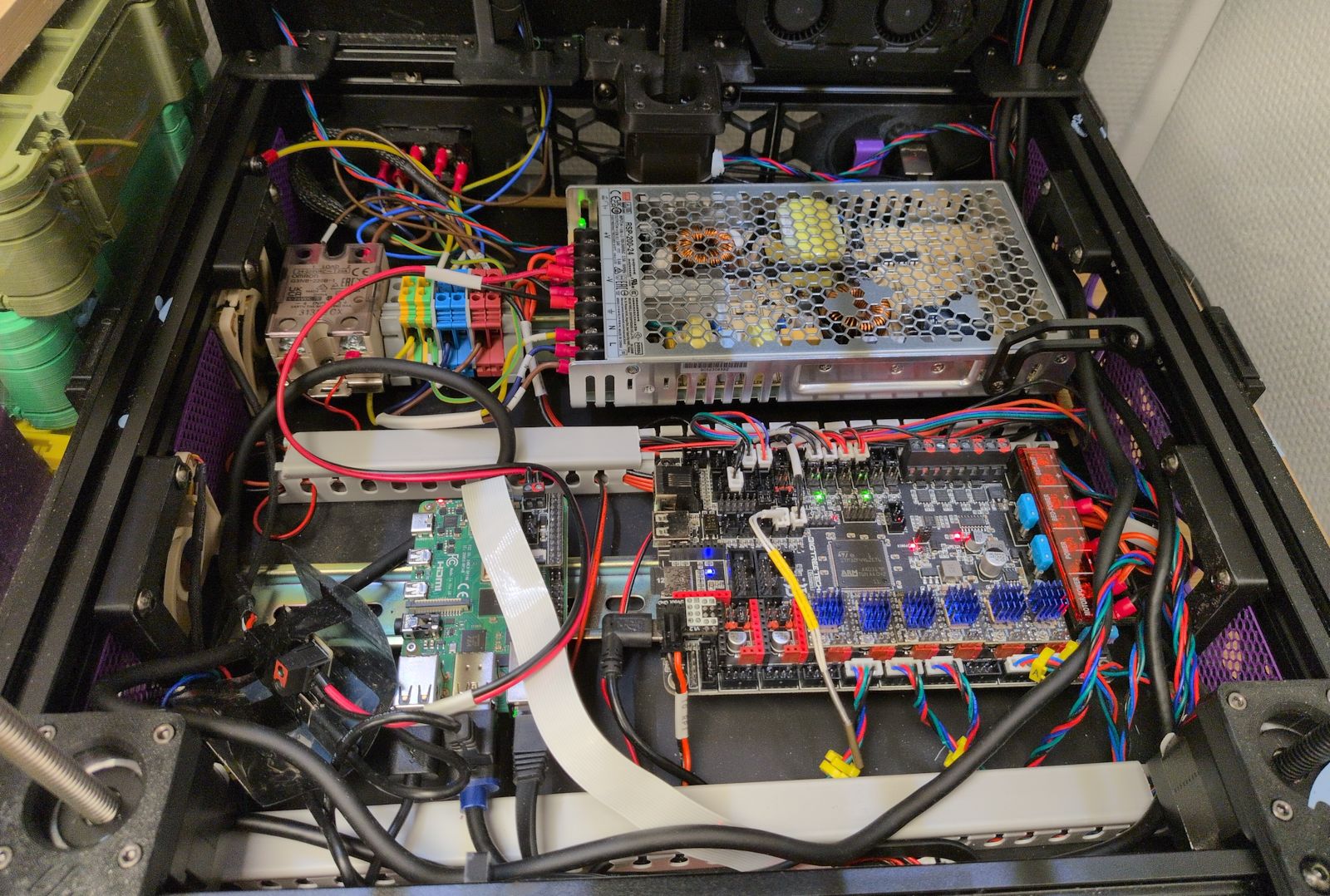

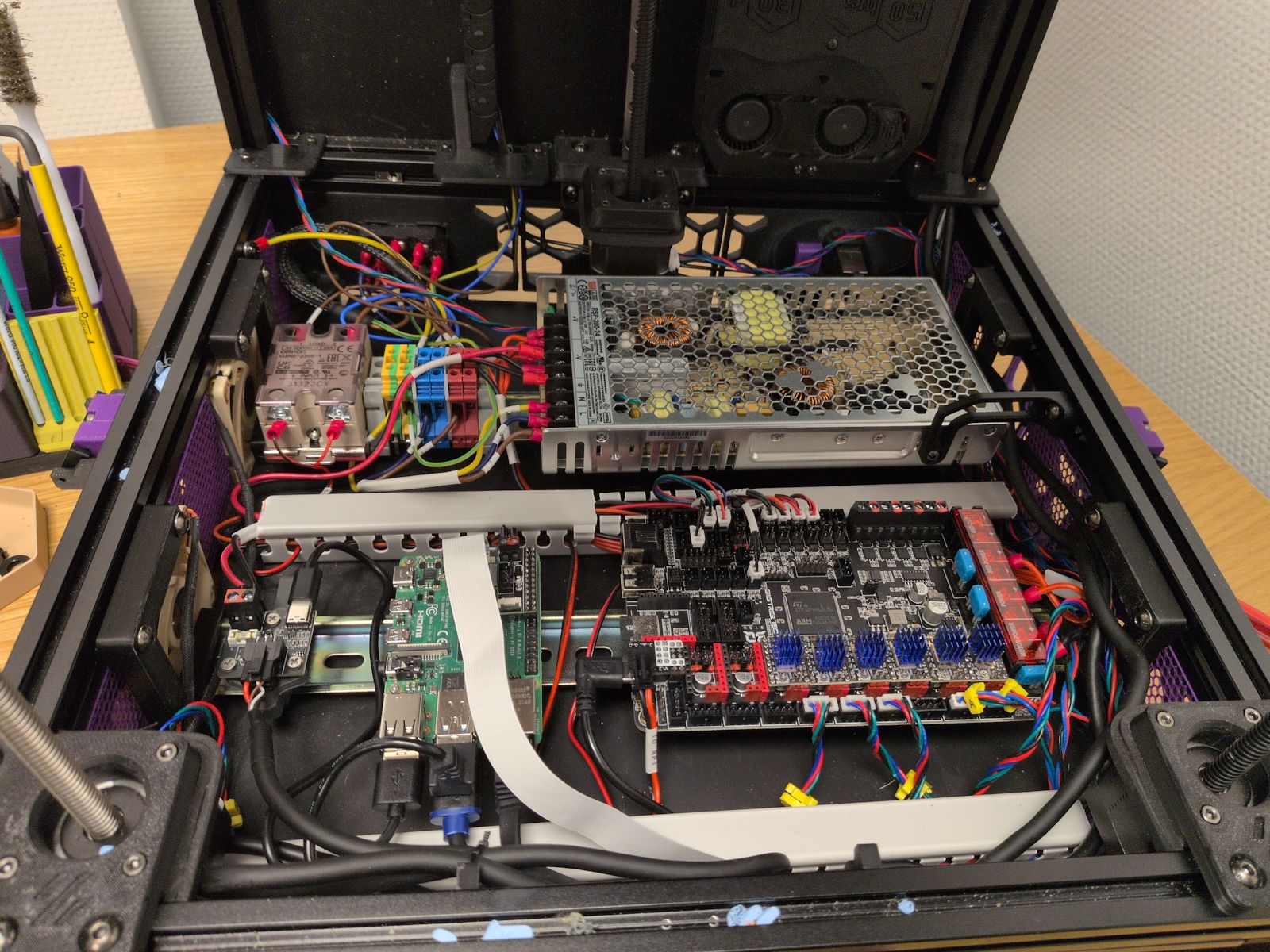

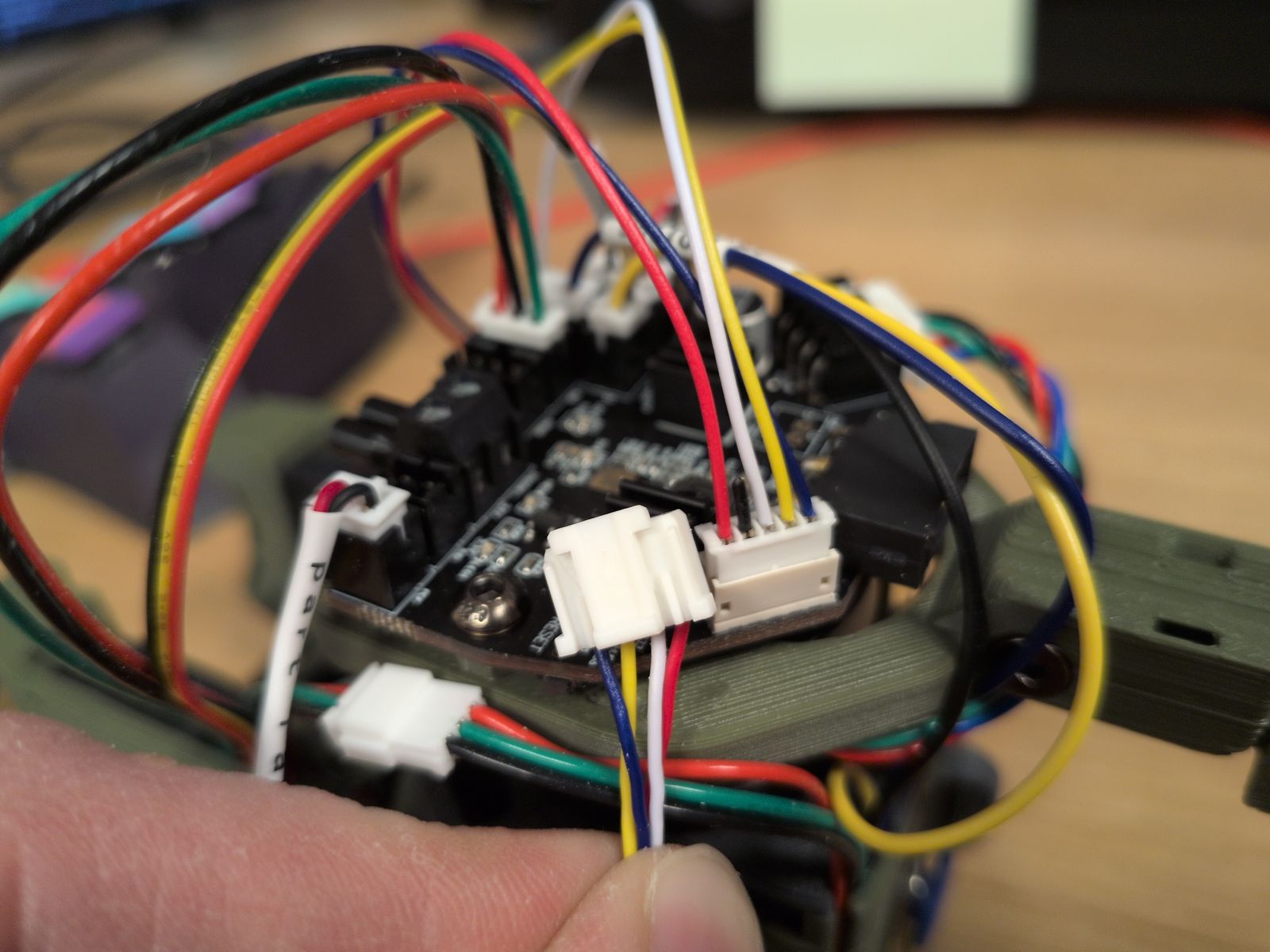

Overall it was surprisingly easy to reinstall all the electronics. It was made easier by the move to umbilical and a single USB cable to the toolhead, as it removed quite a bit of wiring.

One issue I had with the mod is that the cutouts for the Z motors were a bit large, with gaps where stray filament or heat can escape through. I tried to cover them up by placing some foam tape around the motors.

I’ve been wanting to replace the Stealthburner toolhead for a long time.

But what to choose? There are quite a few interesting toolheads I considered:

I use the Dragon Burner in my VORON 0 and using the same toolhead is boring.

A pretty fun toolhead and I was considering the Mjölnir version. It does require you to flip your XY joints to hang upside down and I couldn’t find a filament sensor or filament cutter for it, so I ended up skipping it.

XOL seems like a very well regarded and mature option with tons of support. It boasts much better cooling for PLA, which is one of the main reasons I want to migrate away from the Stealthburner.

A4T seems similar to XOL, while having even better cooling and a slightly simpler assembly. It would also make use of the Dragon hotend I’ve got lying here, gathering dust.

An all-in-one toolhead solution with filament sensors and a filament cutter that seems to have some quality of life features I think I’d really enjoy:

Flip up Extruder. Probably an industry first, a tool-less easy to access toolhead design so that one can access the blade or the filament path for servicing and troubleshooting. This allows a user, in the event of hopefully a rare problem during a filament changing print the ability to access the filament path to clear it of issues and continue with a print job.

The A4T toolhead is interesting but the (supposedly) easier maintenance and multi-color consistency of the Jabberwocky really appealed to me.

I struggled a bit to get the filament to load/unload consistently by hand. I rebuilt the toolhead but in the end I believe I just didn’t have enough grip on the filament to guide it past the gears down into the bottom hole.

Most of the wiring came as-is except for the cable between the Beacon and the Nitehawk36. I got the Nitehawk36 side of the cable pre-made in the Nitehawk36 kit but I had to pin the Beacon side myself.

The colors of the wires in the cable were all over the place but there’s a description on the PCB of both the Nitehawk36 and Beacon so I just had to take care to match them. I also referenced the Nitehawk36 documentation and the Beacon documentation.

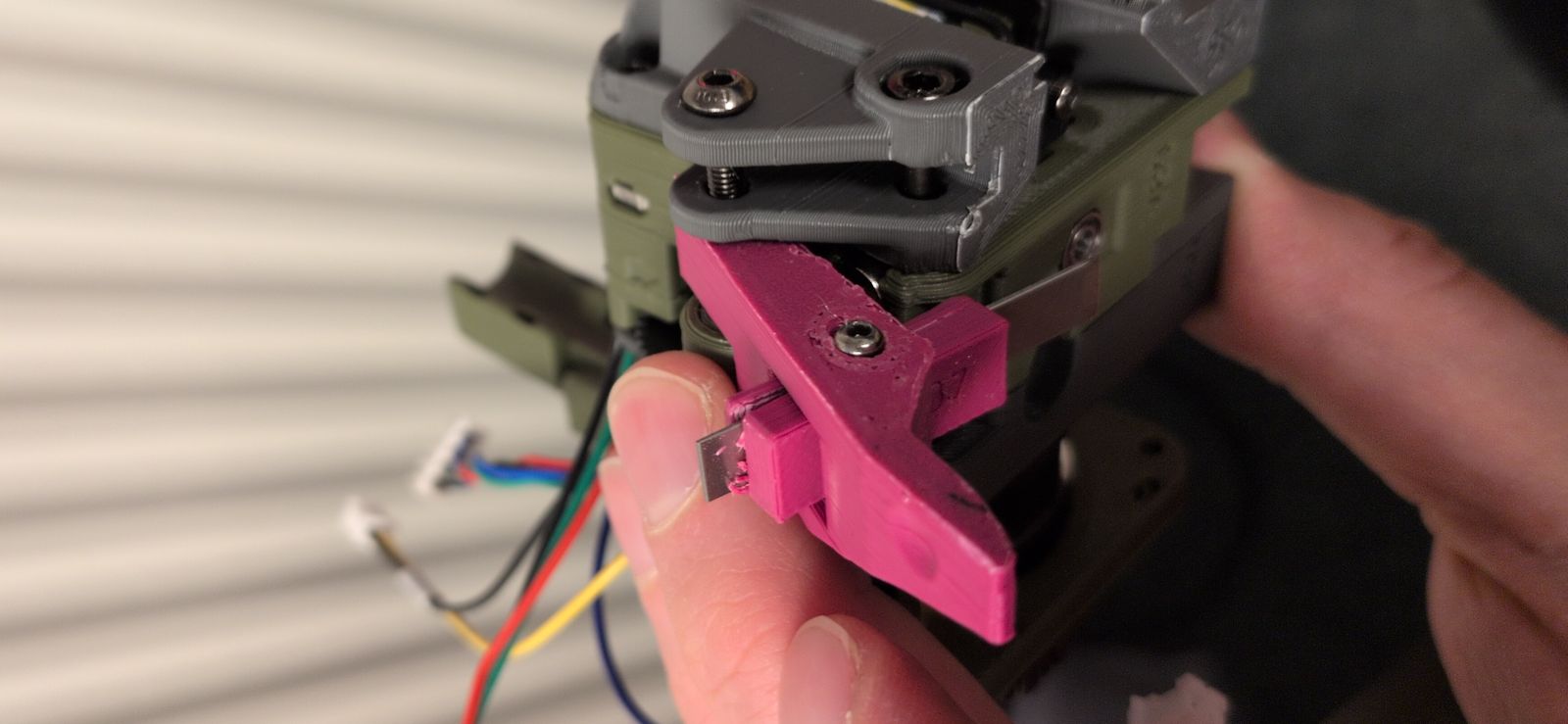

I had real difficulties installing the blade into the blade holder. There was some filament in the hole (likely due to poor print tuning) and I managed to break the holder when I tried to install the blade.

As I didn’t have a working printer when it broke I had to make it work without the filament cutter initially. Luckily I didn’t break anything crucial…

I had to make some software changes but luckily they were quite straightforward:

Use sensorless homing.

I just followed the VORON documentation.

Set up the Nitehawk36 toolboard.

LDO has setup instructions and the Jabberwocky GitHub contains Klipper settings.

Set up Beacon for Z offset and mesh calibration.

Their quickstart documentation was fast and easy. I did not set up Beacon Contact; maybe I’ll get to it one day.

After months of not having a working 3D printer I’ve gotten renewed energy to play around with the printer again. I’ve got some loose plans for some mods to make on this printer:

… Or maybe something else? Who knows!

2025-10-29 08:00:00

… anyone can do any amount of work, provided it isn’t the work he is supposed to be doing at that moment.

My partner Veronica is amazing as she’ll listen to my bullshit and random whims (or at least, pretend to). A big benefit of having a blog is that I have an outlet for rambling about my weird projects and random fixations and can spare Veronica’s sanity a little.

I know that Veronica won’t be impressed by another Neovim config rewrite (even when done in Lisp!) so I’ll simply write a big blog post about it.

I wanted to rewrite my Neovim configuration in Fennel (a Lisp that compiles to Lua) and while doing so I wanted to migrate from lazy.nvim to Neovim’s new built-in package manager vim.pack.

This included bootstrapping Fennel compilation for Neovim; replicating missing features from lazy.nvim such as running build scripts and lazy loading; modernizing my LSP and treesitter config; and trying out some new interesting plugins.

Please see my new Neovim config here.

Lua has been a fantastic boon to Neovim and it’s a significant improvement over Vimscript, yet I can’t help but raise an eyebrow when I hear people describe Lua as a great language. It’s definitely great at being a simple and fast embeddable language but the language itself leaves me wanting more.

Fennel doesn’t solve all issues as some of Lua’s quirks bleed through but it should make it a little bit nicer. I particularly like the destructuring; more functional programming constructs; macros for convenient DSLs; and the amazing pipe operator.

But the biggest reason is that I’m simply a bit bored and trying out new programming languages is fun.

I don’t rewrite my config often. But when I do, I do it properly.

Folke, maker of many popular plugins such as lazy.nvim and snacks.nvim, recently had a ~5 month break from working on his plugins. Of course, they continued to work and anyone working on open source projects can (and should) take a break whenever they want.

But it exemplifies that core Neovim features will likely be better maintained than standalone plugins and should probably be preferred (if they provide the features you need).

While Neovim’s built-in plugin manager is a work in progress and still a bit too simplistic for my needs I wanted to try it out.

If you’ve got a small configuration having it all inside a single init.lua is probably fine.

Somehow I’ve gathered almost 6k lines of Lua code under ~/.config/nvim so shoving it all in one file isn’t that appealing.

I first wanted to separate the configuration into a core/plugin split, where non-plugin configuration happens in core and plugin configuration lives under plugin.

However, to support lazy loading with a single call to vim.pack.add I decided to go back to letting the files under plugin/ return plugin specs, like lazy.nvim does for you.

With Fennel support under fnl/ this is how my configuration is structured:

init.lua ; Minimal bootstrap to load Fennel files

fnl ; All Fennel source in the `fnl/` folder

├── config

│ ├── init.fnl ; Loaded by `init.lua` and loads the rest

│ ├── colorscheme.fnl

│ ├── keymaps.fnl

│ ├── lsp.fnl ; Config may reference plugins

│ └── ...

├── macros.fnl ; Custom Fennel macros go here

└── plugins

  ├── init.fnl ; Loads everything under `plugins/`

  ├── appearance.fnl

  ├── coding.fnl

  └── ...

lua ; Lua stuff is still loaded transparently

ftplugin

└── djot.lua ; nvim-thyme doesn't load `ftplugin/`

It’s not a perfect system as I’d ideally want plugins/ to only add packages while I would configure the plugins in config/.

But some plugins use lazy loading, making it more convenient to configure them together with the plugin spec.

There are a handful of different plugins that allow you to easily write your Neovim config in Fennel.

I ended up choosing nvim-thyme because it’s fast (it hooks into require and only compiles on-demand) and it allows you to mix Fennel and Lua source files.

nvim-thyme contains installation instructions for lazy.nvim and it references a bootstrapping function to run git to manually clone packages.

But we’re going to use vim.pack and it makes the bootstrap a bit cleaner:

vim.pack.add({

-- Fennel environment and compiler.

"https://github.com/aileot/nvim-thyme",

"https://git.sr.ht/~technomancy/fennel",

-- Gives us some pleasant fennel macros.

"https://github.com/aileot/nvim-laurel",

-- Enables lazy loading of plugins.

"https://github.com/BirdeeHub/lze",

}, { confirm = false })

(I added lze to my bootstrapping too as I’ll use it later when adding lazy loading support; it was simpler having it in the bootstrap.)

nvim-thyme also instructs us to override require() calls (so it can compile on demand) and to set up the cache path (where it’ll store the compiled Lua files):

-- Override package loading so thyme can hook into `require` calls

-- and generate lua code if the required package is a fennel file.

table.insert(package.loaders, function(...)

return require("thyme").loader(...)

end)

-- Setup the compile cache path for thyme.

local thyme_cache_prefix = vim.fn.stdpath("cache") .. "/thyme/compiled"

vim.opt.rtp:prepend(thyme_cache_prefix)

And now we’re ready to write the rest of the config with Fennel!

-- Load the rest of the config with transparent fennel support.

require("config")

Now we can continue with Fennel in fnl/config.fnl or fnl/config/init.fnl:

;; Load all plugins

(require :plugins)

;; Load the other config files

(require :config.colorscheme)

(require :config.keymaps)

(require :config.lsp)

;; etc...

There’s one last thing we should do to make the bootstrap complete: we should call :ThymeCacheClear when nvim-laurel or nvim-thyme changes.

The recommended way is to use the PackChanged event, with something like this:

vim.api.nvim_create_autocmd("PackChanged", {

callback = function(event)

local name = event.data.spec.name

if name == "nvim-thyme" or name == "nvim-laurel" then

require("thyme").setup()

vim.cmd("ThymeCacheClear")

end

end,

group = vim.api.nvim_create_augroup("init.lua", { clear = true }),

})

vim.pack.add(...)

But if we, for example, force an update for nvim-laurel (by deleting it with vim.pack.del({"nvim-laurel"}) and restarting Neovim) we get this error:

Error in /home/tree/code/nvim-conf/init.lua..PackChanged Autocommands for "*":

Lua callback: /home/tree/code/nvim-conf/init.lua:12: module 'thyme' not found:

no field package.preload['thyme']

cache_loader: module 'thyme' not found

cache_loader_lib: module 'thyme' not found

no file './thyme.lua'

...

There is no order guarantee for the packages and so PackChanged for nvim-laurel may run before thyme has been loaded.

I worked around this with a variable that I check after vim.pack.add, which will guarantee that all packages have been added before we try to require a package:

local rebuild_thyme = false

vim.api.nvim_create_autocmd("PackChanged", {

callback = function(event)

local name = event.data.spec.name

if name == "nvim-thyme" or name == "nvim-laurel" then

rebuild_thyme = true

end

end,

group = vim.api.nvim_create_augroup("init.lua", { clear = true }),

})

vim.pack.add(...)

table.insert(package.loaders, function(...)

return require("thyme").loader(...)

end)

local thyme_cache_prefix = vim.fn.stdpath("cache") .. "/thyme/compiled"

vim.opt.rtp:prepend(thyme_cache_prefix)

require("thyme").setup()

-- Rebuild thyme cache after `vim.pack.add` to avoid dependency issues

-- and to make sure all packages are loaded.

if rebuild_thyme then

vim.cmd("ThymeCacheClear")

end

I wanted to migrate to vim.pack but it’s missing a few key features from lazy.nvim, such as lazy loading and running build scripts (for example make after install or update).

I could’ve given up and gone back to lazy.nvim but that just wouldn’t do.

I want to be able to create a file under plugins/, have it return a vim.pack.Spec, and have it automatically added. This is similar to the structured plugins approach of lazy.nvim.

To build this I first list all files under plugins/ like so:

;; List all files, with absolute paths.

(local paths (-> (vim.fn.stdpath "config")

(.. "/fnl/plugins/*")

(vim.fn.glob)

(vim.split "\n")))

This uses Fennel’s -> threading macro, Fennel’s version of the pipe operator.

It’s one of my favorite features of Elixir and I was stoked to discover that Fennel has it too.

(Fennel actually has even more power with the ->>, -?>, and -?>> operators!)
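If you haven’t met threading macros before, here is a rough Lua sketch of what the `->` form above amounts to: each step’s result becomes the first argument of the next call. The intermediate local names are mine and purely illustrative; Fennel expands the macro into nested calls rather than separate locals. Like the original, it only runs inside Neovim.

```lua
-- Illustrative only: the local names below are assumptions, not part of the real config.
-- (vim.fn.stdpath "config")
local config_dir = vim.fn.stdpath("config")
-- (.. "/fnl/plugins/*") with the previous value threaded in as first argument
local pattern = config_dir .. "/fnl/plugins/*"
-- (vim.fn.glob)
local globbed = vim.fn.glob(pattern)
-- (vim.split "\n")
local paths = vim.split(globbed, "\n")
```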

Now we need to loop through and transform the paths to relative paths and evaluate the files to get our specs. (I’m using accumulate to explicitly build a list instead of collect as we’ll soon expand on it):

;; Make the paths relative to plugins and remove extension, e.g. "plugins/snacks"

;; and require those packages to get our pack specs.

(local specs (accumulate [acc [] _ abs_path (ipairs paths)]

(do

(local path (string.match abs_path "(plugins/[^./]+)%.fnl$"))

(if (and path (not= path "plugins/init"))

(do

(local mod_res (require path))

(table.insert acc mod_res)

acc)

acc))))

Now we can populate specs from files under plugins/, for example with a file like this that returns a single spec:

{:src "https://github.com/romainl/vim-cool"}

But I also want to be able to return multiple specs:

[{:src "https://github.com/nvim-lua/popup.nvim"}

{:src "https://github.com/nvim-lua/plenary.nvim"}]

To support this we can match on the return value to see if it’s a list, and then loop and insert each spec in the list, otherwise we do as before:

(local specs (accumulate [acc [] _ abs_path (ipairs paths)]

(do

(local path (string.match abs_path "(plugins/[^./]+)%.fnl$"))

(if (and path (not= path "plugins/init"))

(do

(local mod_res (require path))

(case mod_res

;; Flatten return if we return a list of specs.

[specs]

(each [_ spec (ipairs mod_res)]

(table.insert acc spec))

;; Can return a string or a single spec.

_

(table.insert acc mod_res))

acc)

acc))))

Now all that’s left is to call vim.pack.add with our list of specs and our plugins are now automatically added from files under plugins/:

(vim.pack.add specs {:confirm false})
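To make the flattening rule easier to see in isolation, here is a minimal plain-Lua sketch of the same idea. The function name `collect_specs` and the sample data are hypothetical; it assumes, like the `[specs]` pattern above, that a "list of specs" is any table with a non-nil first element.

```lua
-- Hypothetical helper: flatten module results into one list of specs.
local function collect_specs(results)
  local acc = {}
  for _, res in ipairs(results) do
    if type(res) == "table" and res[1] ~= nil then
      -- A list of specs: insert each one individually.
      for _, spec in ipairs(res) do
        acc[#acc + 1] = spec
      end
    else
      -- A single spec table or a bare string: insert as-is.
      acc[#acc + 1] = res
    end
  end
  return acc
end

-- Made-up sources, only to show the three shapes a file may return.
local flat = collect_specs({
  { src = "a" },
  { { src = "b" }, { src = "c" } },
  "d",
})
```

One consequence of this rule is that an empty list returned from a file would be treated as a single (empty) spec, so it’s probably best to not return empty lists.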

lze is a nice and simple plugin to add lazy-loading to vim.pack.

We’ve already added it as a dependency in our init.lua so all we need to do is modify the load parameter to vim.pack.add like so:

;; Override loader when adding to let lze handle lazy loading

;; when specified via the `data` attribute.

(vim.pack.add specs {:load (fn [p]

(local spec (or p.spec.data {}))

(set spec.name p.spec.name)

(local lze (require :lze))

(lze.load spec))

:confirm false})

Now we can specify lazy loading via the data parameter in our specs:

{:src "https://github.com/romainl/vim-cool"

:data {:event ["BufReadPost" "BufNewFile"]}}

It relies on wrapping configuration under data but that’s annoying, so let’s simplify things a little.

The idea here is to transform the specs before we call vim.pack.add.

We can do it easily when we collect our specs by calling the transform_spec function:

(local specs (accumulate [acc [] _ abs_path (ipairs paths)]

(do

(local path (string.match abs_path "(plugins/[^./]+)%.fnl$"))

(if (and path (not= path "plugins/init"))

(do

(local mod_res (require path))

(case mod_res

[specs]

(each [_ spec (ipairs mod_res)]

(table.insert acc (transform_spec spec)))

_

(table.insert acc (transform_spec mod_res)))

acc)

acc))))

I want transform_spec to transform this:

{:src "https://github.com/romainl/vim-cool"

:event ["BufReadPost" "BufNewFile"]}

Into this:

{:src "https://github.com/romainl/vim-cool"

:data {:event ["BufReadPost" "BufNewFile"]}}

By storing keys other than src, name, and version under a data table.

This is what I came up with:

(λ transform_spec [spec]

"Transform a vim.pack spec and move lze arguments into `data`"

(case spec

{}

(do

;; Split keys to vim.pack and rest into `data`.

(local pack_args {})

(local data_args {})

(each [k v (pairs spec)]

(if (vim.list_contains [:src :name :version] k)

(tset pack_args k v)

(tset data_args k v)))

(set pack_args.data data_args)

pack_args)

;; Bare strings are valid vim.pack specs too.

other

other))
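For those more at home in Lua, this is roughly the same transformation written as plain Lua. It’s a sketch rather than the code from my config: the `PACK_KEYS` lookup table is an assumption I use here in place of `vim.list_contains`, so it runs outside Neovim too.

```lua
-- Keys that vim.pack itself understands; everything else is treated
-- as an lze argument and moved under `data`.
local PACK_KEYS = { src = true, name = true, version = true }

local function transform_spec(spec)
  -- Bare strings are valid vim.pack specs and pass through untouched.
  if type(spec) ~= "table" then
    return spec
  end
  local pack_args, data_args = {}, {}
  for k, v in pairs(spec) do
    if PACK_KEYS[k] then
      pack_args[k] = v
    else
      data_args[k] = v
    end
  end
  pack_args.data = data_args
  return pack_args
end

local out = transform_spec({
  src = "https://github.com/romainl/vim-cool",
  event = { "BufReadPost", "BufNewFile" },
})
```

After the call, `out.event` is gone from the top level and lives under `out.data.event` instead.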

Another quality of life feature I’d like is to make it simpler to call setup functions.

lazy.nvim again does this well and it’s pretty convenient.

For example, this is what it looks like with lze to add an after hook and call a setup function:

{:src "https://github.com/A7Lavinraj/fyler.nvim"

:on_require :fyler

:after (λ []

(local fyler (require :fyler))

(fyler.setup {:icon_provider "nvim_web_devicons"

:default_explorer true}))}

What if we could instead do:

{:src "https://github.com/A7Lavinraj/fyler.nvim"

:on_require :fyler

:setup {:icon_provider "nvim_web_devicons" :default_explorer true}}

But this is just data and we can transform the second case to the first one fairly easily.

In the transform_spec function:

(λ transform_spec [spec]

"Transform a vim.pack spec and move lze arguments into `data`

and create an `after` hook if `setup` is specified."

(case spec

{}

(do

;; Split keys to vim.pack and rest into `data`.

(local pack_args {})

(local data_args {})

(each [k v (pairs spec)]

(if (vim.list_contains [:src :name :version] k)

(tset pack_args k v)

(tset data_args k v)))

(λ after [args]

;; Call `setup()` functions if needed.

(when spec.setup

(local pkg (require spec.on_require))

(pkg.setup spec.setup))

;; Load user specified `after` if it exists.

(when spec.after

(spec.after args)))

(set data_args.after after)

(set pack_args.data data_args)

pack_args)

;; Bare strings are valid vim.pack specs too.

other

other))

How do we figure out the package name to require (since it may differ from the path)?

lazy.nvim has a bunch of rules to try to figure this out automatically but I chose to be explicit.

lze uses the on_require argument so it can load on a require call (on (require :fyler) for example), which seems like a good idea to reuse.

And just to prevent me from making mistakes, I added a sanity check:

;; `:setup` needs to know what package to require,

;; therefore we use `:on_require`

(when (and spec.setup (not spec.on_require))

(error (.. "`:setup` specified without `on_require`: "

(vim.inspect spec))))

(λ after [args]

;; ...

There’s one last feature I really want from lazy.nvim and that’s to automatically run build scripts after a package is installed or updated.

I basically want to specify this in my specs:

{:src "https://github.com/eraserhd/parinfer-rust"

:build ["cargo" "build" "--release"]}

Again, we’ll rely on PackChanged for this:

;; Before `vim.pack.add` to capture changes.

(augroup! :plugin_init (au! :PackChanged pack_changed))

The above code uses macros from nvim-laurel to define an autocommand that calls the pack_changed function.

That function will then run execute_build when the package is updated or installed:

(λ pack_changed [event]

(when (vim.list_contains [:update :install] event.data.kind)

(execute_build event.data))

;; Return false to not remove the autocommand.

false)

(λ execute_build [pack]

;; `?.` will prevent crashing if any field is nil.

(local build (?. pack :spec :data :build))

(when build

(run_build_script build pack)))

To run the scripts I use vim.system with some simple printing:

(λ run_build_script [build pack]

(local path pack.path)

(vim.notify (.. "Run `" (vim.inspect build) "` for " pack.spec.name)

vim.log.levels.INFO)

(vim.system build {:cwd path}

(λ [exit_obj]

(when (not= exit_obj.code 0)

;; If I use `vim.notify` it errors with:

;; vim/_editor.lua:0: E5560: nvim_echo must not be called in a fast event context

;; Simply printing is fine I guess, it doesn't have to be the prettiest solution.

(print (vim.inspect build) "failed in" path

(vim.inspect exit_obj))))))

This will now allow us to run build scripts like cargo build --release or make after a package is installed or updated.

It’s a bit too basic as there’s no visible progress bar for long running builds (Rust, I’m looking at you!) and it doesn’t handle build errors that well but it works well enough I guess.

But what about user commands or requiring a package? For example with nvim-treesitter you’d want to run :TSUpdate after an update, something like this:

{:src "https://github.com/nvim-treesitter/nvim-treesitter"

:version :main

:build #(vim.cmd "TSUpdate")}

Let’s try it by allowing functions in the build parameter (and bare strings because why not):

(λ execute_build [pack]

(local build (?. pack :spec :data :build))

(when build

(case (type build)

;; We can specify either "make" or ["make"]

"string" (run_build_script [build] pack)

"table" (run_build_script build pack)

;; Run a callback instead.

"function" (call_build_cb build pack))))

(λ call_build_cb [build pack]

(vim.notify (.. "Call build hook for " pack.spec.name) vim.log.levels.INFO)

(build pack))

If we run this though it doesn’t work:

Error in /home/tree/code/nvim-conf/init.lua..PackChanged Autocommands for "*":

Lua callback: vim/_editor.lua:0: /home/tree/code/nvim-conf/init.lua..PackChanged Autocommands for "*"..script nvim_exec2() called

at PackChanged Autocommands for "*":0, line 1: Vim:E492: Not an editor command: TSUpdate

The problem is that PackChanged is run before the pack is loaded.

Maybe we could work around this by calling packadd ourselves but that would shortcut lazy loading.

In this instance we’d like to run TSUpdate after the pack is loaded but only if it’s been updated or installed so we don’t run it after every restart.

What I did was introduce an after_build parameter to the spec that’s run after load if a PackChanged event was seen before:

{:src "https://github.com/nvim-treesitter/nvim-treesitter"

:version :main

:after_build #(vim.cmd "TSUpdate")}

Then in plugins/init.fnl I use a global variable packs_changed (stored in vim.g) that’s updated on PackChanged like so:

;; Capture packs that are updated or installed.

(g! :packs_changed {})

(λ set_pack_changed [name event]

;; Maybe there's an easier way of updating a table global...?

(var packs vim.g.packs_changed)

(tset packs name event)

(g! :packs_changed packs))

(λ pack_changed [event]

(when (vim.list_contains [:update :install] event.data.kind)

(local pack event.data)

(set_pack_changed pack.spec.name event)

(execute_build pack))

false)

Then we’ll call after_build from the after hook we set up before:

(λ transform_spec [spec]

(case spec

{}

(do

;; Split keys to vim.pack and rest into `data`.

;; ...

(λ after [args]

(local pack_changed_event (. vim.g.packs_changed args.name))

(set_pack_changed args.name false)

(when spec.setup

(local pkg (require spec.on_require))

(pkg.setup spec.setup))

;; Run `after_build` scripts if a `PackChanged` event

;; was run with `install` or `update`.

(when (and spec.after_build pack_changed_event)

(spec.after_build args))

(when spec.after

(spec.after args)))

(set data_args.after after)

(set pack_args.data data_args)

pack_args)

other

other))
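Stripped of the Neovim specifics, the moving parts above boil down to a small deferred-flag pattern: remember that a pack changed, then act on it once the pack has loaded. Here is a minimal plain-Lua sketch of that idea. All names (`changed`, `on_pack_changed`, `after_load`) are hypothetical and it uses a plain local table instead of `vim.g`.

```lua
-- Hypothetical names throughout; this mirrors the idea, not the real config.
local changed = {}

-- Called from a PackChanged-style event: remember installs and updates.
local function on_pack_changed(name, kind)
  if kind == "install" or kind == "update" then
    changed[name] = true
  end
end

-- Called once the plugin has been loaded: run the hook only if flagged,
-- and clear the flag first so it can't fire twice.
local function after_load(name, after_build)
  if changed[name] and after_build then
    changed[name] = nil
    after_build()
  end
end

local runs = 0
local function hook()
  runs = runs + 1
end

on_pack_changed("nvim-treesitter", "update")
after_load("nvim-treesitter", hook) -- flagged, so the hook runs
after_load("nvim-treesitter", hook) -- flag cleared, nothing happens
on_pack_changed("other-plugin", "delete")
after_load("other-plugin", hook) -- deletes are never flagged
```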

With this we can finally specify build actions such as these (a real spec would use only one of the :build forms):

{:build "make"

:build ["cargo" "build" "--release"]

:after_build #(vim.cmd "TSUpdate")}

You’ve already seen what Fennel code looks like but what about configuration with Fennel? One of the downsides of moving my configuration from Vimscript to Lua was that simple things such as setting options or simple keymaps are more verbose.

So how does Fennel compare for the simpler, more declarative stuff?

set relativenumber

set clipboard^=unnamed,unnamedplus

set backupdir=~/.config/nvim/backup

let mapleader=" "

vim.opt.relativenumber = true

vim.opt.clipboard:append({ "unnamed", "unnamedplus" })

vim.opt.backupdir = vim.fn.expand("~/.config/nvim/backup")

vim.g.mapleader = [[ ]]

(set! :relativenumber true)

(set! :clipboard + ["unnamed" "unnamedplus"])

(set! :backupdir (vim.fn.expand "~/.config/nvim/backup"))

(g! :mapleader " ")

With nvim-laurel macros I think Fennel is decent. Slightly better than Lua but not as convenient as Vimscript.

local map = vim.keymap.set

map("n", "<localleader>D", vim.lsp.buf.declaration,

{ silent = true, buffer = buffer, desc = "Declaration" }

)

map("n", "<leader>ep", function() find_org_file("projects") end,

{ desc = "Org projects" }

)

map("n", "]t", function()

require("trouble").next({ skip_groups = true, jump = true })

end, {

desc = "Trouble next",

silent = true,

})

(bmap! :n "<localleader>D" vim.lsp.buf.declaration

{:silent true :desc "Declaration"})

(map! :n "<leader>ep" #(find_org_file "projects")

{:desc "Org projects"})

(map! :n "]t" #(do

(local trouble (require :trouble))

(trouble.next {:skip_groups true :jump true}))

{:silent true :desc "Trouble next"})

Not a huge difference to be honest.

I like the #(do_the_thing) shorthand for anonymous functions Fennel has.

Having to (sometimes) split up require and method calls on separate lines in Fennel is annoying.

One example that was a big step up with Fennel is overriding highlight groups. I’m using melange which is a fantastic and underrated color scheme but I’ve collected a fair bit of overrides for it.

In Lua you use nvim_set_hl to add an override, for example like this:

vim.api.nvim_set_hl(0, "@symbol.elixir", { link = "@label" })

Doing this 100 times gets annoying so I made an override table to accomplish the job:

local overrides = {

{ name = "@symbol.elixir", val = { link = "@label" } },

{ name = "@string.special.symbol.elixir", val = { link = "@label" } },

{ name = "@constant.elixir", val = { link = "Constant" } },

-- And around 100 other overrides...

}

for _, v in pairs(overrides) do

vim.api.nvim_set_hl(0, v.name, v.val)

end

In Fennel with the hi! macro this all becomes as simple as:

(hi! "@symbol.elixir" {:link "@label"})

(hi! "@string.special.symbol.elixir" {:link "@label"})

(hi! "@constant.elixir" {:link "Constant"})

Here are some autocommands to enable cursorline only in the currently active window (while skipping buffers such as the dashboard):

local group = augroup("my-autocmds", { clear = true })

autocmd({ "VimEnter", "WinEnter", "BufWinEnter" }, {

group = group,

callback = function(x)

if string.len(x.file) > 0 then

vim.opt_local.cursorline = true

end

end,

})

autocmd("WinLeave", {

group = group,

callback = function()

vim.opt_local.cursorline = false

end,

})

(augroup! :my-autocmds

(au! [:VimEnter :WinEnter :BufWinEnter]

#(do

(when (> (string.len $1.file) 0)

(let! :opt_local :cursorline true))

false))

(au! :WinLeave #(do

(let! :opt_local :cursorline false)

false)))

One thing I like more in Lua compared to Fennel is how readable tables are. The Fennel formatter fnlfmt might be partly to blame as it has a tendency to use very little whitespace. Regardless, I prefer this Lua code:

return {

"https://github.com/stevearc/conform.nvim",

{ src = "https://github.com/mason-org/mason.nvim", dep_of = "mason-lspconfig.nvim" },

{ src = "https://github.com/neovim/nvim-lspconfig", dep_of = "mason-lspconfig.nvim" },

"https://github.com/mason-org/mason-lspconfig.nvim",

{

src = "https://github.com/nvim-treesitter/nvim-treesitter",

version = "main",

after = function()

vim.cmd("TSUpdate")

end,

},

}

Over this corresponding Fennel code:

["https://github.com/stevearc/conform.nvim"

{:src "https://github.com/mason-org/mason.nvim" :dep_of :mason-lspconfig.nvim}

{:src "https://github.com/neovim/nvim-lspconfig" :dep_of :mason-lspconfig.nvim}

"https://github.com/mason-org/mason-lspconfig.nvim"

{:src "https://github.com/nvim-treesitter/nvim-treesitter"

:version :main

:after #(vim.cmd "TSUpdate")}]

To me the Lua code is for some reason easier to read.

Similarly I don’t have a problem with this lazy.nvim spec:

return {

"folke/snacks.nvim",

priority = 1000,

lazy = false,

opts = {

indent = {

indent = {

enabled = true,

char = "┆",

},

scope = {

enabled = true,

only_current = true,

},

},

scroll = {

animate = {

duration = { step = 15, total = 150 },

},

},

explorer = {},

},

}

But with this new Fennel spec I use—even though it’s simpler in some ways—it’s harder for me to quickly see what table the keys belong to:

{:src "https://github.com/folke/snacks.nvim"

:on_require :snacks

:lazy false

:setup {:indent {:indent {:enabled true :char "┆"}

:scope {:enabled true :only_current true}}

:scroll {:animate {:duration {:step 15 :total 150}}}

:explorer {}}}

Maybe it’s something you’ll get used to?

Neovim is moving quickly and I’ve had a bit of catching up to do in the plugin department. I won’t bore you with an exhaustive list; just a few highlights.

I’ve been using undotree for a long time and it’s excellent. This feature was recently merged into Neovim:

;; It's optional so we need to use packadd to activate the plugin:

(vim.cmd "packadd nvim.undotree")

;; Then we can add a keymap to open it:

(map! :n "<leader>u" #(: (require :undotree) :open {:command "topleft 30vnew"}))

Neovim routinely gets shit on for LSPs being so hard to set up. Yes, it could probably be easier, but Neovim has recently made some changes to streamline LSP configuration and it’s not nearly as involved as it used to be.

Here’s what my base config looks like:

(require-macros :macros)

;; Convenient way of installing LSPs and other tools.

(local mason (require :mason))

(mason.setup)

;; Convenient way of automatically enabling LSPs installed via Mason.

(local mason-lspconfig (require :mason-lspconfig))

(mason-lspconfig.setup {:automatic_enable true})

;; Show diagnostics as virtual lines on the current line.

;; It's pretty cool actually, you should try it out.

(vim.diagnostic.config {:virtual_text false

:severity_sort true

:virtual_lines {:current_line true}})

;; I like inlay hints.

(vim.lsp.inlay_hint.enable true)

(augroup! :my-lsps

(au! :LspAttach

(λ [_]

(local snacks (require :snacks))

(bmap! :n "<localleader>D" snacks.picker.lsp_declarations

{:silent true :desc "Declaration"})

(bmap! :n "<localleader>l"

#(vim.diagnostic.open_float {:focusable false})

{:silent true :desc "Diagnostics"})

;; etc

I also use nvim-lspconfig but it doesn’t do anything magical (anymore). It’s basically a collection of LSP configs, so I don’t have to fill my config with things like this:

(vim.lsp.config "expert"

{:cmd ["expert"]

:root_markers ["mix.exs" ".git"]

:filetypes ["elixir" "eelixir" "heex"]})

(vim.lsp.enable "expert")

If you don’t want to change the keymaps (Neovim comes with defaults that I personally dislike) or customize specific LSPs then there’s not that much left. Mason is also totally optional and if you want to manage your LSPs outside of Neovim you can totally do that. The only thing missing is autocomplete, which blink.cmp provides out of the box.

Another thing that has changed since my last config overhaul is that nvim-treesitter has been rewritten and is now a much simpler plugin. The new version lives on the main branch (the old, archived one is on master) and it contains a bunch of breaking changes.

For example, it no longer supports installing and activating grammars automatically. I think I saw a plugin for that somewhere but here’s some Fennel code that sets it up:

(require-macros :macros)

(local nvim-treesitter (require :nvim-treesitter))

;; Ignore auto install for these filetypes:

(local ignored_ft [])

(augroup! :treesitter

(au! :FileType

(λ [args]

(local bufnr args.buf)

(local ft args.match)

;; Auto install grammars unless explicitly ignored.

(when (not (vim.list_contains ignored_ft ft))

(: (nvim-treesitter.install ft) :await

(λ []

;; Enable highlight only if there's an installed grammar.

(local installed (nvim-treesitter.get_installed))

(when (and (vim.api.nvim_buf_is_loaded bufnr)

(vim.list_contains installed ft))

(vim.treesitter.start bufnr))))))))

If you use nvim-treesitter-textobjects (which you should) remember to migrate to the main branch there too.

fyler.nvim, edit a file explorer like a buffer

oil.nvim is a great plugin that allows you to manage files by simply editing text. fyler.nvim takes it to the next level by combining it with a tree-style file explorer.

blink.cmp, faster autocomplete

I’ve been using nvim-cmp as my completion plugin but I migrated to blink.cmp as it’s faster and more actively maintained. It’s too bad that it broke my custom nvim-cmp source for my blog but it wasn’t too hard to migrate.

snacks.nvim, a better picker

telescope.nvim has been a solid picker but it’s no longer actively developed, and snacks.nvim is the replacement I settled on.

I tried fff.nvim for file picking but surprisingly it felt really slow compared to snacks.nvim. fzf-lua is another great alternative that I haven’t given enough attention to.

grug-far.nvim, global query replace

I’ve been happy with Neovim’s regular %s/foo/bar for single files (aided by search-replace.nvim for easy population).

But query replace in multiple files has always felt lacking.

I used to use telescope.nvim to populate the quickfix window and then use replacer.nvim to make it editable, updating multiple files.

It worked but was a bit annoying so now I’m trying grug-far.nvim as a more “over engineered” solution. I haven’t used it long enough to say for sure but I’m hopeful.

It would be better to gradually evolve your Neovim config over time instead of doing these large rewrites. But afterwards it feels pretty good as I can once more try to claim with a straight face that I know what’s in my configuration and what it’s doing.

The vim.pack migration was more painful than I had expected. It’s still an experimental nightly feature and it’s missing a lot of nice features that lazy.nvim has. I’ll keep using vim.pack as I think I’ve gotten it to a state of good enough but I’m looking forward to vim.pack becoming more feature complete.

Fennel is fun to write in and I will keep using it where I can. To be honest though, for basic configuration I was expecting Fennel to make a bigger difference than it did. It’s nicer for sure but it’s nothing revolutionary.

Then again, it’s the little things in life that matter.

2025-09-01 08:00:00

I recently bought a couple of Hue Tap Dial switches for our house to enhance our smart home. We have quite a few smart lights and I figured the tap dial, with its multiple buttons and rotary dial, would be a good fit.

Since I’ve been moving away from standard Home Assistant automations to my own automation engine in Elixir I had to figure out how best to integrate the tap dial.

At first I tried to rely on my existing Home Assistant connection but I realized that it’s better to bypass Home Assistant and go directly via MQTT, as I already use Zigbee2MQTT as the way to get Zigbee devices into Home Assistant.

This post walks through how I set it all up, and I’ll end with an example of how I control multiple Zigbee lights from one dial via Elixir.

I’m a huge fan of using direct bindings in Zigbee to directly pair switches to lights. This way the interaction is much snappier; instead of going through:

device 1 -> zigbee2mqtt -> controller -> zigbee2mqtt -> device 2

The communication can instead go:

device 1 -> device 2

It works if my server is down, which is a huge plus for important functionality such as turning on the light in the middle of the night when one of the kids has soiled the bed. That’s not the time you want to debug your homelab setup!

The Hue Tap Dial can be bound to lights with Zigbee2MQTT and dimming the lights with the dial feels very smooth and nice. You can also rotate the dial to turn on and off the light, like a normal dimmer switch.

Unfortunately, if you want to bind the dimmer it also binds the hold of all of the four buttons to turn off the light, practically blocking the hold functionality if you use direct binding. There’s also no way to directly bind a button press to turn on or toggle the light—dimming and hold to turn off is what you get.

To add more functionality you have to use something external; a Hue Bridge or Home Assistant with a Zigbee dongle works, but I wanted to use Elixir.

The first thing we need to figure out is how to receive MQTT messages and how to send updates to Zigbee devices.

I found the tortoise311 library that implements an MQTT client and it was quite pleasant to use.

First we’ll start a Tortoise311.Connection in our main Supervisor tree:

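{Tortoise311.Connection,
 [
   # Remember to generate a unique id if you want to connect multiple clients
   # to the same MQTT service.
   client_id: :my_unique_client_id,
   # They don't have to be on the same server.
   # (Host and port here are placeholders; point them at your MQTT broker.)
   server: {Tortoise311.Transport.Tcp, host: "localhost", port: 1883},
   # Messages will be sent to `Haex.MqttHandler`.
   handler: {Haex.MqttHandler, []},
   # Subscribe to all events under `zigbee2mqtt`.
   subscriptions: [{"zigbee2mqtt/+", 0}]
 ]},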

I’ll also add Phoenix PubSub to the Supervisor, which we’ll use to propagate MQTT messages to our automation:

{Phoenix.PubSub, name: Haex.PubSub},

When starting Tortoise311.Connection above we configured it to call the Haex.MqttHandler whenever an MQTT message we’re subscribing to is received.

Here we’ll simply forward any message to our PubSub, making it easy for anyone to subscribe to any message, wherever they are:

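defmodule Haex.MqttHandler do
  use Tortoise311.Handler
  alias Phoenix.PubSub

  def handle_message(topic, payload, state) do
    payload = Jason.decode!(payload)
    # `topic` arrives as a list of levels, so we join it back into a string.
    # The `{:mqtt, topic, payload}` message shape is just a convention;
    # subscribers need to match on the same shape.
    PubSub.broadcast!(Haex.PubSub, Enum.join(topic, "/"), {:mqtt, topic, payload})
    {:ok, state}
  end
end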

Then in our automation (which in my automation system is a regular GenServer) we can subscribe to the events the Tap Dial creates:

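# (The module name is a placeholder.)
defmodule Haex.TapDialAutomation do
  use GenServer
  alias Phoenix.PubSub

  @impl true
  def init(_opts) do
    # `My tap dial` is the name of the tap dial in zigbee2mqtt.
    PubSub.subscribe(Haex.PubSub, "zigbee2mqtt/My tap dial")
    {:ok, %{}}
  end

  @impl true
  # Assumes the handler broadcasts `{:mqtt, topic, payload}` messages.
  def handle_info({:mqtt, _topic, payload}, state) do
    dbg(payload)
    {:noreply, state}
  end
end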

If everything is set up correctly we should see messages like these when we operate the Tap Dial:

payload #=> %{

"action" => "button_1_press_release",

...

}

payload #=> %{

"action" => "dial_rotate_right_step",

"action_direction" => "right",

"action_time" => 15,

"action_type" => "step",

...

}

To change the state of a device we should send a JSON payload to the “set” topic.

For example, to turn off a light named My hue light we should send the payload {"state": "OFF"} to zigbee2mqtt/My hue light/set.

Here’s a function to send payloads to our light:

def set(payload) do
  Tortoise311.publish(
    # Important that this id matches the `client_id`
    # we gave to Tortoise311.Connection.
    :my_unique_client_id,
    "zigbee2mqtt/My hue light/set",
    Jason.encode!(payload)
  )
end

With the MQTT communication done, we can start writing some automations.

Here’s how we can toggle the light on/off when we click the first button on the dial in our GenServer:

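# Assumes MQTT messages arrive as `{:mqtt, topic, payload}`.
def handle_info({:mqtt, _topic, %{"action" => "button_1_press_release"}}, state) do
  set(%{state: "TOGGLE"})
  {:noreply, state}
end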

(Remember that we subscribed to the zigbee2mqtt/My tap dial topic during init.)

You can also hold a button, which generates a hold and a hold_release event.

Here’s how to use them to start moving through the hues of a light when you hold down a button and stop when you release it.

set(%)

end

set(%)

end

How about double clicking?

You could track the timestamp of the presses in the GenServer state and check the duration between them to determine if it’s a double click or not; maybe something like this:

double_click_limit = 350

now = DateTime.utc_now()

if state[:last_press] &&

DateTime.diff(now, state[:last_press], :millisecond) < double_click_limit do

# If we double clicked.

set(%)

else

# If we single clicked.

set(%)

end

end

This however executes an action on the first and second click. To get around that we could add a timeout for the first press by sending ourselves a delayed message, with the downside of introducing a small delay for single clicks:

double_click_limit = 350

now = DateTime.utc_now()

if state[:last_press] &&

DateTime.diff(now, state[:last_press], :millisecond) < double_click_limit do

set(%)

# The double click clause is the same as before except we also remove `click_ref`

# to signify that we've handled the interaction as a double click.

state =

state

|> Map.delete(:last_press)

|> Map.delete(:click_ref)

else

# When we first press a key we shouldn't execute it directly,

# instead we send ourself a message to handle it later.

# Use `make_ref` to signify which press we should handle.

ref = make_ref()

Process.send_after(self(), , double_click_limit)

state =

state

|> Map.put(:last_press, now)

|> Map.put(:click_ref, ref)

end

end

# This is the delayed handling of a single button press.

# If the stored reference doesn't exist we've handled it as a double click.

# If we press the button many times (completely mash the button) then

# we might enter a new interaction and `click_ref` has been replaced by a new one.

# This equality check prevents such a case, allowing us to only act on the very

# last press.

# This is also useful if we in the future want to add double clicks to other buttons.

if state[:click_ref] == ref do

set(%)

else

end

end
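
To make the delayed single click idea concrete, here is a minimal, self-contained sketch of it as a plain GenServer with no MQTT involved. Everything named here (`ClickDemo`, the `:press` message, the `{:execute_single_press, ref}` tuple and the `notify` pid) is made up for illustration and is not the code from my automation system:

```elixir
defmodule ClickDemo do
  use GenServer

  # Hypothetical demo module: reports :single_click or :double_click to `notify`.
  @double_click_limit 350

  def start_link(notify), do: GenServer.start_link(__MODULE__, notify)
  def press(pid), do: send(pid, :press)

  @impl true
  def init(notify), do: {:ok, %{notify: notify}}

  @impl true
  def handle_info(:press, state) do
    now = System.monotonic_time(:millisecond)

    if state[:last_press] && now - state[:last_press] < @double_click_limit do
      # Second press arrived in time: act immediately and forget the pending click.
      send(state.notify, :double_click)
      {:noreply, state |> Map.delete(:last_press) |> Map.delete(:click_ref)}
    else
      # First press: don't act yet, schedule a delayed message tagged with a ref.
      ref = make_ref()
      Process.send_after(self(), {:execute_single_press, ref}, @double_click_limit)
      {:noreply, state |> Map.put(:last_press, now) |> Map.put(:click_ref, ref)}
    end
  end

  def handle_info({:execute_single_press, ref}, state) do
    # Only act if this ref is still the pending one.
    if state[:click_ref] == ref do
      send(state.notify, :single_click)
      {:noreply, Map.delete(state, :click_ref)}
    else
      {:noreply, state}
    end
  end
end
```

Starting it with `ClickDemo.start_link(self())` and calling `ClickDemo.press(pid)` twice in quick succession should deliver a single `:double_click` message, while one lone press delivers `:single_click` after the timeout.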

You can generalize this concept to triple presses and beyond by keeping a list of timestamps instead of the singular one we use in :last_press, but I personally haven’t found a good use-case for them.
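
If you do want to try it, the bookkeeping could look something like this rough sketch. `PressCounter` and `register/3` are hypothetical names, and the timestamps are plain millisecond integers to keep the example pure:

```elixir
defmodule PressCounter do
  # Hypothetical helper. `presses` is a list of earlier press timestamps
  # (newest first). Returns the updated list and the number of presses
  # in the current streak, counting this one.
  def register(presses, now_ms, limit_ms \\ 350) do
    recent =
      case presses do
        # The previous press is close enough: the streak continues.
        [last | _] when now_ms - last < limit_ms -> presses
        # Too long ago (or no earlier press): start a new streak.
        _ -> []
      end

    presses = [now_ms | recent]
    {presses, length(presses)}
  end
end
```

A count of 3 would then be a triple press. The delayed message trick is still needed so that you only act once the streak has ended.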

Now, let’s see if we can create a smooth dimming functionality. This is surprisingly problematic but let’s see what we can come up with.

Rotating the dial produces a few different actions:

dial_rotate_left_step

dial_rotate_left_slow

dial_rotate_left_fast

dial_rotate_right_step

dial_rotate_right_slow

dial_rotate_right_fast

brightness_step_up

brightness_step_down

Let’s start with dial_rotate_* to set the brightness_step attribute of the light:

speed = rotate_speed(type)

set(%)

end

-rotate_speed(speed)

rotate_speed(speed)

10

20

45

This works, but the transitions between the steps aren’t smooth as the light immediately jumps to a new brightness value.

With a transition we can smooth it out:

# I read somewhere that 0.4 is standard for Philips Hue.

set(%)

It’s actually fairly decent (when the stars align).
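
As a self-contained sketch of the dial_rotate_* approach: the function below turns an action name into a signed brightness step and wraps it in a payload with a transition. The module name is hypothetical, and the mapping of step/slow/fast to 10/20/45 is an assumption for illustration:

```elixir
defmodule DialRotate do
  # Assumed mapping from rotation speed to brightness step size.
  @speeds %{"step" => 10, "slow" => 20, "fast" => 45}

  # Parses e.g. "dial_rotate_left_slow" into {:ok, -20}.
  def brightness_step("dial_rotate_" <> rest) do
    with [dir, speed] <- String.split(rest, "_"),
         {:ok, step} <- Map.fetch(@speeds, speed) do
      case dir do
        "left" -> {:ok, -step}
        "right" -> {:ok, step}
        _ -> :error
      end
    else
      _ -> :error
    end
  end

  def brightness_step(_), do: :error

  # Builds the map we would pass on to the light's set topic.
  def payload(action, transition \\ 0.4) do
    case brightness_step(action) do
      {:ok, step} -> {:ok, %{brightness_step: step, transition: transition}}
      :error -> :error
    end
  end
end
```

Rotating left gives a negative step (dimming) and rotating right a positive one.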

As an alternative implementation we can try to use the brightness_step_* actions:

%

=> <> dir,

=> step

}},

state

) do

step =

case dir do

-> step

-> -step

end

# Dimming was a little slow, adding a factor speeds things up.

set(%)

end

This implementation lets the tap dial itself provide the amount of steps and I do think it feels better than the dial_rotate_* implementation.

Note that this won’t completely turn off the light and it’ll stop at brightness 1.

We can instead provide brightness_step_onoff: step to allow the dimmer to turn the light on and off too.
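
Here’s a small sketch of how the brightness_step_* variant could build its payload, including the choice between brightness_step and brightness_step_onoff. `BrightnessStep` is a hypothetical module, the step size is passed in as a plain argument, and the `:factor` option is a made-up knob standing in for the speed-up factor:

```elixir
defmodule BrightnessStep do
  # `action` is "brightness_step_up" or "brightness_step_down",
  # `step` is the step size reported by the dial.
  def payload("brightness_step_" <> dir, step, opts \\ []) do
    factor = Keyword.get(opts, :factor, 1)

    # With `onoff: true` the light may also be turned fully on or off.
    key =
      if Keyword.get(opts, :onoff, false),
        do: :brightness_step_onoff,
        else: :brightness_step

    signed =
      case dir do
        "up" -> step
        "down" -> -step
      end

    %{key => signed * factor}
  end
end
```

For example, `BrightnessStep.payload("brightness_step_down", 8, factor: 2)` gives `%{brightness_step: -16}`.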

One of the reasons I wanted a custom implementation was to be able to do other things with the rotary dial.

For example, maybe I’d like to alter the hue of light by rotating? All we have to do is set the hue instead of the brightness:

set(%)

(This produces a very cool effect!)

Another idea is to change the volume by rotating. Here’s the code that I use to control the volume of our kitchen speakers (via Home Assistant, not MQTT):

rotate: fn step ->

# A step of 8 translates to a volume increase of 3%

volume_step = step / 8 * 3 / 100

# Clamp volume to not accidentally set a very loud or silent volume.

volume =

# HAStates stores the current states in memory whenever a state is changed.

(HAStates.get_attribute(kitchen_player_ha(), :volume_level, 0.2) + volume_step)

|> Math.clamp(0.05, 0.6)

# Calls the `media_player.volume_set` action.

MediaPlayer.set_volume(kitchen_player_ha(), volume)

# Prevents a possible race condition where we use the old volume level

# stored in memory for the next rotation.

HAStates.override_attribute(kitchen_player_ha(), :volume_level, volume)

end
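
The arithmetic is easier to see in isolation. This sketch repeats the same calculation as a pure function, with `Kernel.max/2` and `Kernel.min/2` standing in for the `Math.clamp` helper (the `VolumeStep` module name is hypothetical):

```elixir
defmodule VolumeStep do
  # A step of 8 translates to a volume increase of 3 percentage points,
  # and the result is clamped to the range low..high.
  def next_volume(current, step, low \\ 0.05, high \\ 0.6) do
    volume_step = step / 8 * 3 / 100

    (current + volume_step)
    |> max(low)
    |> min(high)
  end
end
```

So from a volume of 0.2 a step of 8 lands on roughly 0.23, and no amount of rotating pushes the volume past 0.6 or below 0.05.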

We’ve got a bunch of lights in the boys’ bedroom that we can control and it’s a good use-case for a device such as the Tap Dial.

These are the lights we can control in the room:

(Yes, I need to get a lava light for Isidor too. They’re awesome!)

The window light isn’t controlled by the tap dial and there are other automations that control circadian lighting for most of the lights.

I’m opting to use direct binding for two reasons: the interaction is snappier, and it keeps working when my server is down.

Even though it overrides the hold functionality I think direct binding for lights is the way to go.

These are the functions for the tap dial in the boys room:

There are many different ways you can design the interactions and I may switch it up in the future, but for now this works well.

The code I’ve shown you so far has been a little simplified to explain the general approach. As I have several tap dials around the house I’ve made a general tap dial controller with a more declarative approach.

For example, here’s how the tap dial in the boys room is defined:

TapDialController.start_link(

device: boys_room_tap_dial(),

scene: 0,

rotate: fn _step ->

# This disables the existing circadian automation.

# I found that manually disabling it is more reliable than trying to

# detect external changes over MQTT as messages may be delayed and arrive out of order.

LightController.set_manual_override(boys_room_ceiling_light(), true)

end,

button_1: %

click: fn ->

Mqtt.set(boys_room_ceiling_light(), %)

end,

double_click: fn ->

# This function compares the current light status and sets it to 100%

# or reverts back to circadian lighting (if setup for the light).

HueLights.toggle_max_brightness(boys_room_ceiling_light())

end

},

button_2: %

click: fn state ->

# The light controller normally uses circadian lighting to update

# the light. Setting manual override pauses circadian lighting,

# allowing us to manually control the light.

LightController.set_manual_override(boys_room_ceiling_light(), true)

# This function steps through different light states for the ceiling light

# (hue 0..300 with 60 intervals) and stores it in `state`.

next_scene(state)

end

},

button_3: %

click: fn ->

Mqtt.set(boys_room_floor_light(), %)

end,

double_click: fn ->

Mqtt.set(isidor_sleep_light(), %)

end,

hold: fn ->

Mqtt.set(isidor_sleep_light(), %)

end,

hold_release: fn ->

Mqtt.set(isidor_sleep_light(), %)

end

},

button_4: %

click: fn ->

Mqtt.set(loke_lava_lamp(), %)

end,

double_click: fn ->

Mqtt.set(loke_sleep_light(), %)

end,

hold: fn ->

Mqtt.set(loke_sleep_light(), %)

end,

hold_release: fn ->

Mqtt.set(loke_sleep_light(), %)

end

}

)
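
Note that some callbacks above take no arguments while others take the state. One way to support both is to inspect the arity of the function before calling it. Here’s a minimal sketch, where `HandlerCall` is a hypothetical module and the rule that a 1-arity callback returns the new state is an assumption:

```elixir
defmodule HandlerCall do
  # Calls `handler` and returns the (possibly updated) state.
  def call(handler, state) when is_function(handler) do
    case Function.info(handler)[:arity] do
      0 ->
        # Fire and forget: the state is left untouched.
        handler.()
        state

      1 ->
        # Assumed contract: the callback returns the new state.
        handler.(state)

      n ->
        raise ArgumentError, "expected a 0- or 1-arity callback, got arity #{n}"
    end
  end
end
```

This keeps simple callbacks short while still allowing stateful ones such as the scene stepping.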

I’m not going to go through the implementation of the controller in detail. Here’s the code you can read through if you want:

use GenServer

alias Haex.Mqtt

alias Haex.Mock

require Logger

@impl true

# This allows us to setup expectations and to collect what messages

# the controller sends during unit testing.

if parent = opts[:parent] do

Mock.allow(parent, self())

end

device = opts[:device] || raise

# Just subscribes to pubsub under the hood.

Mqtt.subscribe_events(device)

state =

Map.new(opts)

|> Map.put_new(:double_click_timeout, 350)

end

GenServer.start_link(__MODULE__, opts)

end

@impl true

case parse_action(payload) do

->

# We specify handlers with `button_3: %{}` specs.

case fetch_button_handler(button, state) do

->

# Dispatch to action handlers, such as `handle_hold` and `handle_press_release`.

fun.(spec, state)

:not_found ->

end

->

case fetch_rotate_handler(state) do

->

call_handler(cb, step, state)

:not_found ->

end

:skip ->

end

end

# Only execute the callback for the last action.

if state[:click_ref] == ref do

call_handler(cb, Map.delete(state, :click_ref))

else

end

end

single_click_handler = spec[:click]

double_click_handler = spec[:double_click]

cond do

double_click_handler ->

now = DateTime.utc_now()

valid_double_click? =

state[:last_press] &&

DateTime.diff(now, state[:last_press], :millisecond) < state.double_click_timeout

if valid_double_click? do

# Execute a double click immediately.

state =

state

|> Map.delete(:last_press)

|> Map.delete(:click_ref)

call_handler(double_click_handler, state)

else

# Delay single click to see if we get a double click later.

ref = make_ref()

Process.send_after(

self(),

,

state.double_click_timeout

)

state =

state

|> Map.put(:last_press, now)

|> Map.put(:click_ref, ref)

end

single_click_handler ->

# No double click handler, so we can directly execute the single click.

call_handler(single_click_handler, state)

true ->

end

end

call_handler(spec[:hold], state)

end

call_handler(spec[:hold_release], state)

end

end

# If a callback expects one argument we'll also send the state,

# otherwise we simply call it.

case Function.info(handler)[:arity] do

0 ->

handler.()

1 ->

x ->

Logger.error(

)

end

end

end

case Function.info(handler)[:arity] do

1 ->

handler.(arg1)

2 ->

x ->

Logger.error(

)

end

end

step =

case dir do

-> step

-> -step

end

end

case Regex.run(, action, capture: :all_but_first) do

[button, ] ->

[button, ] ->

[button, ] ->

_ ->

:skip

end

end

Logger.debug()

:skip

end

spec = state[ |> String.to_atom()]

if spec do

else

:not_found

end

end

if spec = state[:rotate] do

else

:not_found

end

end

end
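
The action parsing is the part that’s easiest to show on its own. The sketch below is a simplified stand-in, not the controller’s exact code: the `ActionParser` name, the returned tuples, the regex and the `"action_step_size"` key are all assumptions for illustration.

```elixir
defmodule ActionParser do
  # Rotation: turn the direction into a signed step.
  def parse(%{"action" => "brightness_step_" <> dir, "action_step_size" => step}) do
    step = if dir == "down", do: -step, else: step
    {:rotate, step}
  end

  # Buttons: "button_3_press_release" becomes {:button, 3, :click}.
  def parse(%{"action" => action}) when is_binary(action) do
    case Regex.run(~r/^button_(\d)_(\w+)$/, action, capture: :all_but_first) do
      [button, "press_release"] -> {:button, String.to_integer(button), :click}
      [button, "hold"] -> {:button, String.to_integer(button), :hold}
      [button, "hold_release"] -> {:button, String.to_integer(button), :hold_release}
      _ -> :skip
    end
  end

  # Anything else is ignored.
  def parse(_), do: :skip
end
```

Everything that doesn’t match, such as the dial_rotate_* actions or a bare button press event, falls through to `:skip`.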

2025-08-28 08:00:00

Cops say criminals use a Google Pixel with GrapheneOS — I say that’s freedom

We’re in a dark time period right now.

Authoritarianism is on the rise throughout the globe. Governments want to monitor your social media accounts so they can make you disappear if you engage in wrongthink such as opposing wars or genocide. This is worsened by misguided laws like Chat control that would mandate scanning of all digital communication, exposing any wrongthink in your “private messages”.

A rational reaction to threats is to “shell up” and try to make your personal space safe. This is increasingly difficult as the devices you buy often don’t feel like yours anymore. Files are moved to the cloud without your knowledge; companies are doing everything they can to prevent you from blocking the ads they’re shoving in everywhere; and everything you do will soon be ingested by an LLM in order to present personalized slop to you (even your passwords and screenshots of any nasty porn habits you may have).

While you can avoid most of this crap on computers (try Linux if you haven’t) the situation on smartphones is much bleaker. Apple has been blocking sideloading apps for years and Google will soon follow by only allowing apps from verified developers to be installed on Android, preventing you from installing what you want.

(They claim it’s “for security” but it’s obvious they’re doing this to protect their income stream. Apple takes a ridiculous 30% cut from all sales in their walled garden and Google hates the ability to strip out their ads.)

I like the idea of a “dumb phone” but I unfortunately need and want apps on my phone (I consider banking and authentication apps essential to surviving in the modern world, and sometimes you must run an Android or iOS app). A “degoogled” Android-compatible operating system is the answer I see, with GrapheneOS as the exceptional standout.

The big dragons recognize this: Samsung, for example, is removing bootloader unlocking and the EU age verification app may ban Android systems not licensed by Google. In true Streisand fashion this only makes me more motivated to fight back.

When people talk about GrapheneOS they will understandably focus on the privacy and security aspect. I’ll get into that later but I think it’s important to first dispel the idea that GrapheneOS is only for the hardcore tech savvy user or that you’ll have to sacrifice a lot of functionality.

While GrapheneOS isn’t quite as simple as stock Android (I had to tweak a few settings to get some apps working) the experience has been very smooth.

The installation was straightforward and worked without a hitch:

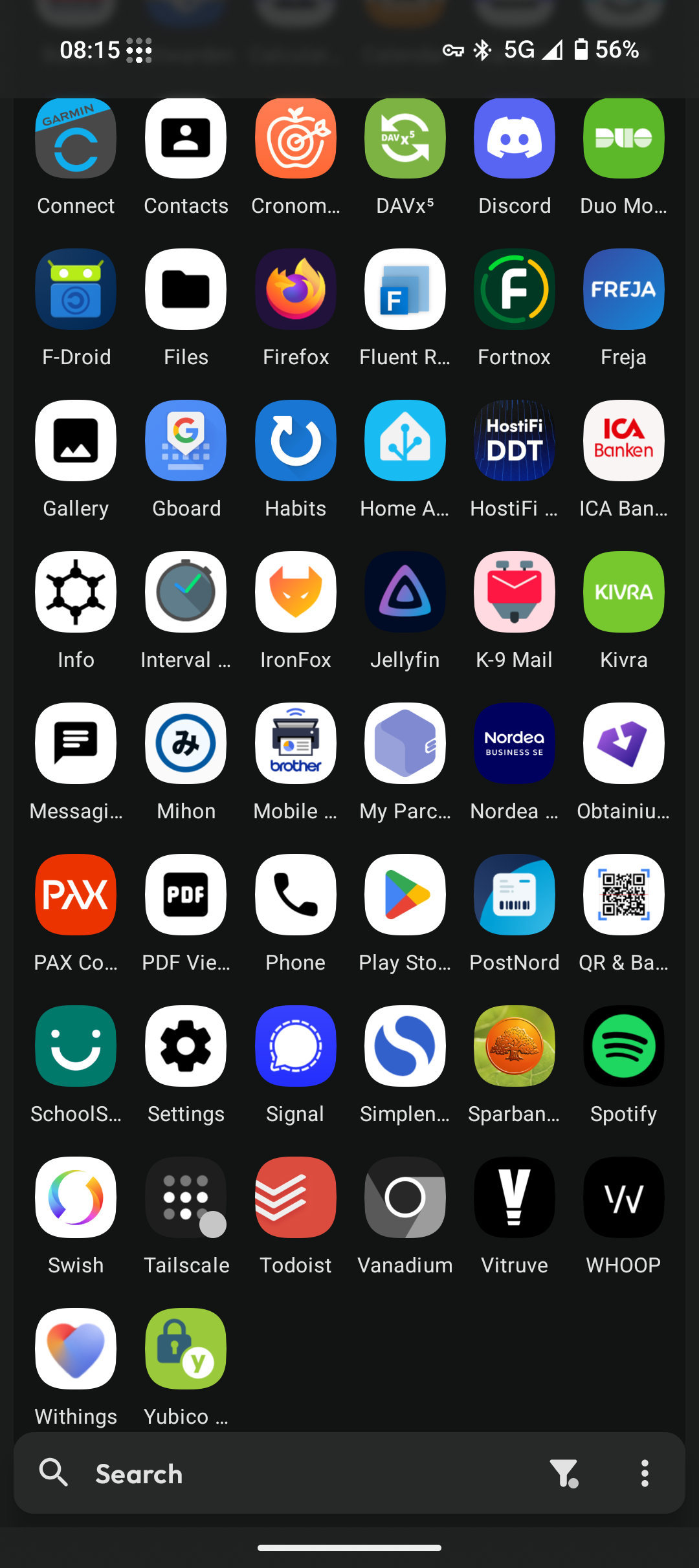

Some of the apps I’ve got installed. I use the Kvaesitso launcher.

Apps are also just as easy to install as on stock Android. I’ve installed most of the apps from the Play Store just as I would’ve on stock and they work just fine.

So far I’ve had a grand total of two issues with any apps I’ve tried:

At first I couldn’t copy BankID over from my old phone.

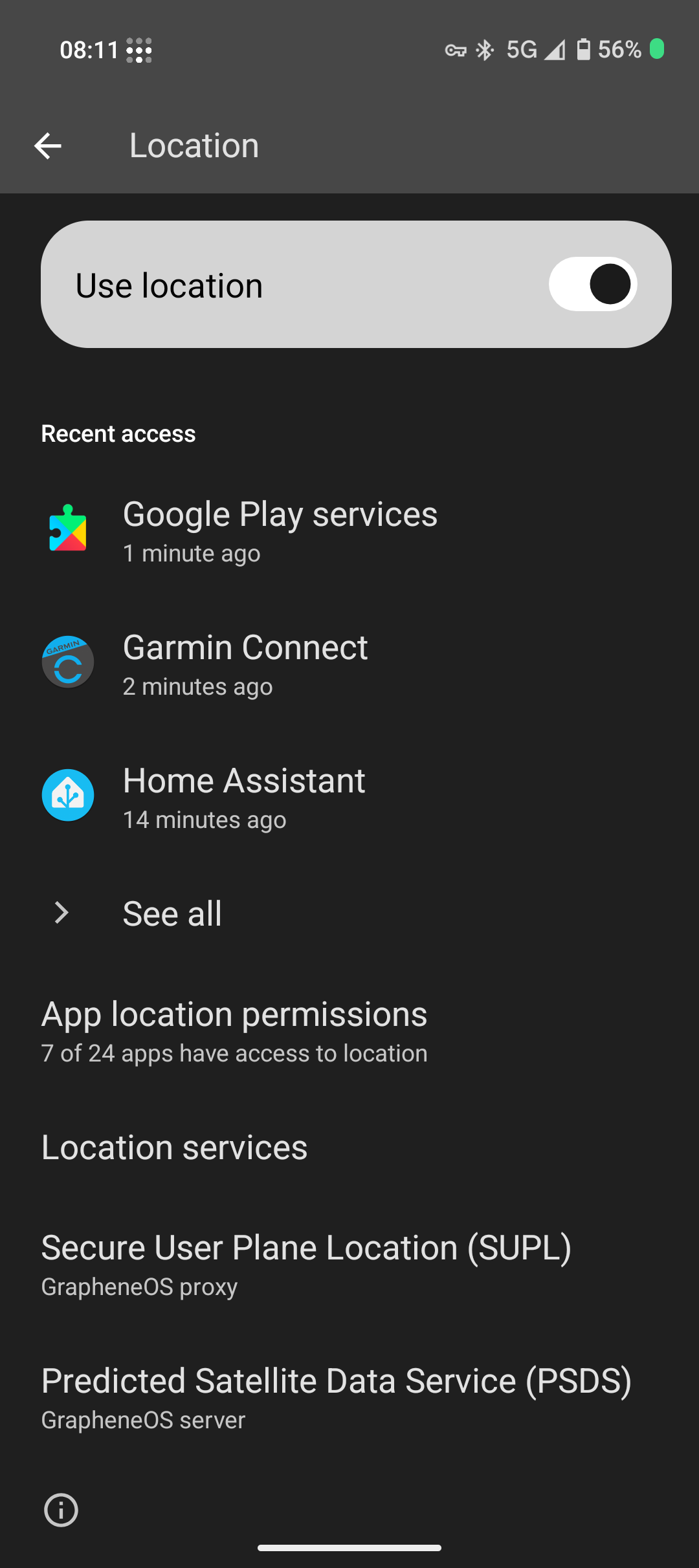

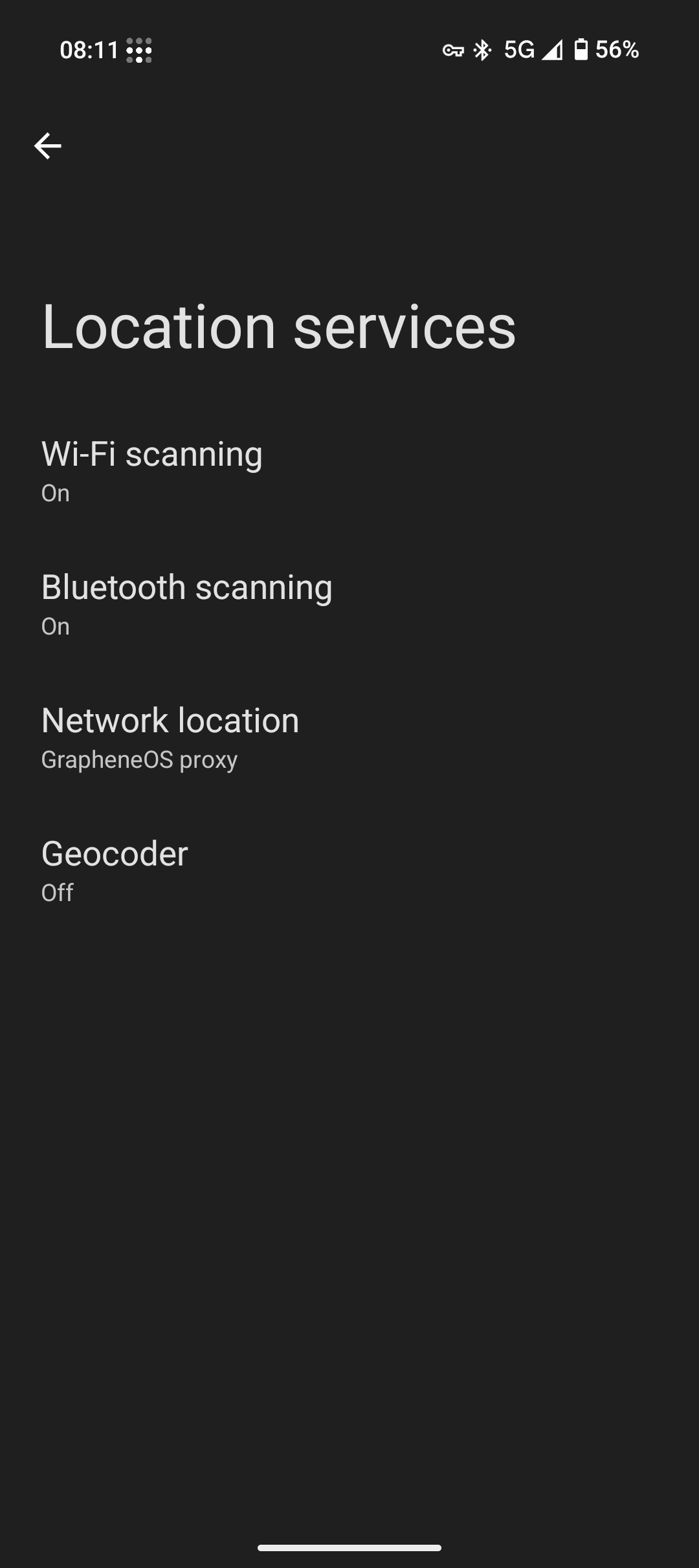

I had to tweak some permissions and disable some of the location privacy features of GrapheneOS before the phones recognized each other. Presumably there’s some security measure there so that you can only copy it if the phones are nearby.

I had to play with some of these location settings to be able to copy BankID from my old phone to the new one.

The AI detection feature of MacroFactor refused to work.

MacroFactor is a food tracking app where you can take a photo and it’ll try to infer the food from the picture, but uploading the photo simply failed. It’s a pretty cool feature, so I instead use the app Cronometer that has the same functionality.

MacroFactor uses Play Integrity which may in some cases break certain apps. I’ve got a few other apps that also use Play Integrity but they don’t have any issues.

I was worried that I’d run into issues with the banking apps as I know there are some banking apps that have issues, but all the Swedish banking apps I’ve tried work well.

You can of course install apps from other sources such as F-Droid, Accrescent, Obtainium, or manually as well.

Finally, I love that there’s no bloatware bullshit on GrapheneOS. There are no shitty vendor specific apps that you cannot uninstall and it isn’t trying to trick you into installing stupid games via dark patterns. The downside is that GrapheneOS doesn’t come with a lot of customization and lets you install apps for that yourself.

In short, it feels like with GrapheneOS you’re in control, not some mega corporation.

While other Android compatible distributions such as CalyxOS and LineageOS all mention privacy and security as benefits they’re nothing like GrapheneOS. In some cases—as with CalyxOS where security updates have seen significant delays—they may provide even less security compared to stock Android.

Everything I’ve read suggests that GrapheneOS takes security and privacy very seriously. I feel that sometimes they may take too extreme a stance but I can respect that, despite it being overkill for the threat levels I care about.

See for example this comparison of Android-based Operating Systems.

What about Apple then? Aren’t they great at privacy and security? While I’m sure they’ll respect your privacy more than Google, I just can’t trust a company that shoves ads into their wallet app.

It would’ve been great to have more choices. If I only looked at hardware I might have gotten the Fairphone 5 as I like the idea of repairability, ethically sourced components, and a phone made in the EU. I also like the idea of a smaller phone and a Flip phone would’ve been great. Or maybe a really cheap phone (or tablet) as I don’t care that much about performance and could save some money.

But alas, GrapheneOS only supports Pixel devices (for now). There’s a handful of phones to choose from but only a single tablet.

I guess the best way to degoogle right now is to buy from Google… So I got the Pixel 9a for myself and the Pixel Tablet for our family. (Admittedly, they’ve been pretty great.)

I’ve had an interesting shift when I evaluate mobile devices; instead of comparing phones primarily by hardware I prioritize the software on the phone.

Today, more than ever, hardware upgrades in new phones provide diminishing returns for ever increasing prices. There’s little practical difference between a new phone and a phone from a few years ago and savvy people can save a lot of money by simply avoiding the constant stream of new releases.

Before I bought the Pixel 9a I used the Fairphone 4 for almost 4 years, and it was performing just fine! If I hadn’t gotten the urge to try out GrapheneOS I would still have been happy with the Fairphone hardware (which was a bit underpowered already at release).

Software on the other hand is more important than ever and for me it’s what makes or breaks a phone today.

The right software will protect your privacy and help keep your device secure, while the wrong software will fill your phone with uninstallable bloatware and cripple performance after system updates (if they deign to provide them).

For example, I’ve used a Galaxy Tab A7 Lite as a dashboard for my smart home for a while and it worked great. Then I installed an update and it suddenly became extremely slow, so slow that you could barely interact with the UI without punching the damn device. Even though the hardware is great, Samsung crippled the device for no reason.

With the right software your device works for you, not against you. It’s not a lot to ask for, yet in the modern day that’s very rare indeed, and it’s why I’ll only be buying mobile devices supported by GrapheneOS for the foreseeable future.

2025-06-04 08:00:00

My general advice for life is: be a good person, and care for the people around you. And follow this one very specific rule: avoid vendor lock-in.

I’ve always wondered how to set up a sound system around the house that you can control from your devices, such as your phone. To get a working setup it seemed you had to embrace vendor lock-in; either by committing to an entire ecosystem such as Sonos, or by relying on a service like Spotify and buying amplifiers that support their particular integration (such as Spotify Connect).

When I wanted to replace and upgrade our old Sonos speaker I did some research and I found a promising alternative: it’s called Music Assistant and it’s great.

We’ve had a Sonos speaker in our kitchen for more than a decade. At first I was very happy with it; the speaker was easy to use, it integrated well with Spotify, and despite being a single fairly cheap speaker the sound was pretty good.

But gradually the experience got worse:

It could be worse—at least our speaker wasn’t bricked and we (supposedly) dodged a bunch of other issues by never upgrading to the new app.

Use Music Assistant as the central controller for streaming music and radio to different players and speakers.

Setup an Arylic A50+ amplifier that Music Assistant can control.

Together with a pair of speakers it replaces the Sonos in the kitchen.

Connect Music Assistant with Home Assistant to control playback via our smart-home dashboard and automations.

Setup more players for Music Assistant to control.

Music Assistant is a service that acts as a hub that connects different providers and players, letting you control the sound in your home from one central location.

So you could have this kind of setup and let Music Assistant connect them together in whatever way you wish, including multi-room setups (if the players are from the same ecosystem, such as Airplay or Squeezebox):

| Provider | | Player |
|---|---|---|
| Spotify | | Sonos |
| Audible | | Chromecast |
| Radio | → | Media player in Home Assistant |
| Plex | | Streaming amplifier (Squeezebox) |
| Local storage | | Computer with Linux (Squeezebox) |

Here’s a few reasons why I think Music Assistant is awesome:

I host my homelab things using docker compose and it was as simple as:

```yaml
services:
  music-assistant-server:
    image: ghcr.io/music-assistant/server:latest
    container_name: music-assistant-server
    restart: unless-stopped
    # Network mode must be set to host for MA to work correctly
    network_mode: host
    volumes:
      - ./music-assistant-server/data:/data/
    # privileged caps (and security-opt) needed to mount
    # smb folders within the container
    cap_add:
      - SYS_ADMIN
      - DAC_READ_SEARCH
    security_opt:
      - apparmor:unconfined
    environment:
      - LOG_LEVEL=info

  # And home assistant and other things.
```
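The compose file above only hints at the Home Assistant service. As a rough sketch of what that neighbouring service could look like, here is a fragment modelled on Home Assistant's container install instructions. The volume path `./home-assistant/config` is a placeholder I made up for illustration, not my actual setup:

```yaml
services:
  homeassistant:
    image: ghcr.io/home-assistant/home-assistant:stable
    container_name: homeassistant
    restart: unless-stopped
    # Host networking makes device discovery on the local network simpler
    network_mode: host
    volumes:
      # Hypothetical path: point this at wherever you keep the config
      - ./home-assistant/config:/config
      - /etc/localtime:/etc/localtime:ro
```

With both services on the host network, Home Assistant and Music Assistant should be able to reach each other without extra port mappings.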

Add providers

A provider is a source of music. There’s a bunch of them but at the moment I only use a few:

The Spotify provider, for example, should automatically sync all Spotify playlists into Music Assistant and allow you to search and play any song on Spotify.

Add players

We need players to play our music, here’s what I currently use:

The players should be automatically added as long as they have a matching provider enabled.

Open-source music management, particularly on Linux, has a reputation for being notoriously troublesome. But I’ve gotta say, Music Assistant was simple to set up and it works well (except for issues with some players that I’ll get to shortly).

I was fairly lost in what kind of amplifier and music player I should get.

There are a lot of options out there but I was worried about paying a lot of money for something I wasn’t sure would integrate well into my smart home setup. Here are a few options I’ve tried:

It’s pretty funny, the Sonos speaker works better with Music Assistant than with the Sonos app. The radio completely stopped working via the Sonos app, while I can use Music Assistant to play the radio on the Sonos speaker.

The speaker might still disconnect or stop playback at odd times but it’s good enough to raise the mood in the washing room.

I hate modern smart TVs with a passion so to stream we use a computer running Linux, connected to a dumb amplifier with some speakers. It works well but it makes it a bit more cumbersome to play music.

By installing Squeezelite the computer acts as a Squeezebox client, effectively transforming it into a smart player that Music Assistant and Home Assistant can stream music to.

As I had a Raspberry Pi lying around it made sense to try the HifiBerry AMP2 that transforms the Pi into a surprisingly capable amplifier and smart music controller. For simplicity I decided to start with their operating system HifiBerryOS that hopefully should “just work” and be open enough for me to manually fix things if needed.

While it works very well as a Spotify Connect device or for playing over Bluetooth, I had issues with it acting as a Squeezelite or Airplay client: the volume was super low when I tried to stream to it from Music Assistant.

It might be a software issue with HifiBerryOS but I haven’t had the energy to debug it or try other OS versions. Maybe I’ll revisit it when I want to outfit another room with speakers.

I was planning to use the HifiBerry to power two new speakers in the ceiling in the kitchen to replace the Sonos. But I got stressed out by the HifiBerry not working properly and the kitchen renovation was looming ever closer so I bought the Arylic A50+, hoping that it would work better with Music Assistant.

The device supports both Airplay and Squeezebox like the HifiBerry but again there were some issues with the volume being very low. I don’t know if both devices just happen to have similar bugs, if there’s a bug in Music Assistant, or if it’s some weird compatibility issue.

Sigh.

But there’s another way to make it work; the Arylic has an excellent Home Assistant integration and if you go that way then Music Assistant can use the Arylic as a player properly. You need the LinkPlay integration in Home Assistant and then enable the Home Assistant integration in Music Assistant.

(I tried the same with the HifiBerry integration but for some reason the HifiBerry media player exposed from Home Assistant didn’t show up in Music Assistant.)

Music Assistant integrates well with Home Assistant. Setup is straightforward:

Add the Music Assistant integration in Home Assistant:

This will expose all players in Music Assistant to Home Assistant (such as the Squeezebox players running on Linux).

Enable the Home Assistant plugin in Music Assistant:

This will expose all media players in Home Assistant to Music Assistant (such as the Arylic or the Home Assistant Voice Preview Edition).

With this in place you can set up Home Assistant actions to start a particular radio station, add a media player card to start/stop playback, or simply give Music Assistant access to more players.

Play the P4 Norrbotten radio station?

```yaml
action: music_assistant.play_media
target:
  entity_id: media_player.kitchen
data:
  media_id: P4 Norrbotten
```

Play the To Hell and Back track?

```yaml
action: music_assistant.play_media
target:
  entity_id: media_player.kitchen
data:
  media_id: To Hell and Back
  media_type: track
```

Play the kpop playlist?

```yaml
action: music_assistant.play_media
target:
  entity_id: media_player.kitchen
data:
  media_id: kpop
  media_type: playlist
```

Play the kpop playlist randomized?

```yaml
# Enable shuffle on the player first...
- action: media_player.shuffle_set
  target:
    entity_id:
      - media_player.kitchen
  data:
    shuffle: true
# ...then replace the queue with the playlist.
- action: music_assistant.play_media
  target:
    entity_id: media_player.kitchen
  data:
    media_id: kpop
    media_type: playlist
    enqueue: replace
```

You get the new music_assistant.play_media action but otherwise you control the players just like any other media player entity in Home Assistant.
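To show how these actions could slot into an automation, here is a minimal sketch that starts the radio in the kitchen on weekday mornings. The alias, the time, the weekday filter, and the volume level are all assumptions for illustration. Only the `music_assistant.play_media` call mirrors the examples above, and `media_player.volume_set` is the standard Home Assistant media player action:

```yaml
- alias: Morning radio in the kitchen
  triggers:
    - trigger: time
      at: "07:00:00"
  conditions:
    # Only run on weekdays
    - condition: time
      weekday: [mon, tue, wed, thu, fri]
  actions:
    # Set a gentle volume before playback starts (value is a guess)
    - action: media_player.volume_set
      target:
        entity_id: media_player.kitchen
      data:
        volume_level: 0.3
    - action: music_assistant.play_media
      target:
        entity_id: media_player.kitchen
      data:
        media_id: P4 Norrbotten
```

Because the players show up as regular media player entities, the same pattern should work with any other trigger, such as a button press or a presence sensor.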

Music Assistant is a fairly young open source project so minor bugs are to be expected. There are also two larger features that I miss:

I’d like to have access to my library even when I’m away from home. Maybe an Android app with native volume controls, notifications, and support for offline listening?

A better widget for Home Assistant.

For basic sound controls in the kitchen I use the Mini Media Player card but I’d like a better way to filter through playlists and music via the Home Assistant Lovelace UI. I currently embed the Music Assistant dashboard itself via an iframe but the UI is a bit too clunky for the size of my tablet.
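For reference, a dashboard along these lines could be sketched as a single stack of cards. This is an illustration rather than my exact configuration: the URL is a placeholder (it assumes the Music Assistant web UI is reachable on port 8095 of a host I've called `homelab.local`), and the button card is an extra I added to show a one-tap radio shortcut:

```yaml
type: vertical-stack
cards:
  # Basic playback and volume controls
  - type: custom:mini-media-player
    entity: media_player.kitchen
  # One-tap shortcut that starts a radio station
  - type: button
    name: P4 Norrbotten
    icon: mdi:radio
    tap_action:
      action: perform-action
      perform_action: music_assistant.play_media
      target:
        entity_id: media_player.kitchen
      data:
        media_id: P4 Norrbotten
  # Embedded Music Assistant UI (placeholder host and assumed port)
  - type: iframe
    url: http://homelab.local:8095
    aspect_ratio: 75%
```

A handful of shortcut buttons like this covers the most common cases on a small tablet, leaving the embedded UI for browsing.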

Still, I must admit, I’m stoked about finding a smarter way to control music throughout our house and I’m searching for an excuse to expand the setup in the future. Here are some ideas I have:

Despite some integration issues and us not using Music Assistant to its fullest potential, it has still improved our setup in a major way.