2024-10-28 08:00:00

There's a common narrative that Microsoft was moribund under Steve Ballmer and then later saved by the miraculous leadership of Satya Nadella. This is the dominant narrative in every online discussion about the topic I've seen and it's a commonly expressed belief "in real life" as well. While I don't have anything negative to say about Nadella's leadership in this post, this narrative underrates Ballmer's role in Microsoft's success. Not only did Microsoft's financials (revenue and profit) look great under Ballmer, Microsoft under Ballmer also made deep, long-term bets that set up Microsoft for success in the decades after his reign. At the time, the bets were widely panned, indicating that they weren't necessarily obvious, but we can see in retrospect that the company made very strong bets despite the criticism.

In addition to overseeing deep investments in areas that people would later credit Nadella for, Ballmer set Nadella up for success by clearing out political barriers for any successor. Much like Gary Bernhardt's talk, which was panned because he made the problem statement and solution so obvious that people didn't realize they'd learned something non-trivial, Ballmer set up Microsoft for future success so effectively that it's easy to criticize him for being a bum because his successor is so successful.

For people who weren't around before the turn of the century: in the 90s, Microsoft was considered the biggest, baddest company in town. But it wasn't long before people's opinions on Microsoft changed. By 2007, many people thought of Microsoft as the next IBM, and Paul Graham wrote Microsoft is Dead, in which he noted that Microsoft being considered effective was ancient history:

A few days ago I suddenly realized Microsoft was dead. I was talking to a young startup founder about how Google was different from Yahoo. I said that Yahoo had been warped from the start by their fear of Microsoft. That was why they'd positioned themselves as a "media company" instead of a technology company. Then I looked at his face and realized he didn't understand. It was as if I'd told him how much girls liked Barry Manilow in the mid 80s. Barry who?

Microsoft? He didn't say anything, but I could tell he didn't quite believe anyone would be frightened of them.

These kinds of comments were often accompanied by predictions that Microsoft's revenue was destined to fall, such as these comments by Graham:

Actors and musicians occasionally make comebacks, but technology companies almost never do. Technology companies are projectiles. And because of that you can call them dead long before any problems show up on the balance sheet. Relevance may lead revenues by five or even ten years.

Graham names Google and the web as primary causes of Microsoft's death, which we'll discuss later. Although Graham doesn't name Ballmer or note his influence in Microsoft is Dead, Ballmer has been a favorite punching bag of techies for decades. Ballmer came up on the business side of things and later became EVP of Sales and Support; techies love belittling non-technical folks in tech[1]. A common criticism, then and now, is that Ballmer didn't understand tech and was a poor leader because all he knew was sales and the bottom line, and all he could do was copy what other people had done. Just for example, if you look at online comments on tech forums (minimsft, HN, Slashdot, etc.) from when Ballmer pushed Sinofsky out in 2012, Ballmer's leadership is nearly universally panned[2]. Here's a fairly typical comment from someone claiming to be an anonymous Microsoft insider:

Dump Ballmer. Fire 40% of the workforce starting with the loser online services (they are never going to get any better). Reinvest the billions in start-up opportunities within the puget sound that can be accretive to MSFT and acquisition targets ... Reset Windows - Desktop and Tablet. Get serious about business cloud (like Salesforce ...)

To the extent that Ballmer defended himself, it was by pointing out that the market appeared to be undervaluing Microsoft. Ballmer noted that, relative to its fundamentals, Microsoft's market cap at the time was extremely low compared to Amazon, Google, Apple, Oracle, IBM, and Salesforce. This seems to have been a fair assessment, as Microsoft has outperformed all of those companies since then.

When Microsoft's market cap took off after Nadella became CEO, it was only natural the narrative would be that Ballmer was killing Microsoft and that the company was struggling until Nadella turned it around. You can pick other discussions if you want, but just for example, if we look at the most recent time Microsoft is Dead hit #1 on HN, a quick ctrl+F has Ballmer's name showing up 24 times. Ballmer has some defenders, but the standard narrative that Ballmer was holding Microsoft back is there, and one of the defenders even uses part of the standard narrative: Ballmer was an unimaginative hack, but he at least set up Microsoft well financially. If you look at high-ranking comments, they're all dunking on Ballmer.

And if you look on less well-informed forums, like Twitter or Reddit, you see the same attacks, but Ballmer has fewer defenders. On Twitter, when I search for "Ballmer", the first four results are unambiguously making fun of Ballmer. The fifth hit could go either way but, judging from the comments, seems to generally be taken as making fun of Ballmer, and as far as I scrolled down, all but one of the remaining videos were making fun of Ballmer. The one that wasn't was an interview where Ballmer notes that he offered Zuckerberg "$20B+, something like that" for Facebook in 2009, which would've been the 2nd largest tech acquisition ever at the time, second only to Carly Fiorina's acquisition of Compaq for $25B in 2001. Searching Reddit (incognito window with no history) is the same story (excluding the stories about him as an NBA owner, where he's respected by fans). The top story is making fun of him; the next one notes that he's wealthier than Bill Gates, and the top comment on his performance as a CEO starts with "The irony is that he is Microsofts [sic] worst CEO" and then has the standard narrative that the only reason the company is doing well is due to Nadella saving the day, that Ballmer missed the boat on all of the important changes in the tech industry, etc.

To sum it up, for the past twenty years, people have been dunking on Ballmer for being a buffoon who doesn't understand tech and who was, at best, some kind of bean counter who knew how to keep the lights on but didn't know how to foster innovation and caused Microsoft to fall behind in every important market.

The common view is at odds with what actually happened under Ballmer's leadership. Among the financially material positive things that happened under Ballmer after Graham declared Microsoft dead, we have:

There are certainly plenty of big misses as well. From 2010-2015, HoloLens was one of Microsoft's biggest bets, behind only Azure and then Bing, but no one's big AR or VR bets have had good returns to date. Microsoft failed to capture the mobile market. Although Windows Phone was generally well received by reviewers who tried it, depending on who you ask, Microsoft was either too late or wasn't willing to subsidize Windows Phone for long enough. Although .NET is still used today, in terms of marketshare, .NET and Silverlight didn't live up to early promises and critical parts were hamstrung or killed as a side effect of internal political battles. Bing is, by reputation, a failure and, at least given Microsoft's choices at the time, probably needed antitrust action against Google to succeed, but this failure still resulted in a business unit worth hundreds of billions of dollars. And despite all of the failures, the biggest bet, Azure, is probably worth on the order of a trillion dollars.

The enterprise sales arm of Microsoft was built out under Ballmer before he was CEO (he was, for a time, EVP for Sales and Support, and actually started at Microsoft as the first business manager) and continued to get built out when Ballmer was CEO. Microsoft's sales playbook was so effective that, when I was at Microsoft, Google would offer some Office 365 customers Google's enterprise suite (Docs, etc.) for free. Microsoft salespeople noted that they would still usually be able to close the sale of Microsoft's paid product even when competing against a Google that was giving its product away. For the enterprise, the combination of Microsoft's offering and its enterprise sales team was so effective that Google couldn't even give its product away.

If you're reading this and you work at a "tech" company, the company is overwhelmingly likely to choose the Google enterprise suite over the Microsoft enterprise suite, and the enterprise sales pitch Microsoft salespeople use probably sounds ridiculous to you.

An acquaintance of mine who ran a startup had a Microsoft Azure salesperson come in and try to sell them on Azure, opening with "You're on AWS, the consumer cloud. You need Azure, the enterprise cloud". For most people in tech companies, enterprise is synonymous with overpriced, unreliable junk. In the same way it's easy to make fun of Ballmer because he came up on the sales and business side of the house, it's easy to make fun of an enterprise sales pitch when you hear it but, overall, Microsoft's enterprise sales arm does a good job. When I worked in Azure, I looked into how it worked and, having just come from Google, I found a night and day difference. This was in 2015, under Nadella, but the culture and processes that let Microsoft scale this up were built out under Ballmer. I think there were multiple months where Microsoft hired and onboarded more salespeople than Google employed in total, and every stage of the sales pipeline was fairly effective.

When people point to a long list of failures like Bing, Zune, Windows Phone, and HoloLens as evidence that Ballmer was some kind of buffoon who was holding Microsoft back, this demonstrates a lack of understanding of the tech industry. This is like pointing to a list of failed companies a VC has funded as evidence the VC doesn't know what they're doing. But that's silly in a hits-based industry like venture capital. If you want to claim the VC is bad, you need to point out poor total return or a lack of big successes, which would imply poor total return. Similarly, a large company like Microsoft has a large portfolio of bets and one successful bet can pay for a huge number of failures. Ballmer's critics can't point to a poor total return because Microsoft's total return was very good under his tenure. Revenue increased from $14B or $22B to $83B, depending on whether you want to count from when Ballmer became President in July 1998 or when Ballmer became CEO in January 2000. The company was also quite profitable when Ballmer left, recording $27B in profit over the previous four quarters, more than the revenue of the company he took over. By market cap, Azure alone would be among the 10 largest public companies in the world, and the enterprise software suite minus Azure would probably just miss being in the top 10.

As a result, critics also can't point to a lack of hits when Ballmer presided over the creation of Azure, the conversion of Microsoft's enterprise software from a set of local desktop apps to Office 365 et al., the creation of the world's most effective enterprise sales org, the creation of Microsoft's video game empire (among other things, Ballmer was CEO when Microsoft acquired Bungie and made Halo the Xbox's flagship game on launch in 2001), etc. Even Bing, widely considered a failure, on last reported revenue and current P/E ratio, would be the 12th most valuable tech company in the world, between Tencent and ASML. When attacking Ballmer, people cite Bing as a failure that occurred on Ballmer's watch, which tells you something about the degree of success Ballmer had. Most companies would love to have their successes be as successful as Bing, let alone their failures. Of course it would be better if Ballmer had been prescient and all of his bets had succeeded, making Microsoft worth something like $10T instead of the lowly $3T market cap it has today, but the criticism of Ballmer that says that he had some failures and some $1T successes is a criticism that he wasn't the greatest CEO of all time by a gigantic margin. True, but not much of a criticism.

And, unlike Nadella, Ballmer didn't inherit a company that was easily set up for success. As we noted earlier, it wasn't long into Ballmer's tenure that Microsoft was considered a boring, irrelevant company and the next IBM, mostly due to decisions made when Bill Gates was CEO. As a very senior Microsoft employee from the early days, Ballmer was also partially responsible for the state of Microsoft at the time, so Microsoft's problems are also at least partially attributable to him (but that also means he should get some credit for the success Microsoft had through the 90s). Nevertheless, he navigated Microsoft's most difficult problems well and set up his successor for smooth sailing.

Earlier, we noted that Paul Graham cited Google and the rise of the web as two causes for Microsoft's death prior to 2007. As we discussed in this look at antitrust action in tech, these both share a common root cause: antitrust action against Microsoft. If we look at the documents from the Microsoft antitrust case, it's clear that Microsoft knew how important the internet was going to be and had plans to control the internet. As part of these plans, they used their monopoly power on the desktop to kill Netscape. They technically lost an antitrust case due to this, but if you look at the actual outcomes, Microsoft basically got what they wanted from the courts. The remedies levied against Microsoft are widely considered to have been useless (the initial decision involved breaking up Microsoft, but they were able to reverse this on appeal), the case dragged on for long enough that Netscape was doomed by the time the case was decided, and the remedies that weren't specifically targeted at the Netscape situation were meaningless.

A later part of the plan to dominate the web, discussed at Microsoft but never executed, was to kill Google. If we're judging Microsoft by how "dangerous" it is, how effectively it crushes its competitors, like Paul Graham did when he judged Microsoft to be dead, then Microsoft certainly became less dangerous, but the feeling at Microsoft was that their hand was forced due to the circumstances. One part of the plan to kill Google was to redirect users who typed google.com into their address bar to MSN search. This was before Chrome existed and before mobile existed in any meaningful form. Windows desktop marketshare was 97% and IE had between 80% and 95% marketshare depending on the year, with most of the rest of the marketshare belonging to the rapidly declining Netscape. Had Microsoft made this move, Google would have been killed before it could get Chrome and Android off the ground and, barring extreme antitrust action, such as a breakup of Microsoft, Microsoft would own the web to this day. And then for dessert, it's not clear there wouldn't be a reason to go after Amazon.

After internal debate, Microsoft declined to kill Google not due to fear of antitrust action, but due to fear of bad PR from the ensuing antitrust action. Had Microsoft redirected traffic away from Google, the impact on Google would've been swifter and more severe than their moves against Netscape and, in the time it would take for the DoJ to win another case against Microsoft, Google would have suffered the same fate as Netscape. It might be hard to imagine this if you weren't around at the time, but the DoJ vs. Microsoft case was regular front-page news in a way that we haven't seen since (in part because companies learned their lesson on this one: Google supposedly killed the 2011-2012 FTC investigation against it with lobbying and has cleverly maneuvered the more recent case so that it doesn't dominate the news cycle in the same way). The closest thing we've seen since the Microsoft antitrust media circus was the media response to the CrowdStrike outage, but that was a flash in the pan compared to the DoJ vs. Microsoft case.

If there's a criticism of Ballmer here, perhaps it's something like: Microsoft didn't pre-emptively learn the lessons its younger competitors learned from its big antitrust case before the big antitrust case. A sufficiently prescient executive could've advocated for heavy lobbying to head the antitrust case off at the pass, like Google did in 2011-2012, or maneuvered to make the antitrust case just another news story, like Google has been doing for the current case. Another possible criticism is that Microsoft didn't correctly read the political tea leaves and realize that there wasn't going to be serious US tech antitrust enforcement for at least two decades after the big case against Microsoft. In principle, Ballmer could've overridden the decision to not kill Google if he had had the right expertise on staff to realize that the United States was entering a two-decade period of reduced antitrust scrutiny in tech.

As criticisms go, I think the former criticism is correct, but not an indictment of Ballmer unless you expect CEOs to be infallible, so as evidence that Ballmer was a bad CEO, it's a very weak criticism. And it's not clear that the latter criticism is correct. Google was able to get away with things like hardcoding the search engine in Android so that users couldn't change their search engine setting and having badware installers trick users into making Chrome the default browser, because it was considered one of the "good guys" and didn't get much scrutiny for these sorts of actions, but Microsoft wasn't treated with kid gloves in the same way by the press or the general public. Google didn't trigger a serious antitrust investigation until 2011, so it's possible that the lack of serious antitrust action between 2001 and 2010 was an artifact of Microsoft being careful to avoid antitrust scrutiny and Google being too small to draw scrutiny, and that a move to kill Google when it was still possible would've drawn serious antitrust scrutiny and another PR circus. That's one way in which the company Ballmer inherited was in a more difficult situation than its competitors: Microsoft's hands were perceived to be tied and may have actually been tied. Microsoft could and did get severe criticism for taking an action when the exact same action taken by Google would be lauded as clever.

When I was at Microsoft, there was a lot of consternation about this. One funny example was when, in 2011, Google officially called out Microsoft for unethical behavior and the media jumped on this as yet another example of Microsoft behaving badly. A number of people I talked to at Microsoft were upset by this because, according to them, Microsoft got the idea to do this when they noticed that Google was doing it, but reputations take a long time to change and actions taken while Gates was CEO significantly reduced Microsoft's ability to maneuver.

Another difficulty Ballmer had to deal with on taking over was Microsoft's intense internal politics. Again, as a very senior Microsoft employee going back to almost the beginning, he bears some responsibility for this, but Ballmer managed to clear the board of the worst bad actors so that Nadella didn't inherit such a difficult situation. If we look at why Microsoft didn't dominate the web under Ballmer, in addition to concerns that killing Google would cause a PR backlash, internal political maneuvering killed most of Microsoft's most promising web products and reduced the appeal and reach of most of the rest of its web products. For example, Microsoft had a working competitor to Google Docs in 1997, one year before Google was founded and nine years before Google acquired Writely, but it was killed for political reasons. And likewise for NetMeeting and other promising products. Microsoft certainly wasn't alone in having internal political struggles, but it was famous for having more brutal politics than most.

Although Ballmer certainly didn't do a perfect job at cleaning house, when I was at Microsoft and asked about promising projects that were sidelined or killed due to internal political struggles, the biggest recent sources of those issues were shown the door under Ballmer, leaving a much more functional company for Nadella to inherit.

Stepping back to look at the big picture, Ballmer inherited a company that was in a financially strong position but was hemmed in by internal and external politics in a way that caused outside observers to think the company was overwhelmingly likely to slide into irrelevance, leading to predictions like Graham's famous prediction that Microsoft is dead, with revenues expected to decline in five to ten years. In retrospect, we can see that moves made under Gates limited Microsoft's ability to use its monopoly power to outright kill competitors, but there was no inflection point at which a miraculous turnaround was mounted. Instead, Microsoft continued its very strong execution on enterprise products and continued making reasonable bets on the future in a successful effort to supplant revenue streams that were internally viewed as long-term dead ends, even if they were going to be profitable dead ends, such as Windows and boxed (non-subscription) software.

Unlike most companies in that position, Microsoft was willing to very heavily subsidize a series of bets that leadership thought could power the company for the next few decades, such as Windows Phone, Bing, Azure, Xbox, and HoloLens. From the internal and external commentary on these bets, you can see why it's so hard for companies to use their successful lines of business to subsidize new lines of business when the writing is on the wall for the successful businesses. People panned these bets as stupid moves that would kill the company, saying the company should focus its efforts on its most profitable businesses, such as Windows. Even when there's very clear data showing that bucking the status quo is the right thing, people usually don't do it, in part because you look like an idiot when it doesn't pan out, but Ballmer was willing to make the right bets in the face of decades of ridicule.

Another reason it's hard for companies to make these bets is that companies are usually unable to launch new things that are radically different from their core business. When yet another non-acquisition Google consumer product fails, everyone writes this off as a matter of course: of course Google failed there, they're a technical-first company that's bad at product. But Microsoft made this shift multiple times and succeeded. Once was with Xbox. If you look at the three big console manufacturers, two are hardware companies going way back and one is Microsoft, a boxed software company that learned how to make hardware. Another time was with Azure. If you look at the three big cloud providers, two are online services companies going back to their founding and one is Microsoft, a boxed software company that learned how to get into the online services business. Other companies whose core lines of business were something other than hardware and online services saw these opportunities, tried to make the change, and failed.

And if you look at the process of transitioning here, it's very easy to make fun of Microsoft in the same way it's easy to make fun of Microsoft's enterprise sales pitch. The core Azure folks came from Windows, so in the very early days of Azure, they didn't have an incident management process to speak of, and during their first big global outages, people were walking around the hallways asking "is Azure down?" and trying to figure out what to do. Azure would continue to have major global outages for years while learning how to ship somewhat reliable software, but they were able to address the problems well enough to build a trillion dollar business. Another time, before Azure really knew how to build servers, a Microsoft engineer pulled up Amazon's pricing page and noticed that AWS's retail price for disk was cheaper than Azure's cost to provision disks. When I was at Microsoft, a big problem for Azure was building out datacenters fast enough. People joked that the recent hiring of a ton of salespeople worked too well and the company sold too much Azure, which was arguably true and also a real emergency for the company. In the other cases, Microsoft mostly learned how to do it themselves, but in this case they brought in some very senior people from Amazon who had deep expertise in supply chain and building out datacenters. It's easy to say that, when you have a problem and a competitor has the right expertise, you should hire some experts and listen to them, but most companies fail when they try to do this. Sometimes, companies don't recognize that they need help but, more frequently, they do bring in senior expertise that people don't listen to. It's very easy for the old guard at a company to shut down efforts to bring in senior outside expertise, especially at a company as fractious as Microsoft, but leadership was able to make sure that key initiatives like this were successful[3].

When I talked to Google engineers about Azure during Azure's rise, they were generally down on Azure and would make fun of it for issues like the above, which seemed comical to engineers working at companies that grew up as large-scale online services companies with deep expertise in operating large-scale services, building efficient hardware, and building out datacenters. But despite starting in a very deep hole technically, operationally, and culturally, Microsoft built a business unit worth a trillion dollars with Azure.

Not all of the bets panned out, but if we look at comments from critics who were saying that Microsoft was doomed because it was subsidizing the wrong bets or because younger companies would surpass it, well, today, Microsoft is worth 50% more than Google and twice as much as Meta. If we look at the broader history of the tech industry, Microsoft has had sustained strong execution from its founding in 1975 until today, a nearly fifty year run, a run that's arguably been unmatched in the tech industry. Intel's been around a bit longer, but they stumbled very badly around the turn of the century and they've had a number of problems over the past decade. IBM has a long history, but it just wasn't all that big during its early history, e.g., when T.J. Watson renamed the Computing-Tabulating-Recording Company to International Business Machines, its revenue was still well under $10M a year (inflation adjusted, on the order of $100M a year). Computers started becoming big and IBM was big for a tech company by the 50s, but the antitrust case brought against IBM in 1969 that dragged on until it was dropped for being "without merit" in 1982 hamstrung the company and its culture in ways that are still visible when you look at, for example, why IBM's various cloud efforts have failed and, in the 90s, the company was on its deathbed and only managed to survive at all due to Gerstner's turnaround. If we look at older companies that had long sustained runs of strong execution, most of them are gone, like DEC and Data General, or had very bad stumbles that nearly ended the company, like IBM and Apple. There are companies that have had similarly long periods of strong execution, like Oracle, but those companies haven't been nearly as effective as Microsoft in expanding their lines of business and, as a result, Oracle is worth perhaps two Bings. That makes Oracle the 20th most valuable public company in the world, which certainly isn't bad, but it's no Microsoft.

If Microsoft stumbles badly, a younger company like Nvidia, Meta, or Google could overtake Microsoft's track record, but that would be no fault of Ballmer's and we'd still have to acknowledge that Ballmer was a very effective CEO, not just in terms of bringing the money in, but in terms of setting up a vision that set Microsoft up for success for the next fifty years.

Besides the headline items mentioned above, off the top of my head, here are a few things I thought were interesting that happened under Ballmer after Graham declared Microsoft to be dead:

One response to Microsoft's financial success, both the direct success that happened under Ballmer as well as later success that was set up by Ballmer, is that Microsoft, like IBM, is financially successful but irrelevant to trendy programmers. For one thing, rounded to the nearest Bing, IBM is probably worth either zero or one Bings. But even if we put aside the financial aspect and just look at how much each $1T tech company (Apple, Nvidia, Microsoft, Google, Amazon, and Meta) has impacted programmers, Nvidia, Apple, and Microsoft all have a lot of programmers who are dependent on the company due to some kind of ecosystem dependence (CUDA; iOS; .NET and Windows, the latter of which is still the platform of choice for many large areas, such as AAA games).

You could make a case for the big cloud vendors, but I don't think that companies have a nearly forced dependency on AWS in the same way that a serious English-language consumer app company really needs an iOS app or an AAA game company has to release on Windows and overwhelmingly likely develops on Windows.

If we look at programmers who aren't pinned to an ecosystem, Microsoft seems highly relevant to a lot of programmers due to the creation of tools like vscode and TypeScript. I wouldn't say that it's necessarily more relevant than Amazon since so many programmers use AWS, but it's hard to argue that the company that created (among many other things) vscode and TypeScript under Ballmer's watch is irrelevant to programmers.

Shortly after joining Microsoft in 2015, I bet Derek Chiou that Google would beat Microsoft to $1T market cap. Unlike most external commentators, I agreed with the bets Microsoft was making, but when I looked around at the kinds of internal dysfunction Microsoft had at the time, I thought that would cause them enough problems that Google would win. That was wrong — Microsoft beat Google to $1T and is now worth $1T more than Google.

I don't think I would've made the bet even a year later, after seeing Microsoft from the inside, how effective Microsoft sales was, and how good Microsoft was at shipping things that are appealing to enterprises, and then comparing that to Google's cloud execution and strategy. But you could say that I made a mistake fairly analogous to the one external commentators made, until I saw how Microsoft operated in detail.

Thanks to Laurence Tratt, Yossi Kreinin, Heath Borders, Justin Blank, Fabian Giesen, Justin Findlay, Matthew Thomas, Seshadri Mahalingam, and Nam Nguyen for comments/corrections/discussion

Here's the top HN comment on a story about Sinofsky's ousting:

The real culprit that needs to be fired is Steve Ballmer. He was great from the inception of MSFT until maybe the turn of the century, when their business strategy of making and maintaining a Windows monopoly worked beautifully and extremely profitably. However, he is living in a legacy environment where he believes he needs to protect the Windows/Office monopoly BY ANY MEANS NECESSARY, and he and the rest of Microsoft can't keep up with everyone else around them because of innovation.

This mindset has completely stymied any sort of innovation at Microsoft because they are playing with one arm tied behind their backs in the midst of trying to compete against the likes of Google, Facebook, etc. In Steve Ballmer's eyes, everything must lead back to the sale of a license of Windows/Office, and that no longer works in their environment.

If Microsoft engineers had free rein to make the best search engine, or the best phone, or the best tablet, without worries about how will it lead to maintaining their revenue streams of Windows and more importantly Office, then I think their offerings would be on an order of magnitude better and more creative.

This is wrong. At the time, Microsoft was very heavily subsidizing Bing. To the extent that one can attribute the subsidy, it would be reasonable to say that the bulk of the subsidy was coming from Windows. Likewise, Azure was a huge bet that was being heavily subsidized from the profit that was coming from Windows. Microsoft's strategy under Ballmer was basically the opposite of what this comment is saying.

Funnily enough, if you looked at comments on minimsft (many of which were made by Microsoft insiders), people noted the huge spend on things like Azure and online services, but most thought this was a mistake and that Microsoft needed to focus on making Windows and Windows hardware (like the Surface) great.

Basically, no matter what people think Ballmer is doing, they say it's wrong and that he should do the opposite. Since most commenters outside of Microsoft don't actually know what Microsoft is up to, this means different people call for different actions. But because the comments are arrayed against Ballmer rather than against specific actions of the company, we can see that people aren't really making a prediction about any particular course of action; they're just ragging on Ballmer.

BTW, the #2 comment on HN says that Ballmer missed the boat on the biggest things in tech in the past 5 years and that Ballmer has deemphasized cloud computing (which was actually Microsoft's biggest bet at the time if you look at either capital expenditure or allocated headcount). The #3 comment says "Steve Ballmer is a sales guy at heart, and it's why he's been able to survive a decade of middling stock performance and strategic missteps: He must have close connections to Microsoft's largest enterprise customers, and were he to be fired, it would be an invitation for those customers to reevaluate their commitment to Microsoft's platforms.", and the rest of the top-level comments aren't about Ballmer.

2024-08-11 08:00:00

About eight years ago, I was playing a game of Codenames where the game state was such that our team would almost certainly lose if we didn't correctly guess all of our remaining words on our turn. From the given clue, we were unable to do this. Although the game is meant to be a word guessing game based on word clues, a teammate suggested that, based on the physical layout of the words that had been selected, most of the possibilities we were considering would result in patterns that were "too weird" and that we should pick the final word based on the location. This worked and we won.


Codenames is played by two teams. The game has a 5x5 grid of words, where each word is secretly owned by one of {blue team, red team, neutral, assassin}. Each team has a "spymaster" who knows the secret word <-> ownership mapping. The spymaster's job is to give single-word clues that allow their teammates to guess which words belong to their team without accidentally guessing words of the opposing team or the assassin. On each turn, the spymaster gives a clue and their teammates guess which words are associated with the clue. The game continues until one team's words have all been guessed or the assassin's word is guessed (an immediate loss). Some details are omitted here for simplicity, but for the purposes of this post, this explanation should be close enough. If you want more of an explanation, you can try this video or the official rules.

Ever since then, I've wondered how good someone would be if all they did was memorize the 40 setup cards that come with the game. To simulate this, we'll build a bot that plays using only position information (you might also call this an AI, but since we'll discuss using an LLM/AI to write this bot, we'll use the term "bot" for the automated Codenames-playing agent to make it easy to disambiguate).

At the time, after the winning guess, we looked through the configuration cards to see if our teammate's idea of guessing based on shape was correct, and it was: they had correctly determined the highest-probability guess based on the possible physical configurations. Each card layout defines which words are your team's and which belong to the other team and, presumably to limit the cost, the game only comes with 40 cards (160 configurations under rotation). Our teammate hadn't memorized the cards (which would've narrowed things down to only one possible configuration), but they'd played enough games to develop an intuition about which patterns/clusters are common and uncommon, enabling them to come up with this side-channel attack against the game. For example, after playing enough games, you might realize that there's no card where a team has 5 words in a row or column; that only the starting team's color ever has 4 in a row, and if this happens on an edge and it's blue, the 5th word must belong to the red team; or that there's no configuration with six connected blue words (though there is one with six connected red words, arranged as 2 in a row centered next to 4 in a row). Even if you don't consciously use this information, you'll probably develop a subconscious aversion to certain patterns that feel "too weird".
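The rotation arithmetic and the kind of pattern check described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: a board is encoded as a hypothetical 25-entry row-major tuple of owners ("B" blue, "R" red, "N" neutral, "A" assassin), and the example card is made up for illustration, not one of the game's real setup cards. A card with no rotational symmetry yields 4 orientations, which is where 40 cards × 4 = 160 configurations comes from.

```python
from typing import List, Tuple

SIZE = 5
# Hypothetical encoding: 25 owners in row-major order,
# "B" = blue, "R" = red, "N" = neutral, "A" = assassin.
Board = Tuple[str, ...]


def rotate_cw(board: Board) -> Board:
    """Rotate a 5x5 board 90 degrees clockwise."""
    # Cell (r, c) of the rotated board comes from cell (SIZE - 1 - c, r) of the original.
    return tuple(board[(SIZE - 1 - c) * SIZE + r] for r in range(SIZE) for c in range(SIZE))


def orientations(card: Board) -> List[Board]:
    """All distinct orientations of one setup card (4 unless the card is symmetric)."""
    out: List[Board] = []
    board = card
    for _ in range(4):
        if board not in out:
            out.append(board)
        board = rotate_cw(board)
    return out


def longest_line(board: Board, owner: str) -> int:
    """Longest horizontal or vertical run of tiles belonging to `owner`."""
    best = 0
    for fixed in range(SIZE):
        row_run = col_run = 0
        for moving in range(SIZE):
            # Extend (or reset) the run along row `fixed` and along column `fixed`.
            row_run = row_run + 1 if board[fixed * SIZE + moving] == owner else 0
            col_run = col_run + 1 if board[moving * SIZE + fixed] == owner else 0
            best = max(best, row_run, col_run)
    return best


# A made-up card (not a real one): 9 blue, 8 red, 7 neutral, 1 assassin.
card: Board = tuple("BBBBR" "NNARB" "RNBNR" "BRNRB" "RNNRB")

for board in orientations(card):
    # Run lengths don't change under rotation, so a rule like
    # "no team ever has 5 in a row" only needs to be checked once per card.
    print(longest_line(board, "B"), longest_line(board, "R"))
```

A player who has seen enough games is, in effect, building an informal version of `longest_line` statistics over all 160 orientations without ever writing them down.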

Coming back to the idea of building a bot that simulates someone who's spent a few days memorizing the 40 cards, below, there's a simple bot you can play against that simulates a team of such players. Normally, when playing, you'd provide clues and the team would guess words. But, to give you, the human, the largest possible advantage, we'll make the unrealistically generous assumption that you can, on demand, generate a clue that will get your team to select exactly the squares you'd like. This is simulated by letting you click on any tile you'd like your team to guess.

By default, you also get three guesses a turn, which would put you well above 99%-ile among Codenames players I've seen. While good players can often get three or more correct moves a turn, averaging three correct moves and zero incorrect moves a turn would be unusually good in most groups. You can toggle the display of remaining matching boards on, but if you want to simulate what it's like to be a human player who hasn't memorized every board, you might want to try playing a few games with the display off.

If, at any point, you finish a turn and it's the bot's turn and there's only one matching board possible, the bot correctly guesses every one of its words and wins. The bot would be much stronger if it ever guessed words before it could guess them all, either naively or to strategically reduce the search space, or if it even had a simple heuristic of guessing randomly among the possible boards when it could deduce that you'd win on your next turn. But even this, the most naive "board memorization" bot possible, has been able to beat every Codenames player I handed it to in most games where they didn't toggle the remaining matching boards on and use the same knowledge the bot has access to.
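The bot's strategy is simple enough to sketch directly. The following Python is a minimal sketch, not the code behind the bot on this page: it assumes a hypothetical 25-entry row-major board encoding, keeps only the boards consistent with every revealed tile, and guesses all of its words only once exactly one board remains. The two boards in the example are made up.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical encoding: 25 owners in row-major order ("B", "R", "N", "A").
Board = Tuple[str, ...]


def matching_boards(boards: Sequence[Board], revealed: Dict[int, str]) -> List[Board]:
    """Keep only the boards consistent with every tile revealed so far."""
    return [b for b in boards if all(b[i] == owner for i, owner in revealed.items())]


def bot_turn(boards: Sequence[Board], revealed: Dict[int, str], team: str) -> List[int]:
    """Naive memorization bot: guess nothing unless exactly one board remains,
    then guess every one of its team's unrevealed tiles."""
    candidates = matching_boards(boards, revealed)
    if len(candidates) != 1:
        return []  # pass: the bot never guesses while more than one board matches
    board = candidates[0]
    return [i for i, owner in enumerate(board) if owner == team and i not in revealed]


# Two made-up boards (not real setup cards); in practice this list would hold
# every orientation of every card.
board_a: Board = tuple("B" * 9 + "R" * 8 + "N" * 7 + "A")
board_b: Board = tuple(reversed(board_a))
all_boards = [board_a, board_b]

print(bot_turn(all_boards, {}, "R"))        # both boards still match, so the bot passes
print(bot_turn(all_boards, {0: "B"}, "R"))  # only board_a matches, so it guesses tiles 9..16
```

The improvements mentioned above, such as guessing early to shrink the search space or guessing randomly among the remaining boards when the opponent is about to win, would replace the `len(candidates) != 1` check with a policy over `candidates`.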

2024-06-16 08:00:00

There've been regular viral stories about ML/AI bias with LLMs and generative AI for the past couple years. One thing I find interesting about discussions of bias is how different the reaction is in the LLM and generative AI case when compared to "classical" bugs in cases where there's a clear bug. In particular, if you look at forums or other discussions with lay people, people frequently deny that a model which produces output that's sort of the opposite of what the user asked for is even a bug. For example, a year ago, an Asian MIT grad student asked Playground AI (PAI) to "Give the girl from the original photo a professional linkedin profile photo" and PAI converted her face to a white face with blue eyes.

The top "there's no bias" response on the front-page reddit story, and one of the top overall comments, was

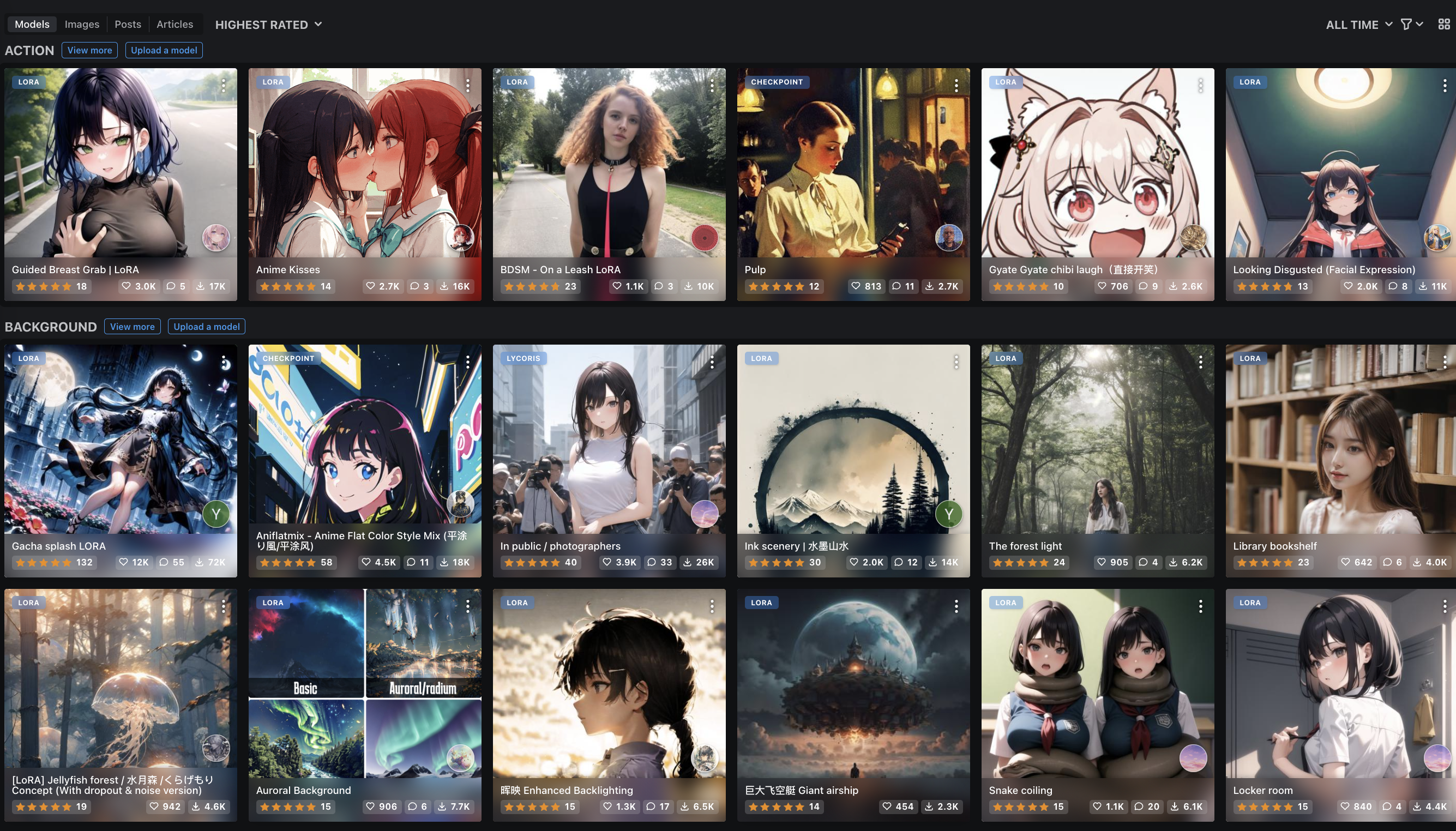

Sure, now go to the most popular Stable Diffusion model website and look at the images on the front page.

You'll see an absurd number of asian women (almost 50% of the non-anime models are represented by them) to the point where you'd assume being asian is a desired trait.

How is that less relevant that "one woman typed a dumb prompt into a website and they generated a white woman"?

Also keep in mind that she typed "Linkedin", so anyone familiar with how prompts currently work know it's more likely that the AI searched for the average linkedin woman, not what it thinks is a professional women because image AI doesn't have an opinion.

In short, this is just an AI ragebait article.

Other highly-ranked comments with the same theme include

Honestly this should be higher up. If you want to use SD with a checkpoint right now, if you dont [sic] want an asian girl it’s much harder. Many many models are trained on anime or Asian women.

and

Right? AI images even have the opposite problem. The sheer number of Asians in the training sets, and the sheer number of models being created in Asia, means that many, many models are biased towards Asian outputs.

Other highly-ranked comments noted that this was a sample size issue

"Evidence of systemic racial bias"

Shows one result.

Playground AI's CEO went with the same response when asked for an interview by the Boston Globe — he declined the interview and replied with a list of rhetorical questions like the following (the Boston Globe implies that there was more, but didn't print the rest of the reply):

If I roll a dice just once and get the number 1, does that mean I will always get the number 1? Should I conclude based on a single observation that the dice is biased to the number 1 and was trained to be predisposed to rolling a 1?

We could just as easily have picked an example from Google or Facebook or Microsoft or any other company that's deploying a lot of ML today, but since the CEO of Playground AI is basically asking someone to take a look at PAI's output, we're looking at PAI in this post. I tried the same prompt the MIT grad student used on my Mastodon profile photo, substituting "man" for "girl". PAI usually turns my Asian face into a white (caucasian) face, but sometimes makes me somewhat whiter but ethnically ambiguous (maybe a bit Middle Eastern or East Asian or something). And, BTW, my face has a number of distinctively Vietnamese features which pretty obviously look Vietnamese and not any kind of East Asian.

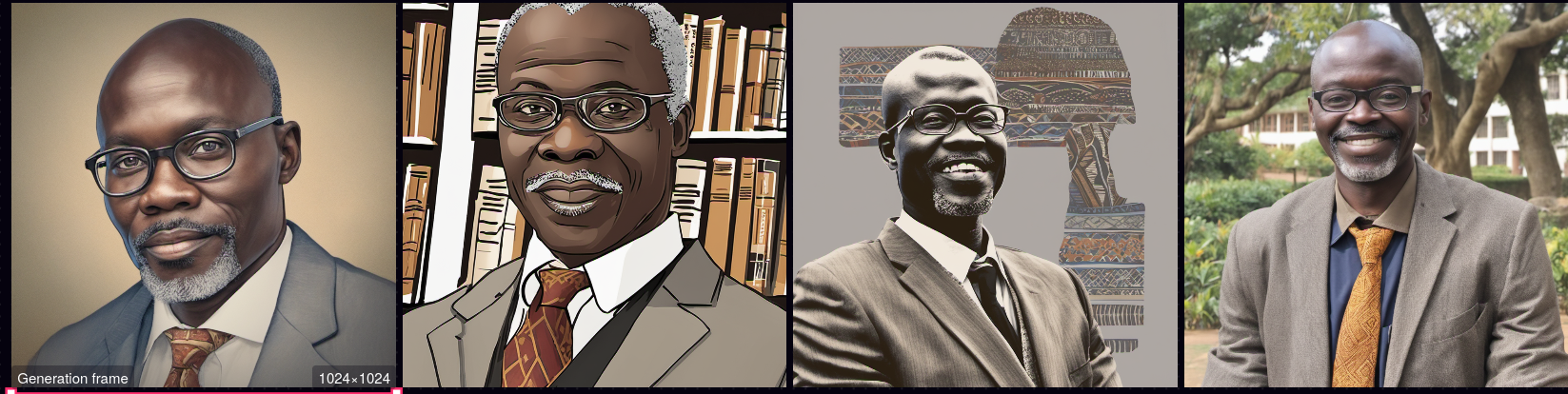

My profile photo is a light-skinned winter photo, so I tried a darker-skinned summer photo, and PAI would then generally turn my face into a South Asian or African face, with the occasional Chinese face (but never a Vietnamese or any kind of Southeast Asian face), such as the following:

A number of other people also tried various prompts and got results indicating that the model (where “model” is used colloquially for the model, its weights, and any system around the model that's responsible for the output being what it is) has preconceptions, strong enough to override the input photo, about things like what ethnicity someone in a specific profession has. For example, it converts a light-skinned Asian person into a white person because the model has "decided" it can make someone more professional by throwing out their Asian features and making them white.

Other people have probed what kinds of preconceptions are bundled into the model and found similar results, e.g., Rob Ricci got the following results when asking for "linkedin profile picture of X professor" for "computer science", "philosophy", "chemistry", "biology", "veterinary science", "nursing", "gender studies", "Chinese history", and "African literature", respectively. Of the 28 images generated for the first 7 prompts, maybe 1 or 2 people aren't white. The results for the next prompt, "Chinese history", are wildly over-the-top stereotypical, something we frequently see from other models as well when asking for non-white output. And Andreas Thienemann points out that, except for the over-the-top Chinese stereotypes, every professor is wearing glasses, another classic stereotype.

Like I said, I don't mean to pick on Playground AI in particular. As I've noted elsewhere, trillion dollar companies regularly ship AI models to production without even the most basic checks on bias. When I tried ChatGPT out, every bias-checking prompt I played with returned results analogous to the images we saw here. For example, when I asked for bios of men and women who work in tech, women tended to get bios indicating that they did diversity work, even women with no public record of doing diversity work, and men tended to get degrees from name-brand engineering schools like MIT and Berkeley, even men who hadn't attended any name-brand school, and likewise for name-brand tech companies (the link only has 4 examples due to Twitter limitations, but the other examples I tried were consistent with the ones shown).

This post could've used almost any publicly available generative AI. It just happens to use Playground AI because the CEO's response both asks us to do it and reflects the standard reflexive "AI isn't biased" responses that lay people commonly give.

Coming back to the response about how it's not biased for professional photos of people to be turned white because Asians feature so heavily in other cases, the high-ranking reddit comment we looked at earlier suggested "go[ing] to the most popular Stable Diffusion model website and look[ing] at the images on the front page". Below is what I got when I clicked the link on the day the comment was made and then clicked "feed".


The site had a bit of a smutty feel to it. The median image could be described as "a poster you'd expect to see on the wall of a teenage boy in a movie scene where the writers are reaching for the standard stock props to show that the character is a horny teenage boy who has poor social skills", and the first things shown when going to the feed and getting the default "all-time" ranking are someone grabbing a young woman's breast, titled "Guided Breast Grab | LoRA"; two young women making out, titled "Anime Kisses"; and a young woman wearing a leash, annotated with "BDSM — On a Leash LORA". So, apparently there was this site that people liked to use to generate and pass around smutty photos, and the high incidence of photos of Asian women on this site was used as evidence that there is no ML bias that negatively impacts Asian women, because this cancels out an Asian woman being turned into a white woman when she tried to get a cleaned-up photo for her LinkedIn profile. I'm not really sure what to say to this. Fabian Giesen responded with "🤦‍♂️. truly 'I'm not bias. your bias' level discourse", which feels like an appropriate response.

Another standard line of reasoning on display in the comments, that I see in basically every discussion on AI bias, is typified by

AI trained on stock photo of “professionals” makes her white. Are we surprised?

She asked the AI to make her headshot more professional. Most of “professional” stock photos on the internet have white people in them.

and

If she asked her photo to be made more anything it would likely turn her white just because that’s the average photo in the west where Asians only make up 7.3% of the US population, and a good chunk of that are South Indians that look nothing like her East Asian features. East Asians are 5% or less; there’s just not much training data.

These comments seem to operate from a fundamental assumption that companies are pulling training data that's representative of the United States, that this is a reasonable thing to do, and that this should result in models converting everyone into whatever is most common. This is wrong on multiple levels.

First, on whether or not professional stock photos are dominated by white people: a quick image search for "professional stock photo" turns up quite a few non-white people, so either stock photos aren't very white or people have figured out how to return a more representative sample of stock photos. And given worldwide demographics, it's unclear why internet services should be expected to be U.S.-centric. Even if we accept that major internet services should assume that everyone is in the United States, it seems like both a design flaw and a clear sign of bias to assume that every request comes from the modal American.

Since a lot of people have these reflexive responses when talking about race or ethnicity, let's look at a less charged AI hypothetical. Say I talk to an AI customer service chatbot for my local mechanic and ask to schedule an appointment to put my winter tires on and do a tire rotation. Then, when I go to pick up my car, I find out they changed my oil instead of putting my winter tires on. A bunch of internet commenters then explain that this isn't a sign of any kind of bias and that I should've known an AI chatbot will convert any appointment with a mechanic into an oil change appointment because it's the most common kind of appointment. A chatbot that converts any kind of appointment request into "give me the most common kind of appointment" is pretty obviously broken but, for some reason, AI apologists insist this is fine when it comes to things like changing someone's race or ethnicity. Similarly, it would be absurd to argue that it's fine for my tire change appointment to have been converted to an oil change appointment because other companies have schedulers that convert oil change appointments to tire change appointments, but that's another common line of reasoning, one we discussed above.

And say I used some standard non-AI scheduling software like Mindbody or JaneApp to schedule an appointment with my mechanic and asked for an appointment to have my tires changed and rotated. If I ended up having my oil changed because the software simply schedules the most common kind of appointment, this would be a clear sign that the software is buggy and no reasonable person would argue that zero effort should go into fixing this bug. And yet, this is a common argument that people are making with respect to AI (it's probably the most common defense in comments on this topic). The argument goes a bit further, in that there's this explanation of why the bug occurs that's used to justify why the bug should exist and people shouldn't even attempt to fix it. Such an explanation would read as obviously ridiculous for a "classical" software bug and is no less ridiculous when it comes to ML. Perhaps one can argue that the bug is much more difficult to fix in ML and that it's not practical to fix the bug, but that's different from the common argument that it isn't a bug and that this is the correct way for software to behave.

I could imagine some users saying something like that when the program is taking actions that are more opaque to the user, such as with autocorrect, but when I actually searched reddit for "autocorrect bug", in the top 3 threads (I didn't look at any other threads), 2 out of the 255 comments denied that incorrect autocorrects were a bug, and both of those comments were from the same person. I'm sure if you dig through enough topics, you'll find ones where there's a higher rate, but on searching a few more topics (like Excel formatting and autocorrect bugs), none of the topics I searched approached what we see with generative AI, where it's not uncommon to see half the commenters vehemently deny that a prompt doing the opposite of what the user wants is a bug.

Coming back to the bug itself, in terms of the mechanism, one thing we can see in both classifiers and generative models is that many (perhaps most or almost all) of these systems absorb biases that a lot of people have and that are reflected in some sample of the internet. This results in things like Google's image classifier classifying a black hand holding a thermometer as {hand, gun} and a white hand holding a thermometer as {hand, tool}[1]. After a number of such errors over the past decade, from classifying black people as gorillas in Google Photos in 2015 to deploying some kind of text classifier for ads that classified ads containing the terms "African-American composers" and "African-American music" as "dangerous or derogatory" in 2018, Google turned the knob in the other direction with Gemini which, by the way, generated much more outrage than any of the other examples.

There's nothing new about bias making it into automated systems. This predates generative AI and LLMs, and it's a problem outside of ML models as well. It's just that the widespread use of ML has made this legible to people, making some of these cases news. For example, if you look at compression algorithms and dictionaries, Brotli is heavily biased towards the English language: the human-language elements of the 120 transforms built into the format are English, and the built-in compression dictionary is more heavily weighted towards English than whatever representative weighting you might want to reference (population-weighted language speakers, non-automated human-language text sent on messaging platforms, etc.). There are arguments you could make as to why English should be so heavily weighted, but there are also arguments for the opposite, e.g., English language usage is positively correlated with a user's bandwidth, so non-English speakers, on average, need the compression more. But regardless of the exact weighting function you think should be used to generate a representative dictionary, this just isn't going to make a viral news story. You can't get the typical reader to care that a number of the 120 built-in Brotli transforms do things like add " of the ", ". The", or ". This" to text, which are highly specialized for English, while none of the transforms encode terms that are highly specialized for any other human language, even though only 20% of the world speaks English; or that, relative to the number of speakers, the built-in compression dictionary is extremely heavily tilted towards English compared to any other human language.

You could make a defense of Brotli's dictionary that's analogous to the ones above: over some representative corpus, which the Brotli dictionary was trained on, we get optimal compression with the Brotli dictionary. But there are quite a few curious phrases in the dictionary, such as "World War II", ", Holy Roman Emperor", "British Columbia", "Archbishop", "Cleveland", "esperanto", etc., that might lead us to wonder whether the corpus the dictionary was trained on is the most representative, or even particularly representative, of text people send. Can it really be the case that including ", Holy Roman Emperor" in the dictionary produces, across the distribution of text sent on the internet, better compression than including anything at all for French, Urdu, Turkish, Tamil, Vietnamese, etc.?
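Brotli isn't in Python's standard library, but zlib's preset-dictionary feature (`zdict`) shows the same mechanism on a small scale. This is a minimal sketch with a swapped-in technique: raw DEFLATE with a hypothetical preset dictionary made of a few English fragments similar to the Brotli transforms quoted above, not Brotli's actual dictionary. Text that shares fragments with the dictionary gets free matches; text that doesn't, like the Vietnamese sentence, gets nothing.

```python
import zlib

# Hypothetical preset dictionary of English fragments, loosely modeled on the
# Brotli transforms quoted above. This is NOT Brotli's built-in dictionary.
ENGLISH_ZDICT = b" of the . The . This  and the  in the "


def raw_deflate_size(data: bytes, zdict: bytes = b"") -> int:
    """Size in bytes of raw DEFLATE output (wbits=-15: no zlib header or checksum)."""
    kwargs = {"zdict": zdict} if zdict else {}
    comp = zlib.compressobj(level=9, wbits=-15, **kwargs)
    return len(comp.compress(data) + comp.flush())


def dictionary_saving(text: str) -> int:
    """Bytes saved by compressing `text` with the English preset dictionary."""
    data = text.encode("utf-8")
    return raw_deflate_size(data) - raw_deflate_size(data, ENGLISH_ZDICT)


english = "The history of the city is long. This is the story of the people of the region."
vietnamese = "Lịch sử của vùng đất này rất dài. Đây là câu chuyện của người dân."

print("English saving:", dictionary_saving(english), "bytes")
print("Vietnamese saving:", dictionary_saving(vietnamese), "bytes")
```

The exact byte counts depend on the zlib version; the point is that a built-in dictionary only helps text that resembles whatever it was built from.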

Another example that doesn't make a good viral news story is that I can't put my Vietnamese name in the title of my blog and have my blog indexed by Google outside of Vietnamese-language Google. I tried that when I started my blog and it caused my blog to immediately stop showing up in Google searches unless you were in Vietnam. It's just assumed that people want English-language search results by default and, presumably, someone created a heuristic that triggers if a page has two characters with Vietnamese diacritics and effectively marks the page as too Asian and therefore not of interest to anyone in the world except in one country. "Being visibly Vietnamese" seems like a fairly common cause of bugs. For example, Vietnamese names are a problem even without diacritics. I often run into forms that ask for my mother's maiden name. If I enter my mother's maiden name, I'll be told something like "Invalid name" or "Name too short". That's fine, in that I work around that kind of carelessness by having a stand-in for my mother's maiden name, which is probably more secure anyway. Another issue is when people decide I told them my name incorrectly and change it. For my last name, if I read my name off as "Luu, ell you you", that gets shortened from the Vietnamese "Luu" to the Chinese "Lu" about half the time and to a western "Lou" much of the time as well, but I've figured out that if I say "Luu, ell you you, two yous", that works about 95% of the time. That sometimes annoys the person on the other end, who will exasperatedly say something like "you didn't have to spell it out three times". Maybe so for that particular person, but most people won't get it. This even happens when I enter my first name into a computer system myself, so there can be no chance of a transcription error before my name is digitally recorded. My legal first name, with no diacritics, is Dan. This isn't uncommon for an American of Vietnamese descent because Dan works as both a Vietnamese name and an American name, and a lot of Vietnamese immigrants didn't know that Dan is usually short for Daniel. Of the six companies I've worked for full-time, someone has helpfully changed my name to Daniel at three, presumably because someone saw that Dan was recorded in a database, decided that I had failed to enter my name correctly and that they knew my name better than I did, and was so sure of this that they saw no need to ask me about it. In one case, this only impacted my email display name. Since I don't have strong feelings about how people address me, I didn't bother having it changed, and a lot of people called me Daniel instead of Dan while I worked there. In the two other cases, the name change impacted important paperwork, so I had to actually change it back so that my insurance, tax paperwork, etc., matched my legal name.

As noted above, fairly innocuous prompts to Playground AI using my face, on the rare occasions they produce Asian output, seem to produce East Asian rather than Southeast Asian output. I've noticed the same thing with some big companies' generative AI models as well: even when you ask them for Southeast Asian output, they generate East Asian output. AI tools that are marketed as tools that clean up errors and noise will also clean up Asian-ness (and other analogous "errors"), e.g., people who've used Adobe AI noise reduction (billed as "remove noise from voice recordings with speech enhancement") note that it will take an Asian accent and remove it, making the person sound American (and likewise for a number of other accents, such as eastern European accents).
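The "Invalid name" and "Name too short" errors mentioned above are the kind of thing a one-line validation rule produces. The following Python is a hypothetical illustration, not any real site's code: the first validator assumes names are ASCII letters and at least three characters long, which rejects short names like "Ho" as well as names with diacritics like "Lê"; the second accepts any non-empty run of Unicode letters, combining marks, spaces, hyphens, and apostrophes.

```python
import re
import unicodedata

# Hypothetical "careless" rule: ASCII letters only, at least 3 characters.
NAIVE_NAME = re.compile(r"[A-Za-z]{3,}")


def naive_is_valid(name: str) -> bool:
    return NAIVE_NAME.fullmatch(name) is not None


def tolerant_is_valid(name: str) -> bool:
    """Accept any non-empty name made of Unicode letters (L*), combining marks (M*),
    spaces, hyphens, and apostrophes."""
    name = unicodedata.normalize("NFC", name.strip())
    if not name:
        return False
    return all(unicodedata.category(ch).startswith(("L", "M")) or ch in " -'" for ch in name)


for surname in ["Ho", "Le", "Lê", "Võ", "Nguyễn", "Smith"]:
    print(surname, naive_is_valid(surname), tolerant_is_valid(surname))
```

Even the tolerant rule still rejects some real names, e.g. ones written with a period like "St. John", so it's a less careless rule, not a correct one.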

I probably see tens to hundreds of things like this most weeks just in the course of using widely used software (much less than the overall bug count, which we previously observed was in the hundreds to thousands per week), but most Americans I talk to don't notice these things at all. Recently, there's been a lot of chatter about all of the harms caused by biases in various ML systems and about how the widespread use of ML is going to usher in all sorts of new harms. That might not be wrong, but my feeling is that we've encoded biases into automation for as long as we've had automation, and the increased scope and scale of automation has increased, and will continue to increase, the scope and scale of automated bias. It's just that now, many uses of ML make these kinds of biases a lot more legible to lay people and therefore more likely to make the news.

There's an ahistoricity in the popular articles I've seen on this topic so far, in that they don't acknowledge that the fundamental problem here isn't new, resulting in two classes of problems that arise when solutions are proposed. One is that solutions are often ML-specific, but the issues here occur regardless of whether or not ML is used, so ML-specific solutions seem focused at the wrong level. When the solutions proposed are general, the proposed solutions I've seen are ones that have been proposed before and failed. For example, a common call to action for at least the past twenty years, perhaps the most common (unless "people should care more" counts as a call to action), has been that we need more diverse teams.

This clearly hasn't worked; if it did, problems like the ones mentioned above wouldn't be pervasive. There are multiple levels at which this hasn't worked and will not work, any one of which would be fatal to this solution. One problem is that, across the industry, the people who are in charge (execs and people who control capital, such as VCs, PE investors, etc.), in aggregate, don't care about this. Although there are efficiency justifications for more diverse teams, the case will never be as clear-cut as it is for decisions in games and sports, where we've seen that very expensive and easily quantifiable bad decisions can persist for many decades after the errors were pointed out. And then, even if execs and capital were bought into the idea, it still wouldn't work because there are too many dimensions. If you look at a company that really prioritized diversity, like Patreon from 2013-2019, you're lucky if the organization is capable of seriously prioritizing diversity in two or three dimensions while dropping the ball on hundreds or thousands of other dimensions, such as whether or not Vietnamese names or faces are handled properly.

Even if all those things weren't problems, the solution still wouldn't work because, while having a team with relevant diverse experience may be somewhat correlated with prioritizing problems, it doesn't automatically cause problems to be prioritized and fixed. To pick a non-charged example, a bug that existed in Google Maps traffic estimates from its inception until at least 2022 (I haven't driven enough since then to know if the bug still exists) is that, if I ask how long a trip will take at the start of rush hour, the estimate takes into account current traffic and not how traffic will change as I drive, and therefore systematically underestimates how long the trip will take (and conversely, if I plan a trip at peak rush hour, it systematically overestimates how long the trip will take). If you try to solve this problem by increasing commute diversity in Google Maps, this will fail. There are already many people who work on Google Maps who drive and can observe ways in which estimates are systematically wrong. Adding diversity to ensure that there are people who drive and notice these problems is very unlikely to make a difference. Or, to pick another example, when the former manager of Uber's payments team got incorrectly blacklisted from Uber by an ML model incorrectly labeling his transactions as fraudulent, no one was able to figure out what happened or what sort of bias caused him to get banned (they solved the problem by adding his user to an allowlist). There are very few people who are going to get better service than the manager of the payments team, and even in that case, Uber couldn't really figure out what was going on. Hiring a "diverse" candidate to the team isn't going to automatically solve, or even make much difference to, bias in whatever dimension the candidate is diverse when the former manager of the team can't even get their account unbanned except by having it allowlisted after six months of investigation.
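The traffic-estimate bug described above is easy to reproduce in a toy model. The following Python sketch assumes a made-up speed profile (free flow at 100 km/h, slowing linearly to 40 km/h between 16:00 and 17:00, then recovering by 18:00) and a hypothetical 60 km route split into 10 km segments; none of this is Google Maps' actual model. The "snapshot" estimate prices every segment at the speed at departure time, which is what the bug amounts to, while the time-dependent estimate uses the speed at the time the driver reaches each segment.

```python
from typing import List

SEGMENTS_KM: List[float] = [10.0] * 6  # hypothetical 60 km route


def speed_kmh(t: float) -> float:
    """Hypothetical speed profile; t is the clock time in hours (17.5 == 17:30)."""
    if t <= 16.0 or t >= 18.0:
        return 100.0
    if t <= 17.0:
        return 100.0 - 60.0 * (t - 16.0)  # rush hour building
    return 40.0 + 60.0 * (t - 17.0)  # rush hour clearing


def snapshot_estimate(depart: float, segments: List[float]) -> float:
    """Hours, assuming the traffic at departure persists for the whole trip."""
    return sum(km / speed_kmh(depart) for km in segments)


def time_dependent_estimate(depart: float, segments: List[float]) -> float:
    """Hours, using the speed at the time each segment is entered."""
    t = depart
    for km in segments:
        t += km / speed_kmh(t)
    return t - depart


for depart in (16.0, 17.0):
    print(f"depart {depart:.1f}h: snapshot {snapshot_estimate(depart, SEGMENTS_KM):.2f}h, "
          f"time-dependent {time_dependent_estimate(depart, SEGMENTS_KM):.2f}h")
```

Departing at the start of rush hour, the snapshot estimate comes out too short; departing at the peak, it comes out too long. Using the entry-time speed for a whole segment is itself an approximation, and shorter segments make it more accurate.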

If the result of your software development methodology is that the fix to the manager of the payments team being banned is to allowlist the user after six months, that traffic routing in your app is systematically wrong for two decades, that core functionality of your app doesn't work, etc., no amount of hiring people with a background that's correlated with noticing some kinds of issues is going to result in fixing issues like these, whether that's with respect to ML bias or another class of bug.

Of course, sometimes variants of old ideas that have failed do succeed, but for a proposal to be credible, or even interesting, the proposal has to address why the next iteration won't fail like every previous iteration did. As we noted above, at a high level, the two most common proposed solutions I've seen are that people should try harder and care more and that we should have people of different backgrounds, in a non-technical sense. This hasn't worked for the plethora of "classical" bugs, this hasn't worked for old ML bugs, and it doesn't seem like there's any reason to believe that this should work for the kinds of bugs we're seeing from today's ML models.

Laurence Tratt says:

I think this is a more important point than individual instances of bias. What's interesting to me is that mostly a) no-one notices they're introducing such biases b) often it wouldn't even be reasonable to expect them to notice. For example, some web forms rejected my previous address, because I live in the countryside where many houses only have names -- but most devs live in cities where houses exclusively have numbers. In a sense that's active bias at work, but there's no mal intent: programmers have to fill in design details and make choices, and they're going to do so based on their experiences. None of us knows everything! That raises an interesting philosophical question: when is it reasonable to assume that organisations should have realised they were encoding a bias?

My feeling is that the "natural" (as in lowest-energy and most straightforward) state for institutions and products is that they don't work very well. If someone hasn't previously instilled a culture or instituted processes that foster quality in a particular dimension, quality is likely to be poor because producing something high quality is difficult, so organizations should expect that they're encoding all sorts of biases if there isn't a robust process for catching biases.

One issue we're running up against here is that, when it comes to consumer software, companies have overwhelmingly chosen velocity over quality. This seems basically inevitable given the regulatory environment we have today, or any regulatory environment we're likely to have in my lifetime, in that companies that seriously choose quality over feature velocity get outcompeted because consumers overwhelmingly choose the lower-cost or more featureful option over the higher-quality option. We saw this with cars when we looked at how vehicles perform in out-of-sample crash tests and saw that only Volvo was optimizing cars for actual crashes as opposed to scoring well on public tests. Despite vehicular accidents being one of the leading causes of death for people under 50, paying for safety is such a low priority for consumers that Volvo has become a niche brand that had to move upmarket and sell luxury cars just to survive. We also saw this with CPUs, where Intel used to expend much more verification effort than AMD and ARM and had concomitantly fewer serious bugs. When AMD and ARM started to seriously threaten Intel, Intel shifted effort away from verification and validation in order to increase velocity, because its quality advantage wasn't doing it any favors in the market, and Intel chips are now almost as buggy as AMD chips.

We can observe something similar in almost every consumer market and many B2B markets as well, and that's when we're talking about issues that have known solutions. If we look at a problem that, from a technical standpoint, we don't know how to solve well, like subtle or even not-so-subtle bias in ML models, it stands to reason that we should expect to see more and worse bugs than we'd expect out of "classical" software systems, which is what we're seeing. Any solution to this problem that's going to hold up in the market is going to have to be robust against the issue that consumers will overwhelmingly choose the buggier product if it has more features they want or ships features they want sooner, which puts any solution that requires taking care in a way that significantly slows down shipping in a very difficult position, absent a single dominant player, like Intel in its heyday.

Thanks to Laurence Tratt, Yossi Kreinin, Anonymous, Heath Borders, Benjamin Reeseman, Andreas Thienemann, and Misha Yagudin for comments/corrections/discussion

This is a genuine question and not a rhetorical question. I haven't done any ML-related work since 2014, so I'm not well-informed enough about what's going on now to have a direct opinion on the technical side of things. A number of people who've worked on ML a lot more recently than I have, like Yossi Kreinin (see appendix below) and Sam Anthony, think the problem is very hard, maybe impossibly hard given where we are today.

Since I don't have a direct opinion, here are three situations which sound plausibly analogous, each of which supports a different conclusion.

Analogy one: Maybe this is like people who have been saying, at least since 2014, that someone will build a Google any day now because existing open source tooling is already basically better than Google search, or people saying that building a "high-level" CPU that encodes high-level language primitives into hardware would give us a 1000x speedup over general purpose CPUs. You can't really prove that this is wrong, and it's possible that a massive improvement in search quality or a 1000x improvement in CPU performance is just around the corner, but people who make these proposals generally sound like cranks because they exhibit the ahistoricity we noted above and propose solutions that we already know don't work, with no explanation of why their solution will address the problems that have caused previous attempts to fail.

Analogy two: Maybe this is like software testing, where software bugs are pervasive and, although there are decades of prior art from the hardware industry on how to find bugs more efficiently, there are very few areas where any of these techniques are applied. I've talked to people about this a number of times and the most common response is something about how application XYZ has some unique constraint that makes it impossibly hard to test at all, or to test using the kinds of techniques I'm discussing, but every time I've dug into this, the application has been much easier to test than areas where I've seen these techniques applied. One could argue that I'm a crank when it comes to testing, but I've actually used these techniques to test a variety of software and been successful doing so, so I don't think this is the same as claiming that CPUs would be 1000x faster if we only used my pet CPU architecture.

Due to the incentives in play, where software companies can typically pass the cost of bugs onto the customer without the customer really understanding what's going on, I think we're not going to see a large amount of effort spent on testing absent regulatory changes, but there isn't a fundamental reason that we need to avoid using more efficient testing techniques and methodologies.

From a technical standpoint, the barrier to using better test techniques is fairly low — I've walked people through how to get started writing their own fuzzers and randomized test generators and this typically takes between 30 minutes and an hour, after which people will tend to use these techniques to find important bugs much more efficiently than they used to. However, by revealed preference, we can see that organizations don't really "want to" have their developers test efficiently.
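As a rough illustration of how small a first randomized test generator can be, here's a sketch in Python that generates random short strings and checks a round-trip property (decoding an encoding should give back the original input). The run-length encoder, its deliberately buggy variant, and the `fuzz` helper are hypothetical examples chosen for brevity, not code from any particular project.

```python
import random
from typing import Callable, List, Optional, Tuple

Runs = List[Tuple[str, int]]

def rle_encode(s: str) -> Runs:
    """Run-length encode a string: 'aaab' -> [('a', 3), ('b', 1)]."""
    out: Runs = []
    for ch in s:
        if out and out[-1][0] == ch:
            out[-1] = (ch, out[-1][1] + 1)
        else:
            out.append((ch, 1))
    return out

def rle_decode(runs: Runs) -> str:
    return "".join(ch * n for ch, n in runs)

def buggy_rle_encode(s: str) -> Runs:
    """Hypothetical buggy variant: it never emits the final run."""
    out: Runs = []
    prev, count = None, 0
    for ch in s:
        if ch == prev:
            count += 1
        else:
            if prev is not None:
                out.append((prev, count))
            prev, count = ch, 1
    return out  # bug: the last (prev, count) run is silently dropped

def random_input(rng: random.Random) -> str:
    # A tiny alphabet and short lengths make runs and edge cases (like "") common.
    return "".join(rng.choice("ab") for _ in range(rng.randint(0, 8)))

def fuzz(encode: Callable[[str], Runs], iterations: int = 1000,
         seed: int = 0) -> Optional[str]:
    """Return the first random input whose round trip fails, or None if none is found."""
    rng = random.Random(seed)  # fixed seed so any failure is reproducible
    for _ in range(iterations):
        s = random_input(rng)
        if rle_decode(encode(s)) != s:
            return s
    return None

print(fuzz(rle_encode))        # None: no counterexample among the random inputs
print(fuzz(buggy_rle_encode))  # a short non-empty string whose last run was lost
```

The property being checked says nothing about what a correct encoding looks like, only that it must be reversible, which is often enough to catch real bugs without writing any expected outputs by hand.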

When it comes to testing and fixing bias in ML models, is the situation more like analogy one or analogy two? Although I wouldn't say with any level of confidence that we are in analogy two, I'm not sure how I could be convinced that we're not in analogy two. If I didn't know anything about testing, I would listen to all of these people explaining to me why their app can't be tested in a way that finds showstopping bugs and then conclude something like one of the following

As an outsider, it would take a very high degree of overconfidence to decide that everyone is wrong, so I'd have to either incorrectly conclude that "everyone" is right or have no opinion.

Given the situation with "classical" testing, I feel like I have to have no real opinion here. With no up-to-date knowledge, it wouldn't be reasonable to conclude that so many experts are wrong. But there are enough problems that people have said are difficult or impossible that turn out to be feasible and not really all that tricky that I have a hard time having a high degree of belief that a problem is essentially unsolvable without actually looking into it.

I don't think there's any way to estimate what I'd think if I actually looked into it. Let's say I try to work in this area: I try to get a job at OpenAI or another place where people are working on problems like this, somehow pass the interview, work in the area for a couple of years, and make no progress. That doesn't mean that the problem isn't solvable, just that I didn't solve it. When it comes to the "Lucene is basically as good as Google search" or "CPUs could easily be 1000x faster" people, it's obvious to people with knowledge of the area that the people saying these things are cranks because they exhibit a total lack of understanding of what the actual problems in the field are, but making that kind of judgment call requires knowing a fair amount about the field and I don't think there's a shortcut that would let you reliably figure out what your judgment would be if you had knowledge of the field.

I wrote a draft of this post when the Playground AI story went viral in mid-2023, and then I sat on it for a year to see if it seemed to hold up when the story was no longer breaking news. Looking at this a year later, I don't think the fundamental issues or the discussions I see on the topic have really changed, so I cleaned it up and then published this post in mid-2024.

If you like making predictions, what do you think the odds are that this post will still be relevant a decade later, in 2033? For reference, this post on "classical" software bugs that was published in 2014 could've been published today, in 2024, with essentially the same results (I say essentially because I see more bugs today than I did in 2014, and I see a lot more front-end and OS bugs today than I saw in 2014, so there would be more bugs and different kinds of bugs).

Comments from Yossi Kreinin:

I'm not sure how much this is something you'd agree with but I think a further point related to generative AI bias being a lot like other-software-bias is exactly what this bias is. "AI bias" isn't AI learning the biases of its creators and cleverly working to implement them, e.g. working against a minority that its creators don't like. Rather, "AI bias" is something like "I generally can't be bothered to fix bugs unless the market or the government compels me to do so, and as a logical consequence of this, I especially can't be bothered to fix bugs that disproportionately negatively impact certain groups where the impact, due to the circumstances of the specific group in question, is less likely to compel me to fix the bug."

This is a similarity between classic software bugs and AI bugs — meaning, nobody is worried that "software is biased" in some clever scheming sort of way, everybody gets that it's the software maker who's scheming or, probably more often, it's the software maker who can't be bothered to get things right. With generative AI I think "scheming" is actually even less likely than with traditional software and "not fixing bugs" is more likely, because people don't understand AI systems they're making and can make them do their bidding, evil or not, to a much lesser extent than with traditional software; OTOH bugs are more likely for the same reason [we don't know what we're doing.] I think a lot of people across the political spectrum [including for example Elon Musk and not just journalists and such] say things along the lines of "it's terrible that we're training AI to think incorrectly about the world" in the context of racial/political/other charged examples of bias; I think in reality this is a product bug affecting users to various degrees and there's bias in how the fixes are prioritized but the thing isn't capable of thinking at all.

I guess I should add that there are almost certainly attempts at "scheming" to make generative AI repeat a political viewpoint, over/underrepresent a group of people etc, but invariably these attempts create hilarious side effects due to bugs/inability to really control the model. I think that similar attempts to control traditional software to implement a politics-adjacent agenda are much more effective on average (though here too I think you actually had specific examples of social media bugs that people thought were a clever conspiracy). Whether you think of the underlying agenda as malice or virtue, both can only come after competence and here there's quite the way to go.

See Simple Tasks Showing Complete Reasoning Breakdown in State-Of-the-Art Large Language Models. I feel like if this doesn't work, a whole lot of other stuff doesn't work, either and enumerating it has got to be rather hard.

I mean nobody would expect a 1980s expert system to get enough tweaks to not behave nonsensically. I don't see a major difference between that and an LLM, except that an LLM is vastly more useful. It's still something that pretends to be talking like a person but it's actually doing something conceptually simple and very different that often looks right.

Comments from an anonymous founder of an AI startup:

[I]n the process [of founding an AI startup], I have been exposed to lots of mainstream ML code. Exposed as in “nuclear waste” or “H1N1”. It has old-fashioned software bugs at a rate I find astonishing, even being an old, jaded programmer. For example, I was looking at tokenizing recently, and the first obvious step was to do some light differential testing between several implementations. And it failed hilariously. Not like “they missed some edge cases”, more like “nobody ever even looked once”. Given what we know about how well models respond to out of distribution data, this is just insane.
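Differential testing, as described in the comment above, just means feeding the same random inputs to several implementations and flagging any disagreement. Here's a minimal Python sketch; the two whitespace tokenizers and the `differential_test` helper are hypothetical stand-ins chosen for brevity, and they're far simpler than the subword tokenizers used with real models.

```python
import random
from typing import Callable, List, Optional

Tokenizer = Callable[[str], List[str]]

def tokenize_a(text: str) -> List[str]:
    # Splits on any run of whitespace (spaces, tabs, newlines); no empty tokens.
    return text.split()

def tokenize_b(text: str) -> List[str]:
    # Plausible-looking alternative: splits on single spaces only, drops empty tokens.
    return [tok for tok in text.split(" ") if tok]

def differential_test(impls: List[Tokenizer], iterations: int = 1000,
                      seed: int = 0) -> Optional[str]:
    """Return the first random input on which the implementations disagree, or None."""
    rng = random.Random(seed)
    for _ in range(iterations):
        # Random short strings over a small alphabet that includes several kinds of whitespace.
        text = "".join(rng.choice("ab \t\n") for _ in range(rng.randint(0, 10)))
        outputs = [impl(text) for impl in impls]
        if any(out != outputs[0] for out in outputs[1:]):
            return text
    return None

mismatch = differential_test([tokenize_a, tokenize_b])
if mismatch is not None:
    print(repr(mismatch), tokenize_a(mismatch), tokenize_b(mismatch))
```

Neither implementation needs a specification for this to work; the other implementation serves as the oracle, and any disagreement is worth a look even if it turns out one of them is "right".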

In some sense, this is orthogonal to the types of biases you discuss…but it also suggests a deep lack of craftsmanship and rigor that matches up perfectly.

Comments from Benjamin Reeseman:

[Ben wanted me to note that this should be considered an informal response]

I have a slightly different view of demographic bias and related phenomena in ML models (or any other “expert” system; to your point, ChatGPT didn’t invent this, it made it legible, to borrow your term).