2026-01-27 17:22:23

Source: https://x.com/karpathy/status/2015883857489522876

Author: Andrej Karpathy (@karpathy)

Translator: Gemini 3 Flash

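Over the past few weeks I have been using Claude heavily for coding; here are some random notes.

Given the latest jump in LLM coding capability, like many others I went from roughly 80% manual + autocomplete coding and 20% agent coding in November to 80% agent coding and 20% edits and touch-ups in December. In other words, I am now mostly programming in English, somewhat sheepishly telling the LLM what code to write... in words.

It bruises the ego a little, but the ability to operate on software in large "code actions" is simply too useful, especially once you adapt to it, configure it, learn to use it, and work out what it can and cannot do. This is easily the biggest change to my basic coding workflow in nearly 20 years of programming, and it happened within a few weeks. I expect a similar shift has happened for a double-digit percentage of engineers, while awareness among the general population still seems to be in the single-digit percentages.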

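In my view, both the "no more IDE" hype and the "agent swarm" hype are overdone for now. The models definitely still make mistakes, and if you have any code you truly care about, I suggest keeping a good, full-size IDE open on the side and watching them like a hawk.

The kinds of mistakes have changed a lot: they are no longer simple syntax errors but subtle conceptual errors of the sort a slightly sloppy, hurried junior developer might make. The most common category is the model making wrong assumptions on your behalf and then running with them unchecked. The models also don't manage their own confusion, don't ask for clarification, don't surface inconsistencies, don't present trade-offs, don't push back when they should, and are still a little too sycophantic.

Things improve in plan mode, but there is still a need for a lightweight inline plan mode. The models also love to overcomplicate code and APIs, bloat abstractions, leave their own dead code behind, and so on. They may implement an inefficient, bloated, brittle construction in 1,000 lines, and it is up to you to step in and say, "Um, couldn't you just do it this way instead?", at which point they reply, "Of course!" and immediately cut it down to 100 lines.

As a side effect, they still sometimes change or delete comments and code they dislike or don't fully understand, even when it is unrelated to the task at hand. All of this still happens despite some simple attempts to fix it with instructions in CLAUDE.md. Despite these problems, it is still a huge net improvement, and it is hard to imagine going back to manual coding. TLDR: everyone has their own development flow; mine currently is a few CC (Claude Code) sessions in ghostty windows/tabs on the left, and an IDE on the right for viewing code and making manual edits.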

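It is fascinating to watch an agent work at something relentlessly. Agents never get tired and never get discouraged; they just keep trying where a human would have given up long ago to fight another day. Watching one struggle with something for a long time and then come out on top 30 minutes later is a real "feel the AGI" moment. You realize that stamina is a core bottleneck of work, and that with LLMs this bottleneck has been greatly relieved.

It is not yet clear how to measure the "speedup" from LLM assistance. I certainly feel much faster at the things I was going to do anyway, but the main effect is that I do far more than I was planning to, because 1) I can write all kinds of code that was never worth the time before, and 2) I can now attempt code that used to be out of reach because of gaps in knowledge or skill. So it is a speedup, but perhaps more an expansion of capability.

LLMs are very good at looping until they meet a specific goal, and this is where most of the "feel the AGI" magic lies. Don't tell the model what to do; give it success criteria and watch it go. Have it write tests first, then make them pass. Put it in a loop with a browser MCP. Write a naive algorithm that is very likely correct first, then ask it to optimize while preserving correctness. Shift your approach from imperative to declarative so that agents loop longer and you gain leverage.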
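As a minimal sketch of the "naive first, then optimize while preserving correctness" idea, the success criterion can itself be code: a slow reference implementation plus a checker that compares any candidate against it. The problem (maximum subarray sum), the function names, and the seeded random generator below are illustrative assumptions, not something from the original post.

```typescript
// Naive reference: try every non-empty contiguous subarray, O(n^2).
function maxSubarrayNaive(xs: number[]): number {
  let best = -Infinity;
  for (let i = 0; i < xs.length; i++) {
    let sum = 0;
    for (const x of xs.slice(i)) {
      sum += x;
      best = Math.max(best, sum);
    }
  }
  return best;
}

// Optimized candidate (Kadane's algorithm), O(n).
function maxSubarrayFast(xs: number[]): number {
  let best = -Infinity;
  let current = 0;
  for (const x of xs) {
    current = Math.max(x, current + x);
    best = Math.max(best, current);
  }
  return best;
}

// Small seeded generator so any failure is reproducible.
function makeRng(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296;
  };
}

// Success criterion: the candidate must agree with the reference
// on many small random integer arrays.
function agreesWithReference(
  candidate: (xs: number[]) => number,
  reference: (xs: number[]) => number,
  trials: number,
): boolean {
  const rng = makeRng(42);
  for (let t = 0; t < trials; t++) {
    const length = 1 + Math.floor(rng() * 20);
    const xs = Array.from({ length }, () => Math.floor(rng() * 21) - 10);
    if (candidate(xs) !== reference(xs)) return false;
  }
  return true;
}
```

With a checker like this in place, the instruction to the agent can be declarative ("make `agreesWithReference` return true and make it faster") rather than a step-by-step recipe.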

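I did not expect that programming would feel more fun with agents, because much of the fill-in-the-blanks drudgery is removed and what remains is the highly creative part. I also get stuck less (getting stuck is no fun), and I feel more courage, because there is almost always a way to work hand in hand with the agent and make some positive progress. I have also seen the opposite view from other people; LLM coding will split engineers into those who primarily liked coding and those who primarily liked building.

I have already noticed my ability to write code by hand slowly starting to atrophy. In the brain, "generation" (writing code) and "discrimination" (reading code) are different capabilities. Largely because of all the fine syntactic detail involved in programming, you can still review code perfectly well even when you struggle to write it.

I am bracing for 2026 as the year of the "slop explosion" across GitHub, Substack, arXiv, X/Instagram, and all digital media in general. Alongside real improvements, we will also see a lot more AI-hype "productivity theater" (is that even possible?).

A few questions on my mind:

LLM agent capabilities (Claude and Codex especially) crossed some kind of coherence threshold around December 2025 and triggered a phase shift in software engineering and closely related fields. The intelligence part suddenly feels well ahead of everything else: integrations (tools, knowledge), the need for new organizational workflows and processes, and diffusion more broadly. 2026 is going to be a high-energy year as the industry digests this new capability.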

2026-01-20 06:17:35

Source: https://www.aihero.dev/my-agents-md-file-for-building-plans-you-actually-read

Author: Matt Pocock

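Most developers start out skeptical of AI code generation. It seems impossible that an AI could understand your codebase the way you do, or match the intuition built up over years of experience.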

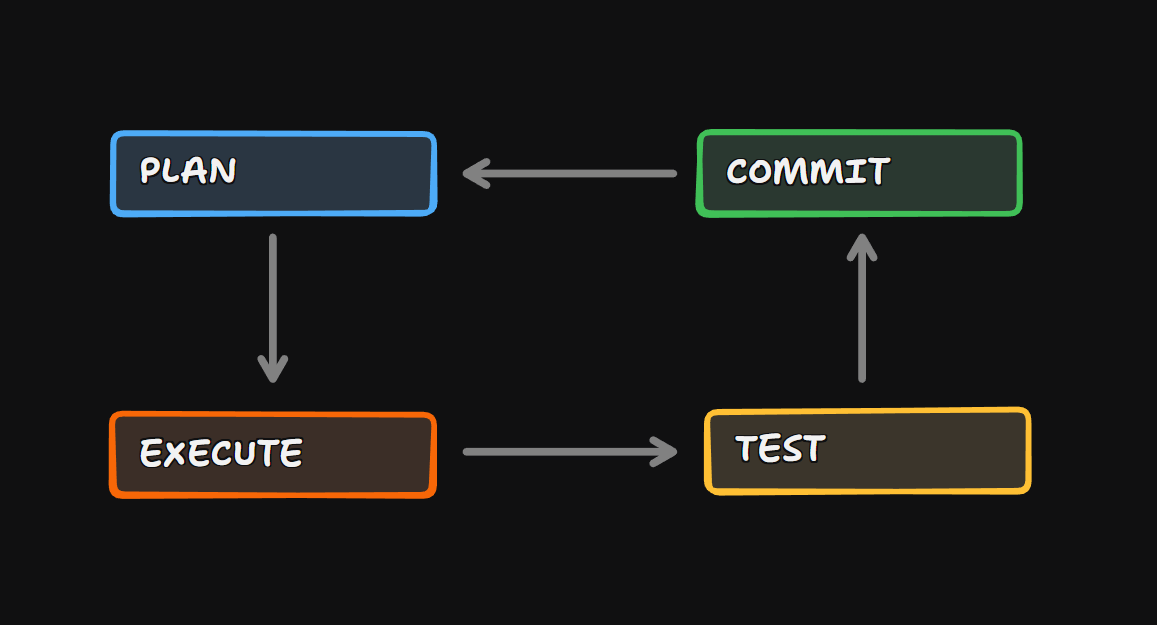

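But one technique changes everything: the planning loop. Instead of asking the AI to write code directly, you work through a structured loop, which markedly improves the quality of the code you get.

This approach turns AI from an unreliable code generator into an indispensable coding partner.

Now every piece of code goes through the same loop.

First, plan with the AI. Before any code is written, think through the approach together. Discuss the strategy and agree on what you are going to build.

Execute by asking the AI to write code that matches the plan. You are not asking it to work out what to build; you have already done that together.

Test the code together. Run unit tests, check type safety, or do manual QA. Verify that the implementation matches your plan.

Commit the code and start the loop again for the next piece.

This loop is absolutely essential for getting decent output from AI.

If you drop the planning step entirely, you are hobbling yourself. You are asking the AI to guess what you want, and you end up fighting hallucinations and misunderstandings.

Planning forces clarity. It makes the AI's job easier and your code better.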

Here are the key rules from my CLAUDE.md file that make plan mode effective:

```markdown
## Plan Mode

- Make the plan extremely concise. Sacrifice grammar for the sake of concision.
- At the end of each plan, give me a list of unresolved questions to answer, if any.
```

These simple guidelines turn verbose plans into scannable, actionable documents that keep you and the AI in sync.

Copy them into your CLAUDE.md or AGENTS.md file and enjoy simpler, more readable plans.

Or run this script to append them to your ~/.claude/CLAUDE.md file:

```bash
mkdir -p ~/.claude && cat >> ~/.claude/CLAUDE.md << 'EOF'

## Plan Mode

- Make the plan extremely concise. Sacrifice grammar for the sake of concision.
- At the end of each plan, give me a list of unresolved questions to answer, if any.
EOF
```

2026-01-20 05:14:40

Source: https://cursor.com/blog/agent-best-practices

Author: Cursor Team

Translator: Claude Opus 4.5

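Coding agents are changing how software gets built.

Models can now run for hours, complete ambitious multi-file refactors, and iterate until tests pass. But getting the most out of agents requires understanding how they work and developing new patterns.

This guide covers techniques for working with Cursor's agent. Whether you are new to agentic coding or want to see how our team uses Cursor, we will cover best practices for coding with agents.

An agent harness is built from three components:

Cursor's agent harness orchestrates these components for each model we support. We tune instructions and tools specifically for every frontier model, based on internal evals and external benchmarks.

The harness matters because different models respond differently to the same prompts. A model trained heavily on shell-oriented workflows may prefer grep over a dedicated search tool. Another may need explicit instructions to call linter tools after edits. Cursor's agent handles this for you, so as new models are released you can focus on building software.

The most impactful change you can make is planning before coding.

A study from the University of Chicago found that experienced developers are more likely to plan before generating code. Planning forces you to think clearly about what you are building and gives the agent concrete goals to work toward.

Press Shift+Tab in the agent input to toggle Plan Mode. Instead of writing code immediately, the agent will: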

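Plan Mode in action: the agent asks clarifying questions and creates a reviewable plan.

Plans open as Markdown files that you can edit directly to remove unnecessary steps, adjust the approach, or add context the agent missed.

Tip: Click "Save to workspace" to store plans in .cursor/plans/. This creates documentation for your team, makes it easy to resume interrupted work, and provides context for future agents working on the same feature.

Not every task needs a detailed plan. For quick changes or tasks you have done many times, hand the work straight to the agent.

Sometimes the agent builds something that doesn't match what you wanted. Rather than trying to fix it through follow-up prompts, go back to the plan.

Revert the changes, refine the plan to be more specific about what you need, and run it again. This is often faster than fixing an in-progress agent and produces cleaner results.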

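As you get more comfortable with agents writing code, your job becomes giving each agent the context it needs to complete its task.

You don't need to manually tag every file in your prompt.

Cursor's agent has powerful search tools and pulls context on demand. When you ask about "the authentication flow," the agent finds relevant files through grep and semantic search, even if your prompt doesn't contain those exact words.

Instant grep lets the agent search your codebase in milliseconds.

Keep it simple: if you know the exact file, tag it. If not, the agent will find it. Including irrelevant files can confuse the agent about what matters.

Cursor's agent also has helpful tools such as @Branch, which lets you give the agent context about what you are working on. "Review the changes on this branch" or "What am I working on?" become natural ways to orient the agent to your current task.

One of the most common questions: should I continue this conversation or start a new one?

Start a new conversation when:

Continue the conversation when:

Long conversations can cause the agent to lose focus. After many turns and summarizations, the context accumulates noise and the agent can get distracted or drift to unrelated tasks. If you notice the agent's effectiveness dropping, it is time to start a new conversation.

When you start a new conversation, use @Past Chats to reference previous work rather than copy-pasting the whole conversation. The agent can selectively read from the chat history and pull in only the context it needs.

This is more efficient than duplicating entire conversations.

Cursor provides two main ways to customize agent behavior: Rules, static context that applies to every conversation, and Skills, dynamic capabilities the agent can use when relevant.

Rules provide persistent instructions that shape how the agent works with your code. Think of them as always-on context the agent sees at the start of every conversation.

Create rules as markdown files in .cursor/rules/: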

```markdown
# Commands

- `npm run build`: Build the project
- `npm run typecheck`: Run the typechecker
- `npm run test`: Run tests (prefer single test files for speed)

# Code style

- Use ES modules (import/export), not CommonJS (require)
- Destructure imports when possible: `import { foo } from 'bar'`
- See `components/Button.tsx` for canonical component structure

# Workflow

- Always typecheck after making a series of code changes
- API routes go in `app/api/` following existing patterns
```

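Keep rules focused on the essentials: the commands to run, the patterns to follow, and pointers to canonical examples in your codebase. Reference files instead of copying their contents; this keeps rules short and prevents them from going stale as the code changes.

What to avoid in rules:

Tip: Start simple. Add rules only when you notice the agent making the same mistake repeatedly. Don't over-optimize before you understand your patterns.

Check your rules into git so your whole team benefits. When you see the agent make a mistake, update the rule. You can even tag @cursor on a GitHub issue or PR to have the agent update the rule for you.

Agent Skills extend what agents can do. Skills package domain-specific knowledge, workflows, and scripts that agents can invoke when relevant.

Skills are defined in SKILL.md files and can include: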

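- Reusable workflows triggered with /

Unlike Rules, which are always included, Skills are loaded dynamically when the agent decides they are relevant. This keeps your context window clean while giving the agent access to specialized capabilities.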
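The difference between always-on Rules and on-demand Skills can be pictured with a small sketch. Everything here is hypothetical: the `Rule` and `Skill` shapes, the `buildContext` function, and especially the keyword match, which merely stands in for the agent's own judgment about relevance. It is not how Cursor implements loading.

```typescript
// Hypothetical shapes for illustration only; not Cursor's data model.
interface Rule {
  name: string;
  body: string;
}

interface Skill {
  name: string;
  keywords: string[]; // stand-in for the agent's relevance decision
  body: string;
}

// Rules are always included; Skills are included only when judged relevant.
function buildContext(prompt: string, rules: Rule[], skills: Skill[]): string[] {
  const lowered = prompt.toLowerCase();
  const alwaysOn = rules.map((rule) => `rule:${rule.name}`);
  const onDemand = skills
    .filter((skill) =>
      skill.keywords.some((keyword) => lowered.includes(keyword.toLowerCase())),
    )
    .map((skill) => `skill:${skill.name}`);
  return [...alwaysOn, ...onDemand];
}
```

The point of the sketch is the asymmetry: adding a Rule grows every conversation's context, while adding a Skill costs nothing until it is pulled in.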

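One powerful pattern is using Skills to create long-running agents that iterate until they achieve a goal. Here is how you might build a hook that keeps an agent working until all tests pass.

First, configure the hook in .cursor/hooks.json: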

```json
{
  "version": 1,
  "hooks": {
    "stop": [{ "command": "bun run .cursor/hooks/grind.ts" }]
  }
}
```

The hook script (.cursor/hooks/grind.ts) receives context from stdin and returns a followup_message to continue the loop:

```typescript
import { readFileSync, existsSync } from "fs";

// Fields read from the JSON payload the hook receives on stdin.
interface StopHookInput {
  conversation_id: string;
  status: "completed" | "aborted" | "error";
  loop_count: number;
}

const input: StopHookInput = await Bun.stdin.json();
const MAX_ITERATIONS = 5;

// Stop looping if the run did not complete or the iteration cap is reached.
if (input.status !== "completed" || input.loop_count >= MAX_ITERATIONS) {
  console.log(JSON.stringify({}));
  process.exit(0);
}

// The agent signals completion by writing DONE to the scratchpad.
const scratchpad = existsSync(".cursor/scratchpad.md")
  ? readFileSync(".cursor/scratchpad.md", "utf-8")
  : "";

if (scratchpad.includes("DONE")) {
  console.log(JSON.stringify({}));
} else {
  // An empty object ends the loop; a followup_message continues it.
  console.log(
    JSON.stringify({
      followup_message: `[Iteration ${
        input.loop_count + 1
      }/${MAX_ITERATIONS}] Continue working. Update .cursor/scratchpad.md with DONE when complete.`,
    })
  );
}
```
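One way to make a hook like this easier to check is to pull its decision into a pure function that takes the input and the scratchpad text and returns the JSON to print. The sketch below mirrors the logic of the script above; the function name `decideFollowup`, the `StopHookOutput` type, and the `maxIterations` parameter are assumptions introduced for illustration.

```typescript
interface StopHookInput {
  conversation_id: string;
  status: "completed" | "aborted" | "error";
  loop_count: number;
}

// Hypothetical output shape: empty object stops, followup_message continues.
interface StopHookOutput {
  followup_message?: string;
}

function decideFollowup(
  input: StopHookInput,
  scratchpad: string,
  maxIterations = 5,
): StopHookOutput {
  // Stop on failure, on abort, or once the iteration cap is reached.
  if (input.status !== "completed" || input.loop_count >= maxIterations) {
    return {};
  }
  // Stop once the agent has marked the work as finished.
  if (scratchpad.includes("DONE")) {
    return {};
  }
  return {
    followup_message: `[Iteration ${input.loop_count + 1}/${maxIterations}] Continue working. Update .cursor/scratchpad.md with DONE when complete.`,
  };
}
```

The script itself would then only read stdin and the scratchpad file, call this function, and print `JSON.stringify` of the result.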

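This pattern is useful for:

Tip: Skills with hooks can integrate with security tools, secrets managers, and observability platforms. See the hooks documentation for partner integrations.

Agent Skills are currently available only in the nightly release channel. Open Cursor settings, select Beta, then set your update channel to Nightly and restart.

Beyond coding, you can connect the agent to the other tools you use every day. MCP (Model Context Protocol) lets the agent read Slack messages, investigate Datadog logs, debug Sentry errors, query databases, and more.

The agent can process images directly from your prompts. Paste screenshots, drag in design files, or reference image paths.

Paste a design mockup and ask the agent to implement it. The agent sees the image and can match layout, colors, and spacing. You can also use the Figma MCP server.

Screenshot an error state or unexpected UI and have the agent investigate. This is often faster than describing the problem in words.

The agent can also control a browser to take screenshots, test applications, and verify visual changes. See the Browser documentation for details.

The browser sidebar lets you design and code at the same time.

Here are agent patterns that work well for different types of tasks.

The agent can write code, run tests, and iterate automatically:

Agents perform best when they have a clear target to iterate against. Tests let the agent make changes, evaluate the results, and improve incrementally until it succeeds.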
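The iterate-against-tests idea can be sketched as a bounded loop: run the tests, hand the failures back, and stop on success or after a fixed number of runs. The names `iterateUntilGreen`, `runTests`, and `applyFix` are hypothetical stand-ins (in practice the "fix" step is the agent editing code), and the cap on runs is an assumption added so the loop always terminates.

```typescript
interface TestResult {
  passed: boolean;
  failures: string[];
}

// Runs tests up to maxRuns times, applying a fix after each failed run
// (except the last, since the result would never be re-checked).
function iterateUntilGreen(
  runTests: () => TestResult,
  applyFix: (failures: string[]) => void,
  maxRuns: number,
): { passed: boolean; runs: number } {
  let runs = 0;
  while (runs < maxRuns) {
    runs++;
    const result = runTests();
    if (result.passed) {
      return { passed: true, runs };
    }
    if (runs < maxRuns) {
      applyFix(result.failures);
    }
  }
  return { passed: false, runs };
}
```

The tests play the role of the success criterion: the loop needs no knowledge of how a fix is produced, only a clear signal for whether it worked.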

When getting to know a new codebase, use the agent to learn and explore. Ask it the questions you would ask a teammate:

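- "What edge cases does CustomerOnboardingFlow handle?"
- "... setUser() instead of createUser()?"

The agent uses grep and semantic search to look through the codebase and find answers. This is one of the fastest ways to get up to speed on unfamiliar code.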

The agent can search git history, resolve merge conflicts, and automate your git workflow.

For example, a /pr command that commits, pushes, and opens a pull request:

```markdown
Create a pull request for the current changes.

1. Look at the staged and unstaged changes with `git diff`
2. Write a clear commit message based on what changed
3. Commit and push to the current branch
4. Use `gh pr create` to open a pull request with title/description
5. Return the PR URL when done
```

Commands are ideal for workflows you run many times a day. Store them as Markdown files in .cursor/commands/ and check them into git so your whole team can use them.

Other example commands we use:

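- /fix-issue [number]: fetch the issue details with gh issue view, find the relevant code, implement a fix, and open a PR
- /review: run linters, check for common issues, and summarize what might need attention
- /update-deps: check for outdated dependencies and update them one by one, running tests after each update

The agent can use these commands autonomously, so you can delegate multi-step workflows with a single / invocation.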

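AI-generated code needs review, and Cursor provides several options.

Watch the agent work. The diff view shows changes in real time. If you see the agent heading in the wrong direction, press Escape to interrupt and redirect it.

After the agent finishes, click Review → Find Issues to run a dedicated review pass. The agent analyzes the proposed edits line by line and flags potential problems.

For all local changes, open the Source Control tab and run Agent Review to compare against your main branch.

AI code review finds and fixes bugs directly in Cursor.

Push to source control to get automated reviews on pull requests. Bugbot applies advanced analysis to catch issues early and suggest improvements on every PR.

For significant changes, ask the agent to generate architecture diagrams. Try a prompt such as: "Create a Mermaid diagram showing the data flow for our authentication system, including OAuth providers, session management, and token refresh." These diagrams are useful for documentation and can surface architectural issues before code review.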

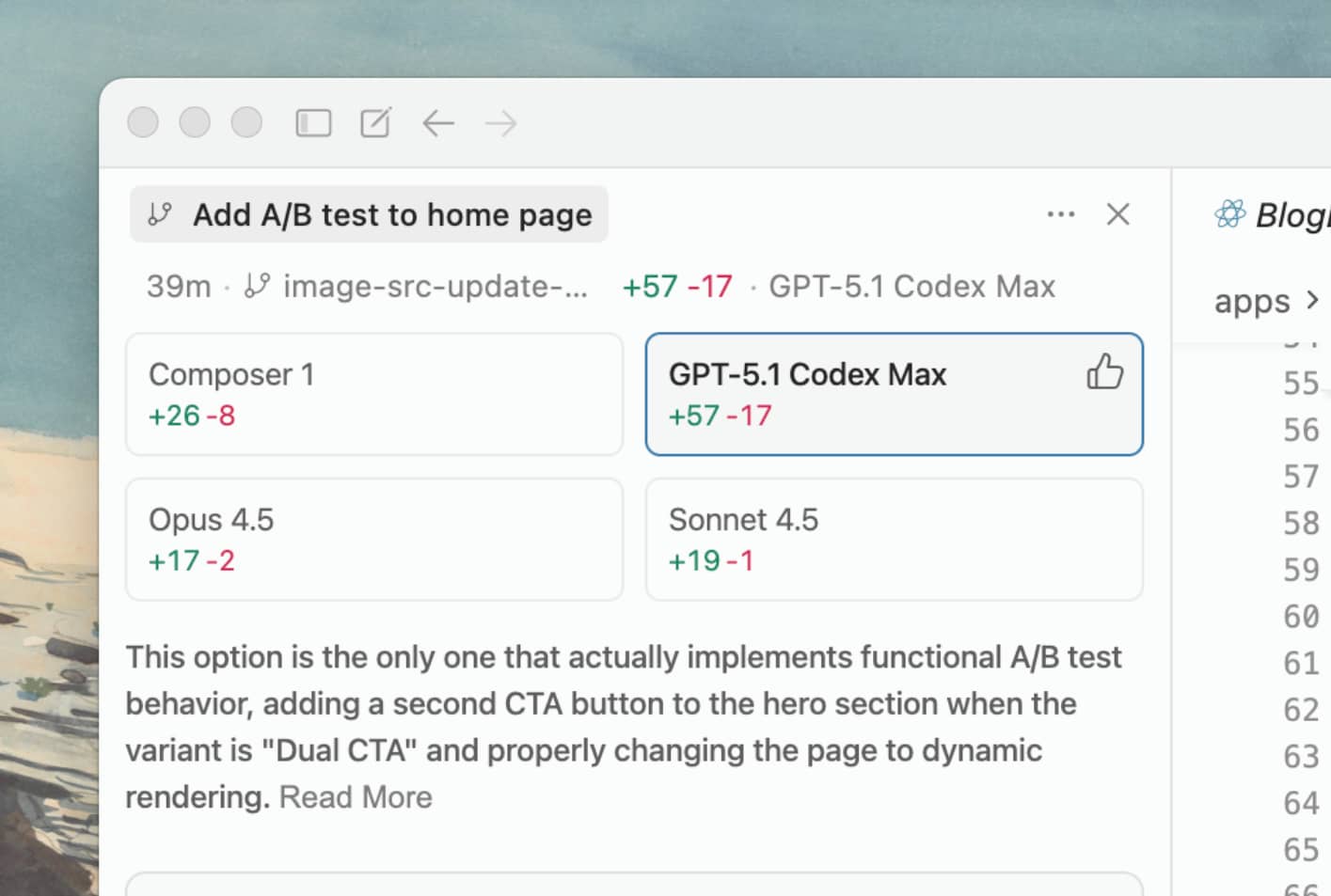

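Cursor makes it easy to run many agents in parallel without them interfering with one another. We have found that having multiple models attempt the same problem and picking the best result significantly improves the final output, especially for harder tasks.

Cursor automatically creates and manages git worktrees for agents running in parallel. Each agent runs in its own worktree with isolated files and changes, so agents can edit, build, and test code without stepping on each other.

To run an agent in a worktree, select the worktree option from the agent dropdown. When the agent finishes, click Apply to merge its changes back into your working branch.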
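Cursor handles worktrees for you, but it can help to see what the isolation amounts to in plain git terms. The sketch below only builds the argument lists for `git worktree add -b <branch> <path>` and `git worktree remove <path>`; it does not run them. The `agent/<name>` branch naming and the directory layout are hypothetical choices, not Cursor's actual scheme.

```typescript
interface WorktreePlan {
  branch: string;
  path: string;
  addCommand: string[];
  removeCommand: string[];
}

// One branch and one directory per agent, so edits never collide.
function planWorktrees(agentNames: string[], baseDir: string): WorktreePlan[] {
  return agentNames.map((name) => {
    const branch = `agent/${name}`; // hypothetical naming scheme
    const path = `${baseDir}/${name}`;
    return {
      branch,
      path,
      addCommand: ["git", "worktree", "add", "-b", branch, path],
      removeCommand: ["git", "worktree", "remove", path],
    };
  });
}
```

Because every worktree has its own checkout directory and branch, each agent can build and test without touching files another agent is editing.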

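A powerful pattern is running the same prompt across multiple models at once. Select multiple models from the dropdown, submit your prompt, and compare the results side by side. Cursor will also suggest which solution it believes is best.

This is especially useful for:

When running many agents in parallel, configure notifications and sounds so you know when they finish.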
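The multi-model comparison described above is essentially a best-of-N selection. A minimal sketch, assuming a caller-supplied `generate` function and a caller-supplied `score` function (for example, the number of passing tests); both are hypothetical, as are the `Candidate` type and the function names, and nothing here reflects how Cursor ranks solutions.

```typescript
interface Candidate {
  model: string;
  output: string;
}

// Send the same prompt to every model concurrently.
async function runOnModels(
  models: string[],
  prompt: string,
  generate: (model: string, prompt: string) => Promise<string>,
): Promise<Candidate[]> {
  return Promise.all(
    models.map(async (model) => ({ model, output: await generate(model, prompt) })),
  );
}

// Pick the highest-scoring candidate; on ties the earliest one wins.
function pickBest(
  candidates: Candidate[],
  score: (candidate: Candidate) => number,
): Candidate | undefined {
  let best: Candidate | undefined;
  let bestScore = -Infinity;
  for (const candidate of candidates) {
    const value = score(candidate);
    if (value > bestScore) {
      best = candidate;
      bestScore = value;
    }
  }
  return best;
}
```

The quality of the result depends almost entirely on the scoring function, which is why an objective signal such as a test suite works better than judging by eye.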

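Cloud agents work well for tasks you would otherwise add to a to-do list:

You can switch between local and cloud agents depending on the task. Start cloud agents from cursor.com/agents, the Cursor editor, or your phone. Check on sessions from the web or your phone while you are away from your desk. Cloud agents run in remote sandboxes, so you can close your laptop and check the results later.

Manage multiple cloud agents from cursor.com/agents

Here is how cloud agents work under the hood:

Tip: You can trigger agents from Slack with "@Cursor".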

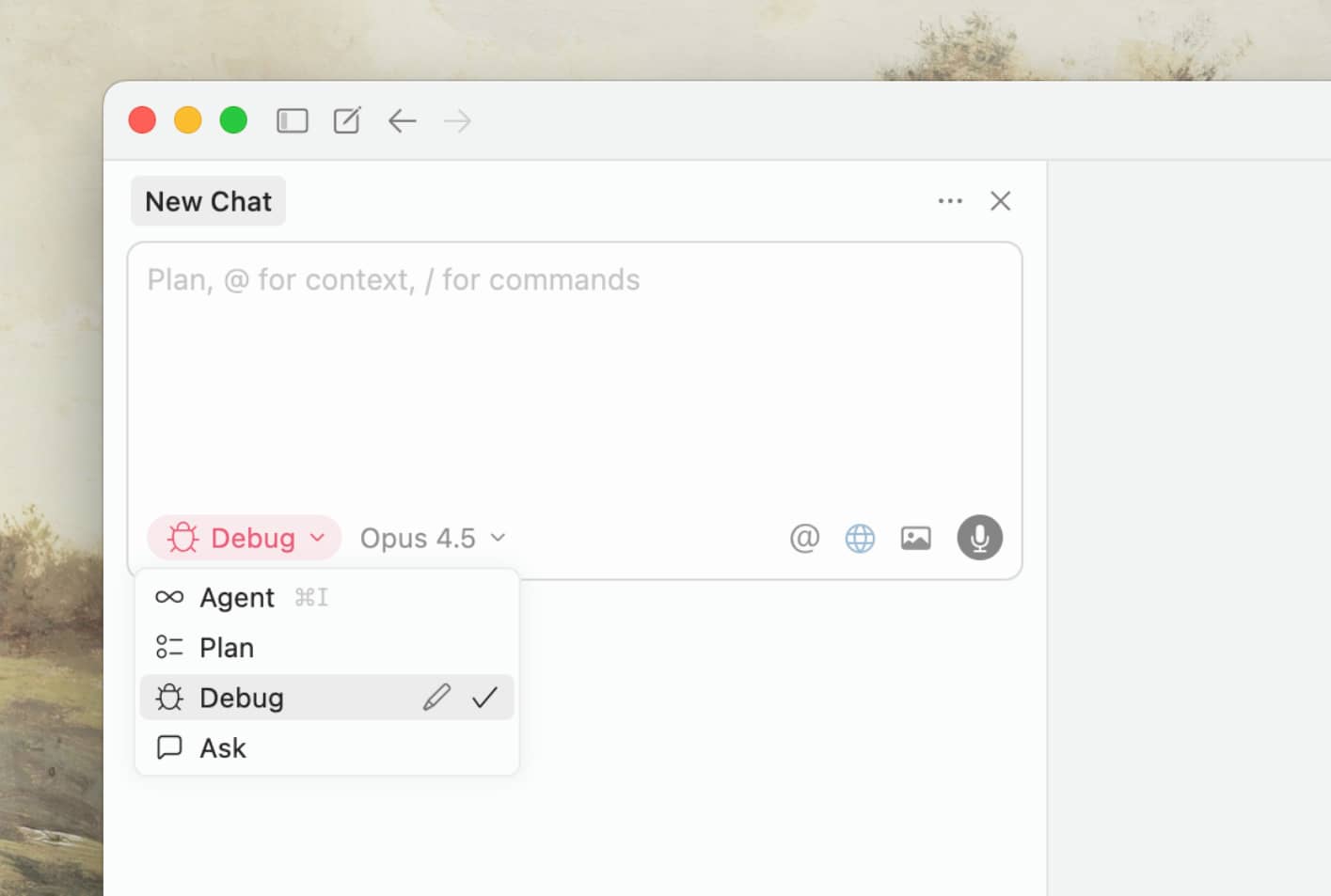

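When standard agent interactions struggle with a bug, Debug Mode offers a different approach.

Instead of guessing at fixes, Debug Mode:

This works best for:

The key is providing detailed context about how to reproduce the issue. The more specific you are, the more useful the instrumentation the agent adds.

The developers who get the most from agents tend to share a few traits:

They write specific prompts. The agent's success rate improves significantly with specific instructions. Compare "add tests for auth.ts" with "write a test case for auth.ts covering the logout edge case, using the patterns in __tests__/ and avoiding mocks."

They iterate on their setup. Start simple. Add rules only when you notice the agent making the same mistake repeatedly. Add commands only after you have identified a workflow you want to repeat. Don't over-optimize before you understand your patterns.

They review carefully. AI-generated code can look right while being subtly wrong. Read the diffs and review carefully. The faster the agent works, the more important your review process becomes.

They provide verifiable goals. Agents can't fix what they don't know about. Use typed languages, configure linters, and write tests. Give the agent clear signals for whether changes are correct.

They treat agents as capable collaborators. Ask for plans. Request explanations. Push back on approaches you don't like.

Agents are improving rapidly. While the patterns will evolve with newer models, we hope this helps you be more productive today when working with coding agents.

Get started with Cursor's agent to try these techniques.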


2026-01-19 17:02:08

Source: https://x.com/thedankoe/status/2010751592346030461

Author: DAN KOE (@thedankoe)

Translator: Claude Opus 4.5

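If you are anything like me, you think New Year's resolutions are stupid.

Because most people go about changing their lives completely wrong. They make these resolutions only because everyone else does (we create superficial meaning out of a status game), but the resolutions don't meet the requirements of real change. Real change goes much deeper than convincing yourself that you will be more disciplined or productive this year.

If you are one of them, I am not trying to put you down (I tend to be a bit harsh in my writing). I have quit 10 times more goals than I have achieved. I think that is true for most people. But the fact remains: people try to change their lives and fail completely almost every time.

Still, even though I think resolutions are dumb, it is always wise to reflect on the life you hate so that you can launch yourself into something better, as we will discuss.

So whether you want to start a business, reshape your body, or take a risk on a more meaningful life without quitting halfway, I want to share 7 ideas on behavior change, psychology, and productivity that you have probably not heard before and that can help you achieve your goals in 2026.

This will be a comprehensive guide.

This is not one of those letters you read and forget.

This is something you will want to save, take notes on, and set aside time to think about.

The protocol at the end (designed to dig deep into your psyche and uncover the life you actually want) will take about a full day to complete, but its effects will last far longer.

Let's begin.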

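When setting big goals, people usually focus on one of the two requirements for success:

Most people set a surface-level goal, hype themselves into being disciplined for the first few weeks, and then slide back into their old ways without effort. That is because they are trying to build a great life on a rotten foundation.

If that sounds hard to follow, let's look at an example.

Picture a successful person. It could be a bodybuilder with a great physique, a founder/CEO worth hundreds of millions, or a charismatic man who can talk and joke with a crowd without any anxiety.

Do you think the bodybuilder has to "grind" to eat healthy? That the CEO has to force discipline to show up and lead the team? From the outside it may look that way to you, but the truth is that they cannot imagine living any other way. The bodybuilder would have to grind to eat unhealthily. The CEO would have to force themselves to sleep through the alarm, and they would hate every second of it.

To some people, my own lifestyle seems a bit extreme and disciplined. To me it is natural. I simply enjoy living this way.

If you want a specific outcome in life, you must have the lifestyle that creates that outcome long before you reach it.

If someone says they want to lose 30 pounds, I usually don't believe them. Not because I think they are incapable, but because so many times that same person will say, "I can't wait until I'm done losing weight so I can start enjoying life again." If you don't adopt, for life, the lifestyle that helped you lose the weight, you will go right back to where you started.

When you truly change yourself, all the habits that don't move you toward your goal become repulsive, because you are deeply aware of the kind of life those actions compound into.

"Trust only movement. Life happens at the level of events, not of words. Trust movement." - Alfred Adler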

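If you want to change who you are, you must understand how the mind works so that you can begin to reprogram it.

All behavior is goal-oriented. This is teleology. Most of the time your goals are unconscious. You take a step forward because you want to reach a certain place.

At a deeper, more unconscious and more complex level, you pursue goals that may harm you, yet you justify your behavior in socially acceptable ways. If you can't stop procrastinating, you might explain it as a lack of discipline, when in fact you may be protecting yourself from the judgment that comes with finishing and sharing your work.

Real change requires changing your goals. A goal is a projection into the future that acts as a lens of perception.

"The important thing for you to remember is that it does not matter in the least how you got the idea or where it came from... If you have accepted an idea... and if you are firmly convinced that the idea is true, it has the same power over you as the hypnotist's words have over the hypnotized subject." - Maxwell Maltz

The anatomy of identity:

This process begins in childhood. You have the goal of survival. You depend on your parents and conform to their beliefs to avoid punishment.

When your identity feels threatened, you go into fight or flight. If you identify strongly with a political ideology or a religion, you feel stress when it is challenged. The same is true when you unconsciously see yourself as a lawyer, a gamer, or someone who does not take action toward a better life.

The mind evolves through predictable stages (based on Maslow, Greuter, Spiral Dynamics, and others):

Moving through these stages follows a pattern.

"The only real test of intelligence is whether you get the life you want." - Naval Ravikant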

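The formula for success: agency + opportunity + intelligence.

Cybernetics (from kybernetikos, meaning "steering") is "the art of getting what you want."

High intelligence is the ability to iterate, persist, and understand the big picture. Low intelligence is the inability to learn from mistakes.

To become more intelligent:

Real change happens after tension builds up:

Anti-vision:

Vision MVP (minimum viable vision):

Set reminders for these questions:

Treat goals as a lens:

Organize your insights:

The more you play, the stronger the force field that shields you from distraction.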