2026-03-04 00:30:27


Agoda generates and processes millions of financial data points (sales, costs, revenue, and margins) every day.

These metrics are fundamental to daily operations, reconciliation, general ledger activities, financial planning, and strategic evaluation. They not only enable Agoda to predict and assess financial outcomes but also provide a comprehensive view of the company’s overall financial health.

Given the sheer volume of data and the diverse requirements of different teams, the Data Engineering, Business Intelligence, and Data Analysis teams each developed their own data pipelines to meet their specific demands. The appeal of separate data pipeline architectures lies in their simplicity, clear ownership boundaries, and ease of development.

However, Agoda soon discovered that maintaining separate financial data pipelines, each with its own logic and definitions, could introduce discrepancies and inconsistencies that could impact the company’s financial statements. In other words, there was no single source of truth, which is not a good situation for financial data.

In this article, we will look at how the Agoda engineering team built a single source of truth for its financial data and the challenges encountered.

Disclaimer: This post is based on publicly shared details from the Agoda Engineering Team. Please comment if you notice any inaccuracies.

A data pipeline is an automated system that extracts data from source systems, transforms it according to business rules, and loads it into databases where analysts can use it.

The high-level architecture of multiple data pipelines, each owned by different teams, introduced several fundamental problems that affected both data quality and operational efficiency.

First, duplicate sources became a significant issue. Many pipelines pulled data from the same upstream systems independently. This led to redundant processing, synchronization issues, data mismatches, and increased maintenance overhead. When three teams read from the same booking database at slightly different times or with different queries, they waste computational resources and can end up looking at slightly different versions of the same underlying data.

Second, inconsistent definitions and transformations created confusion across the organization. Each team applied its own logic and assumptions to the same data sources. As a result, financial metrics could differ depending on which pipeline produced them. For instance, the Finance team might calculate revenue differently from the Analytics team, leading to conflicting reports when executives ask fundamental questions about business performance. This undermined data reliability and created a lack of trust in the numbers.

Third, there was no centralized monitoring or quality control. Without a unified system for tracking pipeline health and enforcing data standards, each team implemented its own quality checks. This resulted in duplicated effort, inconsistent practices, and delays in identifying and resolving issues. When problems did occur, it was difficult to determine which pipeline was the source of the issue.

See the diagram below:

During a recent review, Agoda observed that differences in data handling and transformation across these pipelines led to inconsistencies in reporting, as well as operational delays.


To overcome these challenges, Agoda developed a centralized financial data pipeline known as FINUDP, which stands for Financial Unified Data Pipeline.

This system delivers both high data availability and data quality. Built on Apache Spark, FINUDP processes all financial data from millions of bookings each day. It makes this data reliably available to downstream teams for reconciliation, ledger, and financial activities.

The architecture consists of several key components.

Source tables contain raw data from upstream systems such as bookings and payments.

The execution layer is where the actual data processing happens, running on Apache Spark for distributed computing power. This layer sends email and Slack alerts when jobs fail and uses an internally developed tool called GoFresh to monitor if data is updated on schedule.

The data lake is where processed data is stored, with validation mechanisms ensuring data quality.

Finally, downstream teams (including Finance, Planning, and Ledger teams) consume the data for their specific needs.

See the diagram below:

For this centralized pipeline, three non-functional requirements stood out.

Data freshness: The pipeline is designed to update data every hour, aligned with strict service level agreements for downstream consumers. To ensure these targets are never missed, Agoda uses GoFresh, which monitors table update timestamps. If an update is delayed, the system flags it, prompting immediate action to prevent any breaches of these agreements.

Reliability: Data quality checks run automatically as soon as new data lands in a table. A suite of automated validation checks investigates each column against predefined rules. Any violation triggers an alert, stopping the pipeline and allowing the team to address data issues before they cascade downstream.

Maintainability: Each change begins with a strong, peer-reviewed design. Code reviews are mandatory, and all changes undergo shadow testing, which runs on real data in a non-production environment for comparison.
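The reliability requirement above (column-level checks that stop the pipeline on a violation) can be sketched roughly as follows. This is a minimal illustration, not Agoda's implementation: the column names, the rules in `RULES`, and the `DataQualityError` exception are all hypothetical.

```python
from typing import Any, Callable

# Hypothetical column rules; the actual FINUDP rules are not public.
RULES: dict[str, Callable[[Any], bool]] = {
    "booking_id": lambda v: v is not None,
    "revenue": lambda v: isinstance(v, (int, float)) and v >= 0,
    "currency": lambda v: v in {"USD", "EUR", "THB"},
}


class DataQualityError(Exception):
    """Raised to halt the pipeline when any rule is violated."""


def validate(rows: list[dict[str, Any]]) -> None:
    """Check every column of every row against its rule; raise on any violation."""
    violations = [
        (i, col)
        for i, row in enumerate(rows)
        for col, rule in RULES.items()
        if not rule(row.get(col))
    ]
    if violations:
        # Raising here stops downstream steps from consuming bad data.
        raise DataQualityError(f"{len(violations)} violation(s): {violations[:5]}")
```

Raising an exception rather than logging a warning mirrors the "stop the pipeline" behavior described above, so bad data does not cascade downstream.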

Agoda implemented several technical practices to ensure the reliability of FINUDP. Understanding these practices provides insight into how production-grade data systems are built and maintained.

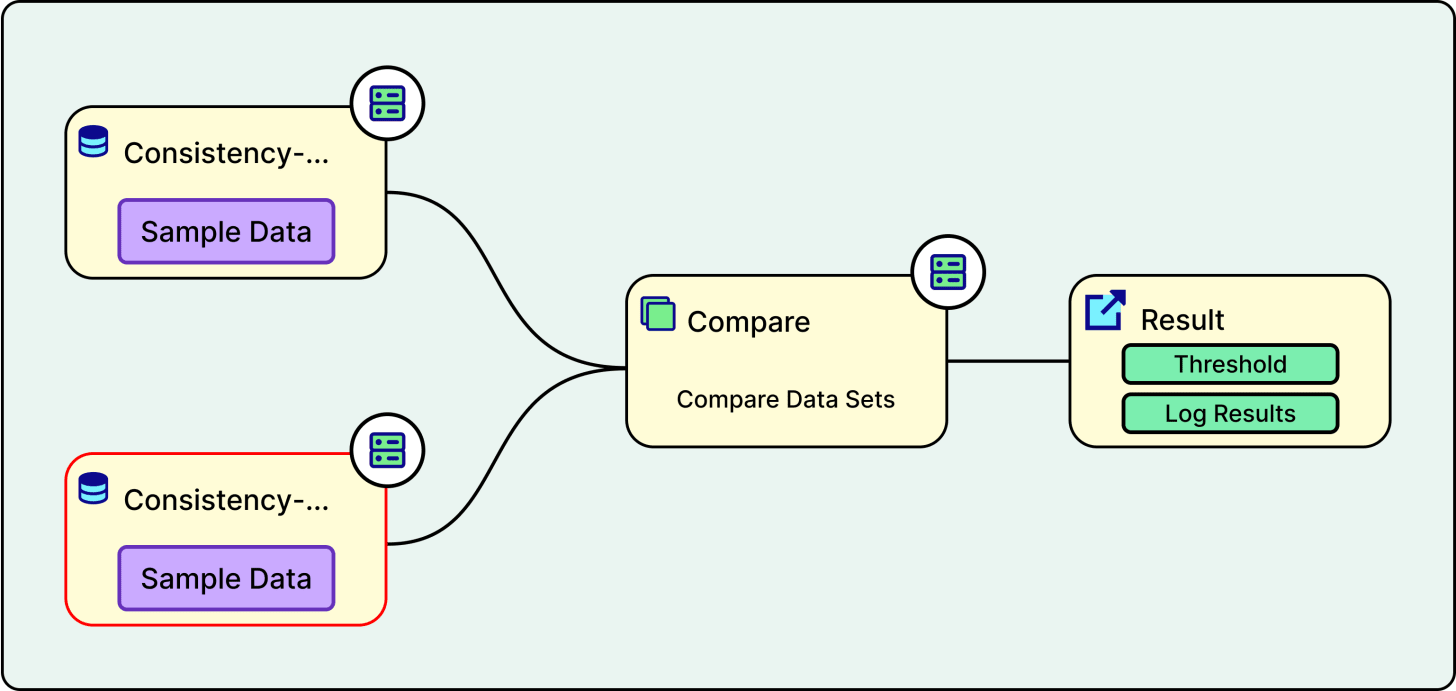

Shadow testing is one of the most important practices. When a developer makes a change to the pipeline code, the system runs both the old version and the new version on the same production data in a test environment. The outputs from both versions are then compared, and a summary of the differences is shared directly within the code review process. This provides reviewers with clear visibility into the impact of proposed changes on the data. It is an excellent way to catch unexpected side effects before they reach production.
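The comparison step of shadow testing can be sketched as a keyed diff between the two outputs. This is an illustrative sketch only: the function name `shadow_diff`, the `booking_id` key, and the row layout are assumptions, and a production version would run on Spark DataFrames rather than Python lists.

```python
def shadow_diff(old_rows: list[dict], new_rows: list[dict], key: str = "booking_id") -> dict:
    """Summarize how the new pipeline version's output differs from the old one."""
    old = {r[key]: r for r in old_rows}
    new = {r[key]: r for r in new_rows}
    return {
        # Rows the new version dropped.
        "only_in_old": sorted(old.keys() - new.keys()),
        # Rows the new version introduced.
        "only_in_new": sorted(new.keys() - old.keys()),
        # Rows present in both whose values changed.
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

A summary like this is the kind of artifact that could be attached to a code review so reviewers see the data impact of a change.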

See the diagram below:

The staging environment serves as a safety net between development and production. It closely mirrors the production setup, allowing Agoda to test new features, pipeline logic, schema changes, and data transformations in a controlled setting before releasing them to all users. By running the full pipeline with realistic data in staging, the team can identify and resolve issues such as data quality problems, integration errors, or performance bottlenecks without risking the integrity of production data. This approach reduces the likelihood of unexpected failures and builds confidence that every change has been thoroughly validated before going live.

Proactive monitoring for data reliability includes several mechanisms.

Daily snapshots are taken to preserve historical states of the data.

Partition count checks ensure that all expected data partitions are present. A partition is a way of dividing large tables into smaller chunks, typically by date, so queries only need to scan relevant portions of the data.

Automated alerts detect spikes in corrupted data and check that row counts align with expectations.
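A partition count check of the kind described above can be sketched as follows, assuming daily date partitions. The function name `missing_partitions` is hypothetical, and in practice the set of present partitions would come from the table's metadata.

```python
from datetime import date, timedelta


def missing_partitions(present: set[date], start: date, end: date) -> list[date]:
    """Return every expected daily partition in [start, end] that is absent."""
    expected = [start + timedelta(days=i) for i in range((end - start).days + 1)]
    return [d for d in expected if d not in present]
```

An empty result means all expected partitions exist; anything else is a candidate for an alert.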

The multi-level alerting system ensures that failures are caught quickly and the right people are notified:

Email alerts are the first level, where any pipeline failure triggers an email to developers via the coordinator, enabling quick awareness and response.

Slack alerts form the second level, where a dedicated backend service monitors all running jobs and sends notifications about successes or failures directly to the development team’s Slack channels, categorized by hourly and daily frequency.

The third level involves GoFresh continuously checking the freshness of specific columns in target tables. If data is not updated within a predetermined timeframe, GoFresh notifies the Network Operations Center, a 24/7 support team that monitors all critical Agoda alerts, notifies the correct team, and coordinates emergency response sessions.
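The freshness check at the third level boils down to comparing a table's last update time against an allowed age. GoFresh is internal to Agoda and its interface is not public, so the sketch below is an assumption-laden illustration: `is_stale` and the one-hour default (taken from the hourly update target mentioned earlier) are hypothetical.

```python
from datetime import datetime, timedelta


def is_stale(
    last_updated: datetime,
    now: datetime,
    max_age: timedelta = timedelta(hours=1),  # assumed from the hourly update target
) -> bool:
    """Return True when the table has not been updated within the allowed window."""
    return now - last_updated > max_age
```

Passing `now` in explicitly, instead of calling `datetime.now()` inside the function, keeps the check easy to test.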

Data integrity is verified using a third-party data quality tool called Quilliup. Agoda executes predefined test cases that utilize SQL queries to compare data in target tables with their respective source tables. Quilliup measures the variation between source and target data and alerts the team if the difference exceeds a set threshold. This ensures consistency between the original data and its downstream representation.
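The source-versus-target comparison can be illustrated in plain Python. This does not use or reproduce Quilliup's API; the function names and the 0.1% default threshold are assumptions, and the two totals stand in for the results of SQL aggregates run against the source and target tables.

```python
def relative_variation(source_total: float, target_total: float) -> float:
    """Relative difference between a source aggregate and its target counterpart."""
    if source_total == 0:
        return 0.0 if target_total == 0 else float("inf")
    return abs(target_total - source_total) / abs(source_total)


def exceeds_threshold(source_total: float, target_total: float, threshold: float = 0.001) -> bool:
    """Return True when the variation is large enough to alert the team."""
    return relative_variation(source_total, target_total) > threshold
```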

Data contracts establish formal agreements with upstream teams that provide source data. These contracts define required data rules and structure. If incoming source data violates the contract, the source team is immediately alerted and asked to resolve the issue. There are two types of data contracts.

Detection contracts monitor real production data and alert when violations occur.

Preventative contracts are integrated into the continuous integration pipelines of upstream data producers, catching issues before data is even published.
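A data contract check can be sketched as a schema comparison. The `CONTRACT` fields and the `contract_violations` function are hypothetical; real contracts would also cover value rules, not just field presence and types.

```python
# Hypothetical contract: required fields and their expected types.
CONTRACT = {"booking_id": int, "amount": float, "currency": str}


def contract_violations(record: dict) -> list[str]:
    """List every way a record breaks the contract (empty list means compliant)."""
    problems = []
    for field, expected_type in CONTRACT.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems
```

The same function could serve both contract types: run against production records for detection, or run inside the upstream producer's CI tests for prevention.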

Lastly, anomaly detection utilizes machine learning models to monitor data patterns and identify unusual fluctuations or spikes in the data. When anomalies are detected, the team investigates the root cause and provides feedback to improve model accuracy, distinguishing between valid alerts and false positives.
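The idea of flagging unusual fluctuations can be shown with a simple statistical stand-in. Note that this is a z-score check, not the machine learning models Agoda uses, whose details are not public; the function name and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def is_anomaly(history: list[float], value: float, z_threshold: float = 3.0) -> bool:
    """Flag a value that sits far from the historical mean (simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    sigma = stdev(history)
    if sigma == 0:
        return value != history[0]  # any change from a constant series is unusual
    return abs(value - mean(history)) / sigma > z_threshold
```

Feedback on false positives, as described above, would correspond here to tuning `z_threshold` or the history window.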

Throughout the journey of building FINUDP and migrating multiple data pipelines into one, Agoda encountered several key challenges:

Stakeholder management proved to be more complex than anticipated. Centralizing multiple data pipelines into a single platform meant that each output still served its own set of downstream consumers. This created a broad and diverse stakeholder landscape across product, finance, and engineering teams.

Performance issues emerged as a significant technical challenge. Bringing together several large pipelines and consolidating high-volume datasets into FINUDP initially incurred a performance cost. The end-to-end runtime was approximately five hours, which was far from ideal for daily financial reporting. Through a series of optimization cycles covering query tuning, partitioning strategy, pipeline orchestration, and infrastructure adjustments, Agoda was able to reduce the runtime from five hours to approximately 30 minutes.

Data inconsistency was perhaps the most conceptually challenging problem. Each legacy pipeline had evolved with its own set of assumptions, business rules, and data definitions. When these were merged into FINUDP, those differences surfaced as data inconsistencies and mismatches. To address this, Agoda went back to first principles. The team documented the intended meaning of each key metric and field, conducted detailed gap analyses comparing the outputs of different pipelines, and facilitated workshops with stakeholders to agree on a single definition for each dataset.

Centralizing data pipelines came with clear benefits but also required navigating key trade-offs between competing priorities:

The most significant trade-off involved velocity. In the previous decentralized approach, independent small datasets could move through their separate pipelines without waiting on each other. With the centralized setup, Agoda established data dependencies to ensure that all upstream datasets are ready before proceeding with the entire data pipeline.

Data quality requirements also affected development speed. Since the unified pipeline includes multiple data sources and components, changes to any component require testing and a data quality check of the whole pipeline. This slows development velocity, but it is a trade-off accepted in exchange for the numerous benefits of having a unified pipeline, including consistency, reliability, and a single source of truth.

Data governance became more rigorous and time-consuming. Migrating to a single pipeline required that every transformation be meticulously documented and reviewed. Since financial data is at stake, full alignment and approval from all stakeholders are needed before changing upstream processes. The need for thorough vetting and consensus slowed implementation, but ultimately built a foundation for trust and integrity in the new unified system. In essence, centralization increases reliability and transparency, but it demands tighter coordination and careful change management at every step.

Consolidating financial data pipelines at Agoda has made a real difference in how the company handles and trusts its financial metrics.

Through FINUDP, Agoda has established a single source of truth for all financial metrics. By introducing centralized monitoring, automated testing, and robust data quality checks, the team has significantly improved both the reliability and availability of data. This setup means downstream teams always have access to accurate and consistent information.

Last year, the data pipeline achieved 95.6% uptime (with a goal to reach 99.5% data availability). Maintaining such high data standards is always a work in progress, but with these systems in place, Agoda is better equipped to catch issues early and collaborate across teams.


2026-03-03 00:30:50


In December 2024, DeepSeek released V3 with the claim that they had trained a frontier-class model for $5.576 million. They used an attention mechanism called Multi-Head Latent Attention that slashed memory usage. An expert routing strategy avoided the usual performance penalty. Aggressive FP8 training cut costs further.

Within months, Moonshot AI’s Kimi K2 team openly adopted DeepSeek’s architecture as their starting point, scaled it to a trillion parameters, invented a new optimizer to solve a training stability challenge that emerged at that scale, and competed with it across major benchmarks.

Then, in February 2026, Zhipu AI’s GLM-5 integrated DeepSeek’s sparse attention mechanism into their own design while contributing a novel reinforcement learning framework.

This is how the open-weight ecosystem actually works: teams build on each other’s innovations in public, and the pace of progress compounds. To understand why, you need to look at the architecture.

In this article, we will cover various open-source models and the engineering bets that define each one.

Every major open-weight LLM released at the frontier in 2025 and 2026 uses a Mixture-of-Experts (MoE) transformer architecture.

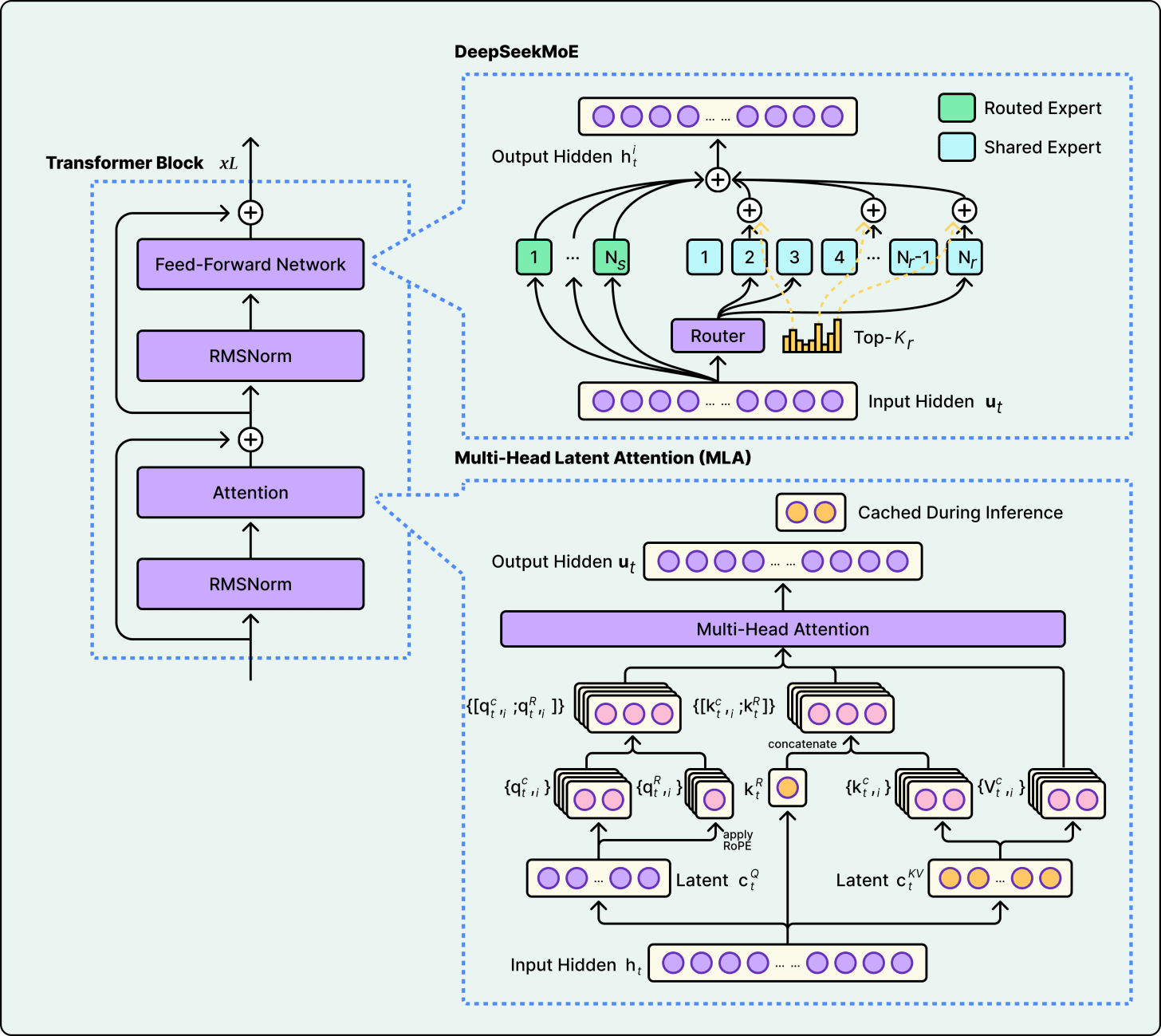

See the diagram below that shows the concept of the MoE architecture:

The reason is that dense transformers activate all parameters for every token. Making a dense model smarter means adding parameters, and the computational cost per token scales linearly with the parameter count. With hundreds of billions of parameters, this becomes prohibitive.

MoE solves this by replacing the monolithic feed-forward layer in each transformer block with multiple smaller “expert” networks and a learned router that decides which experts handle each token. The result is a model that can, for example, store the knowledge of 671 billion parameters but only compute 37 billion per token.

This is why two numbers matter for every model:

Total parameters (memory footprint, knowledge capacity)

Active parameters (inference speed, per-token cost).

Think of a specialist hospital with 384 doctors on staff, but only 8 in the room for any given patient. You benefit from the knowledge of 384 specialists while only paying for 8 at a time. The triage nurse (the router) decides who gets called.

That’s also why a trillion-parameter model and a 235-billion-parameter model cost roughly the same per query. For example, Kimi K2 activates 32 billion parameters per token, while Qwen3 activates 22 billion. In other words, you’re comparing the active counts, not the totals.
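The routing idea can be sketched in a few lines. This is one common formulation (pick the top-k experts by router score, then softmax-normalize their weights), not the exact gating used by any particular model discussed here; `top_k_route` and `moe_forward` are illustrative names, and the "experts" are toy scalar functions.

```python
import math
from typing import Callable


def top_k_route(router_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(
    x: float,
    experts: list[Callable[[float], float]],
    router_logits: list[float],
    k: int,
) -> float:
    """Run only the selected experts and combine their outputs by router weight."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())
```

Only `k` experts execute per token, which is why compute tracks the active count. With the figures above, 37 billion active out of 671 billion total is roughly 5.5% of the parameters per token.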


Almost every model marketed as “open source” is technically open weight. This means that the trained parameters are public, but the training data and often the full training code are not. In traditional software, however, “open source” means code is available, modifiable, and redistributable.

What does this mean in practice?

You can download, fine-tune, and commercially deploy all six of these models. However, you cannot see or audit their training data, and you cannot reproduce their training runs from scratch. For most engineering teams, the first part is what matters. But the distinction is worth knowing.

The license landscape also varies. DeepSeek V3 and GLM-5 use the MIT license. Qwen3 and Mistral Large 3 use Apache 2.0. Both are fully permissive for commercial use. Kimi K2 uses a modified MIT license. Llama 4 uses a custom community license that restricts usage for companies with over 700 million monthly users and prohibits using the model to train competing models.

Transparency also varies. Some teams publish detailed technical reports with architecture diagrams, ablation studies, and hyperparameters. Others provide weights and a blog post with less architectural detail. More transparency enables the “borrow and build” dynamic described above, which is part of why it works.

Every time a model generates a token, it needs to “remember” keys and values for all previous tokens in the conversation. This storage, called the KV-cache, grows linearly with sequence length and becomes a memory bottleneck for long contexts. Models use one of three main strategies to deal with it.

Grouped-Query Attention (GQA) shares key-value pairs across groups of query heads. It’s the industry default, offering straightforward implementation and moderate memory savings. Qwen3 and Llama 4 both use GQA.

Multi-Head Latent Attention (MLA) compresses key-value pairs into a low-dimensional latent space before caching, then decompresses when needed. It was introduced in DeepSeek V2 and used in both DeepSeek V3 and Kimi K2. MLA saves more memory than GQA but adds computational overhead for the compress/decompress step.

Sparse Attention skips attending to all previous tokens and instead selects the most relevant ones. DeepSeek introduced DeepSeek Sparse Attention (DSA) in V3.2, and GLM-5 openly adopted DSA in its architecture. Since sparse attention optimizes the attention layers while MoE optimizes the feed-forward layers, the two techniques compound. Therefore, GLM-5 benefits from both.

See the diagram below that shows DeepSeek’s Multi-Head Latent Attention approach:

The tradeoff comes down to what matters most in your deployment. GQA is simpler and proven. MLA is more memory-efficient but more complex to engineer. Sparse attention reduces compute for long contexts but requires careful design to avoid missing important tokens. The strategy a model chooses depends on whether the bottleneck is memory, compute, or context length.
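To make the memory side of this tradeoff concrete, here is a back-of-the-envelope KV-cache calculation for standard multi-head attention versus GQA. The configuration (32 layers, 32 query heads, head dimension 128, 8,192 tokens, 2 bytes per value) is hypothetical and does not describe any of the models above; MLA and sparse attention are not modeled.

```python
def kv_cache_bytes(
    layers: int,
    kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_value: int = 2,  # e.g. 16-bit floats
) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one tensor for keys, one for values.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model: every query head has its own KV head (standard attention).
mha = kv_cache_bytes(layers=32, kv_heads=32, head_dim=128, seq_len=8192)
# Same model with GQA: 32 query heads share 8 KV heads.
gqa = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=8192)
```

Under these assumptions the cache shrinks from 4 GiB to 1 GiB per sequence, in direct proportion to the number of KV heads.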

The six models range from 16 to 384 experts, reflecting a fundamental disagreement about how far sparsity should be pushed.

Kimi K2’s technical report finds that, at a fixed compute budget, increasing the number of experts can improve both training and validation loss. However, the report also notes that this gain comes with increased infrastructure complexity. More total experts means more total parameters stored in memory, and Kimi K2’s trillion parameters require a multi-GPU cluster regardless of how few fire per token. By contrast, Llama 4 Scout’s 109 billion total parameters can fit on a single high-memory server.

Two other design choices stand out:

First, the shared expert question. DeepSeek V3, Llama 4, and Kimi K2 include a shared expert that processes every token, providing a baseline capability floor. Qwen3’s technical report notes that, unlike their earlier Qwen2.5-MoE, they dropped the shared expert, but doesn’t disclose why. There is no consensus in the field on whether shared experts are worth the compute cost.

Second, Llama 4 takes a unique approach. Rather than making every layer MoE, Llama 4 alternates between dense and MoE layers, and routes each token to only 1 expert (plus the shared) rather than 8. This means fewer active experts per token, but each expert is larger.

See the diagram below that shows Llama’s approach:

Architecture determines capacity, but training determines what a model actually does with it.

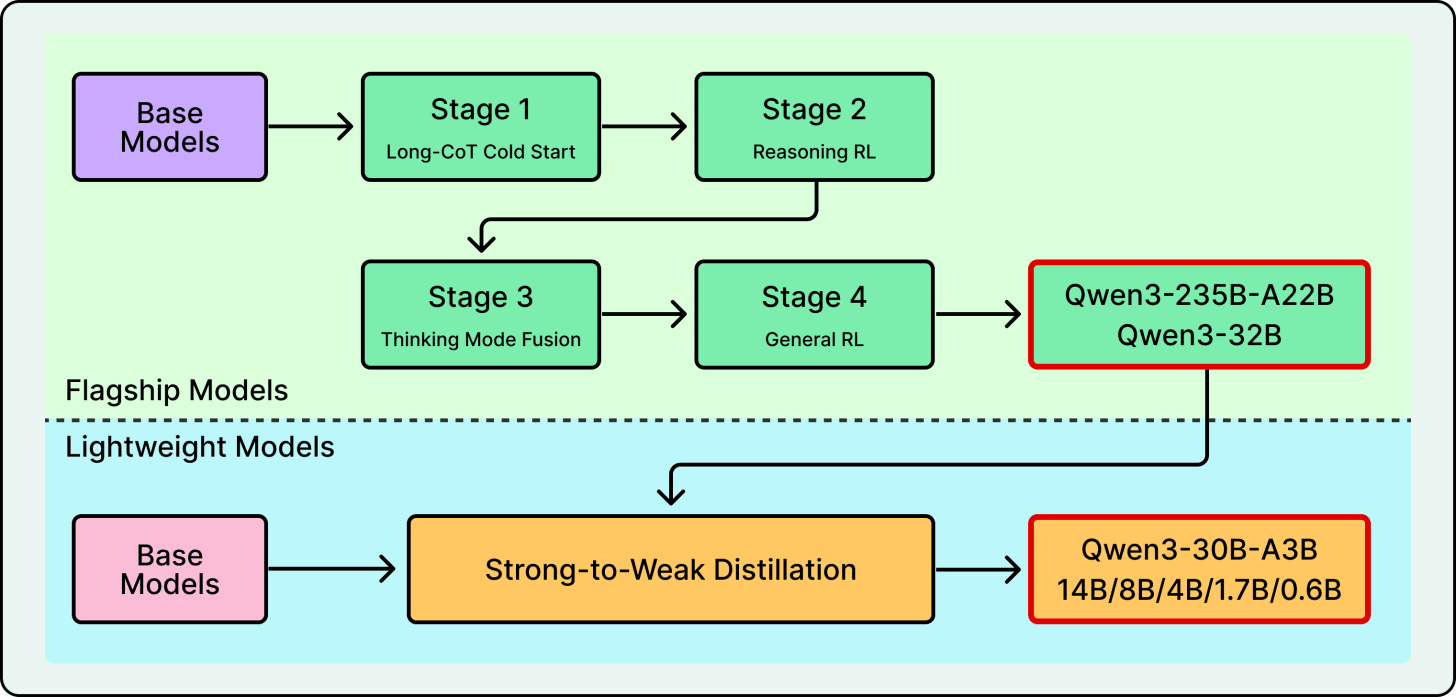

Pre-training, where the model learns by predicting the next token across trillions of tokens, gives the model its base knowledge. The scale varies (14.8 trillion tokens for DeepSeek V3, up to 36 trillion for Qwen3), but the approach is similar. Post-training is where models diverge, and it’s now the primary differentiator.

Reinforcement learning with verifiable rewards checks whether the model’s output is objectively correct.

Did the code compile? Is the math answer right? The model is rewarded for correct outputs and penalized for wrong ones. This was the breakthrough behind DeepSeek R1, and elements of it were distilled into DeepSeek V3.
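A verifiable reward is simply a function that checks the output programmatically. The sketch below is illustrative: the function names and the +1/-1 reward values are assumptions, exact string matching is a simplification of real answer checking, and Python's built-in `compile` only verifies syntax without running the code.

```python
def math_reward(model_answer: str, reference: str) -> float:
    """Reward an answer that matches the known-correct reference exactly."""
    return 1.0 if model_answer.strip() == reference.strip() else -1.0


def code_reward(source: str) -> float:
    """Reward Python source that compiles; penalize source with syntax errors."""
    try:
        compile(source, "<model_output>", "exec")  # parses only, does not execute
    except SyntaxError:
        return -1.0
    return 1.0
```

Because the check is mechanical, no human labeling or learned reward model is needed for these tasks.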

Distillation from a larger teacher trains a massive model and uses its outputs to teach smaller ones. Llama 4 co-distilled from Behemoth, a 2-trillion-parameter teacher model, during pre-training itself. Qwen3 distills from its flagship down to smaller models in the family.

See the diagram below that shows Qwen’s post-training flow:

Synthetic agentic data involves building simulated environments loaded with real tools like APIs, shells, and databases, then rewarding the model for completing tasks in those environments. For example, Kimi K2’s technical report describes a large-scale pipeline that systematically generates tool-use demonstrations across simulated and real-world environments.

Novel RL infrastructure can be a contribution in itself. GLM-5 developed “Slime,” a new asynchronous reinforcement learning framework that improves training throughput for post-training, enabling more iterations within the same compute budget.

Training stability also deserves attention here. At this scale, a single training crash can waste days of GPU time. To counter this, Kimi K2 developed the MuonClip optimizer specifically to prevent exploding attention logits, enabling them to train on 15.5 trillion tokens without a single loss spike. DeepSeek V3 similarly reported zero irrecoverable loss spikes across its entire training run. These engineering contributions may prove more reusable than any particular architectural choice.

Architectures are converging. Everyone is building MoE transformers. Training approaches are diverging, with teams placing different bets on reinforcement learning, distillation, synthetic data, and new optimizers.

The specific models covered here will be overtaken in months. However, the framework for evaluating them likely won’t change.

The important questions stay the same. What is the active parameter count, not just the total? What attention tradeoff did the team make, and does it match your context-length needs? How many experts fire per token, and can your infrastructure handle the total? How was the model post-trained, and does that approach align with your use case? What does the license actually permit?


2026-03-01 00:30:26


This week’s system design refresher:

11 Ways To Use AI To Increase Your Productivity

AI Topics to Learn before Taking AI/ML Interviews

PostgreSQL versus MySQL

Why AI Needs GPUs and TPUs?

Network Protocols Explained

AI is changing how we work. People who use AI get more done in less time. You do not need to code. You need to know which tool to use and when.

For example, instead of reading long technical blogs, you can upload them to Google’s NotebookLM and ask it to summarize the key points.

Or you can use Otter.ai to turn meeting transcripts into action items, decisions, and highlights.

Here is a list of 19 tools that can speed up your daily workflow across different areas. Save this for the next time you feel stuck getting started.

Over to you: What’s the underrated AI tool that others might not know about?

AI interviews often test fundamentals, not tools. This visual splits them into two buckets that show up repeatedly: Traditional AI and Modern AI.

Traditional AI focuses on fundamental ML topics, mostly from before neural networks became dominant.

Modern AI focuses on neural network foundations and newer concepts like transformers, RAG, and post-training.

Interviewers generally expect you to know both. They expect you to explain how they work, when they break, and the trade-offs.

Use this as a checklist, and make sure you can explain each topic clearly before your next AI interview.

Over to you: which topic here do you find hardest to explain under interview pressure? What else is missing?

Built using the C language, PostgreSQL uses a process-based architecture.

You can think of it like a factory with a manager (Postmaster) coordinating specialized workers. Each connection gets its own process and shares a common memory pool. Background workers handle tasks like writing data, vacuuming, and logging independently.

MySQL takes a thread-based approach. Imagine a single multi-tasking brain.

It uses a layered design with one server handling multiple connections through threads. The magic happens using pluggable storage engines (such as InnoDB, MyISAM) that you can swap based on your needs.

Over to you: Which database do you prefer?

When AI processes data, it’s essentially multiplying massive arrays of numbers. This can mean billions of calculations happening simultaneously.

CPUs handle such calculations largely sequentially. To perform any calculation, the CPU must fetch an instruction, retrieve data from memory, execute the operation, and write results back. This constant transfer of information between the processor and memory is not very efficient. It’s like having one very smart person solve a giant puzzle alone.

GPUs change the game with parallel processing. They split the work across thousands of cores that run at the same time, sharply reducing processing time.

TPUs take it even further with a systolic array architecture that’s thousands of times faster than CPUs. Each unit in a TPU multiplies its stored weight by incoming data, adds the product to a running sum flowing vertically, and passes both values to its neighbors. This cuts down on I/O costs and reduces processing times even further.
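The per-unit behavior described above can be simulated in software. This is a sequential toy model under stated assumptions: cell `(i, j)` stores `weights[i][j]`, input `x[i]` flows across row `i`, and the running sum flows down column `j`. Real hardware performs these steps in parallel and in a pipelined fashion, which this sketch does not capture.

```python
def systolic_matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Simulate the multiply-accumulate flow of a weight-stationary systolic array."""
    rows, cols = len(weights), len(weights[0])
    outputs = []
    for j in range(cols):
        running_sum = 0.0  # enters the top of column j
        for i in range(rows):
            # Cell (i, j): multiply the stored weight by the incoming value,
            # add it to the running sum, and pass the sum down the column.
            running_sum += weights[i][j] * x[i]
        outputs.append(running_sum)  # exits the bottom of column j
    return outputs
```

Because partial sums move directly from cell to cell, intermediate results never travel back to main memory, which is the I/O saving the text refers to.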

Over to you: What else will you add to explain the need for GPUs and TPUs to run AI workloads?

Every time you type a URL and hit Enter, half a dozen network protocols quietly come to life. We usually talk about HTTP or HTTPS, but that’s just the tip of the iceberg. Under the hood, the web runs on a carefully layered stack of protocols, each solving a very specific problem.

At the top is HTTP. It defines the request-response model that powers browsers, APIs, and microservices. Simple, stateless, and everywhere.

When security is added, HTTP becomes HTTPS, wrapping every request in TLS so data is encrypted and the server is authenticated before anything meaningful is exchanged.

Before any of that can happen, DNS steps in. Humans think in domain names, machines think in IP addresses. DNS bridges that gap, resolving names into routable IPs so packets know where to go.

Then comes the transport layer. TCP sets up a connection, performs the three-way handshake, retransmits lost packets, and ensures everything arrives in order. It’s reliable, but that reliability comes with overhead.

UDP skips all of that. No handshakes, no guarantees, just fast datagrams. That’s why it’s used for streaming, gaming, and newer protocols like QUIC.

At the bottom sits IP. This is the postal system of the internet. It doesn’t care about reliability or order. Its only job is to move packets from one network to another through routers, hop by hop, until they reach the destination.

Each layer is deliberately limited in scope. DNS doesn’t care about encryption. TCP doesn’t care about HTTP semantics. IP doesn’t care if packets arrive at all. That separation is exactly why the internet scales.
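The layering can be made visible by doing each step by hand with Python's standard library: DNS lookup, TCP connection, TLS wrapping, then an HTTP request. This is a minimal sketch for illustration; the function names are hypothetical, and real clients handle redirects, multiple addresses, and partial reads.

```python
import socket
import ssl


def build_request(host: str, path: str = "/") -> bytes:
    """HTTP layer: a minimal GET request as raw bytes."""
    return f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n".encode("ascii")


def fetch_status_line(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Walk down the stack manually and return the first line of the response."""
    # DNS: resolve the name to an IP address.
    ip = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0][4][0]
    # TCP: the three-way handshake happens inside create_connection.
    with socket.create_connection((ip, port), timeout=timeout) as tcp:
        # TLS: encrypt the channel and authenticate the server by hostname.
        context = ssl.create_default_context()
        with context.wrap_socket(tcp, server_hostname=host) as tls:
            # HTTP: send the request over the encrypted channel.
            tls.sendall(build_request(host))
            response = tls.recv(4096)
    return response.split(b"\r\n", 1)[0].decode("ascii", errors="replace")
```

Calling `fetch_status_line("example.com")` requires network access. Each step uses only the layer below it, which matches the separation of concerns described above.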

Over to you: When something breaks, which layer do you usually blame first, DNS, TCP, or the application itself?

2026-02-27 00:30:39

If a database stores data on three servers in three different cities, and we write a new value, when exactly is that write “done”? Does it happen when the first server saves it? Or when all three have it? Or when two out of three confirm?

The answer to this question is quite important. Consider a simple bank transfer where we move $500 from savings to checking. We see the updated balance on our phone. But our partner, checking the same account from their laptop in another city, still sees the old balance. For a few seconds, the household has two different versions of the truth. For something similar to a like count on social media, that kind of temporary disagreement is harmless. For a bank balance, it’s a different story.

The guarantee that every reader sees the most recent write, no matter where or when they read, is what distributed systems engineers call strong consistency. It sounds straightforward. Making it work across machines, data centers, and continents is one of the hardest problems in distributed systems, because it requires those machines to coordinate, and coordination has a cost governed by physics.

In a previous article, we looked at eventual consistency. In this article, we will look at what strong consistency actually means, how systems deliver it, and what it really costs.

2026-02-26 00:30:28

Context engineering is the new critical layer in every production AI app, and Redis is the real-time context engine powering it. Redis gathers, syncs, and serves the right mix of memory, knowledge, tools, and state for each model call, all from one unified platform. Search across RAG, short- and long-term memory, and structured and unstructured data without stitching together a fragile multi-tool stack. With 30+ agent framework integrations across OpenAI, LangChain, Bedrock, NVIDIA NIM, and more, Redis fits the stack your teams are already building on. Accurate, reliable AI apps that scale. Built on one platform.

Every time we open X (formerly Twitter) and scroll through the “For You” tab, a recommendation system is deciding which posts to show and in what order. This recommendation system works in real-time.

In the world of social media, this is a big deal because any latency issues can cause user dissatisfaction.

Until now, the internal workings of this recommendation system were more or less a mystery. However, recently, the xAI engineering team open-sourced the algorithm that powers this feed, publishing it on GitHub under an Apache-2.0 license. It reveals a system built on a Grok-based transformer model that has replaced nearly all hand-crafted rules with machine learning.

In this article, we will look at what the algorithm does, how its components fit together, and why the xAI Engineering Team made the design choices they did.

Disclaimer: This post is based on publicly shared details from the xAI Engineering Team. Please comment if you notice any inaccuracies.

When you request the For You feed in X, the algorithm draws from two separate sources of content:

The first source is called in-network content. These are posts from accounts you already follow. If you follow 200 people, the system looks at what those 200 people have posted recently and considers them as candidates for your feed.

The second source is called out-of-network content. These are posts from accounts you do not follow. The algorithm discovers them by searching across a global pool of posts using a machine learning technique called similarity search. The idea behind this is that if your past behavior suggests you would find a post interesting, that post becomes a candidate even if you have never heard of the author.

Both sets of candidates are then merged into a single list, scored, filtered, and ranked. The top-ranked posts are what you see when you open the app.

The diagram below shows the overall architecture of the system built by the xAI engineering team:

The codebase is organized into four main directories, each representing a distinct part of the system. The entire codebase is written in Rust (62.9%) and Python (37.1%).

Home Mixer is the orchestration layer. It acts as the coordinator that calls the other components in the right order and assembles the final feed. It is not doing the heavy ML work itself, but just managing the pipeline.

When a request comes in, Home Mixer kicks off several stages in sequence:

Fetching user context

Retrieving candidate posts

Enriching those posts with metadata

Filtering out the ineligible ones, scoring the survivors

Selecting the top results and running final checks.

The server exposes a gRPC endpoint called ScoredPostsService that returns the ranked list of posts for a given user.

Thunder is an in-memory post store and real-time ingestion pipeline. It consumes post creation and deletion events from Kafka and maintains per-user stores for original posts, replies, reposts, and video posts.

When the algorithm needs in-network candidates, it queries Thunder, which can return results in sub-millisecond time because everything lives in memory rather than in an external database. Thunder also automatically removes posts that are older than a configured retention period, keeping the data set fresh.
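As a rough illustration of the idea, here is a small Python sketch of an in-memory, per-author post store with retention-based trimming. Thunder itself is a separate implementation; the class name, method names, and the assumption that events arrive in timestamp order per author are all hypothetical.

```python
import time
from collections import defaultdict, deque


class InMemoryPostStore:
    """Per-author store of (timestamp, post_id) pairs, oldest first.

    Assumes create events for one author arrive in timestamp order.
    """

    def __init__(self, retention_seconds):
        self.retention_seconds = retention_seconds
        self._posts = defaultdict(deque)      # author_id -> deque of (ts, post_id)

    def on_create(self, author_id, post_id, ts):
        self._posts[author_id].append((ts, post_id))

    def on_delete(self, author_id, post_id):
        kept = deque(p for p in self._posts[author_id] if p[1] != post_id)
        self._posts[author_id] = kept

    def _trim(self, author_id, now):
        # Drop posts older than the retention period from the front of the deque.
        posts = self._posts[author_id]
        while posts and now - posts[0][0] > self.retention_seconds:
            posts.popleft()

    def in_network_candidates(self, following, now=None):
        now = time.time() if now is None else now
        candidates = []
        for author_id in following:
            self._trim(author_id, now)
            candidates.extend(post_id for _, post_id in self._posts[author_id])
        return candidates


store = InMemoryPostStore(retention_seconds=3600)
store.on_create("alice", "p1", ts=0)
store.on_create("alice", "p2", ts=3000)
store.on_create("bob", "p3", ts=3500)
store.on_delete("bob", "p3")
print(store.in_network_candidates(["alice", "bob"], now=4000))  # ['p2']
```

Here `p1` has aged out and `p3` was deleted, so only `p2` remains a candidate.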

Phoenix is the ML brain of the system. It has two jobs: retrieval (finding out-of-network candidates) and ranking (scoring every candidate).

Phoenix uses a two-tower model to find out-of-network posts:

One tower (the User Tower) takes your features and engagement history and encodes them into a mathematical representation called an embedding.

The other tower (the Candidate Tower) encodes every post into its own embedding.

Finding relevant posts then becomes a similarity search. The system computes a dot product between your user embedding and each candidate embedding and retrieves the top-K most similar posts. If you are unfamiliar with dot products, the core idea is that two embeddings that “point in the same direction” in a high-dimensional space produce a high score, meaning the post is likely relevant to you.
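The dot-product retrieval step can be sketched in a few lines of Python. This is a toy version that scans every candidate with plain lists; the function names and the three-dimensional embeddings are made up for illustration, and a system at X's scale would likely use a specialized index rather than a full scan.

```python
import heapq


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def top_k(user_embedding, candidate_embeddings, k):
    """Return the k (post_id, score) pairs with the highest dot product."""
    scored = ((post_id, dot(user_embedding, emb))
              for post_id, emb in candidate_embeddings.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


user = [0.9, 0.1, 0.0]
candidates = {
    "post_a": [1.0, 0.0, 0.0],    # points roughly the same way as the user
    "post_b": [0.0, 1.0, 0.0],    # mostly unrelated direction
    "post_c": [-1.0, 0.0, 0.0],   # points the opposite way
}
print([post_id for post_id, _ in top_k(user, candidates, k=2)])  # ['post_a', 'post_b']
```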

See the diagram below that shows the concept of embeddings:

Once candidates have been retrieved from both Thunder and Phoenix’s retrieval step, Phoenix runs a Grok-based transformer model to predict how likely you are to engage with each post.

See the diagram below that shows the concept of a transformer model:

The transformer implementation is ported from the Grok-1 open source release by xAI, adapted for recommendation use cases. It takes your engagement history and a batch of candidate posts as input and outputs a probability for each type of engagement action.

The Candidate Pipeline is a reusable framework that defines the structure of the whole recommendation process.

It provides traits (interfaces, in Rust terminology) for each stage of the pipeline:

Source (fetch candidates)

Hydrator (enrich candidates with extra data)

Filter (remove ineligible candidates)

Scorer (compute scores)

Selector (sort and pick the top candidates)

SideEffect (run asynchronous tasks like caching and logging).

The framework runs independent stages in parallel where possible and includes configurable error handling. This modular design makes it straightforward for the xAI Engineering Team to add new data sources or scoring models without rewriting the pipeline logic.
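To show what such a stage-based framework looks like, here is a simplified Python analogue using `typing.Protocol` in place of Rust traits. The method names (`fetch`, `keep`, `score`, `select`) and the toy stage classes are assumptions, not the names in the xAI repository. The sketch runs stages sequentially and leaves out parallelism, error handling, and the SideEffect stage.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Candidate:
    post_id: str
    author_id: str
    score: float = 0.0
    metadata: dict = field(default_factory=dict)


class Source(Protocol):
    def fetch(self, user_id: str) -> list[Candidate]: ...

class Hydrator(Protocol):
    def hydrate(self, candidates: list[Candidate]) -> None: ...

class Filter(Protocol):
    def keep(self, user_id: str, candidate: Candidate) -> bool: ...

class Scorer(Protocol):
    def score(self, user_id: str, candidates: list[Candidate]) -> None: ...

class Selector(Protocol):
    def select(self, candidates: list[Candidate]) -> list[Candidate]: ...


def run_pipeline(user_id, sources, hydrators, filters, scorers, selector):
    """Execution logic only: it knows the stage order, not what any stage does."""
    candidates = [c for source in sources for c in source.fetch(user_id)]
    for hydrator in hydrators:
        hydrator.hydrate(candidates)
    candidates = [c for c in candidates if all(f.keep(user_id, c) for f in filters)]
    for scorer in scorers:
        scorer.score(user_id, candidates)
    return selector.select(candidates)


# Toy stage implementations (hypothetical business logic).
class StaticSource:
    def __init__(self, candidates):
        self._candidates = candidates
    def fetch(self, user_id):
        return list(self._candidates)

class NotAuthoredByViewer:
    def keep(self, user_id, candidate):
        return candidate.author_id != user_id

class TableScorer:
    def __init__(self, scores):
        self._scores = scores
    def score(self, user_id, candidates):
        for c in candidates:
            c.score = self._scores.get(c.post_id, 0.0)

class TopK:
    def __init__(self, k):
        self._k = k
    def select(self, candidates):
        return sorted(candidates, key=lambda c: c.score, reverse=True)[: self._k]
```

Adding a new source or filter means writing one more small class and passing it in; `run_pipeline` itself does not change.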

Here is the full sequence that runs every time you open the For You feed:

Query Hydration: The system fetches your recent engagement history (what you liked, replied to, and reposted) and your metadata, such as your following list.

Candidate Sourcing: Thunder provides recent posts from accounts you follow. Phoenix Retrieval provides ML-discovered posts from the global corpus.

Candidate Hydration: Each candidate post is enriched with additional information: its text and media content, the author’s username and verification status, video duration if applicable, and subscription status.

Pre-Scoring Filters: Before any scoring happens, the system removes posts that are duplicates, too old, authored by you, from accounts you have blocked or muted, containing keywords you have muted, posts you have already seen, or ineligible subscription content.

Scoring: The remaining candidates pass through multiple scorers in sequence. First, the Phoenix Scorer gets ML predictions from the transformer. Then, the Weighted Scorer combines those predictions into a single relevance score. Next, an Author Diversity Scorer reduces the score of posts from repeated authors so your feed is not dominated by one person. Finally, an OON (out-of-network) Scorer adjusts scores for posts from accounts you do not follow.

Selection: Posts are sorted by their final score, and the top K are selected.

Post-Selection Filters: A final round of checks removes posts that have been deleted, flagged as spam, or identified as containing violent or graphic content. A conversation deduplication filter also ensures you do not see multiple branches of the same reply thread.
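One step in this sequence that benefits from a concrete picture is the Author Diversity Scorer. The sketch below shows one plausible way to penalize repeated authors: each additional post from the same author has its score multiplied by a decay factor. The decay rule, the `decay=0.5` value, and the function name are assumptions; the source only says that scores of posts from repeated authors are reduced. The sketch also assumes non-negative scores.

```python
from collections import Counter


def apply_author_diversity(candidates, decay=0.5):
    """candidates: list of (post_id, author_id, score) tuples.

    Walking candidates from highest to lowest score, the n-th post
    (0-based) from the same author is multiplied by decay ** n.
    Returns the candidates re-sorted by adjusted score.
    """
    seen = Counter()
    adjusted = []
    ranked = sorted(candidates, key=lambda c: c[2], reverse=True)
    for post_id, author_id, score in ranked:
        adjusted.append((post_id, author_id, score * decay ** seen[author_id]))
        seen[author_id] += 1
    return sorted(adjusted, key=lambda c: c[2], reverse=True)


feed = [("p1", "alice", 0.9), ("p2", "alice", 0.8), ("p3", "bob", 0.6)]
print([post_id for post_id, _, _ in apply_author_diversity(feed)])  # ['p1', 'p3', 'p2']
```

Without the adjustment, both of alice's posts would outrank bob's; with it, bob's post moves into second place.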

The Phoenix transformer predicts probabilities for a wide range of user actions: liking, replying, reposting, quoting, clicking, visiting the author’s profile, watching a video, expanding a photo, sharing, dwelling (spending time reading), following the author, marking “not interested,” blocking the author, muting the author, and reporting the post.

Each of these predicted probabilities is multiplied by a weight and then summed to produce a final score. Positive actions like liking, reposting, and sharing carry positive weights. Negative actions like blocking, muting, and reporting carry negative weights. This means that if the model predicts you are likely to block the author of a post, that post’s score gets pushed down significantly. The formula is simple:

Final Score = sum of (weight for action * predicted probability of that action)

This multi-action prediction approach is more nuanced than a single “relevance” score because it lets the system distinguish between content you would enjoy and content you would find annoying or harmful.
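Here is the weighted sum written out in Python. The weight values below are invented for illustration and are not the production weights; only the shape of the calculation comes from the formula above.

```python
# Hypothetical weights: positive actions add to the score, negative actions subtract.
ACTION_WEIGHTS = {
    "like": 1.0,
    "reply": 2.0,
    "repost": 1.5,
    "block": -10.0,
    "report": -20.0,
}


def final_score(predicted, weights=ACTION_WEIGHTS):
    """Sum of weight * predicted probability over the actions we have weights for."""
    return sum(weights[action] * p for action, p in predicted.items() if action in weights)


enjoyable = {"like": 0.30, "reply": 0.05, "repost": 0.10, "block": 0.001, "report": 0.0}
annoying = {"like": 0.30, "reply": 0.05, "repost": 0.10, "block": 0.20, "report": 0.05}

print(final_score(enjoyable) > final_score(annoying))  # True
```

Both posts have the same predicted like, reply, and repost probabilities. The second one scores far lower only because the model expects a meaningful chance of a block or report.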

There are five architectural choices worth understanding from xAI’s recommendation system:

Instead of humans deciding which signals matter (post length, hashtag count, time of day), the Grok-based transformer learns what matters directly from user engagement sequences. This simplifies the data pipelines and serving infrastructure.

When the transformer scores a batch of candidate posts, each post can only “attend to” (or look at) the user’s context. It cannot attend to the other candidates in the same batch. This design choice ensures that a post’s score does not change depending on which other posts happen to be in the same batch. It makes scores consistent and cacheable, which is important at the scale X operates at.
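A small sketch of what such an attention mask could look like, using plain Python lists. The layout (context positions first, candidates after) and the choice to let each candidate attend to itself and to let context positions attend to each other freely are assumptions made for illustration.

```python
def candidate_isolation_mask(num_context, num_candidates):
    """Build mask[i][j], True when position i may attend to position j.

    Positions 0 .. num_context-1 hold the user's context.
    The remaining positions hold one candidate post each.
    """
    n = num_context + num_candidates
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if j < num_context:
                mask[i][j] = True     # every position may look at the user context
            elif i == j:
                mask[i][j] = True     # a candidate may look at itself (assumption)
            # otherwise j is a different candidate: attention stays blocked
    return mask


for row in candidate_isolation_mask(num_context=2, num_candidates=2):
    print(["x" if allowed else "." for allowed in row])
```

Because no candidate row has a True entry in another candidate's column, a post's score depends only on the user context and the post itself.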

Both the retrieval and ranking stages use multiple hash functions for embedding lookup.
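The source does not describe the hashing scheme in detail, so the following is only a generic sketch of the multi-hash idea: an ID is hashed several times into a fixed-size table, and the selected rows are combined. Summing the rows, the SHA-256-based hash, and every name in this block are assumptions rather than details of the xAI implementation.

```python
import hashlib
import random


class HashedEmbeddingTable:
    """Fixed-size embedding table addressed by several hash functions."""

    def __init__(self, num_buckets, dim, num_hashes=2, seed=0):
        rng = random.Random(seed)
        self.num_buckets = num_buckets
        self.num_hashes = num_hashes
        # Randomly initialized rows stand in for learned embeddings.
        self.table = [[rng.uniform(-1, 1) for _ in range(dim)]
                      for _ in range(num_buckets)]

    def _bucket(self, item_id, hash_index):
        # Prefixing the hash index gives a different hash function per index.
        digest = hashlib.sha256(f"{hash_index}:{item_id}".encode()).digest()
        return int.from_bytes(digest[:8], "big") % self.num_buckets

    def lookup(self, item_id):
        rows = [self.table[self._bucket(item_id, h)] for h in range(self.num_hashes)]
        # Combine the selected rows element-wise (summing is an assumption).
        return [sum(values) for values in zip(*rows)]


table = HashedEmbeddingTable(num_buckets=16, dim=4, num_hashes=2)
print(len(table.lookup("post_123")))  # 4
```

The appeal of this approach is that the table size stays fixed no matter how many distinct IDs exist, and two IDs only get the same embedding if they collide under every hash function.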

Rather than collapsing everything into a single relevance number, the model predicts probabilities for many distinct actions. This gives the Weighted Scorer fine-grained control over what the feed optimizes for.

The Candidate Pipeline framework separates the pipeline’s execution logic from the business logic of individual stages. This makes it easy to add a new data source, swap in a different scoring model, or insert a new filter without touching the rest of the system.


2026-02-25 00:30:15

Monster SCALE Summit is a new virtual conference all about extreme-scale engineering and data-intensive applications.

Join us on March 11 and 12 to learn from engineers at Discord, Disney, LinkedIn, Uber, Pinterest, Rivian, ClickHouse, Redis, MongoDB, ScyllaDB and more. A few topics on the agenda:

What Engineering Leaders Get Wrong About Scale

How Discord Automates Database Operations at Scale

Lessons from Redesigning Uber’s Risk-as-a-Service Architecture

Scaling Relational Databases at Nextdoor

How LinkedIn Powers Recommendations to Billions of Users

Powering Real-Time Vehicle Intelligence at Rivian with Apache Flink and Kafka

The Data Architecture behind Pinterest’s Ads Reporting Services

Bonus: We have 500 free swag packs for attendees. And everyone gets 30-day access to the complete O’Reilly library & learning platform.

Uber’s infrastructure runs on thousands of microservices, each making authorization decisions millions of times per day. This includes every API call, database query, and message published to Kafka. To make matters more interesting, Uber needs these decisions to happen in microseconds to have the best possible user experience.

Traditional access control could not handle the complexity. For instance, you might say “service A can call service B” or “employees in the admin group can access this database.” While these rules work for small systems, they fall short when you need more control. For example, what if you need to restrict access based on the user’s location, the time of day, or relationships between different pieces of data?

Uber needed a better approach. They built an attribute-based access control system called Charter to evaluate complex conditions against attributes pulled from various sources at runtime.

In this article, we will look at how the Uber engineering team built Charter and the challenges they faced.

Disclaimer: This post is based on publicly shared details from the Uber Engineering Team. Please comment if you notice any inaccuracies.

Before diving into ABAC, you need to understand how Uber thinks about authorization. Every access request can be broken down into a simple question:

Can an Actor perform an Action on a Resource in a given Context?

Let’s understand each component of this statement:

Actor represents the entity making the request. At Uber, this could be an employee, a customer, or another microservice. Uber uses the SPIFFE format to identify actors. An employee might be identified as spiffe://personnel.upki.ca/eid/123456, where 123456 is their employee ID. A microservice running in production would be identified as spiffe://prod.upki.ca/workload/service-foo/production.

Action describes what the actor wants to do. Common actions include create, read, update, and delete, often abbreviated as CRUD. Services can also define custom actions like invoke for API calls, subscribe for message queues, or publish for event streams.

A resource is the object being accessed. Uber represents resources using UON, which stands for Uber Object Name. This is a URI-style format that looks like uon://service-name/environment/resource-type/identifier. For example, a specific table in a database might be uon://orders.mysql.storage/production/table/orders.

The host portion of the UON is called the policy domain. This acts as a namespace for grouping related policies and configurations.
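Since a UON follows a URI-style layout, it can be split with Python's standard `urllib.parse`. The sketch below handles only the four-part form described above (service name, environment, resource type, identifier); the `Uon` dataclass and `parse_uon` function are hypothetical names, not part of Uber's tooling.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class Uon:
    policy_domain: str    # the host portion of the UON
    environment: str
    resource_type: str
    identifier: str       # may itself contain slashes


def parse_uon(text):
    parts = urlsplit(text)
    if parts.scheme != "uon" or not parts.netloc:
        raise ValueError(f"not a UON: {text!r}")
    # Split the path into at most three segments so that identifiers
    # such as "foo/method1" stay in one piece.
    segments = parts.path.lstrip("/").split("/", 2)
    if len(segments) != 3 or not all(segments):
        raise ValueError(f"expected environment/resource-type/identifier in {text!r}")
    environment, resource_type, identifier = segments
    return Uon(parts.netloc, environment, resource_type, identifier)


print(parse_uon("uon://orders.mysql.storage/production/table/orders"))
```

For the table example, the policy domain comes out as `orders.mysql.storage`, which is the namespace its policies would be grouped under.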

As mentioned, Uber built a centralized service called Charter to manage all authorization policies. Think of Charter as a policy repository where administrators define who can access what. This approach offers several advantages over having each service implement its own authorization logic.

See the diagram below:

Policies stored in Charter are distributed to individual services. Each service includes a local library called authfx that evaluates these policies.

The architecture works as follows:

Policy authors create and update policies in Charter

Charter stores these policies in a database

A unified configuration distribution system pushes policy updates to all relevant services

Services use the authfx library to evaluate policies for incoming requests

Authorization decisions are made locally within each service


The simplest form of policy at Uber connects actors to resources through actions.

A basic policy might look like this in YAML format:

file_type: policy
effect: allow
actions:
  - invoke
resource: "uon://service-foo/production/rpc/foo/method1"
associations:
  - target_type: WORKLOAD
    target_id: "spiffe://prod.upki.ca/workload/service-bar/production"

This policy translates to: “Allow service-bar to invoke method1 of service-foo.” Another example shows how employees can be granted access:

file_type: policy
effect: allow
actions:
  - read
  - write
resource: "uon://querybuilder/production/report/*"
associations:
  - target_type: GROUP
    target_id: "querybuilder-development"

This policy means: “Allow employees in the querybuilder-development group to read and write query reports.”

These basic policies work well for straightforward authorization scenarios. However, real-world requirements are often more complex.
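To see how little logic a basic policy needs, here is a minimal Python evaluator for the two policies above. It is not Uber's authfx library. The `Policy` dataclass, the `is_allowed` function, the use of `fnmatch` for the `*` wildcard, and the deny-by-default behavior are assumptions for illustration.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Policy:
    effect: str
    actions: tuple
    resource: str       # may contain a "*" wildcard
    target_type: str    # "WORKLOAD" or "GROUP"
    target_id: str


POLICIES = [
    Policy("allow", ("invoke",),
           "uon://service-foo/production/rpc/foo/method1",
           "WORKLOAD", "spiffe://prod.upki.ca/workload/service-bar/production"),
    Policy("allow", ("read", "write"),
           "uon://querybuilder/production/report/*",
           "GROUP", "querybuilder-development"),
]


def is_allowed(policies, actor_id, actor_groups, action, resource):
    for policy in policies:
        if policy.effect != "allow" or action not in policy.actions:
            continue
        if not fnmatchcase(resource, policy.resource):
            continue
        if policy.target_type == "WORKLOAD" and policy.target_id == actor_id:
            return True
        if policy.target_type == "GROUP" and policy.target_id in actor_groups:
            return True
    return False    # nothing matched: deny (assumption)


print(is_allowed(POLICIES,
                 "spiffe://prod.upki.ca/workload/service-bar/production", [],
                 "invoke", "uon://service-foo/production/rpc/foo/method1"))  # True
```

Notice that the only inputs are the actor's identity, their groups, the action, and the resource name. There is no place to say anything about the actor's region or the resource's owner, which is the gap the next section describes.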

Uber encountered several limitations with the basic policy model.

For example, consider a payment support service. Customer support representatives need to access payment information to help customers. However, for privacy and compliance reasons, support reps should only access payment data for customers in their assigned region. The basic policy syntax can only specify that a representative can access a payment profile by its UUID. It cannot express the requirement that the rep’s region must also match the customer’s region.

Another example involves employee data. An employee information service needs to allow employees to view and edit their own profiles. It should also allow their managers to access their profiles. The basic policy model cannot express this “self or manager” relationship because it would require checking whether the actor’s employee ID matches either the resource’s employee ID or the resource’s manager ID.

A third scenario involves data analytics. Some reports should only be accessible to users who belong to multiple specific groups simultaneously. The existing model supported checking if a user belonged to any group in a list, but not whether they belonged to all groups in a list.

In a nutshell, Uber needed a way to incorporate additional context and attributes into authorization decisions.

ABAC extends the basic policy model by adding conditions. A condition is a Boolean expression that evaluates to true or false based on attributes. If a permission includes a condition, that permission only grants access when the condition evaluates to true.

Attributes are characteristics of actors, resources, actions, or the environment. For example:

An actor might have attributes like location, department, or role.

A resource might have attributes such as owner, sensitivity level, or creation date.

The environment might provide attributes like current time, day of the week, or request IP address.

Attribute Stores are the sources that provide attribute values at authorization time. In formal authorization terminology, these are called Policy Information Points or PIPs. When evaluating a condition, the authorization engine queries the appropriate attribute store to fetch the necessary values.

The enhanced policy model adds an optional condition field to each permission. Here’s an example:

actions: [create, delete, read, update]
resource: "uon://payments.svc/production/payment/*"
associations:
  - target_type: EMPLOYEE
condition:
  expression: "resource.paymentType == 'credit card' && actor.location == resource.paymentLocation"
effect: ALLOW

This policy allows employees to perform CRUD operations on payment records, but only when two conditions are met: the payment type is a credit card, and the employee’s location matches the payment’s location.

When ABAC is enabled, the authorization architecture includes additional components.

The authfx library now includes an authorization engine that coordinates policy evaluation. When a request arrives, the engine first checks if the basic requirements are met: does the actor match, does the action match, does the resource match? If those checks pass and a condition exists, the engine moves to condition evaluation.
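The two-phase flow described above can be sketched as follows. This is an illustration in Python rather than the authfx implementation: the dictionary shapes, the `authorize` function, and the use of a plain Python callable in place of a compiled CEL expression are all assumptions. Attributes are loaded through a callback so that nothing is fetched unless the basic checks pass.

```python
from fnmatch import fnmatchcase


def authorize(permission, request, load_attributes):
    # Phase 1: cheap structural checks on actor, action, and resource.
    if request["actor_type"] != permission["target_type"]:
        return False
    if request["action"] not in permission["actions"]:
        return False
    if not fnmatchcase(request["resource"], permission["resource"]):
        return False

    # Phase 2: evaluate the condition only if one exists.
    condition = permission.get("condition")
    if condition is None:
        return True
    return bool(condition(load_attributes()))


def payment_condition(attrs):
    # Stand-in for the CEL expression in the payment policy example.
    return (attrs["resource"]["paymentType"] == "credit card"
            and attrs["actor"]["location"] == attrs["resource"]["paymentLocation"])


PAYMENT_PERMISSION = {
    "target_type": "EMPLOYEE",
    "actions": {"create", "delete", "read", "update"},
    "resource": "uon://payments.svc/production/payment/*",
    "condition": payment_condition,
}

request = {"actor_type": "EMPLOYEE", "action": "read",
           "resource": "uon://payments.svc/production/payment/42"}
attributes = {"actor": {"location": "NL"},
              "resource": {"paymentType": "credit card", "paymentLocation": "NL"}}
print(authorize(PAYMENT_PERMISSION, request, lambda: attributes))  # True
```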

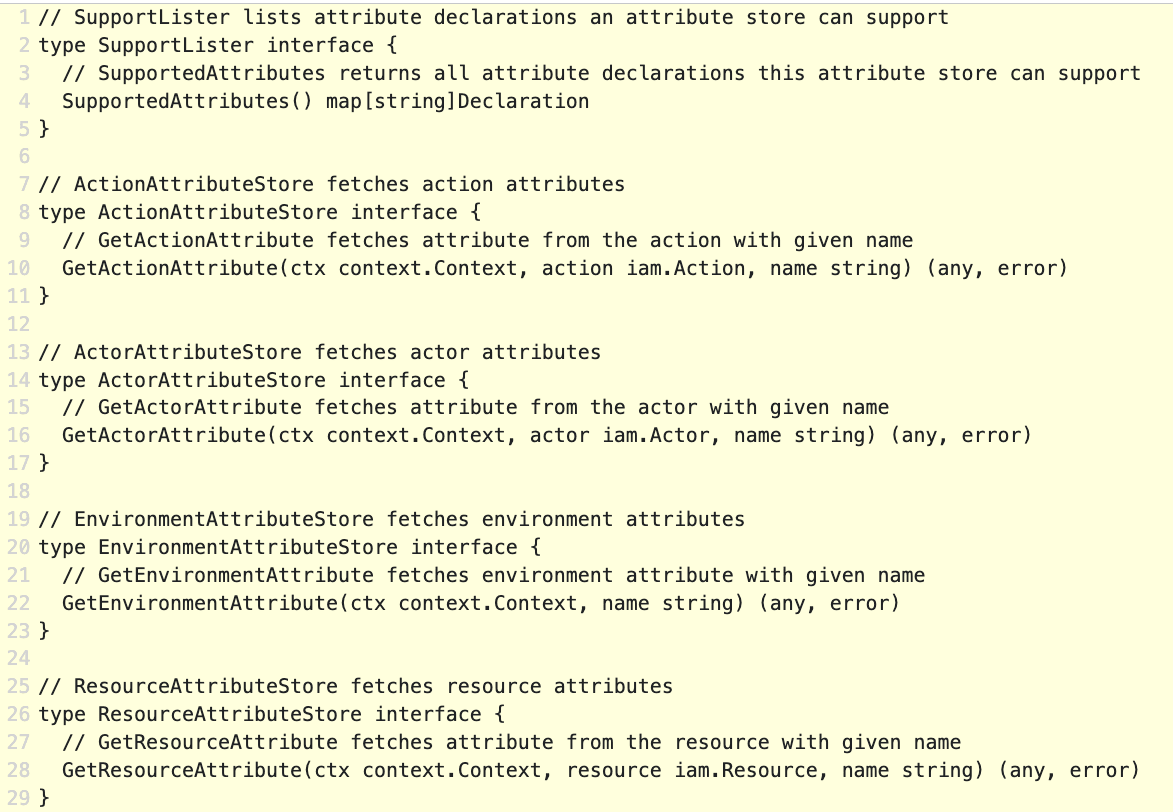

The authorization engine interacts with an expression engine that evaluates the condition expression. The expression engine identifies which attributes are needed and requests them from the appropriate attribute stores. See the diagram below:

Uber defined four types of attribute store interfaces:

ActorAttributeStore fetches attributes about the actor making the request. This might include their employee ID, group memberships, location, or department.

ResourceAttributeStore fetches attributes about the resource being accessed. This could include the resource’s owner, creation date, sensitivity classification, or any custom business attributes.

ActionAttributeStore fetches attributes related to the action being performed, though this is used less frequently than actor and resource attributes.

EnvironmentAttributeStore fetches contextual attributes like the current timestamp, day of week, or request metadata.

Each attribute store must implement a SupportedAttributes() function that declares which attributes it can provide. This enables the authorization engine to pre-compile condition expressions and validate that all required attributes are available. At runtime, when an attribute value is needed, the engine calls the appropriate method on the corresponding store.

See the code snippet below:

The design allows a single service to use multiple attribute stores, and a single attribute store can be shared across multiple services for reusability.
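As a hedged illustration of the pattern, here is a Python analogue of an attribute store that declares what it can provide, plus a resolver that validates a condition's required attributes up front. Uber's interfaces are not reproduced here; `supported_attributes`, `get`, `DictAttributeStore`, and `build_resolver` are hypothetical names.

```python
class DictAttributeStore:
    """Toy attribute store backed by a dict keyed by (subject_id, attribute_name)."""

    def __init__(self, values, supported):
        self._values = values
        self._supported = frozenset(supported)

    def supported_attributes(self):
        # Declares which attributes this store can provide.
        return self._supported

    def get(self, subject_id, name):
        return self._values[(subject_id, name)]


def build_resolver(stores, required):
    """Map each required attribute to a store that provides it.

    Fails fast if any attribute has no provider, which mirrors validating
    a condition when it is compiled rather than when a request arrives.
    """
    providers = {}
    for name in required:
        for store in stores:
            if name in store.supported_attributes():
                providers[name] = store
                break
        else:
            raise LookupError(f"no attribute store provides {name!r}")
    return providers


actor_store = DictAttributeStore({("eid/123456", "actor.location"): "NL"},
                                 supported={"actor.location"})
resource_store = DictAttributeStore({("payment/42", "resource.paymentLocation"): "NL"},
                                    supported={"resource.paymentLocation"})

providers = build_resolver([actor_store, resource_store],
                           {"actor.location", "resource.paymentLocation"})
print(providers["actor.location"].get("eid/123456", "actor.location"))  # NL
```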

To represent conditions based on attributes, Uber needed an expression language. Rather than inventing a new language from scratch, the engineering team evaluated existing open-source options.

They selected the Common Expression Language (CEL), developed by Google. CEL offered several advantages:

First, it has a simple, familiar syntax similar to other programming languages.

Second, it supports multiple data types, including strings, numbers, booleans, and lists.

Third, it includes built-in functions for string manipulation, arithmetic operations, and boolean logic.

CEL also provides macros that are particularly useful for working with collections. For instance, you can write actor.groups.exists(g, g == 'admin') to check if the actor belongs to a group called “admin.”

The performance characteristics of CEL were excellent. Expression evaluation typically takes only a few microseconds. Both Go and Java implementations of CEL are available, meeting Uber’s backend service requirements. Additionally, both implementations support lazy attribute fetching, meaning they only request the attribute values actually needed to evaluate the expression, improving efficiency.

A sample CEL expression looks like this:

resource.paymentType == 'credit card' && actor.location == resource.paymentLocation

This expression is evaluated against attribute values fetched at runtime to produce a true or false result.
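Lazy attribute fetching is easy to demonstrate with this same expression. The sketch below does not use a CEL library; it mimics the behavior with a Python function and a hypothetical `LazyAttributes` wrapper that records which attributes were actually fetched. Python's `and` stops at the first false operand, which stands in for the engine skipping attributes it does not need.

```python
class LazyAttributes:
    """Fetches an attribute the first time it is read, then caches it."""

    def __init__(self, fetchers):
        self._fetchers = fetchers    # attribute name -> zero-argument callable
        self._cache = {}
        self.fetched = []            # names actually fetched, in order

    def __getitem__(self, name):
        if name not in self._cache:
            self._cache[name] = self._fetchers[name]()
            self.fetched.append(name)
        return self._cache[name]


def condition(attrs):
    # Python rendering of the sample expression above.
    return (attrs["resource.paymentType"] == "credit card"
            and attrs["actor.location"] == attrs["resource.paymentLocation"])


attrs = LazyAttributes({
    "resource.paymentType": lambda: "debit card",
    "actor.location": lambda: "NL",
    "resource.paymentLocation": lambda: "NL",
})
print(condition(attrs))   # False
print(attrs.fetched)      # ['resource.paymentType']
```

Because the payment type check fails first, neither location attribute is ever requested from its store.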

To illustrate the practical benefits of ABAC, consider how Uber manages authorization for Apache Kafka topics.

Uber uses thousands of Kafka topics for event streaming across its platform. Each topic needs access controls to specify which services can publish messages and which can subscribe to receive messages. The Kafka infrastructure team is responsible for managing these policies.

With basic policies, the Kafka team would need to create individual policies for every topic. Given the sheer volume of topics, this would be impractical and time-consuming.

Uber has a service called uOwn that tracks ownership and roles for technological assets. Each Kafka topic can have roles assigned directly or inherited through the organizational hierarchy. One such role is “Develop,” which indicates responsibility for developing and maintaining that topic.

Using ABAC, the Uber engineering team created a single generic policy that applies to all Kafka topics:

effect: allow
actions: [admin]
resource: "uon://topics.kafka/production/*"
associations:
  - target_type: EMPLOYEE
condition:
  expression: 'actor.adgroup.exists(x, x in resource.uOwnDevelopGroups)'

Source: Uber Engineering Blog

The wildcard in the resource pattern means this policy applies to every Kafka topic. The condition checks whether the actor belongs to any Active Directory group that has the Develop role for the requested topic.

An attribute store plugin retrieves the list of groups with the Develop role for each topic from uOwn. This information becomes the resource.uOwnDevelopGroups attribute. When an employee attempts to perform an admin action on a topic, the authorization engine evaluates whether that employee belongs to one of the authorized groups.
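In Python terms, the `exists` condition is an "any group in common" check. The sketch below pairs it with a stand-in for uOwn to show why ownership changes need no policy update. `FakeUOwn`, the topic name, and the group names are invented for illustration.

```python
def exists_in(actor_groups, develop_groups):
    """Python equivalent of: actor.adgroup.exists(x, x in resource.uOwnDevelopGroups)"""
    develop = set(develop_groups)
    return any(group in develop for group in actor_groups)


class FakeUOwn:
    """Stand-in for the ownership service: topic name -> groups with the Develop role."""

    def __init__(self, develop_groups_by_topic):
        self._by_topic = dict(develop_groups_by_topic)

    def develop_groups(self, topic):
        return self._by_topic.get(topic, [])

    def set_develop_groups(self, topic, groups):
        self._by_topic[topic] = list(groups)


def can_admin(uown, actor_groups, topic):
    # The attribute store supplies resource.uOwnDevelopGroups at decision time.
    return exists_in(actor_groups, uown.develop_groups(topic))


uown = FakeUOwn({"rides-events": ["rides-platform"]})
print(can_admin(uown, ["rides-platform", "eng-all"], "rides-events"))  # True
print(can_admin(uown, ["eats-platform"], "rides-events"))              # False

# Ownership moves to another team; the policy itself is untouched.
uown.set_develop_groups("rides-events", ["eats-platform"])
print(can_admin(uown, ["eats-platform"], "rides-events"))              # True
```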

This solution saved the Kafka team enormous effort. Instead of managing thousands of individual policies, they maintain one generic policy. As ownership changes in uOwn, authorization automatically adjusts without any policy updates.

The implementation of ABAC delivered multiple benefits across Uber’s infrastructure.

Authorization policies became more precise and fine-grained. Decisions could now consider any relevant attribute rather than just basic identity and group membership. This enabled security policies that more accurately reflected business requirements.

The system became more dynamic. When attribute values change in source systems like uOwn or employee directories, authorization decisions automatically adapt. No code deployment or policy update is required. This agility is critical in a fast-moving organization.

Scalability improved dramatically. A single well-designed ABAC policy can govern authorization for thousands or even millions of resources.

Centralization through Charter made policy management easier. Rather than scattering authorization logic across hundreds of services, security teams can audit and manage policies in one place.

Performance remained excellent. Despite the added complexity of condition evaluation and attribute fetching, authorization decisions are still completed in microseconds due to local evaluation and on-demand attribute fetching.

Also, most importantly, ABAC separated policy from code. System owners can change authorization policies without building and deploying new code. This separation of concerns allows security policies to evolve independently from application logic.

Since implementing ABAC, 70 Uber services have adopted attribute-based policies to meet their specific authorization requirements. The framework provides a unified approach across diverse use cases, from protecting microservice endpoints to securing database access to managing infrastructure resources.
