2026-02-20 03:28:47

I was facilitating a workshop recently when someone asked one of my favorite questions about a graph on the screen: “So… what are we supposed to take away from this?”

Such a simple—and useful—question.

One challenge was that the graph was attempting to show multiple comparisons at once, so it wasn’t clear what mattered most. To further complicate things, the data in question spanned very different magnitudes, with one category dwarfing the rest.

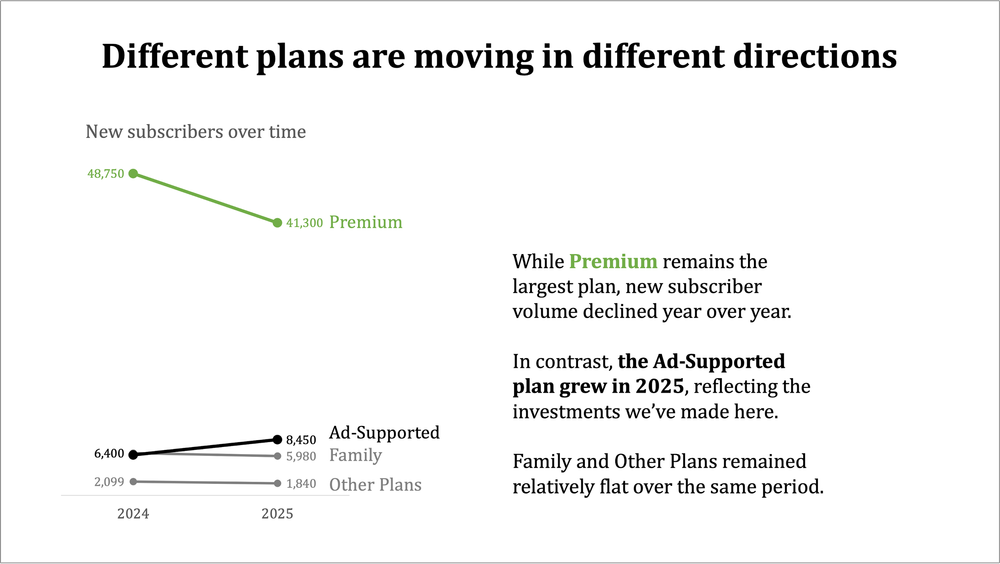

Here’s an anonymized version of the original slide, showing new subscribers to a streaming service by plan type across two years:

Let’s revisit the simple question that was raised: what are we supposed to take away from this?

The story could be about one plan dominating the rest. Alternatively, the long tail of niche plans might be worthy of review and discussion. Or perhaps there’s something interesting about the year-over-year shifts across plans.

Each of those takeaways can be found in the original slide, but it takes work to get there. If this is part of a routine report or update and no action is needed, it might be fine as is. However, if we want someone to understand one or more of the nuances at play and drive a discussion or decision, we can approach things more strategically. Part of doing this means choosing different visuals for different takeaways. Another aspect to consider is whether to aggregate and how to treat categories so that we can see important points—without overwhelming with detail.

Let’s tackle one specific takeaway at a time, intentionally choosing a visual that supports it.

If our goal is to clearly communicate that one plan accounts for the majority of new subscribers, instead of burying that insight in a crowded bar chart, we can make it explicit.

This view is useful to show concentration (alternatively, stacked vertical bars or even two pie charts could have worked here). By collapsing many categories into just two (Premium vs. everything else), we remove noise and make the dominant pattern unmistakable. This kind of aggregation is most appropriate when the goal is orientation or framing (not detailed plan-level decisions). The audience no longer needs to scan bars, compare labels, or reconcile magnitudes. The message is immediate.
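If you are preparing the numbers yourself, the aggregation step is small. Here is a minimal sketch in Python, assuming made-up counts: the plan names other than Premium and every number below are hypothetical placeholders, not the data from the slide.

```python
# Hypothetical new-subscriber counts by plan; not the data from the original slide.
new_subscribers = {
    "Premium": 8200,
    "Standard": 900,
    "Basic": 450,
    "Student": 250,
    "Family": 200,
}

def collapse_to_two(counts, focus):
    """Collapse every category except `focus` into a single 'All other plans' group."""
    other_total = sum(value for name, value in counts.items() if name != focus)
    return {focus: counts[focus], "All other plans": other_total}

def shares(counts):
    """Return each group's share of the total as a percentage rounded to one decimal."""
    total = sum(counts.values())
    return {name: round(100 * value / total, 1) for name, value in counts.items()}

two_groups = collapse_to_two(new_subscribers, "Premium")
print(two_groups)
print(shares(two_groups))
```

With these placeholder numbers, the two-group view reduces five bars to a single comparison: 82% versus 18%.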

I’ve kept the words descriptive here, but you can imagine how we might use a view like this to prompt a specific discussion: for example, whether the business is overly dependent on a single plan, how resilient new subscriber growth would be if Premium performance changed, or whether it makes sense to invest in Premium or elsewhere.

Speaking of elsewhere—if those non-Premium plans are key, we’ll want to approach things differently.

It can be challenging to effectively visualize numbers of very different magnitudes in a single graph. When one or a few categories are large but the story is in the smaller ones, removing the large categories from the visual can sometimes make sense. The key is to retain the context of the omitted categories so things aren’t accidentally misinterpreted.

In this instance, we could use words to comment on the size of Premium, then focus the graph on the non-Premium plans. See below. (If you were still afraid it might be missed, you could even play with positioning a bar under the Premium note that extends off the right side of the page.)

Treating Premium separately and removing it from the graph allows us to see what’s happening in the tail. Low volume doesn’t mean low value—but it does suggest a different set of strategic questions.
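One way to prepare data for this kind of view is to split the dominant category into a text note and keep the rest for the graph. The sketch below assumes the same hypothetical counts as placeholders; `split_dominant` is an illustrative helper, not part of any charting tool.

```python
# Hypothetical counts; plan names other than Premium are placeholders.
new_subscribers = {"Premium": 8200, "Standard": 900, "Basic": 450, "Student": 250, "Family": 200}

def split_dominant(counts, dominant):
    """Return a context note for the omitted category plus the remaining tail, largest first."""
    total = sum(counts.values())
    pct = round(100 * counts[dominant] / total)
    note = f"{dominant}: {counts[dominant]:,} new subscribers ({pct}% of total), not shown"
    tail = sorted(
        ((name, value) for name, value in counts.items() if name != dominant),
        key=lambda item: item[1],
        reverse=True,
    )
    return note, tail

note, tail = split_dominant(new_subscribers, "Premium")
print(note)
for name, value in tail:
    print(f"{name:<10}{value:>6,}")
```

Generating the note from the same data as the graph keeps the omitted context accurate if the numbers are refreshed.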

A view like this could be used to drive discussions about portfolio rationalization (are there too many low-impact offerings?), whether small plans are strategically important or operationally distracting, or where simplification might free up resources without materially impacting growth.

A third—and entirely different—focus is change over time. Which plans are gaining momentum, and which are losing it? A slopegraph can make those changes easy and fast to see.
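Before drawing a slopegraph, it can help to compute the change for each plan so you know which lines deserve emphasis. This is a rough sketch with invented two-year counts; the plan names and values are assumptions for illustration only.

```python
# Hypothetical (prior year, current year) counts for non-Premium plans.
by_year = {
    "Standard": (1000, 900),
    "Basic": (300, 450),
    "Student": (200, 250),
    "Family": (400, 200),
}

def yoy_changes(pairs):
    """Return (plan, absolute change, percent change) tuples, largest percent gain first."""
    rows = []
    for plan, (prior, current) in pairs.items():
        change = current - prior
        pct = round(100 * change / prior, 1)
        rows.append((plan, change, pct))
    return sorted(rows, key=lambda row: row[2], reverse=True)

changes = yoy_changes(by_year)
for plan, change, pct in changes:
    print(f"{plan:<10}{change:>+6}{pct:>+8.1f}%")
```

Sorting by percent change surfaces momentum in small plans that a sort by absolute change would bury; which one to use depends on the conversation you want to have.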

A view like this could help frame discussions about where recent investments are paying off (or not), which plans may need renewed attention or repositioning, or simply how subscriber preferences are shifting year over year.

None of these views is the correct one. Each highlights a distinct takeaway—and is useful to drive a different type of conversation. The mistake isn’t choosing the “wrong” chart; it’s asking one chart to answer every question at once.

By separating stories—dominance, the long tail, and change over time—we reduce cognitive load and increase clarity. We also give ourselves flexibility: the same data can support different discussions, depending on what is important and which decisions need to be made.

You could even imagine a progression through these three views, with an overarching narrative to tie it all together. One chart doesn’t have to do it all. A thoughtful set of charts can tell a more effective story.

Meta lesson: start with the question or takeaway. Then choose a visual (or combination of visuals) that will help you make it unmistakable.

To learn more about various graph types, check out the SWD chart guide.

2026-02-06 00:24:25

This article is part of our back-to-basics blog series called what is…?, where we’ll break down some common topics and questions posed to us. We’ve covered much of the content in previous posts, so this series allows us to bring together many disparate resources, creating a single source for your learning. We believe it’s important to take an occasional pulse on foundational knowledge, regardless of where you are in your learning journey. The success of many visualizations is dependent on a solid understanding of basic concepts. So whether you’re learning this for the first time, reading to reinforce core principles, or looking for resources to share with others—like our new comprehensive chart guide—please join us as we revisit and embrace the basics.

Most people are familiar with maps—from school, the news, and navigation apps—making them a natural option to explore when visualizing geographical data. In this article, we’ll explore one common map visual: the choropleth map. We will cover what it is, how to interpret it, when to use it, and common pitfalls to avoid when using one.

A choropleth map, sometimes called a filled map, is one way to display a numeric variable across a geographic area. Choropleth maps are often used to show population statistics, such as the Pew Research map below, which shows the importance of religion around the world.

SOURCE: pewresearch.org/religion/2022/12/21/key-findings-from-the-global-religious-futures-project

The level of the geographic grouping sets the boundaries for the regions, and each defined area is shaded to signify the value for that location. The map above is grouped by country, and each country’s shading is determined by the color scale in the legend at the bottom: blues represent lower values, while oranges indicate higher values.

In a choropleth map, color fill is applied to help compare values across geographic areas. Often, darker or more intense colors represent the extreme values, making it quicker to see patterns and compare data across locations. When interpreting a choropleth map, it helps to pay close attention to both how the data is defined and how the colors are applied. Choropleths work best for normalized values, such as rates, percentages, or per‑capita figures, rather than raw totals, which can unfairly favor large or populous areas.

The ability to glean insights from a choropleth map is heavily influenced by the color scale used. While more color steps can make the map more complex to interpret, too few can make it hard to see small differences. For example, in the Pew Research example above, India and Pakistan are both dark orange, yet their values are quite different: India at 80% and Pakistan at 94%. Understanding how color ranges are defined is essential, since small changes in these cutoffs can shift which category a region falls into and, in turn, what patterns your audience perceives first.

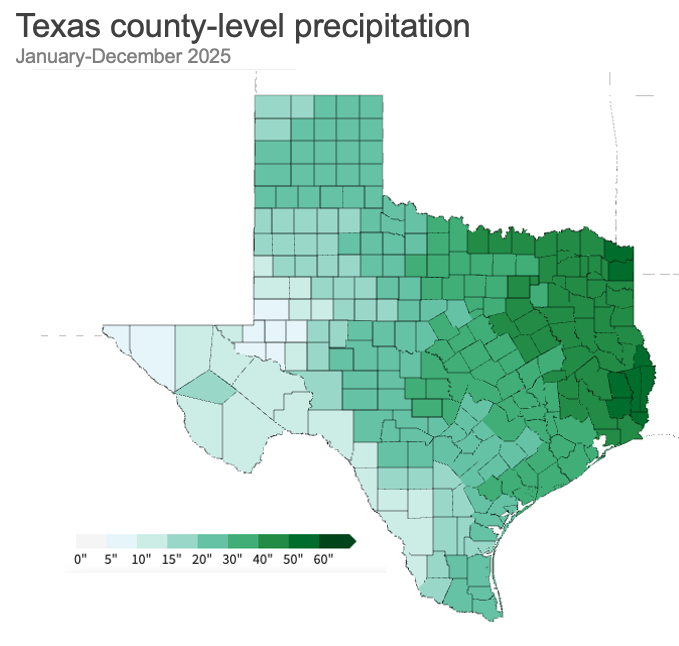

This type of map is most useful when the values being visualized are meaningfully tied to the geographic areas being displayed. For example, weather is specific to certain locations, so showing yearly rainfall by county in a given state could make sense if you want to display the differences across various locations.

SOURCE: ncei.noaa.gov/access/monitoring/climate-at-a-glance/county/mapping

The choropleth map above shows the total county-level precipitation across Texas for January to December 2025, with darker green representing wetter areas and lighter green representing drier areas relative to other parts of the state. East Texas stands out as much wetter, with many counties in the darkest green shades, indicating 40-plus inches of rain during the year. The west and southwest counties appear drier, with very light green counties showing lower annual precipitation totals. County-level variation is substantial, so state averages could mask important local differences in water availability, drought risk, and flood potential.

Although maps help visualize patterns and comparisons, they can be challenging to analyze and even misleading when large areas visually overpower smaller ones.

SOURCE: https://www.iihs.org/research-areas/fatality-statistics/detail/state-by-state

For instance, the map above shows motor vehicle crash fatalities by state. At first glance, California and Texas jump out with the darkest shade of blue. However, these states are also among the largest in both population and land area, meaning there are more people and more roads where crashes can occur.

If you factor in population, the view normalized per 100,000 people would look like this.

SOURCE: https://www.iihs.org/research-areas/fatality-statistics/detail/state-by-state

Normalizing the metric creates a very different view of the data. Here, Wyoming and Mississippi have the highest rates. Wyoming stands out a bit more, given that it is larger on the map than Mississippi.
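The normalization itself is simple arithmetic: divide the count by the population and multiply by 100,000. Here is a small sketch using three made-up states; the figures are hypothetical and are not IIHS data.

```python
# Hypothetical figures for three made-up states; not IIHS data.
states = {
    "State A": {"deaths": 4000, "population": 39_000_000},
    "State B": {"deaths": 700, "population": 3_000_000},
    "State C": {"deaths": 120, "population": 580_000},
}

def rate_per_100k(deaths, population):
    """Deaths per 100,000 residents, rounded to one decimal place."""
    return round(deaths / population * 100_000, 1)

rates = {name: rate_per_100k(d["deaths"], d["population"]) for name, d in states.items()}

# The state with the largest raw total is not the one with the highest rate.
by_total = max(states, key=lambda name: states[name]["deaths"])
by_rate = max(rates, key=rates.get)
print(rates)
print(by_total, by_rate)
```

In this invented example, State A has by far the most deaths but the lowest rate, which is the same kind of reversal the two maps show.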

Even when the metric is appropriate, differences in the physical size of geographic areas can still influence what appears most important. Large states can dominate visually, while smaller states risk getting lost. To account for variation in each state's geographical footprint, you might opt for an alternative display that uses a consistent hexagonal shape to represent each state.

SOURCE: https://www.iihs.org/research-areas/fatality-statistics/detail/state-by-state

With a hex-tile map, you eliminate discrepancies in US state sizes, giving equal visual space to smaller states like Hawaii and larger states like Texas.

In addition to size, the way values are grouped into ranges (for example, equal intervals versus quantiles) and the choice of color scale also shape what patterns pop out first. Small changes in how you define the breaks between ranges can shift which group a place falls into, changing the visual story. Using a clear, ordered legend and an intuitive color progression from light (less) to dark (more) is important. And when missing data is present, make it visually distinct from true zeros so your audience does not confuse “no data” with “no phenomenon.”
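To see how the choice of breaks changes the picture, here is a minimal sketch that classifies the same values two ways. The values are hypothetical and deliberately skewed, and the quantile method is a simplified one chosen for illustration; mapping tools may define quantile breaks differently.

```python
# Hypothetical values for eight regions, skewed so the two schemes disagree.
values = [2, 3, 4, 5, 6, 8, 20, 40]

def equal_interval_breaks(data, k):
    """Upper bounds of k classes that each span the same width of the value range."""
    low, high = min(data), max(data)
    width = (high - low) / k
    return [low + width * i for i in range(1, k + 1)]

def quantile_breaks(data, k):
    """Upper bounds of k classes that each hold roughly the same number of values."""
    ordered = sorted(data)
    n = len(ordered)
    return [ordered[(n * i) // k - 1] for i in range(1, k + 1)]

def classify(value, breaks):
    """Index of the first class whose upper bound is at least `value`."""
    for index, upper in enumerate(breaks):
        if value <= upper:
            return index
    return len(breaks) - 1

equal = equal_interval_breaks(values, 4)
quant = quantile_breaks(values, 4)
print([classify(v, equal) for v in values])  # six of eight regions share the lightest class
print([classify(v, quant) for v in values])  # two regions in each class
```

With equal intervals, most regions would get the same shade and the two large values would stand alone. With quantiles, every shade is used equally, but 20 and 40 end up in the same class.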

Just because you have geographic data does not mean you must use a map. In some cases, not using a map may be a better option. For example, if you want your audience to more precisely compare the differences between each state and see how they rank, then an ordered horizontal bar chart could be worth exploring. Having a clear understanding of your message and how you want your audience to process the information will help you choose an appropriate view for the data.
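As a quick way to preview the ranked alternative before building a chart, the sketch below sorts categories from high to low and prints a text bar for each. The state names and rates are hypothetical, and `ranked_bars` is an illustrative helper.

```python
# Hypothetical rates per 100,000 for made-up states.
rates = {"State A": 10.3, "State B": 23.3, "State C": 20.7, "State D": 6.1}

def ranked_bars(data, scale=1.0):
    """Return one text line per category, sorted from highest to lowest value."""
    ordered = sorted(data.items(), key=lambda item: item[1], reverse=True)
    return [f"{name:<8} {'#' * round(value * scale):<25} {value}" for name, value in ordered]

lines = ranked_bars(rates)
for line in lines:
    print(line)
```

The ordering makes rank explicit and the shared baseline makes differences easy to judge, two things a map cannot offer.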

Cartography, the field of map design, is a deep topic, and we’ve only covered a small bit here. If you want to explore maps further, check out these resources:

Listen to our podcast with cartographer Kenneth Field and visit his site.

Tackle a map-related exercise in the SWD Community.

Watch our live event about maps to explore other types, such as symbol maps and cartograms (available to premium members only).

Learn how to create a map in Excel and Tableau, including hex maps.

To explore other charts, browse the full collection in the SWD Chart Guide, or for resources to teach younger audiences, check out DaphneDrawsData.com.

2026-01-24 04:17:33

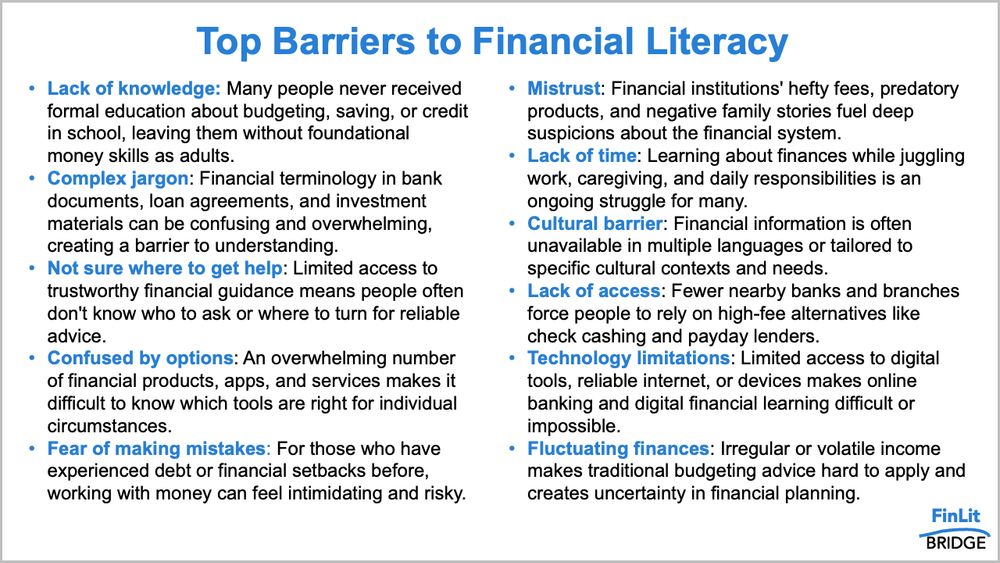

Sometimes the problem with a slide isn’t the content; it’s the layout and structure. Recently, I worked with a client who had thoughtful content grounded in solid research, but the slide itself was a wall of text, making it impossible to digest and understand the message.

If you read the slide above carefully, you’ll notice it’s not wrong. The bullets are specific and describe real barriers. But imagine encountering this in a meeting or while checking your inbox. It’s hard to scan, and you’d likely give up reading after a couple of bullets. I’ll walk you through a simple transformation that reframes and reorganizes these same ideas so an audience can quickly grasp the information.

One challenge with the slide is that there is no breathing room. Allowing more space on the margins and between lines improves readability by reducing the overwhelming, cluttered feeling of the original view. One option is to reduce the font size to make more room, but that could make the resulting text hard to read. Another idea is to break the content into two columns and bump up the font size a bit.

The added white space around the margins and between the columns makes it a little less intimidating, but it is still a lot to process. Let’s take things a step further and add more white space between the bullets.

That’s much easier to scan, yet it still feels crowded because it has the same number of words. Next, let’s consider how to group these barriers to create greater visual hierarchy, making it less challenging for our audience to understand.

One helpful way to tame an overstuffed slide is to look for ways to group information. Our brains are better at processing and remembering a smaller number of items. By chunking the bullets into a smaller set of categories, the content becomes easier to comprehend.

Looking at the original list, each item falls into one of three broad themes:

Things that make it hard to get started with money

A lack of options that work for someone’s specific situation

Barriers that tend to show up more in underserved communities

Instead of a single long list, we now have three categories we can use to organize the bulleted text. With defined groups, we can structure the slide around these themes to make it quick to scan.

I opted for a three-column layout to provide visual hierarchy, with pithy, enumerated headings at the top and more detail below as people traverse the information. Within each block of text, keywords and phrases are bolded to make it easier to scan the information. Also note how, with this layout, even if someone only reads the headings, they still get the main points.

The slide above is an improvement from the original, but it still contains quite a bit of text, making it challenging to consume in a live setting when your audience is also trying to pay attention to what you are saying. When presenting in person, apply animation to slowly introduce the information bit by bit, helping the audience follow along and avoid being overwhelmed by too much at once.

Also, look for ways to reduce the amount of text. When speaking to the content, you can elaborate on each point verbally rather than putting every word on the slide.

If we consider all of the above steps together, we have turned the eleven disconnected bullets into a more effective slide. The content didn’t change much—the organization did.

For more examples of visual transformations, check out the before-and-afters in our makeover gallery, including how to apply similar techniques to emails and reports. If you want to practice improving a text-heavy slide, tackle this related exercise.

2026-01-21 06:14:16

“What do you do?”

It’s one of the most common questions we ask in casual conversation. It’s also one of the trickiest to answer—especially if your work involves data.

I learned this the hard way early in my career.

At that time, I worked in banking in Credit Risk Management. When someone asked what I did in a social setting, I’d respond with, “I’m a credit risk analyst.”

This was a guaranteed conversation-killer. I don’t think people were trying to be rude. It’s just that most people don’t have a mental model for what that title means. In the absence of understanding, the brain does the most efficient thing it can do: it moves on.

Looking back now, what I was doing was the professional equivalent of showing up to dinner and saying, “I’m a Level 3 wizard in the school of quantitative uncertainty.” It’s accurate—but not super helpful. It wasn’t because the role wasn’t interesting. It was meaningful work. But I didn’t know how to explain it.

Later, at Google, I figured out why.

Many job titles are designed for internal systems—org charts, hiring ladders, compensation bands. They aren’t necessarily intended to help another human being understand what you do. Titles are shorthand for insiders. To everyone else, they can feel like jargon, ambiguity, or worse: an insurmountable wall.

Even today, in the analytical world, common job titles can leave people guessing:

Data Scientist

Decision Scientist

Insights Strategist

Analytics Partner

Business Intelligence Lead

Quantitative Researcher

Data Storyteller

To the people inside these fields, those titles signal important distinctions. Outside those circles, they may all sound like “does something with computers.”

Which brings me to my simple claim: explaining your job is a data storytelling opportunity.

Because it has the same root challenge:

the work is complex

the context is missing

the terms are unfamiliar

the audience doesn’t know what to listen for

So the solution looks familiar, too:

start where the audience is

provide context

make it concrete

explain why it matters

At Google, I worked in People Analytics. I quickly realized that leading with my job title (“People Analyst”) led to the same glazed-over look I’d gotten in banking.

I tried starting with context. Instead of saying “I’m a People Analyst,” I’d say something like: “Google is a very data-driven company. So much so that they use data not just for products and marketing—but also for one of their biggest assets: people. I work with data in HR. I help leaders understand things like what makes a great manager, or what influences whether someone stays at or leaves the organization.”

Same job. Totally different entry point. This mattered: people could track with the story because I wasn’t expecting them to already know what “People Analyst” meant. I was building their understanding from familiar ground: Google, data, decisions. That’s the heart of storytelling—making something relatable and understandable.

Recently, I was tagged in a LinkedIn post by a dad in Belgium. He shared a picture of his daughter reading Daphne Draws Data (the one I used at the outset of this post) and described a moment that made me smile.

He wrote about how his kids described data after reading the book:

“Data is a beautiful princess!”—his two-year-old.

“Data are numbers. And you can use data to help other people.”—his four-year-old.

Then he shared something even more meaningful: the book helped his kids understand what he does for work. Not “computers.” Not “math stuff.” Something closer to the truth: “He makes drawings of data to help people.”

I love this for so many reasons. First, kids are brilliant. Second: that framing is objectively better than most job descriptions I’ve heard from adults. And finally: it’s a reminder that what makes something understandable isn’t the accuracy of the label—it’s the clarity of the explanation. Titles rarely do that, but stories do.

If you’ve ever struggled to explain your job to someone outside your world, you’re not alone. You’re not failing. You’re just bumping up against a very normal communication gap.

Here are three tactics that can help.

1) Start with who you help or what you enable. Instead of a title, lead with a “helping” statement.

“I help leaders make better decisions using data.”

“I help teams understand what’s happening and what to do next.”

“I help organizations communicate complex information clearly.”

This gives people an immediate anchor.

2) Explain through an example. People understand examples far more quickly than definitions. Try a single sentence that starts with: “For example…”

“For example, I analyzed what predicts employee turnover so we could test ways to reduce it.”

“For example, I help teams figure out which steps in a process cause the most drop-off.”

“For example, I redesign charts so executives can quickly see what matters.”

3) Use a “because” sentence. This is one of my favorite patterns because it forces meaning.

“I work with data because it helps people make smarter decisions.”

“I analyze customer behavior because it helps teams build better products.”

“I teach data storytelling because insight isn’t useful if nobody understands it.”

On the surface, you might think this is about making conversation smoother at parties. That’s true, but it’s bigger than that. When we can’t explain our work, we make it harder for others to value it. This can have the undesirable result of making our impact invisible. We hand people a label and hope it does the heavy lifting. When people don’t understand, they disengage. This isn’t solely a social problem—it’s a professional one, too.

So yes: explaining your job is a data storytelling opportunity. And, like most data storytelling scenarios, it gets easier once you stop trying to be comprehensive and start trying to be clear.

Because the goal isn’t to sound impressive; the goal is to be understood.

Related: On the topic of job titles, if “Data Storyteller” makes your ears perk up—we recently opened a role here at SWD. We’ve had an incredible response and will be closing the application window soon. If you’re interested, please apply by January 23rd.

2026-01-11 03:00:00

At storytelling with data, our work is centered on helping people communicate more clearly with data—so they can build understanding, influence decisions, and drive positive change.

As our workshops and teaching continue to reach organizations around the world, we’re looking to add data storytellers to the SWD team.

This role is a great fit for someone who enjoys facilitating and teaching, cares deeply about craft and clarity, and is excited to help others build confidence communicating with data—using an established framework and real-world examples. In addition to workshop delivery, data storytellers contribute to shared teaching content (such as blog posts and videos) that extends our work beyond the classroom.

If this sounds like you—or someone you know—you can learn more and apply, or visit our careers page to find out what it’s like to work at SWD.

As always, thank you for inspiring the work we do and for helping us spread the word!

2026-01-07 22:00:00

One of the most common pitfalls in data visualization is manipulating axis scales in ways that distort the story. A frequent example is the use of logarithmic scales where they are not appropriate.

Let’s walk through a case where this choice can mislead, even if unintentionally.

Below is a scatterplot showing student participation in after-school sports programs across a school district. Each dot represents one school.

The horizontal axis shows the percentage of students participating in competitive (interscholastic) athletics. The vertical axis shows participation in casual (intramural) programs. At first glance, the data set seems to show a clear and linear upward trend: schools with more competitive participation tend to have more casual participation as well.

Let’s highlight a few individual schools for comparison.

Imagine we’re trying to compare schools’ rates of casual participation, which is on the vertical axis. Coos Bay and Jeffersonville (marked and labeled in orange) appear to be quite different in their casual participation rates, while Jeffersonville and Cortez (which is in blue) seem more similar. This impression is shaped by the vertical axis being on a logarithmic scale.

On a standard axis, values increase by equal increments (for example, 10%, 20%, 30%). You can see that on the horizontal axis in the above graph, and in the vast majority of data visualizations we commonly see and use in business.

A logarithmic scale, on the other hand, increases geometrically (such as 1%, 10%, 100%). From a visual perception standpoint, this stretches out gaps between smaller values and compresses larger ones.

That difference matters. It alters how we perceive the distances between points.
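To make the stretching and compressing concrete, the sketch below computes where a value lands along an axis under each scale. The three participation rates are hypothetical and chosen so each is four times the previous one; the function names are illustrative.

```python
import math

def linear_position(value, low, high):
    """Fraction of the axis length at which `value` sits on an arithmetic scale."""
    return (value - low) / (high - low)

def log_position(value, low, high):
    """Fraction of the axis length at which `value` sits on a base-10 log scale."""
    return (math.log10(value) - math.log10(low)) / (math.log10(high) - math.log10(low))

# Hypothetical participation rates (percent) for three made-up schools.
low_school, mid_school, high_school = 1, 4, 16
axis_min, axis_max = 1, 100

for name, fn in (("linear", linear_position), ("log", log_position)):
    gap_small = fn(mid_school, axis_min, axis_max) - fn(low_school, axis_min, axis_max)
    gap_large = fn(high_school, axis_min, axis_max) - fn(mid_school, axis_min, axis_max)
    print(f"{name}: low-to-mid gap {gap_small:.2f}, mid-to-high gap {gap_large:.2f}")
```

On the log axis, the two gaps are drawn the same size because each step is a fourfold increase. On the arithmetic axis, the second gap (12 points) is four times as tall as the first (3 points).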

Here is the exact same data, but with a standard, arithmetic scale on the vertical axis.

Now we can see that Cortez and Jeffersonville are not as close vertically as they initially appeared. Elk City stands out as significantly higher than all others. This version more accurately reflects the data's true distribution.

To be clear, logarithmic scales are not inherently wrong. In fact, they are essential in some situations.

A log scale is appropriate when:

The data spans several orders of magnitude (e.g., from tens to millions)

The values grow exponentially, as with compound interest or viral spread

The focus is on relative rather than absolute change

For example, a chart showing the growth of confirmed cases in the early stages of a pandemic might use a log scale to better compare countries with vastly different totals. In financial data, log scales can help normalize trends across portfolios with different starting values.

However, when working with percentages that fall within a consistent range—such as 0% to 100%—a logarithmic scale can be misleading unless the data has a clear exponential pattern.
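One rough way to check the first criterion is to count how many powers of ten separate the smallest and largest values. The sketch below uses two hypothetical datasets; where to draw the line for "several" orders of magnitude remains a judgment call, so no threshold is built in.

```python
import math

def orders_of_magnitude(values):
    """How many powers of ten separate the smallest and largest positive values."""
    positive = [v for v in values if v > 0]
    return math.log10(max(positive) / min(positive))

# Hypothetical examples: percentages within 0-100 versus counts from tens to millions.
participation_pct = [2, 5, 12, 30, 65]
case_counts = [40, 900, 25_000, 3_000_000]

print(round(orders_of_magnitude(participation_pct), 1))
print(round(orders_of_magnitude(case_counts), 1))
```

The percentages span between one and two orders of magnitude, while the counts span more than four. The range alone does not settle the question, though: the data should also behave multiplicatively for a log scale to be a natural fit.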

So why use a log scale in the original example? Most often, it’s an attempt to make small values near the origin easier to distinguish. But manipulating the axis scale to make those differences more visible can distort the bigger picture.

If small values are important, a better approach is to zoom in intentionally.

In this version, we highlight the lower-left corner of the plot and then show that region in its own focused view.

Now, the differences between Coos Bay, Jeffersonville, and Cortez are clearly visible, without changing the underlying scale. More importantly, we maintain an accurate sense of proportion across the full dataset.
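In data terms, the zoomed view is just a filter: keep the points that fall inside the lower-left window and plot them on their own arithmetic axes. A minimal sketch, assuming invented schools and values (the names and numbers below are placeholders, not the district data):

```python
# Hypothetical (competitive %, casual %) pairs for made-up schools.
schools = {
    "School A": (3, 1),
    "School B": (5, 4),
    "School C": (8, 6),
    "School D": (40, 35),
    "School E": (60, 85),
}

def zoom(points, x_max, y_max):
    """Keep only the points inside the lower-left window [0, x_max] x [0, y_max]."""
    return {name: (x, y) for name, (x, y) in points.items() if x <= x_max and y <= y_max}

inset = zoom(schools, x_max=10, y_max=10)
print(inset)
```

Because both the full view and the inset use equal increments, a gap of 3 points means the same thing in each; only the magnification differs.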

If your data does not have a logarithmic structure, avoid using a log scale as a visual shortcut. It can introduce confusion or mislead the viewer, even if the chart is technically accurate.

When small values matter, consider breaking them out into a secondary chart or zoomed-in view. Use your axes to reflect the nature of the data, not to force a certain look.

In short: let the data guide the scale, not the other way around.