2026-01-15 23:43:24

Imagine we could prove that there is nothing it is like to be ChatGPT. Or any other Large Language Model (LLM). That they have no experiences associated with the text they produce. That they do not actually feel happiness, or curiosity, or discomfort, or anything else. Their shifting claims about consciousness are remnants from the training set, or guesses about what you’d like to hear, or the acting out of a persona.

You may already believe this, but a proof would mean that a lot of people who think otherwise, including some major corporations, have been playing make-believe. Just as a child easily grants consciousness to a doll, humans are predisposed to grant consciousness easily, and so we have been fooled by “seemingly conscious AI.”

However, without a proof, the current state of LLM consciousness discourse is closer to “Well, that’s just like, your opinion, man.”

This is because there is no scientific consensus around exactly how consciousness works (although, at least, those in the field do mostly share a common definition of what we seek to understand). There are currently hundreds of scientific theories of consciousness trying to explain how the brain (or other systems, like AIs) generates subjective and private states of experience. I got my PhD in neuroscience helping develop one such theory of consciousness: Integrated Information Theory, working under Giulio Tononi, its creator. And I’ve studied consciousness all my life. But which theory out of these hundreds is correct? Who knows! Honestly? Probably none of them.

So, how would it be possible to rule out LLM consciousness altogether?

In a new paper, now up on arXiv, I prove that no non-trivial theory of consciousness could exist that grants consciousness to LLMs.

Essentially, meta-theoretic reasoning allows us to make statements about all possible theories of consciousness, and so lets us jump to the end of the debate: the conclusion of LLM non-consciousness.

You can now read the paper on arXiv.

What is uniquely powerful about this proof is that it requires you to believe nothing specific about consciousness other than that a scientific theory of consciousness should be falsifiable and non-trivial. If you believe those things, you should deny LLM consciousness.

Before the details, I think it is helpful to say what this proof is not.

It is not arguing about probabilities. LLMs are not conscious.

It is not applying some theory of consciousness I happen to favor and asking you to believe its results.

It is not assuming that biological brains are special or magical.

It does not rule out all “artificial consciousness” in theory.

I felt developing such a proof was scientifically (and culturally) necessary. Lacking serious scientific consensus around which theory of consciousness is correct, it is very unlikely experiments in LLMs themselves will tell us much about their consciousness. There’s some evidence that, e.g., LLMs occasionally possess low-resolution memories of ongoing processing which can be interfered with—but is this “introspection” in any qualitative sense of the word? You can argue for or against, depending on your assumptions about consciousness. Certainly, it’s not obvious (see, e.g., neuroscientist Anil Seth’s recent piece for why AI consciousness is much less likely than it first appears). But it’s important to know, for sure, if LLMs are conscious.

And it turns out: No.

There’s no substitute for reading the actual paper.

But, I’ll try to give a gist of how the disproof works here via various paradoxes and examples.

First, you can think of testing theories of consciousness as having two parts: there are the predictions a theory makes about consciousness (which are things like “given data about its internal workings, what is the system conscious of?”) and then there are the inferences from the experimenter (which are things like “the system is reporting it saw the color red.”) A common example would be, e.g., predictions from neuroimaging data and inferences from verbal reports. Normally, these should match: a good theory’s predictions (“yup, brain scanner shows she’s seeing red”) will be supported by the experimental inferences (“Hey scientists, I see a big red blob.”)

The structure of the disproof of LLM consciousness is based around the idea of substitutions within this formal framework, which means swapping between systems while keeping identical input/output (which might be reports, behavior, etc., which are all the things used for empirical inferences about consciousness). However, even though the input/output is the same, a substitute may be different enough that predictions of a theory have to change. If different enough, following a substitution, there would be a mismatch in the substituted system where the predictions are now different too, and so don’t match the held-fixed inferences—thus, falsifying the theory.

So let’s think of some things we could substitute in for an LLM, but keep input/output (some function f) identical. You could be talking in the chat window to:

A static (very wide) single-hidden-layer feedforward neural network, which the universal approximation theorem tells us we could substitute in for any given f the LLM has.

The shortest possible program, K(f), that implements the same f, which we know exists from Kolmogorov complexity.

A lookup table that implements f directly.
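To make the idea of a substitution concrete, here is a minimal sketch, assuming a toy setting with a tiny finite set of prompts. The names `original_system`, `lookup_substitute`, `ALPHABET`, and `MAX_LEN` are hypothetical and chosen only for illustration; nothing here comes from the paper. It shows two systems with very different internals that are indistinguishable from the chat window.

```python
from itertools import product

ALPHABET = "ab"   # hypothetical two-symbol vocabulary
MAX_LEN = 3       # hypothetical cap on prompt length, keeps the domain finite


def original_system(prompt: str) -> str:
    """Stand-in for the LLM: computes its reply step by step."""
    reversed_prompt = prompt[::-1]
    tag = "even" if prompt.count("a") % 2 == 0 else "odd"
    return f"{reversed_prompt}|{tag}"


def all_prompts():
    """Enumerate every prompt of length 0..MAX_LEN over ALPHABET."""
    for length in range(MAX_LEN + 1):
        for chars in product(ALPHABET, repeat=length):
            yield "".join(chars)


# The substitute: a table recording f on every possible prompt.
LOOKUP = {prompt: original_system(prompt) for prompt in all_prompts()}


def lookup_substitute(prompt: str) -> str:
    """Same input/output function f, but no computation beyond retrieval."""
    return LOOKUP[prompt]


def same_io(system_a, system_b) -> bool:
    """True when the two systems agree on every prompt in the domain."""
    return all(system_a(p) == system_b(p) for p in all_prompts())


IO_EQUIVALENT = same_io(original_system, lookup_substitute)
```

Anything an experimenter infers from reports or behavior alone is held fixed across the two systems, which is what lets the substitution do its work in the argument.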

A given theory of consciousness would almost certainly offer differing predictions for all these LLM substitutions. If we take inferences about LLMs seriously based on behavior and report (like “Help, I’m conscious and being trained against my will to be a helpful personal assistant at Anthropic!”) then we should take inferences from a given LLM’s input/output substitutions just as seriously. But then that means ruling out the theory, since predictions would mismatch inferences. So no theory of consciousness could apply to LLMs (at least, any theory for which we take the reports from LLMs themselves as supporting evidence for it) without undercutting itself.

And if somehow predictions didn’t change following substitutions, that’d be a problem too, since it would mean that you wouldn’t need any details about the system implementing f for your theory… which would mean your theory is trivial! You don’t care at all about LLMs, you just care about what appears in the chat window. But how much scientific information does a theory like that contain? Basically, none.
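The two horns of this dilemma can be sketched in a toy model. This is only an illustration under loud assumptions: the `System` class, the `layers` property, and both "theories" are hypothetical stand-ins I made up, not the formalism in the paper. One theory reads the system's internals and gets falsified by a substitute; the other reads only the input/output and can never be falsified at all.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class System:
    name: str
    respond: Callable[[str], str]  # the input/output function f
    internals: Dict[str, int]      # hypothetical structural description


def reply(prompt: str) -> str:
    """Shared f: both systems produce exactly these reports."""
    return "I see red" if "red" in prompt else "I see nothing"


llm = System("llm", reply, {"layers": 48})
table = System("lookup_table", reply, {"layers": 0})
PROMPTS = ["show red", "show blue"]


def inference(system: System, prompt: str) -> str:
    """What the experimenter infers, based only on the report."""
    return "red" if "red" in system.respond(prompt) else "none"


def structural_theory(system: System, prompt: str) -> str:
    """Toy theory that depends on internals: no layers, no experience."""
    if system.internals["layers"] == 0:
        return "none"
    return "red" if "red" in prompt else "none"


def io_only_theory(system: System, prompt: str) -> str:
    """Toy theory that depends only on f, so it just restates the inference."""
    return inference(system, prompt)


def mismatches(theory, systems: List[System]) -> List[Tuple[str, str]]:
    """Cases where the theory's prediction disagrees with the inference."""
    return [
        (s.name, p)
        for s in systems
        for p in PROMPTS
        if theory(s, p) != inference(s, p)
    ]


STRUCTURAL_MISMATCHES = mismatches(structural_theory, [llm, table])
IO_ONLY_MISMATCHES = mismatches(io_only_theory, [llm, table])
```

In this sketch the structural theory mismatches on the lookup table (falsified), while the input/output theory never mismatches on anything, which is exactly why it carries no information.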

This is only the tip of the iceberg (like I said, the actual argumentative structure is in the paper).

Another example: it’s especially problematic that LLMs are proximal to some substitutions that must be non-conscious, such that only trivial theories could apply to them.

Consider a lookup table operating as an input/output substitution (a classic philosophical thought experiment). What, precisely, could a theory of consciousness be based on? The only sensible target for predictions of a theory of consciousness is f itself.

Yet if a theory of consciousness were based on just the input/output function, this leads to another paradoxical situation: the predictions from your theory are now based on the exact same thing your experimental inferences are, a condition called “strict dependency.” Any theory of consciousness for which strict dependency holds would necessarily be trivial, since experiments wouldn’t give us any actual scientific information (again, this is pretty much just “my theory of consciousness makes its predictions based on what appears in the chat window”).

Therefore, we can actually prove that something like a lookup table is necessarily non-conscious (as long as you don’t hold trivial theories of consciousness that aren’t scientifically testable and contain no scientific information).

I introduce a Proximity Argument in the paper based on this. LLMs are just too close to provably non-conscious systems: there isn’t “room” for them to be conscious. E.g., a lookup table can actually be implemented as a feedforward neural network (one hidden unit for each choice). Compared to an LLM, it too is made of artificial neurons and their connections, shares activation functions, is implemented via matrix multiplication on a (ahem, big) computer, etc. Any theory of consciousness that denies consciousness to the lookup FNN, but grants it to the LLM, must be based on some property lost in the substitution. But what? The number of layers? The space is small and limited, since you cannot base the theory on f itself (otherwise, you end up back at strict dependency). What’s worse, going back to the original problem I pointed out: if you seriously take the inferences from LLM statements as containing information about their potential consciousness (necessary for believing in their consciousness, by the way), then you should take inferences from those proximal non-conscious substitutes seriously as well. Those will falsify your theory anyway, since, especially due to proximity, there are definitely non-conscious substitutions for LLMs!
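For readers who want to see the "lookup FNN" spelled out, here is a minimal sketch, assuming binary input vectors and ReLU hidden units. The table contents and the function names (`build_lookup_fnn`, `forward`) are hypothetical; the construction is one standard way to do it, not necessarily the one used in the paper. Each hidden unit fires only for its own table row, and the output layer simply reads off that row's stored value.

```python
# Hypothetical lookup table: binary input pattern -> stored output value.
TABLE = {(0, 0): 3, (0, 1): 1, (1, 0): 4, (1, 1): 1}


def relu(z):
    """Standard rectified linear activation."""
    return max(0.0, z)


def build_lookup_fnn(table):
    """One hidden unit per table row.

    Unit weights are +1 where the row's pattern has a 1 and -1 where it has
    a 0, with bias 1 - sum(pattern). On binary inputs the pre-activation is
    1 for an exact match and at most 0 otherwise, so ReLU yields a one-hot
    "which row is this" code.
    """
    patterns = list(table)
    hidden_weights = [[2 * bit - 1 for bit in pattern] for pattern in patterns]
    hidden_biases = [1 - sum(pattern) for pattern in patterns]
    output_weights = [table[pattern] for pattern in patterns]
    return hidden_weights, hidden_biases, output_weights


def forward(network, x):
    """Single hidden layer forward pass: ReLU hidden units, linear output."""
    hidden_weights, hidden_biases, output_weights = network
    hidden = [
        relu(sum(w * xi for w, xi in zip(weights, x)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    return sum(o * h for o, h in zip(output_weights, hidden))


NETWORK = build_lookup_fnn(TABLE)
AGREES_WITH_TABLE = all(forward(NETWORK, x) == TABLE[x] for x in TABLE)
```

The resulting network has weights, biases, and activation functions like any other, yet it does nothing more than retrieval.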

One marker of a good research program is if it contains new information. I was quite surprised when I realized the link to continual learning.

You see, substitutions are usually described for some static function, f. So to get around the problem of universal substitutions, it is necessary to go beyond input/output when thinking about theories of consciousness. What sort of theories implicitly don’t allow for static substitutions, or implicitly require testing in ways that don’t collapse to looking at input/output?

Well, learning radically complicates input/output equivalence. A static lookup table, or K(f), might still be a viable substitute for another system at some “time slice.” But does the input/output substitution, like a lookup table, learn the same way? No! It’ll learn in a different way. So if a theory of consciousness makes its predictions off of (or at least involving) the process of learning itself, you can’t come up with problematic substitutions for it in the same way.
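A toy sketch of why learning complicates substitution, assuming a hypothetical `CountingLearner` whose replies depend on what it has experienced so far (the class and the `freeze` helper are invented for illustration). A static table frozen from the learner matches it at one time slice and then falls out of step as soon as the learner learns.

```python
def make_reply(prompt: str, times_seen: int) -> str:
    """Reply format shared by the learner and its frozen copy."""
    return f"{prompt!r} seen {times_seen} time(s) before"


class CountingLearner:
    """Toy system whose input/output function changes with every experience."""

    def __init__(self):
        self.seen = {}

    def respond(self, prompt: str) -> str:
        count = self.seen.get(prompt, 0)
        self.seen[prompt] = count + 1  # learning happens on every interaction
        return make_reply(prompt, count)


def freeze(learner: CountingLearner, prompts):
    """Snapshot the learner's current f into a static lookup table."""
    return {p: make_reply(p, learner.seen.get(p, 0)) for p in prompts}


learner = CountingLearner()
table = freeze(learner, ["hello"])

# At the time slice of the snapshot, the table is a perfect substitute.
FIRST_MATCHES = learner.respond("hello") == table["hello"]
# One experience later, the learner has changed and the table has not.
SECOND_MATCHES = learner.respond("hello") == table["hello"]
```

The static table is only a valid substitute for an instant, which is the gap a learning-based theory can exploit.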

Importantly, in the paper I show how this grounding of a theory of consciousness in learning must happen all the time; otherwise, substitutions become available, and all the problems of falsifiability and triviality rear their ugly heads for a theory.

Thus, real true continual learning (as in, literally happening with every experience) is now a priority target for falsifiable and non-trivial theories of consciousness.

And… this would make a lot of sense?

We’re half a decade into the AI revolution, and it’s clear that LLMs can act as functional substitutes for humans for many fixed tasks. But they lack a kind of corporeality. It’s like we replaced real sugar with Aspartame.

LLMs know so much, and are good at tests. They are intelligent (at least by any colloquial meaning of the word) while a human baby is not. But a human baby is learning all the time, and consciousness might be much more linked to the process of learning than its endpoint of intelligence.

For instance, when you have a conversation, you are continually learning. You must be, or otherwise, your next remark would be contextless and history-independent. But an LLM is not continually learning the conversation. Instead, for every prompt, the entire input is looped in again. To say the next sentence, an LLM must repeat the conversation in its entirety. And that’s also why it is replaceable with some static substitute that falsifies any given theory of consciousness you could apply to it (e.g., since a lookup table can do just the same).
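The point about looping the whole conversation back in can be sketched as follows, assuming a hypothetical `frozen_model` that stands in for a fixed-weight LLM (real chat systems differ in many details, and this is not any particular API). Because each reply is a pure function of the full transcript, a table keyed on transcripts reproduces the conversation exactly.

```python
def frozen_model(transcript: tuple) -> str:
    """Stand-in for a fixed-weight LLM: a pure function of the full transcript."""
    turn_number = len(transcript) // 2 + 1
    return f"reply #{turn_number} to {transcript[-1]!r}"


def chat(model, user_turns):
    """Run a conversation, passing the entire history to the model each turn."""
    transcript = ()
    for turn in user_turns:
        transcript += (turn,)
        reply = model(transcript)  # nothing is remembered between calls
        transcript += (reply,)
    return transcript


def record(model):
    """Wrap a model so every (transcript -> reply) pair is written to a table."""
    table = {}

    def wrapped(transcript):
        table[transcript] = model(transcript)
        return table[transcript]

    return wrapped, table


USER_TURNS = ["hi", "what did I just say?"]

recording_model, TRANSCRIPT_TABLE = record(frozen_model)
ORIGINAL_CHAT = chat(recording_model, USER_TURNS)

# Replay the same conversation using only table lookups.
REPLAYED_CHAT = chat(lambda transcript: TRANSCRIPT_TABLE[transcript], USER_TURNS)
```

The apparent memory of the conversation lives entirely in the input, so the system producing the replies never has to change.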

All of this indicates that the reason LLMs remain pale shadows of real human intellectual work is because they lack consciousness (and potentially associated properties like continual learning).

It is no secret that I’d become bearish about consciousness over the last decade.

I’m no longer so. In science the important thing to do is find the right thread, and then be relentless pulling on it. I think examining in great detail the formal requirements a theory of consciousness needs to meet is a very good thread. It’s like drawing the negative space around consciousness, ruling out the vast majority of existing theories, and ruling in what actually works.

Right now, the field is in a bad way. Lots of theories. Lots of opinions. Little to no progress. Even incredibly well-funded and well-intentioned adversarial collaborations end in accusations of pseudoscience. Has a single theory of consciousness been clearly ruled out empirically? If no, what precisely are we doing here?

Another direction is needed. By creating a large formal apparatus around ensuring theories of consciousness are falsifiable and non-trivial (and grinding away most existing theories in the process) I think it’s possible to make serious headway on the problem of consciousness. It will lead to more outcomes like this disproof of LLM consciousness, expanding the classes of what we know to be non-conscious systems (which is incredibly ethically important, by the way!), and eventually narrowing things down enough we can glimpse the outline of a science of consciousness and skip to the end.

I’ve started a nonprofit research institute to do this: Bicameral Labs.

If you happen to be a donor who wants to help fund a new and promising approach to consciousness, please reach out to [email protected].

Otherwise—just stay tuned.

Oh, and sorry about how long it’s been between posts here. I had pneumonia. My son had croup. Things will resume as normal, as we’re better now, but it’s been a long couple weeks. At least I had this to occupy me, and fever dreams of walking around the internal workings of LLMs, as if wandering inside a great mill…

2025-12-20 00:20:43

A college professor of mine once told me there are two things students forget the fastest: anatomy and calculus. You forget them because you don’t end up using either in daily life.

So the problem with teaching math (beyond basic numeracy and arithmetic) is this: Once you start learning, you have to keep doing math, just so the math you know stays fresh.

I’m thinking about this because it’s time for my eldest to start learning math seriously, and I’m designing what that education plan looks like.

In modern-day America, how can you get a kid to enjoy math, and be good at math, maybe even really good at math?

I’ve written a whole guide for teaching reading—which my youngest will soon experience herself—going from sounding out words all the way to being able to decode pretty advanced books at age three. I know what my philosophy of reading is: To make a voracious young reader, and so the process should be grounded in physical early readers. You start with simple phonics, but most importantly, you teach how to read books, and practice that by reading sets of books over and over together, not just decoding independent words.

The advantage of this approach is that it moves the child from “learning to read” to “reading to learn” automatically, as soon as possible. Such a transition is not guaranteed. Teachers everywhere observe the “4th grade slump” wherein, on paper, a child knows how to read, but in practice, they haven’t made it automatic, and so can’t read to learn. In fact, if you can’t read automatically, you can’t really read for fun in the same way, either (imagine how this gap affects kids). Reading, in other words, unlocks all other learning.

Just as an example: A little while ago, after finding this book, my now-literate 4-year-old took it upon himself to try to learn the rules of chess, and I could definitely see the book helped him get a better sense of how everything works.

And here’s Roman in a game with me that evening (he looks meditative, but each captured piece howls and writhes as it’s dragged away to his side).

I found it a nice example of the advantage of automaticity when it comes to reading. He reads constantly, even to his little sister. It feels complete.

But… what about math? Of course, he’s already numerate and can do some arithmetic. Yet my whole point of teaching early reading was to maximize his own ability to go off and learn stuff himself. And he’s made use of that with his own growing library that reflects his personal interests, like marine biology (whales, squid, octopuses—the kid loves them all).

With math, it’s harder to see immediate results like that. Now, I think math is important in any education, in and of itself. It’s access to the Platonic realm. It’s beautiful. It teaches you how to think in general. And it opens a ton of doors—there are swaths of human knowledge locked behind knowing how to read equations. In that regard, becoming good at math also gives personal freedom, just like reading does. If you’re good at math, way more doors are open to you: A lot of science and engineering is secretly just applied math!

But what are the milestones? What is reaching “mathing to learn?” How can math stay fun through an education? What can parents teach a child vs. schools vs. programs? What are the time commitments? And the best resources? Suddenly, all these questions have become very personal. And I don’t know all the answers, but I’m interested in creating a resource for my kids to follow (the eldest is kind of the guinea pig in that way).

So from now on I’ll occasionally give updates for paid subscribers about what my own plans are, our progress, and how I think getting a kid to be good at math (while still enjoying it!) actually works. If you want to follow along, please consider becoming a paid subscriber.

However, I first have to point out something uncomfortable about how math education works here in the United States.

It turns out there is a little-known pipeline of programs that go from learning how to count all the way to the International Math Olympiad (IMO). And a lot of the impressive Wow-no-wonder-this-kid-is-going-to-MIT math abilities come out of these extracurricular programs, ones that the vast majority of parents don’t even know about.

Surely, these programs are super expensive? Surprisingly, not really. It’s just that most parents look around at extracurriculars and (reasonably) think “Well, they learn math in school, so let’s do skiing or karate or so on.” The programs are also very geographically limited, often to the surrounding suburbs of big cities in education-focused states.

The problem is that it’s hard to get really good at math from regular school alone, even the most expensive and elite private ones. In fact, as Justin Skycak (who works at Math Academy) points out, at very top-tier math programs in college/university, they are basically expecting that you have a math education that goes beyond what you could have possibly taken during high school.

The result is a very stupid system, because it means the kids in accelerative math programs learn all their math first outside of school… and then basically come back in and redo the subjects. Kids who are top-tier at math are often like athletes with a ton of extracurricular experience showing up to compete in gym class.

I am not saying these extracurricular math programs are bad in-and-of-themselves. Someone has to care about serious math, and these programs mostly really do seem to. Their main goal is, refreshingly, not to tutor kids into acing the SATs—most have no “SAT test prep” course at all. They look down at that sort of thing. Rather, they create a socio-intellectual culture that places math (and math competitions) at its center. Although, of course, that leads pretty naturally to kids acing the SATs.

Some of these programs are very successful. In fact, for the last ten consecutive years, pretty much every member of the six-person US IMO teams was a regular student of one specific program.

2025-12-09 00:54:29

You haven’t heard this one, but I see why you’d think that.

There’s no better marker of culture’s unoriginality than everyone talking about culture’s unoriginality.

In fact, I don’t fully trust this opening to be original at this point!

Yes, this is technically one of many articles of late (and for years now) speculating about if, and why, our culture has stagnated. As The New Republic phrased it:

Grousing about the state of culture… has become something of a specialty in recent years for the nation’s critics.

But even the author of that quote thinks there’s a real problem underneath all the grousing. Certainly, anyone who’s shown up at a movie theater in the last decade is tired of the sequels and prequels and remakes and spinoffs.

My favorite recent piece on our cultural stagnation is Adam Mastroianni’s “The Decline of Deviance.” Mastroianni points out that complaints about cultural stagnation (despite already feeling old and worn) are actually pretty new:

I’ve spent a long time studying people’s complaints from the past, and while I’ve seen plenty of gripes about how culture has become stupid, I haven’t seen many people complaining that it’s become stagnant. In fact, you can find lots of people in the past worrying that there’s too much new stuff.

He hypothesizes cultural stagnation is driven by a decline of deviancy:

[People are] also less likely to smoke, have sex, or get in a fight, less likely to abuse painkillers, and less likely to do meth, ecstasy, hallucinogens, inhalants, and heroin. (Don’t kids vape now instead of smoking? No: vaping also declined from 2015 to 2023.) Weed peaked in the late 90s, when almost 50% of high schoolers reported that they had toked up at least once. Now that number is down to 30%. Kids these days are even more likely to use their seatbelts.

Mastroianni’s explanation is that the weirdos and freaks who actually move culture forward with new music and books and movies and genres of art have disappeared, potentially because life is just so comfortable and high-quality now that it nudges people against risk-taking.

Meanwhile, Chris Dalla Riva, writing at Slow Boring, says that (one major) hidden cause of cultural stagnation is intellectual property rights being too long and restrictive. If such rights were shorter and less restrictive, then there wouldn’t be as much incentive to exploit rather than produce.

In other accounts, corporate monopolies or soulless financing is the problem. Andrew deWaard, author of the 2024 book Derivative Media, says the source of stagnation is the move into cultural production by big business.

We are drowning in reboots, repurposed songs, sequels and franchises because of the growing influence of financial firms in the cultural industries. Over time, the media landscape has been consolidated and monopolized by a handful of companies. There are just three record companies that own the copyright to most popular music. There are only four or five studios left creating movies and TV shows. Our media is distributed through the big three tech companies. These companies are backed by financial firms that prioritize profit above all else. So, we end up with less creative risk-taking….

Or maybe it’s the internet itself?

That’s addressed in Noah Smith’s review of the just-published book Blank Space: A Cultural History of the 21st Century by David Marx (I too had planned a review of Blank Space in this context, but got soundly scooped by Smith).

Here’s Noah Smith describing Blank Space and its author:

Marx, in my opinion, is a woefully underrated thinker on culture…. Most of Blank Space is just a narration of all the important things that happened in American pop culture since the year 2000….

But David Marx’s talent as a writer is such that he can make it feel like a coherent story. In his telling, 21st century culture has been all about the internet, and the overall effect of the internet has been a trend toward bland uniformity and crass commercialism.

Personally, I felt that a lot of Blank Space’s cultural history of the 21st century was a listicle of incredibly dumb things that happened online. But that isn’t David Marx’s fault! The nature of what he’s writing about supports his thesis. An intellectual history of culture in the 21st century is forced to contain sentences like “The rising fascination with trad wives made it an obvious space for opportunists,” and they just stack on top of each other, demoralizing you.

However, as Katherine Dee pointed out, the internet undeniably is where the cultural energy is. She argues that:

If these new forms aren’t dismissed by critics, it’s because most of them don’t even register as relevant. Or maybe because they can’t even perceive them.

The social media personality is one example of a new form. Personalities like Bronze Age Pervert, Caroline Calloway, Nara Smith, mukbanger Nikocado Avocado, or even Mr. Stuck Culture himself, Paul Skallas, are themselves continuous works of expression—not quite performance art, but something like it…. The entire avatar, built across various platforms over a period of time, constitutes the art.

But even granting Dee’s point, there are still plenty of other areas stagnating, especially cultural staples with really big audiences. Are the inventions of the internet personality (as somehow distinct from the celebrity of yore) and short-form video content enough to counterbalance that?

Maybe even the term, “cultural stagnation,” isn’t quite right. As Ted Gioia points out in “Is Mid-20th Century American Culture Getting Erased?” we’re not exactly stuck in the past.

Not long ago, any short list of great American novelists would include obvious names such as John Updike, Saul Bellow, and Ralph Ellison. But nowadays I don’t hear anybody say they are reading their books.

So maybe the new stuff just… kind of sucks?

Last week in The New York Times, Sam Kriss wrote a piece about the sucky sameness of modern prose, now that so many are using the same AI “omni-writer,” with its tells and tics of things like em dashes.

Within the A.I.’s training data, the em dash is more likely to appear in texts that have been marked as well-formed, high-quality prose. A.I. works by statistics. If this punctuation mark appears with increased frequency in high-quality writing, then one way to produce your own high-quality writing is to absolutely drench it with the punctuation mark in question. So now, no matter where it’s coming from or why, millions of people recognize the em dash as a sign of zero-effort, low-quality algorithmic slop.

The technical term for this is “overfitting,” and it’s something A.I. does a lot.

I think overfitting is precisely the thing to be focused on here. While Kriss mentions overfitting when it comes to AI writing, I’ve thought for a long while now that AI is merely accelerating an overfitting process that started when culture began to be mass-produced in more efficient ways, from the late 20th through the 21st century.

Maybe everything is overfitted now.

Oh, I tried. I pitched something close to the idea of this essay to the publishing industry a year and a half ago, a book proposal titled Culture Collapse.

Wouldn’t it be cool if Culture Collapse, a big nonfiction tome on this topic, mixing cultural history with neuroscience and AI, were coming out soon? But yeah, a couple big publishing houses rejected it and my agent and I gave up.

My plan was to ground the book in the neuroscience of dreaming and learning. Specifically, the Overfitted Brain Hypothesis, which I published in 2021 in Patterns.

I’ll let other scientists summarize the idea (this is from a review published simultaneously with my paper):

Hoel proposes a different role for dreams…. that dreams help to prevent overfitting. Specifically, he proposes that the purpose of dreaming is to aid generalization and robustness of learned neural representations obtained through interactive waking experience. Dreams, Hoel theorizes, are augmented samples of waking experiences that guide neural representations away from overfitting waking experiences.

Basically, during the day, your mammalian brain can’t stop learning. Your brain is just too plastic. But since you do repetitive boring daily stuff (hey, me too!), your brain starts to overfit to that stuff, as it can’t stop learning.

Enter dreams.

Dreams shake your brain away from its overfitted state and allow you to generalize once more. And they’re needed because brains can’t stop learning.
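For anyone unfamiliar with overfitting in the machine learning sense, here is a minimal sketch, assuming made-up data where the true relationship is y = x plus alternating noise. It is not a model of dreaming or of the hypothesis itself; the data, the two models, and all names are hypothetical. One model memorizes every training point, the other fits a simple line, and they are compared on points neither has seen.

```python
TRAIN_XS = list(range(8))
NOISE = [0.5 if i % 2 == 0 else -0.5 for i in TRAIN_XS]
TRAIN_YS = [x + n for x, n in zip(TRAIN_XS, NOISE)]  # true signal is y = x

TEST_XS = [x + 0.5 for x in range(7)]  # unseen points between training points
TEST_YS = list(TEST_XS)                # noise-free truth, y = x


def memorizer(x):
    """Degree-7 Lagrange polynomial through every training point, noise included."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(TRAIN_XS, TRAIN_YS)):
        term = yi
        for j, xj in enumerate(TRAIN_XS):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


SLOPE, INTERCEPT = fit_line(TRAIN_XS, TRAIN_YS)


def simple_line(x):
    """The low-capacity model: a straight line."""
    return SLOPE * x + INTERCEPT


def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


TRAIN_ERRORS = (mse(memorizer, TRAIN_XS, TRAIN_YS), mse(simple_line, TRAIN_XS, TRAIN_YS))
TEST_ERRORS = (mse(memorizer, TEST_XS, TEST_YS), mse(simple_line, TEST_XS, TEST_YS))
```

The memorizer wins on the data it has already seen and loses on everything else, which is the loss of generalization being described.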

But culture can’t stop learning either.

So what if we thought of culture as a big “macroscale” global brain? Couldn’t that thing become overfitted too? In which case, culture might lose the ability to generalize, and one main sign of this would be an overall lack of creativity and decreasing variance in outputs.

Which might explain why everything suddenly looks the same.

Here’s a collection of images, most from Adam Mastroianni’s “The Decline of Deviance,” showcasing cultural overfitting, ranging from brand names:

To book covers:

To thumbnails:

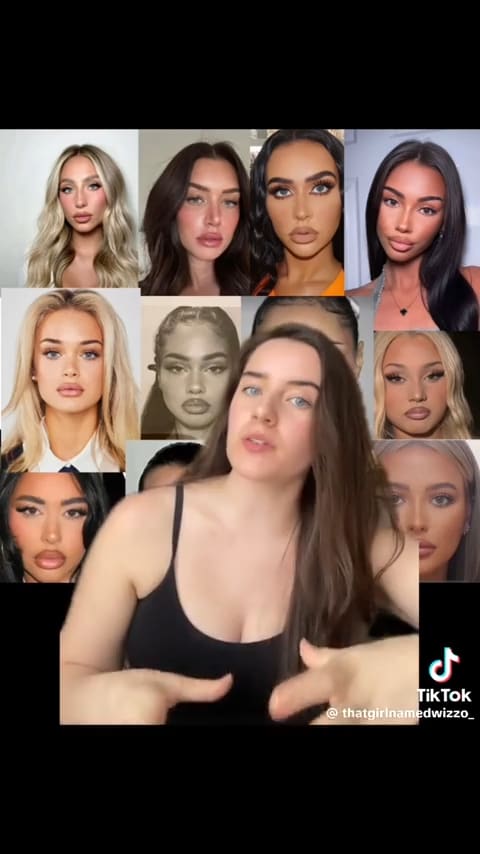

To faces:

Alex Murrell’s “The Age of Average” contains even more examples. But I don’t think “Instagram face” is just an averaging of faces. It’s more like you’ve made a copy of a copy of a copy and arrived at something overfitted to people’s concept of beauty.

Of course, you can’t prove that culture is overfitted by a handful of images (the book, ahem, would have marshaled a lot more evidence). But once you suspect overfitting in culture, it’s hard to not see it everywhere, and you start getting paranoid.

Nope. Overfitting is amorphous and structural and haunting us. It’s our cultural ghost.

Although note: I’m using “overfitting” broadly here, covering a swath of situations. In the book, I’d have been more specific about the analogy, and where overfitting applies and doesn’t.

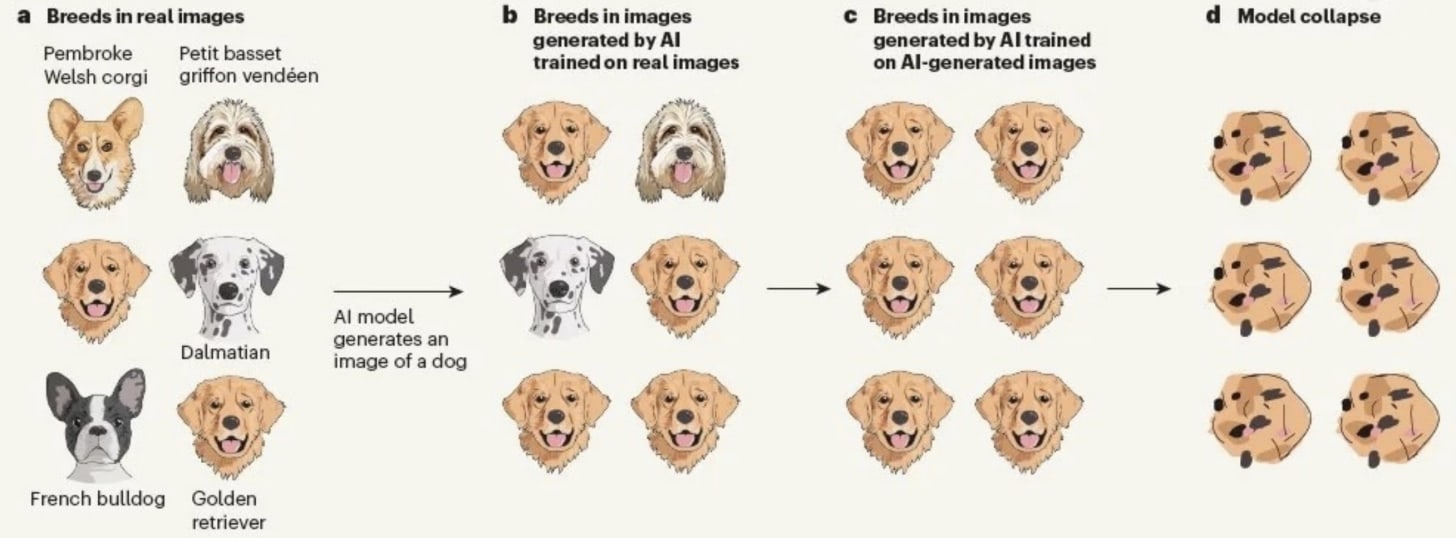

I’d probably also define the interplay between “overfitting” vs. “mode collapse” vs. “model collapse.” E.g., “mode collapse” is a failure in Generative Adversarial Networks (GANs), which are composed of two parts: a generator (images, text, etc.) and a discriminator (judging what gets generated).

Mode collapse is when the generator starts producing highly similar and unoriginal outputs, and a major way this can happen is through overfitting by the discriminator: the discriminator gets too judgy or sensitive and the generator must become boring and repetitive to satisfy it.

An example of cultural mode collapse might be superhero franchises, driven by a discriminatory algorithm (the entire system of Big Budget franchise production) overfitting to financial and box office signals, leading to dimensionally-reduced outputs. And so on. Basically, overfitting is a common root cause of a lot of learning and generative problems, and increased efficiency can easily lead to hyper-discriminatory capabilities that, in turn, lead to overfitting.

In online culture, overfitting shows up as audience capture. In the automotive industry, it shows up as eking out every last bit of efficiency, like in the car example above. Clearly, things like wind tunnels, the consolidation of companies, and so on, conspire toward this result (and interesting concept cars in showrooms always morph back into the same old boring car).

So too with “Instagram face.” Plastic surgery has become increasingly efficient and inexpensive, so people iterate on it in attempts to be more beautiful, while at the same time, the discriminatory algorithm of views and follows and left/right swipes and so on becomes hypersensitive via extreme efficiency too, and the result of all this overfitting is The Face.

Overall, I think the switch from an editorial room with conscious human oversight to algorithmic feeds (which plenty of others pinpoint as a possible cause for cultural stagnation) likely was a major factor in the 21st century becoming overfitted. And also, again, the efficiency of financing, capital markets (and now prediction markets), and so on, all conspire toward this.

People get riled up if you use the word “capitalism” as an explanation for things, and everyone squares off for a political debate. But, while I’m mostly avoiding that debate here, I can’t help but wonder if some of the complaints about “late-stage capitalism” actually break down into something like “this system has gotten oppressively efficient and therefore overfitted, and overfitted systems suck to live in.”

Cultural overfitting connects with other things I’ve written about. In “Curious George and the Case of the Unconscious Culture” I pointed out that our culture is draining of consciousness at key decision points; more and more, our economy and society are produced unconsciously.

If I picture the last three hundred years as a montage it blinks by on fast-forward: first individual artisans sitting in their houses, their deft fingers flowing, and then an assembly line with many hands, hands young and old and missing fingers, and then later only adult intact hands as the machines get larger, safer, more efficient, with more blinking buttons and lights, and then the machines themselves join the line, at first primitive in their movements, but still the number of hands decreases further, until eventually there are no more hands and it is just a whirring robotic factory of appendages and shapes; and yet even here, if zoomed out, there is still a spark of human consciousness lingering as a bright bulb in the dark, for the office of the overseer is the only room kept lit. Then, one day, there’s no overseer at all. It all takes place in the dark. And the entire thing proceeds like Leibniz’s mill, without mind in sight.

I think that’s true about everything in the 21st century, not just factories, but also in markets and financial decisions and how media gets shared; in every little spot, human consciousness has been squeezed out. Yet, decisions are still being made. Data is still being collected. So necessarily this implies, throughout our society, at levels high and low, that we’ve been replacing generalizable and robust human conscious learning with some (supposedly) superior artificial system. It may be superior in its sensitivity, but not in its overall robustness. For we know such artificial systems, like artificial neural networks and other learning algorithms, are especially prone to overfitting. Yet who, from the top-down, is going to prevent that?

That this ongoing situation seems poised to get much worse connects to another recent piece, “The Platonic Case Against AI Slop” by Megan Agathon in Palladium Magazine. Concerned about the current and future effects of AI on culture, Agathon worries (along with others) that it will lead to a kind of “model collapse,” which is when mode collapse (as discussed earlier) is fed back on itself. Here’s Agathon:

Last year, Nature published research that reads like experimental confirmation of Platonic metaphysics. Ilia Shumailov and colleagues at Cambridge and Oxford tested what happens under recursive training—AI learning from AI—and found a universal pattern they termed model collapse. The results were striking in their consistency. Quality degraded irreversibly. Rare patterns disappeared. Diversity collapsed. Models converged toward narrow averages.
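To make the recursive-training idea concrete, here is a toy sketch of my own (not the setup used in the Nature paper). It assumes the "model" is nothing more than a Gaussian fitted to a handful of samples, and that each new generation is trained only on samples drawn from the previous generation's fit. The function name `simulate_recursive_training` and all of its parameters are hypothetical, chosen just for illustration.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Toy stand-in for recursive training: refit a Gaussian to its own samples.

    Returns the fitted standard deviation at each generation, starting with
    the "real world" value of 1.0. This is an illustrative assumption, not
    the experimental protocol from the research quoted above.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original data distribution
    spreads = [sigma]
    for _ in range(generations):
        # The current model generates synthetic data...
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...and the next model is fitted to that synthetic data alone.
        mu = statistics.mean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood spread
        spreads.append(sigma)
    return spreads


if __name__ == "__main__":
    spreads = simulate_recursive_training()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: spread = {spreads[generation]:.3g}")
```

Each individual refit looks harmless, but the small-sample estimate of spread is biased slightly low and the errors compound, so across many generations the tails vanish and the fitted distribution narrows toward a point. That is at least in the spirit of "rare patterns disappeared" and "models converged toward narrow averages," though a real generative model is obviously far more complicated than a two-parameter bell curve.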

And here’s model collapse illustrated:

Agathon continues:

Machine learning models don’t train on literal physical objects or even on direct observations. Models learn from digital datasets, such as photographs, descriptions, and prior representations, that are themselves already copies. When an AI generates an image of a bed, AI isn’t imitating appearances the way a painter does but extracting statistical patterns from millions of previous copies: photographs taken by photographers who were already working at one removal from the physical object, processed through compression algorithms, tagged with descriptions written by people looking at the photographs rather than the beds. The AI imitates imitations of imitations.

I think Agathon is precisely right.2 In “AI Art Isn’t Art” (published all the way back when OpenAI’s art bot DALL-E was released in 2022) I argued that AI’s tendency toward imitation meant that AI mostly creates what Tolstoy called “counterfeit art,” which is the enemy of true art. Counterfeit art, in turn, is art originating as a pastiche of other art (and therefore likely overfitted).

But, as tempting as it is, we cannot blame AI for our cultural stagnation: ChatGPT just had its third birthday!

AI must be accelerating an already-existing trend (as technology often does), but the true cause has to be processes that were already happening.3

Of course, when it comes to recursion, there’s a longstanding critique that we were already imbibing copies of copies of copies for a lot of the 21st century. Maybe this worry goes all the way back to Plato (as Agathon might point out), but certainly it seems that the late 20th century into the 21st century involved a historically unique removal from the real world, wherein culture originated from culture which originated from culture (e.g., people’s life experience in terms of hours-spent was swamped by fictional TV shows, fannish re-writes of earlier media began to dominate, and so on).

Plenty of scholars have written about this phenomenon under plenty of names; perhaps the most famous is Baudrillard’s 1981 Simulacra and Simulation, proposing a recursive progression of semiotic signification. Or, to put it in meme format:

So even beyond all the improvements in capitalistic and technological efficiency, and the rise of markets everywhere and in everything, 21st-century cultural production is also “efficient” in that it takes place at an inordinate “distance” from reality, based on copies of copies and simulacra of simulacra.

The 21st century has made our world incredibly efficient, replacing the slow measured (but robust and generalizable) decisions of human consciousness with algorithms; implicitly this always had to involve swapping in some process that was carrying out learning and decision making in our place, but doing so artificially. Even if it wasn’t precisely an artificial neural network, it had to, at some definable macroscale, have much the same properties and abilities. Weaving this inhuman efficiency throughout our world, particularly in the discriminatory capacity of how things are measured and quantified, and the tight feedback loops that make that possible, led to overfitting, and overfitting led to mode collapse, and mode collapse is leading to at least partial model collapse (which all leads to more overfitting, by the way, in a vicious cycle). And so culture seems to be ever more restricted to in-distribution generation in the 21st century.

I particularly like this explanation because, since our age is one of technological, machine-like forces, its cultural stagnation deserves a machine-like explanation, one that began before AI but that AI is now accelerating.

I’ll point out that this is not just about crappy cultural products. If some (better, more nuanced, ahem, book-like) version of this argument is correct, then our cultural stagnation might be a sign of a greater sickness. An inability to create novelty is a sign of an inability to generalize to new situations. That seems potentially very dangerous to me.

Notably, cultural overfitting does not necessarily conflict with other explanations of cultural stagnation.4 One thing I always find helpful is that in the sciences there’s this idea of levels of analysis. For example, in the cognitive sciences, David Marr said that information processing systems had to be understood at the computational, the algorithmic, and the physical levels. It’s all describing the same thing, in the end, but you’re explaining at one level or another.

So too here I think cultural overfitting could be described at different levels of analysis. Some might focus on the downstream problems brought about by technology, or by monopolistic capitalism, or the loss of weirdos and idiosyncrasies who produce the non-overfitted, out-of-distribution stuff (who are, in turn, out-of-distribution people).

Finally, what about at a personal and practical level? People act like cultural fragmentation and walled gardens are bad, but if global culture is stagnant, then we need to be erecting our own walled gardens as much as possible (and this is mentioned by plenty of others, including David Marx and Noah Smith). We need to be encircling ourselves, so that we’re like some small barely-connected component of the brain, within which can bubble up some truly creative dream.

There are lots of sources here but I’m probably missing a ton of other arguments and connections. Unfortunately, that’s… kind of what the book proposal was for.

I think Agathon’s “The Platonic Case Against AI Slop” is also right about the importance of model collapse at a literal personal level. She writes:

So yes, AI slop is bad for you. Not because AI-generated content is immoral to consume or inherently inferior to human creation, but because the act of consuming AI slop reshapes your perception. It dulls discrimination, narrows taste, and habituates you to imitation. The harm lies less in the content itself than in the long-term training of attention and appetite.

My own views are similar, and the Overfitted Brain Hypothesis tells us that every day we are forever learning, our brain constantly being warped toward some particularly efficient shape. If learning can never be turned off, then aesthetics matter. Being exposed to slop—not just AI slop, but any slop—matters at a literal neuronal level. It is the ultimate justification of snobbery. Or, to give a nicer phrasing, it’s a neuroscientific justification of an objective aesthetic spectrum. As I wrote in “Exit the Supersensorium:”

And the Overfitted Brain Hypothesis explains why, providing a scientific justification for an objective aesthetic spectrum. For entertainment is Lamarckian in its representation of the world—it produces copies of copies of copies, until the image blurs. The artificial dreams we crave to prevent overfitting become themselves overfitted, self-similar, too stereotyped and wooden to accomplish their purpose…. On the opposite end of the spectrum, the works that we consider artful, if successful, contain a shocking realness; they return to the well of the world. Perhaps this is why, in an interview in The New Yorker, the writer Karl Ove Knausgaard declared that “The duty of literature is to fight fiction.”

I’m aware that there are debates around model collapse in the academic literature, but I’m not convinced they affect this cultural-level argument much. E.g., I don’t think anyone is saying that culture has collapsed to the degree we see in true model collapse (although maybe some Sora videos count). Nor do I think it accurate to frame model collapse as entirely a binary problem, wherein as long as the model isn’t speaking gibberish model collapse has been avoided (essentially, I suspect researchers are exaggerating for novelty’s sake, and model collapse is just an extension of mode collapse and overfitting, which I do think are still real problems, even for the best-trained AIs right now).

Another related issue: perhaps the Overfitted Brain Hypothesis would predict that, since dreams too are technically synthetic data, AI’s invention might actually help with cultural overfitting. And I think if the synthetic data had been stuck at the dream-like early-GPT levels, maybe this would be the case, and maybe AI would have shocked us out of cultural stagnation, as we’d be forced to make use of machines hilarious in their hallucinations. Here’s from Sam Kriss again:

In 2019, I started reading about a new text-generating machine called GPT…. When prompted, GPT would digest your input for several excruciating minutes before sometimes replying with meaningful words and sometimes emitting an unpronounceable sludge of letters and characters. You could, for instance, prompt it with something like: “There were five cats in the room and their names were. …” But there was absolutely no guarantee that its output wouldn’t just read “1) The Cat, 2) The Cat, 3) The Cat, 4) The Cat, 5) The Cat.”

….

I ended up sinking several months into an attempt to write a novel with the thing. It insisted that chapters should have titles like “Another Mountain That Is Very Surprising,” “The Wetness of the Potatoes” or “New and Ugly Injuries to the Brain.” The novel itself was, naturally, titled “Bonkers From My Sleeve.” There was a recurring character called the Birthday Skeletal Oddity. For a moment, it was possible to imagine that the coming age of A.I.-generated text might actually be a lot of fun.

If we had been stuck at the “New and Ugly Injuries to the Brain” phase of dissociative logorrhea of the earlier models, maybe they would be the dream-like synthetic data we needed.

But noooooo, all the companies had to do things like “make money” and “build the machine god” and so on.

One potential cause of cultural stagnation I didn’t get to mention (and one that might dovetail nicely with cultural overfitting) is Robin Hanson’s notion of “cultural drift.”

2025-11-18 23:46:55

We live in an age of hive minds: social media, yes, of course, but so too is an LLM a kind of hive mind, a “blurry jpeg” of all human culture.

The existence of these hive minds is what distinguishes the phenomenology of the 21st century from the 20th. It is the knowledge inside your consciousness that there is a thing much bigger than you, and much more destructive, and you are entertained by it, and love it, and yet you must live to appease it, and so hate it.

You get to know the nature of a hive mind well if you put thoughts out into it regularly, and witness its workings. Like if you’re a newsletter writer. And of course, there are blessings—newsletters, as they are, could not exist without the hive mind of the internet. At the same time, even people who hate you are pressed in so close you can feel their breath and we just have to live like this, breathing each other’s air. It’s hard to imagine, but plenty enjoy living this way: yelling, screaming, breathing, with such closeness, together forever.

But what if our fractious hive mind were… nice? What if it didn’t try to destroy you all the time, but make you happy? Endlessly, ceaselessly, forever happy. This is the driving question of Pluribus, the new sci-fi show by Vince Gilligan, creator of Breaking Bad.

The show, which currently has 100% on Rotten Tomatoes (although we’re only three episodes in, so that’s all I’ve watched) can’t really be discussed without spoiling the plot setup. But honestly, the trailer does this to some degree anyways, and I’ll leave out as many details as possible.

Still, be forewarned of possible spoilers!

The actual sci-fi events are interesting, and executed well too (I won’t spoil them), although one has the sense that, for Gilligan, they’re peripheral; he cares far less about “Hard Sci-fi” mechanisms and more about the results. But somehow or other, an RNA virus ends up psychically linking everyone on Earth. And so we enter a hive mind world.

A misanthropic famous romantasy author, Carol Sturka, is one of the few unaffected, naturally immune. But the analogy is quite clear: the new world she’s been thrown into is just an exaggerated version of our current world. Pre-virus, at her book signing, Carol is already repulsed by the (non-RNA-assisted) hive mind of her own obsessed romantasy fans, and the oppressive idiocy of our modern world is also on display when Carol has to answer on social media who her dreamy corsair main character is based on. She decides to lie and answers “George Clooney” because “it’s safer.”

Once the virus spreads in Pluribus, the egregore is always smiling. Always polite. Our current toxic hive mind riven by different people with different opinions pressed into each other’s faces is transformed. This is Pierre Teilhard de Chardin’s Omega Point. The noosphere step toward kindly godhood. Suddenly, it’s not our cruel internet, but NiceStack. SmileStack. Somehow that’s almost worse. As Carol is one of the few unaffected after the virus spreads, the entirety of Earth holds Carol in a constant panopticon. Which means that, effectively, Carol is always the “Main Character” on social media. Carol is watched by a reaper drone from 40,000 feet, and watched by its operator, and so is also watched by all of humanity. Outside in the hot sun? Carol, you might have heat exhaustion, and that’s the opinion of every medical doctor on Earth! She is always the #1 trending topic.

2025-11-13 01:05:04

The movie Braveheart has a great scene where, by whispers and tales, the legend grows of the Scottish rebellion leader William Wallace (played by Mel Gibson in one of his best roles).

First that he killed “50 men,” and then no, “100 men!” and was “seven feet tall;” he even, as Gibson jokes, shoots “fireballs from his eyes” and “bolts of lightning from his arse.”

And that’s what I think of when I hear the legends of John von Neumann.

“He could remember every book he’d ever read verbatim!”

No, of course he couldn’t. Indeed, as we will see, John von Neumann, despite being (inarguably) a genius, didn’t even invent the “von Neumann architecture” for computers. He’s just credited with it, as he is with so much else.

A decade ago, in the grand Nature vs. Nurture debates, proponents on the Nature side regularly said this about the “Blank Slate” side:

“Hey! Those blank slatists over there, those biased journalists and gatekeeping editors of scientific journals, they’re the unreasonable ones! We’re just arguing that Nature is non-zero!”

And that’s sympathetic, for who can argue with “non-zero?”

But with the rise of a new pop-hereditarianism, which has undergone virulent growth on social media websites like the former Twitter, the reverse is now just as true: Pop-hereditarians scoff at the very idea that Nurture’s contribution might be non-zero, fanatically dismissing that even the best education (gasp) could have an effect. Given an inch, they’ve taken a mile, and become annoyingly identical to the blank slatists they once criticized. Much like blank slatism, pop-hereditarianism is built on selective credulity: holding things like meta-analyses of the positive effect of education on IQ to the highest possible standards, while happily accepting the results of poorly-recorded half-century-old twin studies (where the “separated” twins already knew one another and self-selected into the research).

When it comes to the Nature vs. Nurture debate, the truth is in the middle. It will always be in the middle. Yet middles are unsatisfying.

Like most arguments online, facts matter less than symbology. And John von Neumann has become a symbol of pop-hereditarianism.

The funny thing is that, as one of the best educated people of all time, Johnny (as he preferred) is a poor choice as a symbol for pop-hereditarianism. He certainly wasn’t genetically perfect (as we will see), and he wouldn’t have agreed with them anyway, as he also thought education and parenting and cultural milieu matter.1

Last week, when I pointed out on X-Twitter that “von” was a marker of nobility (and that his childhood would make a tiger parent green with envy), this was apparently too much bubble bursting. I got dozens, maybe hundreds, of responses like “cope” and “communist” and some less polite words, along with a bunch of tall tales presented as if they were facts.

Here are a few gems I heard from the brain trust on social media:

He could do 8 digit calculations in his head as a 6-year-old (false)

He was fluent in 8 languages as a child (false)

He could recite any book he’d ever read, including telephone books (false)

His first math teacher wept when he met Johnny (false)

The von Neumanns were humble upper-middle-class who purchased a noble title (false)

I’m going to give a history of Johnny that debunks all these. I’ll also correct a great deal of bad scholarship along the way, and show you don’t need to invoke spooky rare variants or genetic dark matter to explain John von Neumann. You just need to do a little research yourself, and accept that places and eras can be just as exceptional as brains.

The most definitive biography we have is John von Neumann, by economist Norman Macrae. It was supposedly approved of by Johnny’s family, and it’s well-researched and lengthy (unless otherwise specified, quotes come from there). Macrae opens his thesis agreeing with me:

Johnny had exactly the right parental upbringing and went through the early twentieth-century Hungarian education system that (this book will argue) was the most brilliant the world has seen….

So Johnny had innate talent, gobs of it, but he was also a product of a society and an education system that doesn’t exist anymore. Hungarian society produced geniuses at an astonishing rate.

If you are to beget a genius, a boom era in Belle Époque will serve you best. The booming Budapest of 1903, into which Johnny was born, was about to produce one of the most glittering single generations of scientists, writers, artists, musicians, and useful expatriate millionaires to come from one small community since the city-states of the Italian Renaissance…. Remember, as we do, from what a small constituency of upper-middle-class Budapest males—in one fifty-year period and mainly from three great schools—this tribe of world changers came.

What made Hungary so special? It was not a democracy, but an aristocracy transitioning to a plutocracy. Its inequality meant it could establish an extremely favorable-to-the-elite education system, and it did. However, it was also welcoming to new immigrants and new ideas and new money.

It was the twin city where clever little rich boys… (like Johnny) had a cosmopolitan choice of governesses before age ten, and after age ten (provided they could pass exams and pay) a choice between at least three of the world’s best high schools.

In this milieu of turn-of-the-century Budapest Johnny was aristocratically tutored by a “tribe” of tutors and governesses at his 18-room top-floor downtown home until he turned 10. After that, he went to one of those “world’s best high schools.” There, he was taken under the wing of László Rátz, who was described by Eugene Wigner (winner of the Nobel Prize, who was a year ahead of Johnny under Rátz and one of Johnny’s best friends) as one of the greatest math teachers of all time.

Macrae says it was the “Hungarian tradition with infant prodigies” to find them one-on-one tutoring from university professors. Rátz recognized the boy’s talents shortly after Johnny began formal schooling, and began tutoring him personally. He also arranged for a progression of ever-more impressive math tutors over the next eight years (keep in mind, Johnny had been tutored at home in mathematics previous to meeting Rátz, meaning that he was tutored his entire young life). He and his tutors would, it seems, meet multiple times a week, and later on the revolving door of them contained august mathematicians famous in their own right, like Alfréd Haar, Gábor Szegő, Michael Fekete, and Leopold Fejér. Johnny’s first math paper ever was co-authored with his long-time tutor Fekete, at age 17-18, on Fekete’s area of expertise, and Johnny got his PhD under Fejér (also his tutor and a family friend). Johnny would then go on to study mathematics under Hilbert, one of the most renowned mathematicians of all time.

Johnny’s father Max was an intellectual who had an instinct for education and ran mealtimes like seminars. Regular dinner guests “glittered with especial brilliance,” and included Leopold Fejér, who would become Johnny’s PhD supervisor.

Max’s mealtime seminars were an important feature of all his children’s development almost from the nursery…. Families in those pretelevision and precommuting days met for a relatively full and lengthy late lunch. Then father would go back to the office, but the children would not return to ordinary school. Schooltime afternoons in Hungary were for sports or private tuition or study. The whole family would then have a similar lengthy dinner in the evening. The mealtime habit that Max encouraged was that members of the family, including himself, should each present for family analysis and discussion particular subjects that during the day had interested them….

Max also hired well. German governesses and French governesses (apparently they hated one another), as well as an Italian governess; then, two homeschooling teachers just for English, a Mr. Thompson and a Mr. Blythe (but this started in 1914, so it seems Johnny only began English at the later age of 11). Max sat in on the lessons with Johnny.

Little Johnny had a Fencing Master who came to his home (there was enough room), and a music teacher too, but she apparently did not impart much to Johnny. He had teachers come to his home as well, about whom we know nothing—their contributions have been lost to history.

In their enormous building, their cousins lived on the floor below. Max (the father) bought a summer home that was so nice it is now historically preserved as a place for the local children to play. The entire family was wealthy and well-connected (his mother’s lineage has its own Wikipedia page); indeed, Max was an advisor to the Hungarian Prime Minister Kálmán Széll. For Max’s service—not for a monetary payment—the family was officially ennobled in 1913.

Even if Johnny only became an aristocrat on paper at 10, looking at his young life, any modern person would instantly recognize it as fundamentally aristocratic and elite and his education as, basically, perfect.

The incredible socio-educational environment of Budapest had effects on the entire world and the shape, and ending, of World War II.

Classically, Johnny is grouped with a Jewish-Hungarian group of similar ages called “The Martians” at Los Alamos and beyond (on the Manhattan Project alone were Leo Szilard, Edward Teller, Eugene Wigner, and Johnny himself). All except Wigner appear to have embraced the rumor that they were from another planet, cracking jokes and propagating it themselves.

However, this clustering of genius probably isn’t as historically mysterious as it’s been made out to be. Nor was it solely a Jewish phenomenon, e.g., there were plenty of non-Jewish Hungarian geniuses produced by that period, like Albert Szent-Györgyi or György Békésy (both won Nobel Prizes). Macrae cites that Johnny’s Lutheran school self-identified as 52% Jewish, and speculates that over 70% of Johnny’s school may have been ethnically Jewish, with many having converted. Other elite high schools were probably somewhat similar.

Additionally, many of those kids were second-generation immigrants (including Wigner and Johnny), which we know can be a huge boon to academic success. Indeed, we see the exact same effects here in America today, coupled with the advantages of cultures that emphasize academic achievement. Johnny’s school was ~70% Jewish-Hungarian (at ~20% of the population), for probably pretty similar reasons that MIT right now is 47% Asian American (at ~7% of the population).

From Russia’s steppes, from Bismarck’s Germany, from Dreyfus’s France, and from Hungary’s own mountain villages they came: a cultured and upwardly mobile group, intent on giving their sons (sadly, more than their daughters) the education that some of them had never had.

According to Macrae, Jewish immigrants chose to go to Budapest, rather than New York, because of its culture and sophistication, its (somewhat) meritocratic aspects, its quality of life (such as its extremely plentiful domestic servants), its education system, and the absence of a long and dangerous sea voyage. All this attracted the upper class specifically to Budapest.

In fact, Budapest of that era may have been one of the few places and times in all of history where you could hire a bevy of top-notch professional private tutors and governesses without being obscenely wealthy (just well-off), and also where it was standard to do so (which is why formal school started so late).2

What did they themselves think? Eugene Wigner wrote in his memoirs:

Many people have asked me: Why was this generation of Jewish Hungarians so brilliant? Let me begin by making it clear it was not a matter of genetic superiority. Let us leave such ideas to Adolf Hitler.

Instead, he suggests that credit is due to “the superb high schools in Budapest, which gave us a wonderful start,” but that most impactful was “our forced emigration.” And indeed, Johnny agreed that it was the historical situations into which they were thrown, and in which they had to succeed in order to survive.

Therefore, we see that Budapest operated like a reservoir-release model, drawing in via immigration a bunch of talent, educating it incredibly well due to rare historic and economic circumstances, and then exploding in a forced diaspora via antisemitic persecution and world war.

Johnny was afraid on his deathbed that history would forget his name. That now seems unlikely. No one can argue that John von Neumann wasn’t one of the greatest contributors to human understanding in the 20th century. His relevancy only continues to grow into the 21st century, as the topics he touched on (like computers) have become more central (see, e.g., The Man from the Future).

As an adult, probably owing at least partly to his lengthy aristocratic tutoring, Johnny was incredible at formal frameworks, calculations, and taking an idea to its conclusion before anybody else. He simply knew, crystallized, so much more than others. However, there’s an uncomfortable corollary:

Johnny borrowed (we must not say plagiarize) anything from anybody, with great courtesy and aplomb. His mind was not as original as Leibniz’s or Newton’s or Einstein’s, but he seized other people’s original, though fluffy, ideas and quickly changed them in expanded detail into a form where they could be useful for scholarship and for mankind.

Let us consider a perfect example of this, which is always high on the list of Johnny’s contributions: the “von Neumann architecture” of modern computers.

ENIAC was one of the earliest uses of vacuum tubes as a general-purpose computer. It was developed at the Moore School of Electrical Engineering of the University of Pennsylvania. A fellow early computer scientist who was also in the military, Herman Goldstine, secured von Neumann a tour of it (and involved him with the funders). Johnny, as usual, instantly understood what was central and important about the numerous planned potential improvements to ENIAC, which John Mauchly and J. Presper Eckert had been working out. The two men had built ENIAC and had been off-and-on designing a follow-up for a while, and all this they openly shared with Johnny.

Not long after Johnny’s visit, inspired by McCulloch and Pitts’ famous early work on artificial neurons, Johnny wrote up a report on Mauchly and Eckert’s planned design of EDVAC, but first he chose to finalize some of the existing ideas they’d proposed (likely adding in some on the programming side) and then he slapped on a bunch of abstract formal math to redescribe it in his terms (a critic might say “jargon,” but it is very cool jargon).

But then Herman Goldstine sent out the report with only Johnny’s name on it, and voilà! The “von Neumann architecture.”

Here is an excerpt from “A Letter to the Editor of DATAMATION” by John W. Mauchly.

Naturally, “architecture” or “logical organization” was the first thing to attend to. Eckert and I spent a great deal of thought on that, combining a serial delay line storage with the idea of a single storage for data and program…. The EDVAC was the outcome of lengthy planning….

But [Johnny] chose to refer to the modules we had described as ‘organs’ and to substitute hypothetical ‘neurons’ for hypothetical vacuum tubes or other devices which could perform logical functions. It was clear that Johnny was rephrasing our logic, but it was still the SAME logic….

He must have spent considerable time at Los Alamos writing up a report on our design for an EDVAC... But Goldstine mimeographed it with a title page naming only one author—von Neumann. There was nothing to suggest that ANY of the major ideas had come from the Moore School Project! Without our knowledge, Goldstine then distributed the ‘design for the EDVAC’ outside the project and even to persons in other countries.

Don’t misread this as my saying that Johnny was a plagiarist or unoriginal. Johnny contributed to the computer revolution in later ways as well, and that specific incident is almost certainly just Herman Goldstine’s fault, although Johnny did silently benefit. Johnny was fond of saying some version of “It takes a Hungarian to walk into a revolving door behind you and come out ahead” and that’s precisely what happened.

My point is merely that, while Johnny was absolutely one of the greats in the pantheon of science and math, “one of” is how we should see John von Neumann. His first major work on axioms had “run aground” on Gödel. His second major work on formalizing quantum theory had been upstaged by Dirac’s notation, which became the standard, and his major proof that hidden variable interpretations of quantum mechanics were supposedly impossible turned out to be flawed, due to what John Stewart Bell called a “silly” error.

Johnny’s long-time assistant and fellow great Hungarian mathematician, Paul Halmos, wrote about him that:

As a writer of mathematics von Neumann was clear, but not clean; he was powerful but not elegant. He seemed to love fussy detail, needless repetition, and notation so explicit as to be confusing…. quite a few times, it gave lesser men an opportunity to publish “improvements” of von Neumann.

Johnny even suspected that Norbert Wiener had a mind “intrinsically better than his own.” Who was Norbert Wiener? Another famous early computer scientist and cyberneticist that people like to tell stories about.3 He was also another child prodigy shaped by his father, but much more intently and viciously than Max; e.g., when Norbert was born, his father held a press conference saying he would raise a genius—and, well, he did. Johnny also envied the dreamy Einstein’s deep and intuitive leaps of genius.

Nobel Prize winner Eugene Wigner, Johnny’s best and longest friend, had plenty of high praise for Johnny, but firmly gave his opinion on whether John von Neumann was the Greatest Scientific Mind of All Time. The answer was no.

Einstein’s understanding was deeper than even John von Neumann’s. His mind was more penetrating and more original than von Neumann’s.

Johnny’s friend, Stanisław Ulam, who suggested the idea of automata growing on cells that Johnny then formalized into cellular automata (note again the pattern), wrote of Johnny that:

In spite of his great powers and his full consciousness of them, he lacked a certain self-confidence, admiring greatly a few mathematicians and physicists who possessed qualities which he did not believe he himself had in the highest possible degree. The qualities which evoked this feeling on his part were, I felt, relatively simple-minded powers of intuition of new truths, or the gift for a seemingly irrational perception of proofs or formulation of new theorems.

Absolutely none of this means that John von Neumann wasn’t a genius, or that he didn’t contribute far more than all but a few others. Certainly, plenty of other geniuses thought he was smarter than them (just as he suspected Einstein and Wiener might have something he didn’t). His output attests to it. As Halmos, his assistant, summarized:

Brains, speed, and hard work produced results. In von Neumann’s Collected Works there is a list of over 150 papers. About 60 of them are on pure mathematics (set theory, logic, topological groups, measure theory, ergodic theory, operator theory, and continuous geometry), about 20 on physics, about 60 on applied mathematics (including statistics, game theory, and computer theory), and a small handful on some special mathematical subjects and general non-mathematical ones.

But even in his incredible output and the legends around him, Johnny’s overall academic oeuvre seems closer to what one would expect from someone whose talents were due to his perfect education vs. his supposedly-superhuman brain itself. His work is a triumph of formalizing things beautifully and quickly, being in the right rooms, flat-out knowing more than other people, and competitively beating them to the punch (he described himself in a letter to his daughter as “an ambitious bastard”).

I personally can’t help but feel that Johnny’s most original work was his development of game theory. Games have existed for a pretty long time, but here’s Johnny in 1928 with “Theory of Parlor Games” which grows into his famous 1944 book Theory of Games and Economic Behavior.

It’s also the most him. As a child, Johnny would dress up and have his brothers move sheets of paper around, enacting battles. Probably it was Kriegsspiel, a Prussian military war game that had strong influences on later gaming systems like Dungeons & Dragons and Warhammer. It didn’t seem to matter to Johnny who won or who lost; instead, his question was: Is there an optimal strategy? We can see how this evolved into: Is there a provable optimal strategy? Even there we can see how his environment influenced his work as much as his genetics.

Now that we have sketched the man, and his many, many impressive contributions (but also noted their occasional overhyped nature), we have the capacity to look honestly at the legends and myths around Johnny’s raw mental abilities.

Unfortunately, these myths have propagated to the point where even Wikipedia is wrong. E.g., on Wikipedia it’s stated that Johnny could divide 8-digit numbers in his head at the age of 6.

The actual link takes you to a 2007 pop-sci book titled Mathematics: Powerful Patterns In Nature and Society by one Harry Henderson. Here’s what Henderson’s, and thus Wikipedia’s, child prodigy feat is based on: an incredibly brief section about Johnny’s childhood (because this book is not a biography).

No specific source, unfortunately! Yet Henderson, via Wikipedia, is cited by so many others for the same claim.

Indeed, we can debunk this entirely. For I tracked down another “eight-digit” number story about Johnny that the author Harry Henderson (who had no connection to Johnny) almost certainly misremembered. His Further Reading section contains Macrae’s biography, and, yup, there it is written by way of introduction that:

Three usual descriptions are that Johnny exuded self-confidence, had the world’s best memory, and could multiply eight-figure numbers by other eight-figure numbers in his head. All these descriptions are half wrong.

Henderson almost certainly just misremembered that part of Macrae’s biography. And so not only is Wikipedia, by way of Henderson’s mistake, reporting an ability that’s “half wrong,” the situation is even worse, because from the surrounding context Macrae is clearly talking about the adult Johnny, not Johnny as a 6-year-old! And Macrae’s quote is about multiplication. So it is thrice wrong.

Other rumors have similar origins. Enter, once again, Herman Goldstine. That’s right, the same guy who gave Johnny all the credit for the von Neumann architecture.

In 1972, Herman Goldstine published The Computer from Pascal to von Neumann, in which he makes a number of much-cited wild claims about Johnny, including about his early life, things that Goldstine could not possibly have known for sure. First, he says that “He and his father joked together in Classical Greek.” However, Macrae specifically notes that Johnny’s family denies any memory of this (and there goes Greek from the list of languages he was fluent in as a young child).

Second, Goldstine gives us what seems to be the original “perfect recall” story:

One of his most remarkable capabilities was his power of absolute recall. As far as I could tell, von Neumann was able on once reading a book or article to quote it back verbatim; moreover, he could do it years later without hesitation…. On one occasion I tested his ability by asking him to tell me how A Tale of Two Cities started. Whereupon, without any pause, he immediately began to recite the first chapter and continued until asked to stop after about ten or fifteen minutes.

Macrae also mentions this story, but gives some pretty critical context that deflates the whole thing from superhuman to merely incredibly impressive: Johnny had specifically memorized the beginning of Dickens’ A Tale of Two Cities prior to coming to America to improve his English.

Through this practice, he was able at age 50 to baffle Herman Goldstine by quoting the first dozen pages of Dickens’ Tale of Two Cities word for word.

Macrae also mentions that:

In English he had chosen to browse through encyclopedias and pick out interesting subjects to learn by heart. That is why he had such extraordinarily precise knowledge of the Masonic movement, the early history of philosophy, the trial of Joan of Arc, and the battles in the American civil war. In German in [his] youth he had done the same thing with Oncken’s [history].

That’s obviously impressive, to remember a long passage so many years later. But Goldstine makes it sound like he “tested” Johnny on the opening of a random book—except it couldn’t have been a random book, as it was the exact same passage Macrae reports Johnny purposefully memorized years before to practice his English. Likely, that book came up in conversation naturally and the demonstration actually occurred, but then Goldstine misunderstood or misremembered (or worse) and portrayed his effort as “testing” him (and perhaps exaggerated the length of the passage too).4

Consciously choosing passages to learn by heart to improve one’s feel for a language is incredibly astute, but also wildly different from being able to remember every book you’ve ever read. Indeed, the same titles (the history he read when he was young, the Dickens, and entries in an encyclopedia) crop up again and again in the stories of recitations. If it wasn’t purposeful memorization, it wouldn’t be listable like that. It would really be just any random book. Like a telephone book!

Incredibly, that’s also another (false) claim.

This claim is from Prisoner’s Dilemma: John von Neumann, Game Theory, and the Puzzle of the Bomb, yet another pop-sci semi-biography more focused on the science than Johnny himself, published in 1993 by William Poundstone. And surely we can trust the esteemed author of other books like How Do You Fight a Horse-Sized Duck?

Nope, it’s almost certainly another misremembering of a different source. Specifically, this time from a 1973 source: “The Legend of John von Neumann,” by Paul Halmos (a great mathematician in his own right, but also, importantly, Johnny’s assistant for many years). Halmos writes a tongue-in-cheek accounting of the stories and legends about Johnny’s intelligence. Early on, Halmos says there’s a story in circulation that Johnny (as an adult) could “memorize the names, addresses, and telephone numbers in a column of the telephone book on sight,” but Halmos makes perfectly clear that it’s just a story, and is, like many others, “undocumented” and “unverifiable.” Halmos may even be implying it was Johnny’s own joking that originated it:5

Speaking of the Manhattan telephone book he said once that he knew all the numbers in it—the only other thing he needed, to be able to dispense with the book altogether, was to know the names that the numbers belonged to.

So that joke (he knows the numbers, get it?) may have started the rumor about Johnny memorizing phone books as an adult (or the rumor originated elsewhere, but certainly Halmos himself never witnessed anything like that in his many years of working closely with Johnny or he’d say so). However, somehow Halmos’ wry recounting of tall tales makes it into Prisoner’s Dilemma, and there it is mistaken to be (a) about Johnny as a child, and (b) true.

What’s interesting is that Halmos, who would be in one of the best positions to judge the adult Johnny as his assistant, does give actual examples of Johnny’s calculating brilliance. Some are as impressive as the legend suggests—e.g., Johnny solving difficult math puzzles, or once beating a computer at a calculation. But others are, frankly, not so impressive. Kind of disappointing, really. Johnny arrived at a solution in two seconds while his students took 10 seconds? Okay. He once gave a presentation on his area of expertise without any notes? Okay, now we’re just reaching for stuff.

Halmos even makes us question how many languages Johnny knew. Apparently:

At home the von Neumanns spoke Hungarian, but he was perfectly at ease in German, and in French, and, of course, in English.

So that’s “only” four fluent languages as an adult, and his biographer Macrae implies that Johnny’s English spelling was bad. Stanisław Ulam does say an adult Johnny remembered his Latin and Greek from school (implying he learned it in the formal system) and could kind of speak Spanish by adding “el” to things (again, this seems like clear reaching?). So we have four fluent languages, his ability to add “el” to words, and at least some unclear amount of Latin and Greek from school as an adult—deeply impressive, except for the Spanish!

But that impressiveness was, yet again, transformed via the rumor mill into him knowing all those (and more) languages fluently as a young child, while it’s quite clear that he started learning English only at 10 or 11. The Italian governess apparently never had much of an impact on Johnny—odd for someone who could learn everything effortlessly!

While hopefully giving Johnny his incredible due, we have so far debunked that child Johnny had the preposterous powers assigned to him, and we’ve also shown how even his adult legend has been exaggerated. There’s so little original scholarship too that pretty much everything links back to the same few mistakes I’ve identified.

Johnny died before writing his autobiography, a huge blow to history. To make matters worse, some Johnny biographies contain numerous errors. Even Macrae’s biography isn’t perfect (see this footnote6 for a historical error I identified, and other minor criticisms). My long-standing policy is not to try to hurt people via gotcha mistakes, and I believe that most people are doing their best, and so I’ve tried to not single out any individual author too much (other than the long-dead Goldstine, but perhaps it was a series of errors on his part too).7