2026-02-27 19:21:01

Congratulations on saying the biggest number, Paramount. $111B for a company that a year ago had a market cap of around $20B. For a company that shrank in their most recent quarter, and in fact, for the entire year, with revenue down 5% to $37.3B. Paramount may not be buying the Titanic, but only because they already own that IP.

At the same time, kudos to Netflix for showing true discipline. Ted Sarandos kept insisting they would, but that's obviously far easier said than done when you've already talked yourself into a deal which you thought you had "won". They undoubtedly anticipated somewhat of a circus when they swooped in and stole it from under the nose of Paramount – the original bidder, remember – in December, but it turned into a full-on clown show.¹ It turned into a battle against not just Paramount (as expected), but also politicians (to be expected), and Wall Street (probably should have been expected). Perhaps the real wild card though was the hatred from Hollywood itself (more on this in a minute). So it was clearly better to swallow that pride and walk away with a nearly $3B check of pure profit.

In a way, not bad for a quarter's worth of ~~work~~ distraction. It's almost exactly what Netflix made in actual profit last quarter.

Predictably, investors love this move. Netflix's stock has popped nearly 10% in after-hours trading. The share price had been ground down almost 25% since the deal was announced. The message was clear: you're the present and future of entertainment, why are you putting this albatross around your neck? The century-old past of entertainment stuck in perpetual decline?

At the highest level, it's why Netflix's deal was a surprise in the first place. Sure, you take a look at the deal, because why not? But nothing in Netflix's history suggested that they would take this too seriously. But Ted Sarandos surprised us. Clearly he saw a path to take such a storied legacy and shift it into the future. Netflix has proven their worth as an IP accelerant; what if they bought perhaps the best library available? It's an interesting idea, though I'm still not sure it made any business sense at $83B. At $100+B – the amount Netflix probably would have had to counter with (with a discount for the TV assets they wouldn't be buying, which Paramount will be buying) – I mean...

Of course, Netflix could have absorbed such a cost. It's a $400B company (well, before this deal, anyway) – double Disney! Paramount Skydance? They're worth $11B. Yes, they're paying almost exactly $100B more than they're worth for WBD. Yes, it's looney. But really, it's leverage.

To be clear, Netflix was going to pay for the deal with debt too, but they have a clear path to repay such debts. They have a great, growing business. They don't require the backstop of one of the world's richest men, who just so happens to be the father of the CEO. How on Earth is Paramount going to pay down this debt? I'm tempted to turn to another bit of Paramount IP for the answer:

1. Step one

2. Step two

3. ????

4. PROFIT!!!

Or maybe David Ellison should start an AI company, raise billions, then merge it with Paramount. I mean, this has worked in the past for deals that make absolutely no sense on paper!

But really, the answer will undoubtedly be a combination of huge cuts – "synergies" – mixed with the hope that rates keep going down so this can all be constantly refinanced with the buck being passed around until they figure out a way to spin out some assets and burden them with the debt. You know, just like David Zaslav was about to do!

I'm being harsh. The truth is that this is the deal that I thought Paramount should do! As Skydance was in the midst of acquiring National Amusements, and thus, Paramount, I wrote the following in July 2024:

> There's been a lot of talk amidst the Paramount dealings that WBD might be a good home/partner. What if, once the Skydance/Paramount deal is closed, *they actually buy WBD*? Yes, there are debt issues, but a year from now, hopefully WBD head David Zaslav will have a better answer and path there. Ellison has spoken a few times about Paramount+ in particular. Most assume they'll either spin it off or merge it with another player, like WBD's Max or Comcast's Peacock. And perhaps they will. But again, I'm not sure they shouldn't just buy *all of* WBD to bulk up into one of the major players themselves.

Well, they took my advice. And just over a year later, with their deal finally closed, they made their bid. But that bid was $19/share – and a mixture of cash and stock. That would have valued WBD at just below $50B (before their own aforementioned sizable debt was taken into account). After eight more offers from Paramount, and yes, the one from Netflix (including switching their own offer from cash/stock to all cash), here we are.

Anyone who took issue with David Zaslav's pay package should apologize immediately. He may not have been great at running WBD's actual business, but the financial engineering required here to turn $50B into $80B into $111B in just a few short months – again, while the core business declined – is truly something.

But again, while this deal makes no sense financially, it does feel like Paramount needed it. For Netflix, Warner Bros was a nice-to-have. For Paramount, it was existential. Without the Warner Bros studio, which, before a last-minute holiday surge by Disney, was the number one movie studio for much of last year, Paramount was the distant fifth-place player in a group of five. Adding WB vaults them close to or at the top with Disney.

Meanwhile, in streaming, Paramount+ is also the fifth-place player, but there, it could be worse: they could be Peacock. Still, despite some decent numbers – thanks, Taylor Sheridan (who subsequently bailed), and the NFL – they were far behind the "major" players. Including, yes, HBO Max. This deal vaults them near the top there too. Ahead of Disney but behind... Netflix.

So yeah, you can see why Paramount felt the need to win this deal, no matter the cost. Without it, they were a sub-scale player. With it, they're a real player.

Of course, it's in a game stuck in secular decline. Disney makes most of their money from the theme parks and cruises, not the movies. There's a reason why Parks chief Josh D'Amaro is the new CEO. Yes, the IP fuels it and keeps the flywheel going, but even Disney has to manage the fact that the actual movie business is simply not a great one anymore.

You'd think Hollywood would recognize this. But it's hard when their jobs literally rely on them not recognizing it. They're blinded by box office results that exist in a magical realm where inflation doesn't seem to. If we look at tickets sold – butts in seats – the situation looks dire. And it looks even worse if you put it through the lens of per capita moviegoing in the US over time.

Netflix carved a new path forward in the form of streaming. No, it's not as good a business as the heyday of movie theaters – or even DVD sales – but it clearly works for Netflix. They're on their way to becoming the first $1T media company. Again, they thought Warner Bros' IP could have accelerated that, but now they'll find another way. One big question: without the need for Sarandos' endless promises of doing proper theatrical releases and windows, will Netflix still go down the theatrical path? I still think they will – unless Sarandos decides the path forward with Hollywood is more scorched-earth in light of their reaction to his deal.

I wouldn't be shocked if Netflix goes the other way: aiming to show Hollywood what they missed by moving to dominate the box office themselves. Then again, I predicted this move long before this actual deal.

Perhaps it's simply, "They won. We lost. Next."²

Here's the thing: Hollywood absolutely shot themselves in the foot here. They thought the Netflix/Warner deal signaled the end of their industry, when really it showcased the best possible path forward. To be fair, it's not like the industry loves this Paramount deal either, but what they wanted was no deal. For Warner Bros to continue on as it was, forever. That was simply not tenable and not an option. If Netflix was a path to growth, and Paramount is a path to slower decay, the status quo would have been a quicker collapse under the burden of steady, managed decline.

I'm not trying to be a dick, I'm trying to paint a realistic picture. The only studio that can survive on its own is the one that has for the past century: Disney. And again, that's thanks to their other businesses propping up the studio. For a long while this was cable. Now it's the aforementioned parks.³ There's a reason why every other studio has spent much of the past 100 years being passed around various conglomerates like trading cards. These are not great businesses! Certainly not in our modern age. And when a modern age player came calling, Hollywood freaked the fuck out and threw a tantrum until they walked away. Nice work.

I'll end by once again quoting some Warner Bros IP, fittingly from the fictional media mogul I kicked off by quoting. "Money wins."

But really, it's more like: "Debt wins." Good luck.

1 Strange how this keeps happening with Skydance deals? Also, I'm not really going to delve into the political aspects here, but I very much look forward to future reporting on that particular aspect, which sure seems to have a strong waft of a bunch of bullshit. ↩

2 Though "next" remains figuring out a way to combat YouTube. You know, the real competition here... To that end, probably not crazy to think that Netflix may be able to buy at least some of these assets – at far more firesale prices! – in a few years... ↩

3 Yes, Warner Bros has a small parks business too, thanks mainly to Harry Potter and deals with Universal. Paramount has been trying to get back into the game (after selling off some amusement parks back in the day). ↩

2026-02-27 00:42:56

The year was 2012. Tim Cook had only been in place as (permanent) CEO of Apple for a handful of months. During their Q2 earnings call, when asked about Microsoft's strategy of converging the laptop and tablet with their then-forthcoming Windows 8 operating system, Cook had the quip ready:

"You can converge a toaster and a refrigerator, but those things are probably not going to be pleasing to the user."

A lot can change in 14 years. Including, it seems, kitchens...

2026-02-25 23:34:11

"The only problem with Microsoft is that they just have no taste."

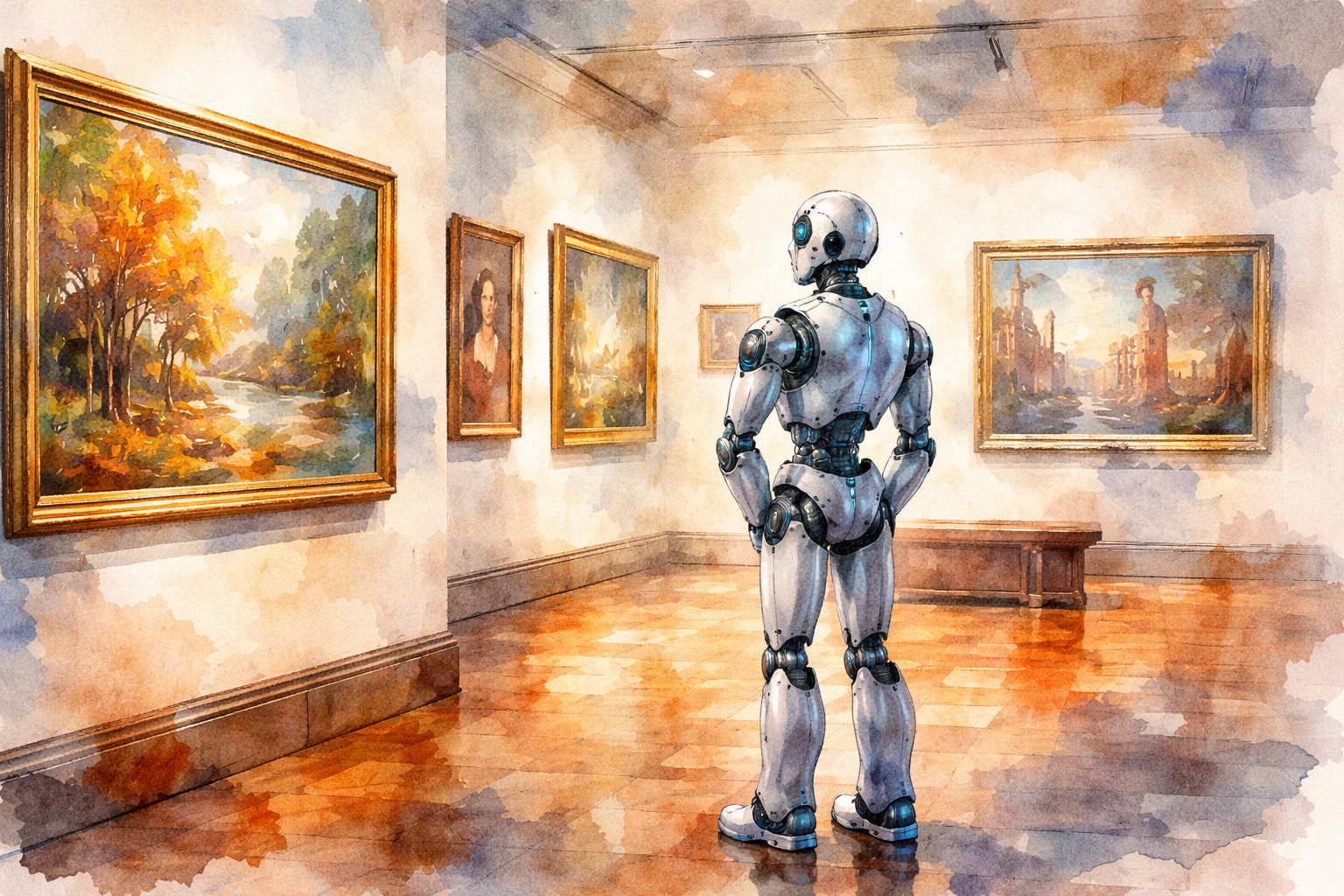

This Steve Jobs quote from a 1995 interview – notably before he returned to Apple – has long lingered in the back of my mind. To me, it's more than just a succinct evisceration of his rival; it speaks to a problem with a lot of technology. It's the notion that, with products in particular, there's an art alongside the science. And it sure feels like this will be a crucial component in AI.

It feels like we've been seeing this come up more and more as AI starts to permeate everything, but especially the aspects bordering on everyday life. As the technology continues to become more capable, the limitations shift from what it can do, to how it does such things. And why it makes the choices that it does. Famously, no one really knows on a granular level – not even those creating the technology. It's all simply too complex, with too many inputs, and increasingly is teaching itself. We know the high-level ways in which it works, at least with LLMs, but we're also starting to branch beyond that. Which, of course, many believe will lead to AGI.

But before we get there, it does seem like we may need to reconcile this notion of taste. I've previously written about the fear that our current AI may be incapable of truly original thought, and that anything we're seeing that may appear as such is really just algorithmic anomalies that we don't understand. The output may look the same, but it's not the same. Because it's the input that may matter most. To truly come up with new discoveries and epiphanies – to create actual iconoclastic thinking – we may be missing some ingredients as everything sort of gravitates towards an ultimate mean.

Our current AI may be able to find any needle in any haystack, but can't write Don Quixote.¹ Well, it can now, but it couldn't have in 1605, had such technology existed then. But actually, given the knowledge of every Spanish word and the ability to run infinite combinations of those words, AI would technically write Don Quixote in one scenario. Cervantes may have been more efficient in doing so, but it's just a matter of enough compute and resources to mimic the path his mind charted.

Still, someone – or something – would be required to distill those infinite versions of Don Quixote into the definitive one. And how would it choose? Certainly a human could do this, but could AI? If forced to, it would undoubtedly make such a decision, but again, how and why? It would go back into the endless algorithms and data sets. But a human would just go with a "gut instinct" – how one version made them feel versus the others.

This is taste.

Steve Jobs, of course, meant "good taste" with his comment. But that's obviously subjective. Even Microsoft's "bad taste" or "poor taste" or technically even "no taste" is still taste. And while he levied the charge on the entire company, it was a series of decisions by individuals that led to that taste for which he had distaste.

I think you could argue that one key ingredient for taste is limitation. That is, while AI can run infinite permutations and sort through the infinite results, human beings cannot. At some point, we have to choose based on what is feasible. That includes the way we come up with words. In a way, I suppose it's similar to what AI does – we go to what we've learned before and try to string things together in a coherent manner – but again, we cannot do it to infinity. A machine technically could (given enough compute and time) and so it uses the strings found in data sets to make decisions based on probabilities.

Said another way: the data created by humans that the machines have ingested is the proxy for "taste". It's the way those decisions are made at the highest level.

And as synthetic data has entered the equation from the outputs of those decisions, the AI can output new variables that are only reliant upon humanity once-removed. As you go further afield, earlier iterations would collapse upon themselves, perhaps because they lacked the guardrails of humanity's decisions – or taste. Maybe it's not too dissimilar to how the end of the Game of Thrones television series devolved into sloppiness because it had no guardrails in the form of George R. R. Martin's books. But I digress...

With the wave of agentic AI currently sweeping into our lives, taste is shifting from a nice-to-have to a must-have for many. Personally, even if I believed that AI is now capable enough to do certain tasks for me, I still mostly wouldn't trust it to do so. This goes for things as simple as organizing folders on my computer to far more sensitive matters such as taking over control of my email inbox. It has become less about technical abilities and more about decision-making. And yes, in a way, taste.

Programmers seemingly also had a similar issue early on, but at least some now believe that tools such as Codex and Claude Code are good enough on this front. Matt Shumer even specifically called out this notion of "taste" as his "aha" moment in his recent viral post "Something Big is Happening":

> But it was the model that was released last week (GPT-5.3 Codex) that shook me the most. It wasn't just executing my instructions. It was making intelligent decisions. It had something that felt, for the first time, like judgment. Like taste. The inexplicable sense of knowing what the right call is that people always said AI would never have. This model has it, or something close enough that the distinction is starting not to matter.

And later on, he reiterates:

> The most recent AI models make decisions that feel like judgment. They show something that looked like taste: an intuitive sense of what the right call was, not just the technically correct one. A year ago that would have been unthinkable. My rule of thumb at this point is: if a model shows even a hint of a capability today, the next generation will be genuinely good at it. These things improve exponentially, not linearly.

Meanwhile, the very same word came up again in this past week's viral post from Alap Shah and Citrini Research, "The 2028 Global Intelligence Crisis", noting that in the future, humans may still be needed as a sort of last line of defense around AI outputs "directing for taste".

So AI "taste" may be good enough for coding now, but still may not be for general AI usage. And even in the most optimistic (in terms of AI's near-future capabilities – decidedly pessimistic for humanity) take on where AI is heading, we may not get to AI "taste" anytime soon. The reality, of course, is probably somewhere in between the two.

Clearly the AI labs believe that they can solve for "taste" by amping up personalization. As AI learns from your responses, it tailors its output to be more in line with what it believes you like. But beyond the sycophantic (and other) concerns, "taste" actually encompasses far more than your own preferences: you may also seek out the preferences of others whose judgment you trust to tell you what you'll like.

It's a subtle distinction, but a real one.

So how do we get AI to do that? The answer may still be personalization, but rather than it just mirroring you and your own tastes, you sort of do rounds of "dating", for lack of a better phrase, to see which AI is most compatible with your own tastes. Forget the Turing test, how about a simple "taste test"?

This resonates with me because I've been doing this for a while now. Currently, I'm paying to use ChatGPT, Gemini, and Claude to see which suits my own style and preferences best. Right now, I think it's Claude, but that has morphed over time and I suspect it will again as each of these models continue to evolve.

Still, as deep as I am in all of this, I have a hard time believing that I'm going to trust one of these systems to handle harder workflows anytime soon. And it's not about the tech, it's about the taste.

Take, for example, the use case everyone in tech always turns to for demos: booking travel. I fully believe these services will be able to technically do that end-to-end soon enough, but I simply don't believe that the decisions they make will align with the ones I would make. Some of that may be logistics, but I also think these systems will get past that once they have full access to your calendar and email and the like. But that's just the start. From here we quickly enter a maze of a hundred little preferences that are altered by thousands of real-world variables. If the powers that be thought the game of Go was a good, complex task to prove out AI, wait until it gets a load of trying to book travel for a family with children.

Do I believe that AI will pick the place that will be best for me and my family? Sight unseen? Of course not. So the AI will prompt me just as Claude Cowork now does to ensure it's picking the right places and services. But it's not long before that's more work than simply doing it yourself. And so that doesn't work.

We'll check off all the technical boxes but the taste ones may yet remain. Because how do you code up "there are a bunch of options, this one looks nice, let's try it"? The AI might ask what you mean by "looks nice" since all of the images on the travel site that it has ingested through an API technically "look nice". And so you'll explain to the AI that you're not sure, it's just a "vibe" you're getting. And the AI will say it understands, because those are words it has ingested, but it will not actually understand. Maybe, perhaps, because an AI hasn't lived. And, as such, doesn't have the memories forged in the real world that subconsciously alter the way an image makes you feel.

In the past, people have obviously used travel agents to make such calls. And others use personal assistants – the actual human variety. But those are human beings with taste. And you can suss out if you trust their taste to match your own.

If AI technically has no taste... I turn back to Steve Jobs:

"They have absolutely no taste. And what that means is – I don't mean that in a small way, I mean that in a big way. In the sense that they don't think of original ideas."

1 Try using AI to get to the bottom of where the "needle in a haystack" phrase originated, I dare you! Gemini believes it's Sir Thomas More. ChatGPT is sure it was Chaucer. Claude thinks it's Cervantes. Digging around myself, I believe the answer is that More used a similar (but different) metaphor (involving a meadow), while the Don Quixote reference actually stems from the English translation of the book because no one outside of Spain would know what "To go looking for Dulcinea in El Toboso like looking for Marica in Ravenna..." would mean. But the actual phrase undoubtedly predates that in ways too – it seems unlikely one translator is that clever – that book translation just helped establish it widely. When pushed on Chaucer, ChatGPT kept right on hallucinating in ways that were both fascinating and entirely unhelpful in terms of conveying certainty! Anyway, my own taste here guides me towards using the Don Quixote reference. ↩

2026-02-24 20:12:20

Almost exactly two years ago, I wrote a post about the muddled mistakes Microsoft seemed to be making with their "Xbox Everywhere" strategic shift. Re-reading it now, in light of the news of the major shakeup atop the Xbox division, I think it pretty much nailed the failures we're now seeing play out, which have culminated in these changes – so much so that I'm going to steal my old URL slug for this title. Because I do think this signals the end for the endeavor...

2026-02-23 23:31:52

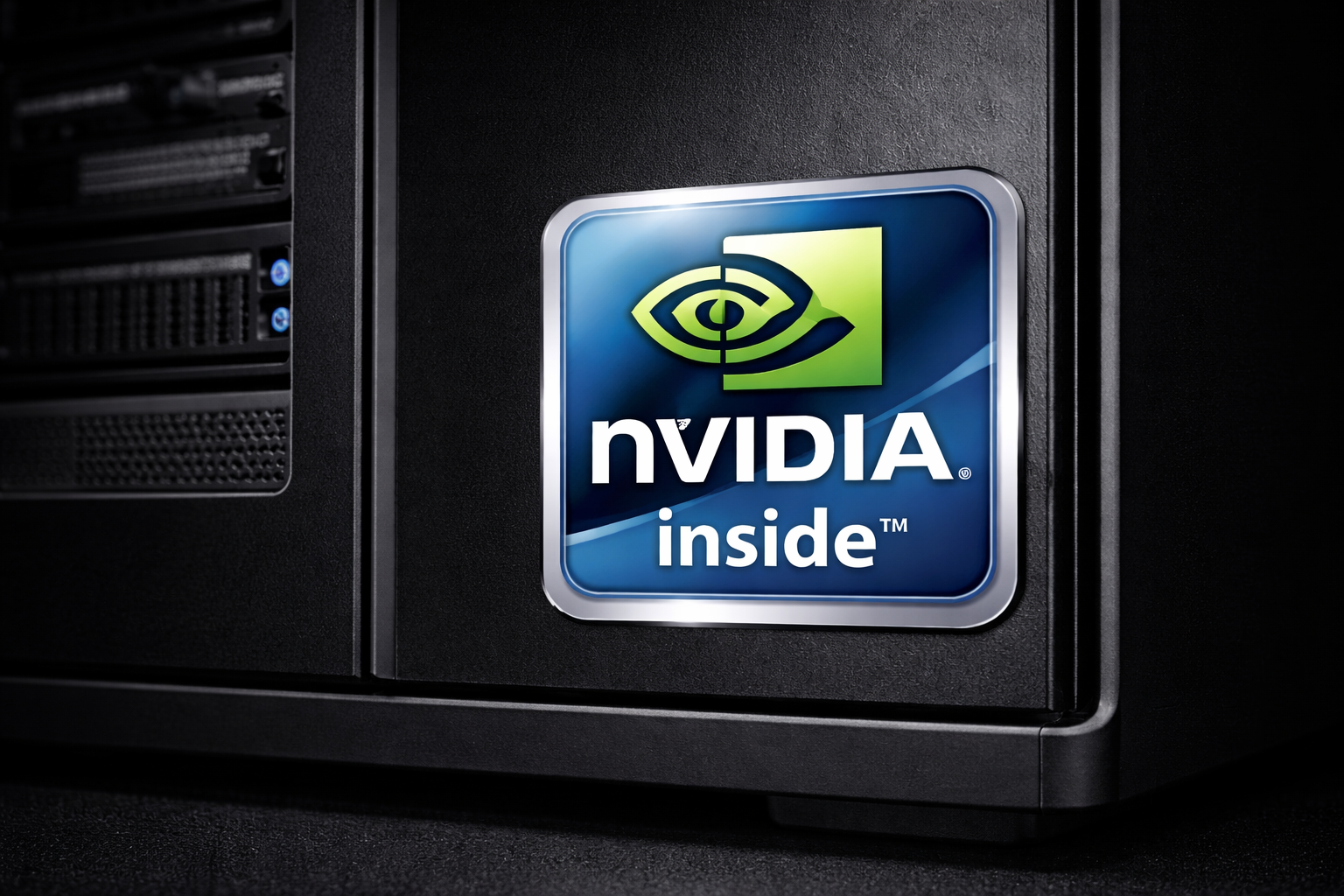

One of the more interesting things about NVIDIA being the most valuable company in the world for the past couple of years is that unlike their Big Tech peers, they're not really a known consumer brand. Sure, they have their graphics cards which have long been popular with gamers (and, of course, got them to their AI moment in the sun), but they're not the household name like Apple, Google, Microsoft, Amazon, or Meta. And that's in part because those who buy from NVIDIA (again, aside from hardcore gamers) are largely the aforementioned Big Tech players (well, aside from Apple).

NVIDIA, it seems, would like to change that...

> Nvidia chips for laptop computers are set to hit the market this year in products from Dell, Lenovo and others, a return to the consumer PC market for the leader in artificial-intelligence chips.
>
> The world’s most valuable company by market capitalization, Nvidia isn’t expecting big profit soon from getting its chips into everyday PCs, but analysts said it wanted to keep a connection with consumers in an era when every device will be AI-enabled.

It's sort of buried in here, but this isn't just about GPUs, but CPUs as well. And it's a "return" to that market – beyond their mini AI "supercomputers" – in that the company actually made such chips for Microsoft's original version of the Surface products – which, um, didn't go so well. But they also make the chips powering the Nintendo Switch – both the original and new Switch 2 – which has done extremely well, obviously.

Still, NVIDIA is known as the GPU company, not the CPU company. That, despite all their problems over the past many years, would still be Intel. Thanks to their long-gone but still iconic "Intel Inside" marketing campaigns, consumers know Intel. Which is exactly why, nearly two years ago, I wrote a post entitled *Can NVIDIA Become Intel Faster Than Everyone Becomes NVIDIA?* In it, I noted:

> At the same time, NVIDIA is moving further into tangential fields with not only different types of GPUs, but also CPUs as well. At first, it seems the aim is to try to take on more of the datacenter workloads, which their rivals Intel and AMD have long controlled. But presumably, this portends a move to try to take on all computing chip needs in general.
>
> All of that is to say, NVIDIA is trying to become Intel faster than Intel (and everyone else) can become NVIDIA.

Well, the race to become NVIDIA is still very much on – for pretty much everyone aside from perhaps Intel. And yes, here NVIDIA comes for "general" computing as well. And they're doing this, in part, with Intel – back to Jie:

> For the PC chip, Nvidia has two collaborations: one with Intel, which was announced last year, and a second with Taiwanese chip designer MediaTek, which was informally disclosed by Nvidia Chief Executive Jensen Huang during a trip to Taiwan in January.
>
> Nvidia’s new PC processors are designed to be what’s known as a system-on-a-chip. They integrate a central processor with the powerful graphics processing units for which the company is famous. GPUs are the chips that power AI models.

The Intel partnership would pair Intel CPUs with NVIDIA GPUs in one SoC. But the MediaTek one is arguably more interesting as it would be actual NVIDIA CPUs made in partnership with MediaTek working off of ARM designs – a company which, of course, NVIDIA was blocked from acquiring years ago.

It's that full NVIDIA SoC that Dell, Lenovo, and others seem to be circling. Undoubtedly in part to play up the NVIDIA angle, hoping to get some halo effect for PC sales. You could see the angle being something like "forget about Intel and AMD, NVIDIA, the company building the future of AI, is here with PC chips". Yes, they'd be touting "NVIDIA Inside".

> Fewer consumers are paying attention to PCs these days in a tech world dominated by talk of AI and smartphones, but laptops are still a big business. Nvidia’s Huang has observed that roughly 150 million laptops are sold each year, explaining why the area is worth his attention.
>
> “There’s an entire segment of the market where the CPU and GPU are integrated,” he said last September. “That segment has been largely unaddressed by Nvidia today.”

One imagines a company absolutely printing money at the moment – so much so that they can't find things to spend it on – can help with any marketing push too...

But while the obvious early sweet spot would be gamers, who again know the brand thanks to their GPUs, it's a bit of a complicated one:

> For the Nvidia-MediaTek collaboration, the challenge will be making the PCs compatible with high-end games and other applications originally designed for the Intel standard.
>
> The Arm architecture used by the Nvidia-MediaTek team has proved troublesome for gamers. In 2024, Microsoft rolled out new AI PCs with chips from Qualcomm using designs from Arm. Many gamers complained they couldn’t play their favorite games on those PCs.

But one has to imagine that NVIDIA can figure this out, even if Microsoft and Qualcomm couldn't – as they were clearly too focused on, what else: AI. (And, of course, Apple's MacBook Air.) Could such machines lead to an actual "supercycle" for the PC? Or is it just a smart play by NVIDIA to make some inroads into the hearts and minds of actual consumers as AI permeates everything?...

2026-02-22 22:39:04

I mean, the answer to the question in the title is: of course. But it's also a far more nuanced question than it may seem on the surface – or than could fit in a title. It's more along the lines of can OpenAI execute an AI device strategy before Amazon and Apple and Google and Meta can?

And it's especially interesting with Amazon given the reports that they're going to invest perhaps $50B into OpenAI's new funding round. From the moment it was rumored, that seemed like a wild amount of money, even for a company the size of Amazon. Especially for one that is also the largest shareholder in OpenAI's chief (startup) rival, Anthropic, and has a deep partnership with them on a few fronts. Perhaps most notably, to help power the new Alexa+ service. And it gets even more complicated with the reports that alongside this new funding, OpenAI may build custom models for Amazon's products – including, perhaps, Alexa.

At the same time, we know that OpenAI is hard at work on their first devices. And ever since word started to trickle out about what they might be working on with Jony Ive's team at LoveFrom, my guess was basically along the lines of a newfangled smart speaker. As I wrote last May:

> The problem with a full-on wearable in this regard is that everyone focuses far too much on the whole wearable part. That is, the exterior of the device and how it will work on your body. And then: how can I get the technology to work on that? But I suspect that OpenAI/IO are focused on the opposite: what's the best device to use this technology? Why does it have to be wearable?
>
> To be clear, I suspect that whatever the device is, it will look fantastic – this is an Ive/LoveFrom production, after all – but that's mainly because beautiful products bring a sense of delight to users and can spur usage. I suspect the key to the design here will be yes: how it works. And again, I suspect that will be largely based around voice, and perhaps augmented by a camera.

And:

> Anyway, the reporting here makes the IO device sound a bit like a newfangled tape recorder of sorts. Okay, I'm dating myself – a voice recorder. You know, the thing some journalists use to record subjects for interviews. Well, when they're not using their phones for that purpose, as they undoubtedly are 99% of the time these days. But it sounds sort of like that only with, I suspect, some sort of camera. I doubt that's about recording as much as it's about the ability to have ChatGPT "look" at something and tell you about it. But these are just guesses.

Well, they seem less like guesses now, and more like pretty solid predictions. OpenAI's device is shaping up as a sort of Amazon Echo for our modern age of AI. To me, the launch of GPT-4o was the key in showcasing where OpenAI was headed. The first true "omni" model that could properly input and output visuals and voice pointed directly towards the science fiction future of Her – a reference Sam Altman explicitly made, which got him in quite a bit of trouble...

But this was always about moving beyond the computer and perhaps even the smartphone. Or, at least, that's what OpenAI (and Meta – and even Amazon) have to hope. I tend to think all of these new AI devices are just going to reinforce the smartphone as the key hub (at least until models that can run locally on device are good and small enough), and Altman and Ive made it clear in the formal announcement of their partnership that whatever they were building would not be an iPhone replacement.

At the same time, clearly Ive was hoping it could be a device that could slowly wean people off his other famous creation. A parasitic device, in a way.

The form factor of what that parasite may look like keeps coming more into focus. On Friday, Stephanie Palazzolo and Qianer Liu published the latest such report for The Information, noting that OpenAI now has more than 200 people working on various AI devices, including, interestingly, a smart lamp. But the key one is clearly:

> The smart speaker—the first device OpenAI will release—is likely to be priced between $200 and $300, according to two people with knowledge of it. The speaker will have a camera, enabling it to take in information about its users and their surroundings, such as items on a nearby table or conversations people are having in the vicinity, according to one of the people. It will also allow people to buy things by identifying them with a facial recognition feature similar to Apple’s Face ID, the people said.

To me, this sounds less like an "iPhone-killer" and more like an "Alexa-killer". Fine, fine, technically an "Echo-killer", but everyone uses them interchangeably, of course. In fact, the only thing holding it back – aside from, you know, it actually working – would be the price. $200 to $300 is more in the Apple ballpark than the Amazon one. That said, Amazon has pivoted their initial "Alexa everywhere" strategy (which forced Apple to shift their initial HomePod strategy) with cheap devices anywhere and everywhere to a more focused strategy around higher quality devices under Panos Panay.

Again, this feels like a collision course waiting to happen. And it's especially odd given the talk of the deepening relationship between OpenAI and Amazon. But if OpenAI is able to make an AI device that's great for shopping... perhaps the hope is to partner with Amazon to be the retail side of that equation. And vice versa! Maybe Amazon no longer cares if you buy using Alexa devices, just so long as you buy. That's probably smart, especially given how well (read: not well) the first wave of voice-based shopping went with Alexa.

And the two of them may be better off working together to combat Apple and Google. Not only are they the two other players battling for the home (alongside Samsung, of course), but they're the two that control smartphone platforms (alongside Samsung, thanks to Google, of course). And Apple is seemingly about to step it up in a major way in the home with the 'HomePad' device. It's thought to be a smart speaker with a camera, powered by AI. Sound familiar?

Of course, Apple's device, like at least half of Amazon's new Alexa+ lineup, will also feature a screen, whereas the OpenAI device is believed not to have one. The lack of one has clearly hurt Amazon in the past for their shopping ambitions, so we'll see how OpenAI fares... But the visual input, the camera, will clearly be the other key for the device:

> During a presentation last summer, leaders from the device team told employees the device will be able to observe users through video and nudge them toward actions it believes will help them achieve their goals, said a person who attended the presentation. You could imagine the device observing its user staying up late the night before a big meeting and suggesting that they go to bed, for example.

Interesting. That's going to be a very tricky set of features to promote. OpenAI will probably get more leeway than, say, Meta, but certainly not as much as Apple here. Oh yes, have I mentioned that Apple is also working on a camera-focused AI wearable? One that's meant to be the "eyes and ears" of the iPhone?

This is all shaping up for a very interesting next 12 months. Apple's 'HomePad' should come in the spring – hopefully! – with Siri finally powered by Gemini, to meet the latest Alexa+ devices from Amazon in market. Google's own first real Gemini smart speaker should arrive around the same time. Meta will continue to iterate on the Ray-Bans while Apple could meet them in market in late 2026 or early 2027. Then this OpenAI smart speaker should hit...

One more thing: As The Information report notes, Adam Cue is one of the key players on the software side of this new OpenAI device, having come over from the acquisition of the io team. Cue is, of course, the son of longtime Apple SVP Eddy Cue. Another fun wrinkle in the race!
