2025-07-04 22:02:13

I usually do a “state of the nation” post on July 4th, but I was traveling to Japan today, and the wifi didn’t work on the plane, so I’ll have to do it tomorrow. In the meantime, I thought I’d repost a fun post that I wrote back in 2022, about how an excessive boom in higher education, coupled with a saturation in the markets for many humanities-based jobs, might have contributed to America’s era of unrest.

Three years later, I think most of what I said in this post still looks right. The decline in college enrollment suggests that Americans might have collectively realized that a bachelor’s degree isn’t an automatic ticket to a comfortable lifestyle. But we may still be in for a second round of elite overproduction, because the “practical” STEM majors that lots of students shifted into in response to the humanities bust are now seeing higher unemployment:

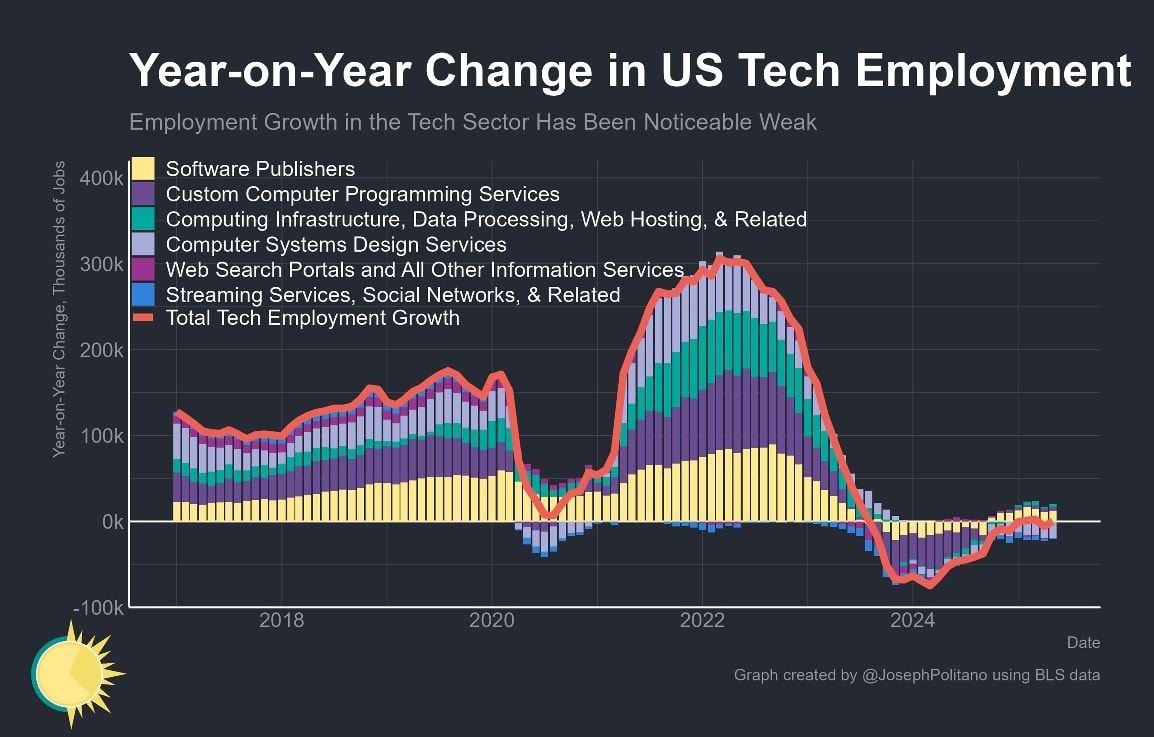

This is due in large part to the crash in tech sector hiring over the past two years:

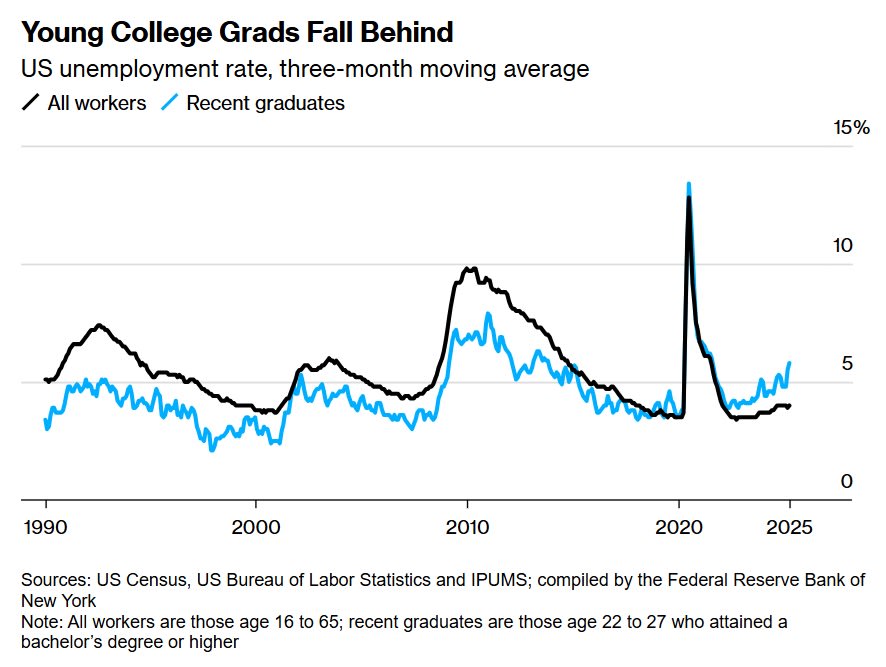

In fact, unemployment is now higher for recent college graduates than for the general public:

Some people think these trends are due to the rise of generative AI; others disagree. But whatever the reason, the failure of STEM to provide a secure alternative career path in the wake of the big humanities bust might be setting us up for more unrest among the youth.

Anyway, here’s that original post from 2022:

“We're talented and bright/ We're lonely and uptight/ We've found some lovely ways/ To disappoint” — The Weakerthans

Here’s an eye-opening bit of data: The percent of U.S. college students majoring in the humanities has absolutely crashed since 2010.

Ben Schmidt has many more interesting data points in his Twitter thread. To me the most striking was that there are now almost as many people majoring in computer science as in all of the humanities put together:

When you look at the data, it becomes very apparent why the shift is happening. College kids increasingly want majors that will lead them directly to secure and/or high-paying jobs. That’s why STEM and medical fields — and to a lesser degree, blue-collar job-focused fields like hospitality — have been on the rise.

But looking back at that big bump of humanities majors in the 2000s and early 2010s (the raw numbers are here), and thinking about the social unrest America has experienced over the last 8 years, makes me think about Peter Turchin’s theory of elite overproduction. Basically, the idea here is that America produced a lot of highly educated people with great expectations for their place in American society, but that our economic and social system was unable to accommodate many of these expectations, causing them to turn to leftist politics and other disruptive actions out of frustration and disappointment. From the Wikipedia article on Turchin’s theory:

Elite overproduction has been cited as a root cause of political tension in the U.S., as so many well-educated Millennials are either unemployed, underemployed, or otherwise not achieving the high status they expect. Even then, the nation continued to produce excess PhD holders before the COVID-19 pandemic hit, especially in the humanities and social sciences, for which employment prospects were dim.

Turchin and the others who have suggested this theory make some questionable assumptions about how labor markets work — in general, they focus on labor supply while ignoring the importance of labor demand. But still, the Elite Overproduction Hypothesis is fascinating — there’s some circumstantial evidence in its favor, and it dovetails nicely with some other economic and sociological theories I know of. I think it’s a good candidate for explaining at least some of the unrest — and in particular, the resurgence of leftist politics — that we’ve seen in the U.S. recently.

I went over this idea in a Twitter thread back in 2018 (in response to an earlier batch of data about the humanities), but I thought it deserved a longer treatment. As you read this, keep in mind that I’m just making the case for this hypothesis; I’m not sure how much of the last decade it really explains, but I think it’s plausible enough to deserve serious thought.

If you graduated with a degree in English or History back in 2006, what would you do with that degree? If you wanted a secure, stable, prestigious, high-paying job, you could go to law school and be a lawyer. If you wanted to live on the East Coast and work in an industry with a romantic reputation, you could work in media or publishing. If you just wanted intellectual stimulation and prestige, you could try for academia. If you just wanted security and stability and didn’t care that much about money or glamour, you could be a K-12 teacher, or work for the government.

This wealth of career paths probably made young people feel that it was safe to major in the humanities — that despite the stereotype that you couldn’t do anything with an English degree, there was still tons of work out there for them if they wanted it. Studying humanities was fun, it made you feel like an intellectual, and the social opportunities were probably a lot better than if you were stuck in a lab or in front of a computer screen coding all day. And if after a few years enjoying the fullness of youth you felt like going nose-to-the-grindstone and getting that big suburban house and dog and kids and two-car garage like your parents had, well, you could just go to law school.

But in the years after the Great Recession, every one of these career paths has become much more difficult.

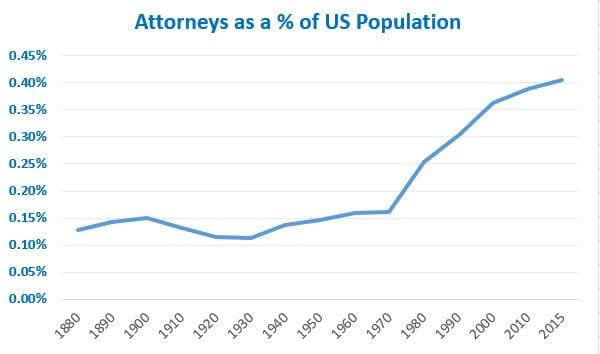

First, let’s start with the most important one: the humanities major’s ultimate fallback, the legal profession. Starting around 1970, there was a massive boom in the number of lawyers per capita in the U.S., but by the turn of the century it had started to level off:

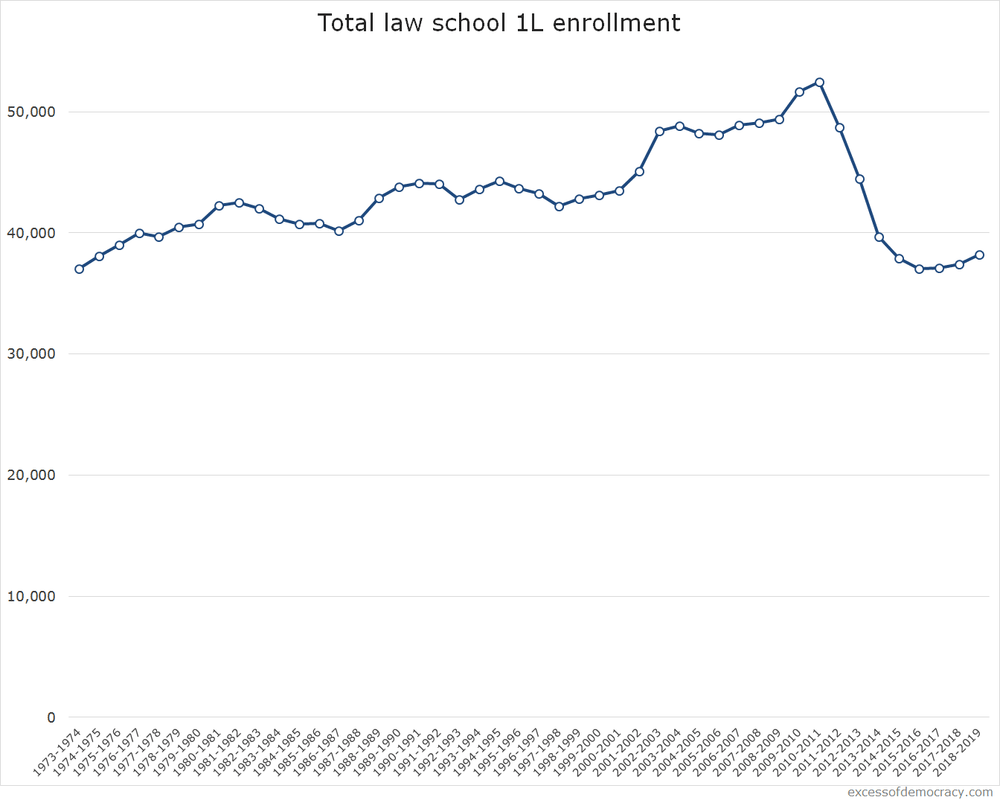

As Jordan Weissmann wrote back in 2012, the shakeout that began in 2008 led to a stagnation in employment in legal services. You could see it in little things like the decline of the “billable hour” (which basically raised lawyers’ incomes by allowing them to overcharge a bit). There was a glut of young people going to law school, but not enough jobs to fulfill their expectations. So after a few years, people realized this, and there was a big crash in law school enrollment:

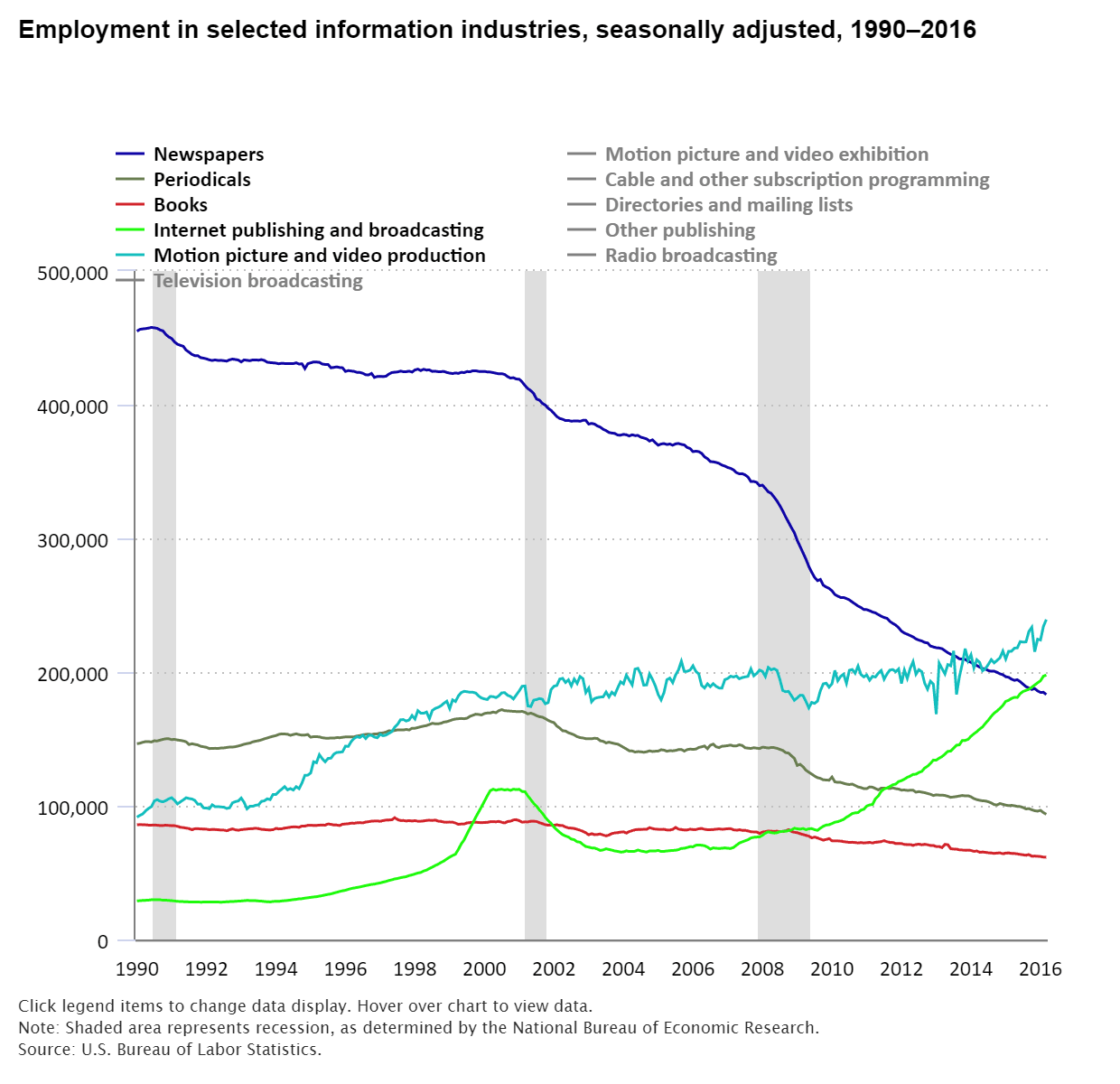

How about publishing? Here, the decline of titans like Conde Nast is no mere anecdote. The industry also suffered from the Great Recession, but it was probably in long-term decline since the turn of the century:

There’s the internet, of course. Digital publishing is growing, but it seems highly unlikely to make up for the devastation in newsrooms, books, and magazines:

As for academia, tenure-track hiring in the humanities was never exactly robust, but with the decline in higher education funding after the Great Recession, it went into deep decline:

Universities were saving money by replacing tenured faculty with low-paid adjuncts. This led to the horror stories of adjuncts sleeping in their cars, hanging on year after year in the desperate hope that somehow they would catch a lucky break and ascend into the ranks of the tenure track.

How about a job as a public servant? 2008 marked the end of a long boom in government employment:

The same story holds in the K-12 teaching profession. In addition to stagnating employment after 2008, that industry is anything but cushy:

So all of these traditional career paths for humanities graduates suffered in the late 2000s and 2010s. But at the same time, there had been a giant boom in the number of people studying humanities. I showed the percentages above, but looking at the raw numbers gives a better idea of how many people surged into these fields in the 2000s and early 2010s:

That surge set a lot of people up for career disappointment at exactly the wrong time.

So who was hiring in the 2010s? Not Wall Street, which had been at least temporarily tamed by the financial crisis and the Dodd-Frank financial reform. Finance became a tamer and moderately less prestigious industry, and overall employment stagnated. Michael Lewis was famously able to take his art and archaeology degrees and walk into a job as a bond salesman in the 1980s, but in the shakeout after 2008 that was just a lot less possible.

There was Silicon Valley, of course; the 2010s featured the Second Tech Boom, the rise of Google and Facebook and the rest of Big Tech, and the explosion of the venture-funded startup economy. But overall information technology jobs were also in the dumps; sure, you could make a lot of money as an engineer at Google, but “learn to code” is not exactly something you want to be told right after graduating with a degree in art history.

So yes, there were jobs out there in the 2010s. But everything was a lot more competitive than in the decades before — even just to get a regular boring job in corporate America, you had to fight and scrabble. And the kind of intellectually rewarding or socially prestigious careers that humanities majors had prepared for were in especially short supply.

The Elite Overproduction Hypothesis says that this situation produced a combustible social environment that exploded into the unrest of the late 2010s. But why would that happen? Here we have to turn to theory.

The basic concept here hearkens back to the mid-20th-century idea of the “revolution of rising expectations”. Here’s a concise statement of it:

In the 1960s researchers in sociology and political science applied the concept of the revolution of rising expectations to explain not only the attractiveness of communism in many third world countries but also revolutions in general, for example, the French, American, Russian, and Mexican revolutions. In 1969 James C. Davies used those cases to illustrate his J-curve hypothesis, a formal model of the relationships among rising expectations, their level of satisfaction, and revolutionary upheavals. He proposed that revolution is likely when, after a long period of rising expectations accompanied by a parallel increase in their satisfaction, a downturn occurs. When perceptions of need satisfaction decrease but expectations continue to rise, a widening gap is created between expectations and reality. That gap eventually becomes intolerable and sets the stage for rebellion against a social system that fails to fulfill its promises.

In fact, this idea hearkens back at least to Alexis de Tocqueville, and is sometimes called the Tocqueville Effect. Some people claim to have found evidence for this process.

Why would this happen? If things get better for 20 years and then stop, why would you be mad? After all, at least things are better than they were 20 years ago, right?

But expectations matter. In the finance world, a number of economists have recently been playing around with the idea of “extrapolative expectations”. Basically, when a trend goes on long enough, people start to think there’s some sort of structural process underlying the trend, and therefore they assume the trend will continue indefinitely. For upwardly mobile people, or people in an economy that’s growing rapidly, or people whose stocks or houses are appreciating steadily in value, good times might come to seem normal.

And then what happens when it turns out that good times aren’t baked into the nature of the Universe? Suddenly, the mediocrity of reality intrudes upon the complacent expectations of eternal upward growth — housing prices plateau or fall, incomes hit a ceiling, economic growth stalls out. At this point, people could get quite angry. The economists Miles Kimball and Robert Willis have a theory that happiness is just the difference between reality and expectations. If things are better than you predicted, you’re happy; if things are worse, you’re upset. Kimball and Willis formalize the idea with math, but in fact “Happiness = Reality - Expectations” is already a common saying. Evidence from surveys generally supports the idea.

Together, this expectations-based theory of happiness, along with the idea that expectations are extrapolative, makes for a combustible mix. Extrapolative expectations are almost always unrealistic — growth trends don’t continue forever, so people are setting themselves up for disappointment.
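To make the interaction concrete, here is a toy sketch of the two ideas combined. It is not Kimball and Willis's formal model; the growth rate, the five-period lookback window, and the function names are all assumptions chosen purely for illustration. Expectations simply extend the recent average growth rate, and "happiness" is reality minus that expectation.

```python
def extrapolated_expectation(history, window=5):
    """Expect the next value to continue the average growth rate
    observed over the last `window` steps (hypothetical rule)."""
    recent = history[-(window + 1):]
    if len(recent) < 2:
        # With a single observation there is no trend to extrapolate.
        return history[-1]
    steps = len(recent) - 1
    avg_growth = (recent[-1] / recent[0]) ** (1 / steps)
    return history[-1] * avg_growth


def happiness_gaps(reality, window=5):
    """Return reality[t] minus the expectation formed from reality[:t],
    for every t >= 1."""
    gaps = []
    for t in range(1, len(reality)):
        expected = extrapolated_expectation(reality[:t], window)
        gaps.append(reality[t] - expected)
    return gaps


# Assumed path: 20 periods of 3% growth, then a flat plateau.
boom = [100 * 1.03 ** t for t in range(21)]
plateau = [boom[-1]] * 5
reality = boom + plateau

gaps = happiness_gaps(reality)
for t, gap in enumerate(gaps, start=1):
    print(f"t={t:2d}  reality - expectation = {gap:+.2f}")
```

In this sketch the gap sits at roughly zero while the trend holds, because reality keeps matching the extrapolation. It turns negative the moment growth stops, even though the level of reality never falls, and it only fades as the plateau gradually fills the lookback window.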

A number of people have invoked this idea to explain the massive global wave of protests in 2019 and 2020. Some World Bank researchers wrote that “[Latin American] protesters…are emboldened by recent social gains, rather than by worsening conditions, to demand levels of fairness and equality which are still far from their reality.” In Chile, the Latin American country with the most intense and widespread protests, there was also a growth slowdown in the mid-2010s after decades of rapidly rising living standards:

Anyway, it’s pretty simple to apply this to the U.S. in the 2010s. Productivity growth, which had been robust since the early 90s, slowed down sharply around 2005. Housing prices — a big determinant of middle-class wealth — plateaued in 2006 and began to decline in 2007. And the economy crashed in the Great Recession.

But for elites, especially those on the humanities track, the years after the Great Recession were a particularly brutal slap in the face. Income had largely stagnated for Americans with low and medium incomes, but for people in the upper middle class there had still been steady growth — and the upper middle class is the class in which college graduates typically expect to find themselves. The fact that so many young people flooded into humanities majors in the 2000s and early 2010s suggests that lots of them expected a double bounty — to be able to earn a good income while also having a career that fit their personal interests.

In a 2013 blog post, Tim Urban showed some data that supports this story. Here is a Google Ngrams search for the phrase “a fulfilling career”:

Urban somewhat mockingly depicts educated Millennial expectations with the following meme:

I don’t think people deserve to be mocked for having great expectations for their lives, or for being frustrated when those expectations don’t pan out. Try to think of things from the perspective of a 25-year-old who just graduated from UC Davis in 2010 with an English degree. For the past four years, you’ve lived the life of an intellectual — you’ve read dozens of books, expanded your mind with a hundred deep ideas about society and history and the purpose of life, spent long nights discussing and debating those ideas with people just as smart as you are. And all that time, whether you’re the first in your family to go to school or a scion of an upper-middle-class family looking to make your parents proud, you’ve been told that college is the ticket to a spot in the top 20% of American society. You and your parents have certainly paid a price tag that reflects that expectation! And on top of that, everyone has told you that you can (and should!) find a career doing something you love, something that helps the world, and something that uses the education you paid so much to get.

Then you graduate, and nobody wants lawyers, magazines are dying, newsrooms are dying, universities aren’t hiring, and your best bet is either to roll the dice again with years of grad school or to claw your way into some corporate drone job where you’ll be filing TPS reports all day while your diploma rots in a box in your parents’ attic. Meanwhile you’re stuck with $40,000 in undergraduate debt, and the payments are now coming due. It’s neither entitled nor bratty nor arrogant to be unhappy with that outcome.

So I think this is a strong candidate for explaining why unrest exploded among the American elite in the late 2010s.

I’ve been talking about humanities majors so far, because Ben Schmidt’s data is so striking, and because humanities careers seem to have borne the brunt of the post-2008 economic shakeout. But although it may have been most intense among downwardly mobile or unemployable English majors, the unrest in the 2010s was really a broader phenomenon that touched most of America’s young elites.

Various polls throughout the decade showed that young Americans with college degrees were a bit less happy at work than their high-school-educated peers, despite making a lot more money.

It’s easy to draw a line between this unhappiness and the socialist movement in the U.S. Socialism rapidly became more popular among young Americans in the 2010s, and the Bernie Sanders movement exploded upon the national scene. The socialist movement has people from all classes, but overall it’s far from a proletarian movement — this is fundamentally a revolt of the professional-managerial class, or at least the people who expected their education to make them a part of that class. It’s telling that two of the new socialist movement’s most passionate crusades have been student debt forgiveness and free college.

For me, a telling anecdote that first clued me into this hypothesis was when I debated Jacobin writer Meagan Day in 2018. When I pointed out that very few Americans are financially destitute, she responded that “it’s not just destitution, it’s disappointment”, and proceeded to describe her own frustration with the two unpaid internships she went through as a struggling college-educated writer. (This is far from the only such origin story.) From that moment onward, when socialists with college degrees talked to me about the “working class”, it became clear to me that the class they were describing was themselves.

But educated youth unrest in the late 2010s went far beyond socialism. In the 60s it was the urban poor who rioted, but surveys found that the people who flooded into the streets during the massive protests of summer 2020 were disproportionately college-educated. It’s even possible to see wokeness itself as partly an expression of frustration with the stagnant hierarchies of elite society in early 2010s America. After all, if the number of spots at university departments and companies and schools and government agencies suddenly stops growing, it means that young people’s upward mobility will be blocked by an incumbent cohort of older people who — given the greater discrimination and different demographics of earlier decades — are disproportionately White and male.

We like to think of revolutions as being carried out by downtrodden factory workers and farmers, and in some cases that’s true. But frustrated and underemployed elites are uniquely well-positioned to disrupt society. They have the talent, the connections, and the time to organize radical movements and promulgate radical ideas. So far, education polarization means that a large fraction of the non-college majority hasn’t chosen to join these movements (or has expressed unrest in different, far less intellectual ways). But a society that generates a large cohort of restless, frustrated, talented, highly educated young people is asking for trouble.

So if the Elite Overproduction Hypothesis is broadly correct, how do we get out of this mess? If happiness equals reality minus expectations, simple math tells us that we basically have two options for pacifying our educated youth — improve reality, or reduce expectations.

Improving reality is very hard, but we’re working on it. The industrial policies of the Biden administration are aimed at jump-starting faster economic growth, and more progressives are talking about an “abundance agenda” that would reduce the cost of living for Americans of all classes. But barring a lucky break like the simultaneous tech boom and cheap oil of the 1990s, boosting growth and abundance will be painstakingly slow going. It will also require overcoming the opposition of a whole lot of vested interests — particularly local NIMBYs — who themselves will be disappointed and angry if the government railroads their parochial preferences to fulfill its national objectives.

A more feasible strategy is to reset expectations to a more realistic — or even pessimistic — level. If we take humanities majors as a measure of economic optimism, we can already see this happening, as young people turn to more practical degrees. Interestingly, Google Ngrams for “a fulfilling career” have now ticked down as well. The over-optimistic angry Millennial generation may soon be supplanted by a Generation Z whose modest expectations echo those of their Gen X parents in the late 70s and early 80s.

There may be things that cultural creators and media figures like myself might be able to do to help this “expectations reset” along. Perhaps we should emphasize grit and struggle instead of talking so much about wealth and personal fulfillment.

The government and universities have to be part of this too. Canceling student debt is fine, but long-term reforms to reduce the cost of college, and the debt burdens students incur, will reduce the stakes of the post-college job scramble. Universities should avoid marketing materials that depict them as a golden ticket to wealth and intellectual fulfillment, and should offer career counseling that prepares students for a realistic job market. And government should implement apprenticeships, vocational education, free community college, and other programs that make working-class life a decent bet. In addition to reducing inequality, this will make college graduates feel less “elite” relative to their non-college peers.

Of course, an expectations reset isn’t permanent. If we manage to restore good times, future generations will get used to that upward escalator, and they’ll form extrapolative expectations for their own glide path to success. But that’s a worry for the future. If the Elite Overproduction Hypothesis is true, then our best bet to calm our age of unrest is to bring our dreams down to Earth.

2025-07-02 16:16:00

“I wanted the money.” — Edward Pierce

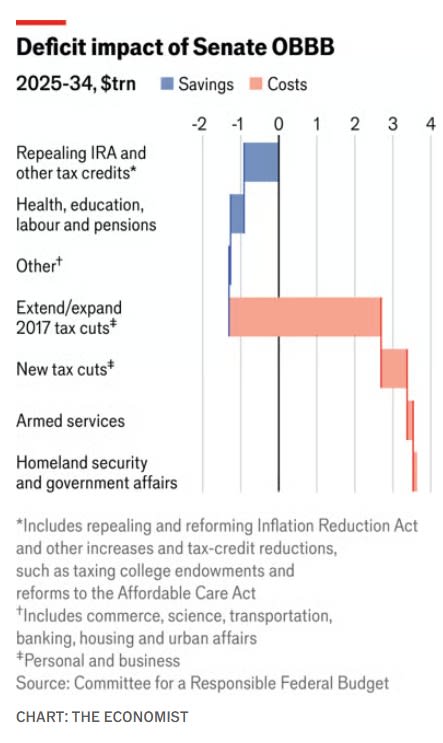

Donald Trump has done away with much of the Reaganite conservative ideology that defined the Republican party of my youth. But one Reagan tradition remains in place: Every time the GOP finds itself in control of both Congress and the Presidency, they pass a giant tax cut. Bush did this in 2001, Trump did this in 2017, and now Trump is about to do it once again in 2025. Trump’s rather ludicrously titled One Big Beautiful Bill Act is, first and foremost, a tax cut bill. The Economist tallied up the numbers on the version of the OBBBA that just passed the Senate, and found that tax cuts dominated everything else in terms of their impact on the government’s finances:

A more detailed breakdown is available from the Committee for a Responsible Federal Budget. The New York Times has found similar numbers for the House version of the bill.

A lot of people are mad about the OBBBA, for many reasons — the health insurance cutoffs, the huge cuts to scientific research, the crazy energy policy, and so on. But really, this bill is first and foremost about tax cuts for the rich.

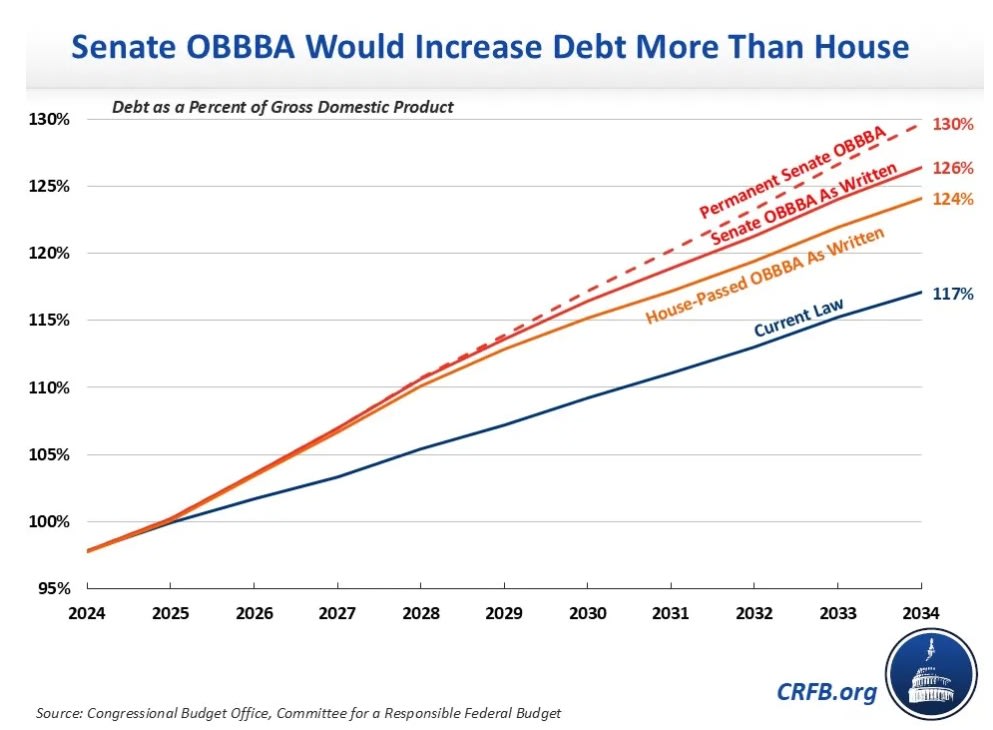

Those new tax cuts will require a staggering amount of government debt. Even with all the money that the OBBBA will cut from Medicaid, energy, and so on, the Senate version will add an estimated $3.9 trillion to the federal debt over the next decade. Federal debt is already on an unsustainable path, but this will get much worse after Trump’s big tax cut:

In fact, that’s a low estimate; the truth is probably much worse. As the CRFB points out, Trump’s bill pretends that some of its tax cuts will expire in the future, when in fact they will probably be made permanent. That would raise the debt cost of the bill by an additional trillion dollars or more.

What is the point of borrowing money to cut taxes? Every time they do this, Republicans claim that their tax cuts will supercharge economic growth so much that they’ll pay for themselves, and actually reduce the deficit. And if you go on the White House website, you can still find them making this claim:

MYTH: The One Big Beautiful Bill “increases the deficit.”

FACT: The One Big Beautiful Bill reduces deficits by over $2 trillion by increasing economic growth and cutting waste, fraud, and abuse across government programs at an unprecedented rate….President Trump’s pro-growth economic formula will reduce the deficit…

MYTH: “But the CBO says….”

FACT: The Crooked Budget Office has a terrible record with its predictions and hasn’t earned the attention the media gives it. The CBO misreads the economic consequences of not extending the Trump Tax Cuts. The One Big Beautiful Bill delivers real savings that will unleash our economy and prevent the largest tax hike in history, resulting in historic prosperity, while lowering the debt burden.

In the 1980s, you could probably find a few economists who actually believed that tax cuts would pay for themselves. Nowadays, no one really thinks this is true; every big tax cut since the Reagan days has increased the federal debt.

In fact, Trump’s 2017 tax cuts were better in this regard than most. Economists generally agree that corporate taxes are more harmful to the economy than personal income taxes, so when Trump cut the corporate tax rate, some were optimistic. But while Trump’s first tax cut did spur a bit of growth, Chodorow-Reich and Zidar (2024) find that this only offset a tiny amount of the deficit that the tax cut created:

Domestic investment of firms with the mean tax change increases 20% versus a no-change baseline. Due to novel foreign incentives, foreign capital of U.S. multinationals rises substantially. These incentives also boost domestic investment, indicating complementarity between domestic and foreign capital. In the model, the long-run effect on domestic capital in general equilibrium is 7% and the tax revenue feedback from growth offsets only 2p.p. of the direct cost of 41% of pre-TCJA corporate revenue.
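For a sense of scale, the two figures in that last sentence can be put side by side. This is a back-of-the-envelope reading of the quoted abstract, assuming both numbers are measured as percentages of pre-TCJA corporate revenue; the variable names are mine.

```python
# Figures taken from the quoted abstract, both read as a
# percentage of pre-TCJA corporate tax revenue (an assumption).
DIRECT_COST_PCT = 41.0     # direct revenue cost of the corporate tax cut
FEEDBACK_OFFSET_PP = 2.0   # percentage points recovered through growth

# What remains of the cost after the growth feedback.
net_cost_pct = DIRECT_COST_PCT - FEEDBACK_OFFSET_PP

# Fraction of the direct cost that growth paid back.
share_offset = FEEDBACK_OFFSET_PP / DIRECT_COST_PCT

print(f"Net cost: {net_cost_pct:.0f}% of pre-TCJA corporate revenue")
print(f"Share of the cost offset by growth: {share_offset:.1%}")
```

On that reading, growth recovered roughly one-twentieth of what the corporate cut cost.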

This time around, things are likely to be even worse, because of long-term interest rates.

In the late 2010s, for reasons we still don’t entirely understand, America was still in a disinflationary regime, where the Fed could and did keep interest rates at zero without causing inflation. ZIRP meant that big deficits didn’t push up interest rates. Since the pandemic, however, America has been in an inflationary regime, where the Fed has had to keep interest rates around 4-5% in order to prevent inflation from rising. In other words, it looks like interest rates have normalized, and that means there are probably no more free lunches.

There are basically three ways that increased debt can make long-term interest rates rise.

First, more government borrowing can increase inflation. People may assume the government will print money in order to help pay off the debt in the future. This can become a self-fulfilling prophecy, where businesses raise prices in order to get ahead of the inflation — thus causing the very inflation they feared. In this case, the Fed will have to hike short-term rates in order to squash inflation, and raising short-term rates causes long-term rates to rise as well.

Second, higher deficits can crowd out private investment. If banks and bond investors have only a limited amount to invest, then higher government debt can starve the private sector of capital, forcing everyone to pay higher interest rates in order to borrow.

Finally, higher deficits can introduce a default risk premium into long-term interest rates. Investors may assume that the government’s reckless borrowing is a sign that it doesn’t take its own future solvency seriously. At that point, they may start charging the U.S. government a premium to borrow money. If that happens, private businesses have to pay higher rates too.

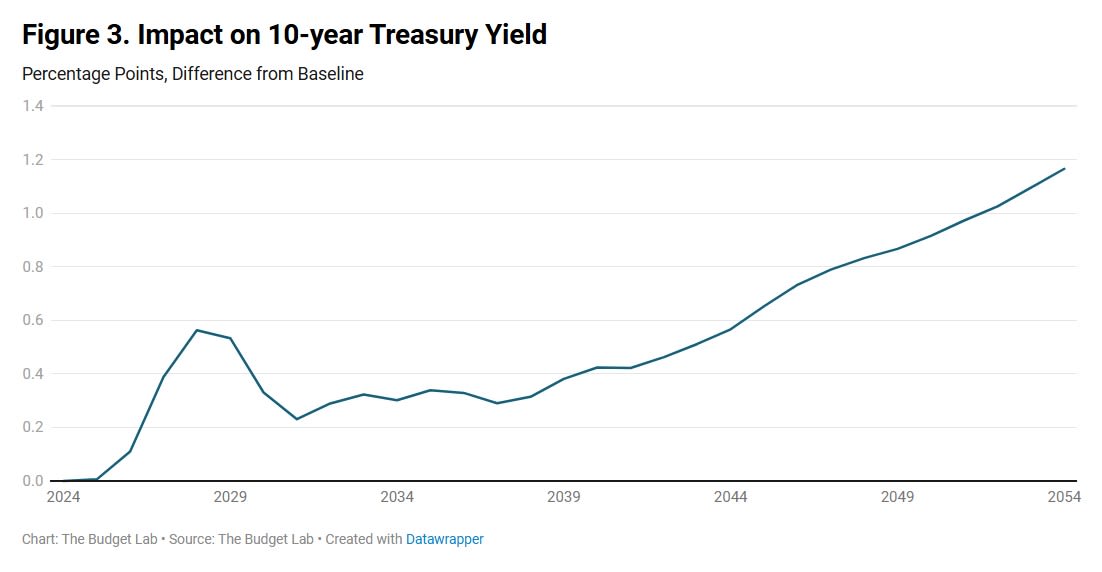

The Yale Budget Lab thus predicts that Trump’s OBBBA will cause long-term rates to rise:

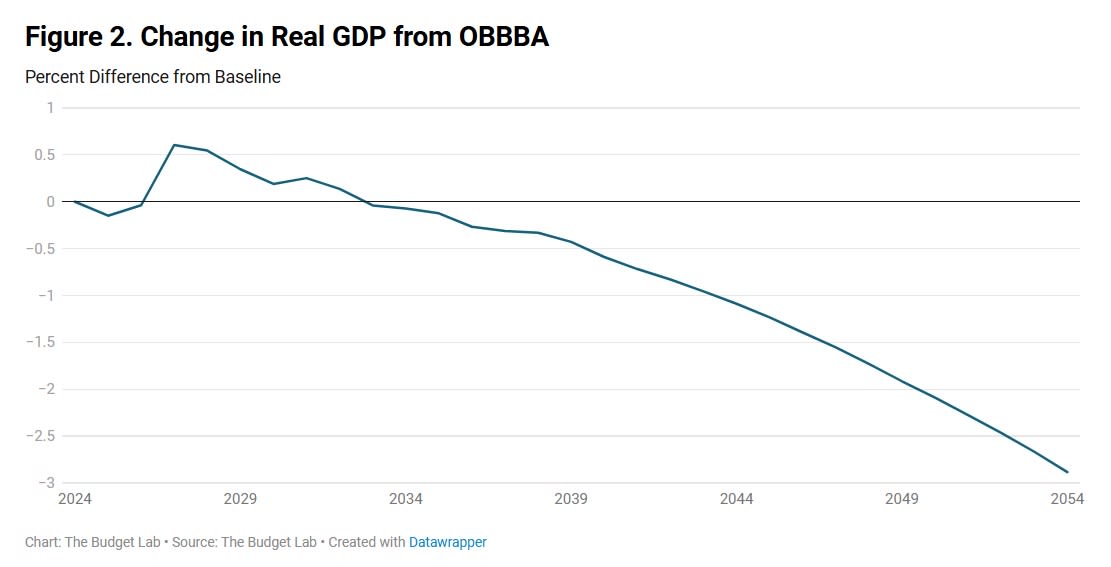

This would hurt U.S. economic growth, since higher interest rates make it harder for companies to borrow and invest and grow:

A 3% lower GDP is only a small hit to growth, but it’s in the wrong direction — instead of Trump’s new tax cuts paying for themselves, they’ll actually make themselves slightly harder to pay for. And increased rates will also put the U.S. government in a more perilous fiscal position, forcing it to borrow more and more just to service its debt in the future. This line is only going to go higher:

So I doubt that anyone in the administration or the GOP still believes the old line that tax cuts pay for themselves.

If juicing economic growth isn’t actually the point, why is the GOP running up government deficits in order to cut taxes by trillions of dollars? Essentially, they’re promising to tax tomorrow’s taxpayers — whether via higher taxes, inflation, or a sovereign default — in order to give today’s taxpayers a rebate. Why would they do that?

I can think of a number of reasons, none of them very encouraging about the state of our nation. The GOP might simply have an eye on the electoral cycle, hoping to hand out goodies in order to secure a donor base and shore up support among the wealthy voters who have been defecting to the Democrats in recent elections. They might think that a short-term Keynesian stimulus from tax cuts might outweigh the long-term economic drag for a couple of years, allowing them to do better in the 2026 midterms or the 2028 presidential election.

The darkest possibility is that some Republican leaders think that America is effectively a walking corpse of a nation — that its future is nonwhite and non-Christian, and therefore Republicans might as well simply raid and scavenge as much as they can before America turns into South Africa.

But that’s probably too dark. I think the most likely explanation is that Republicans and their existing donor base simply want the money. The U.S. electorate’s obsession with culture wars gives elites an opening to simply raid the U.S. Treasury without suffering major consequences. Few people really like the OBBBA, and the public probably realizes that the bill is an engine of upward redistribution. But maybe the GOP thinks that when the next election comes around, voters will grumblingly ignore bread-and-butter issues and vote based on immigration, DEI, trans issues, or whatever the current culture war happens to be.

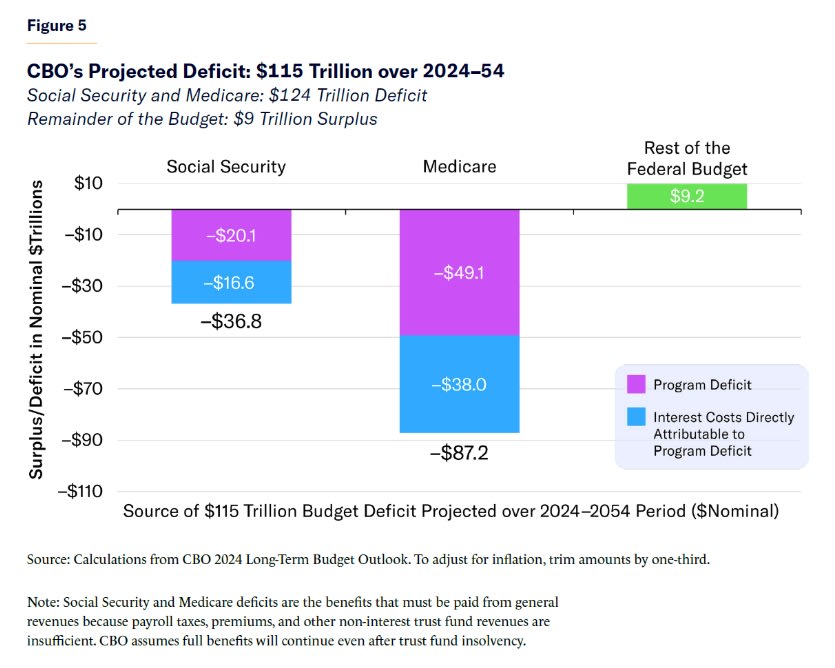

In any case, America simply can’t afford to keep giving big tax cuts to rich people. The government was already running massive deficits, and paying an increasing interest bill on its existing stock of debt. On top of that, the aging of the U.S. population means that Medicare (and to a lesser extent, Social Security) is going to end up costing a lot more over the next three decades:

All of this means that the U.S. has to both raise taxes and cut spending in order to maintain solvency and keep interest costs down. The Medicaid cuts that the Republicans are enacting are cruel, but unless our government comes up with some way to control health costs much more effectively than ever before, something along those general lines will eventually be necessary. There just isn’t much else that the government spends a lot of money on; defense spending has been cut to the bone, even as foreign threats proliferate:

So health spending was always going to have to be cut, and even if the poor could have been spared the brunt of those cuts, it was always going to hurt the middle class. That’s bad.

The way to make that bargain seem fair was always to tax the rich. In the 1990s, Bill Clinton hiked taxes on the rich, and managed to raise federal revenue from 16.7% of GDP in 1992 to 19% of GDP by 1998. 2.3% of GDP might not sound like a lot, but today that would be $690 billion a year, or $6.9 trillion over a decade. The rich didn’t even get particularly mad about this. And it ended up making Clinton’s fiscal austerity a lot more palatable to the masses, because people knew the rich were paying their fair share to help bring the deficit down.
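The arithmetic behind those dollar figures can be checked in a few lines. The GDP level is an assumption: the post does not state one, but a nominal GDP of roughly $30 trillion is what the $690 billion figure implies. The decade total here simply multiplies by ten, ignoring growth and inflation.

```python
def revenue_gain(share_before, share_after, gdp):
    """Extra annual revenue from raising federal revenue's share of GDP."""
    return (share_after - share_before) * gdp


# Assumption: about $30 trillion of nominal GDP, implied by the
# $690 billion figure rather than stated in the post.
ASSUMED_GDP = 30e12

# Federal revenue as a share of GDP: 16.7% in 1992, 19% by 1998.
annual = revenue_gain(0.167, 0.19, ASSUMED_GDP)

# Simple ten-year total with no growth or inflation adjustment.
decade = annual * 10

print(f"Per year:    ${annual / 1e9:,.0f} billion")
print(f"Over decade: ${decade / 1e12:,.1f} trillion")
```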

And yet somewhere in the years since Clinton, the Democrats lost their appetite for taxing the rich. Obama taxed the rich a little bit, and Biden only by a token amount. The U.S. went from having one fiscally responsible party in the 1990s to having zero fiscally responsible parties. Debt soared under Obama, soared again in Trump’s first term, and then soared again under Biden:

If politicians keep getting rewarded for blowout deficits, massive health care spending increases, and tax cuts for the rich, the U.S. government’s solvency is eventually going to be called into question. We don’t know exactly when that will happen, but the amount of fiscal irresponsibility that bond market investors will be willing to tolerate is not infinite.

There are lots of things America’s government can no longer afford. One of those things is tax cuts for the rich.

Update: Why am I only saying “raise taxes on the rich” instead of “raise taxes on the middle class”? Commenter Dan writes:

The unpleasant reality that neither party wants to contemplate is that we don't just need to raise taxes and cut spending: we need to raise taxes _on the middle class_ and significantly cut spending. America already has one of the most steeply progressive tax systems in the world. There are lots of loopholes we can and should close to prevent the ultra-rich from paying less than their fair share -- I favor eliminating preferential capital gains tax, eliminating step-up in basis, taxing unrealized gains used as collateral to secure loans, and significantly reducing inheritance tax exemptions, myself -- but there just aren't enough ultra-rich to tax to plug the budget gaps, and they're already paying a significantly outsized share of taxes relative to their percentage of total national income.

People often idolize the welfare states of Northern Europe while neglecting to notice that those welfare states are not paid for with punitive taxes on the rich, but rather by tax rates on the middle class that are close to double what Americans pay. We've been living beyond our means as a nation for some time now, and that bill is coming due. And both parties are simply hoping the other one is forced to answer the door when the debt collectors come knocking.

Dan is absolutely right that if America wanted to have a welfare state like a European country, then we would have to raise taxes on the middle class — ideally through a VAT.

But America does not have a European-style welfare state. We have a welfare state, but it’s focused mostly on the poor, with highly targeted benefits. European countries, in contrast, provide broad, less-targeted services toward their middle classes and poor. This is why Europe’s government spending tends to be higher than America’s by about 8 to 12 percent of GDP (a really big gap):

Yes, if we want to be like Europe, we’re going to need big middle-class tax hikes. Progressives like Bernie Sanders don’t seem to understand this yet, but it’s true.

BUT, what America needs now is austerity. Before we decide whether we want a European-style welfare state, we need to get our fiscal house in order. That will involve painful spending cuts, especially to health care. Those cuts will fall heaviest on the middle class. To ask middle-class Americans to pay higher taxes on top of those benefit cuts would be both unfair and politically impossible.

Hence, for the sake of austerity, what we need are higher taxes on the rich. I’m absolutely looking forward to paying my fair share of that.

MMT people and some progressives will tell you that we don’t have to raise taxes or cut spending, because a sovereign country without much external debt can never be forced to default. This is technically true — with a few legal changes, the Fed could just print money to cover U.S. debts — but that would cause spiraling inflation, which is even more painful than austerity.

2025-07-01 16:38:15

America is an unsettled place. These days, it feels like every racial and religious group has reason to fear being singled out and persecuted, either with official discrimination or with outright violence.

Hispanic Americans — many of whom voted for Donald Trump — must now endure video after video of masked ICE agents storming buildings and carting away peaceable people, even some with U.S. citizenship. White Americans are subjected to an endless parade of stories about elite universities, publishers, and other institutions refusing to hire them no matter how qualified they are. Asian Americans were subjected to the terror of a wave of violent racist attacks a few years ago, even as they also hear about being kept out of the Ivy League. Black people have been somewhat less targeted for persecution in recent years than before, but still suffer some racist terror attacks, even as the Trump administration tries to scrub mentions of Black contributions from government communications. Muslim Americans suffered a wave of Islamophobic attacks in the mid-2010s, peaking around the time of Trump’s first election. Indian Americans, Haitian Americans, Somali Americans, and various other recent immigrant groups have all been the targets of xenophobia.

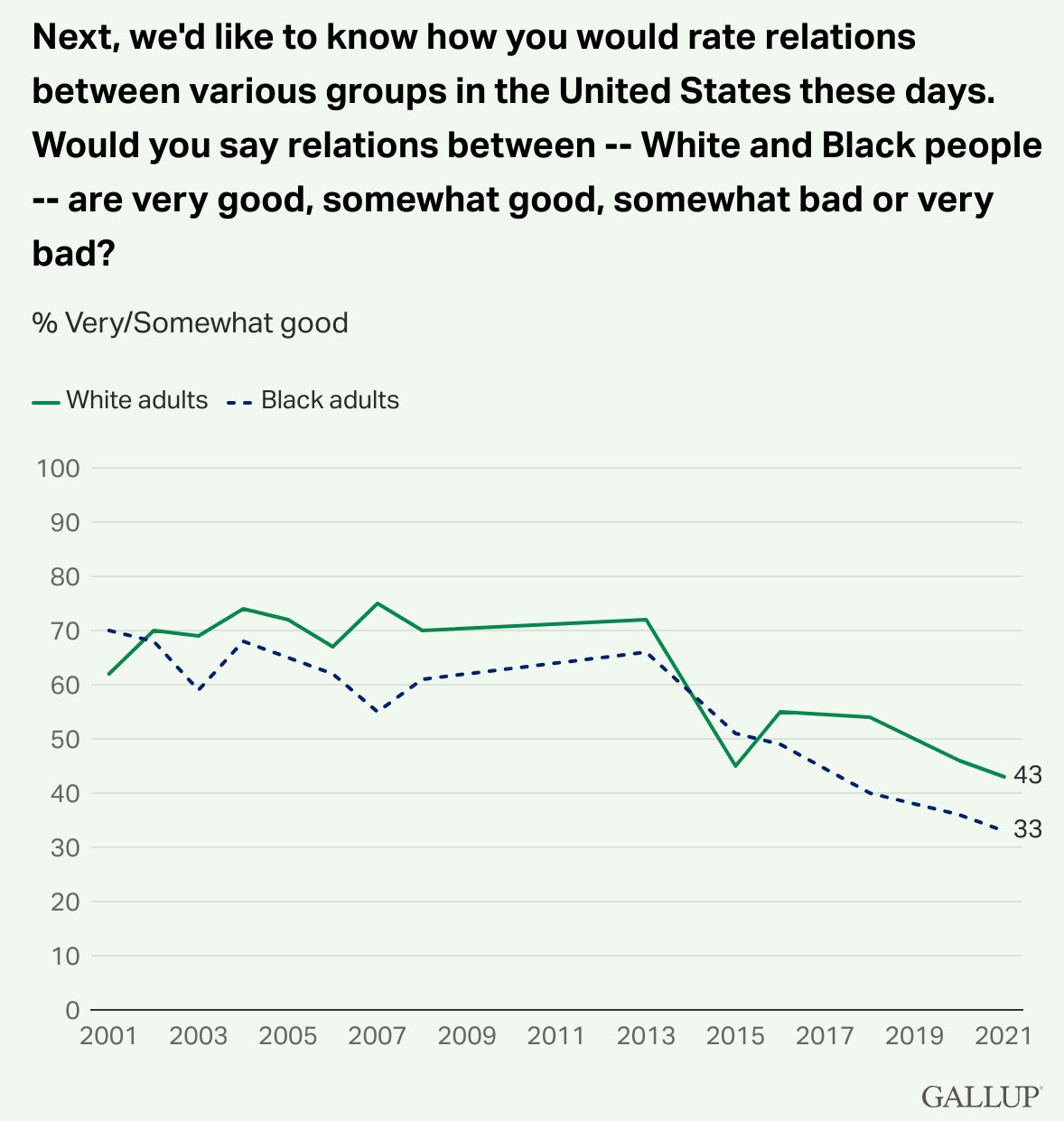

Americans keenly feel this general sense of racial, ethnic, and religious tension. Perceptions of race relations went into a steep long-term decline around a decade ago:

Jewish Americans have not been immune to this trend. In the 2010s, there was a wave of antisemitism on social media. In 2017, rightists at the infamous Charlottesville rally shouted “Jews will not replace us!” And 2018 marked the deadliest antisemitic terror attack in the country’s history, when a white supremacist killed 11 Jews at a synagogue in Pittsburgh. There was also another less deadly synagogue shooting by another white supremacist in California in 2019. And in December of 2019, there were several attacks on Jews by a fringe sect called the Black Hebrew Israelites.

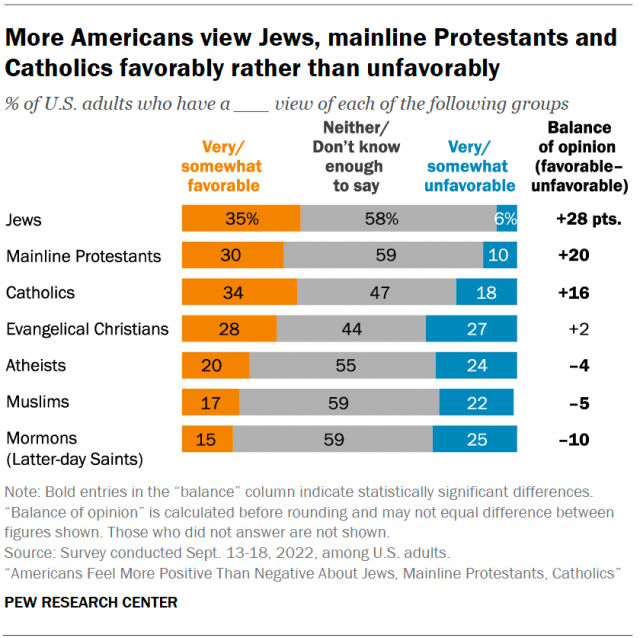

But despite these attacks, Jewish Americans could still tell themselves, even in the turbulent 2010s, that they were secure and accepted in their country. Surveys found that Americans were friendlier toward Jews than toward almost any other religious group:

This is probably not just lip service. Switching from face-to-face interviews to anonymous online surveys does NOT prompt Americans to express more antisemitism, as it would if people were just keeping their dislike of Jews under wraps for the sake of political correctness.

Jews also benefitted from not being at the center of any of the identity conflicts of the late 2010s. Although most Jews are racially White, their ancestors largely did not own any slaves, and they were not numerous or influential at the time of the founding of America; thus, they were able to partially dodge progressive accusations of white supremacy. And because they’re not a recent immigrant group, Jewish Americans also escaped the anti-immigrant backlash of the MAGA movement. At a time when conflicts over race and immigration made almost everyone in America feel threatened and unsettled, Jews were still able to feel relatively settled in their nation.

Then, of course, came October 7th, 2023. Hamas’ attacks on Israel, and Israel’s subsequent attacks on Gaza, rocketed the Palestinian cause from just one element in the left’s mosaic of issues to its central, defining cause. Although technically, opposition to the state of Israel is not the same thing as antisemitism, not all of the activists in the Palestine movement make such a clear distinction. Around the world, there has been a massive rise in antisemitic incidents — desecration of Jewish cemeteries and Holocaust memorials, vandalism of Jewish-owned businesses with no connection to Israel, attacks on synagogues, and so on.

The U.S. hasn’t been insulated from this wave. The FBI, which is unlikely to conflate anti-Israel sentiments with antisemitism (or at least, was unlikely to do this under President Biden), nevertheless recorded a 63% increase in anti-Jewish hate crimes from 2023 to 2024. The two people slaughtered in a gruesome execution-style killing in May at the Capital Jewish Museum in Washington, D.C. turned out to be Israeli embassy staffers, but the killer, who told the police “I did it for Palestine, I did it for Gaza”, couldn’t have known who they were. He just wanted to shoot someone at a Jewish museum, and assumed that this would strike a blow for Palestine.

It’s hard to forget this iconic image, from a rally of the New York chapter of the Democratic Socialists of America on October 8, 2023 — before Israel began its reprisals on Gaza:

To some, this simply seems like the continuation of a very old pattern, in which each country inevitably becomes hostile to Jews after a temporary period in which it was safe and welcoming, eventually forcing Jews to flee. In March of 2024, Franklin Foer wrote an article for The Atlantic entitled “The Golden Age of American Jews is Ending”. He wrote:

Liberalism helped unleash a Golden Age of American Jewry, an unprecedented period of safety, prosperity, and political influence. Jews, who had once been excluded from the American establishment, became full-fledged members of it. But that era is drawing to a close…Americans maintain a favorable opinion of Jews…But the memory of how quickly the best of times can turn dark has infused the Jewish reactions to events of the past decade. “When lights start flashing red, the Jewish impulse is to flee,” Jonathan Greenblatt, the head of the Anti-Defamation League, told me…

Back in 2016…after [Trump] won, many Jews actually hatched contingency plans…An immigration lawyer I know in Cleveland told me that he had obtained a German passport, and suggested that I call the German embassy in Washington to learn how many other American Jews had done the same…

I [recently] saw signs of flight in Oakland, where at least 30 Jewish families have been approved to transfer their children to neighboring school districts—and I heard similar stories in the surrounding area. Initial data collected by an organization representing Jewish day schools, which have long struggled for enrollment, show a spike in the number of admission inquiries from families contemplating pulling their kids from public school.

I wouldn’t panic just yet. But I think there are multiple forces that are causing American Jews to feel unsettled — a perfect storm. These are:

Extremists looking for clout in a particularly turbulent time

The resurgence of antisemitic memes, driven by the rise of social media and foreign influence campaigns

Jews’ declining importance in the American elite

2025-06-29 17:53:45

There are many things to despise about Trump’s deeply unpopular budget bill, known as the One Big Beautiful Bill, or BBB. It would expand the national debt to dangerous levels with irresponsible and unnecessary tax cuts. It would shift the distribution of income upward. But perhaps the most ridiculous, pointless, and downright insane feature of the BBB is its attack on American energy supply.

Previous versions of the BBB eliminated government subsidies for solar and wind energy. The new version now being debated in the Senate would actually add a tax on solar and wind energy. Politico reports:

Senate Republicans stepped up their attacks on U.S. solar and wind energy projects by quietly adding a provision to their megabill that would penalize future developments with a new tax…The new excise tax is another blow to the fastest-growing sources of power production in the United States, and would be a massive setback to the wind and solar energy industries since it would apply even to projects not receiving any [tax] credits…

Analysts at the Rhodium Group said in an email the new tax would push up the costs of wind and solar projects by 10 to 20 percent — on top of the cost increases from losing the credits…

The provision as written appears to add an additional tax for any wind and solar project placed into service after 2027…if a certain percentage of the value of the project’s components are sourced from prohibited foreign entities, like China. It would apply to all projects that began construction after June 16 of this year.

The language would require wind and solar projects, even those not receiving credits, to navigate complex and potentially unworkable requirements that prohibit sourcing from foreign entities of concern — a move designed to promote domestic production and crack down on Chinese materials.

In keeping with GOP support for the fossil fuel industry, the updated bill creates a new production tax credit for metallurgical coal, which is used in steelmaking.

Jesse Jenkins, a widely respected energy modeler and Princeton engineering prof, has estimated how much this GOP bill would raise taxes on solar energy, and it’s a lot:

Later in his thread, he explains how he arrived at these estimates.

But it gets worse! As Jenkins notes, the bill would also tax nuclear and geothermal energy and battery storage, and subsidize the coal industry:

The new draft of the 'One Big Beautiful Bill'…now contains FOUR tax increases on wind & solar projects — and two facing nuclear, geothermal, and batteries.

It ends tax cuts for wind & solar projects…

It kills accelerated depreciation available to wind & solar investments since 1986…

It imposes a new tax on wind & solar projects…

Raises taxes on US wind manufacturers…

The bill ALSO raises taxes on batteries, geothermal and nuclear projects that can't meet significant, burdensome requirements to prove not a drop of Chinese content as well. And they all lose accelerated depreciation too it seems…

The kicker: the bill raises taxes on the electricity technologies of the future while ALSO creating a new subsidy for coal used for steel making, coal that we…export to China so they can dump cheap dirty steel on the global market! THAT is the GOP's plan for energy dominance??…

And of course, it does that while murdering 100s of US manufacturing projects set to employ 100s of thousands of Americans in good paying jobs building the energy technologies of the future.

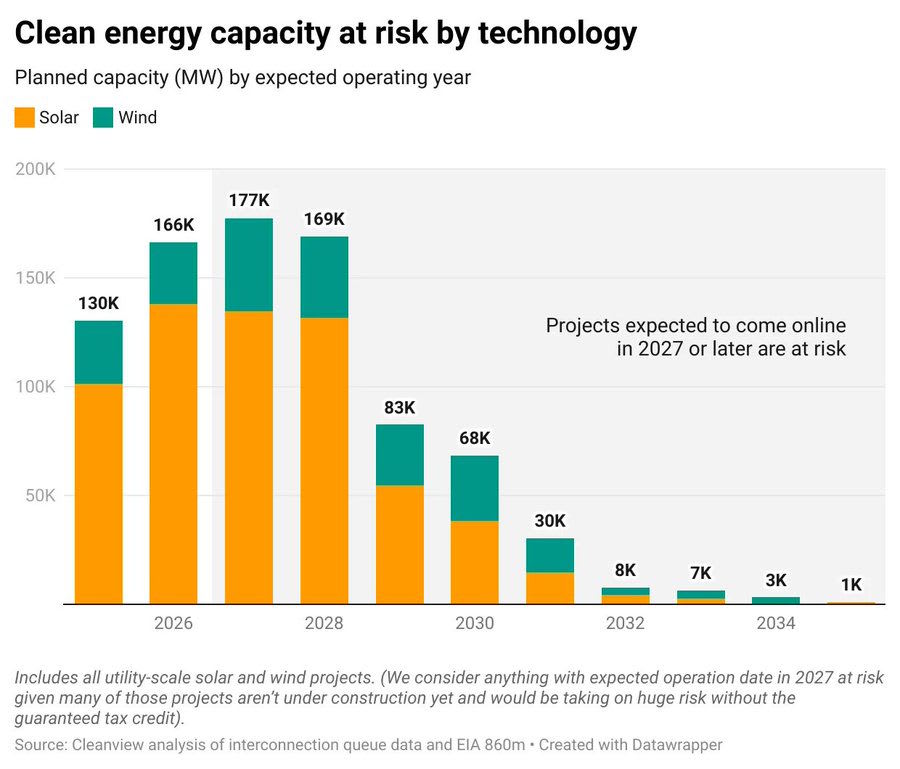

Michael Thomas of Cleanview, a company that tracks energy-related data, estimates that this bill will lead to the cancellation of more than 500 GW of planned energy supply in the U.S.:

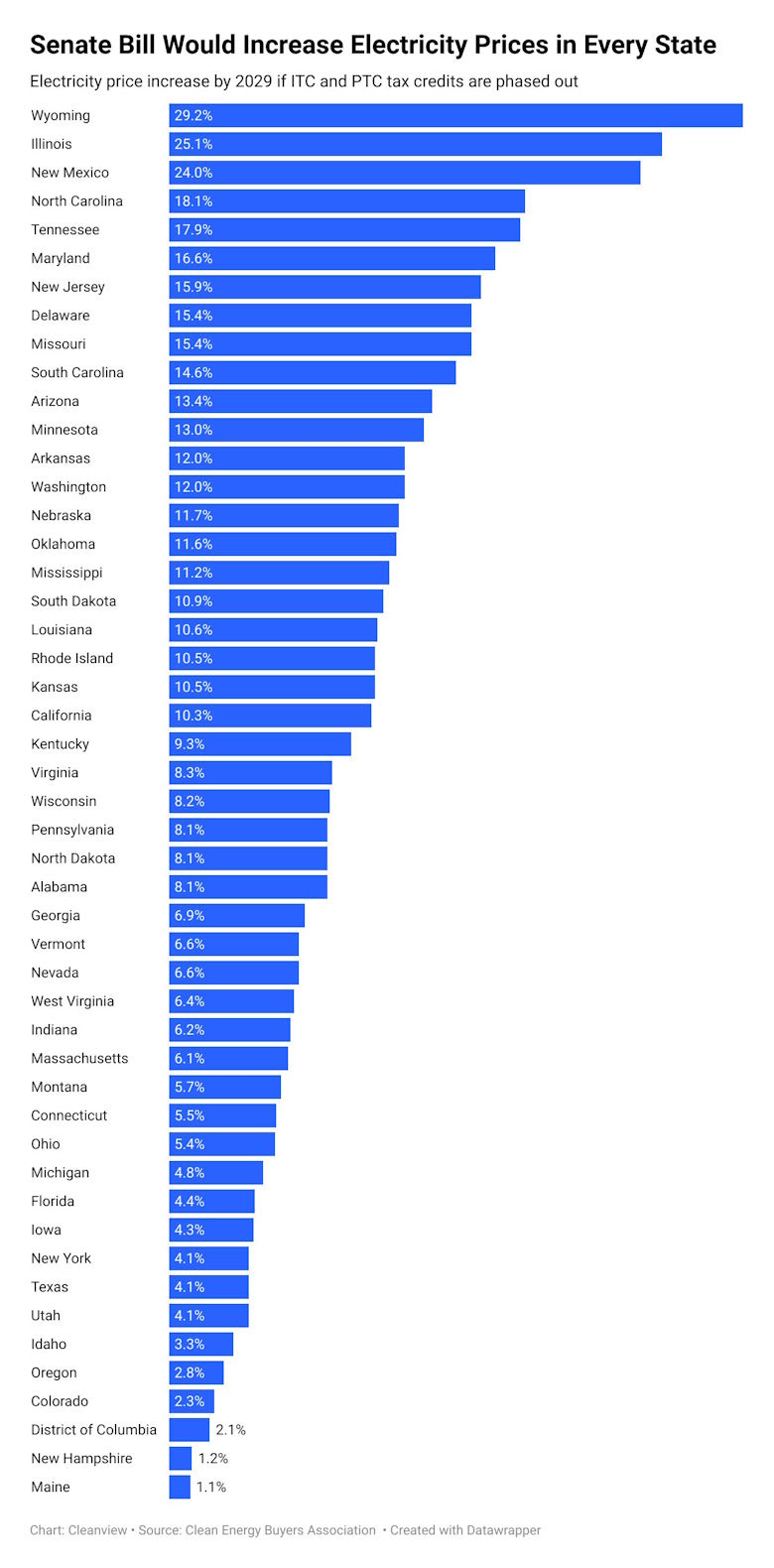

That would have represented more than a 42% increase in U.S. electricity production, and it would have gone to power homes, factories, offices, data centers, and more. Now, under Trump’s budget, that will all be gone. Thomas estimates that this will lead to substantial increases in electricity bills for Americans:

CNN has an interactive tool that allows Americans to see how much their energy bills could go up if Trump’s BBB passes, according to estimates from the think tank Energy Innovation. They write:

Red states including Oklahoma, South Carolina and Texas could see up to 18% higher energy costs by 2035 if Trump’s bill passes, compared with a scenario where the bill didn’t pass…Annual household energy costs could rise $845 per year in Oklahoma by 2035, and $777 per year in Texas. That’s because these states would be set to deploy a massive amount of wind and solar if Biden-era energy tax credits were left in place. If that goes away, states will have to lean on natural gas to generate power.

Trump’s bill wouldn’t just make energy more expensive; it would make it less reliable too. In Texas, adding solar and batteries to the grid has allowed it to avoid blackouts. This is from the New York Times last year:

During the scorching summer of 2023, the Texas energy grid wobbled as surging demand for electricity threatened to exceed supply. Several times, officials called on residents to conserve energy to avoid a grid failure.

This year it turned out much better — thanks in large part to more renewable energy.

The electrical grid in Texas has breezed through a summer in which, despite milder temperatures, the state again reached record levels of energy demand. It did so largely thanks to the substantial expansion of new solar farms.

And the grid held strong even during the critical early evening hours — when the sun goes down and the nighttime winds have yet to pick up — with the help of an even newer source of energy in Texas and around the country: batteries.

The federal government expects the amount of battery storage capacity across the country, almost nonexistent five years ago, to nearly double by the end of the year. Texas, which has already surpassed California in the amount of power coming from large-scale solar farms, was expected to gain on its West Coast rival in battery storage as well.

Here are some quotes from the CEO of ERCOT, Texas’ grid operator, which explain why adding solar and batteries makes electricity more reliable:

A little over 9,000 megawatts of new energy coming online, new supply. The bulk of that is in the solar and energy storage categories…Those are extremely helpful during the summer seasons…The peak in the summer of course is in the afternoon at the peak heat when air conditioning load is at its highest. Solar energy is very well suited to help support that. Energy storage resource is very well positioned to help during the evening ramps…We are seeing as a result of this that the peak risk hour which is generally around 9 PM in the summer evenings, that the risk of emergency events during that hour is shrinking, dropping from over 10% a year ago to under 1% this year, again because of the… new resources.

And of course nuclear and geothermal, which Trump also wants to raise taxes on, are among the most stable energy sources that exist.

It’s also notable how many GOP districts and red states would be hurt by this disastrous policy. Although I don’t believe the purpose of industrial policy should be to create jobs, it’s undeniable that the boom in solar, wind, and batteries has generated a ton of economic activity in Republican-leaning areas.

Why would Trump and the GOP Congress want to raise energy prices for Americans, make electricity less reliable, and destroy their own voters’ jobs? One possibility is that the GOP is captured by fossil fuel interests, which could explain why the BBB goes after nuclear and geothermal as well as renewables. Although I think this is probably true of some representatives and senators, I don’t think Trump himself is captured by Big Fossil Fuels — especially because he was perfectly willing to hurt those industries with his tariffs.

I find two additional reasons to be especially compelling:

Trump and his people are deeply ignorant and misinformed about how energy works, and

Trump and his people see attacks on solar, batteries, and other non-fossil-fuel energy technologies as part of a culture war against progressive culture, which often overrides economic concerns.

Trump’s budget bill claims a national security justification for its taxes on solar, batteries, nuclear and geothermal — it frames the taxes as being about blocking imports from China. But in his public communications, Trump’s Energy Secretary Chris Wright makes it clear that the real goal is to attack new energy technologies themselves:

How much would you pay for an Uber if you didn’t know when it would pick you up or where it was going to drop you off? Probably not much…Yet this is the same effect that variable generation sources like wind and solar have on our power grids…You never know if these energy sources will actually be able to produce electricity when you need it — because you don’t know if the sun will be shining or the wind blowing…Even so, the federal government has subsidized these sources for decades, resulting in higher electricity prices and a less stable grid.

President Donald Trump knows what to do: Eliminate green tax credits from the Democrats’ so-called Inflation Reduction Act, including those for wind and solar power…As secretary of energy — and someone who’s devoted his life to advancing energy innovation to better human lives — I, too, know how these Green New Deal subsidies are fleecing Americans…Wind and solar subsidies have been particularly wasteful and counterproductive…

Climate change activists are predictably up in arms over efforts to end the subsidies…

If sources are truly economically viable, let’s allow them to stand on their own, and stop forcing Americans to pick up the tab if they’re not. [emphasis mine]

Wright’s statements — which we should assume speak for the Trump administration and the BBB’s supporters in general — make three things clear.

First, Trump and GOP leaders deeply and honestly believe that non-fossil-fuel energy sources are unreliable. This is because they’re grossly misinformed. They have not examined the data from Texas’ positive experience with solar and batteries. They do not understand how much batteries have come down in cost, and how much cheaper they could get. They do not understand the basic fact that batteries can also be charged by natural gas plants, meaning that batteries make the grid more reliable no matter how the power is being produced.

This is partly because the Trump administration, and the GOP leadership, are trapped in an information bubble in which they only hear from people who bash solar and batteries. Consider this tidbit from a recent NYT article:

Alex Epstein, an author and founder of a think tank that argues fossil fuels are crucial for human prosperity, is one of the most influential figures calling for the permanent elimination of all clean-energy subsidies by 2028…He has described wind and solar subsidies as “immensely harmful” to the nation’s power grid and “a cancer we have to get rid of.”…In a recent interview on Mr. Epstein’s podcast, Representative Chip Roy, Republican of Texas, said wind and solar jobs made the United States “weaker” and likened subsidies that create such employment to the drug trade. Mr. Epstein agreed, calling them “fentanyl jobs.”

These are the kinds of people that Trump is listening to. They are not serious, respected, or honest energy modelers like Jesse Jenkins. They are polemicists who have a fixed belief that solar and batteries are bad, and they have never updated this belief in light of the incredible cost declines for those technologies.

Second, it’s clear that Trump and the GOP leadership think the main purpose of solar and batteries is to reduce carbon emissions to fight climate change. Their statements about these technologies constantly reference “climate”. The notion that solar and batteries actually make energy cheaper for Americans, with or without climate change, has not entered the collective Republican consciousness yet.

And finally, it’s clear that Trump and the GOP leadership see their attacks on American energy supply as part of a culture war rather than purely economic policy. This is apparent from Wright’s gleeful mention of how angry “climate change activists” will be at Trump’s bill. It’s also obvious from the words like “cancer” and “fentanyl” that opponents of solar and batteries use to describe those technologies. And from the disingenuousness of Wright’s argument — he says solar and batteries should “stand on their own”, while trying to levy heavy taxes on them — we should conclude that there are deeper motivations at work than pure dollars and cents.

Basically, most of the GOP decided long ago that solar and batteries were some hippy-dippy bullshit that lefty activists were trying to force on America in order to bring down capitalism. And they have simply not reconsidered, looked at new evidence, or updated their belief in decades, even as advances in solar and battery technology have utterly changed the game. They are confident that attacking solar and batteries won’t hurt the U.S. economy, and so will give them an easy, safe way to own the libs.

One Republican who clearly does understand both the power and the importance of solar and batteries is Elon Musk, who has been tweeting relentlessly against Trump’s bill, and who makes many good points about the importance of new energy technologies. For example:

Musk knows that if AI data centers can’t get batteries, they can’t operate cheaply in the U.S. And if data centers leave the U.S., China will have a much easier time winning the AI race.

But Trump and his people are not listening to Musk. They are not listening to anyone, except to ignorant fawning toadies who flatter their existing beliefs and insult their enemies for them. Their fingers are stuck firmly in their ears, so they won’t hear the sounds of catastrophe as they kick over the physical foundations of American prosperity.

This was also the pattern we saw with tariffs, an act of national self-sabotage Trump only paused when the bond market threatened to demolish the entire U.S. economy before Trump’s term was up. This time, bond markets are unlikely to intervene, since the threat comes from a stolen future rather than a disrupted present.

The American people, for the most part, get the general gist of Trump’s ideology-driven approach to economic policy, and they’re not happy about it. Poll after poll shows that even many Republicans despise the One Big Beautiful Bill. This is from NBC a few days ago:

Nonpartisan polls released this month show that voters have a negative perception of the bill…A Fox News poll found that 38% of registered voters support the “One Big Beautiful Bill” based on what they know about it, while 59% oppose it.

The survey found that the legislation is unpopular across demographic, age and income groups. It is opposed 22%-73% by independents, and 43%-53% among white men without a college degree, the heart of Trump’s base.

A Quinnipiac University poll found that 27% of registered voters support the bill, while 53% oppose it. Another 20% had no opinion. Among independents, 20% said they support it and 57% said they oppose it.

A KFF poll found that 35% of adults have a favorable view when asked about the “One Big Beautiful Bill Act,” while 64% have an unfavorable view. Just 27% of independents said they hold a favorable view of it.

A survey from Pew Research Center found that 29% of adults favor the bill, while 49% oppose it. (Another 21% said they weren’t sure.) Asked what impact it would have on the country, 54% said “a mostly negative effect,” 30% said “a mostly positive effect” and 12% said “not much of an effect.”

A poll by The Washington Post and Ipsos found that 23% of adults support “the budget bill changing tax, spending and Medicaid policies,” while 42% oppose it. Another 34% had no opinion.
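To see how lopsided these numbers are, here is a small sketch that computes net support (support minus opposition) for each poll quoted above. The figures are taken directly from the NBC excerpt. The unweighted average is only an illustration and rests on a loud assumption: that polls with different populations (registered voters vs. adults) and different question wording (support/oppose vs. favorable/unfavorable) can be lumped together.

```python
# Support / oppose shares (percent) as quoted in the NBC roundup above.
POLLS = {
    "Fox News": (38, 59),
    "Quinnipiac": (27, 53),
    "KFF": (35, 64),                    # favorable / unfavorable
    "Pew": (29, 49),
    "Washington Post/Ipsos": (23, 42),
}


def net_support(polls):
    """Return support minus opposition, in percentage points, for each poll."""
    return {name: support - oppose for name, (support, oppose) in polls.items()}


nets = net_support(POLLS)
# Simple unweighted mean; illustrative only, not a proper polling average.
average_net = sum(nets.values()) / len(nets)

for name, net in nets.items():
    print(f"{name}: {net:+d} points")
print(f"Unweighted average: {average_net:+.1f} points")
```

Every poll in the list comes out underwater by roughly 20 points or more, which is the point the excerpt is making.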

The bill’s self-defeating policies are probably only a small part of this, especially since the Senate version of the bill — which actively taxes new energy technologies instead of just removing subsidies — hadn’t even been released when these polls were taken. Debt increases, Medicaid cuts, and tax cuts for the rich are driving these negative polls. But if and when Americans realize that the GOP is also trying to make their energy bills go up in order to fight a stupid outdated culture war, I expect them to sour on the bill even further.

But I’m not sure how much that will matter. Trump and the GOP leadership have shown that appeasing public opinion is very low on their list of priorities.

This is a recipe for national failure. Countries that turn their backs on the march of technology, whose leaders insist on ignoring extant reality in favor of internecine status battles and feuds, have historically declined, while countries that embrace technological progress and bow to physical realities have dominated. Right now, the country that is embracing progress and bowing to physical realities in the energy space is China, not America.

2025-06-27 14:45:23

“Can you picture what we’ll be/ So limitless and free/ Desperately in need of some stranger’s hand” — The Doors

In the 1990s and 2000s, a lot of science fiction focused on what Vernor Vinge called “the Singularity” — an acceleration of technological progress so dramatic that it would leave human existence utterly transformed in ways that it would be impossible to predict in advance. Vinge believed that the Singularity would result from rapidly self-improving AI, while Ray Kurzweil associated it with personality upload. But both believed that something big was on the way.

In the late 2000s and 2010s, as productivity growth slowed down, these wild expectations got tempered a bit. Cory Doctorow and Charles Stross poked fun at the idea of the Singularity as “the rapture of the nerds”. And some bloggers, like Brad DeLong and Cosma Shalizi, began to argue that the true Singularity was in the past, when the Industrial Revolution freed us from the constraints of daily hunger and scarcity. Here’s Shalizi:

The Singularity has happened; we call it "the industrial revolution" or "the long nineteenth century". It was over by the close of 1918…Exponential yet basically unpredictable growth of technology, rendering long-term extrapolation impossible (even when attempted by geniuses)? Check…Massive, profoundly dis-orienting transformation in the life of humanity, extending to our ecology, mentality and social organization? Check…Embrace of the fusion of humanity and machines? Check…Creation of vast, inhuman distributed systems of information-processing, communication and control, "the coldest of all cold monsters"? Check; we call them "the self-regulating market system" and "modern bureaucracies" (public or private), and they treat men and women, even those whose minds and bodies instantiate them, like straw dogs…An implacable drive on the part of those networks to expand, to entrain more and more of the world within their own sphere? Check…

Why, then, since the Singularity is so plainly, even intrusively, visible in our past, does science fiction persist in placing a pale mirage of it in our future? Perhaps: the owl of Minerva flies at dusk; and we are in the late afternoon, fitfully dreaming of the half-glimpsed events of the day, waiting for the stars to come out.

I agree that the Industrial Revolution represented an abrupt, unprecedented, and utterly transformational change in the nature of human life. Human life until the late 1800s had been defined by a constant desperate struggle against material poverty, with even the bounty of the agricultural age running up against Malthusian constraints. Suddenly, in just a few decades, humans in developed countries were fed, clothed, and housed, and had leisure time to discover who they really wanted to be. It was by far the most important thing that had ever happened to our species:

And it’s important to note that this transformation wasn’t just a result of technology giving humans more stuff. It depended crucially on reductions in human fertility. As Brad DeLong documents in his excellent book Slouching Towards Utopia, after a few decades, the Industrial Revolution prompted humans to start having fewer children, which prevented the bounty of industrial technology from eventually being dissipated by the old Malthusian constraints.

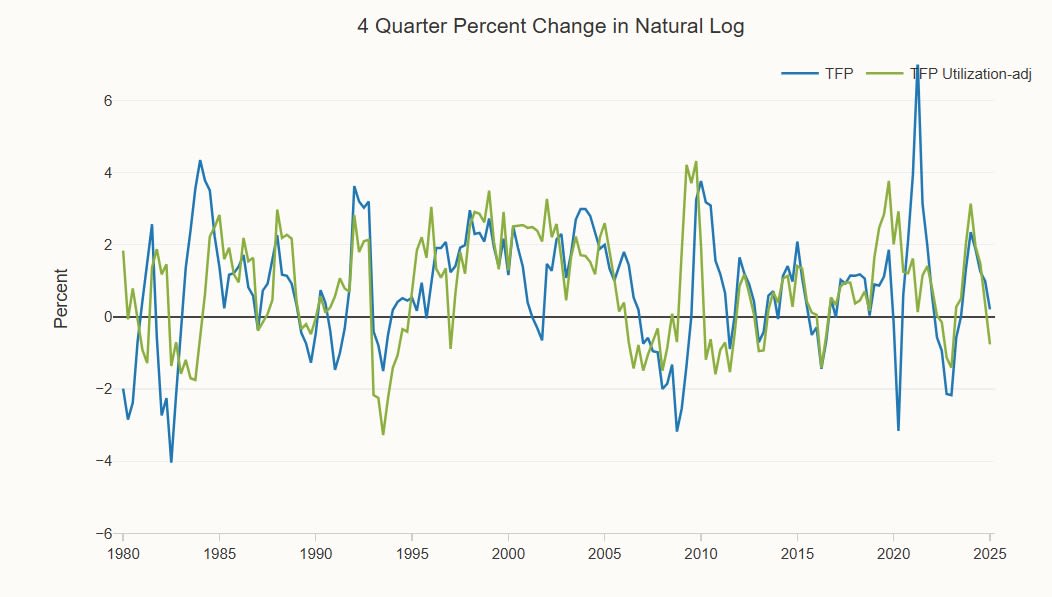

Since the productivity slowdown of the mid-2000s, it has become fashionable to say that the Singularity of the Industrial Revolution is over, and that humanity has reached a plateau in living standards. Although some people expect generative AI to re-accelerate growth, we haven’t yet seen any sign of such a mega-boom in either the total factor productivity numbers or the labor productivity numbers:

Of course, it’s still early days; AI may yet produce the vast material bounty that optimists expect. And yet even if it never does, I don’t think that means humanity is in for an era of stagnation. The Industrial Revolution was only transformative because it changed the experience of human life; a GDP line on a chart is only important because it’s correlated with so many of the things that matter for human beings.

And so if new technologies and social changes fundamentally alter what it means to be human, I think their impact could be as important as the Industrial Revolution itself — or at least, in the same general ballpark. In a post back in 2022 and another in 2023, I listed a bunch of ways that the internet has already changed the experience of human life from when I was a kid, despite only modest productivity gains. Looking forward, I can see even bigger changes already in the works.

In key ways, it feels like we’re entering a posthuman age.

When countries get richer, more urbanized, and more educated, their birth rates fall by a lot — this is known as the “fertility transition”. Typically, this means that the total fertility rate goes from somewhere between 5 and 7 to somewhere between 1.4 and 2. This is mostly a result of couples choosing to have fewer children. Here’s a chart where you can see the fertility transition for a bunch of large developing countries in Asia, Africa, Latin America, and the Middle East:

Two children per woman¹ is around the level where population is stable in the long term — actually, it’s about 2.1 for a rich country and 2.3 for a poor country, to take into account the fact that some kids don’t survive until adulthood. But basically, going from 5-7 kids per woman to 2 means that your population goes from “exploding” to “stable”.

For some rich countries like Japan, fertility fell to an especially low level, of around 1.3 or 1.4. This implied long-term population shrinkage — Japan’s population began shrinking in the 2000s — and an increasing old-age dependency burden. But as long as this low level of fertility was confined to a few countries, it didn’t feel like an emergency — a few rich nations like America, New Zealand, France, and Sweden still managed to have fertility rates that were at or near replacement. For everyone else, there was always immigration.

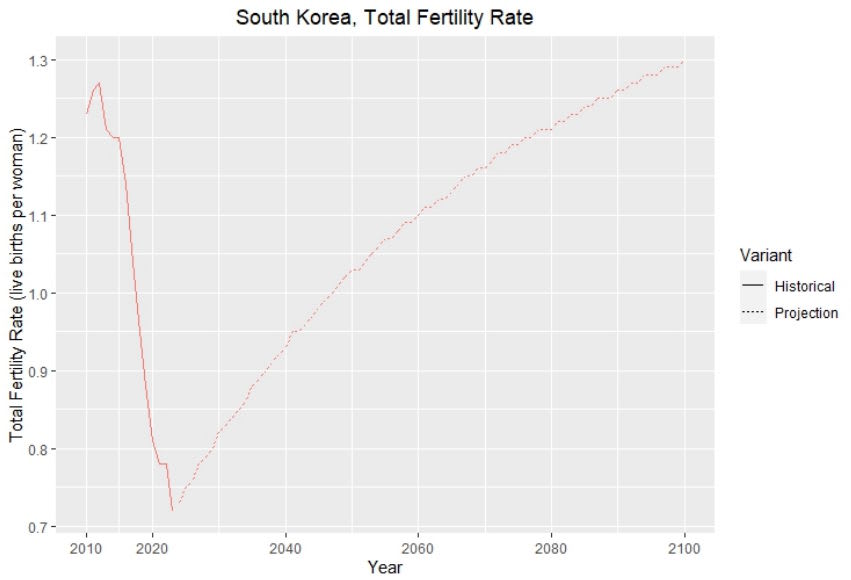

That’s where the dialogue on fertility stood in 2015. But over the past decade, there has been a second fertility transition in rich countries, from low levels to very low levels. Even countries like the U.S., France, New Zealand, and Sweden have now switched to rates well below replacement, while countries like China, Taiwan, and South Korea are at levels that imply catastrophic population collapses over the next century:

Meanwhile, the rate of fertility decline in poor countries has accelerated. The UN calls the drop “unprecedented”.

The economist Jesus Fernandez-Villaverde believes that things are even worse than they appear. Here are his slides from a recent talk he gave called “The Demographic Future of Humanity: Facts and Consequences”. And here’s a YouTube video of him giving the talk:

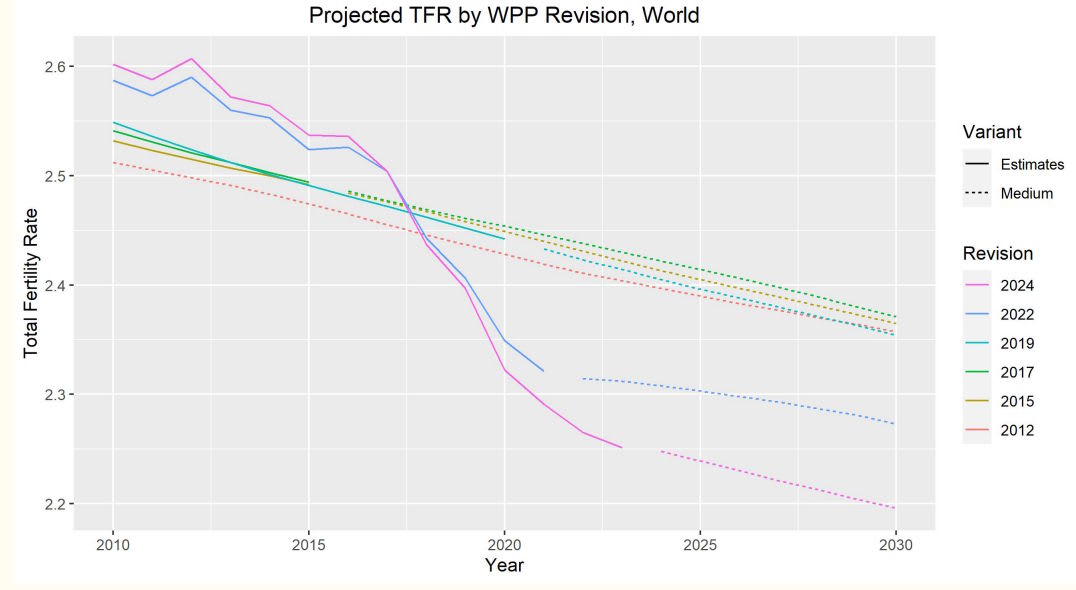

Fernandez-Villaverde notes that the statistical agencies tasked with estimating current global fertility and making future projections have consistently revised their numbers down and down:

This doesn’t just mean people are having fewer kids; it means that because of past errors in estimating how many kids people had, there are now fewer people to have kids than we thought. Fernandez-Villaverde shows that this is true across nearly all developing countries. As a result of these mistakes, Fernandez-Villaverde thinks the world is already at replacement-level fertility.

Furthermore, population projections are based on assumptions that fertility will bounce sharply back from its current lows, instead of continuing to fall. Those predictions look a little bit ridiculous when you show them on a graph:

As a result, Fernandez-Villaverde thinks total global population is going to peak just 30 years from now.

This is a big problem. The first fertility transition was a good thing — it was the result of the world getting richer, it saved human living standards from hitting a Malthusian ceiling, and it seemed like with wise policies, rich countries could keep their fertility near replacement rates. But this second fertility transition is going to be an economic catastrophe if it continues.

The difference between a fertility rate of 1 and a rate of 2 might seem a lot smaller than the difference between 2 and 6. But because of the math of exponential curves, it’s actually just as important a change. Going from 6 to 2 means your population goes from exploding to stable; going from 2 to 1 means your population goes from stable to vanishing.
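To make the exponential math concrete, here is a rough back-of-the-envelope sketch. It assumes each generation is about TFR divided by the replacement rate times the size of the one before it, using the 2.1 rich-country replacement figure mentioned earlier. The function names are hypothetical, and the model ignores age structure, mortality changes, migration, and the timing of births, so treat it as an illustration rather than a projection.

```python
# Toy model: each generation's size is (TFR / replacement) times the previous one.
# REPLACEMENT_TFR = 2.1 is the approximate rich-country figure cited in the post;
# everything else here is a simplifying assumption for illustration only.
REPLACEMENT_TFR = 2.1


def generation_multiplier(tfr, replacement=REPLACEMENT_TFR):
    """Approximate size of a generation relative to its parents' generation."""
    return tfr / replacement


def relative_cohort_size(tfr, generations, replacement=REPLACEMENT_TFR):
    """Birth cohort size after `generations` generations, with today's cohort = 1.0."""
    return generation_multiplier(tfr, replacement) ** generations


if __name__ == "__main__":
    for tfr in (6.0, 2.0, 1.0):
        sizes = [relative_cohort_size(tfr, g) for g in range(4)]
        print(f"TFR {tfr}: " + ", ".join(f"{s:.2f}" for s in sizes))
```

Under these assumptions, a fertility rate of 6 multiplies each birth cohort more than twentyfold over three generations, a rate of 2 leaves it only slightly smaller, and a rate of 1 shrinks it to roughly a tenth of its starting size. Because the multiplier compounds, the step from 2 to 1 changes the long-run trajectory about as dramatically as the step from 6 to 2.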

This is going to cause a lot of economic problems. I wrote about these back in 2023:

Shrinking populations are continuously aging populations, meaning that each young working person has to support more and more retirees every year. On top of that, population aging appears to slow down productivity growth through various mechanisms. Immigration can help a bit, but it can’t really solve this problem, since A) when the whole world has low fertility there is no longer a source of young immigrants, and B) immigration is bad at improving dependency ratios because immigrants are already partway to retirement.

And in the long run, shrinking populations could slow down productivity growth even more, by shrinking the number of researchers and inventors; this is the thesis of Charles Jones’ 2022 paper “The End of Economic Growth? Unintended Consequences of a Declining Population”. Unless AI manages to fully replace human scientists and engineers, a shrinking population means that our supply of new ideas will inevitably dwindle.² Between this effect and the well-documented productivity drag from aging, the idea that we’ll be able to sustain economic growth through automation seems dubious.

What’s going on? Unlike the first fertility transition, this second one appears driven by increasing childlessness — people never forming couples or having kids at all, rather than simply having fewer kids. And although it’s not clear why that’s happening, the obvious culprit is technology itself — mobile phones and social media. This is Alice Evans’ hypothesis, and there’s some evidence to suggest she’s right. In China, “new media” (i.e. social media) use was found to be correlated with low desire to have children. The same correlation has been found in Africa.

Of course, better research is needed, particularly natural experiments that look at the response to some exogenous factor that increases social media use. But the timing and the worldwide nature of the decline — basically, every region of the globe started getting sharply lower fertility starting in the mid to late 2010s — make it difficult to imagine any other cause. And the general mechanism — internet use substituting for offline family relationships — is obvious.

Economic stagnation isn’t the only way the Second Fertility Transition will change our society. The measures we take to try to sustain our population will leave their mark as well. Last November, I looked at the history of pronatal policies, and concluded that things like paying people to have more kids, or making it easier to have kids, or encouraging cultural changes are unlikely to work:

Unfortunately, that’s likely to lead to more coercive solutions. In my post, I predicted that countries would try to cut childless people off from old-age pensions and medical benefits:

In the past, when fertility rates were high, children served an economic purpose — they were farm labor, and they were also people’s old-age pension. If parents lived past the point where they were physically able to work, their children were expected to support them. In order to make sure you had at least a few kids who survived long enough to support you, you had to have a large family.

Denying old-age benefits to the childless would be an obvious way to try to reproduce this premodern pattern. This would, of course, result in horrific widespread old-age poverty for those who didn’t comply…I predict that some authoritarian states — China, perhaps, or Russia, or North Korea — will eventually turn to ideas like this if no one ever finds a way to raise fertility voluntarily.

This idea actually comes from a 2005 paper by Boldrin et al., who find that if you model fertility decisions as an economic calculation, then Social Security and other old-age transfers are responsible for much of the fertility decline in rich nations:

In the Boldrin and Jones' framework parents procreate because the children care about their old parents' utility, and thus provide them with old age transfers…The effect of increases in government provided pensions on fertility…in the Boldrin and Jones model is sizeable and accounts for between 55 and 65% of the observed Europe-US fertility differences both across countries and across time and over 80% of the observed variation seen in a broad cross-section of countries.

Ending old-age benefits for the childless would be a pretty dystopian policy. But in the long run, extreme population aging, coupled with slower productivity growth, will make it economically impossible for young people to support old people no matter what policies governments enact.³

And if desperate, last-ditch draconian measures fail, we will shrink and dwindle as a species. The vitality and energy of young people will slowly vanish from the physical world, as the youth become tiny islands within a sea of the graying and old. Already I can feel this when I go to Japan; neighborhoods like Shibuya in Tokyo or Shinsaibashi in Osaka that felt bustling and alive with young people in the 2000s are now dominated by middle-aged and elderly people and tourists.

And as population itself shrinks, the built environment will become more and more empty; whole towns will vanish from the map, as humanity huddles together in a dwindling number of graying megacities. Our impact on the planet’s environment will finally be reduced — we will still send out legions of robots to cultivate food and mine minerals, but as our numbers decrease, our desire to cannibalize the planet will hit its limits.

But even as humanity shrinks in physical space, we will bind ourselves more tightly together in digital space.

When I was a child, sometimes I felt bored; now I never do. Sometimes I felt lonely; now, if I ever do, it’s not for lack of company. Social media has wiped away those experiences, by putting me in constant contact with the whole vast sea of humanity. I can watch people on YouTube or TikTok, talk to my friends in chat groups or video calls, and argue with strangers on X and Substack. I am constantly swimming in a sea of digitized human presences. We all are.

Humanity was never fully an individual organism. Our families and communities were always collectives, as were the hierarchies of companies and armies and even the imagined communities of nation-states. But the internet has made the collective far larger than it was. In many ways it’s also more connected; one survey found that the average American spends 6 hours and 40 minutes, or more than a third of their waking life, online. About 30% of Americans say they’re online almost constantly.

The results of this constant global connectedness are far too deep and complex to deal with in one blog post. But one important result is to replace some fraction of individual human effort with the preexisting effort of the collective.

Instead of figuring out how to fix our own houses, build our own furniture, or install our own appliances, a human in 2021 could watch YouTube videos. Instead of figuring out how to write a difficult piece of code, a programmer could ask the Stack Exchange forum. Instead of creating a new funny video from scratch, a social media influencer could use someone else’s audio track. It simply became easier to stand on the shoulders of giants than to reinvent the wheel.

Whether this leads to an aggregate decrease in human creativity is an open question; some have made this argument, but I’m not sure whether it’s right.⁴ But what’s clear is that the more everyone is always relying on the collective for everything they do, the less individual effort matters. In the Industrial Age, we valorized individual heroics — the brilliant scientist, the iconoclastic writer, the contrarian entrepreneur, the bold activist leader. In an age when it’s always easier to rely on the wisdom of crowds, those heroes matter less.

Compare the activists of the 2010s to the activists of the mid 20th century. The 20th century produced Black activist leaders like MLK, John Lewis, Malcolm X, Rosa Parks, Bobby Seale, and many others. But who were the equivalent heroes of the Black Lives Matter movement of the 2010s? There were none.⁵ The movement was an organic crowd, birthed by social media memes instead of by rousing speeches. Each individual activist made tiny incremental contributions, and the movement rolled forward as a headless, collective mass.

Or consider science and technology in the age of the internet. China is now probably the world’s leader in scientific research, but it’s hard to name any major breakthrough that has come out of China in recent years; the innovations are important but overwhelmingly incremental. Even in the U.S., where incentives for breakthroughs are a little better, science has become notably less “disruptive” in recent years. Some of this may be because humans have already picked the low-hanging fruit of science, and some might be because of the increasing “burden of knowledge” for young researchers to get up to speed. But some might simply be because an age of seamless global information transmission makes it easier for researchers to get “base hits” while leaving the cost of “home runs” the same.

Even AI, the great breakthrough of the age, has been a massive collective effort more than the inspiration of a few geniuses. Even the people who have received the greatest honors for developing AI — Geoffrey Hinton, Yann LeCun, etc. — are not really regarded as the “inventors” of the technology. Towering figures are still somewhat common in biology — Kariko and Weissman, Doudna and Charpentier, Feng Zhang, Allison & Honjo, David Liu — but in the age of the internet, research is becoming a more collective enterprise.

And all that was before generative AI. Large language models are trained on the collected writings of humankind; they are an expression of the aggregated wisdom of our species’ collective past. When you ask a question of ChatGPT or DeepSeek, you’re essentially consulting the spirits of the ancestors.⁶

As with the internet, it’s unclear whether LLMs will make humanity more creative as a whole, or less. My bet is strongly on “more”. But at the individual level, AI substitutes for our own creative efforts. Kosmyna et al. (2025) recently did an experiment showing that people who use ChatGPT to help them write essays end up with weaker individual cognitive skills: