2025-06-27 21:05:00

Outrunning Giants: Building in OpenAI’s Shadow

From 2016 to 2021, a flurry of writing assistants, conversational chatbots, travel booking platforms, and meeting transcription tools popped up on the AI scene, many growing at a breakneck pace.

And then along came ChatGPT’s steady rollout of new features: a freemium chatbot and writing tools in 2022, Operator for booking in January 2025, and Record mode for meeting transcription in June 2025. Each one put real strain on startups trying to compete.

This is a refrain I hear over and over in consumer AI investing: how can you build consumer applications when OpenAI commands the lion’s share of user attention (and data) and can build any tool it wants?

When a single company begins to dominate a category, the instinct is to copy or cave. But the correct move is to find the spaces where the behemoth can’t compete, where its own size might work against it. During Web 2.0, Google was that behemoth, bulldozing everything from Yahoo and MapQuest to AltaVista and Ask Jeeves. Still, e-commerce and social carved out their own ground beyond Google’s grasp.

Now OpenAI towers in consumer AI with hundreds of millions of weekly users and vast compute at its back. So where might value accrue outside its shadow?

The Gravity of the Giant

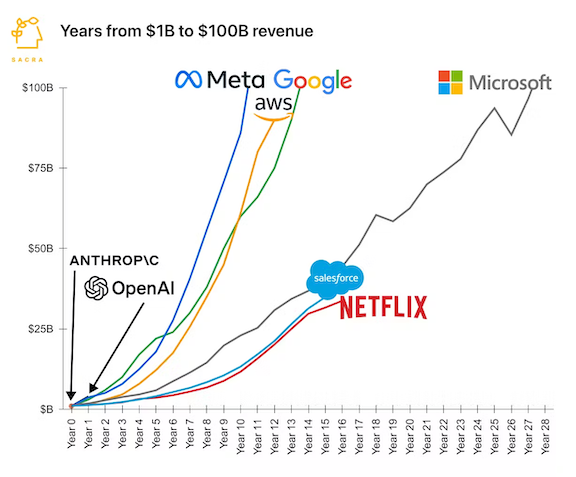

OpenAI’s ChatGPT has become a default destination for anyone exploring what consumer AI can do. Its freemium launch hit 1 million users in days and reached a record-breaking 100 million MAUs in just two months.

What’s even more remarkable is the retention: over 80% of active users return regularly, and paid subscribers show retention north of 70% after six months.

That level of attention fuels personalization: the more you use it, the more it learns your patterns, preferences, and shortcuts. It’s human nature to stick with what feels familiar, and the ever-improving feedback loop of AI only magnifies that tendency. Consumer apps face both OpenAI’s head start and this natural stickiness. But just as Google couldn’t hold off Amazon and Facebook, there are areas where startups have the edge. Here are the three I’m paying close attention to:

1. Vertical Trust and Domain Expertise

General-purpose chatbots struggle with high-stakes, regulated domains. Imagine asking for tax advice or mental health guidance from a generic model: liability concerns and nuanced regulations demand specialized expertise. In personal finance, healthcare, taxes, real estate and other fields built on trust, users need evidence of domain credibility. A startup that embeds clinicians, registered accountants, or licensed advisors into an AI workflow can differentiate.

The model may handle surface-level queries, but human-vetted pipelines and validated data sources create a barrier OpenAI alone cannot clear without replicating specialized teams. It’s a classic dynamic: deep verticals reward focused players more than horizontal giants.

2. Bridging to the Physical World

As powerful as AI is, many high-value consumer experiences still benefit from (or even require) real-world integration. Startups like Doctronic show how AI triage can lead to a telehealth session, then perhaps to in-person care. Travel can be reimagined: an AI plans an itinerary, but it also books local experiences, arranges guides, or curates surprise pop-ups, and then follows you there. Real estate tools might not just match listings and orchestrate visits, but guide you in real time through inspections, paperwork, and move-in services.

These require logistics, partnerships, and on-the-ground networks. The moat isn’t the model; it’s the operational backbone that connects AI suggestions to tangible outcomes. That’s a hard feat for a digital-first (and digital-only) company like OpenAI.

3. Closing the Creativity Gap

A June 2025 study in Nature Human Behaviour investigated brainstorming tasks where participants used tools like ChatGPT versus relying on their own ideation plus web searches. It found that while AI can boost idea quantity, it often reduces variety: many AI-assisted participants produced very similar concepts, suggesting a “creativity gap” in generative models compared to unfettered human thinking. This echoes concerns that AI’s training on large but finite datasets drives convergence toward statistically common patterns, limiting novelty despite fluent output. Enhancing human creativity, or mimicking it, will be a tremendous effort requiring specialized teams that think creatively themselves, not just technically.

This isn’t to dismiss LLMs’ value (accelerating first drafts can meaningfully improve the creative process), but genuine creativity demands human curation, oddball connections, and serendipity. Startups that build tools to surface unexpected prompts, aggregate diverse human inputs, or structure hybrid workshops can capture value where vanilla AI falters.

Final Thoughts

It’s easy to feel outgunned by OpenAI’s budget and reach when a single new feature release can eclipse months of effort. But success isn’t about trying to outscale OpenAI. It’s about finding places where AI is a means to something bigger. Giants excel at horizontal scale but stumble on deep trust, real-world execution, or genuine novelty. That’s where value will continue to accrue: in the gaps AI can’t fill.

Checklist for founders:

Trust gap: Which regulated domain do you know deeply? How will you embed vetted experts and compliance into your workflow?

Physical integration: What real-world services or partnerships can you build that a digital-first giant would find expensive to replicate?

Creative differentiation: How will you inject human serendipity or domain-specific inputs before or alongside AI to surface truly novel ideas?

Operational moat: What processes, partnerships, or data pipelines can you lock in early to defend against copycats?

User habit loops: How will you create feedback loops (e.g., personalized data, rewards) that cement engagement beyond a generic model’s appeal?

2025-06-26 01:31:00

Lately, it feels like every corner of my internet bubble is talking about venture returns—Carta charts one day, leaked DPI tables the next. I’ve seen posts on lagging vintages, mega-fund bloat, the “Venture Arrogance Score”, the rising bar for 99th‑percentile exits, and the PE-ification of VC.

But for all that noise, I haven’t seen much that actually walks through how returns metrics evolve over time in an early-stage fund. That’s a crucial gap. Many analyses focus on funds that are still mid-vintage, where paper markups can tell an incomplete or even misleading story.

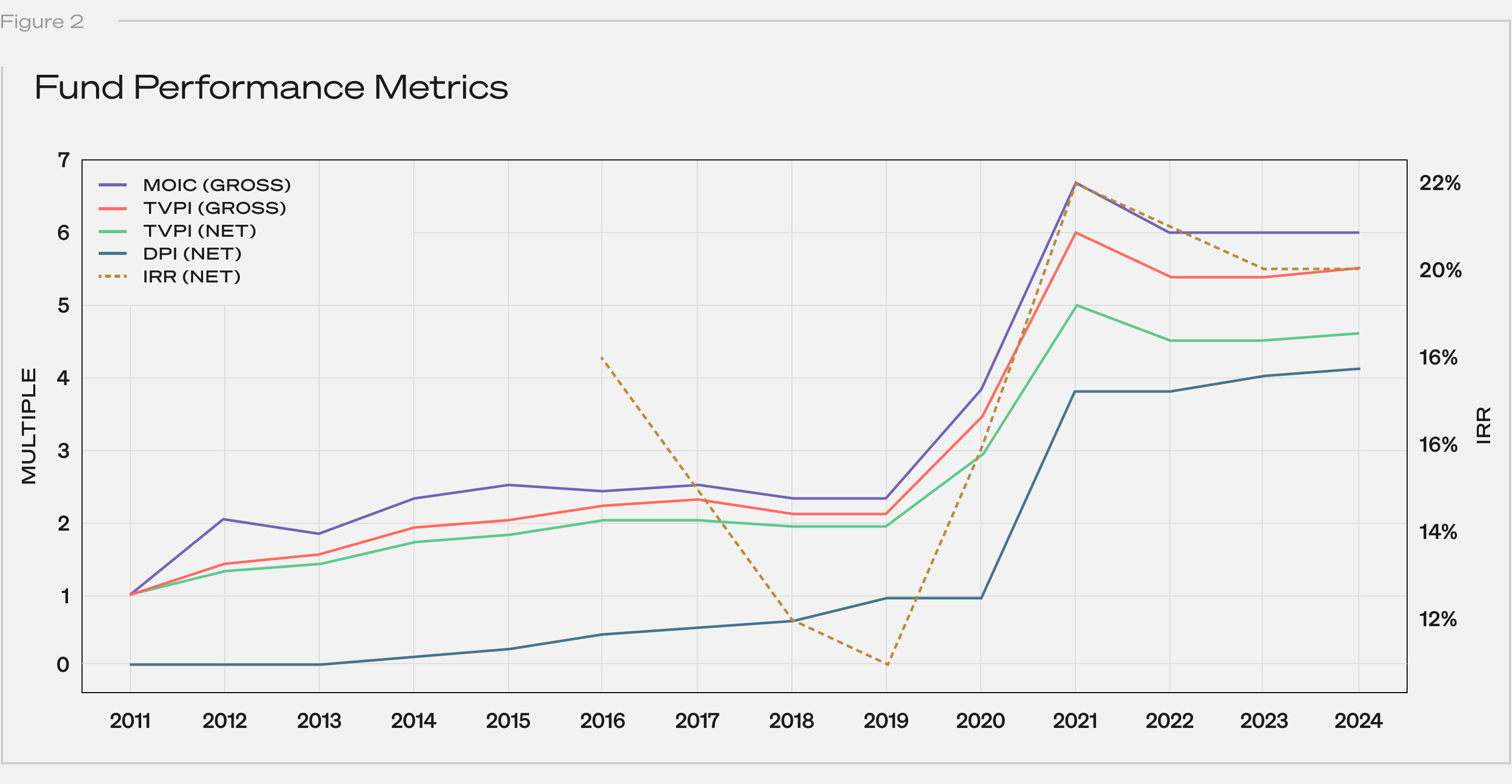

So I pulled the numbers on Collaborative’s first fund, a 2011 vintage that’s now nearly fully realized. It offers a concrete look at how venture performance can unfold across a fund’s full lifecycle.

Fund I was small: $8M deployed across 50 investments. Check sizes ranged from ~$10K to ~$400K, averaging around $100K for both initial and follow-on rounds. It was US-focused, sector-agnostic, and mostly pre-seed through Series A.

Without further ado, here’s how the fund performed over its lifetime across a few core metrics:

Note: Distributions are net to LPs; contributions reflect paid-in capital. IRR data was not meaningful (“NM”) for years 1–5.

Below is a line chart of the returns data:

Note: IRR is shown as a dashed line corresponding to the secondary axis.

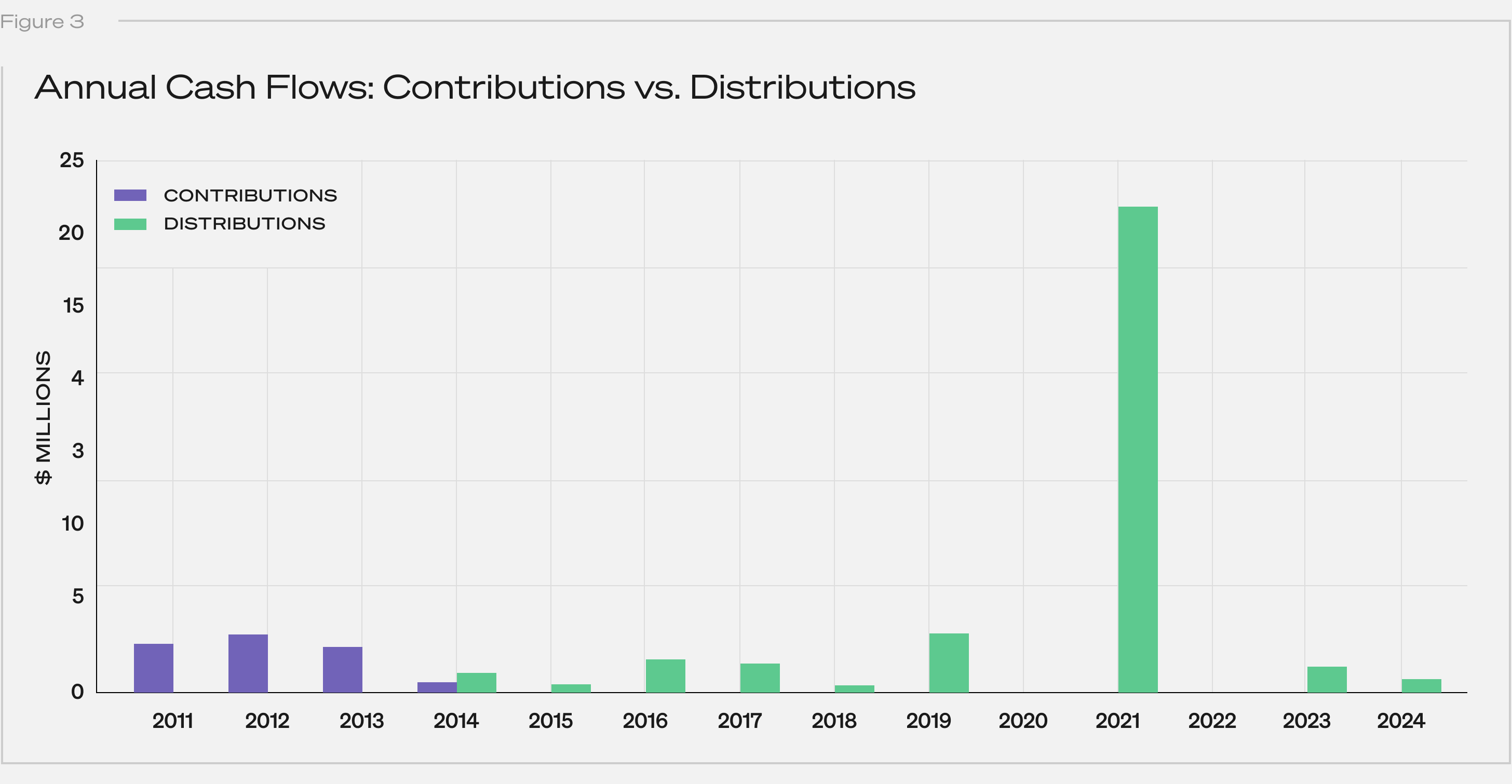

Contributions wrapped up by year 4, which is typical for early-stage funds. Distributions didn’t start until year 4 and peaked around year 10:

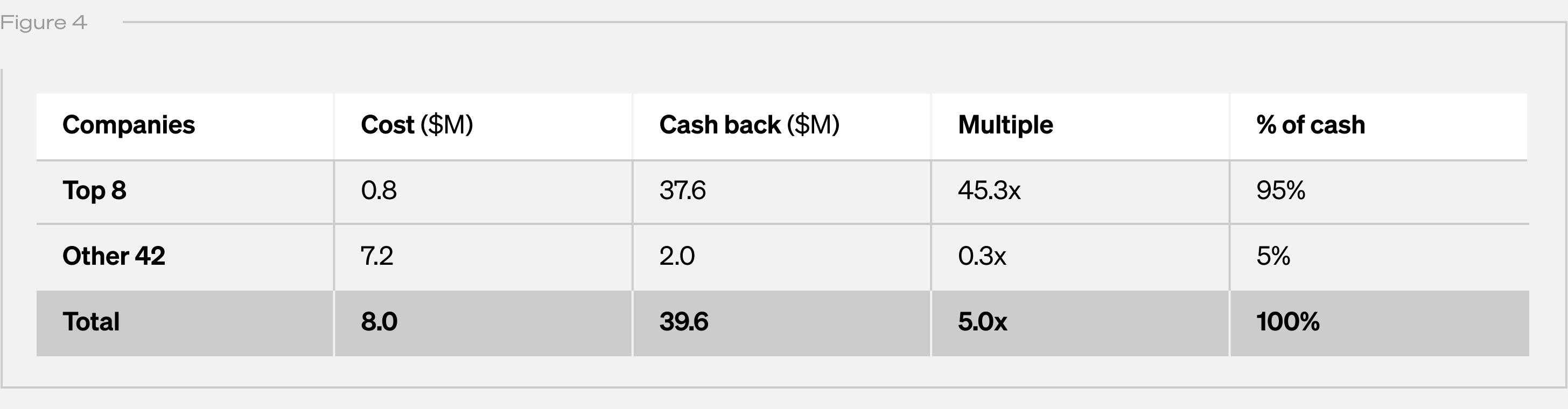

Returns were highly concentrated. Eight companies—Upstart, Lyft, Scopely, Blue Bottle Coffee, Maker Studios, Gumroad, Reddit, and Kickstarter—drove nearly all distributions.

Note: “Cost” is cumulative capital actually invested. “Cash Back” is cumulative proceeds received by the fund from realization events, and excludes (i) any remaining unrealized value and (ii) fund-level fees and expenses. “Multiple” is the quotient of Cash Back divided by Cost.

Together, these accounted for just $0.8M of invested capital but returned $37.6M—an average multiple of 45x. The remaining 42 investments, representing $7.2M, returned only $2.0M (a 0.3x multiple).
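Because the note above defines “Multiple” as Cash Back divided by Cost, the aggregate figures can be checked with a few lines of arithmetic. The sketch below uses only the rounded numbers quoted in the paragraph; the helper name `multiple` is illustrative, and these are gross figures (fund-level fees and expenses excluded), not net DPI. With the rounded $0.8M cost, the winners’ multiple works out to about 47x rather than 45x, which presumably reflects rounding in the reported cost basis.

```python
def multiple(cash_back: float, cost: float) -> float:
    """Cash Back divided by Cost, following the post's definition of 'Multiple'."""
    return cash_back / cost

# Aggregate Fund I figures quoted in the post, in $M (already rounded).
winners_cost, winners_cash = 0.8, 37.6   # the eight outlier companies
others_cost, others_cash = 7.2, 2.0      # the remaining 42 investments

total_cost = winners_cost + others_cost  # should match the ~$8M deployed
total_cash = winners_cash + others_cash  # total realized proceeds

print(f"Winners' multiple:        {multiple(winners_cash, winners_cost):.1f}x")
print(f"Others' multiple:         {multiple(others_cash, others_cost):.2f}x")
print(f"Fund gross multiple:      {multiple(total_cash, total_cost):.2f}x")
print(f"Winners' share of cost:   {winners_cost / total_cost:.1%}")
print(f"Winners' share of cash:   {winners_cash / total_cash:.1%}")
```

The contrast is the power law in miniature: roughly a tenth of the capital produced roughly 95% of the cash returned.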

Within those eight, outcomes varied widely: one company alone delivered 73% of all cash returned. Adding the next three brought the cumulative share past 90%. Multiples ranged from 1.4x to 115x—illustrating just how concentrated and variable even a “winning” subset can be.

We believe the biggest risk in early-stage VC isn’t failure. It’s missing (or mis-sizing) the outlier. In Fund I, eight companies drove nearly all distributions. One check alone accounted for more than 70% of DPI. This is the power law at work.

Collaborative’s story now spans 15 years with four early funds in harvest mode. Three rank in PitchBook’s top quartile with two in the top decile by DPI. In each, a small number of companies drove the bulk of returns. Perhaps these will make for future posts.

Until then, I hope this serves as a reminder to take venture performance narratives based on unrealized funds with a grain of salt. Some trends are real and worth watching. But many of the loudest signals may fade or reverse as funds mature. Until they’re fully played out, their stories are still being written.

2025-06-18 21:38:00

Collaborative Fund recently launched AIR, a new kind of accelerator for design-led AI products. It draws inspiration from the institutions that reshaped creative possibility in their time, places that brought together unlikely collaborators at key moments of technological and cultural inflection.

As we recruit for the first cohort, we’re talking to people who had a hand in creating those lightning-rod moments. A few weeks ago we asked Nicholas Negroponte, founder of the MIT Media Lab, to reflect on what happens when culture and technology collide to create new ways of thinking.

Today we’re talking to Tom McMurray, former General Partner at Sequoia, who helped shape Silicon Valley’s first golden age. Tom was an early investor in Yahoo, Redback Networks, C-Cube, NetApp—and, importantly, Nvidia. He now serves on multiple boards focused on science and impact. We spoke to him about pattern recognition, capital discipline, and why he’s an investor in AIR.

Tom McMurray: It was very clear where to invest in the networking space—bandwidth was in high demand. It was less clear in the pure Internet space. Our diligence process was pretty established, so we continually developed more refined filters and leveraged our core capability in chips and enterprise software. In the case of Nvidia we had what I call a Sequoia moment—that wonderful nonlinear diligence process where after parsing through the business, we reached a point where there’s only a single question left to decide. Our secret sauce was that we often knew many times more than the founders did about their business. We understood where the real risks were.

For companies like Nvidia, our expertise in semiconductors from investments in Cypress, Microchip, LSI Logic, and Cadence, plus our experience with gaming companies, meant the market risks were very low. We could easily do due diligence on the founders because many worked for friends of Sequoia Partners—this was our sweet spot in the 1990s. And in the Internet wave, we had special insight through our investments in Cisco and Yahoo. They were market pioneers who saw the world 3-5 years ahead of us. They pointed the way many times, and we just jumped on it.

Wilf Corrigan, a Sequoia Technology Partner and CEO at LSI Logic (where Jensen worked before starting Nvidia), told Jensen to talk to Don Valentine at Sequoia about his “chip idea.” Jensen pitched the evolving game world and the need for more performance. Honestly, I had no idea at that point why we should invest in the company. But we asked harder and harder questions about team, competition, distribution, and got solid answers.

About 90 minutes into the pitch, Pierre Lamond asked Jensen how big the chip was. Jensen said “12 mm.” Pierre looked at Don and Mark; they nodded, and we committed to the investment on the spot. We led the Series A and Mark joined the board. The rest is history.

The critical question wasn’t about market size or vision—it was “can they build the chip, get the performance, and the price point?” That’s why the chip size question was the deciding factor. The semiconductor partners at Sequoia—Don, Pierre, and Mark—understood the significance immediately.

Because you’re reproducing the conditions that made Sequoia’s hallways electric in the ’90s—cross-pollination of builders, researchers, and designers who argue, prototype, and iterate in the same room. Great companies are rarely solo acts; they’re jazz ensembles riffing toward a common groove.

The secret is to lean on your wins, learn from them, apply it as you expand, iterate, and keep going.

“Stay cheap until it hurts.” Capital efficiency forces clarity. The companies that survived the dot-com crash had burn rates lower than their Series A checks.

Every wave starts messy. But history says two truths persist:

Jobs evolve faster than they evaporate. Cisco killed some circuit-switch jobs yet birthed the entire network-engineer class.

Bias follows data, not silicon. Fix the training data, and you fix 80 percent of the problem. That’s human homework, not machine destiny.

The “force for good” part kicks in when entrepreneurs bake guardrails into the business model, not just the codebase.

I’d look for that “Sequoia Moment”—where after all the questions about team, market, and technology, we identify the single critical factor that determines success. For Nvidia, it was “how many millimeters wide is the chip?” Sometimes, it’s these seemingly simple technical questions that reveal whether a company can execute on its vision.

And to founders who remember: progress isn’t inevitable—people make it so.

2025-06-13 02:54:00

A boy once asked Charlie Munger, “What advice do you have for someone like me to succeed in life?” Munger replied: “Don’t do cocaine. Don’t race trains to the crossing. And avoid all AIDS situations.”

It’s often hard to know what will bring joy but easy to spot what will bring misery. Building a house is complex; destroying one is simple, and I think you’ll find a similar analogy in most areas of life. When trying to get ahead, it can be helpful to flip things around and focus on how not to fall behind.

Here are a few pieces of very bad advice.

Allow your expectations to grow faster than your income.

Envy others’ success without having a full picture of their lives.

Pursue status at the expense of independence.

Associate net worth with self-worth (for you and others).

Mimic the strategy of people who want something different than you do.

Choose who to trust based on follower count.

Associate engagement with insight.

Let envy guide your goals.

Automatically associate wealth with wisdom.

Assume a new dopamine hit is a good indication of long-term joy.

View every conversation as a competition to win.

Assume people care where you went to school after age 25.

Assume the solution to all your problems is more money.

Maximize efficiency in a way that leaves no room for error.

Be transactional rather than relationship-driven.

Prioritize defending what you already believe over learning something new.

Assume that what people can communicate is 100% of what they know or believe.

Believe that the past was golden, the present is crazy, and the future is destined for decline.

Assume that all your success is due to hard work and all your failure is due to bad luck.

Forecast with precision, certainty, and confidence.

Optimize for immediate applause over long-term reputation.

Value the appearance of looking busy.

Never doubt your tribe but be skeptical of everyone else’s.

Assume effort is rewarded more than results.

Believe that your nostalgia is accurate.

Compare your behind-the-scenes life to others’ curated highlight reel.

Discount adaptation, assuming every problem will persist and every advantage will remain.

Use uncertainty as an excuse for inaction.

Judge other people at their worst and yourself at your best.

Assume learning is complete upon your last day of school.

View patience as laziness.

Use money as a scorecard instead of a tool.

View loyalty (to those who deserve it) as servitude.

Adjust your willingness to believe something by how much you want and need it to be true.

Be tribal; view everything as a battle for social hierarchy.

Have no sense of your own tendency to regret.

Only learn from your own experiences.

Make friends with people whose morals you know are beneath your own.

2025-06-12 00:39:00

“The older I get the more I realize how many kinds of smart there are. There are a lot of kinds of smart. There are a lot of kinds of stupid, too.”

– Jeff Bezos

The smartest investors of all time went bankrupt in September 1998. They did it during the greatest bull market of all time.

The story of Long Term Capital Management is more fascinating than sad. That investors with more academic smarts than perhaps any group before or since managed to lose everything says a lot about the limits of intelligence. It also highlights Bezos’s point: There are many kinds of smarts. We know – in hindsight – the LTCM team had epic amounts of one kind of smarts, but lacked some of the nuanced types that aren’t easily measured. Humility. Imagination. Accepting that the collective motivations of 7 billion people can’t be summarized in Excel.

“Smart” is the ability to solve problems. Solving problems is the ability to get stuff done. And getting stuff done requires way more than math proofs and rote memorization.

A few different kinds of smarts:

1. Accepting that your field is no more important or influential to other people’s decisions than dozens of other fields, pushing you to spend your time connecting the dots between your expertise and other disciplines.

Being an expert in economics would help you understand the world if the world were governed purely by economics. But it’s not. It’s governed by economics, psychology, sociology, biology, physics, politics, physiology, ecology, and on and on.

Patrick O’Shaughnessy wrote an email to his book club years ago:

Consistent with my growing belief that it is more productive to read around one’s field than in one’s field, there are no investing books on this list.

There is so much smarts in that sentence. Someone with B+ intelligence in several fields likely has a better grasp of how the world works than someone with A+ intelligence in one field who doesn’t realize that field is just one piece of a complicated puzzle.

2. A barbell personality with confidence on one side and paranoia on the other; willing to make bold moves but always within the context of making survival the top priority.

A few thoughts on this:

“The only unforgivable sin in business is to run out of cash.” – Harold Geneen

“To make money they didn’t have and didn’t need, they risked what they did have and did need. And that is just plain foolish. If you risk something important to you for something unimportant to you, it just doesn’t make any sense.” – Buffett on the LTCM meltdown.

“I think we’ve always been afraid of going out of business.” – Michael Moritz explaining Sequoia’s four decades of success.

A key here is realizing that some smart people may outperform you this year or next, but without paranoid room for error they are more likely to get wiped out, or give up, when they eventually come across something they didn’t expect. Paranoia gives your bold bets a fighting chance at surviving long enough to grow into something meaningful.

3. Understanding that Ken Burns is more popular than history textbooks because facts don’t have any meaning unless people pay attention to them, and people pay attention to, and remember, good stories.

A good storyteller with a decent idea will always have more influence than someone with a great idea who hopes the facts will speak for themselves. People often wonder why so many unthoughtful people end up in government. The answer is easy: Politicians do not win elections to make policies; they make policies to win elections. What’s most persuasive to voters isn’t whether an idea is right, but whether it narrates a story that confirms what they see and believe in the world.

It’s hard to overstate this: The main use of facts is their ability to give stories credibility. But the stories are always what persuade. Focusing on the message as much as the substance is not only a unique skill; it’s an easy one to overlook.

4. Humility, not in the idea that you could be wrong, but in recognizing that, given how little of the world you’ve experienced, you are likely wrong, especially about how other people think and make decisions.

Academically smart people – at least those measured that way – have a better chance of being quickly ushered into jobs with lots of responsibility. With responsibility they’ll have to make decisions that affect other people. But since many of the smarties experienced a totally different career path than less intelligent people, they can have a hard time relating to how others think – what they’ve experienced, how they see the world, how they solve problems, what kind of issues they face, what they’re motivated by, etc. The clearest example of this is the brilliant business professor whose brain overlaps maybe a millimeter or two with the guy successfully running a local dry cleaning business. Many CEOs, managers, politicians, and regulators have the same flaw.

A subtle form of smarts is recognizing that the intelligence that gave you the power to make decisions affecting other people does not mean you understand or relate to those other people. In fact, you very likely don’t. So you go out of your way to listen and empathize with others who have had different experiences than you, despite having the authority to make decisions for them granted to you by your GPA.

5. Convincing yourself and others to forgo instant gratification, often through strategic distraction.

Everyone knows the famous marshmallow test, where kids who could delay eating one marshmallow in exchange for two later on ended up better off in life. But the most important part of the test is often overlooked. The kids exercising patience often didn’t do it through sheer will. Most kids will take the first marshmallow if they sit there and stare at it. The patient ones delayed gratification by distracting themselves. They hid under a desk. Or sang a song. Or played with their shoes. Walter Mischel, the psychologist behind the famous test, later wrote:

The single most important correlate of delay time with youngsters was attention deployment, where the children focused their attention during the delay period: Those who attended to the rewards, thus activating the hot system more, tended to delay for a shorter time than those who focused their attention elsewhere, thus activating the cool system by distracting themselves from the hot spots.

Delayed gratification isn’t about surrounding yourself with temptations and hoping to say no to them. No one is good at that. The smart way to handle long-term thinking is enjoying what you’re doing day to day enough that the terminal rewards don’t constantly cross your mind.

2025-05-30 00:02:00

In technology, the obvious revolutions often come last.

The internet was around for decades before it felt personal. It started in labs, crept into offices, and only later landed in our homes. The smartphone wasn’t just a new form of communication – it was the moment computing shifted from corporate IT departments to our front pockets.

AI is accelerating faster than any previous wave of technology. In less than five years, we’ve gone from jaw-dropping demos of GPT-3 to a reality where millions of people interact with AI every day (sometimes without realizing it). Most of the attention so far has focused on the technical layer: model performance, token pricing, enterprise use cases. But the most transformative changes are likely to happen at the level of interface, brand, and emotional resonance.

The tools that stick won’t just be the most accurate. They’ll be the most intuitive, the most culturally fluent, the ones that feel like they belong in your life without demanding to be learned. That’s where the real leverage lies, and where consumer AI is about to get very interesting.

Because something big is happening now. Token costs are falling (OpenAI has slashed prices by more than 90% since 2020), making it cheaper and more feasible for developers to build and ship consumer AI applications. Fine-tuning models is becoming easier. The technical moat (if there ever was one) is evaporating. And as infrastructure becomes cheaper and more accessible, AI’s next act is coming into focus: the consumer.


Because consumers don’t care about your model size. They care whether it helps them get through the day with a little less friction.

Think about the tools we use every day: Gmail. iCalendar. WhatsApp. They were built for the web, optimized for mobile, and essentially left to rot. What once felt modern now feels immovable. These bedrock apps ossified, and AI is revealing just how brittle they’ve become. They weren’t designed for a world where your technology listens, adapts, and acts without instruction.

Consumer AI isn’t about chatbots. Not the way we’ve come to know them, anyway. It’s about escaping the tired web of buttons and dropdowns we’ve been clicking through for years. The real opportunity is interface: tools that don’t just respond to commands but anticipate context. It’s not just about better UX. It’s about a new class of interaction altogether.

This could mean a home-buying concierge that doesn’t just show you listings, but understands your daily commute, dog-walking routine, and what kind of light you like in the mornings. A personal finance system that syncs with your partner’s calendar and cash flow, so you plan together without talking about money every day. Or a piano coach that listens as you play and adjusts your practice routine in real time, because you finally have a teacher with infinite patience.

Chatbots might have kicked off the era of conversational AI, but the revolution is in what comes after: ambient, embedded, invisible tools that behave more like teammates than software.


Some of this is already happening. Well, sort of. Rewind.ai is giving users searchable memory across their digital lives. Rabbit’s R1 promises to remove the “app layer” entirely by turning natural language into actions. Humane’s Ai Pin, while flawed, is at least asking the right question: what does computing look like when you don’t have to look at a screen?

None of these products have nailed it. Most were met with bad reviews. Some feel like punchlines. But they’re reaching for something important. They’re trying to imagine a future where interface is no longer constrained by screens and keyboards. That matters. And while they’re clearly not the final form, they point toward a frontier we haven’t yet fully explored. These are the early missteps of a new genre: not failures of ambition, but signs that something new is struggling to be born.

History reminds us that form factor changes are rarely incremental. The PC didn’t lead to the smartphone – it required a complete reimagining of interface, distribution, and consumer behavior. We are due for another leap like that. Apple’s Vision Pro, Meta’s Ray-Ban smart glasses, and AI-driven wearables like the Oura Ring are early hints of what might come next.

Still, the path to mass adoption isn’t paved in code: it’s paved in trust, usability, and relevance. This is where many investors hesitate. Enterprise AI is easy to underwrite: there’s a sales pipeline, an efficiency metric, an ROI. Consumer AI feels squishier. It relies on taste. On cultural timing. On brand. It’s harder to spreadsheet.

But that’s what makes it so compelling.


Brand, after all, is a proxy for trust. And trust is the most valuable commodity in a world where AI agents will act on your behalf. Within five years, the most beloved AI product won’t have an app, a screen, or a UI, and its brand will be more trusted than your bank. Consumer AI isn’t a technical breakthrough; it’s a cultural one. The winners will look less like OpenAI and more like Nike or Pixar: emotionally fluent, culturally embedded, behavior-shaping machines hiding in plain sight. That’s where the defensibility lies – not in proprietary models, but in emotional resonance and behavioral lock-in. Just like Apple. Just like Spotify. Just like every consumer product that became infrastructure in disguise.

So where does this go?

In the next 18–24 months, most consumer AI experiments will flop. The hardware won’t work. The assistants will misfire. The reviews will be brutal. That’s fine. That’s how interface revolutions always begin: not with polish, but with friction. What matters is that a few teams will get it right. They’ll combine cultural intuition with technical leverage. They’ll design not for features, but for feelings. And when they launch, it won’t be clear whether they’re apps or brands or behaviors, only that they make life easier, more human, more yours. The best consumer AI product of the next five years won’t wow you with its intelligence. It’ll earn your trust. And never ask for your attention.