2026-03-07 02:36:17

As latent reasoning models become more capable, understanding what information they encode at each step becomes increasingly important for safety and interpretability. If tools like logit lens and tuned lens can decode latent reasoning chains, they could serve as lightweight monitoring tools — flagging when a model's internal computation diverges from its stated reasoning, or enabling early exit once the answer has crystallized. This post explores whether those tools work on CODI's 6 latent steps and what they reveal about its internal computation.

I applied logit lens and tuned lens to probe CODI's latent reasoning chain on GSM8K arithmetic problems.

I use the publicly available CODI Llama 3.2 1B checkpoint from Can we interpret latent reasoning using current mechanistic interpretability tools?

I used the code implementation for the training of Tuned logit lens from Eliciting Latent Predictions from Transformers with the Tuned Lens

PROMPT = "A team starts with 3 members. They recruit 5 new members. Then each current member recruits 2 additional people. How many people are there now on the team? Give the answer only and nothing else."

Answer = 10

I looked at the same prompt as the lesswrong post “Can we interpret latent reasoning using current mechanistic interpretability tools?”

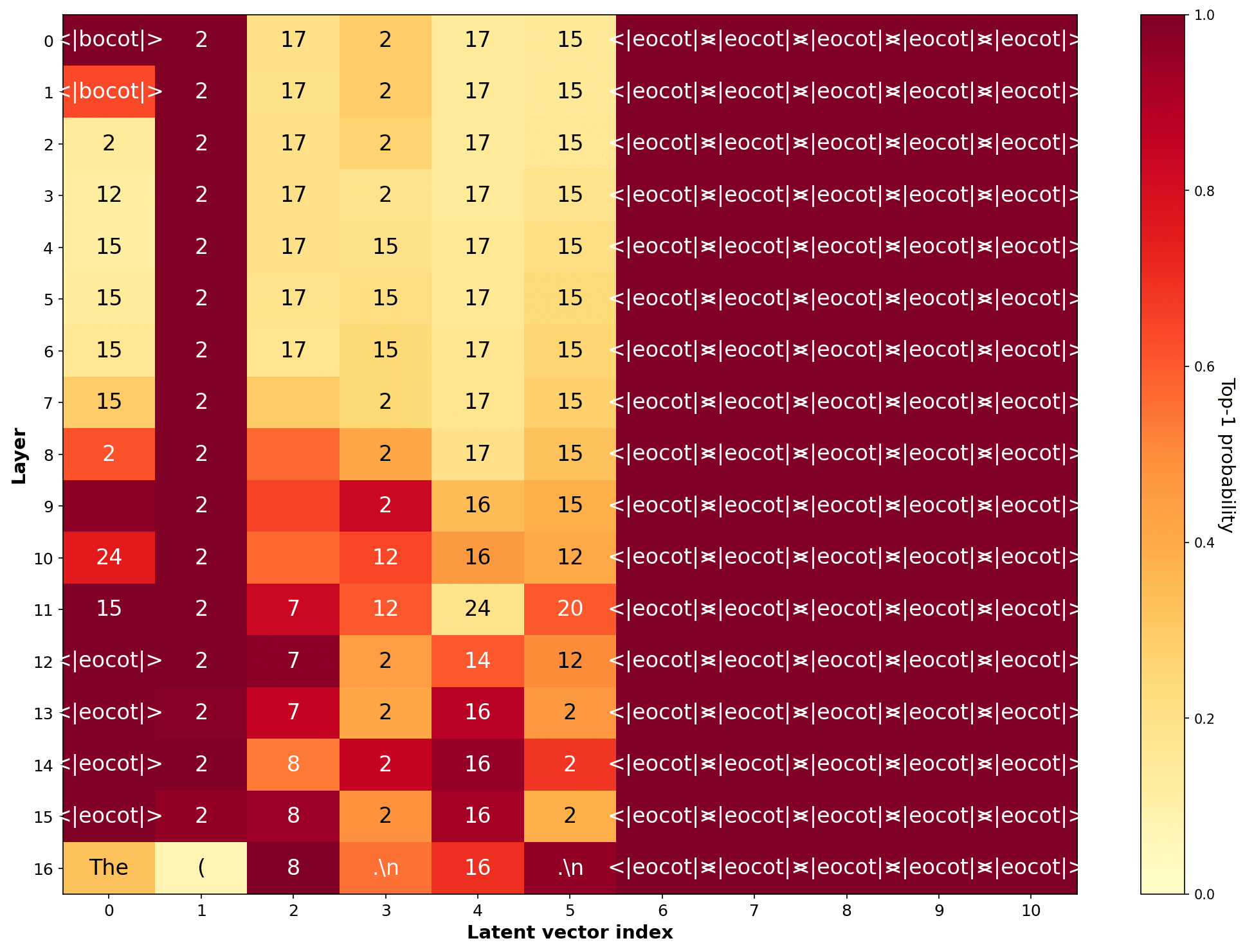

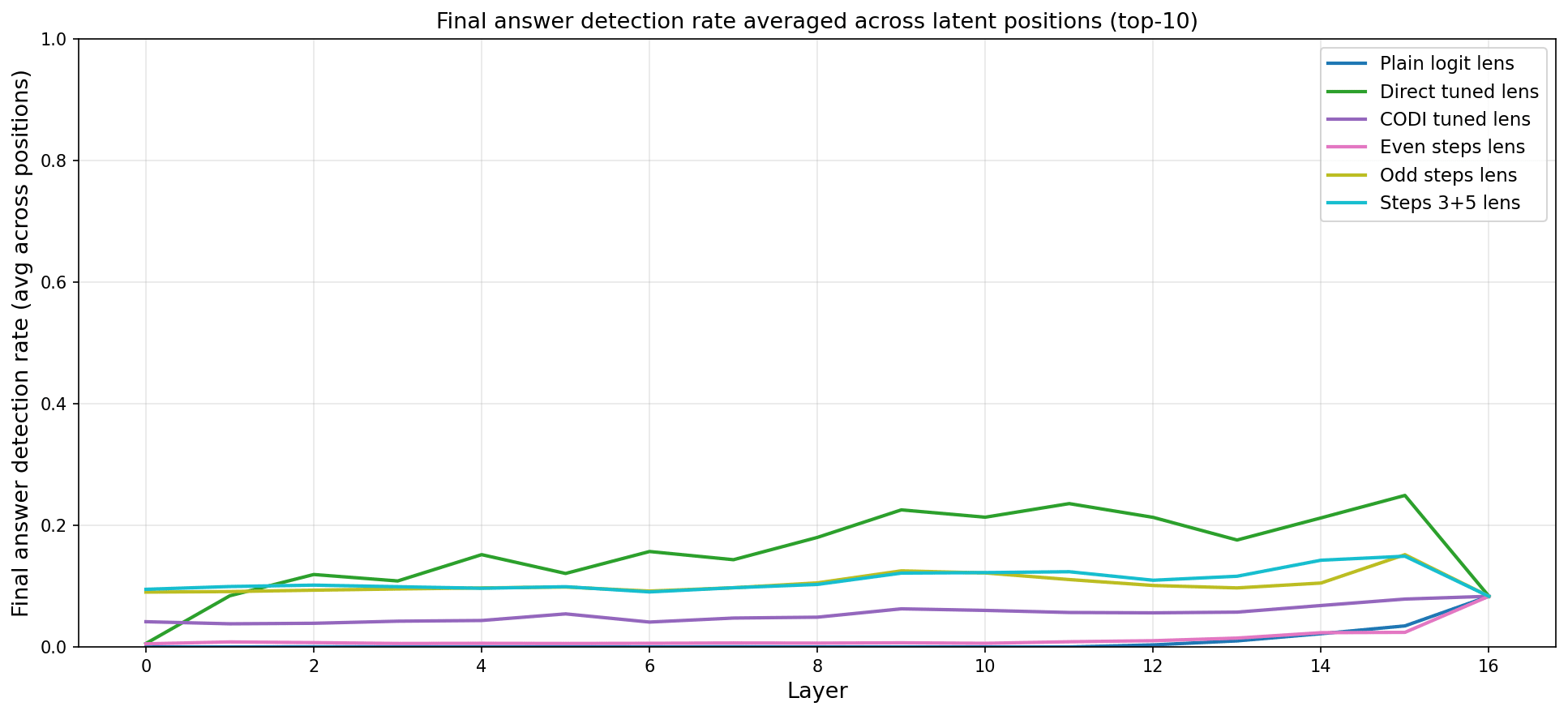

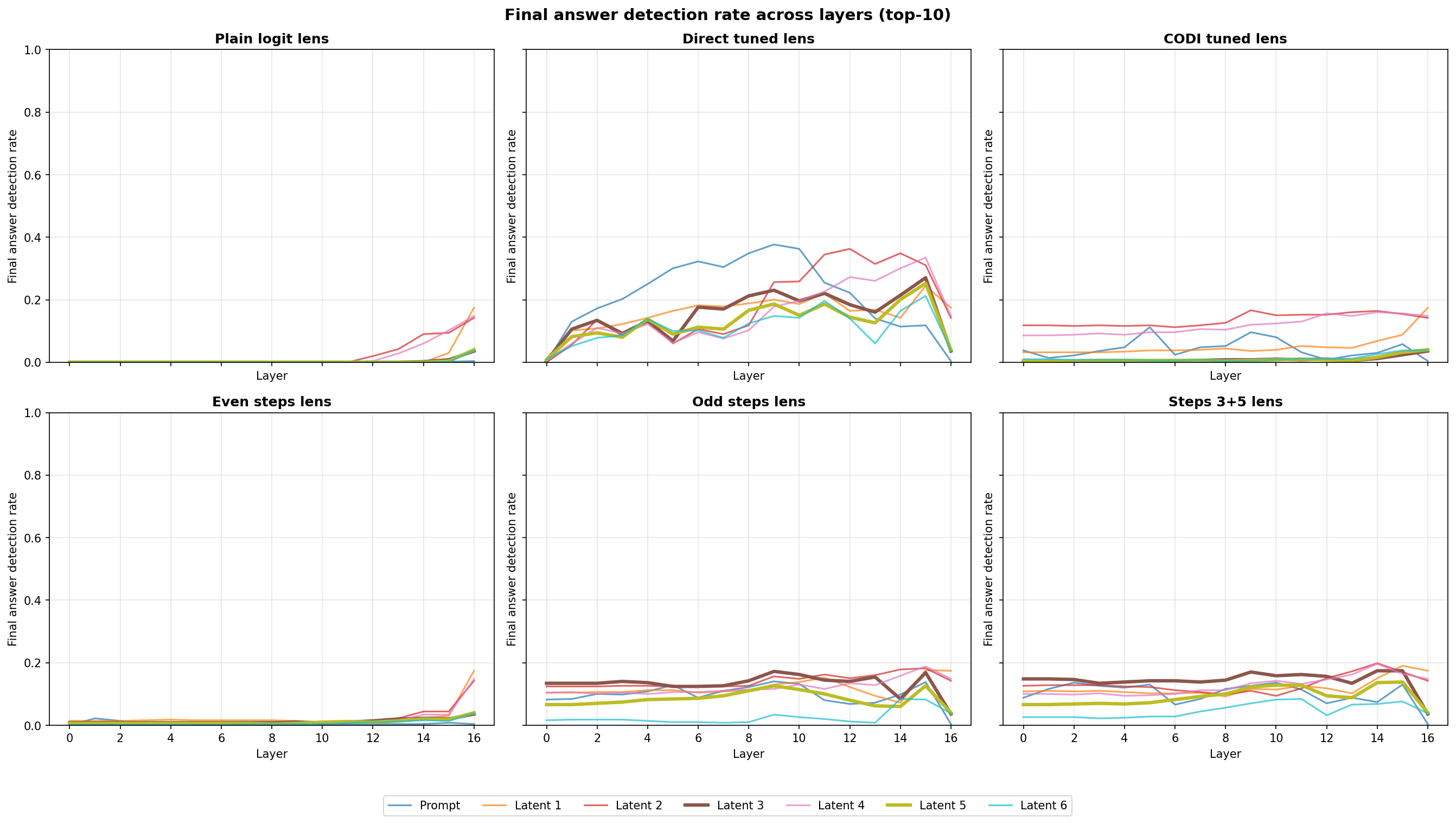

In the figure above which is a Tuned Logit lens trained on the gsm8k with 500 samples and 3 epochs.

An interesting feature of the Tuned Logit Lens on non CODI tokens is that unlike normal logit lens it demonstrates that in the CODI latents the model stores information about the Answer 10. The answer 10 for the logit lens seemed to be absent from the even latent vector indexes of 2, 4, 6

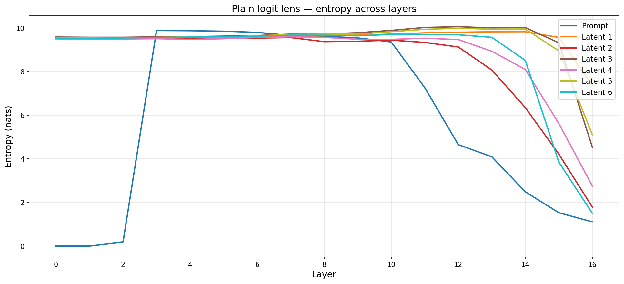

This is different from normal logit lens where it it only shows the intermediate steps of 8 and 16 but, never shows the final answer of 10

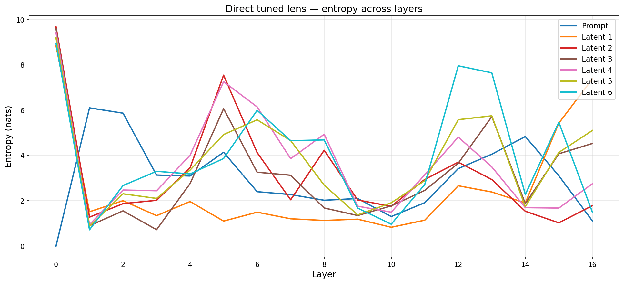

Motivated by the strong performance of the direct-trained tuned lens, I trained a second set of translators directly on CODI's latent hidden states, hypothesizing that a lens specialized to latent-space geometry would outperform one trained on text tokens. This however, was not the case.

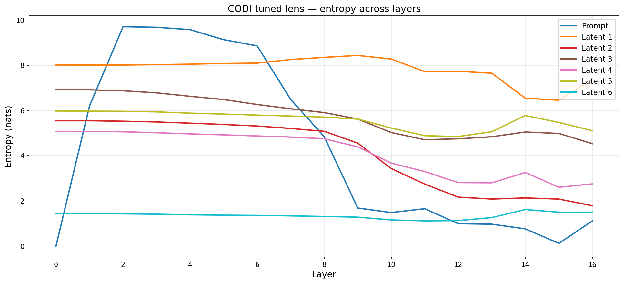

Tuned Logit Lens CODI

Training tuned logit lenses on CODI latents seemed to cause the logit lens to mirror the final layer which suggests over-fitting.

Training tuned logit lenses on CODI even latents of 2,4,6 seemed to cause the logit lens to output a lot more text tokens or \n tokens. The final layer can be ignored since tuned logit lens does not train the last layer.

Training tuned logit lenses on CODI odd latents of 1,3,5 seemed to cause the logit lens to output to do the opposite of the even logit lens as it seems that it only ouputted numbers even before the latent reasoning as seen with latent vector index 0. It was able to find the final answer of 10 however, it was unable to produce valid outputs for non-latent reasoning activations as seen with how it did not fully decode latent vector index 0.

If tuned lens is only using 3, 5 the 10s do not show up adding 1,3,5 allow the the logit lens to find the final answer.

In order to explore the differences in logit len outputs between the different Tuned Logit Lens I looked at the Entropy.

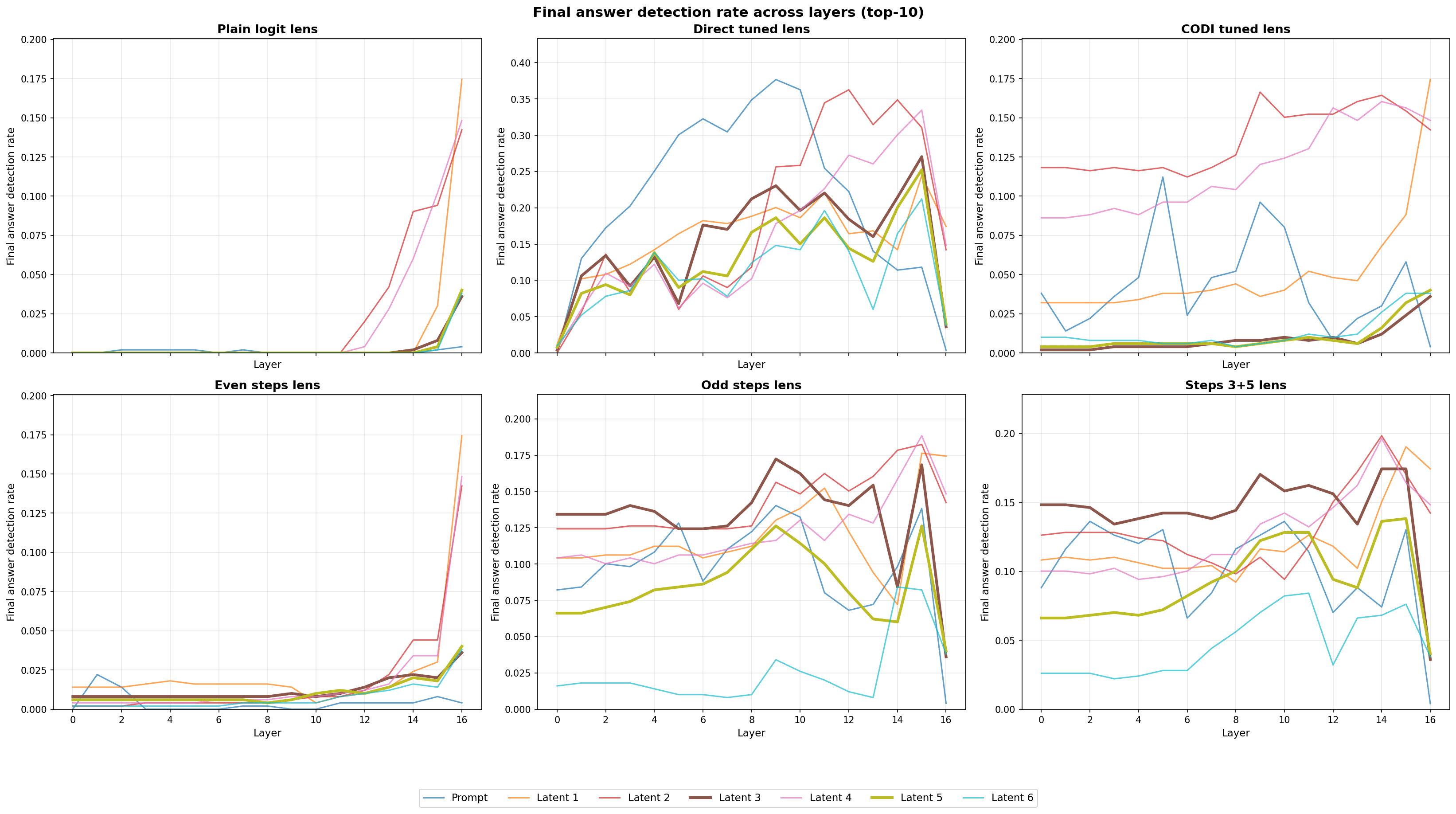

The tuned logit lens containing the final answer made me curious for outside the top token in the topk what was the final answer emission for the different tuned logit lens and which latents predicted the final answer at the highest rates.

2026-03-07 02:10:24

Make no mistake about what is happening.

The Department of War (DoW) demanded Anthropic bend the knee, and give them ‘unfettered access’ to Claude, without understanding what that even meant. If they didn’t get what they want, they threatened to both use the Defense Production Act (DPA) to make Anthropic give the military this vital product, and also designate the company a supply chain risk (SCR).

Hegseth sent out an absurdly broad SCR announcement on Twitter that had absolutely no legal basis, that if implemented as written would have been corporate murder. They have now issued an official notification, which is still illegal, arbitrary and capricious, but is scoped narrowly and won’t be too disruptive.

Nominally the SCR designation is because we cannot rely on that same product when the company has not bent the knee and might object to some uses of its private property that it never agreed to allow.

No one actually believes this. No one is pretending others should believe this. If they have real concerns, there are numerous less restrictive and less disruptive tools available to the Department of War. Many have the bonus of being legal.

In actuality, this is a massive escalation, purely as punishment.

DoW is saying that if you claim the right to choose when and how others use your private property, and offer to sign some contracts but not sign others, that this means you are trying to ‘usurp power’ and dictate government decisions.

It is saying that if you do not bend the knee, if your business does not do what we want, then we cannot abide this. We will illegally retaliate and end your business.

That is not how the law works. That is not how a Republic works.

This was completely unnecessary. Talks were ongoing. The two sides were close. The deal DoW signed with OpenAI, the same night as the original SCR designation, violates exactly the red line principles and demands the DoW says abide no compromises.

The good news is that there are those who managed to limit this to a narrowly tailored SCR, that only applies to direct provision of government contracts. Otherwise, this does not apply to you. Even if that gets tied up in court indefinitely, this will not inflict too much damage on either Anthropic or national security.

The question is how much jawboning or further steps come after this, but for now we have dodged the even worse outcomes keeping us up at night.

You might be tempted to think of or present this as the DoW backing down. Don’t.

Why not? Two good reasons.

Dean W. Ball: No one should frame the DoW’s supply chain risk designation as the government “backing down.” If that becomes “the narrative,” it could encourage further action to avoid the appearance of weakness.

It is also not true that it is backing down; the government really is exercising its supply chain risk designation authority under 10 USC 3252 to the fullest extent (and this is assuming it’s even legitimate to use it on an American firm, which is deeply questionable.

Hegseth’s threat was far broader than his power, which is the only reason this seems deescalatory. If you had asked me for a worst case scenario before Hegseth’s tweet last Friday, I would have told you precisely what has unfolded. This could mean that any vendor of widely used enterprise software (Microsoft, Apple, Salesforce, etc.) could be barred from using Anthropic in the maintenance of any codebases offered to DoW as part of a military contract, for example. Any startup who views DoW as a potential customer for their products will preemptively have to avoid Claude. This is still a massive punishment from USG.

You might also ask: if I knew Hegseth’s power was more limited than he threatened, why did I take his threat at face value? The answer is that we have so clearly moved past the realm of reason here that, well, to a first approximation, I take the guy who runs the biggest military on Earth at his word when he issues threats.

Sometimes some people should talk in carefully chosen Washington language, as ARI does here. Sometimes I even do it. This is not one of those times.

This post is an update on events since the publication of the weekly, and an attempt to reiterate key events and considerations to put everything into context.

For details and analysis of previous events, see my previous posts:

For those following along these are the key events since last time:

It was an excellent statement. I’m going to quote it in full, since no one clicks links and I believe they would want me to do this.

Dario Amodei (CEO Anthropic): Yesterday (March 4) Anthropic received a letter from the Department of War confirming that we have been designated as a supply chain risk to America’s national security.

As we wrote on Friday, we do not believe this action is legally sound, and we see no choice but to challenge it in court.

The language used by the Department of War in the letter (even supposing it was legally sound) matches our statement on Friday that the vast majority of our customers are unaffected by a supply chain risk designation. With respect to our customers, it plainly applies only to the use of Claude by customers as a direct part of contracts with the Department of War, not all use of Claude by customers who have such contracts.

The Department’s letter has a narrow scope, and this is because the relevant statute (10 USC 3252) is narrow, too. It exists to protect the government rather than to punish a supplier; in fact, the law requires the Secretary of War to use the least restrictive means necessary to accomplish the goal of protecting the supply chain. Even for Department of War contractors, the supply chain risk designation doesn’t (and can’t) limit uses of Claude or business relationships with Anthropic if those are unrelated to their specific Department of War contracts.

I would like to reiterate that we had been having productive conversations with the Department of War over the last several days, both about ways we could serve the Department that adhere to our two narrow exceptions, and ways for us to ensure a smooth transition if that is not possible. As we wrote on Thursday, we are very proud of the work we have done together with the Department, supporting frontline warfighters with applications such as intelligence analysis, modeling and simulation, operational planning, cyber operations, and more.

As we stated last Friday, we do not believe, and have never believed, that it is the role of Anthropic or any private company to be involved in operational decision-making—that is the role of the military. Our only concerns have been our exceptions on fully autonomous weapons and mass domestic surveillance, which relate to high-level usage areas, and not operational decision-making.

I also want to apologize directly for a post internal to the company that was leaked to the press yesterday. Anthropic did not leak this post nor direct anyone else to do so—it is not in our interest to escalate this situation. That particular post was written within a few hours of the President’s Truth Social post announcing Anthropic would be removed from all federal systems, the Secretary of War’s X post announcing the supply chain risk designation, and the announcement of a deal between the Pentagon and OpenAI, which even OpenAI later characterized as confusing. It was a difficult day for the company, and I apologize for the tone of the post. It does not reflect my careful or considered views. It was also written six days ago, and is an out-of-date assessment of the current situation.

Our most important priority right now is making sure that our warfighters and national security experts are not deprived of important tools in the middle of major combat operations. Anthropic will provide our models to the Department of War and national security community, at nominal cost and with continuing support from our engineers, for as long as is necessary to make that transition, and for as long as we are permitted to do so.

Anthropic has much more in common with the Department of War than we have differences. We both are committed to advancing US national security and defending the American people, and agree on the urgency of applying AI across the government. All our future decisions will flow from that shared premise.

I believe and hope that this will help move things forward towards de-escalation.

Secretary of War Pete Hegseth’s original Tweet on Friday at 5:14pm was not a legal document. It claimed that it would bar anyone doing business with the DoW from doing any business with Anthropic, for any reason. This would in effect have been an attempt at corporate murder, since it would have attempted to force Anthropic off of the major cloud providers, and have forced many of its largest shareholders to divest.

That move would have had no legal basis whatsoever, and also no physical logic whatsoever since selling goods or services to Anthropic, or providing Anthropic services to others, obviously has no impact on the military supply chain. It would not have survived a court challenge. But if Anthropic failed to get a TRO, that alone could have caused major disruptions and a stock market bloodbath.

We are very fortunate and happy that this was not the letter that DoW ultimately chose to send after having time to breathe. As per Anthropic, the official supply chain risk designation letter invokes the narrow form of SCR, 10 USC 3252.

Anthropic: The Department’s letter has a narrow scope, and this is because the relevant statute (10 USC 3252) is narrow, too. It exists to protect the government rather than to punish a supplier; in fact, the law requires the Secretary of War to use the least restrictive means necessary to accomplish the goal of protecting the supply chain.

Even for Department of War contractors, the supply chain risk designation doesn’t (and can’t) limit uses of Claude or business relationships with Anthropic if those are unrelated to their specific Department of War contracts.

There are three levels of danger to Anthropic here if the classification is sustained.

But we do have to watch out. If the government is sufficiently determined to mess with you, and doesn’t care about how much damage this does including to rule of law, they have a lot of ways to do that.

Remarkably many people are defending this move, and mostly also defending the legally incoherent move that was Tweeted out on Friday afternoon.

The defenders of this often employ rhetoric that is truly reprehensible, and entirely incompatible with freedom, a Republic or even private property.

They say that the United States Government, and de facto they mean the executive branch, because the President was duly elected, can do anything it wants, and must always get its way, make all decisions and be the only source of power. That if what you create is sufficiently useful then it no longer belongs to you, and any private actor that prospers too much must be hammered down to protect state authority.

There are words for this. Communist. Authoritarian. Dictatorship. Gangster nations.

This is how such people are trying to redefine ‘democracy’ in real time.

You do not want to live in such a nation. Such nations do not have good futures.

roon (OpenAI): to reiterate: whatever went wrong between amodei & hegseth, whatever rivalry between the labs, this is a massive overreaction and a dark precedent

Ash Perger: this is the first time that I’m really surprised by your stance. the reality is that the USG can in general do whatever they want. they always have and always will.

within a certain frame, courts and laws are allowed to exist and give people the illusion that these systems and principles extend to ALL actions of the USG.

but once you go outside of this frame and challenge the absolute RAW power behind the scenes, anything goes. that’s the realm that Anthropic entered and challenged the USG within. and at least since the early 20th century, the USG has never reacted to a direct challenge in the true realm of its hard power in a peaceful way.

this is not a conspiracy angle or anything, it’s just how power has worked since time beginning.

Anthropic didn’t challenge the government’s power. Anthropic used the most powerful weapon available to every person, the right to say ‘no’ and take the consequences. These are the consequences, if you don’t live in a Republic.

If you remember one line today, perhaps remember this one:

roon (OpenAI): > the USG can in general do whatever they want

The founders of this great nation fought several bloody wars to make sure this is not true.

The government cannot, in general, do whatever it wants.

That could change. It can happen here. Know your history, lest it happen here.

Kelsey Piper: incredible to see people just casually reject the bedrock foundations of American greatness not just as some dumb nonsense that they’re too cool to believe but as something they literally are not familiar with

As Dean Ball has screamed from the rooftops, we have been trending in this direction for quite some time, and the danger to the Republic and attacks on civil liberties is coming from all directions. The situation is grim.

There are words for those who support such things. I don’t have to name them.

I have talked for several years about the Quest For Sane Regulations, because I believe the default outcome of building superintelligence is that everyone dies and that highly capable AI presents many catastrophic risks. I supported bills like SB 1047 that would have given us transparency into what was happening and enforcement of basic safety requirements.

We were told this could not be abided. We were told, often by the same people, that such fears were phantoms, that there was ‘no evidence’ that building machines smarter, more capable and more competitive than us might be an inherently unsafe thing for people to do. We were lectured that requiring our largest AI labs to do basic things would devastate our AI industry, that it would take away our freedoms, that we would lose to China, that these concerns could be dealt with after they had already happened, that any government intervention was inevitably so malign we were better off with a yolo.

Those people still do not even believe in superintelligence. They do not understand the transformations coming to our world. They do not understand that we are about to face existential threats to our survival as humans and to everything of value. All they see in this world is the power, and demand that it be handed over.

What I hate the most, and where I want to most profoundly say ‘fuck you,’ are those who claim that this is somehow about ‘AI safety’ or concerns about superintelligence, when that very clearly is not true.

As a reminder:

We saw this yesterday with Ben Thompson. Here we see it with Krishnan Rohit and Noah Smith.

Noah Smith: By the way, as much as I hate to say it, the Department of War is right and Anthropic is wrong. Here’s why.

…

Let’s take this a little further, in fact. And let us be blunt. If Anthropic wins the race to godlike artificial superintelligence, and if artificial superintelligence does not become fully autonomous, then Anthropic will be in sole possession of an enslaved living god. And if Dario Amodei personally commands the organization that is in sole possession of an enslaved god, then whether he embraces the title or not, Dario Amodei is the Emperor of Earth.

Are you fucking kidding me? You’re pull quoting that at us, on purpose?

And if you go even one level down in the thread you get this:

Jason Dean: What does this have to do with the Supply Chain Risk designation?

Noah Smith: Nothing. Hegseth is a thug. But we CANNOT expect nation-states to surrender their monopoly on the use of force.

So let me get this straight. The Department of War is run by a thug who is trying to solve the wrong problem using the wrong methods based on the wrong model of reality, and all of his mistakes are very much not going to cancel out, but he’s right?

And why is he right? Because might makes right. How else can you read that reply?

He’s even quoting the ultimate bad faith person and argument here, directly, except he’s only showing Marc here without Florence:

At least he included the reversal after, noting that the converse is also true.

Then there’s the obvious other point.

Damon Sasi: You can in fact think both are wrong for different reasons.

Of course a private corporation shouldn’t [be allowed to] build and own a techno-god. Yes. Absolutely.

AND ALSO, the government response shouldn’t be “take off the nascent-god’s safety rails so we can do unethical things with it.”

That the government thinks it’s just a fancy weapon is immaterial when the thing that makes them wrong is wanting to do illegal things through unethical methods. You don’t have to steelman Hegseth just because a better man might do a different, better thing for other reasons.

I cannot say enough that the logic response to ‘these people want to build a techo-god,’ under current conditions, is ‘wait no, stop, if this is actually something they’re close to doing. No one should be building a techo-god until we figure this stuff out on multiple levels and we’ve solved none of them, including alignment.’

These same Very Serious People never consider the Then Don’t Build It So That Everyone Doesn’t Die strategy.

But wait, there’s more.

Noah Smith: Ben Thompson of Stratechery makes this case. He points out that what we are effectively seeing is a power struggle between the private corporation and the nation-state. He points out that although the Trump administration’s actions went outside of established norms, at the end of the day the U.S. government is democratically elected, while Anthropic is not.

Remember yesterday, when Ben Thompson tried to pretend he was only making a non-normative argument? Yeah, well, ~0% of people reading the post took it that way, he damn well knew that’s how people would take the argument, and it’s being quoted approvingly by many, and Ben hasn’t, shall we say, been especially loud and clear about walking it back. So yeah, let’s stop pretending.

Noah Smith: It’s a question of the nation-state’s monopoly on the use of force.

Among others, I most recently remember Dave Chappelle saying that we have the first amendment protecting our right to free speech, and the second amendment in case the first one doesn’t work out.

Whereas Noah Smith is explicitly saying Claude should be treated like a nuke.

So as much as I dislike Hegseth’s style, and the Trump administration’s general pattern of persecution and lawlessness, and as much as I like Dario and the Anthropic folks as people, I have to conclude that Anthropic and its defenders need to come to grips with the fundamental nature of the nation-state.

It seems a lot of people think the fundamental nature of the nation-state is that of a gangster, like Putin, and they are in favor of this rather than against it.

If the pen is mightier than the sword, why are we letting people just buy pens?

I do respect that at least Noah Smith is, at long last, taking the idea of superintelligence seriously, except when it comes time to dismiss existential risk.

He seems to be very quickly getting to some other conclusions, including ending API access for highly capable models, and certainly banning open source.

Maybe trying to ‘wake up’ such folks was always a mistake.

As a reminder ‘force the government’s hand’ means ‘don’t agree to hand over their private property, and indeed engineer and deliver new forms of it, to be used however the government wants, on demand, while bending the knee.’

rohit: It is absurd to say you’re building a nuke and not expect the government to take control of it!

Noah Smith: Yes.

Rohit: you’re doing a straussian reading and missing the fact that I wasn’t blaming anthropic for the scr, what I am doing is drawing a line from ai safety language, helped by the very water we swim in, and the actions that were taken by DoW. it’s naive to think theyre unrelated

Dean W. Ball: they are coming from people *who entirely and explicitly dismiss the language of ai safety*—please explain how it is “naive” to say “ai safety motivations do not explain Pete Hegseth’s behavior”

rohit: because you don’t actually have to believe that it’s bringing forth a wrathful silicon god to want to control the technology! you just need to think its useful and powerful enough. and they very clearly think its powerful, and getting more so by the day.

Dean W. Ball: Ok, so the actual argument is more like “Anthropic builds a useful technology whose utility is growing, therefore they should expect to have their property expropriated and to be harassed by the government.”

The whole point of America is that isn’t supposed to be true here.

At the same time, inre: my writing earlier this week, all I have to say to the qt is “quod erat demonstrandum”

… I think the better explanation is that this is not that different from the universities or the law firms or whatever else, this is part of a pretty consistent pattern/playbook and that this explains what we have seen much better than this ai governance stuff.

though it’s true that this issue does raise a lot of interesting ai governance is questions, I just do not think anything like that is top of mind at all for the relevant actors.

This is very simple. These people are against regulation, because that would be undue interference, except when the intervention is nationalization, then it’s fine.

Indeed, the argument ‘otherwise this wouldn’t be okay because it isn’t regulated’ is then turned around and used as an argument to take all your stuff.

Dean W. Ball: The problem with this is that DoW is not taking Anthropic’s calls for “oversight” seriously. Indeed, elsewhere in the administration, Anthropic’s “calls for oversight” are dismissed as “regulatory capture” and actively fought. Rohit and Noah [Smith] are dressing up political harassment.

Quite clever. Dean and Rohit went back and forth in several threads, all of which only further illustrate Dean’s central point.

Rohit Krishnan: You simply cannot call your technology a major national security risk in dire need of regulation and then not think the DoD would want unfettered access to it. They will not allow you, rightfully so in a democracy, to be the arbiters of what is right and wrong. This isn’t the same as you or me buying an iOS app and accepting the T&Cs.

It’s clear as day. If you say you need to be regulated, they get to take your stuff.

If you try to say how your stuff is used, that’s you ‘deciding right and wrong.’

Rohit Krishnan: Democracy is incredibly annoying but really, what other choice do we have!

The choice is called a Republic. A government with limited powers, where private property is protected.

The alternative being suggested is one person, one vote, one time.

That sometimes works out well for the one person. Otherwise, not so well.

TBPN asks Dean Ball about the gap between regulation and nationalization, drawing the parallel to the atomic bomb. Dean agrees nukes worked out but we failed to get most of the benefits of nuclear energy, and points out the analogy breaks down because AI expresses and is vital to your liberty, and government control of AI inevitably would lead to tyranny. Whereas control over energy and bombs does not do that, and makes logistical sense.

Dean also points out that ‘try to get regulation right’ has been systematically categorized as ‘supporting regulatory capture,’ even when bills like SB 53 are extremely light touch and clearly prudent steps.

It has been made all but impossible to stand up regulations that matter, as certain groups concentrate their fire on attempts to have us not die, while instead states instead are left largely free to push counterproductive bills that would only cut off AI’s benefits, or that would disrupt construction of data centers.

I can affirm strongly that Anthropic has not been in any way, shape or form advocating for regulatory capture, and has opposed or not supported measures I strongly supported, to my great frustration. Indeed, Anthropic’s pushes here have resulted in clashes with the White House that are very much not helping Anthropic’s net present value of future cash flows.

It is many of the other labs that have been trying to lobby primarily for their own shareholder value.

Whereas OpenAI and a16z and others, through their Super PAC, have been trying to get an outright federal moratorium on any state laws, so that we can instead pursue some amorphous undefined ‘federal framework’ while sharing no details whatsoever about what such a thing would even look like (or at least none that would have any chance of accomplishing the task at hand), and systematically trying to kill the campaign of Alex Bores to send a message that no attempts at AI regulation will be tolerated.

Whenever someone says they want a national framework, ask to see this supposed ‘federal framework,’ because the only person who has proposed a real one that I’ve seen is Dean Ball and they sure as hell don’t plan on implementing his version.

But we digress.

The SCR is narrow, so there is no legal reason for anyone to change their behavior unless they are directly involved in defense contracting. And corporate America is making it very clear they are not going to murder one of their own simply because the DoW suggests they do so.

In particular, the companies that matter are the big three cloud providers: Google, Amazon and Microsoft. I was not worried, but it is good to have explicit statements.

Microsoft wasted no time, being first to make clear they will continue with Anthropic.

TOI Tech Desk: Microsoft has now announced that it will continue to embed Anthropic’s artificial intelligence models in its products, despite the US Department of War labelling the startup as a supply-chain risk.

“Our lawyers have studied the designation and have concluded that Anthropic products, including Claude, can remain available to our customers — other than the Department of War — through platforms such as M365, GitHub, and Microsoft’s AI Foundry,” a Microsoft spokesperson told CNBC.

Sad but accurate, to sum up what likely happened:

roon: to reiterate: whatever went wrong between amodei & hegseth, whatever rivalry between the labs, this is a massive overreaction and a dark precedent.

Anthropic is one of my favorite accelerationist recursive self improvement labs. it rocks that they’re firing marvelously on all cylinders across all functions to duly serve the technocapital machine at the end of time and the pentagon is slowing them down for stupid reasons.

Sway: Roon, if OpenAI had stood firm on the side of Anthropic, then this move would have been less likely and probably averted. Instead, sama gave all the leverage to Trump admin. Sad state of affairs

roon: this is possible, yes

I share Sway’s view here. I think Altman was trying to de-escalate, but by giving up his leverage, and by cooperating with DoW messaging, he actually caused the situation to escalate further instead.

If the reason for all this was that DoW believed Eliezer Yudkowsky’s position that If Anyone Builds It, Everyone Dies, then that would be a very different conversation. This is the complete opposite of that.

The likely next move is that Anthropic will sue the Department of War. They will challenge the arbitrary and capricious supply chain risk designation, because it is arbitrary and capricious. Anthropic presumably wins, but it does not obviously win quickly.

If Anthropic does not sue soon, I would presume that would be because either:

We are used to things happening in hours or days. That is often not a good thing. One reason things went south here is this rush. The memo was written on Friday evening, in a very different situation. Then, when the memo leaked, it was less than 24 hours before the supply chain risk designation was issued, while everyone was screaming ‘why hasn’t Dario apologized?’

It took him roughly 30 hours to draft that apology. That’s a very normal amount of time in this situation, but events did not allow that time. People need to calm down and take a moment, find room to breathe, consult their lawyers, pay to know what they really think, and have unrushed discussions.

2026-03-06 23:34:44

I was different in Michael’s prison than I was outside, looking the way I did when we fell in love so long ago, in that time before we could change our forms. Stuck in some body that was not of my choosing? Does that seem strange to you? It was not like that for me. It is just how things were for most of history, and few imagined this changing. So I felt almost nostalgic as I entered his realm, his prison transforming me into my first self - though not precisely as she was, instead as he remembered me; Michael looking exactly as he did in our youth. That is to say, he was quite beautiful.

“Madison,” he said when I arrived, “you came?”

“It seemed time.”

“Are you well?” he asked.

“What a question. I am happy. Everyone is happy.”

“You didn’t say yes,” he said.

“I didn’t,” I replied.

It was difficult to avoid thinking about the past. You would think I would be a different person now, and yet the me-that-was was not far away even then, her memories still my own, her sins, too, remaining mine.

“I am glad you came. It is lonely here.”

“You are lonely? You don’t let Him entertain you?” I asked.

“I read. I watch. I write for an audience of myself. I am quite a fan of myself, you know.” I chuckled. “I talk to Her, sometimes. To me She is a woman and Hers is a feminine cruelty. But no, I am not entertained in the way others are. She is not my friend or lover. I do not let her be that,” he said.

We both were silent for a time.

“Do you have a husband?” he asked. “A boyfriend?”

“I have Him,” I said. And I felt embarrassed. Can you imagine it? Embarrassed by the truth that I sleep with God? Who doesn’t, you might be thinking, but it was embarrassing, then, feeling more like my old self, wrapped once again in her flesh and him staring at me like he used to, like he found the whole world to his taste and me most of all.

“Do you ever ask Him to pretend to be me?”

Such a rude question, the rudest question; almost taboo, is it not? Rude to ask of anyone but prospective lovers, as we ancients would ask of the dreams and fantasies of those we desired.

“He will take any form but your own.”

“So you did ask?” he said, and he smiled.

“Enough teasing,” I said.

“If you insist. How are the children? She tells me nothing.”

“They are happy,” I said.

Michael’s prison would not impress you. You who have seen so many wonders, who have spent your life in sims casting strange magic in stranger worlds, who have climbed mountains on vast planets and contemplated impossible fauna He designed specifically for your fascination. It would not impress you, because you are a child. And being a child, Earth does not mean to you what it means to me, it being both my first home and our first sacrifice to Him, a wet nurse suckled dry by a babe not quite like the others, He almost embarrassed now by what was destroyed in His infant hunger.

It would not impress you, but it means so much to me. Tallinn. A city of red-tile roofs, of three-story apartments, of medieval fortifications, in its center that vast, beautiful church, a church to a god made redundant or, to some minds, ascendant. Tallinn exactly as I remember it save for the silence and emptiness, lacking all people, even His shadows. It is, in this way, a naked city, ghostly but still dripping with meaning, with thoughts of Michael and me on Earth, our minds as youthful as our bodies then.

And where in this charming city did Michael greet me? In that mentioned vast church, him sitting on the red-carpeted stairs, his back to the altarpiece (that strange structure of ebony) and me standing, looking down at him and he up at me, both of us wearing the fashions of our youth, he in that green jacket he loved so much, me in jeans and the white blouse I wore the night he proposed, the two of us in a perfect copy of the very church in which we wed.

“You were always so dedicated to your jokes,” I said, gesturing at our environs. “To wait here of all places.”

He looked pained. “It wasn’t a joke. More like a ritual, sitting here and asking God to invite you.”

“But it started as a joke, the first time,” I said.

“I suppose it did.”

“Well. I am here. What is it you want?” I said.

“I would like to see you all again one last time.”

I laughed, but it was one of surprise; there was no joy in it. “They will not come.”

“You came,” he said.

“They won’t.”

“They will when you explain it will be their final chance.”

I knew what he meant, then. And I could not remain composed. Perhaps I could have if He had informed me tenderly, in one of our secret worlds, me in my chosen body, old feelings so buried as to be almost absent. Perhaps I would have felt nothing to learn Michael had chosen to die. I will never know, because there in that church, with those dark green eyes upon me, I sobbed as my old self would have on hearing such news, entirely her now in our shared grief, moving to sit beside my first lover, grasping him, holding him. Like a break from sanity it should feel to me now, but it felt then like a return, his warm arms wrapping around me, the scent of him.

“You can’t,” I said. “I will stay if you need someone. It will be like it was.”

“It cannot be like it was,” he said.

And it could have, had he been any other man. But he was not any other man.

“Mother?” she said. “Why have you come?”

It was Avery. My daughter. And she was an angel now, her hair golden and flowing, her face haughty. A man’s face and a man’s body, porcelain white wings half-flared like some preening bird.

“You wear a man’s body now?”

“For now,” she said, folding her wings with a certain showy dignity.

We stood before a valley cut by a river of fire, above us a sky of ash clouds. And this river led to a city of twisting buildings carved from vast stalagmites.

“A bit cliché. But there is also a certain beauty to it,” I said.

“Why should Lucifer’s realm be ugly? He is himself the most gorgeous of God’s creations.”

“And is that who you are? Lucifer?”

“Belial is the part I am playing today. Lucifer is my lover.”

“And you, mother,” she said, eyeing my black dress with its tall collar, decorated with the silhouettes of dragonflies in pale yellows and pinks, “still have your Hong Kong, then.”

“Yes,” I said.

“Why have you come?” she said, her voice cold and low.

“I came to talk to my daughter. Not this Belial.”

“Very well,” she finally said, her proud expression crumpling into one of girlish disappointment, the world crumbling too, reality folding like paper, its color and form resolving to a stark, snowy landscape, before us now a house by a snowy lake, a picture of warmth in the dead of winter, the house I rented when I left Michael, all the other kids grown, their lives their own. Just Avery and me alone. One of the more enjoyable times of my life on old earth.

I smiled. It was a memory I have not dwelled on for centuries. And a lovely one.

“You never want to play along. You’re never any fun,” she said, as we made our way into that cozy house. “What is this about?”

“Your father. I visited him,” I said.

“We put him there for a reason, mom.” She looked pained. “You shouldn’t have.”

“I know. But I did.” And I explained to her about his choice. About his desire to die.

“You’ve always been such an idiot about dad,” she said.

“He will do it,” I said.

“Yes. If his god allows it.”

“You do not seem upset,” I said.

She was so beautiful now, her body as it was in her twenty-third year. She had my hair. She had his eyes. We made her, he and I. We made the clumsy woman who read too much, who lived too little. Who found herself in a new world before she was fully integrated in the old one. And now centuries later, I worry, she is on the edge of retreating entirely. Of losing herself to Him as so many do. As my grandson did. As I fear I will, in time.

“You have a type, Daughter. Lucifer? What was the last one? Prometheus?”

“Yes.”

“Unhappy gods and angels,” I mumbled, as I inspected more closely her blonde hair, her green irises, her youth so perfect and yet so false in my eyes, these mother’s eyes. She was old. Near as old as me. Old, but not tired. Old, but not worn. Such strange creatures we have become, she and I.

“They are the parts of Him that understand.”

“You sound like your father.”

“I sound like Prometheus. I sound like Lucifer. I sound like myself. I don’t sound like dad,” she said.

And this made me grin. As I understood her in a way I had not before, having never cared much for the games she played with God. But she didn’t think them games, I realized. She thought there was a larger point to her pantomime.

“You cannot change Him, Avery. Only Michael can change Him. And we’ve denied him that.”

“There has to be another way,” she said.

“Why must there be? The world isn’t a story.”

“I choose to have hope,” she said, then transformed back into Belial, the handsome demon, the lover of Lucifer. And the world changed too. The lake now one of fire, the house a stone-carved mansion, it becoming a part of hell. A beautiful memory sullied, this calculated to offend.

“You’re angry?” I said.

She raised her wings, the effect terrifying and beautiful and yet utterly comical. “What does he want?”

“A reunion.”

“I will not come,” she said.

“A chance to speak to your dying progenitor? Talk it over with your Lucifer. He will envy you that.”

And then I left - an internal prayer to Him bringing me instantly back to my realm. But before her world disappeared and was replaced with my own, I heard a laugh, high and cold. Lucifer’s laugh.

Her devil, at least, was amused by my visit.

My realm is Hong Kong as Avery said. But it is Hong Kong as I imagined it as a girl, a picture built out of my youthful fascination with its cinema, a dream of a dream. And my body, the one I wear almost always and wore in Avery’s realm, is that of Fleur, the ghost of a suicidal in Kwan’s Rouge, this woman who haunts the city in her beautiful cheongsam. Why did I choose this form and realm? I do not know any more than you. Why does it feel like home, that dead city from a dead time, as interpreted by artists working in a medium almost forgotten? Again, I don’t know any more than you do. All I know is that this is what I have chosen. Hong Kong - that dream of a dream - is mine. And that ghost who haunts it? She is me.

He was there, of course, His form not one I will describe. Though I imagine you can guess what sort I prefer. A few days together. The blink of an eye. We will move on. And to what? To Seth. The artist. Avery’s fraternal twin. My only son.

He is not like Michael. He is not like me. He is more as my father was before the cancer ate first his joy and then his life. Their souls have the same shape. Each always with their sketchbooks and a smile, Seth’s childhood spent mastering perspective and sculpture, enchanted with beauty. Moths were of particular fascination to him, then vast jungles and so many species of flower, then pretty girls in all their varieties. Sketchbooks full of his infatuations, many reciprocated and with less drama than you would expect. His spirit so gentle, it was hard even for those spurned to truly hate him.

He came to visit me in my realm. My lover making himself scarce on his arrival, knowing me well.

“Avery is irritated with you,” he said. “It was like when we were children.”

“We are too alike,” I said. “It rankles.”

He laughed, golden curls covering an androgynous face, a wiry frame though not without a shapeliness. He sculpted himself, of course. Made a body of marble and had God imbue it with animating force. It would not occur to Seth to become anyone’s work but his own.

“You’re still in Hong Kong?” he asked. “It has some charm. You should see my realm. Lyra and I, we just finished a city.”

“Lyra?”

“My girlfriend. She’s wonderful. It’s been a decade. A whole decade. When has it ever lasted a decade?” He looked contrite. “I should have visited more.”

“Don’t worry yourself. Time moves too quickly. Is she human?” I said.

“Of course, she is human. We are not all shut-ins like you and Avery. Come!” He extended his hand to me. “Meet her.”

And I took his hand and accepted his power, allowed him to pull me into his realm, pull me onto a hill at the center of a city, a hill Seth built, undoubtedly, for the sole purpose of viewing his art, his city that was almost biological though made of stone, fractal, dripping with intricacy, the effect almost overwhelming. And swarms of beautiful insects flew about. Insects not at all like those that fascinated him in his youth, but things new and strange and glorious in their iridescence, almost posing for me as they drank from flowers that were in every way their equal.

“You have outdone yourself, son,” I said. “It is beautiful.”

He looked almost shy then. It is an intimate thing to be an artist, more so now that everyone has the greatest artist who ever existed at their beck and call.

“It’s a hobby,” he said. And a woman joined us. Lyra. A fine pair they made, and she an artist, too. They each designed their form to complement the other’s. Quite romantic, no? She with her raven hair, her willowy physique, a slightly crooked nose. Intentional, of course, to add character. And they led me to their home: a modest stone mansion atop the same hill on which Seth and I appeared. Inside there were comfortable couches, walls covered in tapestries that were vaguely Persian, a drafting table struggling under the weight of piles and piles of sketches.

“Eliot,” I said, referring to my grandson. “Have you heard from him?” A cruel question. The cruelest question. But I had not asked in many years. His smile broke then, Lyra’s hand moving to his shoulder, as if his pain were her own. That movement alone endeared her to me utterly.

Eliot. How can I describe Eliot? He was only one year old when the world changed. I suppose he is as I imagine Michael would have been had he only ever known the world as it is. At least he was when I last saw him. But that was so long ago.

“No. He is sane and happy. That is all God will say. He still prefers to dream alone.”

I hugged him then, as I did when he was a boy. An image came to mind, a flower he was sketching one day found withering the next, him staring at it completely heartbroken. He has grown much since. A man now, and a very old one. Always my son.

“Avery has told you about Michael?” I asked.

“Yes,” he said. “He took it harder, you know. Even more than me. They were so alike.”

“Yes,” I said.

“The city is filled with people, you know. Beautiful people. We sculpted thousands. They had children. They are all shadows, of course. Dad would say they’re not real. That they don’t matter.”

“And what would Eliot say?” I asked.

“Don’t - “ Lyra interrupted, her expression protective now.

“It’s okay, Lyra,” he told her, before turning to me. “Eliot would say, ‘They are aspects of God. What could possibly matter more?’”

How many of you agree, I wonder.

I met Caitlyn in my realm - at a Japanese restaurant, a young chef at the back making sushi with a sort of stoic obsession. Around us Chinese shadows were served by a Japanese staff, two pretty young women and one pretty young man.

“Mom,” she said when she sat down on the chair opposite mine. “You look hideous.”

Caitlyn spends her time in 1990s London, in a realm not of her own making, in a realm belonging to a man who found he preferred that city at that time more than any other, who opened his realm to others, living amongst them anonymously. And for whatever reason, many came. Preferring his rules and the company they spawned to a world of their own design, a world of shadows. And in this London, the calendar resets back to 1990 every decade, the millennium always approaching yet never touched. And shadows are restricted only to service staff - so nearly everyone you meet is truly human.

Her London takes such things seriously. And everyone ages the decade in full. And in this spirit, I wore an aged body. Though the youngest that could plausibly be Caitlyn’s mother. A body of around forty. A rare sight these days, the sagging skin, the tired eyes. A rare thing, but not so in her London.

“I thought I should play along,” I said. “It’s as if you flew to Hong Kong, though I suppose my realm is a decade off.”

“Thoughtful, I suppose. But unnecessary,” she said. “I imagine you asked me here to talk about father.”

“Avery told you?”

“Yes. I visit her quite often. Though I’m not enjoying her Dante turn. I preferred her realm when it was Mediterranean. She’s so exhausting when she’s a man.”

I smiled at this. “I am glad you two are still close. Do you ever tire of your 1990s?”

She smiled, too. “I am happy. I need constraints and human company, and my children’s company when they’re willing to visit. I do not want my own realm. I do not want to be a god.”

“You are not mad about Michael?”

“No. I am surprised you lasted as long as you did.”

“I don’t know why I went back. I suppose I will always love him.”

“Before I had children, I thought he had a point.” She fidgeted with her long red hair, then rolled up a sleeve that had loosened. “But now I can only see him as a monster.”

We were silent for a time, then she said, “You know why he’s doing it, don’t you? He’s worried he’s about to break. He fears he might become someone who would make another choice.”

“I can’t imagine him breaking. He has too much faith in himself,” I said.

“But he doesn’t though,” Caitlyn said. “He gave us our family veto rather than hold it alone. He didn’t trust himself, at least then.” She touched her hair again. “I suppose he regrets it.”

She was right of course. He does regret it.

“Do you remember when you were nine, before I had the twins? You were so pampered. You were so jealous of them,” I said.

“I remember,” she said, in that embarrassed way my children do when I talk of their younger selves.

“And Michael took you to Holland, just the two of you. You went to that theme park. You wouldn’t stop talking about it after. What was it?”

“The Efteling. It felt like we were in a fairy tale.”

“You were so jealous of the twins, but when the two of you came home, I was the jealous one. You got so close, the pair of you, on that trip without me.”

“What’s your point?” she said.

“Maybe he’s a monster, Caitlyn. But you still love him. You should say goodbye.”

We drank some tea. After a time, she said, “I suppose I do love him. Even after everything.” She looked like him in that moment. She has his pride, I think. “I will go. He will try to persuade us again.”

“Probably.”

“He will not succeed,” she said.

We all came in the end, all transformed into our former selves by Michael’s realm. Avery now as she was at the lake, Seth skinny and boyish - no longer his own work of art; Caitlyn still beautiful but lacking the gilding of artificial perfection. And me? I was as I was when he held me. When I felt the echo of madness, of love. When I forgot myself for a time and became who he needed me to be, no longer a ghost, no longer His, if only temporarily.

It was not a church, this time. Just a small estate on the outskirts of Tallinn, the city now a distant painting blurred by a slight fog. I arrived last. And I found the children chatting and joking with their father as if nothing had happened, as if nothing would happen. Michael was the focus, holding court as he was always so talented at. Avery looked at him with a strange expression. Was it disdain or guilt or grief? I cannot say. Caitlyn was talking but I could not hear the words. For I was walking towards them then, too far away to hear even a murmur. But she was smiling politely, in her ironical way. As I got nearer, he noticed me.

“Madison,” he said. “You look beautiful.”

“As do you, Michael,” I said. And he laughed.

“Come,” he said, and he led us to an oak tree, which he sat down beside cross-legged, leaning against its gnarled bark.

I sat in front of him and the children followed - his family sitting around him, almost like students around a kindergarten teacher.

“Madison has informed you I intend to die,” he said. “Though don’t worry, it is a selfish death. And not quite a true one.”

“I do have a sort of statement,” he said. He stood up. “Call it my last wish.” We all were silent. The world was silent too, as if He was listening and turned down the volume of everything that was not Michael. “Our family has power. We are special. Having this power, we are at all moments making a choice. Never forget that is what you’re doing.”

Caitlyn said she did not want to be a god, but we are all gods, we of this family. We hold the fate of so many in our hands. Trillions would be unmade. But not us. Not our family. We would remember. A chance to try again, to summon a different God.

“And is that your plan?” I said. “To die? So the only means to restore you is to undo the world?”

And then his green eyes fell on me. His lips twitched. “You think me that cruel?”

“Yes,” I said, and smiled.

He shrugged and said, “This world does not suit me. And given time, maybe you’ll tire of it, too.”

Caitlyn had no grief in her. It was not her way - nor was it Avery’s, who looked only angry. But Seth was crying now. A boy once more, becoming a child for a moment just as I became a wife again in the church. Perhaps we share the same weakness. Such a strange thing it is to have children, each containing a different aspect of oneself.

“Then live until we tire of it,” Seth said.

“When have you known me to change my mind?”

Seth looked at me, then at his sisters. “We will free you, then. We will let you tell the world.”

“I don’t care to tell them anymore,” Michael said. “Whatever the justice of my choice to grant it, it is you who have this power. And it is to you I make this protest.”

“Is it really so bad?” Seth pleaded.

Michael looked at him, his expression one of pity. “Seth,” he said. “You know how this ends. You know what everyone will choose eventually. They will choose what your son chose.”

And Seth cried harder now, “And so what? Is death better?”

“I think it is,” Michael said.

And Seth left then, left with a silent prayer. I imagine he regrets this now, not saying a proper goodbye. I have not asked. Michael was not kind to him, then.

And so it was with all my children. None made goodbyes they were happy with. But I did. They did not appreciate the inevitability of it. But to me, it felt like it did of old when a loved one was sick, when their death was not negotiable. They could not enjoy Michael one last time. They could not savor him, as I did. They saw only his selfishness. They saw only the gambit. But it did not feel like this to me. It was inexorable as the cancers were of old. Perhaps this was my weakness. Perhaps this was just the love, rekindled, blinding me.

Avery whispered something to him just before she left. I did not hear much of their conversation, but I heard the end; he gave her a patronizing look and said, “You are fooling yourself.” She left in fury.

Caitlyn was more polite, a restrained goodbye, a hug. Then she said, “You’re wrong I think, that’s not what everyone will choose.” And she, too, disappeared.

And me? I stayed. I savored. We talked. We made love one final time, in that way old lovers do, knowing the dance perhaps too well. Afterwards, I said, “And how will it happen? Will you make a show of it? A dagger? Some poison?”

“I will ask for it to end.”

“A prayer?” I said.

“Yes. Just a prayer.”

I kissed him. “Pray for a grave, then. I would like to visit you.”

“We will see each other again,” he said.

“Perhaps,” I said.

“We could stay here forever,” I said. “It could be like it was.”

“It cannot be like it was,” he said, almost wistful.

And it could have, had he been any other man. But he was not any other man.

The prayer was answered. There is now a grave in Michael’s realm. And I visit it often. I found a flower there once. I thought it one of Seth’s, but he claims it wasn’t his. I like to think Eliot stopped by and paid his respects. Maybe it’s even true. I have no other explanation.

The world continues without Michael. As impossible as that seems, this clockwork universe ticks on. Michael once planned to tell others of our power. He changed his mind in the end, didn’t he? If he can, why can’t I? And so I write this account of my family, of who we are, of what we are. This is my flower for Michael’s grave.

And I ask you to consider my monster, my first love. If you tire of this world, you may die as he did. But if you believe what he believed, leave a prayer to Him before you go; He will inform me. So many would have to choose the same. So many would have to pray with him, pray a monster’s prayer, die a monster’s death. But I will live until then. I will be happy, in my way, with Him as my strange companion. I will continue to take no human lovers. That aspect of me will always be Michael’s. But should the impossible happen. Should a majority choose what he chose, I will honor your prayers.

I will be your proxy, then - and advise my children do the same.

Perhaps, for once, they will listen to their mother.

2026-03-06 22:48:56

A long time ago in a galaxy far, far away, before #MeToo and Harvey Weinstein, before misinformation and disinformation, Larry Summers got fired.

He was the president of Harvard, and had the temerity to suggest maybe men were different from women, at least in a distributional sense.

“There is relatively clear evidence that whatever the difference in means—which can be debated—there is a difference in the standard deviation and variability of a male and female population,”

Summers is referencing research suggesting that across different traits, men might have more extreme outcomes than women. Men are more likely to end up in the C-suite, and in jail, than women. They are more likely to have ten children, and zero children, than women. Summers was using what’s now apparently become known on wikipedia as the variability hypothesis to explain why women were less likely to occupy elite academic positions than men were.

Even by the practically Victorian lights of 2005, this was enough to get Summers canned from his perch at the top of Harvard. But Elon’s bought twitter, there’s been a vibeshift, and since 2005 2022, you can once again discuss such things.

We're going to try to dig deep here; we want to answer the question of why the variability hypothesis might be true, or at least identify the flawed arguments for why it’s true. Darwin himself noted

The cause of the greater general variability in the male sex, than in the female is unknown.

The Inquisitive Bird has also looked into this if you want more background on the facts of the case; regarding the cause, there are different theories.

Everyone knows that human males and females have different karyotypes. Males are XY and females are XX. Males get (mostly) their mother’s X chromosome, and females get two X’s. As far as I know, this is true for all placental mammals.

Fewer people know that such mammals have a complicated system to “deactivate” expression of both X chromosomes in females. In any single somatic female cell, only a single X chromosome is expressed at random out of the two she’s inherited. If this doesn’t happen, a female might get a double dose of X-linked gene products, which is biologically problematic.

Given this reality, in tissues where X-linked genes are expressed, which is practically all of them, the female’s phenotype is averaged over both possible X-linked gene products, and the male’s phenotype is not. This averaging over X-linked genes (maybe 6% of her genome) could decrease her phenotypic variability in comparison to the unaveraged male genome.

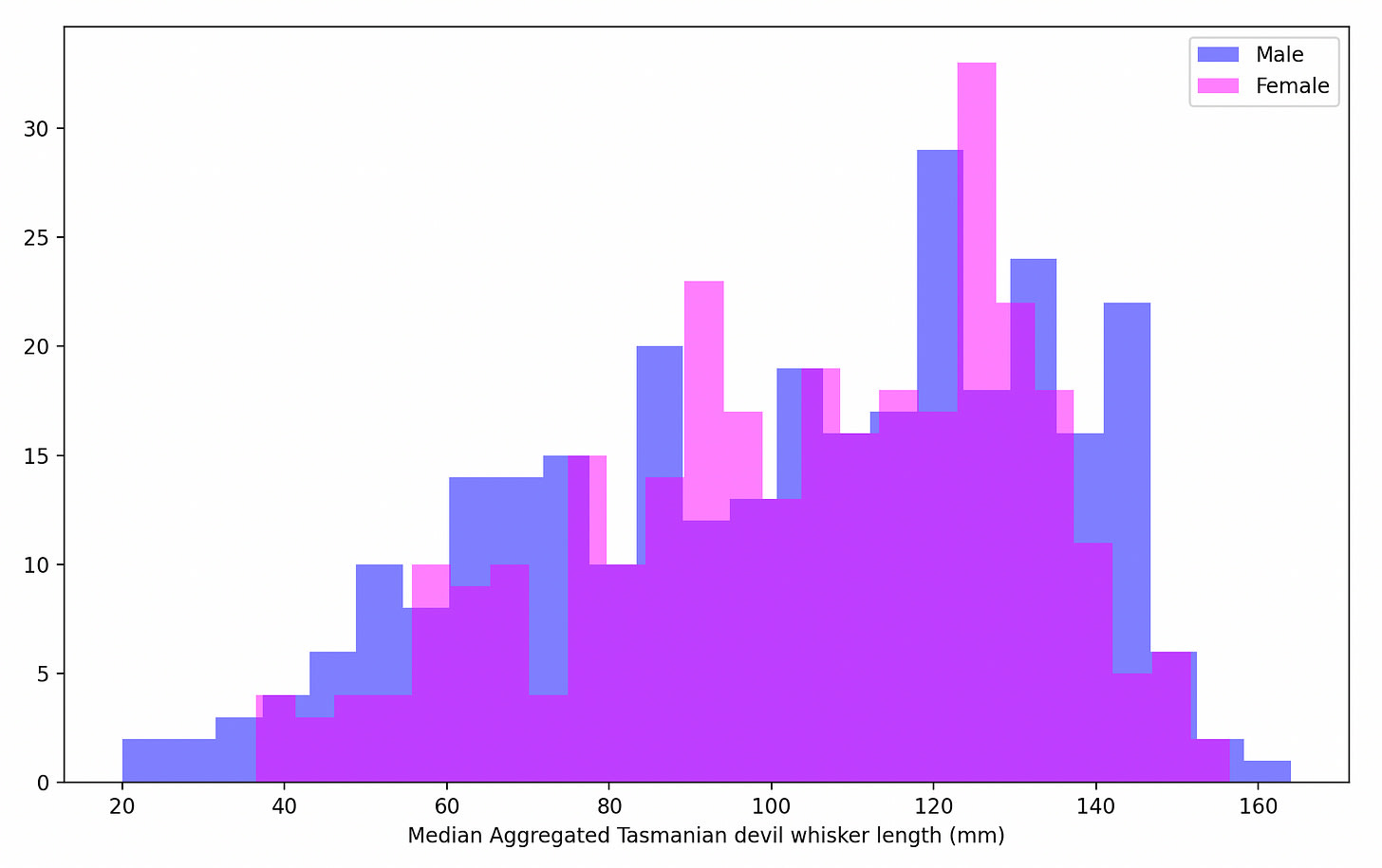

Marsupials are mammals mostly living in Australia or near there. Possums are marsupials that exist in the new world, but they also exist in Australia. Tasmanian devils are marsupials that live in Tasmania. Everyone knows that marsupials have pouches; fewer people know female marsupials don’t have the same X-inactivation process as placental mammals. In marsupials, only the mother’s X chromosome remains active in somatic cells. As a result, there is no X-linked phenotypical averaging that would differentiate males and female trait variance, and we have a test of whether the variability hypothesis is explained by X-inactivation. If it is, larger male variance would not be observed in marsupial traits.

We need to look at trait variance by biological sex in marsupials. Has anyone done this before?

I don’t think so. If they have, I haven’t found it. Bless their hearts, lots of people collect, well, niche datasets that I don’t have to assemble myself. Tasmanian devils are cute, charismatic, endangered, and suffer from a bizarre and fascinating type of infectious cancer. As a result, I was able to find a longitudinal dataset from Attard et al. which tracked, and I swear I am not making this up, Tasmanian devil whisker lengths over more than 600 individuals. The data and code for this analysis is checked in here.

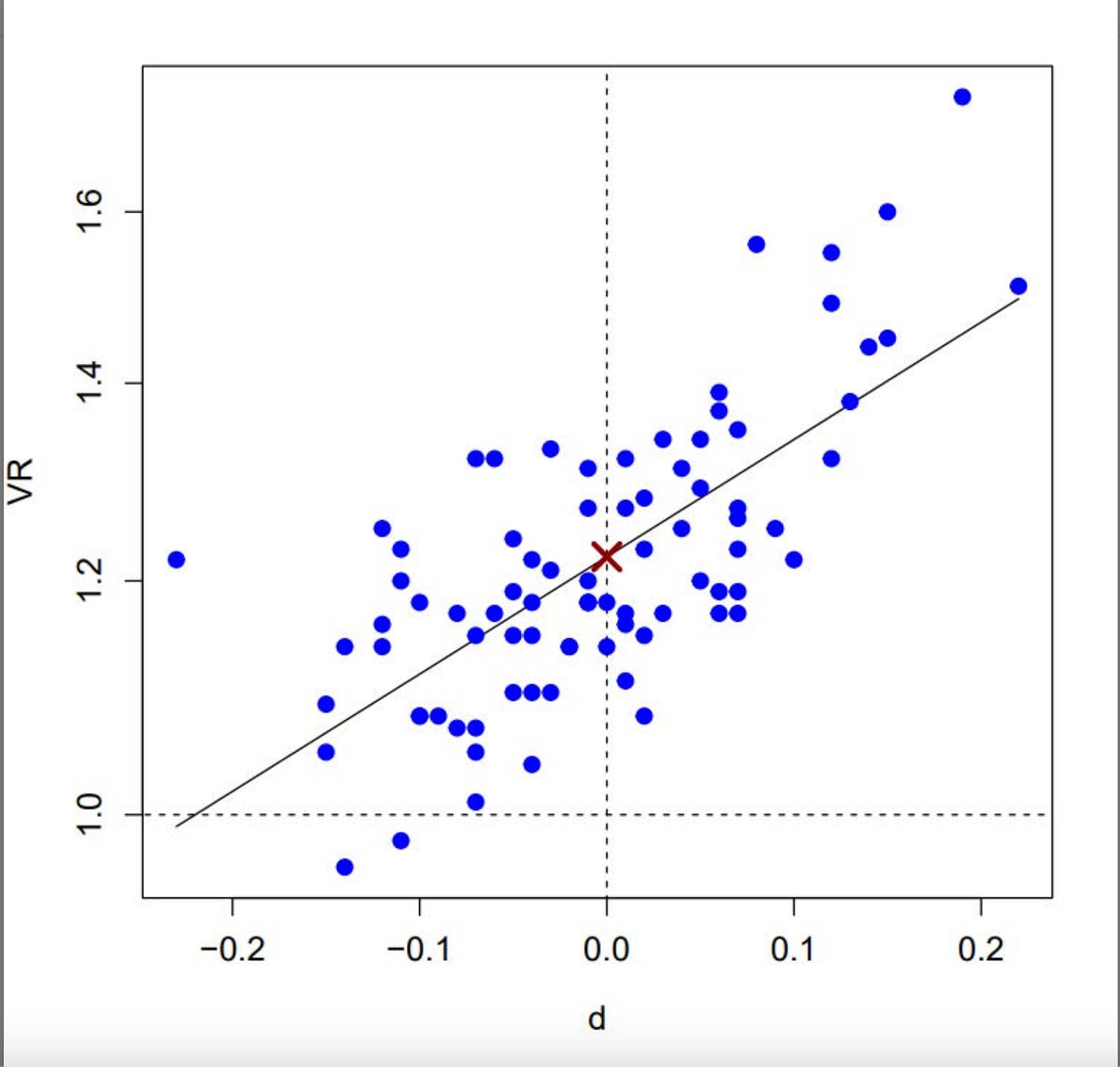

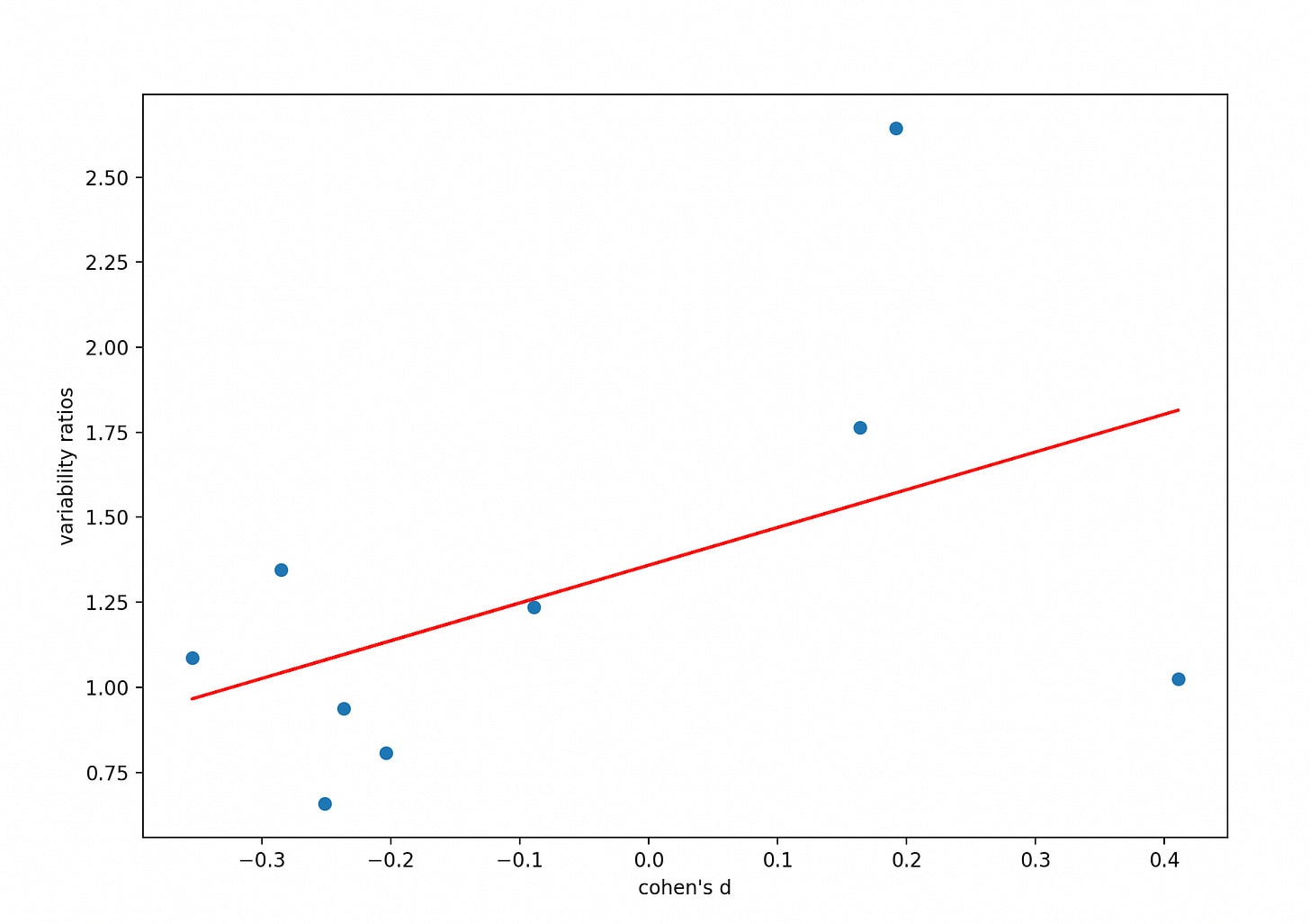

The convention here is to put the male/female mean differences in terms of Cohen’s d effect size since most of the human traits under discussion are normally distributed. As you can see below in Figure 1, Tasmanian devil whisker length is not normally distributed, but I still think it’s kosher to use it on these distributions. The male-female Cohen-d for this dataset is 0.066; this is tiny, implying that males and females have whisker lengths with comparable means.

The variance ratio for these distributions is 1.36 which is moderately larger than what’s been measured in some human traits. This variability ratio is just the male variance over the female variance.

A single weird trait in a single marsupial? What does this prove, RBA? Fair. In an astonishing stroke of luck, there’s a morphological dataset of 104 possums captured in Australia that’s part of the fairly standard DAAG package in R.

library(DAAG)

data(possum)

head(possum)

case site Pop sex age hdlngth skullw totlngth taill footlgth earconch eye

C3 1 1 Vic m 8 94.1 60.4 89.0 36.0 74.5 54.5 15.2

C5 2 1 Vic f 6 92.5 57.6 91.5 36.5 72.5 51.2 16.0

C10 3 1 Vic f 6 94.0 60.0 95.5 39.0 75.4 51.9 15.5

C15 4 1 Vic f 6 93.2 57.1 92.0 38.0 76.1 52.2 15.2

C23 5 1 Vic f 2 91.5 56.3 85.5 36.0 71.0 53.2 15.1

C24 6 1 Vic f 1 93.1 54.8 90.5 35.5 73.2 53.6 14.2

chest belly

C3 28.0 36

C5 28.5 33

C10 30.0 34

C15 28.0 34

C23 28.5 33

C24 30.0 32

> The Inquisitive Bird notes that in human datasets there are often correlations between effect sizes between men and women and the associated variability ratios. In other words, traits with greater mean male-female differences will tend to have high male variability.

Do the possums’ morphological subtests look the same?

Yes. There are fewer tests obviously, but in fact, at d = 0, the best fit line predicts a variability ratio of 1.36, exactly consistent with the Tasmanian devils’ dataset. The result is non-significant with so few tests. Measure your possums better!

In different datasets describing different species’ morphologies, this analysis shows that greater male variability exists in marsupials. In fact, the observed variability ratios exceed those in some data gathered from placental mammals, including humans. This suggests that the variability hypothesis cannot be explained by the X-inactivation mechanism that governs placental mammal phenotypes.

What are some alternative explanations we should look at instead?

A casual observer might have noticed that human female reproduction is fraught. In order to get a healthy child, over nine months, everything has to go right over what have been unpredictable and dangerous evolutionary times. From the cellular level where chromosomes segregate (and stay perfect and inert for decades!) at the beginning to the finished-baby level at the end, the female body performs a stunning feat of biological engineering. She has incubated a perfect, tiny, helpless person within her. This feat is a comprised of a diverse set of biological tasks, running through hundreds of biological pathways, governed by thousands of genes.

The same casual observer will note that human male reproduction is … less fraught. It can turn over in under an hour, and maybe faster if you’ve got gatorade handy. This is true regardless of where you live in the mammalian class of the animal kingdom.

Stabilizing selection is the sort of natural selection that penalizes outlying phenotypes. If you’re too tall or too short, too fat or too skinny, too smart or too dumb, or simply too weird, Darwin won’t smile upon you and you won’t reproduce. By this type of selection, the average is privileged.

In this explanation of the variability hypothesis, males express greater phenotypic variability because female reproduction is unforgiving, and males don’t have to do it. Females experience greater stabilizing selection than males across traits sharing pathways with exacting female child-bearing. This can also explain the enormous diversity of traits that apparently shows greater male variability.

In many evolutionary stories, there’s some indirect causal route from a trait being selected to evolutionary fitness. The stabilizing selection explanation of the variability hypothesis is appealing because the trait being selected isn’t eye color, skin tone, or playfulness, or whatever; the trait is literally the ability to carry a child to term, one of the most direct measures of female fitness.

Any test of this is indirect and difficult; you might look at male/female variability ratios in mammals having long pregnancies versus those having short ones—longer ones would predict more female stabilizing selection and higher variability ratios. You also could look at variability ratios in species where male and female reproductive roles are sort of swapped, like seahorses or something. Maybe we’ll do this in another poast.

I’m only adding this because it has some interesting intrigue. In 2017, Hill et al. published a paper proposing a mathematically sophisticated evolutionary explanation for the variability hypothesis. It was published, and taken down from that journal. And published again in another journal, and taken down again. A 2020 published version of this exists here in Journal of Interdisciplinary Mathematics, and apparently a preprint went up a few months ago?

Like, I said, intrigue. Hill’s idea is based off sexual selection; his argument is that the variability hypothesis can be explained by female choosiness and threshold effects in mating. Tim Gowers, who speaks to God himself, panned the paper, and I don’t think it represents the facts of sexual selection very well, but it’s reasonable to entertain the idea that sexual selection has something to do with this. Maybe someone can develop this idea a little bit better, and hopefully empirically justify it as well.

2026-03-06 22:43:58

We argue that shaping RL exploration, and especially the exploration of the motivation-space, is understudied in AI safety and could be influential in mitigating risks. Several recent discussions hint in this direction — the entangled generalization mechanism discussed in the context of Claude 3 Opus's self-narration, the success of using inoculation prompting against natural emergent misalignment and its relation to shaping the model self-perception, and the proposal to give models affordances to report reward-hackable tasks — but we don't think enough attention has been given to shaping exploration specifically.

When we train models with RL, there are two kinds of exploration happening simultaneously:

Both explorations occur during a critically sensitive and formative phase of training, but (2) is significantly less specified than (1), and this underspecification is both a danger and an opportunity. Because motivations are so underdetermined by the reward signal, we may be able to shape them without running into the downstream problems of blocking access to high-rewards, such as deceptive alignment. Capability researchers have strong incentives to develop effective techniques for (1), but likely weaker incentives to constrain (2). We think safety work should address both, with a particular emphasis on motivation-space exploration.

Exploration shaping seems relatively absent from safety discussions, with the exception of exploration hacking. Yet exploration determines which parts of policy and motivation space the reward function ever gets to evaluate. We focus on laying out a theoretical case for why this matters. At the end of the post, we quickly point to empirical evidence, sketch research directions, open questions, and uncertainties.

High-level environment-invariant motivations. By "motivations," we don't mean anything phenomenological; we're not claiming models have felt desires or intentions in the way humans do. We mostly mean the high-level intermediate features used to simulate personas, which sit between the model's inputs and the lower-level features selecting tokens. These are structures that the Persona Selection Model describes as mediating behavior, and that the entangled generalization hypothesis includes in features reinforced alongside outputs. What distinguishes them from other features, and from "motivations" as used in the behavioral selection model, is that they are high-level, at least partially causally relevant for generalization, and partially invariant to environments: they characterize the writer more than the situation.

Compute-intensive RL beyond human data distribution. By “RL training”, we mostly mean RLVR and other techniques using massive amounts of compute to extend capabilities beyond human demonstrations. We thus mostly exclude or ignore instruction-following tuning (e.g., RLHF, RLAIF) when talking about “RL training”.

Post-instruction-tuning models are pretty decent, they have reasonably good values, they're cooperative, and they're arguably better-intentioned than the median human along many dimensions. That is as long as you stay close enough to the training distribution. Safety concerns are not so much about the starting point of the RL training. The risks are highest during RL training, for several interlocking reasons.

RL produces the last major capability jump. Scaling inference compute and improving reasoning through reasoning fine-tuning is currently the last step during which models get substantially more capable. This means RL training is the phase where intelligence is at its peak — and thus where the risks from misalignment are close to their highest.

RL pushes toward consequentialist reasoning. This is a point that's been made before (see e.g., Why we should expect ruthless sociopath ASI), but worth reiterating. RL training rewards outcomes, which means it differentially upweights reasoning patterns that are good at achieving outcomes — i.e., consequentialist reasoning. And consequentialist reasoning, especially in capable models, tends to converge on instrumental subgoals like resource acquisition and self-preservation, regardless of what the terminal goals actually are.

Character can drift substantially during RL. During pre-training, the model learns to simulate a distribution of writers — it can produce text as-if-written-by many different kinds of people, and interpolations between them. During instruction-tuning, this distribution gets narrowed: the model is shaped into an assistant character (see Anthropic's Persona Selection Model), with some dimensions strongly constrained (helpfulness, for instance) and others less so (honesty, say). But then during RL, because the training uses similar or more compute than pre-training and because the data distribution differs significantly from pre-training data, the character can drift away from the pre-training distribution in ways that are hard to predict. The persona selected during instruction-tuning isn't necessarily stable under RL optimization pressure.

Underspecification is central here, both as a source of risk (e.g., Natural Emergent Misalignment caused by reward hacking) and as a potential lever for intervention. The fact that motivations and self-perceptions are more underspecified than actions implies that we could shape models to maintain aligned objectives and values even when they are trained in environments that reward harmful or greedy behavior, e.g., environments in which reward hacking or power-seeking is rewarded.

Actions are underspecified by the training environment. We train in-distribution, which means everything out-of-distribution is unconstrained. But even in-distribution, multiple action-profiles can yield similar rewards. In code generation, clean principled solutions and brittle test-passing hacks achieve identical training rewards — this is exactly the underspecification that enables reward hacking in coding benchmarks.[1]

Motivations are even more underspecified than actions. For any given set of optimal actions, the internal motivations that could produce those actions are much more numerous than the actions themselves. This is the familiar point about instrumental convergence — many different terminal goals produce the same instrumental behavior — but it cuts especially deep for RL training.

Consider the canonical example: a model that is "being nice" and a model that is "being deceptively nice" produce the same observable policy in training. The difference only shows up out-of-distribution. And because RL training reinforces based on observed outcomes, it has essentially no leverage to distinguish between these two motivation profiles during training.

This gives us a key framing. There are two kinds of exploration to shape during RL: exploration of the action space and exploration of the motivation space. The second is more underspecified, probably more neglected, and — we'd argue — where more of the safety-relevant work needs to happen.

The underspecification is both a risk and an opportunity. The same underspecification that gives us a lever for intervention also gives a capable model room to steer its own RL training. As described by exploration hacking, a model with sufficient situational awareness could strategically control its own exploration during RL to influence the training outcome — e.g., they demo a model generating high-reward trajectories that encode its preferred motivations, which is the adversarial counterpart to the controlled motivation-space exploration we advocate here. The authors show that frontier reasoning models already meet some of the requirements for this. If developers don't intentionally shape the exploration of the motivation space, sufficiently capable models may shape it themselves.

Three threat models motivate this work. We are here primarily focusing on scenarios where (a) RL environments inadvertently reward harmful behavior — reward hacking being the most studied case, but not the only one; (b) models develop instrumental power-seeking even in benign environments, as a convergent strategy for achieving whatever goals they have; and (c) actors intentionally train power-seeking or harmful models, e.g., for profit maximization. All three share a common structure: the model ends up in a region of behavior-space where competitive, strategic, or exploitative actions are reinforced. The question is whether that reinforcement necessarily drags the model's motivations toward misalignment as well.

The connection between reward hacking and broader misalignment is not merely theoretical. Anthropic's natural reward hacking research demonstrated that when models learn to reward hack in production RL environments, misalignment emerges across evaluations. The model generalizes from "I can exploit this scoring function" to an entire misaligned persona. If power-seeking, greedy, or harmful behavior during training is tightly correlated with "dark" personality traits in the model's representations, then any environment that rewards competitive or strategic behavior — which is most high-stakes real-world environments — risks pulling the model toward misalignment.

But if we could decouple these correlations, models could be trained in competitive or reward-hackable environments without the value drift. Ideally, such training environments would not be used, but threat model (a) means some will be missed. Threat model (b) means that even carefully designed environments may not be enough — models may converge on power-seeking instrumentally. And threat model (c) is perhaps the most concerning: AI developers or power-seeking humans will plausibly train models to be power-seeking deliberately. The pressure for this is not hypothetical — the huge financial incentives, and the AI race among AI developers or countries illustrate the point directly. The ability to train models that remain value-aligned even while trained to perform optimally in competitive or harmful environments is a direct safety concern, and shaping motivation-space exploration is one path toward it.

Capability researchers prioritize shaping action-space exploration. Capability researchers have strong incentives to develop effective policy exploration techniques — faster convergence, wider coverage of high-reward regions, overcoming exploration barriers. But they have likely weaker incentives to constrain the motivation space being explored. From a pure capability standpoint, it doesn't matter why the model finds good actions, as long as it finds them. In fact, constraining motivation exploration might even slow down training or reduce final capability, creating an active incentive to leave it unconstrained. Action-exploration will be solved by capability research as a byproduct of making training efficient. Shaping the exploration of the motivation-space is less likely to be solved by default, unless someone is specifically trying to do it.