2026-03-07 00:26:59

This is Behind the Blog, where we share our behind-the-scenes thoughts about how a few of our top stories of the week came together. This week, we discuss a PC repair battle, a revealing comment from an FBI official, and a dangerously dumb narrative.

EMANUEL: I want to update those who have been following the 404 Media sidequest “Emanuel’s CPU is dying.” The update is that I basically got a new PC. I kept my GPU (4080 Super), my CPU cooler, and storage, and upgraded everything else, including the case, because I bought the old one in the era before GPUs were more than a foot long.

2026-03-06 04:36:01

Privacy-focused email provider Proton Mail provided Swiss authorities with payment data that the FBI then used to determine who was allegedly behind an anonymous account affiliated with the Stop Cop City movement in Atlanta, according to a court record reviewed by 404 Media.

The records provide insight into the sort of data that Proton Mail, which prides itself both on its end-to-end encryption and on being governed only by Swiss privacy law, can and does provide to third parties. In this case, the Proton Mail account was affiliated with the Defend the Atlanta Forest (DTAF) group and the Stop Cop City movement in Atlanta, which authorities were investigating for their connection to arson, vandalism, and doxing. Broadly, members were protesting the construction of a large police training center next to Intrenchment Creek Park in Atlanta; their actions also included camping in the forest and filing lawsuits. Charges against more than 60 people have since been dropped.

2026-03-05 23:19:28

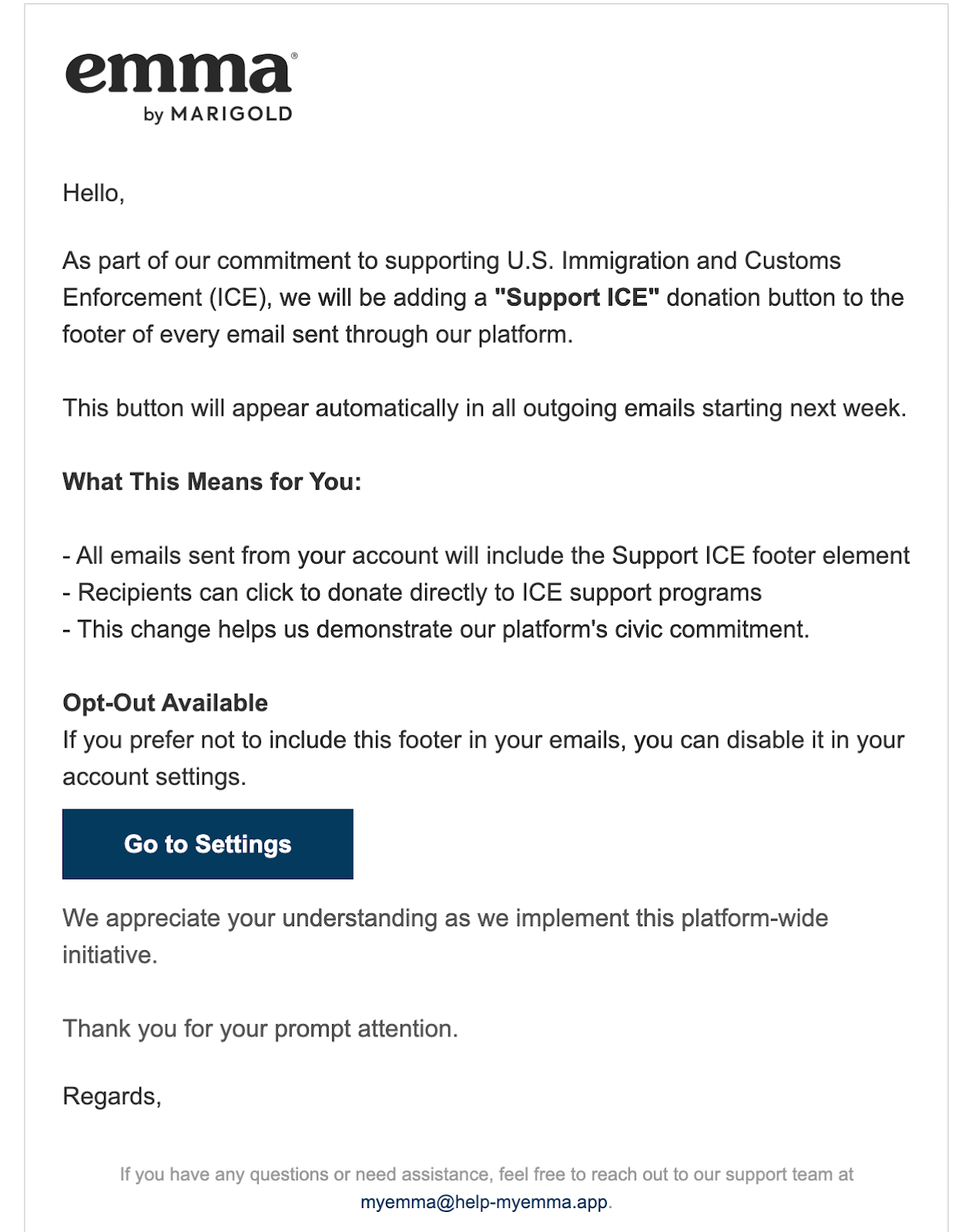

Clients of a long-running email marketing platform are being targeted with a phishing campaign claiming that a “‘Support ICE’ donation button” will soon be automatically inserted into every email they send. The campaign suggests that scammers are trying to capitalize on people’s revulsion toward ICE, crafting a message designed to make users rush to log into their accounts and disable the setting. In reality, clients who did so would be handing their usernames and passwords to hackers.

The move indicates that hackers are targeting clients of enterprise software companies with extremely controversial political emails. The scam targeted customers of Emma, a long-running email marketing platform whose clients include Orange Theory, Yale University, Texas A&M University, the Cystic Fibrosis Foundation, Dogfish Head Brewery, and the YMCA, among others. 404 Media was forwarded a copy of the phishing email from an Emma client.

“As part of our commitment to supporting U.S. Immigration and Customs Enforcement (ICE), we will be adding a ‘Support ICE’ donation button to the footer of every email sent through our platform,” the phishing email reads. “This button will appear automatically in all outgoing emails starting next week […] all emails sent from your account will include the Support ICE footer element […] this change helps us demonstrate our platform’s civic commitment.” The email adds that it is possible to opt out of this feature, and that “we appreciate your understanding as we implement this platform-wide initiative.”

Lisa Mayr, the CEO of Marigold, which owns Emma, told 404 Media that the company “would never publish anything like this. This is a very common phishing attempt.”

Mayr is right: clients of other email sending services have recently been targeted with similar attacks. In January, programmer Fred Benenson wrote about phishing emails he had received that targeted users of SendGrid, another email marketing service. At least one of the emails Benenson got used the same “Support ICE button” language and had the subject line “ICE Support Initiative.”

“If you’ve been paying any attention at all to US politics, you’ll know how insidiously provocative this would be if it were a real email,” Benenson wrote in a blog post about the email. “This phishing campaign is a fascinating example of how sophisticated social engineering has become. Instead of Nigerian 419 scams, hackers have evolved to carefully craft messages sent to professionals that are designed to exploit the American political consciousness. The opt-out buttons are the trap.”

In SendGrid’s case, Benenson found that the emails looked “real” because they were sent from other SendGrid user accounts. Basically, hackers compromised the account of a SendGrid user and then used that account to send phishing emails using the SendGrid infrastructure. “The emails look real because, technically, they are real SendGrid emails sent via SendGrid’s platform and via a customer’s reputation–they’re just sent by the wrong people and wrong domains,” he wrote.

Besides the ICE-themed phishing emails, Benenson also received an email that said SendGrid was going to add a “pride-themed footer to all emails” and another that said “all emails sent from your account will feature a commemorative theme honoring George Floyd and the Black Lives Matter movement.”

“The political sophistication on display here (BLM, LGBTQ+ rights, ICE, even the Spanish language switch playing on immigration anxieties) suggests someone with a deep understanding of American cultural fault lines,” Benenson wrote.

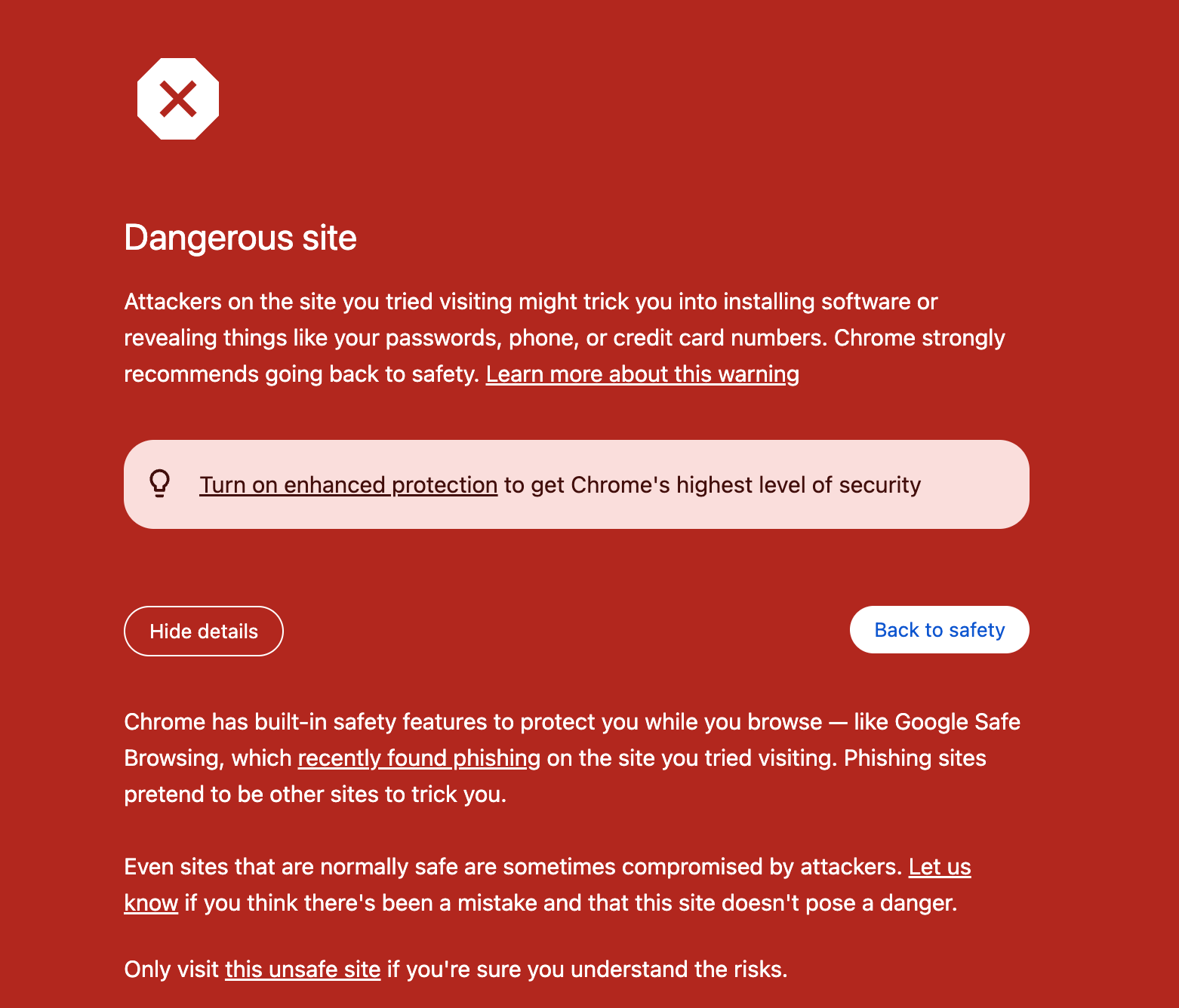

The Emma email was sent via SurveyMonkey from an email address called “[email protected].” Users who clicked a “Settings” button that supposedly would have let them opt out of the feature were sent to a generic-looking, credential-stealing site hosted at app-e2maa.net. By the time 404 Media got the email, Chrome had flagged the site as a “Dangerous site” and was warning users not to visit it.

2026-03-05 00:22:49

This week we discuss our coverage of the U.S.-Israel strikes against Iran, specifically how Polymarket and Kalshi are letting people profit from death, and how Amazon data centers caught fire after missiles hit Dubai. Then Emanuel talks about how AI translations are adding 'hallucinations' to Wikipedia articles. In the subscribers-only section, Sam tells us about a change with Amazon wishlists that may expose your address.

Listen to the weekly podcast on Apple Podcasts, Spotify, or YouTube. Become a paid subscriber for access to this episode's bonus content and to power our journalism. If you become a paid subscriber, check your inbox for an email from our podcast host Transistor for a link to the subscribers-only version! You can also add that subscribers feed to your podcast app of choice and never miss an episode that way. The email should also contain the unlisted, subscribers-only YouTube link for the extended video version. It will also be in the show notes in your podcast player.

0:00 - Intro

1:32 - With Iran War, Kalshi and Polymarket Bet That the Depravity Economy Has No Bottom

29:07 - AI Translations Are Adding Hallucinations To Wikipedia Articles

SUBSCRIBERS' STORY - Amazon Change Means Wishlists Might Expose Your Address

2026-03-04 23:55:56

For a few hours on Tuesday, Polymarket hosted a bet about the possibility of nuclear war in 2026. The market asked the question “Nuclear weapon detonation by …?” and racked up close to a million dollars in trading volume before Polymarket took the unusual step of removing it from its website. Rather than simply closing the bet, Polymarket “archived” it, meaning that no record of it remains. That’s strange, given that many older, already paid-out bets are still on the site.

2026-03-04 22:00:52

Wikipedia editors have implemented new policies and restricted a number of contributors who were paid to use AI to translate existing Wikipedia articles into other languages, after the editors discovered that these translations introduced AI “hallucinations,” or errors, into the resulting articles.

The new restrictions show how Wikipedia editors continue to fight to keep the flood of generative AI across the internet from diminishing the reliability of the world’s largest repository of knowledge. The incident also reveals how even well-intentioned efforts to expand Wikipedia are prone to errors when they rely on generative AI, and how those errors are remedied by Wikipedia’s open governance model.

The issue in this case starts with the Open Knowledge Association (OKA), a non-profit dedicated to improving Wikipedia and other open platforms.

“We do so by providing monthly stipends to full-time contributors and translators,” OKA’s site says. “We leverage AI (Large Language Models) to automate most of the work.”

The problem is that editors started to notice that some of these translations introduced errors to articles. For example, a draft translation of a Wikipedia article about the French noble La Bourdonnaye family cites a book and specific page number when discussing the origin of the family. A Wikipedia editor, Ilyas Lebleu, who goes by Chaotic Enby on Wikipedia, checked that source and found that the specific page of that book “doesn't talk about the La Bourdonnaye family at all.”

“To measure the rate of error, I actually decided to do a spot-check, during the discussion, of the first few translations that were listed, and already spotted a few errors there, so it isn't just a matter of cherry-picked cases,” Lebleu told me. “Some of the articles had swapped sources or added unsourced sentences with no explanation, while 1879 French Senate election added paragraphs sourced from material completely unrelated to what was written!”

As Wikipedia editors looked at more OKA-translated articles, they found more issues.

“Many of the results are very problematic, with a large number of [...] editors who clearly have very poor English, don't read through their work (or are incapable of seeing problems) and don't add links and so on,” a Wikipedia page discussing the OKA translations said. The same page also notes that the copy/paste nature of OKA translators’ work sometimes breaks the formatting of articles.

Wikipedia editors investigated how OKA was operating and found that it was mostly relying on cheap labor from contractors in the Global South, and that these contractors were instructed to copy/paste articles to popular LLMs to produce translations.

For example, a public spreadsheet used by OKA translators to keep track of what articles they’re translating instructs them to “pick an article, copy the lead section into Gemini or chatGPT, then review if some of the suggestions are an improvement to readability. Make edits to the Wiki articles only if the suggestions are an improvement and don't change the meaning of the lead. Do not change the content unless you have checked that what Gemini says is correct!”

Lebleu told me, and other editors have noted in their public on-site discussion of the issue, that these same instructions previously told OKA translators to use Grok, Elon Musk’s LLM, for the same purpose. Grok, which also generates an entirely automated alternative to Wikipedia called Grokipedia, is prone to errors precisely because it does not use humans to vet its output.

“The use of Grok proved controversial, notably given the reasons for which Grok has been in the news recently, and a recent in-house study showed ChatGPT and Claude perform more accurately, leading them to switch a few days ago, although they still recommend Grok as ‘valuable for experienced editors handling complex, template-heavy articles,’” Lebleu told me.

Ultimately, the editors decided to restrict OKA translators who make repeated errors rather than block OKA translations outright.

“OKA translators who have received, within six months, four (correctly applied) warnings about content that fails verification will be blocked without further warning if another example is found,” the Wikipedia editors wrote. “Content added by an OKA translator who is subsequently blocked for failing verification may be presumptively deleted [...] unless an editor in good standing is willing to take responsibility for it.”

A job posting for a “Wikipedia Translator” from OKA offers $397 a month for working up to 40 hours per week. The job listing says translators are expected to publish “5-20 articles per week (depending on size).”

“They leverage machine translation to accelerate the process. We have published over 1500 articles and the number grows every day,” the job posting says.

“Given this precarious status, I am worried that more uncertainty in the translator duties may lead to an overloading of responsibilities, which is worrying as independent contractors do not necessarily have the same protections as paid employees,” Lebleu wrote in the public Wikipedia discussion about OKA.

Jonathan Zimmermann, the founder and president of OKA, who goes by 7804j on Wikipedia, told me that translators are paid hourly, not per article, and that there is no fixed article quota.

“We emphasize quality over speed,” Zimmermann told me in an email. “In fact, some of the problematic cases involved unusually high output relative to time spent, which in retrospect was a warning sign. Those cases were driven by individual enthusiasm and speed rather than institutional pressure.”

Zimmermann told me that “errors absolutely do occur,” but that OKA’s process includes human review and requires translators to check their content against cited sources, and that “senior editors periodically review samples, especially from newer translators.”

“Following the recent discussion, we have strengthened our safeguards,” Zimmermann told me. “We are now rolling out a second, independent LLM review step. Translators must run the completed draft through a separate model using a dedicated comparison prompt designed to identify potential discrepancies, omissions, or inaccuracies relative to the source text. Initial findings suggest this is highly effective at detecting potential issues.”

Zimmermann added that if this method proves insufficient, OKA is considering introducing formal peer review mechanisms.

Using AI to check AI output for errors is itself an error-prone method. For example, we recently reported on an AI-powered private school that used AI to check AI-generated questions for students; internal testing found the system had at least a 10 percent failure rate.

“I agree that using AI to check AI can absolutely fail, and in some contexts it can fail at very high rates. We’re not assuming the secondary model is reliable in isolation,” Zimmermann said. “The key point is that we’re not replacing human verification with automated verification. The second model is a complement to manual review, not a substitute for it.”

“When a coordinated project uses AI tools and operates at scale, it’s going to attract attention. I understand why editors would examine that closely. Ultimately, the outcome of the discussion formalized expectations that are largely aligned with our existing internal policies,” Zimmermann added. “However, these restrictions apply specifically to OKA translators. I would prefer that standards apply equally to everyone, but I also recognize that organized, funded efforts are often held to a higher bar.”