2026-02-18 19:25:00

This is the only article of the week. The 2nd half is premium.

Elon Musk is betting his companies, SpaceX and xAI, on space datacenters.

He believes that, in three years’ time, AI companies won’t have access to enough electricity to power their data centers, so only those who move the data centers to space will be able to continue growing their AIs.

The question becomes: Can you build working datacenters in space at a reasonable price?

As I ran the numbers, I realized that Musk is on to something: Datacenters in space are already in the ballpark of costs of land-based ones, and might soon be cheaper! This article will explain why.

In the process of writing it, I studied dozens of sources, including many space datacenter reports. I also wrote a tweet to gather feedback from the community, and Musk responded to it. That said, as this is my first foray into space datacenters, I’ve almost certainly made mistakes; I just don’t know which ones. However, it doesn’t look like they would change the conclusions. Please point out mistakes if you find them.

At its core, a datacenter on land is pretty simple:

The GPUs1

Some other IT stuff, like memory, radiators to cool the system, connectors, etc.

A source of electricity. If you want your data center to be independent of the grid, you’ll want:

Solar panels to convert light into electricity

Batteries to store the excess electricity from the solar panels during the day and power the datacenters at night

If you want to put this in space, you need to fit that stuff into a rocket.

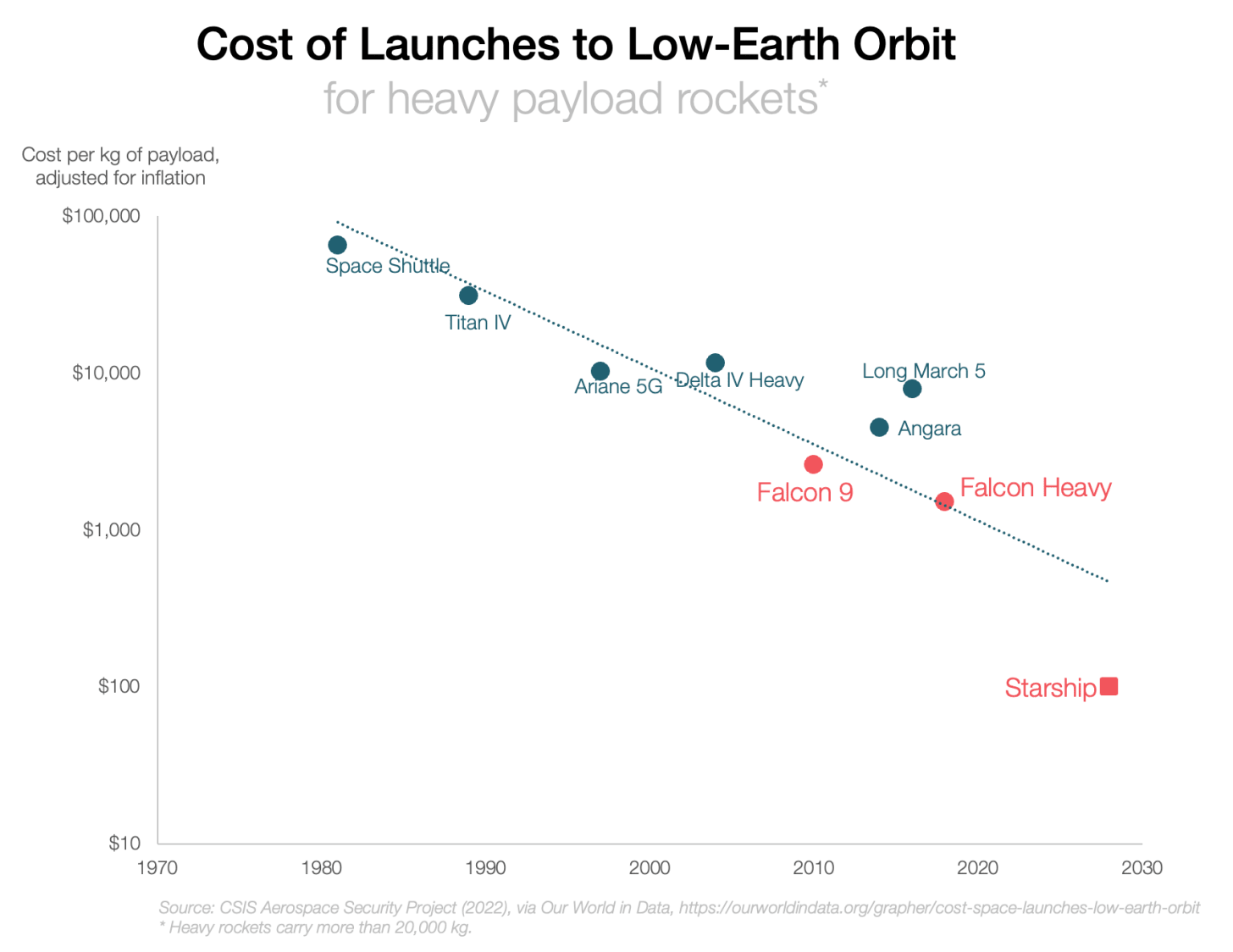

But that’s a lot of stuff, especially the three bulkiest elements: solar panels, batteries, and radiators. SpaceX is building Starship, a rocket that can carry 150-200 tons to space at a cost that should reach ~$100/kg. That’s ~15x cheaper than what they can do today (and 45x cheaper than the competition), but it’s still quite expensive. So you want to strip as much of that weight as you can. How do you do that?

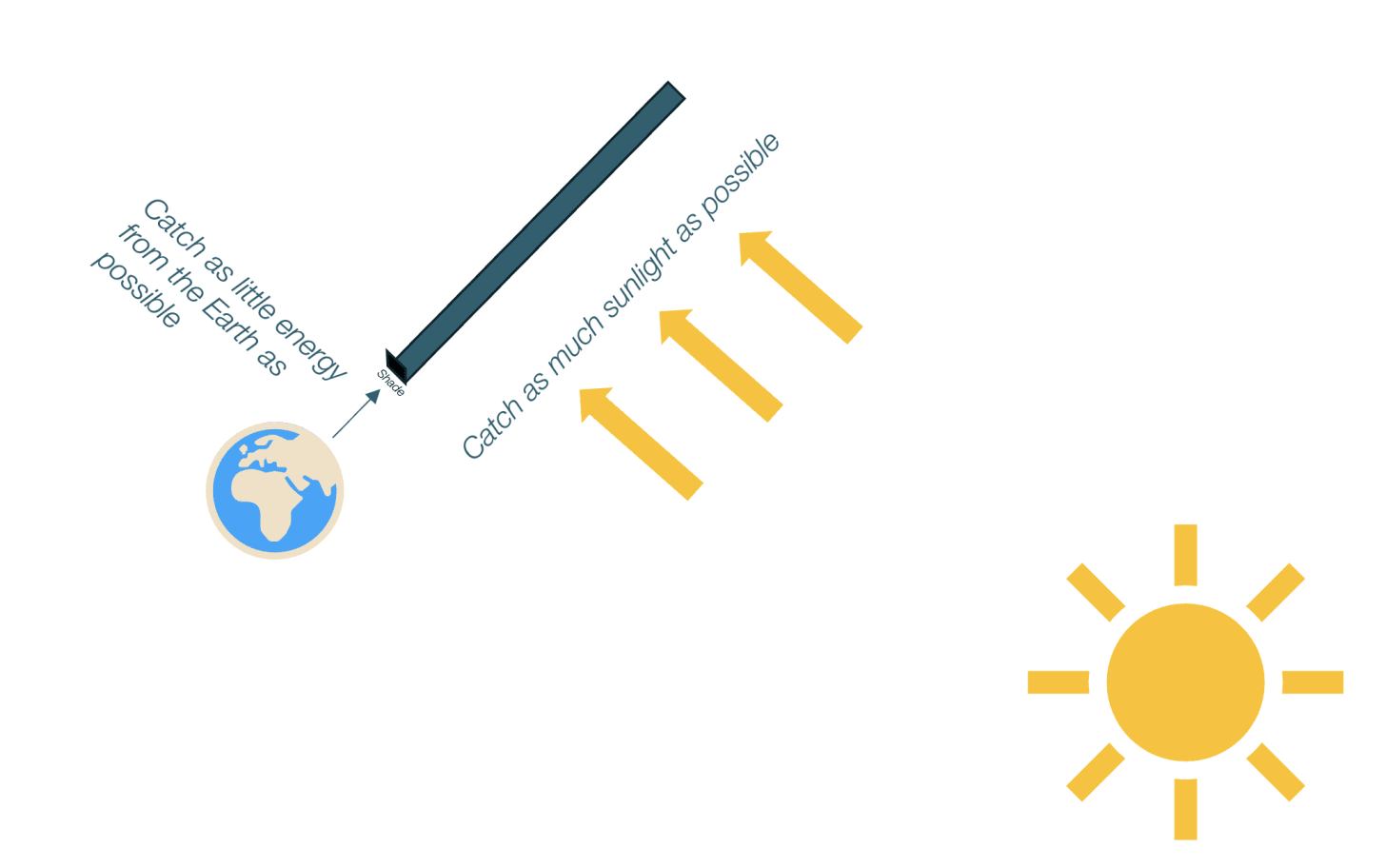

Solar panels produce about 25% of the electricity they could theoretically generate because of the day-night cycle, the seasons, and atmospheric conditions like clouds or storms. But in space, the Sun is always shining. If you have the right orbit, you can keep your solar panels lit all the time, and that provides two benefits.

First, you get ~4x more direct sunlight, because the Sun will be perpendicular to the panels all the time, and there will be no night.

Second, when sunlight crosses the atmosphere, it loses about 30% of its energy.2 Eliminate the atmosphere, and you eliminate this loss.

Combine these factors, and you get ~5x (and up to 9x) more energy from your solar panels than you would on Earth. In other words, you need 5x fewer solar panels (and 5x less panel mass) in space than on Earth.3
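Here is that arithmetic as a minimal Python sketch. It simply multiplies the two gains using the article’s rounded inputs (a 25% ground capacity factor and ~30% atmospheric loss). The two effects overlap a bit, since clouds already lower the ground capacity factor, so treat the result as a ballpark figure rather than a precise multiplier:

```python
# Rough multiplier for energy per solar panel in a permanently sunlit orbit
# versus on the ground. Both inputs are the article's approximate figures.
GROUND_CAPACITY_FACTOR = 0.25  # share of theoretical output a ground panel delivers
ATMOSPHERIC_LOSS = 0.30        # share of sunlight energy lost crossing the atmosphere


def space_to_ground_ratio(capacity_factor: float, atmospheric_loss: float) -> float:
    """Energy collected per panel in orbit divided by energy per panel on Earth."""
    always_lit_gain = 1 / capacity_factor            # no night, seasons, or bad angles
    no_atmosphere_gain = 1 / (1 - atmospheric_loss)  # sunlight arrives undimmed
    return always_lit_gain * no_atmosphere_gain


ratio = space_to_ground_ratio(GROUND_CAPACITY_FACTOR, ATMOSPHERIC_LOSS)
print(f"~{ratio:.1f}x more energy per panel in orbit")  # ~5.7x
```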

If your solar panels are perfectly illuminated, you don’t need to buy and transport the very heavy batteries, which represent over half4 of the total weight. Massive win.

But how do you get your solar panels to always face the Sun?

One way to achieve that is a near-polar orbit that rides the boundary between day and night, called a dawn-dusk sun-synchronous orbit:

The problem with this is that it’s quite expensive to get satellites into these orbits. Normally, rockets are launched from as close to the equator as possible, in order to use the rotation of the Earth to move faster.

But if you send them into a polar orbit, you can’t benefit from that rotational speed, so it’s much less fuel-efficient. It’s easier if your satellite follows an orbit that isn’t too steeply inclined relative to the equator.

The problem is that such a satellite regularly passes through the Earth’s shadow. Another solution is to send the satellite far enough from Earth,5 and slightly out of the equatorial plane, so that it never enters the Earth’s shadow.

The exact orbit will need to optimize for:

100% sunlight

Closest proximity to Earth (to reduce fuel costs of the rocket to reach a distant orbit)

Ease for rockets launched from Starbase to reach it

But if the satellites are far from Earth, isn’t this going to create some latency? Won’t the signal take too long to come back to Earth? Yes, but it doesn’t matter:

Even if the satellites were far away, say at 5,000 km, the signal’s round trip from Earth and back would take only ~33 ms.

For most AI uses, a few milliseconds (or even seconds) of delay doesn’t matter that much. Think about how long some AI tasks take today, from seconds to minutes, and even hours!6
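A quick sanity check on that latency, as a minimal sketch: it counts only light-travel time to a satellite 5,000 km away and back, and ignores routing, queuing, and processing delays, which add more in practice:

```python
# Light-travel time only; routing, queuing, and processing delays are ignored.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458


def round_trip_ms(distance_km: float) -> float:
    """Time for a signal to go from Earth to a satellite and back, in milliseconds."""
    return 2 * distance_km / SPEED_OF_LIGHT_KM_PER_S * 1000


print(f"5,000 km -> {round_trip_ms(5_000):.1f} ms round trip")
```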

OK, we got rid of the batteries (more than half of the weight) and 5xed the output of our solar panels. What else can we eliminate?

Once you’ve disposed of the batteries, the solar array is the biggest source of weight of Starlink satellites today,7 about a third of the total.

But in space, solar panels are much lighter than on Earth. A solar panel on Earth weighs8 about 10 kg/m² or more9, while in space it can weigh as little as 1 kg/m² or less.

That’s because, in orbit, there’s effectively no gravity load (everything is in free fall), and there’s no atmosphere, rain, hail, dust… The panels only need to be lightly structured; they don’t need glass to protect them, aluminum frames, stiff backsheets, sturdy mounting, rails, clamps, grounding…

They do need some coating to protect against intense solar radiation and flares, but if the satellites are close enough to Earth, they’re protected by its magnetic field, so with minimal coating they can achieve failure rates of less than 1% per year.

Musk’s SpaceX (and Tesla!) design and participate in the supply chain of solar panels, so I assume they’ll adapt them as much as possible to their needs.

This is already quite optimized, though, so I assume there’s not too much more to do here.

Now we need to optimize the weight of GPUs, but these are actually not very heavy relative to their cost. To get a sense of this, an NVIDIA GPU today costs ~$25k and weighs from 1.2 – 2 kg, while a fully-loaded system (if you add all the other costs, like memory, cooling, etc) costs as much as $56k and weighs 16 kg.

Sending 16 kg to space today is expensive ($24k), but it won’t be with Starship ($3.2k when it costs $200/kg, half that when it costs $100/kg). And that assumes all the weight of what we use on Earth would be carried to space, which is unlikely. These systems will be streamlined for weight, so that the cost of shipping the GPUs to orbit will be a tiny fraction of the cost of the GPUs. We’ll see in a moment why.
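The launch-cost arithmetic for one 16 kg system, as a minimal sketch. Today’s ~$1,500/kg is implied by the article’s $24k for 16 kg (an inference, not a quoted price), and the $56k system cost is used to compare launch with hardware:

```python
SYSTEM_MASS_KG = 16       # fully loaded system, per the article
SYSTEM_COST_USD = 56_000  # fully loaded system, per the article

# $/kg; the "today" figure is inferred from the article's $24k for 16 kg.
LAUNCH_PRICES_USD_PER_KG = {
    "today": 1_500,
    "Starship at $200/kg": 200,
    "Starship at $100/kg": 100,
}


def launch_cost_usd(mass_kg: float, price_per_kg: float) -> float:
    """Cost to put a given mass in orbit at a flat price per kilogram."""
    return mass_kg * price_per_kg


for label, price in LAUNCH_PRICES_USD_PER_KG.items():
    cost = launch_cost_usd(SYSTEM_MASS_KG, price)
    share = cost / SYSTEM_COST_USD
    print(f"{label:<22} ${cost:>7,.0f}  ({share:.1%} of hardware cost)")
```

Even before any weight optimization, launch drops from roughly 43% of the hardware cost today to a few percent at Starship prices.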

But besides their weight, GPUs face another problem in space: radiation. On Earth, we’re protected from high-energy particles (from the Sun and from cosmic rays) by the atmosphere and our magnetic field, but in space these protections are much weaker, when they exist at all. This is a serious problem for computers in space today, because these particles wreak havoc in electronics. As a result, they need shielding, which is expensive and heavy.

One of the issues is that these particles flip bits and cause havoc in existing systems. For example, imagine that a computer stores the number 1000001000001, which in decimal is 4161. If the first bit is hit by a particle and flips to 0, that number becomes 0000001000001, which in decimal is 65. Imagine that you’re calculating 4161*10 and instead of getting 41,610, you get 650. Every downstream calculation will be monumentally off. Catastrophe! As a result, computers in space today require radiation shielding that adds to their weight.
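The bit flip in that example can be reproduced with an XOR mask, a common way to simulate a single-event upset in software. A minimal sketch with plain Python integers; `flip_bit` is a hypothetical helper, not part of any radiation-testing library:

```python
def flip_bit(value: int, bit: int) -> int:
    """Return value with one bit inverted (bit 0 is the least significant)."""
    return value ^ (1 << bit)


original = 0b1000001000001          # 4161 in decimal
corrupted = flip_bit(original, 12)  # the leftmost of the 13 bits flips to 0

print(original, corrupted)            # 4161 65
print(original * 10, corrupted * 10)  # 41610 650
```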

But this is not how AI works. AIs are not deterministic; they’re probabilistic. AIs have massive files with billions of parameters that each add a tiny amount to the final value. Querying a GPU is kind of like taking a poll of millions of people. Another way to think about it: In your brain, connections between neurons are constantly dying and forming. Any single one of them is not important at all. They can be severed, and everything will continue as normal.
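A toy illustration of the "poll" intuition, under loud assumptions: the output is just an average of many small weighted contributions (not a real neural network), and the corruption only flips one weight’s sign. In real hardware, a flip in a floating-point exponent bit can turn a small value into a huge one, so this sketch shows the favorable case, not a guarantee. `weighted_average` is a hypothetical helper:

```python
import random

# Toy model: one output built from many tiny weighted contributions,
# a rough stand-in for the "poll of millions" analogy. Not a real neural network.


def weighted_average(weights: list[float], inputs: list[float]) -> float:
    return sum(w * x for w, x in zip(weights, inputs)) / len(weights)


random.seed(42)
N = 100_000
weights = [random.uniform(-1, 1) for _ in range(N)]
inputs = [random.uniform(0, 1) for _ in range(N)]

baseline = weighted_average(weights, inputs)

corrupted = weights.copy()
corrupted[0] = -corrupted[0]  # one "flipped" parameter (here: its sign)
shift = abs(weighted_average(corrupted, inputs) - baseline)

# One weight moves the output by at most 2 * |w| * |x| / N, i.e. at most 2 / N here.
print(f"baseline={baseline:.6f}, shift from one corrupted weight={shift:.2e}")
```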

The result is that GPUs don’t need as much radiation shielding as traditional computers, so this added weight can be avoided.

The rest of the software can also be adapted to this situation, tolerating random errors instead of assuming perfect calculations all the time.

Some systems, like the memory, will still need shielding, but if you’re limiting the shielding to only a few small parts of the satellite, the cost in weight will be tiny.

With this type of treatment, Google believes their chips can last 5 years in space—about the lifetime of a datacenter:10

We tested Trillium, Google’s TPU, in a proton beam to test for impact from total ionizing dose (TID) and single event effects (SEEs).

The results were promising. While the High Bandwidth Memory (HBM) subsystems were the most sensitive component, they only began showing irregularities after a cumulative dose of 2,000 rad(Si) — nearly three times the expected (shielded) five year mission dose of 750 rad(Si). No hard failures were attributable to TID up to the maximum tested dose of 15 krad(Si) on a single chip, indicating that Trillium TPUs are surprisingly radiation-hard for space applications.—Google

GPUs have a high failure rate, but you can’t swap them in space. So what are you supposed to do?

One of the measures will be to make them more tolerant to heat (we’ll talk about this next). This should reduce failure rates, especially in space.

But aside from that, most failures happen at the beginning of a GPU’s life, so if you test the GPUs on land first, you should be able to reduce the failure rate dramatically, so that your average GPU lasts ~5 years.

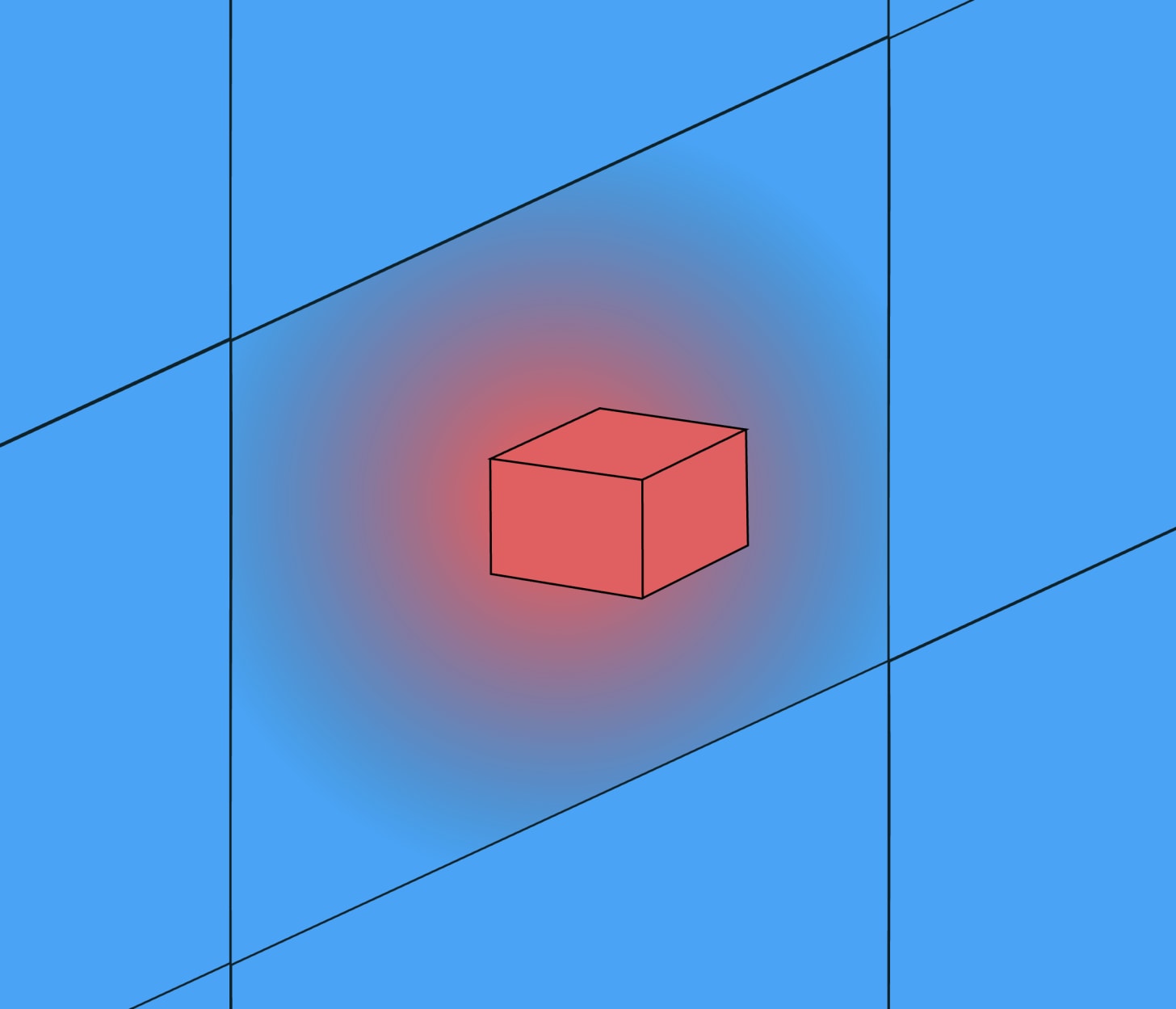

And now we get to radiators.

Before I started this article, this was my main concern. Radiators on Earth are very heavy, because you have the radiator, the fans, the cooling liquids… And in space, it’s even worse, because you can’t use the environment to cool off your machines! So I expected these systems to be huge, cumbersome, and prohibitively heavy.

But here’s the insight that blew my mind: Just using the front and back of the solar panels as radiators is enough to cool off the entire system! How is that possible? Here is the breakdown.

There are three ways of cooling something:

Conduction: Heat is transferred through a material, from a hot point to a cooler one. But in space your satellites are not connected to anything, so they can’t dissipate any heat out this way.

Convection: That’s like wind or water in contact with your surface, extracting heat from it. Same issue as before, there’s no wind or water in space.

Radiation: Like the heat from a lightbulb, a toaster, or the Sun: When something is hot, it radiates heat out. This is the only method you can use in space.

But it turns out that radiation is extremely powerful, because the heat it emits grows with the 4th power of temperature: $T^4$!

The actual formula is $P = \varepsilon \sigma A T^4$, but it’s pretty simple. The first variable ($\varepsilon$, the emissivity) is how good your surface is at emitting heat. A good radiator will be close to 1. The second ($\sigma$) is just a constant (the Stefan–Boltzmann constant). The third ($A$) is the area, but we’re going to look at this per square meter of solar panels, so it’s 1, too. The last item, $T$, is the absolute temperature (in kelvin), and it’s the one that matters. For a given area that is efficient at emitting heat, it’s the only component that has a massive impact.

To give you a sense of how powerful this is, if you move from emitting radiation from 20ºC to 100ºC (68ºF to 212ºF), the heat emitted through radiation is going to increase by 2.6x.11
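The 2.6x figure can be checked in a few lines. The key assumption is that temperatures go into the formula in kelvin; plugging in Celsius would wrongly give (100/20)⁴ = 625x. A minimal sketch:

```python
def to_kelvin(celsius: float) -> float:
    return celsius + 273.15


def radiated_power_ratio(hot_c: float, cold_c: float) -> float:
    """How many times more heat a surface radiates at hot_c than at cold_c.

    Same area and emissivity on both sides, so only the T**4 term changes.
    """
    return (to_kelvin(hot_c) / to_kelvin(cold_c)) ** 4


print(f"{radiated_power_ratio(100, 20):.1f}x")  # 2.6x
```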

Why is it raised to the power of four? It was never explained to me in engineering school, but according to ChatGPT, it’s because the typical energy of each emitted photon grows in proportion to the temperature (one T), while the number of photons emitted grows with the cube of the temperature, one factor for each of the three dimensions (three more Ts), for a total of four Ts.

Anyway, the point is that you can emit a lot of energy through radiation, and your solar panels are enough to cool the entire thing.

The way the datacenters work is that the solar panels receive energy from the Sun: about 1,361 W/m². They reflect a bit less than 10% of that, so let’s say they absorb 1,225 W/m². They convert about 20% of the incoming sunlight into electricity (so ~272 W/m²), and the rest becomes heat. The electricity is sent to the GPUs, and in the process, that electricity becomes heat, too. The heat of the solar panels and GPUs must then be radiated away.

For the system to maintain a steady temperature, it must lose as much energy as it takes in, so the 1,225 W it absorbs per square meter must be radiated out. This happens through both the front and the back surface of the solar panels. So you have:12

$$1{,}225\ \mathrm{W/m^2} = 2\,\varepsilon\,\sigma\,T^4$$

That gives you T=60ºC (334 K, 140ºF)! Now this is a bit idealized. In reality, the satellites will also receive heat from the Earth, so they’ll be a bit hotter. But there are ways to minimize that heat:

More importantly, GPUs are normally cooled with heavy radiators and liquids, which xAI and SpaceX want to avoid, so they won’t perfectly dissipate their heat and will run at a higher temperature than on Earth (or the solar panels in space). Musk believes they should run at 97ºC (207ºF). For comparison, GPUs normally run at ~80°C, with a max around 88 – 93°C, but they can tolerate ~90-105°C in datacenters, so this is not too far off. Industrial- and military-grade silicon commonly stands up to ~125°C. The GPUs just need to be optimized to run at a slightly higher temperature than they’re used to on Earth.

This is another reason why it’s so important that SpaceX and xAI have merged: xAI is designing its own chips and will now start adapting them to these requirements.

The heat generated by the GPUs must be transferred out. It’s unclear whether this will be through radiators on the GPUs themselves, or by conducting heat back to the solar panels to be dissipated there. In any case, there will be some mass associated with that, but it doesn’t look like it will be much.
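The ~60ºC panel temperature mentioned above comes from an energy balance: per square meter, the 1,225 W absorbed must equal what the front and back faces radiate, 2·ε·σ·T⁴, so T = (1,225 / (2εσ))^(1/4). The article doesn’t give the panels’ emissivity ε, so the values below are assumptions: ε = 1 gives about 322 K (49ºC), and ε between 0.85 and 0.9 gives roughly 331–336 K, bracketing the article’s 334 K. Like the article’s estimate, this sketch ignores heat received from the Earth:

```python
SIGMA = 5.670374419e-8  # Stefan–Boltzmann constant, W/(m^2·K^4)


def equilibrium_temp_k(absorbed_w_per_m2: float, emissivity: float, sides: int = 2) -> float:
    """Temperature at which a flat plate radiates (from `sides` faces) all it absorbs.

    Solves absorbed = sides * emissivity * SIGMA * T**4 for T, per square meter.
    Ignores heat from the Earth and any temperature differences inside the plate.
    """
    return (absorbed_w_per_m2 / (sides * emissivity * SIGMA)) ** 0.25


ABSORBED = 1_225  # W/m^2 absorbed by the panel, per the article

for emissivity in (1.0, 0.9, 0.85):  # assumed values; the article does not give one
    t_k = equilibrium_temp_k(ABSORBED, emissivity)
    print(f"emissivity {emissivity:.2f}: {t_k:.0f} K = {t_k - 273.15:.0f} °C")
```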

The bus is the satellite’s platform: the structure and subsystems that carry and support the payload, i.e. whatever the satellite is actually in space to do.

In Starlink, the bus includes communication devices like antennas, and thrusters to maneuver. The datacenters would still need the thrusters, but the need for communication devices would be tiny. I’m not sure whether the GPUs would be lodged there or distributed behind the solar panels for heat dissipation.

OK, so now we have a broad sense of what these space datacenters should look like:

Lightweight solar panels to gather electricity

Use their front and back for cooling

Add the GPUs and other computing systems, including the bus. Some of these parts will be shielded from solar radiation, others won’t

The system should run below 100ºC

That’s it! No dedicated radiators, no more heavy equipment!

Is this going to be cheaper than building datacenters on Earth? To answer that, let’s see what a real one would look like, and compare it to one on Earth.

2026-02-12 21:18:16

Over the last few weeks, massive changes have happened in Musk Industries:

Musk is saying he’s going to build datacenters in space

SpaceX and xAI are merging

The resulting company is seeking an IPO

SpaceX aims to build a base on the Moon instead of Mars

These facts are interconnected, in a way that isn’t obvious. Today, we’re going to make that explicit.

SpaceX’s valuation has exploded.

Why? Because its revenue has grown proportionally.

Where does that revenue come from? Mostly from Starlink, a global service of direct telecommunications via satellite.

This is SpaceX’s revenue breakdown:

Its number of subscribers has been growing exponentially:

The service is printing cash—about $12B in 2025, and growing fast.

How is this business growing so fast? Because of this:

There’s been an explosion in stuff being sent to space, and of course the vast majority is from SpaceX:

And that’s been possible because of this:

SpaceX’s rockets can carry payload to space much more cheaply than the competition.

So hold on. Why is a rocket company printing money as a telecom company?

In Starship Will Change Humanity Soon, we explained how SpaceX was crashing the cost of sending stuff to orbit, and dramatically increasing how much stuff could be sent to space.

The thing is that people take a long time to react to this type of change.

We’ve known about electricity since the 1600s, and electricity generation was developed in the 1800s. But electric companies sat idle with their built capacity. For example, a London electricity company had a load factor1 of only ~10% in the early 1900s. Why? People didn’t know how to use this electricity!

So electricity companies started load-building: figuring out uses for electricity and pushing people to adopt them.

The first big application was the light bulb, but it didn’t use much electricity, and the electricity it did use was in the evenings. So electricity entrepreneurs tried to get daytime use. They pushed for:

Electric streetcars

Industrial motors

Household appliances like refrigerators and irons

And more

Sometimes, the push was pretty direct, as electric companies owned electric car companies, electric railway companies, radio stations… It’s not a coincidence that General Electric was famous for both electricity generation and appliances.

This is not the only example of a new general technology creating overcapacity, waiting for society to catch up by creating demand, and doing everything it could to accelerate that demand in the meantime. The same thing happened with railroads and broadband.

Early on, many railroads remained barely used, and lots of railroad companies went bankrupt. To avoid this, many became land management and immigration companies! In the US West, many railroads received large land grants (or bought huge tracts), so they were simultaneously selling transportation and the land that would generate the transportation demand. They created immigration bureaus, sometimes with offices in Europe, explicitly to seed farms and towns that would ship freight and buy tickets. They created towns, frequently integrated with water transportation companies to be multimodal.

With the advent of the Internet, broadband companies laid out a lot of fiber, but in 2000 only 7% of it was lit in the US. This is why AOL (internet access) merged with Time Warner (content), or why telecom operators around the world offered (and many still offer) content bundles.

The same problem has been happening with SpaceX. Its increase in capacity was so fast that society is not adapted to it yet. So Musk faced a problem: Either he created demand for his new service, or he would go bankrupt, like so many electricity, railroad, and broadband companies before him.

He found Starlink.

The way electricity companies found light, streetcars, industrial electric motors, and appliances.

The way railroads created new towns, farms, and immigrants.

The way broadband companies bundled content.

Here’s the problem with Starlink: Although it prints money, we only need so many telecom satellites to have full Earth coverage.

Starlink currently has a license for 15k satellites, and wants as many as ~30k. Assuming a five-year lifetime, that’s 3k–6k satellites per year. At ~1 ton per satellite, that’s 3,000–6,000 tons of payload per year. Falcon 9 can deliver 22 t to LEO, so that’s ~140–270 Falcon 9 flights per year. SpaceX is already at 170 launches per year. Once Starship is ready, just 30 flights per year will be enough to cover the entire annual need for Starlink.
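The same Starlink arithmetic as a minimal sketch, using the article’s assumptions (15k–30k satellites, a five-year lifetime, ~1 t per satellite, 22 t per Falcon 9, ~200 t per Starship). `flights_per_year` is a hypothetical helper that only counts replacement launches:

```python
def flights_per_year(constellation_size: int, lifetime_years: float,
                     satellite_mass_t: float, payload_per_flight_t: float) -> float:
    """Launches needed each year just to replace satellites as they retire."""
    satellites_per_year = constellation_size / lifetime_years
    mass_per_year_t = satellites_per_year * satellite_mass_t
    return mass_per_year_t / payload_per_flight_t


# Article's assumptions: 15k-30k satellites, 5-year life, ~1 t each.
for size in (15_000, 30_000):
    falcon9 = flights_per_year(size, 5, 1.0, 22)    # ~22 t to LEO per Falcon 9
    starship = flights_per_year(size, 5, 1.0, 200)  # ~200 t per Starship
    print(f"{size:,} satellites: ~{falcon9:.0f} Falcon 9 or ~{starship:.0f} Starship flights/yr")
```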

So if SpaceX succeeds with Starship, it will have far more capacity to bring mass to space than Starlink needs, and that idle capacity could make the entire program unviable.

If SpaceX wants more, bigger rockets, it needs to manufacture demand. It needs another Starlink, another lightbulb, industrial electric motors, streetcars, appliances…

Musk thinks that’s AI datacenters.

Musk has another company: Twitter, which he rebranded as X and later folded into xAI when he decided to join the race to AGI (artificial general intelligence). And as you know, I don’t think AI is a bubble: All the AI corporations are hoping to reach AGI, so they’ll likely keep increasing their AI investments every year for at least a few more years. The demand for compute is insatiable.

In this race, each player obsesses about eliminating every bottleneck to growth. Until now, it’s been GPUs, so companies like TSMC and NVIDIA are among the most valuable ones in the world. But for how long is that going to be true? TSMC and NVIDIA are trying to increase their productivity as much as they can, as fast as they can. At some point, they won’t be the bottleneck anymore. When will we hit a different one? What will be the next one?

With so much money in play, the most likely bottleneck will be one that doesn’t respond to private capital. It will be politics. And what do GPUs need that depends on politics? Energy.

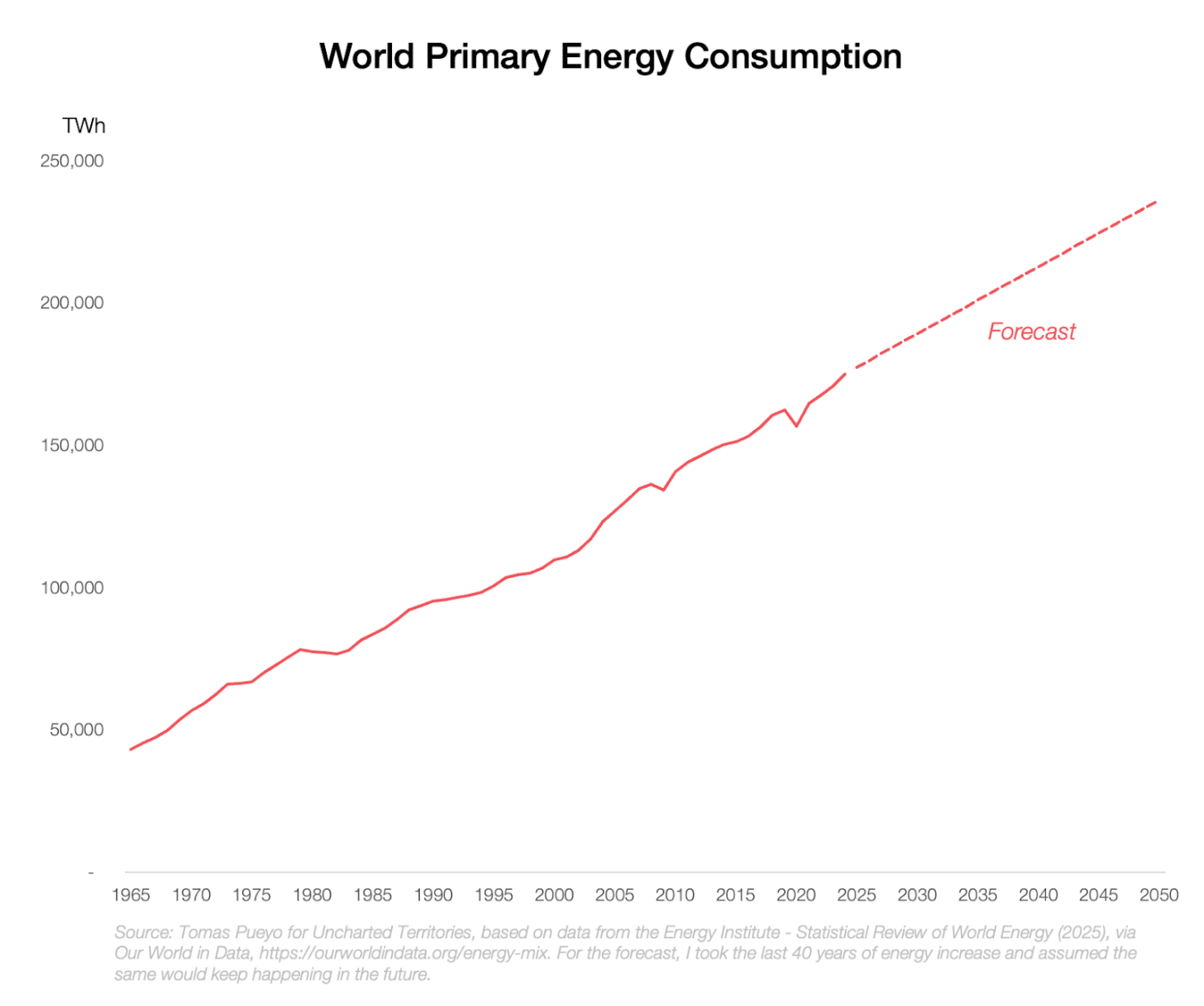

Energy generally requires lots of permits, and is affected by political decisions, like the massive tariffs Trump has imposed on Chinese solar panels. So Musk believes AI companies won’t be able to grow their supply of energy fast enough to feed their datacenters. His bet is that, within a few years, energy will be the limiting factor to grow intelligence.

His answer is to go to where there are no permits—space. He believes that in three years it will be easier to grow intelligence in space than on Earth. Why?

In space, energy from sunlight is not lost from passing through the atmosphere to reach your solar panels. There are no clouds, seasons, dust, or atmospheric damage to reduce efficiency. There are no nights2, so no need for batteries. And more importantly, no need for permits.

The bet is that the cost to send the panels and datacenters to space will shrink while the cost to build them on the Earth will increase so much (including bans) that, in three years, the cheapest will be space.

Is this true? I don’t know, I’ll look into the numbers in an article in the coming weeks.

But Musk trusts it is. So the plan would be to shoot solar panels and GPUs to space… using the spare capacity SpaceX is about to have!

Musk believes this kills two birds with one stone:

xAI gets the energy it needs to feed the compute to win the AGI race

SpaceX gets total utilization of its rockets for the foreseeable future

That’s not enough though. There’s another problem xAI had to solve, and this merger solves it too.

xAI’s new datacenter, Colossus 2, which will launch its new AI in 2026, will cost $44B. But spending on datacenters is projected to double every year, so by 2030, xAI would need to spend $700B per year, assuming one such huge training release per year. But the company only makes ~$0.5B–$3B per year. Do you see the mismatch between spending and revenue? Yeah, xAI is burning $1B per month. xAI recently raised $20B, but at this pace, that will only last a year and a half. What then?
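The spending projection and cash runway in that paragraph, as a minimal sketch. It assumes, as the article does, that Colossus 2’s $44B stands for 2026 spending and that spending doubles every year; the runway divides the $20B raised by a $1B monthly burn and ignores revenue:

```python
def projected_spend_b(base_spend_b: float, base_year: int, year: int,
                      growth: float = 2.0) -> float:
    """Annual datacenter spend (in $B) if it multiplies by `growth` every year."""
    return base_spend_b * growth ** (year - base_year)


for year in range(2026, 2031):
    print(year, f"${projected_spend_b(44, 2026, year):,.0f}B")

cash_raised_b = 20     # recent raise, per the article
burn_per_month_b = 1   # monthly burn, per the article
print(f"Runway: {cash_raised_b / burn_per_month_b:.0f} months")
```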

Musk needed a way to bankroll the build-up of datacenters, which according to him need to be in space, so he decided to merge SpaceX with xAI. That way, the SpaceX cash can fund xAI’s AGI run.

But where does the SpaceX cash come from? One way is Starlink revenue, which is estimated to grow to $150B by 2030, with ~$100B of free cash flow by then.

This revenue growth is expected not just from satellite wifi, but through direct-to-phone communications.

But you can only use Starlink revenue for xAI datacenters if the two companies become one. So that’s why SpaceX bought xAI.

Alas, Starlink revenue is not growing as fast as xAI’s need for cash for datacenters right now. So where else can SpaceX + xAI get cash?

From you.

Remember how the SpaceX valuation is going stratospheric? Well, Musk has decided he can tap into that. He will place SpaceX into the public market and raise some money, which he’ll use to fund xAI datacenters, which he’ll send to space to get the electricity he needs because, according to him, nobody can get it on Earth.

In this process, analysts estimate SpaceX might be able to raise $50B or more, at a $1.5T valuation. My guess is that he hopes this money, along with an increase in Starlink and xAI revenue, will be enough to cover xAI’s cash needs for a few months or years. By then, Starship should be ready to bring solar panels, GPUs, and datacenters to space while the Earth is stuck in energy permitland.

In other words, if you participate in that IPO, you’re making a bet that one or more of these is true:

Starlink revenue will keep growing stratospherically

xAI revenue will start picking up and beating its competitors, OpenAI and Anthropic, in an exponential trajectory

Energy on Earth is enough of a bottleneck that the only way to win the AI war is by going to space

AGI is around the corner, and there’s a huge advantage to those who can reach it first

So what’s up with the Moon?

Since its inception, SpaceX’s mission has been to make humanity multiplanetary.

And the concrete, proximate, inspiring goal was to settle Mars.

But now, at the same time as SpaceX merges with xAI and they IPO, Musk suddenly pivots to the Moon:

Musk has added details on this:

So the official reading is that SpaceX needs to iterate much faster on landing on another celestial body, building a habitat there, industries, and resupplying them.

But why go to the Moon anyway? If the goal is to build datacenters in space, what’s the point of the Moon?

Eventually, it can work as a place to produce solar panels and maybe chips, because the Moon has plenty of the main elements needed (aluminium and silicon, among others). But that will take muuuch longer than the few years we might need to get to AGI. So what’s the point of mentioning this pivot now?

Maybe the IPO.

I shared in the past that there’s no economic reason to go to Mars. It would be a passion project, costing ~$500B. An amazing, absolutely worthwhile objective, but a very expensive passion project nonetheless. If Musk were to die, that project would likely not happen soon.

It’s OK to have a private company to fund your passion project, but it’s a tougher pill to swallow for a public company. Imagine the roadshow:

INVESTOR: In a few years, how will future cash flow be used?

MUSK: You will see none of it, it will all be plugged into colonizing Mars.

Mars can only be defended as a money sink. But not the Moon.

Remember this graph?

We focused on the Starlink revenue, but the launch revenue is also there, and part of it comes from NASA for missions to the Moon. Specifically, NASA has already committed to pay SpaceX $4B to send astronauts to the Moon, with additional funding for other Moon missions.

So the reality is that SpaceX was already going to go to the Moon profitably, while Mars has already been postponed for over a decade and has no path to generating revenue today, something a public company would have to justify much more carefully. Now that Musk has decided to merge SpaceX with xAI, the more urgent problem has become steering humanity through the AI singularity.

So this is why Musk merged SpaceX and xAI:

SpaceX needs cargo for its Starship, or it won’t be able to develop it.

Datacenters in space might be it, provided that energy is the key constraint on Earth.

xAI needs cash to buy these datacenters.

Musk is thus vertically integrating the two companies: the supplier (SpaceX) is funding the customer (xAI).

This allows Musk to fund the race to AGI, and maybe win if indeed the limiting factor is energy.

In the meantime, Mars could cost the merged company a lot, both as a distraction and a harder pill to swallow for investors. So Musk made it official that, in the foreseeable future, SpaceX will focus on the Moon, which makes more money (through NASA), allows for faster iteration for Starship development (which will help for datacenters), and might even help Mars colonization by testing it nearby first.

And that’s why all these events are connected.

But throughout all this, there’s a more subtle yet much more important thread we should pull. Did you notice what SpaceX’s mission was?

To make humanity multiplanetary.

Was.

Musk has shifted it to: Extend consciousness and life to the stars.

Consciousness doesn’t require life.

AI can be conscious.

Musk realizes that the consciousness that will conquer the universe is artificial.

And maybe, if we’re lucky, it will bring us along on the journey.

Making that “maybe” more likely is the bet of SpaceX + xAI.

Is energy really the next bottleneck?

Is it really going to be cheaper to build AI datacenters in space?

Will we really have a base on the Moon? What are the problems with that?

Load factor: how much electricity is actually provided vs. how much could be provided at a maximum.

No nights: in the right orbit, far enough from the Earth.

2026-02-10 22:00:57

As demand for oil and gas dries up, the countries that most depend on their sale will have to reinvent themselves. But how successful have countries been in doing this?

Unfortunately, there aren’t that many examples in history, because few resources in history have generated as much wealth as oil and gas, so few countries have depended so much on single resources, and hence few have faced a total collapse of their income due to a resource crash. But there are a few. Today, we’re going to review the ones I could identify, and we’re going to assess which countries are most exposed to the oil & gas drop today.

We talked about Dubai in this article, and unfortunately, it’s not a great example for other oil countries: Dubai was a trade port city decades before it was an oil city, and it used the oil exclusively to prop up its position as a trading hub—successfully. Few countries have been able to pull this off.

We explained this case in depth here.

Spain sourced 80% of the world’s silver for centuries. Unfortunately, it wasted this money on expensive wars that led nowhere. The Spanish monarchy didn’t improve as much as other European governments between 1500 and 1800, so governance was terrible in Spain by comparison. Prices in Spain rose 3x more than in the rest of Europe.

Its silver prevented Spain from developing other industries: Inflation had increased prices, making labor very expensive, which in turn made Spain’s manufacturing expensive compared to its European competitors. This led to a decrease of exports and an increase of imports. Herding, agriculture, industry, and trade all shrank, and Spain’s GDP per capita ended up 40% lower than it would have been without silver.

Spain went from being the biggest empire in the world in the 1500s and 1600s to losing it all in the 1800s, and never fully recovering. Today, even within Europe, its economy is secondary to those of countries like France, Germany, the UK, or Italy.

Can you guess when Nauru ran out of phosphates?

Nauru is a Pacific island.

The outer edge is made up of trees, beaches, a road, and the airport. But if you zoom in on any part of the interior, this is what you see:

Zooming further in:

These are limestone pinnacles. They’re worthless, and that’s why they’re here. They’re the result of old corals, on which birds perched for millions of years. Their poop accumulated, and with rain and wind it filled all the space between them, and then kept accumulating above.

This solidified poop, called guano, is one of the richest sources of phosphate in the world—one of the three key elements of fertilizer. So for a century, it was mined, first by the British / Australians / New Zealanders, and after Nauru’s independence in 1968, by its own government.

Soon after, the guano began running out, so by the early 2000s GDP per capita was 8x lower than at its peak.

Did you see the uptick in GDP per capita in the early 2010s? That’s because of the immigration detention center that Australia paid to put on the island.

That, too, is ending, and as a result Nauru’s economy is plunging again.

Not a great precedent.

But islands are by nature not very diversified, while countries can be. So do we have examples of entire countries that suffered from the loss of a resource? We’re now going to explore Peru, Chile, and Yemen.

2026-02-06 21:04:21

What will happen to petro-states after oil demand dries up?

How can countries be successful in the 21st Century?

Dubai answers both questions, but not the way I thought before I visited the city last year.

My grandfather rode a camel; my father rode a camel. I ride a Mercedes. My son rides a Land Rover, and my grandson will ride a Land Rover. But his son will ride a camel.—Sheikh Rashid bin Saeed Al Maktoum, founding father of the UAE and ruler of Dubai.

Dubai faced an existential crisis.

In 1900, it was a village on a coastal creek in the middle of the desert and hadn’t changed much for centuries.

By the 1950s, it was still a small port city.

Then, it found oil.

But not much. The rulers knew it wouldn’t last long.

If Dubai was going to be anything, they had to act fast.

They had to use this oil as intelligently as possible.

That was the vision of Sheikh Rashid bin Saeed Al Maktoum, and he succeeded beyond his wildest dreams.

Today, Dubai is not only a bustling city. It’s one of the most dynamic city-states on Earth, and an example of what can be done to fight the curse of dwindling oil revenues.

This is how I see Dubai after studying it and visiting it: How it started, how it got where it is today, and what lessons others can learn from it.

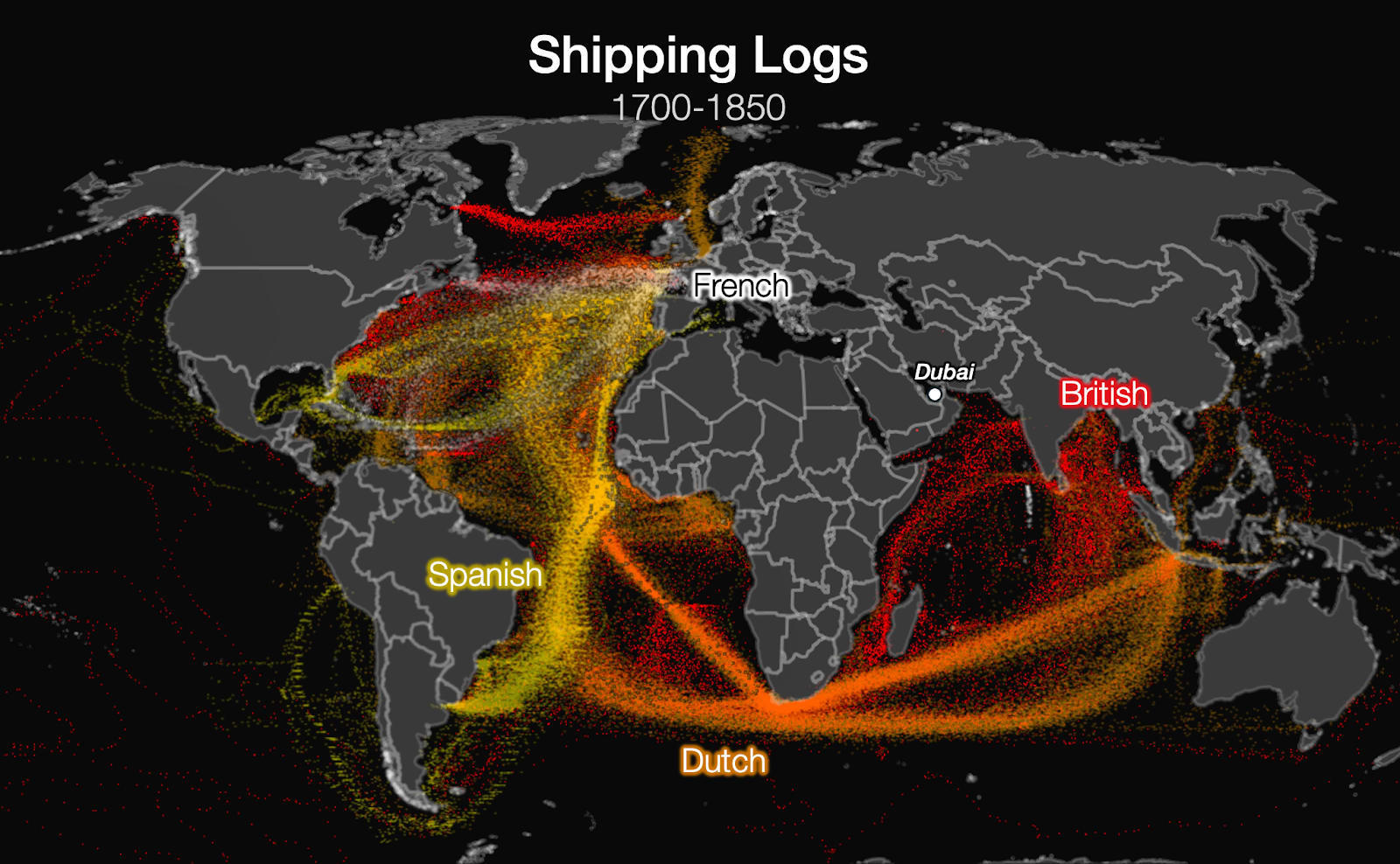

Dubai is one of the United Arab Emirates, on the Persian Gulf.

Dubai is the second biggest of the seven emirates of the United Arab Emirates.

But it has the biggest city.

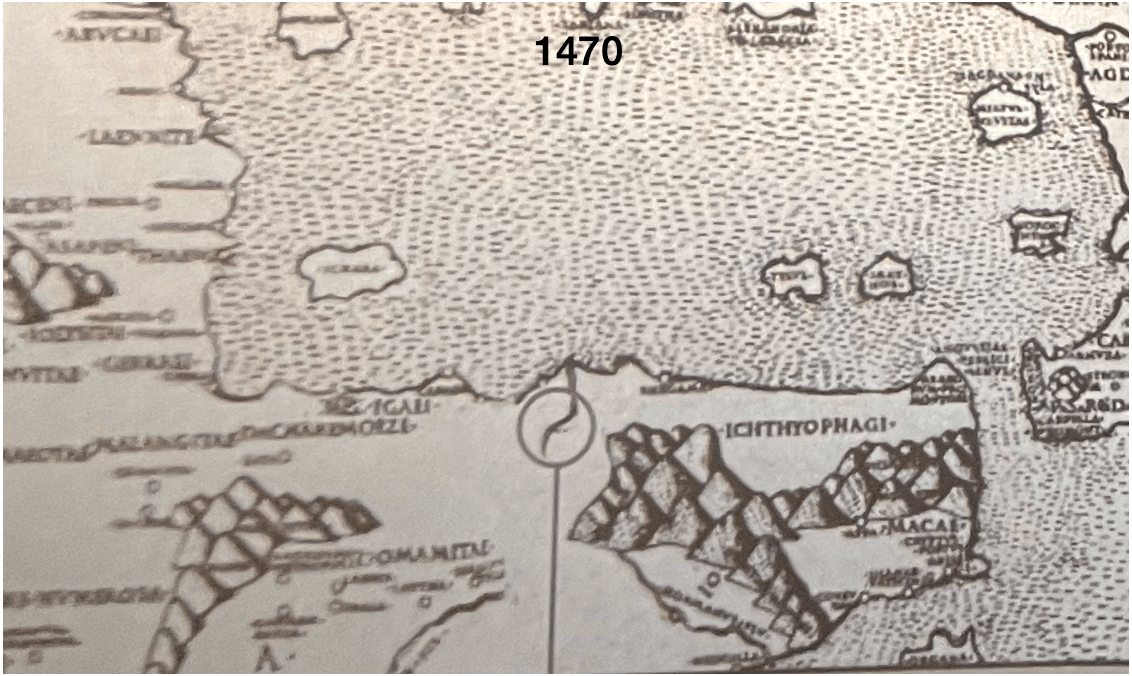

It’s on one of the most ancient trade routes.

Unfortunately, Dubai mostly saw ships pass by, as it’s on desert land, leading nowhere. It was never a crossroads for anything.

As a result, it was not even a minor trade outpost at the height of Muslim power.

That did not improve with the Age of Discovery and its more Eurocentric trade routes, which completely bypassed the Persian Gulf.

The region didn’t have that much going on in that period. The little trade that went on suffered from pirate raids, so Dubai built a fort in the late 1700s.

In the 1800s, the region became a British Protectorate: It was too poor for the UK to turn into a full-on colony, so the Brits promised to protect it in exchange for its help fighting piracy.

So for centuries, the only remarkable economic activity in Dubai, and the Persian Gulf in general, was the pearl industry.

The Persian Gulf is a very shallow sea, about 35 m deep on average.1 In reality, it’s just the underwater continuation of Mesopotamia. They are both depressions caused by the weight of the Zagros Mountains to their northeast.

And this part of the world has lots of sunlight and little rainfall. This created a Goldilocks environment for oysters and their pearls:

Shallow waters mean sunlight reaches the bottom, and there’s a lot of plankton that the oysters can eat.

They like warm temperatures.

No rain means few rivers, so little mud in suspension in the water to clog the oysters’ gills.

These conditions have persisted for millions of years, so the shells of trillions of animals have accumulated, forming a carbonate bed that oysters can use to build their own shells. This carbonate has not been diluted by sand from rivers, and with all this carbonate concentration, the water is alkaline, facilitating shell creation.

The warm and salty environment is ideal for parasites. They penetrate the oyster, which defends itself by surrounding the parasite with nacre, forming a pearl.2

The oyster beds are also shallow enough for divers to reach them without any special breathing equipment.

This is why the entire Persian Gulf was the world’s biggest producer of pearls.

That industry was destroyed in the 1930s, after the Japanese learned to cultivate pearls by artificially inserting a small nucleus into the oyster, which then covers it in nacre. The Great Depression that started in 1929 finished off the industry. Only fishing was left.

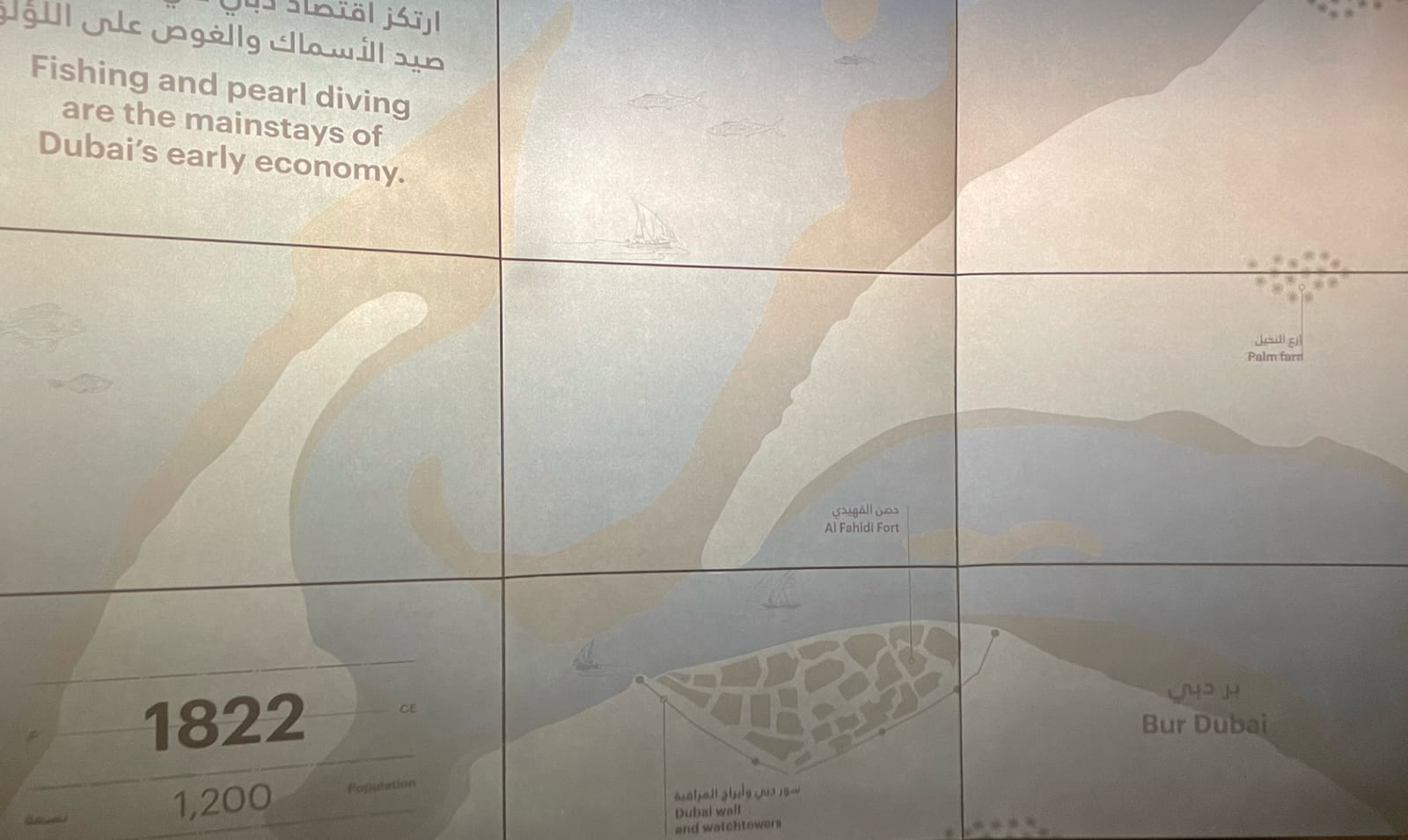

So Dubai was just a fishing village well into the 1950s:

But it had one thing that made it special: its creek.

The creek appears in ancient maps, showing how salient it was.

A creek provides protection for ships from sea storms and pirates, so Dubai could theoretically be a port. But how do you compete with all the other ports in the region, when you don’t have special goods to trade, as you’re in the middle of desert land?

Here’s the fundamental insight that Dubai’s rulers had around 1900 that made Dubai what it is today: In the modern age, you don’t need an amazing natural endowment to succeed as a city. You just need amazing governance.

In the late 1800s, Dubai’s rulers focused on ensuring Dubai was safe. Once they succeeded, they wondered: How can we use our creek to lure merchants from neighboring cities to settle here?

At the time, the biggest port in the Gulf was Basra, in the Ottoman Empire. Closer to Dubai, the biggest was Lingeh, in Persia, on the northern coast of the Persian Gulf. Small creeks competed with Dubai for local trade.

But over the centuries, the Ottoman Empire started taxing the merchants in Basra more and more to finance its wars. This happened in Lingeh in the late 1800s, too. But there’s only so much you can tax merchants before they leave.

So in 1901-1902, the ruler of Dubai decided to make the port what we understand today as a Special Economic Zone (SEZ): Merchants were granted land on the creek, zero taxes, protection, and tolerance of their beliefs. Merchants left the surrounding regions and established themselves in Dubai. The new steamboats that were accelerating global trade began stopping in Dubai. By 1906, Dubai had replaced Lingeh as the major regional port. Here’s the same population graph as before, but this time logarithmic:

This was not a novel strategy: Many coastal cities had used similar strategies in the past, from the Phoenicians and Greeks to the Hanseatic League. Within the British Empire, this was common: Singapore (1819), Hong Kong (1842), and Aden (1839) all followed the same recipe. So Dubai knew what it was doing, and the British saw it positively, as an alternative to Persian and Ottoman trading power in the region.

Why did it work for Dubai? A big reason was that it was weak. That weakness was an asset, not a liability: The port was not huge, the Sheikh was not powerful, so if he tried anything, merchants would just pack up and go.

Instead, Dubai did whatever it could to improve the conditions for its merchants. Notably, as sandbanks had accumulated in the creek, Dubai worked with the merchants to dredge it. Dubai got the British Empire to establish a post office there in 1909—much earlier than in any other city in the vicinity—and later the telegraph.

With these measures, Dubai’s population quadrupled between 1900 and 1950, despite the pearl crash. But more importantly, Dubai had planted the seed of what would make it great: a commitment to low taxes, trade, safety, and tolerance.

It’s now 1958, and the man from the beginning of this article, Sheikh Rashid bin Saeed Al Maktoum, succeeds his father as the ruler of Dubai.

Sheikh Rashid went all in on infrastructure.

This is what he did:

1959: Establish Dubai’s first telephone company

1960: The first airport opens

1961: Roll out the electricity network

Late 1950s, early 1960s: Dredging Dubai Creek, after which vessels of any size could dock at the port.

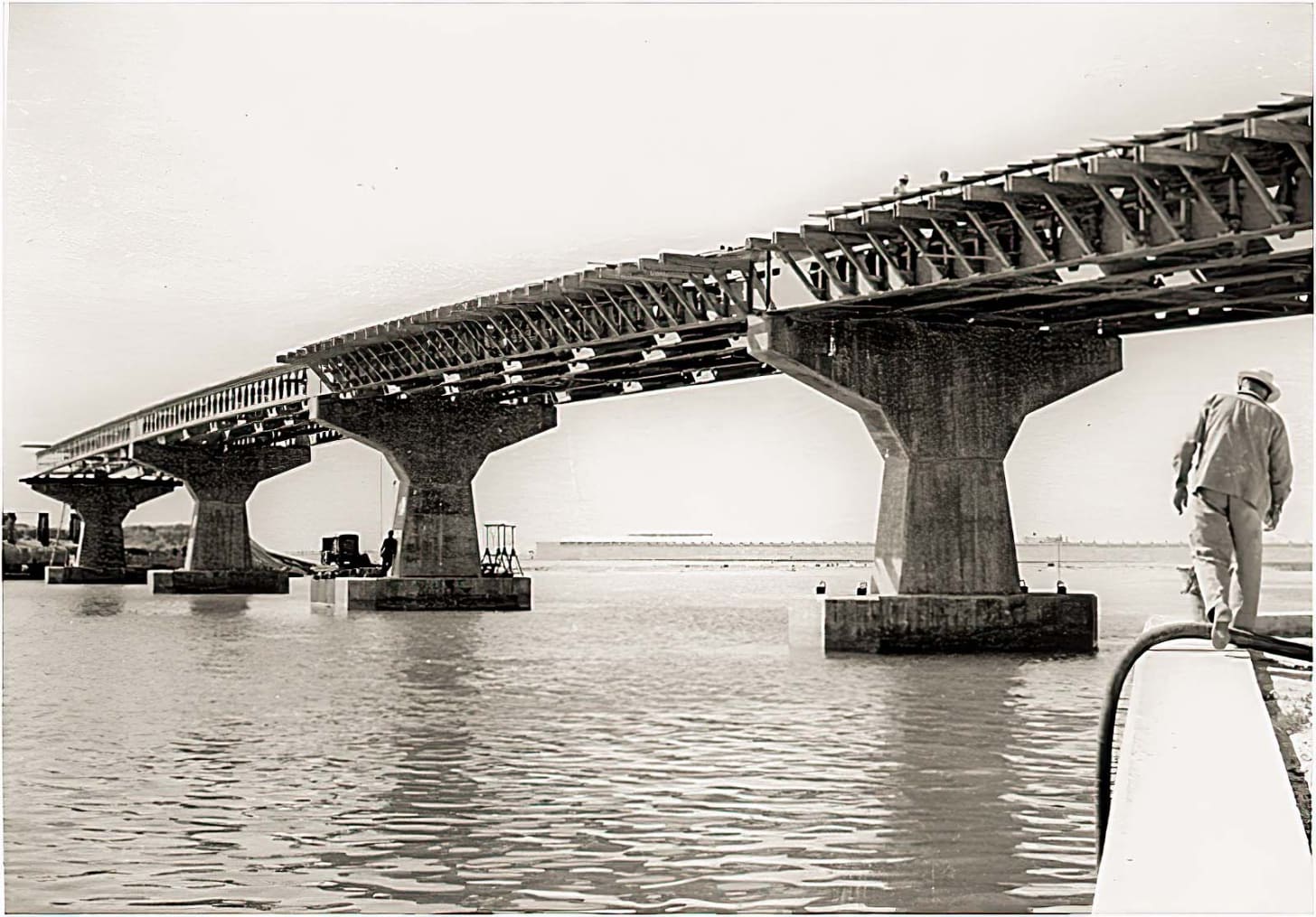

1963: First bridge over the Creek

All of these required a strong vision—and immense investment risk in the case of the dredging, the bridge, and the airport—because Dubai only discovered oil in 1966, and production started in 1969!

The oil was an incredible boon for the economy, but the Sheikh knew it wouldn’t last. He was right.

So, starting in the 1970s, Dubai’s challenge was: How can we use the windfall to create an economy that will sustain itself when there’s no more oil?

Very few countries think this way today, let alone back in the 1970s.

But Dubai had the answer. Dubai Creek was already the biggest import-export port in the Persian Gulf in 1960, if you exclude oil cargo.3 So Dubai decided to double down on its strength: trade.

It used oil money to build Port Rashid, which opened in 1972, because Dubai Creek had silted up and become too small.

1975: Al Shindagha Tunnel opens under Dubai Creek, closer to the entrance of the creek.

1979: Dubai opens its World Trade Centre, a convention and exhibition center.

1979: Aluminium production starts: This was very clever, as it moved the city from just a trading hub to one that could add value to the goods before reselling them, and generated both jobs and industry in the process.

1979: A new port opens south in Jebel Ali! This would become Dubai’s biggest port, and the largest one in the entire Middle East to this day.

1983: Drydocks open near Port Rashid to repair ships.

1985: Jebel Ali, to the south, becomes another free trade zone to expand the congested Port Rashid.

As oil dwindled, trade had already firmly established itself, so Dubai could continue its aggressive diversification across adjacent industries: finance, tourism, real estate…

Since the turn of the century, the city has gone all-in on urban development.

You can see this development not just in skyscrapers, but in retail, real estate and tourism, with famous landmarks like the Burj Al Arab hotel:

Dubai has the tallest building in the world, the Burj Khalifa.

It’s in front of the 2nd biggest mall in the world, Dubai Mall.4

It has reclaimed land on the coast to create Palm Jumeirah:

Several other coastal reclamation projects have begun, such as Palm Jebel Ali, The World Islands, and Dubai Islands, but none are complete yet.

Many of them commenced construction just before 2008 and stopped during the Great Recession. Most haven’t started redeveloping again,5 although an ad tells me they’re trying with Jebel Ali.

Dubai understood that there are huge synergies between transportation modes, so it didn’t only invest in its port and coast. It continued investing in its airport and airline. Today, Dubai International Airport is the 2nd busiest in the world, and the Dubai-based Emirates Airline is the world’s largest long-haul airline. It acts as a bridge between Europe, Asia, and Africa.

But Dubai was not built only on physical investments. Since its beginnings in the early 1900s, its leaders knew that to attract people you needed the right regulations:

Very low taxes

Safety

Tolerance

Dubai is the 2nd most tax-friendly city in the world. No wonder it’s attracting huge numbers of millionaires escaping high taxation around the world. Here, Dubai appears as part of the United Arab Emirates:

Dubai is also the 5th safest city in the world. When I was there, all the locals and expats I talked with had stories about how they left a computer behind and the police tracked it back to them within a couple of hours, how they can leave their cars open and running without fear of theft, or how their children can take the local Uber equivalent (Careem) alone. When I was there, I felt as safe as in Taipei, Tokyo, or Singapore, and way safer than anywhere in the US, or in most European cities.

Finally, as a Muslim monarchy, the UAE is not known for freedom. Criticism of rulers, state institutions, religion, or “social harmony” can lead to detention. Online speech, including social media posts, is closely monitored. Media outlets are state-owned or closely aligned with authorities. Public demonstrations require permission and are rarely allowed. Independent civil society organizations are limited; strikes are illegal in many sectors. Public morality laws affect dress, alcohol, relationships.

That said, I don’t find any of these fundamentally different from those of, say, Singapore. They only differ in degree. And in practice, in Dubai I saw plenty of drunk expats and scantily dressed women. In Singapore as in Dubai, it felt like people say: Live and let live. Do your thing in private, leave others alone, and we’ll leave you alone.

This felt liberating. Knowing you can go anywhere, at any time, do your thing, be respectful, and you’ll be free? That’s amazing. When I lived in San Francisco, I had to be aware of my surroundings when walking on the streets, for fear of attacks. And my speech was not much freer in SF, as I always had to be very conscious of what I said and how it would land with people, for fear of making a faux pas or hurting sensitivities.

That’s the difference between freedom and tolerance. If I had to choose only one for the rest of my life and the entire world, I would choose the freedom of the US. But to live in a city that you can leave at any time, the tolerance of a place like Dubai, with the safety that comes with it, is much more comfortable.

So this is how Dubai went from a village of fishermen and pearl divers to one of the world’s most dynamic metropolises: It tried to become the biggest trading hub it could. For that, it focused on two things:

The right infrastructure for transportation and communication: ports, postal services, telegraph, bridges, tunnels, airports, malls, artificial islands…

The right regulations: low taxes, security, and tolerance.

This is how it became a trade hub, and as we’ve seen in previous articles, once cities become hubs, network effects are so strong that they keep growing.6

Today, Dubai still makes $500M in oil revenue per year, but that’s less than 1% of the city’s GDP. Given its unbelievable position for trade and transportation, and all the services burgeoning around it, it seems to me like it’s well positioned for the future.

The same can’t be said of Abu Dhabi, the biggest emirate in the United Arab Emirates, which still prints money from oil sales. If Abu Dhabi can’t manage the transition away from oil, will it carry Dubai down with it?

While Western media is filled with stories of intolerant, aggressive, backward-looking Islam, Dubai shows that this narrative doesn’t need to prevail. A Muslim monarchy can be tolerant, welcoming, rich, dynamic, and cosmopolitan.

When I first heard the story of Dubai, I thought it was a role model for other oil countries to transition out of their oil dependence, but now I’m not so sure: Dubai did not go from oil city to trading city. It was always a trading city, and oil just helped it reach its ambitions. Crucially, this means it avoided the resource curse because its institutions and culture were so strong to begin with.7 Oil did not make Dubai. It just helped.

The world is hyper-competitive. You never control your population. People are always trying to go to the next best place. So governments can’t act as if their population is a hostage to milk. The countries that treat their populations (and immigrants) well, with low taxes, tolerance, and security, will attract money and power and outgrow the others.

Approximately the same in yards. The sea is frequently less than 10 – 15 m deep.

Also, the high salinity, extreme summer heat, rapid temperature swings in shallow water, and periodic lack of oxygen create stress that weakens the oysters’ shells, which facilitates parasite penetration.

Other ports focused on moving bulk goods inland, not on serving as entrepôts. Persia and Iraq were too controlling of their ports for them to flourish, while Dubai had been a SEZ for decades.

By surface

A big chunk of the World Islands were sold, so the incentive for further investing isn’t there, yet many of the acquirers did it for speculation, so the project is stalled. Plus, the cost of connecting all these islands with roads, electricity, and water is pretty high. My guess is Palm Jebel Ali or Dubai Islands will finish before The World.

As long as they’re not throttled by some force, usually regulation.

It looks like it was mostly the rulers: Dubai’s Al Maktoum family has shepherded the emirate for over two centuries of modernization pretty impressively.

2026-02-03 21:02:43

Renewable electricity is so cheap that it’s taking over the world. It will replace most fossil fuels: in power generation, car propulsion, heating… When it does, the budgets of dozens of countries will be destroyed because they mostly rely on oil and gas production today. What will happen to these countries? To global geopolitics? This is what we’ll explore in this series, starting by asking ourselves: When will oil sales start shrinking?

Energy has driven the geopolitics of the last two centuries:

First, the expansion of coal in the 19th Century drove the Industrial Revolution. Then, the expansion of oil and gas in the 20th fueled the world’s wealth explosion.

Everybody won, but the suppliers of oil and gas won an outsized return. The USSR was only viable as long as oil prices were high. Today, Russia’s war in Ukraine is financed by Russian gas.

Many countries are dead without oil and gas income, as their entire governments’ budgets depend on these resources:

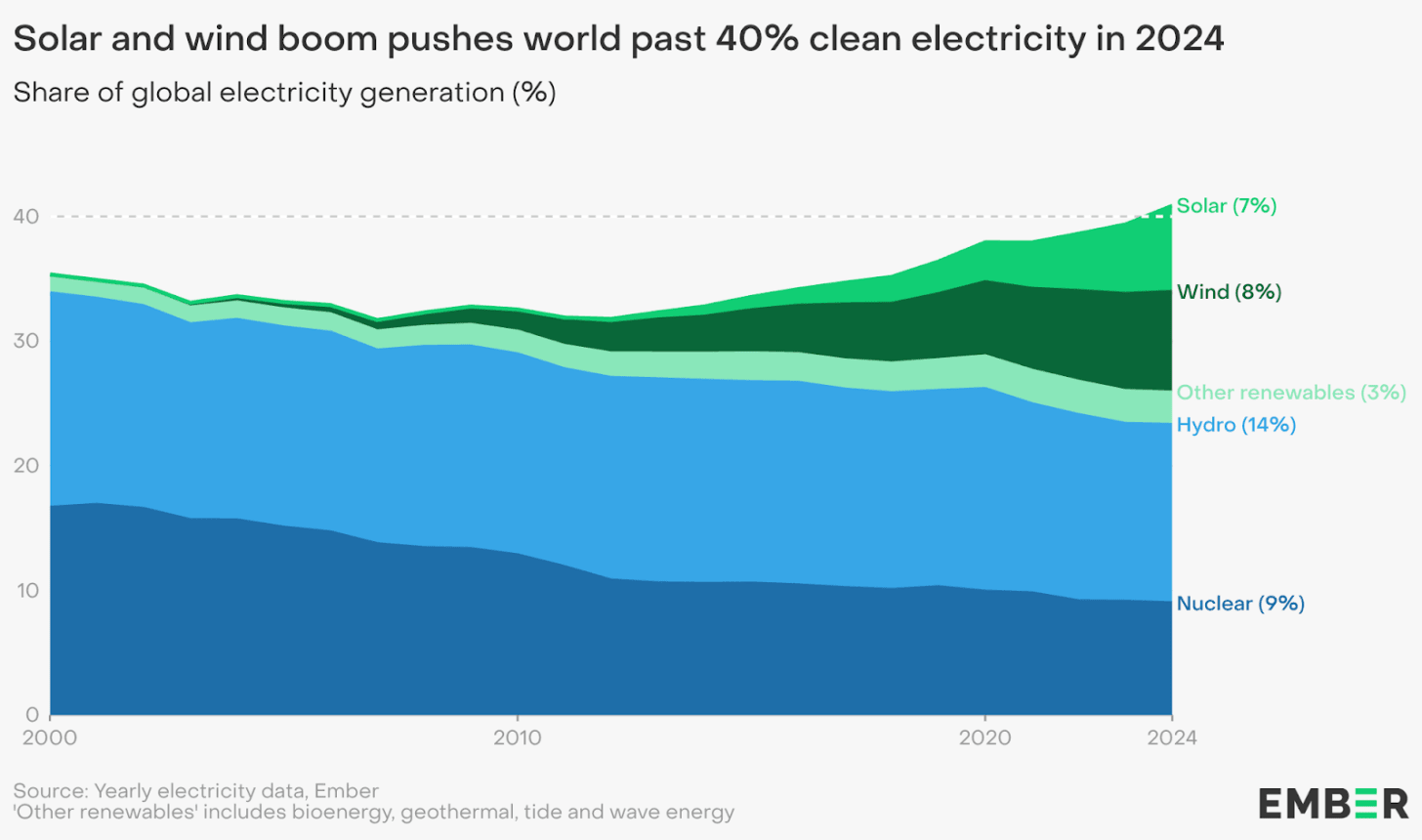

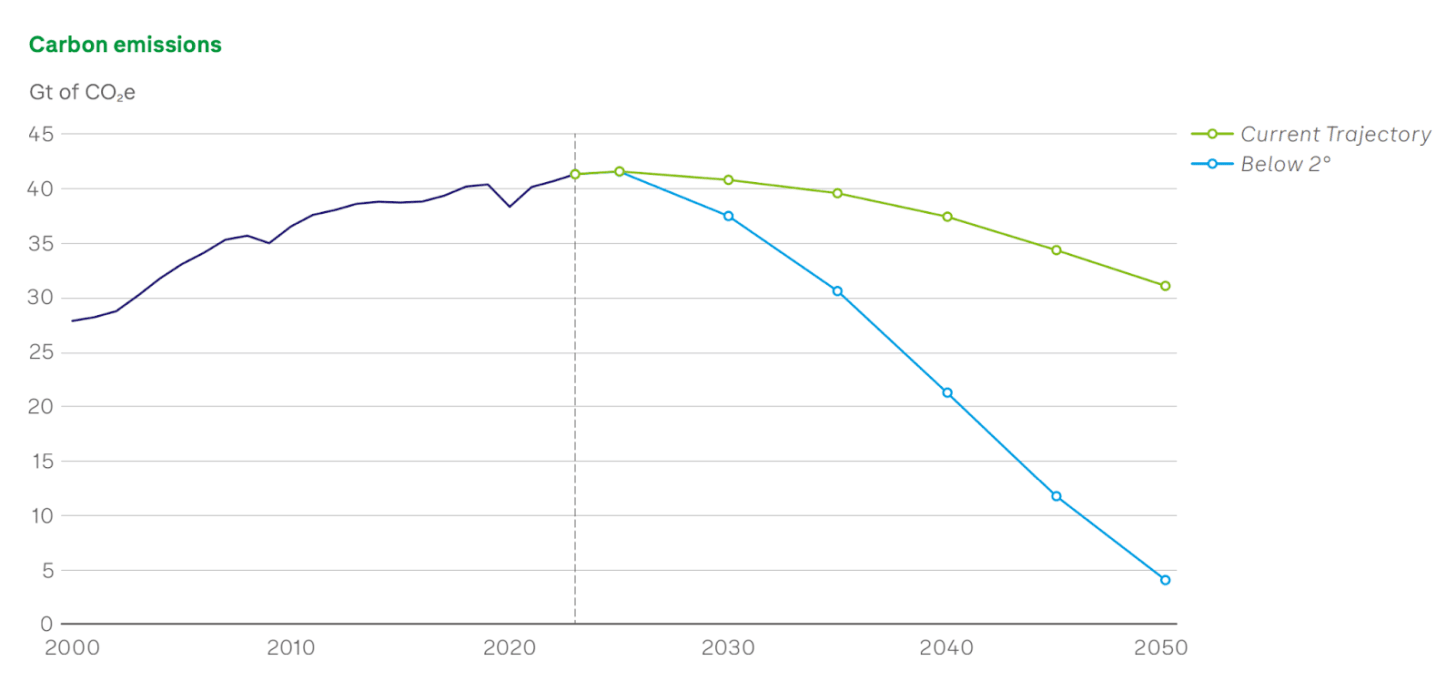

So what happens if fossil fuel incomes crater? That’s entirely possible. The share of all world energy coming from fossil fuels is shrinking:

And this shrinking is accelerating because of electricity.

The world is electrifying, and that will accelerate: Unfortunately for fossil fuel countries—and fortunately for the world’s climate—renewable energy will completely take over electricity generation.

The fact that solar generation in particular is accelerating can be seen through the installed base of solar capacity:

This exponential is fueled by the virtuous cycle of production and costs:

Indeed, solar costs keep shrinking:

That’s just for solar panels, but overall solar electricity generation costs are also shrinking and will continue to do so.

The Sun only shines during the day, but batteries bring sunshine to the night, and their cost is shrinking too.

Which is why battery installations are exploding too.

It doesn’t take a genius to connect the dots:

Solar is already the cheapest source of electricity, and its costs keep shrinking. Together with batteries, their combined cost will keep getting cheaper vs alternatives.

Installed capacity will continue soaring globally to cover demand. What we’re seeing in China will happen everywhere.

This will further accelerate electrification: Everybody wants cheap energy! Electric vehicles will replace internal combustion engines (ICE) faster, electric heat pumps will replace gas-fueled heaters, electric arc furnaces will replace combustion ones…

As electricity eats up global energy consumption, via solar and batteries, the demand for oil & gas will plummet.

The countries whose economies and government budgets depend on oil & gas…

What happens to them? Well, it depends on when all of this happens.

This is no easy calculation. Dozens of organizations project demand for oil and gas in the coming decades, but this is the type of stupid mistake they make:

And conversely, for coal:

Why are these forecasts so flawed? I think one reason is vested interests: For example, of course OPEC forecasts see an increase in oil demand by 2045!1 Its existence depends on it.

The other reason is that people assume the world will continue with business as usual. They don’t realize that, in energy, transitions can be extremely fast.

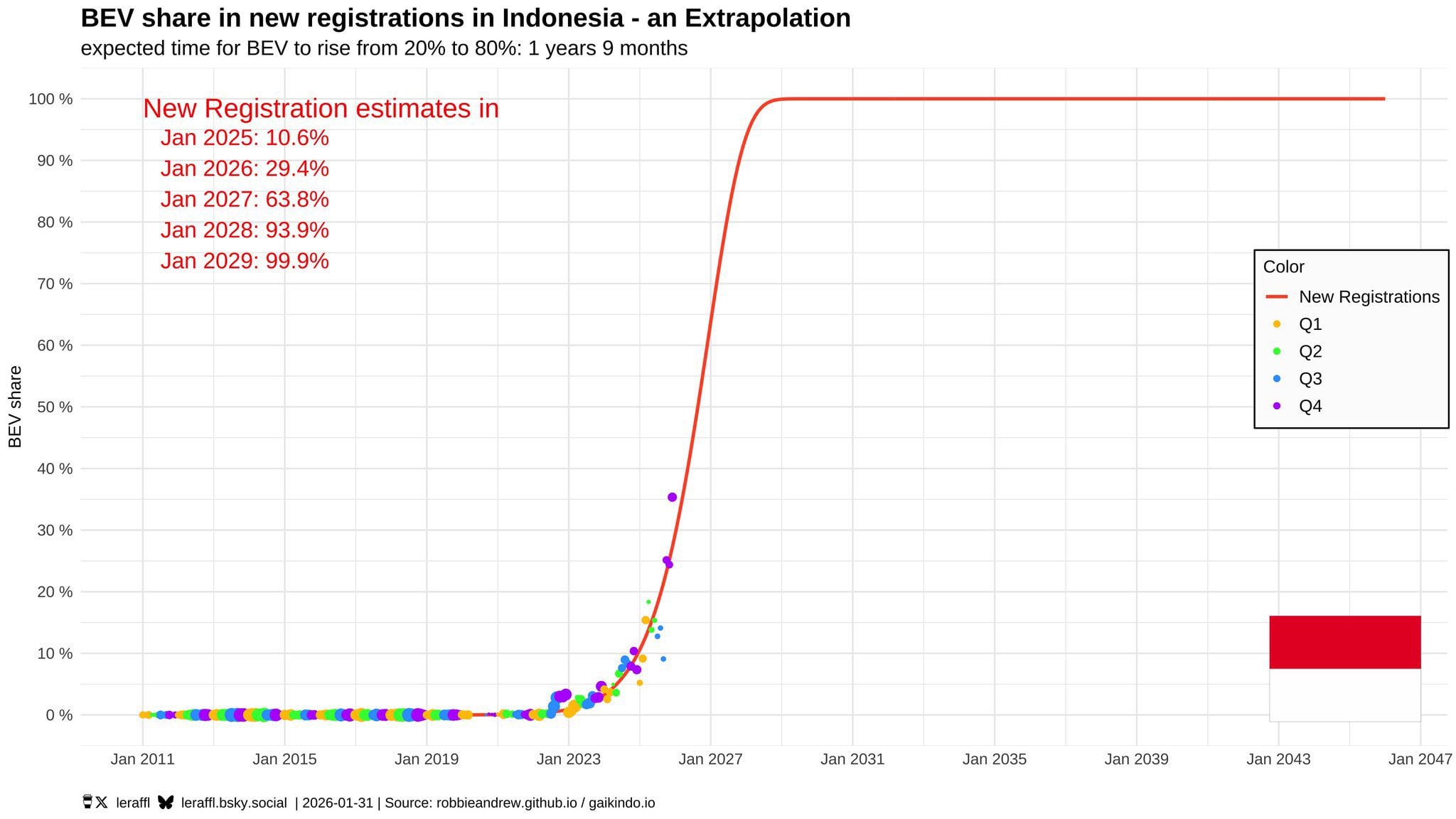

Look at transportation:

Here’s industrial heating:

Here’s lighting:

Lighting went from less than 5% electric to over 90% in less than 20 years!

At the beginning, new technologies take some time to figure out, but once they’re ready, uptake can be vertiginous.

Solar, wind, and batteries seem to be on that path.

If we assume that’s indeed the case, how will oil demand change in the coming decades?

Energy demand has been quite stable for decades. Let’s assume it continues.

Here’s where we face a much harder problem.

It’s clear to me that electrification will start accelerating due to cheaper electricity prices from renewables, which will prop up electric vehicles (EVs), heat pumps, electric arc furnaces, and other electrification technologies. How do you model this?

The future is already here, it’s just not evenly distributed.—William Gibson

According to this, China’s consumption of O&G will shrink by 40% by 2050 and 60% by 2060. I personally think that the transition will be much faster, because of solar.

If you just project the growth of the last few years into the future, you get this:

The amount of solar capacity that China is installing is so massive that, if the annual growth continues, solar electricity would surpass the current trend of all electricity generation within 10 years! Of course that’s impossible, so what would happen instead is that:

This would accelerate electrification. That’s why China is a leader in solar panels, batteries, and EVs: The three technologies go hand in hand.

Primary energy would also grow faster, given such cheap electricity prices.

China will flood the world market with these electric devices.

Solar capacity growth will have to slow down in the coming years.

If that’s the case, we should be seeing exponential growth for China’s solar generation, electricity generation, EVs, heat pumps… Is that what we see?

Yes for solar.

Yes for electricity (although of course its growth must look less aggressive because solar is still just a tiny part). To put this in context:

In 2024, the total installed electricity capacity of the planet—every coal, gas, hydro, and nuclear plant and all of the renewables—was about 10 TW. The Chinese solar supply chain can now pump out 1 TW of panels every year.—Source

Let me repeat that: Every year, China can produce solar capacity equivalent to 10% of the world’s entire installed electricity capacity today!

This is why China (and India) have cut emissions from electricity generation for the first time in decades.

What about cars?

Yes for electric vehicles. For the first time, sales of EVs have surpassed those of ICE.

What about heat pumps?

It’s less true for heat pumps, although I’d assume these would take longer to penetrate the market, because:

Electricity wasn’t so cheap in the past

The real estate market crashed in 2020-21

Once a heating system is installed, it requires huge savings in energy to be retrofitted, and electricity prices are not quite there yet (but will be).

I think what’s clear is this: By 2050, the share of China’s primary energy coming from O&G will be tiny.

And if you think this is just China…

Exponentials everywhere! Look at these lines and try to project them into the future. No, actually, let me do that for you:

For the last few years, after a great start, EV sales didn’t look so good in Europe, but now it’s finally true: There are more EV sales than ICE.

I think the slowdown in EV sales is because of a series of one-off issues:

Richer customers bought Teslas fast, but Elon Musk’s politicization slowed that down

EV fiscal support has shrunk, making them more expensive to buy for citizens

The charging infrastructure isn’t quite there yet

Europeans can’t yet feel the reduction in electricity prices from solar

Car makers focused on premium models (which competed against Tesla), when the true market was in cheap EVs like those of China’s BYD

But it’s not just China and the rich world. Middle-income and poor countries are seeing similar trends. The Pakistani story is especially interesting: In a country where electricity is expensive and unreliable, people have flocked to solar so quickly that it has gone from less than 1% of electricity generation to more than 10% in 5 years! The more cheap electricity there is, the more EVs people buy.

In Turkey, sales of EVs and hybrids2 are soaring so much that, even in a growing overall car market, gasoline and diesel cars are shrinking.

Look at Indonesia!

This will happen across the world, especially in places where electricity from the grid is expensive and unreliable but the Sun shines a lot, like Africa.3

It might take a bit longer there, because poor countries don’t buy new cars (only second-hand ones), and the EV market is not old enough yet,4 but it will happen within a decade.

All these trends are the reason why so many models predicting O&G demand are off:

They try to figure out primary energy and electricity from past trends

Except you can’t do that because renewables are coming in like a bullet train in a china shop. They will upend everything, drive prices to the bottom, and with that electricity consumption will grow faster than in the past.

This will drive a massive electrification of the world, which will increase overall energy consumption, but it will shrink the share of that coming from O&G.

What if you take the share of all energy coming from renewables and assume it keeps accelerating at the same pace as in the last 20 years? What would fossil fuel energy look like in that case?

According to this, fossil fuels would peak by the early 2030s:

The first to crash would be coal, and later it’d be followed by oil & gas.

Gas remains stable for the longest

Peak oil seems to happen in the early 2030s.
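The extrapolation described above can be sketched in a few lines of Python. This is not the model behind the charts. For simplicity, it assumes that total energy demand and the renewable share each compound at a constant yearly rate, and every input below is a hypothetical placeholder, not real data. It does show the mechanism: fossil energy can keep rising for a while even as renewables' share grows, because total demand grows too. Then it peaks and falls.

```python
def fossil_energy_path(total0, share0, total_growth, share_growth, years):
    """Fossil energy per year when total demand and the renewable share both compound.

    All inputs are illustrative placeholders, not fitted to real data.
    """
    path = []
    for t in range(years + 1):
        total = total0 * (1 + total_growth) ** t
        # A share can't exceed 100%, so cap it once renewables cover everything.
        renewable_share = min(1.0, share0 * (1 + share_growth) ** t)
        path.append(total * (1 - renewable_share))
    return path


def peak_year_index(path):
    """Years from the start until fossil energy is at its highest."""
    return max(range(len(path)), key=lambda t: path[t])


# Hypothetical inputs: total energy indexed to 100 and growing 2%/year;
# renewables start at a 10% share that grows 8%/year in relative terms.
path = fossil_energy_path(total0=100.0, share0=0.10, total_growth=0.02,
                          share_growth=0.08, years=30)
print(peak_year_index(path))  # 9: fossil energy rises for ~9 years, then declines
```

With these made-up inputs, fossil energy peaks about nine years out. Nudging either growth rate moves the peak by years, which is one reason forecasts disagree so much.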

For years, society has been dreaming of Net Zero: A world where all governments get together to limit their own emissions. It turns out the naiveté of rich countries was exposed by poor countries when they said: “No way we’re remaining poor.”

But what we’re saying here is that something akin to that is going to happen. Not because governments can agree (they can’t agree on anything), but because the economics of new tech will overwhelm politics. What it means is we can take the Net Zero modeling exercises and use them as a proxy for what might happen. BP has a good one:

The shrinking would be due to renewables and batteries (“power” below), industry (both electricity and heat, which can be achieved through heat pumps and electric arcs), and transportation (EVs):

So it doesn’t look like we’re far off.

If solar, wind, batteries, and EVs keep going as they have been, peak oil will come soon, and by 2050, demand for oil and gas will have shrunk considerably. It’s the dusk of the age of fossil fuels.

This will be great for the environment!

But the consequences for geopolitics are up in the air.

What will happen then to countries like Russia, Venezuela, or Saudi Arabia?

Will their economies crater?

Will this reorganize global geopolitics?

That’s what we’re going to explore in the next articles.

Just to give you an example: This OPEC study projects the demand for oil & gas (O&G) to increase from now to 2045. Why? It assumes:

An optimistic increase in global population

That electrification won’t go as fast as China suggests—notably OPEC assumes that in poor countries, people will buy lots of cars with internal combustion engines (ICE)!

That oil & gas electricity generation will barely budge!

That electric heat pumps won’t take over the heating market!

Of course, OPEC has a vested interest in O&G demand increasing, so we can’t blame them. But everybody has vested interests, making it really hard to estimate when it will peak and shrink.

In early markets, hybrids always prevail because there’s not enough electric infrastructure. As it develops and people can charge their cars more easily, the share of EVs increases.

And as we know, warmer countries are poorer.

Plus, battery aging will be a problem there. What’s most likely to happen is that 10-year-old EVs will find themselves in places like Africa, coupled with new batteries, which will be much cheaper then.

2026-01-30 16:01:48

Tech companies are trying to attract workers with over $100M compensation packages. How can this make sense?

It does.

After today’s article, not only will you understand the logic. You’ll wonder why there aren’t more companies doing the same, and spending even more money.

Not only that. You’ll also understand how fast AI will progress in the coming years, what types of improvements we can expect, and by when we’re likely to reach AGI.

Here’s what we’ve said so far in AI:

First: We’re investing a lot in AI, but there’s massive demand for it. This makes sense if you believe we’re approaching AGI.1 So it’s unlikely that there is an AI bubble.

Second: It’s likely that we’re approaching AGI because we’re improving AI intelligence by orders of magnitude every few years. As long as we can keep improving the effective compute of AI, its intelligence will continue growing proportionally. We should hit full automation of AI researchers within a couple of years, and from there, AGI won’t be far off.

Third: So how likely is it that we’re going to continue growing our effective compute? It depends on how much better our computers are, how much more money we spend every year, and how much better our algorithms are. On the first two, it looks like we can keep going until 2030.

Computers will keep improving about 2.5x every year, so that by the end of 2030 they will be 100x better.

We will also continue spending 2x more money every year, which adds up to 32x more investment by the end of 2030.

These two together mean AI will get 3,000x better by 2030, just through more (quantity) and more efficient (quality) computers.
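The compounding behind these figures can be checked in a few lines of Python. This sketch assumes a five-year horizon (end of 2025 to end of 2030), since that is what turns 2.5x per year into roughly 100x. The function name is illustrative.

```python
def compound(factor_per_year: float, years: int) -> float:
    """Total improvement after growing by `factor_per_year` every year."""
    return factor_per_year ** years


YEARS = 5  # assumed horizon: end of 2025 to end of 2030

hardware = compound(2.5, YEARS)  # better computers: ~97.7x, i.e. ~100x
spending = compound(2.0, YEARS)  # more money: 32x
effective = hardware * spending  # both together: ~3,125x, i.e. ~3,000x

print(f"{hardware:.1f}x hardware, {spending:.0f}x spending, {effective:,.0f}x combined")
# prints: 97.7x hardware, 32x spending, 3,125x combined
```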

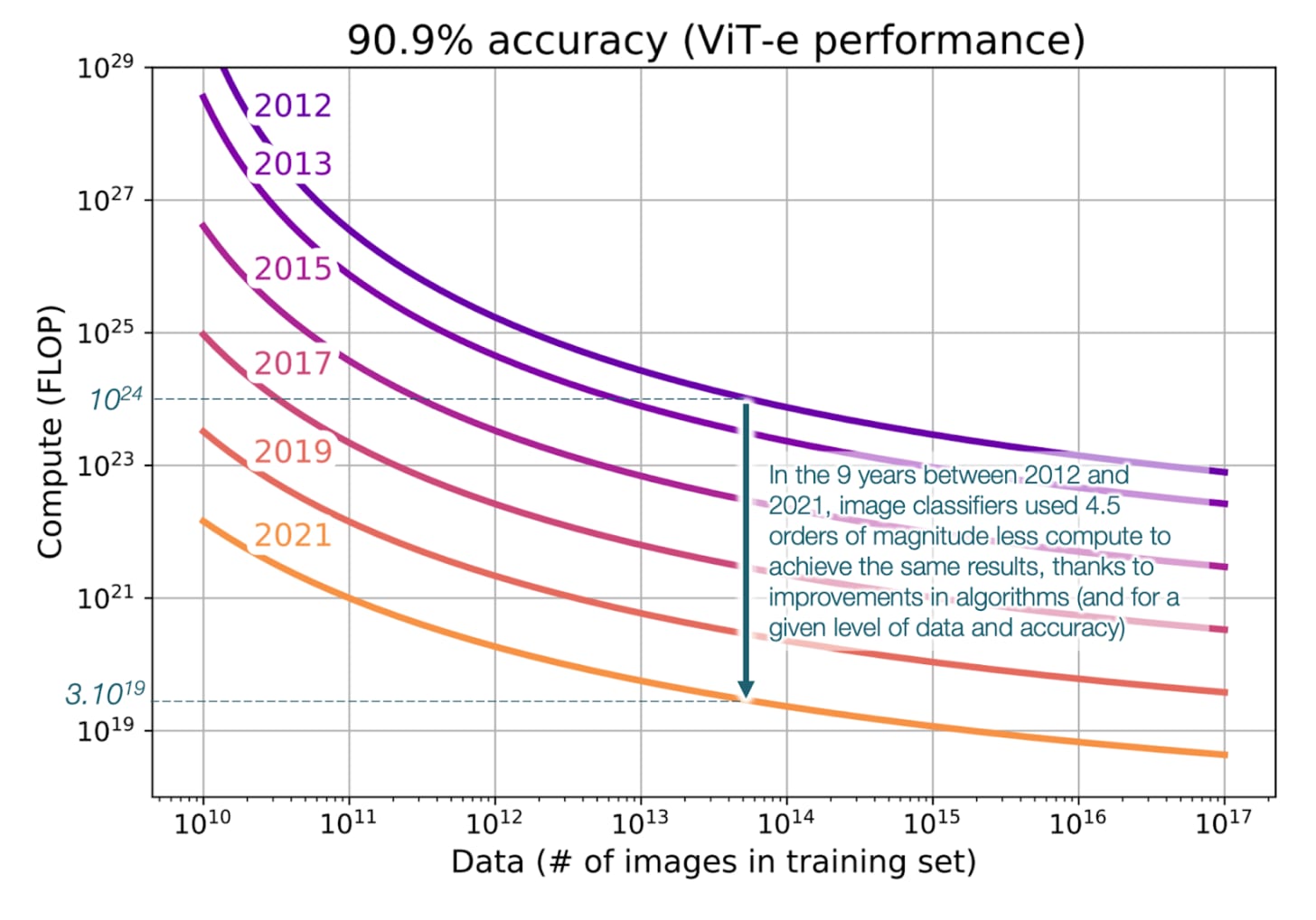

Fourth: But we also get better at how we use these machines. We still need to make sure that our algorithms can continue improving as well as they have until now. Will they? That’s what we’re going to discuss today.

In other words, today we’re going to focus on the two upper areas of the graph below.

Algorithm optimization and unhobbling refer to similar things:

Algorithm optimization means improving algorithms little by little, through intelligent tweaks here and there.

Algorithm unhobbling means big, radical changes that are uncommon but yield massive improvements.2

Let’s start by looking at how things have evolved in the past.

This is how much algorithms for image classification improved in the 9 years between 2012 and 2021:

About 0.5 orders of magnitude (OOMs) per year. Crucially, you can see the distance between lines is either the same or increases over time, suggesting that algorithmic improvements don’t slow down, they actually accelerate!

That’s for images. This paper found that, between 2012 and 2023, every 8 months LLM3 algorithms required half as much compute for the same performance (so they doubled their efficiency). That translates to ~0.5 OOM improvements per year, too.
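Converting "half the compute every 8 months" into OOMs per year takes one step: 12/8 = 1.5 halvings per year, and each halving is log10(2) ≈ 0.3 orders of magnitude. Here is a quick check in Python (the function name is illustrative):

```python
import math


def ooms_per_year(halving_months: float) -> float:
    """Orders of magnitude (OOMs) of efficiency gained per year when the compute
    needed for the same performance halves every `halving_months` months."""
    halvings_per_year = 12 / halving_months
    return halvings_per_year * math.log10(2)  # each halving is ~0.3 OOM


print(round(ooms_per_year(8), 2))  # 0.45, i.e. roughly 0.5 OOM per year
```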

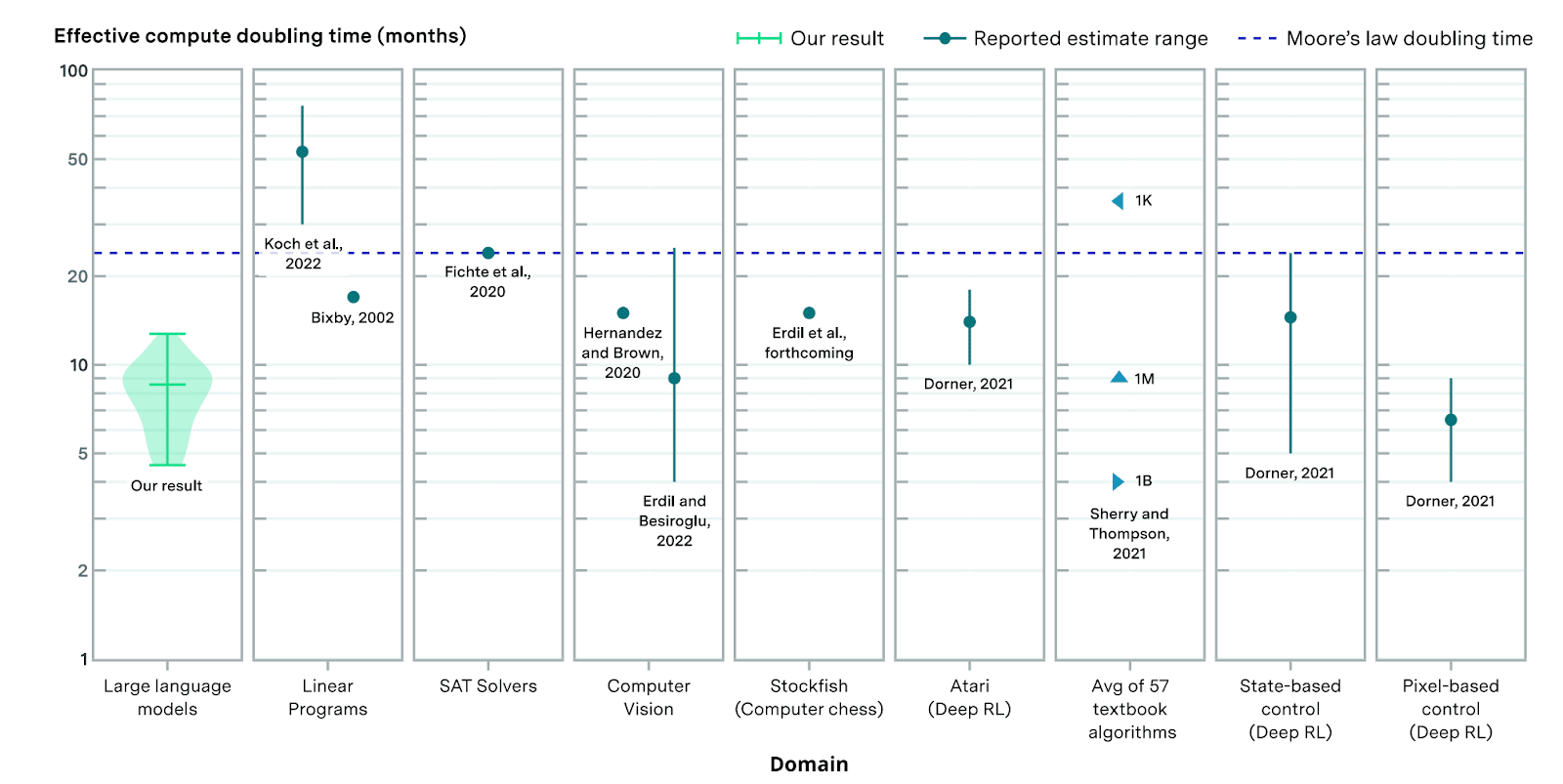

This paper looked at general AI efficiency improvements between 2012 and 2023 and saw a 22,000x improvement, which backs out to 0.4 OOMs per year.4

So across the board, it looks like we can get ~0.4 to 0.5 OOMs of algorithmic optimization per year. We won’t have that forever, but we have had it for a bit over a decade, so it’s likely that we’ll continue enjoying it for at least 5 years. If so, by 2030, we should have optimized our algorithms by ~300x.
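The annualized rate and the 2030 projection both come from the same logarithm arithmetic. This sketch assumes that the 2012 to 2023 window counts as 11 years and that 2030 is five years out. Function names are illustrative.

```python
import math


def annual_ooms(total_factor: float, years: float) -> float:
    """Average orders of magnitude per year implied by a total improvement factor."""
    return math.log10(total_factor) / years


def factor_after(ooms_per_year: float, years: float) -> float:
    """Total improvement factor after compounding a yearly OOM rate."""
    return 10 ** (ooms_per_year * years)


print(round(annual_ooms(22_000, 2023 - 2012), 2))  # 0.39, i.e. ~0.4 OOMs/year
print(round(factor_after(0.5, 5)))                  # 316, i.e. ~300x by 2030
print(round(factor_after(0.4, 5)))                  # 100
```

At the low end of the range, 0.4 OOMs per year, the same five years give ~100x instead of ~300x.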

The three papers I mentioned above quantify improvements at the heart of algorithms, but not the things we do on top of them to make them better, called unhobblings.5 These include things like reinforcement learning, chain-of-thought, distillation, mixture of experts, synthetic data, tool use, using more compute in inference rather than pre-training…

I covered some of these in the past when I discussed DeepSeek, but I think it’s quite important to understand these big paradigm shifts: They are at the core of how AIs work today, so they are basically the foundations of god-making. So I’m going to describe some of the most important ones. If you know all this, just jump to the next section.

LLMs are at their core language prediction machines. They take an existing text and try to predict what comes next. This was ideal for things like translations. They were trained to do this by taking all the content from the Internet (and millions of books) and trying to predict the next word.
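To make "predict what comes next" concrete, here is a toy next-word predictor in Python. It simply counts which word most often follows each word in its training text. Real LLMs use neural networks over tokens and take far more context into account, so this only illustrates the training objective, not how LLMs work internally.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers: dict, word: str):
    """Most frequent next word seen in training, or None for unseen words."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat ("the cat" appears twice)
```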

The problem is that text online is not usually Q&A, but a chatbot is mostly Q&A. So LLMs didn’t usually interpret requests from users as questions they had to answer.

Supervised instruction fine-tuning (SFT) does that: It gives LLMs plenty of examples of questions and answers in the format that we expect from them, and LLMs learn to copy it.
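Here’s a minimal sketch of what SFT data can look like. It assumes a simple “User:/Assistant:” template, which is an illustrative choice; every lab uses its own chat format. The model is then trained to produce the target text given the prompt.

```python
def to_sft_example(question: str, answer: str) -> dict:
    """Turn one Q&A pair into a prompt/target pair for supervised fine-tuning.
    The 'User:'/'Assistant:' template is an illustrative assumption."""
    prompt = f"User: {question}\nAssistant:"
    # The loss is usually computed only on the target, so the model learns
    # to produce answers rather than to echo questions.
    return {"prompt": prompt, "target": " " + answer}

pairs = [("What is the capital of France?", "Paris.")]
dataset = [to_sft_example(q, a) for q, a in pairs]
print(dataset[0]["prompt"] + dataset[0]["target"])
```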

Even after instruction tuning, the models often gave answers that sounded fine but humans disliked: too long, evasive, unsafe, or subtly misleading.

So in reinforcement learning from human feedback (RLHF), humans were shown multiple answers from the model and asked which one they preferred, and we used those preferences to train the AIs.
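In the classic RLHF recipe, those human comparisons first train a separate reward model. The LLM is then optimized with reinforcement learning to score well on that reward model. The sketch below covers only the first step: the standard pairwise (Bradley-Terry) loss. For illustration, the reward scores are passed in as plain numbers.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise (Bradley-Terry) loss for a reward model: -log P(chosen wins),
    where P(chosen wins) = sigmoid(reward_chosen - reward_rejected)."""
    return softplus(-(reward_chosen - reward_rejected))

print(round(preference_loss(2.0, 0.0), 3))  # 0.127: agrees with the human
print(round(preference_loss(0.0, 2.0), 3))  # 2.127: disagrees, big penalty
```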

What’s the difference between these two? The way I think about them is that SFT was the theory for AIs: It gave plenty of examples, but didn’t let them try. RLHF asks AIs to try, and corrects them when they’re making mistakes. RLHF is practice.

With direct preference optimization (DPO), we then took all the questions and answers (both good and bad) from the previous approach (RLHF) and other sources and fed them to models,6 telling them: “See this question and these two answers? Your answer should be more like the first one, and less like the second one.”
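Training a model to be “more like the first answer, less like the second” is what direct preference optimization (DPO) does, and it needs no separate reward model. Here’s a minimal sketch of its loss for one pair. It assumes you already have the total log-probabilities that the model being trained, and a frozen reference copy of it, assign to each answer. `beta = 0.1` is an illustrative default, not a prescribed value.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) answer pair."""
    # How much more the trained model likes each answer than the reference does
    chosen_shift = logp_chosen - ref_logp_chosen
    rejected_shift = logp_rejected - ref_logp_rejected
    # -log sigmoid(beta * (chosen_shift - rejected_shift))
    return softplus(-beta * (chosen_shift - rejected_shift))

print(round(dpo_loss(-10, -10, -10, -10), 3))  # 0.693: no preference learned yet
print(round(dpo_loss(-5, -15, -10, -10), 3))   # 0.313: leaning toward "chosen"
```

When the model hasn’t moved from the reference, the loss is log 2 ≈ 0.693. Shifting probability toward the preferred answer lowers it.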

In scientific fields, answers are either right or wrong, and you can produce them programmatically. For example, you create a program that calculates large multiplications, so you know the questions and answers perfectly. You then feed them to an AI for training. You can also generate bad answers to help the AI discern good from bad.
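Here’s a minimal Python sketch of that kind of programmatic data generation, using the large-multiplication example. Every question comes with a correct answer computed exactly, plus a deliberately wrong one for contrast. The output format is a hypothetical choice.

```python
import random

def make_multiplication_examples(n: int, seed: int = 0) -> list:
    """Generate n multiplication questions, each with an exact correct answer
    and a plausible-looking wrong one for contrast."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    examples = []
    for _ in range(n):
        a, b = rng.randint(1_000, 99_999), rng.randint(1_000, 99_999)
        correct = a * b  # computed by the program, so it is guaranteed right
        # Nudge the answer by a nonzero amount to create a wrong answer
        wrong = correct + rng.choice([-1, 1]) * rng.randint(1, 1_000)
        examples.append({"question": f"What is {a} * {b}?",
                         "good": str(correct), "bad": str(wrong)})
    return examples

for ex in make_multiplication_examples(2):
    print(ex)
```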

The result is that the more scientific a field, the better LLMs are at it. That’s why Claude Code is so superhuman, and why LLMs are starting to solve math problems nobody has solved before.

Human feedback was expensive and inconsistent, especially for safety and norms, so we gave AIs explicit written principles (e.g., be honest, avoid harm) and trained them to critique and revise their own answers using those rules. This approach is called constitutional AI.

For example, Anthropic recently updated Claude’s constitution.

When you’re asked a question and you must answer with the first thing that comes to mind, how well do you respond? Usually, pretty badly. AIs too.

Chain-of-thought asks them to break down the question, then proceed step by step toward an answer. Only when the reasoning is done does the AI give a final answer, and the reasoning is usually somewhat hidden.

Why does this work? The thinking part is simply a section where the LLM is told “The next few words should look like you’re thinking. They should have all the hallmarks of a thinking paragraph.” And the LLM goes and does exactly that. It basically produces paragraphs that mimic thinking in humans, and that mimicry turns out to have a lot of the value of the thinking itself!

Here’s another way to put it:

Chain-of-thought basically means that you force the AI to think step by step like an intelligent human being, rather than like your wasted uncle spouting nonsense at a family dinner.

Before this was integrated into the models, you could kind of hack it with proper prompting. For example, by telling the AI “Please first think about the core principles of this question, then identify all the assumptions, then question all the assumptions. Then summarize these principles and validated assumptions, until you can finally answer the question.”
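That prompting trick is easy to package as a reusable wrapper. Here’s a minimal Python sketch that uses the instructions quoted above verbatim. The function and constant names are hypothetical.

```python
COT_INSTRUCTIONS = (
    "Please first think about the core principles of this question, "
    "then identify all the assumptions, then question all the assumptions. "
    "Then summarize these principles and validated assumptions, "
    "until you can finally answer the question."
)

def with_chain_of_thought(question: str) -> str:
    """Prepend step-by-step reasoning instructions to a plain question."""
    return f"{COT_INSTRUCTIONS}\n\nQuestion: {question}"

print(with_chain_of_thought("Is 91 a prime number?"))
```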

Now combine some of the principles we’ve outlined already, and you can see how well they work together. For example, it’s very hard to learn math just by getting a question and its answer. But if you get a step-by-step answer, you’re much more likely to actually understand it. That’s what happens when AIs train on step-by-step reasoning.

One consequence is that we no longer use compute mostly to train new models. We also use it to run existing models, to help them think through their answers.

Distillation is a nice word for extracting intelligence from another LLM.

Researchers ask a ton of questions to another AI and record the answers. They then feed the pairs of question-answers into their new model to train it with great examples of how to answer. That way they train the new AI with the intelligence of an existing AI, at little cost.
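The version described above trains the new model on the other AI’s written answers. When you can see the teacher’s full probabilities for the next word, a classic variant called knowledge distillation trains the student to match those probabilities directly. This is a swapped-in technique, not necessarily what any particular lab does. Here’s a minimal sketch of its loss:

```python
import math

def softmax(logits: list, temperature: float = 1.0) -> list:
    """Turn raw scores into probabilities; higher temperature = softer."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: list, student_logits: list,
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between temperature-softened next-word
    distributions. Zero when the student matches the teacher exactly.
    (Implementations often also multiply by temperature**2; omitted here.)"""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

print(distillation_loss([2.0, 1.0, 0.1], [2.0, 1.0, 0.1]))  # 0.0
print(distillation_loss([2.0, 1.0, 0.1], [0.1, 1.0, 2.0]))  # positive
```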

This is one way of producing synthetic data, and it’s especially useful for cheap models to get a lot of the value of expensive ones, like DeepSeek allegedly distilling OpenAI’s ChatGPT.

Chinese labs keep doing it.7 Apparently, the Chinese model Kimi K2.5 is as good as the latest Claude Opus 4.5, costs 8x less, and believes it’s Claude when you ask it.

This is interesting: The better our AIs are, the more we can use them to train the next AIs, in an accelerating virtuous cycle of improvement.

It’s especially valuable for chain-of-thought, because a model that copies the reasoning of an intelligent AI becomes more intelligent itself.

Do you have a PhD in physics and gender studies? Are you also a jet pilot? No, each of these requires substantial human specialization. Why wouldn’t AIs also specialize?

That’s the intuition behind mixture of experts: Instead of running one massive network on every word, developers split much of the model into many smaller “expert” sub-networks, and a router inside the model sends each word (technically, each token) to just a few of them. Since only a fraction of the model works on any given word, it’s much cheaper to run than a single dense model of the same size, and each expert can get good at the patterns it handles without the tradeoffs of handling everything. For example, if you need to talk like a mathematician and a historian at the same time, you will have a hard time predicting the next word. But if you know you’re a mathematician, it’ll be much easier. In practice, the experts’ specializations are messier than neat labels like math or coding, and the router learns on its own which experts to call.
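Here’s a minimal Python sketch of the routing step for a single token. It assumes the router’s scores are already computed (in a real model, a small learned layer produces them) and that the experts are plain functions. Only the top-k experts run, and their outputs are blended with softmax weights.

```python
import math

def moe_forward(x: float, router_scores: list, experts: list, k: int = 2) -> float:
    """One sparse mixture-of-experts step for a single token.
    Only the k highest-scoring experts run; their outputs are blended
    with softmax weights computed over those k scores."""
    top = sorted(range(len(experts)), key=lambda i: router_scores[i], reverse=True)[:k]
    m = max(router_scores[i] for i in top)  # for numerical stability
    weights = [math.exp(router_scores[i] - m) for i in top]
    total = sum(weights)
    return sum((w / total) * experts[i](x) for w, i in zip(weights, top))

# Four toy "experts"; with k=2, only experts 1 and 3 run for these scores.
experts = [lambda v: 2 * v, lambda v: v + 10, lambda v: -v, lambda v: v ** 2]
print(moe_forward(3.0, [0.1, 2.0, -1.0, 1.0], experts, k=2))
```

The cost saving comes from the `[:k]` cut: the other experts are never called for this token.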

Note that this is something you could partially achieve with simple prompting before. You might have heard advice to tell LLMs things like “You’re a worldwide expert in social media marketing, used to make the best ads for Apple.” This goes in the same direction.

What’s better: calculating 19,073*108,935 in your head, or using a calculator? The same is true for AIs. If they can use a calculator in this situation, the result will always be right.
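Tool use can be sketched as the model emitting a structured request that ordinary code executes. In this minimal Python example, the `{"tool": ..., "input": ...}` format and the `calculator` function are hypothetical. The evaluator walks Python’s syntax tree instead of calling `eval()`, so only basic arithmetic runs.

```python
import ast
import operator

# Only these arithmetic operators are allowed; anything else is rejected.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression: str):
    """Safely evaluate a simple arithmetic expression (no eval())."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval").body)

TOOLS = {"calculator": calculator}

def run_tool_call(call: dict):
    """Dispatch a tool call the model emitted (hypothetical format)."""
    return TOOLS[call["tool"]](call["input"])

print(run_tool_call({"tool": "calculator", "input": "19073 * 108935"}))  # 2077717255
```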

This also works for searches. Yes, LLMs have been trained on the entire history of Internet content, but all of that blurs a bit. If they can look up data in search engines, they’ll hallucinate far fewer false facts.

Before, LLMs could only hold so much information from the conversation in their brain. After a few paragraphs, they would forget what was said before. Now, context windows can reach into the millions of words.8
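Why older parts of a conversation get “forgotten” can be sketched as a budget: keep the most recent messages that fit, and drop the rest. Here’s a minimal Python version that counts words for simplicity (real models count tokens):

```python
def fit_context(messages: list, max_words: int) -> list:
    """Keep the most recent messages whose total word count fits the budget."""
    kept, used = [], 0
    for msg in reversed(messages):  # walk from newest to oldest
        n = len(msg.split())
        if used + n > max_words:
            break  # everything older than this is "forgotten"
        kept.append(msg)
        used += n
    return list(reversed(kept))  # restore chronological order

print(fit_context(["hello there", "how are you", "fine thanks"], 5))
# ['how are you', 'fine thanks']
```

A bigger context window is just a bigger `max_words`, so less of the conversation falls off the end.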

If every time you start a new conversation your LLM doesn’t remember what you discussed in your last one, it’s as if you hired an intern to help you work, and after the first conversation, you fired her and hired a new one. Terrible. So LLMs now have some memory of the key facts of your conversations.
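Here’s a minimal Python sketch of the idea: facts live outside any single conversation and get prepended to each new one. The class, its in-memory list, and the prompt wording are illustrative assumptions. Real products store and select memories in their own ways.

```python
class Memory:
    """Key facts kept outside any single conversation (an in-memory
    stand-in for whatever persistent store a real product uses)."""

    def __init__(self) -> None:
        self.facts = []

    def remember(self, fact: str) -> None:
        """Save a fact once; duplicates are ignored."""
        if fact not in self.facts:
            self.facts.append(fact)

    def start_conversation(self, first_message: str) -> str:
        """Build the prompt for a new chat: remembered facts, then the message."""
        notes = "".join(f"- {f}\n" for f in self.facts)
        return f"Known facts about the user:\n{notes}\nUser: {first_message}"

memory = Memory()
memory.remember("Prefers metric units")
print(memory.start_conversation("How far is a marathon?"))
```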

Scaffolding coordinates many of the tools outlined above. For example, it forces the LLM to begin by planning the answer. Then, it pushes it to use tools to seek information. Then, it registers that information into its context window. Then, it uses chain-of-thought to reason with the data, and finally it replies.
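The sequence above (plan, use tools, record results in the context, reason, reply) can be sketched as a small pipeline. In this Python sketch, `plan`, `search`, and `reason` are hypothetical stand-ins for model or tool calls, passed in as functions.

```python
from typing import Callable

def scaffolded_answer(question: str,
                      plan: Callable[[str], list],
                      search: Callable[[str], str],
                      reason: Callable[[str], str]) -> str:
    """Plan, gather information with a tool, record it, then reason and reply."""
    context = [f"Question: {question}"]          # the working context window
    for step in plan(question):                  # 1. plan the answer
        result = search(step)                    # 2. use a tool to seek information
        context.append(f"{step}: {result}")      # 3. register it in the context
    return reason("\n".join(context))            # 4. reason over it and reply
```

Because each stage is just a function, any one of them can be swapped for a different model or tool without touching the rest.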

With agents, scaffolding becomes a superpower. Instead of having only a few steps, you can have several AIs take lots of different roles and interact with each other. One can plan, another records information, another calls tools, several others answer with the data, others assess the answers, others rate them, and others pick the right one, which they send to the planner to go to the next step, etc.
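One piece of that loop fits in a few lines: several agents answer, a rater scores each answer, and the best one moves on. Here’s a minimal Python sketch with hypothetical agents passed in as functions:

```python
from typing import Callable

def multi_agent_step(task: str,
                     answerers: list,
                     rater: Callable[[str, str], float]) -> str:
    """Several 'answerer' agents propose answers, a 'rater' agent scores
    each one, and the highest-rated answer is returned to the planner."""
    candidates = [answer(task) for answer in answerers]
    scores = [rater(task, c) for c in candidates]
    best = max(range(len(candidates)), key=lambda i: scores[i])
    return candidates[best]

answerers = [lambda t: "4", lambda t: "5", lambda t: "four"]
rater = lambda t, a: 1.0 if a == "4" else 0.0  # toy rater for "2 + 2"
print(multi_agent_step("What is 2 + 2?", answerers, rater))  # 4
```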

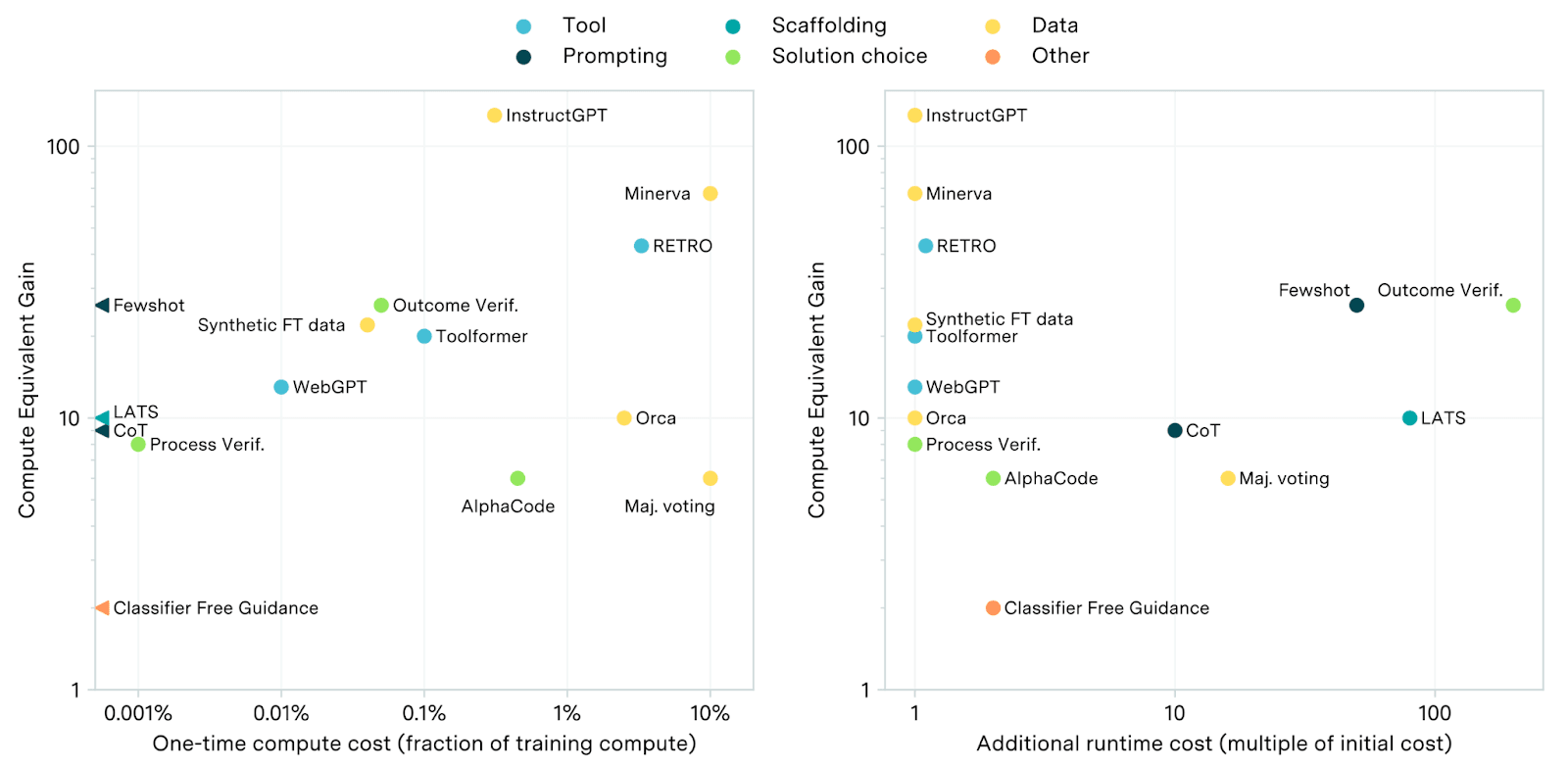

So these are the biggest unhobblings. How powerful have they been?

As you can see, many of these provide gains of 3x to 100x in effective compute.

Let’s take 10x as the average improvement per unhobbling mentioned above. AFAIK, most of them went mainstream between 2020 and 2023, so in just four years, we got over a dozen unhobblings, counting only the ones in this article! And if each one improves effective compute by 10x, that’s a 5,000x improvement per year! Obviously, this is not true because you can’t multiply the increase in performance of all these unhobblings, but it gives you a sense of how impactful they have been.
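The back-of-the-envelope math is easy to reproduce. Assume about 15 unhobblings (roughly the count in this section) at 10x each over four years. That’s 10^15 spread over four years, or 10^3.75 ≈ 5,600x per year. With exactly a dozen, it would be 1,000x. Here’s a minimal Python sketch:

```python
def naive_annual_gain(n_unhobblings: int, gain_each: float, years: float) -> float:
    """Annual multiplier if every unhobbling's gain multiplied together.
    This overstates reality: the gains overlap, so they don't simply stack."""
    return (gain_each ** n_unhobblings) ** (1 / years)

print(round(naive_annual_gain(15, 10, 4)))  # 5623
print(round(naive_annual_gain(12, 10, 4)))  # 1000
```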

The estimate of 0.5 OOMs per year from Situational Awareness sounds quite reasonable, even conservative, given all this.9

Will we continue finding these breakthroughs? How much will AI improve overall in the coming years? And how does that justify salaries over $100M?