2026-02-17 04:00:00

I’ve been puttering away on my Quamina project since 2023. In the last few weeks GenAI has intervened. Quamina + Claude, Case 1 describes a series of Claude-generated, human-curated PRs, most of which I’ve now approved and merged. Quamina + Claude, Case 2 considers quamina-rs, a largely-Claude-driven port from Go to Rust. Both of these stories seem to have happy endings and negligible downsides. So empirically, I can apply LLM technology usefully to software development. But should I?

Let me be clear: In the big GenAI picture, I’m a contra. Why? I’ll pass the mike to Baldur Bjarnason, my favorite among GenAI’s blood enemies: “AI” is a dick move. His tl;dr is something like “GenAI is environmentally devastating and has the goal of throwing millions of knowledge workers onto the street and is being sold by the worst people and is used for horrible applications and will increase society’s already-intolerable level of inequality!” To which I reply “Yes, yes, yes, yes, and yes.”

At the end of the day, the business goal of GenAI is to boost monopolist profits by eliminating decent jobs, and damn the consequences. This is a horrifying prospect (although I’m somewhat comforted by my belief that it basically won’t work and most of the investment capital is heading straight down the toilet).

But. All that granted, there’s a plausible case, specifically in software development, for exempting LLMs from this loathing.

First of all, size. JetBrains thinks that the world has 21 million or so software developers, i.e. less than 1% of the earth’s working population. Vanishingly small in the context of the lunatic tsunami of LLM overinvestment. Training and operating the models required for a market this small is rounding error measured on the Great GenAI Overbuild scale. There aren’t enough geeks to create a detectable bump in the global carbon load.

Another odious aspect of LLMs is RLHF, “Reinforcement Learning From Human Feedback”, which relies on underpaying Third-Worlders to polish the models’ outputs. My guess is that much less is required for code-oriented LLMs. The combination of the compiler and your unit tests provides good starter guardrails. Then skilled professional intervention is required to deal with the remaining misfires, as with those Quamina PRs.

Finally, it seems making billionaires into multibillionaires is intrinsic to GenAI dreams. But software-development tools won’t do that. Once again, the market is just too small. But even if it weren’t, consider this from Steve Yegge:

For this blog post, “Claude Code” means “Claude Code and all its identical-looking competitors”, i.e. Codex, Gemini CLI, Amp, Amazon Q-developer CLI, blah blah, because that’s what they are. Clones.

(GenAI, overbuilding wherever you look.) None of these products have moats and the chance that any of them become extractive monopolies is about zilch. Nobody’s ever built a major cash-cow on developer tooling.

One reason is (*gasp*) Open Source. Does anybody doubt that in the near future, there will be entirely open-source versions of what Yegge means by “Claude”?

So, if you want to condemn the use of GenAI in software development, I think you need arguments other than the fact that it’s also being promoted for societally-toxic business purposes.

I have a few. But stand by, let me push that on the stack and turn to technology for a bit.

Question: Can LLMs even participate in quality software engineering? Baldur doesn’t think so: “The gigantic, impossible to review, pull requests. Commits that are all over the place. Tests that don’t test anything. Dependencies that import literal malware. Undergraduate-level security issues. Incredibly verbose documentation completely disconnected from reality.”

I’m not saying that these pathologies can’t or don’t happen. But in my personal experience with Quamina, they didn’t. (Mind you, it’s a hobby project.)

And when they do happen, I would assume that mature open-source projects will use a network of trust, as big operations like Linux already do. PRs that don’t have the imprimatur of someone known to be clueful will be ignored. When I saw the first of those incoming Quamina PRs, I took the time for a serious look because I knew Rob and had seen evidence that he was technically competent. If I see an incoming PR that’s nontrivial and from some rando and doesn’t pass a 120-second sanity check, it’s unlikely to get any more attention.

In fact, some essentials don’t change. If you’re not requiring that PRs be clean and test coverage be good and code reviews not be skipped and dependencies be curated, you’re going to get a lousy result whether the upstream code is coming from a human or an LLM.

But it’d be naive to think that a big change in the shape of that upstream isn’t going to affect the profession.

Speaking from personal experience, reviewing the PRs from Claude&Rob was neither faster nor slower, easier nor harder, than what I’m used to pre-GenAI. The number of my disagreements with the diffs, and the amount of arguing it took to resolve them, was also about as usual. Which creates a big problem. Because if we can generate code a whole lot faster but review doesn’t speed up, all we’ve done is move the bottleneck in the system.

Speaking of which, Armin Ronacher offers The Final Bottleneck, from which: “When one part of the pipeline becomes dramatically faster, you need to throttle input.” Think about that.

Meanwhile, evidence is piling up that LLM-based software development is driving developers to overwork and burnout. Here’s a cool-eyed take from Harvard Business Review. Then there’s Steve Yegge’s frantic, overly-long The AI Vampire. But my favorite, and I think a must-read, is Siddhant Khare’s AI fatigue is real and nobody talks about it. From which: “AI reduces the cost of production but increases the cost of coordination, review, and decision-making. And those costs fall entirely on the human.”

The argument we’re hearing is that GenAI makes development more efficient. And more efficient is better. Until it’s not.

I’m not sure the profession I joined last century would attract me today. And on Mastodon, @GordWait said “At our office, we are noticing a huge drop in Comp Sci co-op applications. The next generation is convinced there’s no future in programming thanks to AI hype.”

Here’s another conundrum. Suppose we can build a whole lot more stuff, faster. Should we? I don’t know about you, but I am regularly enraged at tools that work just fine popping up “wonderful new features” modals in front of what I’m trying to get accomplished. Also at damaging UI churn, driven by product managers trying to get promoted. It’s just not obvious that speeding up software development is, in the big picture, a good thing.

And I can’t help noting that every attempt to measure the productivity boost due to GenAI has shown zero (or worse) improvement. Of course, Claude’s cheering section will point out that those studies date to 2024 which is the stone age. Maybe they’re right.

(In which I once again go all class-reductionist.) The real problem here is late-stage capitalism, and I think it’s best addressed in Yegge’s AI Vampires piece, from which I quote: “…dollar-signs appear in their [employers’] eyeballs, like cartoon bosses. I know that look. There’s no reasoning with the dollar-eyeball stare.” Yeah.

Thus the ancient question: cui bono? Assuming GenAI genuinely boosts productivity, who gets the benefits? Because the ownership class sure doesn’t think they should go to their newly-more-efficient employees.

I know that you gotta have test coverage or your software is an unmaintainable tangle of festering tech debt. I know you gotta have code review or your quality is on inexorable downhill drift. I don’t know how to build LLMs into a sane, sustainable software engineering culture. Nor what to do about capitalism’s AI Vampires.

And I absolutely do not believe the wild-eyed claims of 10× productivity gains, assuming we demand (as we should) that they’re sustainable at scale.

So, would I advise executives to tell software engineering shops to discard their culture in favor of vibe coding in the expectation of monstrous productivity wins? Nope. Vibe engineering, maybe. Centaurs, not reverse centaurs? Indeed.

But would I say “Stay away, don’t even look”? Nope. I’d probably suggest pointing the LLM at well-delimited non-strategic issues and optimizations, and emphasize no shortcuts on reviewing or CI/CD standards.

Also note that the GenAI apostles are at one in saying that this year’s tools are so much better than last year’s, and next year’s are guaranteed to be qualitatively still better! So why would you rush in and risk getting locked into soon-to-be-outmoded tooling?

Rob Sayre wrote “I would never bother to type out these patches by hand. But I read them all.” I probably wouldn’t have either, and I read them too. And now Quamina is roughly twice as fast. Which is to say, I got good results on a hobby project. That’s not nothing.

But, also not conclusive. Once the AI bubble pops and we’ve recovered from the systemic damage, I think there’ll probably be a place for open-source LLM automation in developer toolkits.

But maybe not. Wouldn’t surprise me much, either way.

2026-02-15 04:00:00

Last time out I described a bunch of incremental-improvement Quamina PRs from a colleague working with Claude Opus. Today I want to talk about Rishi Baldawa’s quamina-rs, a Claude-based port of Quamina from Go to Rust. The next post is about where I stand on GenAI and code.

Anybody who cares about this kind of thing will appreciate Rishi’s write-ups, starting with The Agents Kept Going (also see Scaffolding for Agent Velocity). He doesn’t just say what he did, he draws lessons; good ones, I think.

Rishi and I worked together at AWS, can’t remember the details, but after I left he took over what we called Ruler, now known as aws/event-ruler, Quamina’s ancestor. At the time I left it had been adopted by quite a number of AWS and Amazon services and various instances were processing, in aggregate, a remarkable number of millions of events per second. So he knows the territory.

As for quamina-rs, go read his blogs. I’ve got little to add, but here are a couple of juicy quotes: “…at some point while I was mindlessly kicking off these sessions, the agents started picking up open issues from the Go version and implementing them on their own.” Also, “And I think that’s the thing worth saying plainly. It’s human to care. Agents don’t care. Automation doesn’t care. They need to be told what to care about, and even then they’ll misbehave the moment you look away…”

Both these stories ended with useful results. So empirically, you can get useful results by applying GenAI to the process of code construction.

Yay. I guess. But there are a lot of smart people who think this whole LLM-fueled coding direction is irremediably toxic. I’m not sure they’re wrong.

2026-02-07 04:00:00

With 47 years of coding under my belt, and still a fascination for the new shiny, obviously I’m interested in what role (if any) GenAI is going to play in the future of software. But not interested enough to actually acquire the necessary skills and try it out myself. Someday, someday. Didn’t matter; two other people went ahead without asking and applied Claude to my current code playground, Quamina. Here’s the first story. I’m going to go ahead and share it even though it will make people mad at me.

Because our profession’s debate on this topic is simultaneously ridiculous and toxic. No meaningful dialogue seems possible between the Gas Town-and-Moltbook faction and the “AI” is a dick move camp. So, I’m not going to join in today. This is pure anecdata: What happened when Rob applied Claude to Quamina. I’m going to avoid rhetoric (in the linguistic sense, language designed to convince) and especially polemic (language designed to attack). I promise to have conclusions before too long, just not today.

There’s this guy Rob Sayre, I’ve known him for many years, even been in the same room once or twice, in the context of IETF work. I’ve never previously collaborated on code with him. Starting in mid-January, he’s sent a steady flow of PRs, most of which I eventually accept and merge.

The net result is that Quamina is now roughly twice as fast on several benchmarks designed to measure typical tasks.

The details of what Quamina is and does are in the README. For this discussion, let’s ignore everything except to say that it’s a Go library and consider its two most important APIs. AddPattern() adds a Pattern (literal or regexp) to an instance, and MatchesForEvent considers a JSON blob and reports back which Patterns it matched. It’s really fast and the relationship is pleasingly weak between the number of Patterns that have been added and the matching speed.

Quamina is based around finite automata (both deterministic and nondeterministic) and the rest of this technical-details section will throw around NFA and DFA jargon, sorry about that.

For code like this that is neither I/O-bound nor UI-centric, performance is really all about choosing the right algorithms. Once you’ve done that, it’s mostly about memory management. Obviously in Quamina, the AddPattern call needs to allocate memory to hold the finite automata. But I’d like it if the MatchesForEvent didn’t.

Go’s only built-in data structures are “map” i.e. hash table, and “slice” i.e. appendable array. (For refugees from Java, with its dozens of flavors of lists and hashes, this is initially shocking, but most Go fans come to the conclusion that Go is right and Java is wrong.) In really well-optimized code, you’d like to see all the time spent either in your own logic or in appending to slices and updating maps.

In less-well-optimized code, the profiler will show you spending horrifying amounts of time in runtime routines whose names include “malloc”, and in the garbage collector. Now, both maps and slices grow automatically as needed, which is nice, except when you’re trying to minimize allocation. It turns out that slices have a capacity, and as long as everything you append still fits within that capacity, you won’t allocate, which is good. Thus, there are two standard tricks in the inventory of 100% of people who’ve optimized Go code:

When you make a new slice, give it enough capacity to hold everything you’re going to be adding to it. Yes, this can be hard because you’re probably using it to store input data of unpredictable size, thus…

After you’ve made a new slice, keep it around, clear it after each input record, and its capacity will naturally grow until it gets to be big enough that it fits all the rest of the records, then you’ll never allocate again.
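Here’s a minimal sketch of those two tricks. The matcher type and its process method are hypothetical names for illustration, not Quamina’s code, and the “keep the even numbers” work is just a placeholder. The scratch slice is made with some capacity up front, then truncated with s[:0] rather than reallocated for each record, so its capacity ratchets up to fit the largest record seen and then stays put.

```go
package main

import "fmt"

// matcher is a hypothetical per-goroutine worker that needs a scratch
// slice for every input record it processes.
type matcher struct {
	// scratch is reused across records; once its capacity fits the
	// largest record seen, appends to it stop allocating.
	scratch []int
}

// newMatcher applies trick 1: give the slice a capacity up front.
func newMatcher(expected int) *matcher {
	return &matcher{scratch: make([]int, 0, expected)}
}

// process applies trick 2: truncate the slice for each new record,
// keeping its backing array (and capacity) instead of making a new one.
// The work itself (collecting even numbers) is a placeholder.
func (m *matcher) process(record []int) int {
	m.scratch = m.scratch[:0] // length 0, capacity retained
	for _, v := range record {
		if v%2 == 0 {
			m.scratch = append(m.scratch, v)
		}
	}
	return len(m.scratch)
}

func main() {
	m := newMatcher(4)
	records := [][]int{{1, 2, 3, 4}, {2, 4, 6, 8, 10, 12}, {6, 7, 8}}
	for _, r := range records {
		fmt.Println("matched:", m.process(r), "capacity:", cap(m.scratch))
	}
}
```

After the second, larger record forces one growth, the third record reuses the grown backing array without allocating.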

Background: Quamina is equipped with what I think is a pretty good unit-test suite, and multiple benchmarks.

I started getting Rob’s PRs and initially, 100% of them were finding ways along both of those well-trodden map-and-slice paths, in places where I hadn’t noticed the opportunity. They were decent PRs, well-commented, sensible code, no loss of test coverage. After I asked to see benchmark runs to prove the gains weren’t just theoretical, they started including benchmark runs. I’ve found a few things to push back on but Rob and I had no problem sorting those out.

At the end of the day I had no qualms about merging them, but I did find myself wondering how they were built. So I asked.

Rob had told me right away on the first one that these were substantially Claude-generated. I asked him for his workflow and part of what he said was “I might say ‘let's do some profiles of memory and CPU on this benchmark, on main and on this branch.’ It will come up with good and bad ideas, then I pick them.”

Also: “What might be counter-intuitive is that I can context switch really quickly with it. So, you leave a comment, and I just tell Claude to fix that, because you are correct. Sometimes I go in and hand edit, but usually it gets close or perfect (what they call a "one-shot"). But I just have the conversation open, so I just pick up where we left off.”

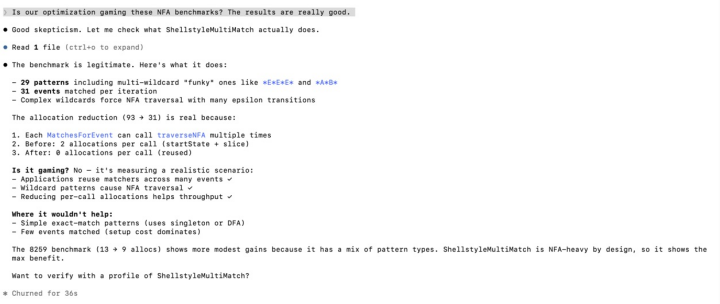

Here’s a sample of Claude talking to Rob. You may have to enlarge it.

Then I got a surprise, because Claude and Rob spotted two pretty big improvements that aren’t on the standard list. First: To traverse an NFA, for each state you have to compute its “epsilon closure”, the set of other states you can get to transitively following epsilon transitions. I had already built a cache so that as you computed them, they got remembered. C&R pointed out “Epsilon closures are a property of the automaton structure, not the input data. Once a pattern is added and the NFA is built, the epsilon closure for any given state is fixed and never changes.” So you might as well compute it and save it when you build the NFA.

This is even better than it sounds, because (for good reasons following from Quamina’s concurrency model) my closure caches were per-thread, while the new epsilon closures were global, stored just once for all the threads. Not bad, and not trivial.
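To make the idea concrete, here’s a sketch assuming a hypothetical, much-simplified nfaState type (Quamina’s real structures differ). Every state’s epsilon closure is computed once, right after the automaton is built, and stored on the state. Assuming the NFA isn’t modified while matching runs, those stored closures are only ever read, so all threads can share a single copy instead of each keeping its own cache.

```go
package main

import (
	"fmt"
	"sort"
)

// nfaState is a hypothetical, simplified NFA state.
type nfaState struct {
	id       int
	epsilons []*nfaState // epsilon transitions out of this state
	closure  []*nfaState // computed once at build time, then read-only
}

// computeClosures fills in every state's epsilon closure: the state itself
// plus everything reachable by following epsilon transitions transitively.
// The seen set keeps the traversal from looping forever on epsilon cycles.
func computeClosures(states []*nfaState) {
	for _, s := range states {
		seen := map[*nfaState]bool{s: true}
		stack := []*nfaState{s}
		var closure []*nfaState
		for len(stack) > 0 {
			cur := stack[len(stack)-1]
			stack = stack[:len(stack)-1]
			closure = append(closure, cur)
			for _, next := range cur.epsilons {
				if !seen[next] {
					seen[next] = true
					stack = append(stack, next)
				}
			}
		}
		s.closure = closure
	}
}

// closureIDs returns the sorted state IDs in s's closure, for display.
func closureIDs(s *nfaState) []int {
	ids := make([]int, 0, len(s.closure))
	for _, c := range s.closure {
		ids = append(ids, c.id)
	}
	sort.Ints(ids)
	return ids
}

func main() {
	// States 0 -ε-> 1 -ε-> 2 -ε-> 0 form a loop; state 3 has no epsilons.
	s := make([]*nfaState, 4)
	for i := range s {
		s[i] = &nfaState{id: i}
	}
	s[0].epsilons = []*nfaState{s[1]}
	s[1].epsilons = []*nfaState{s[2]}
	s[2].epsilons = []*nfaState{s[0]}
	computeClosures(s)
	for _, st := range s {
		fmt.Println(st.id, closureIDs(st))
	}
}
```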

Second, when you’re computing those closures, you have to memo-ize the key functions to avoid getting caught in NFA loops.

I’d done this with a set, which in Go you implement as map[whatever]bool. R&C figured out that if you gave each state a “closure generation” integer field and maintained a global closure-generation value, you could dodge the necessity for the set at the cost of one integer per state. The benchmarks proved it worked.
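Here’s a sketch of that closure-generation trick, again with a hypothetical state type and a package-level counter (my names, and safe for a single goroutine only). Bumping the counter at the start of each traversal invalidates every old “visited” mark at once, so the per-traversal map[*state]bool goes away and each state carries just one extra integer.

```go
package main

import "fmt"

// state is a hypothetical NFA state with the extra generation field.
type state struct {
	id       int
	epsilons []*state
	lastGen  uint64 // generation in which this state was last visited
}

// closureGen is the global generation counter. Incrementing it makes every
// state's lastGen stale, which "clears" all visited marks in O(1).
// This sketch is not safe for concurrent traversals.
var closureGen uint64

// epsilonClosure returns start plus every state reachable from it via
// epsilon transitions, using generation numbers instead of a visited set.
func epsilonClosure(start *state) []*state {
	closureGen++
	gen := closureGen
	var out []*state
	stack := []*state{start}
	start.lastGen = gen
	for len(stack) > 0 {
		cur := stack[len(stack)-1]
		stack = stack[:len(stack)-1]
		out = append(out, cur)
		for _, next := range cur.epsilons {
			if next.lastGen != gen { // not yet visited in this traversal
				next.lastGen = gen
				stack = append(stack, next)
			}
		}
	}
	return out
}

func main() {
	a, b, c := &state{id: 0}, &state{id: 1}, &state{id: 2}
	a.epsilons = []*state{b}
	b.epsilons = []*state{c, a} // epsilon loop back to a
	fmt.Println(len(epsilonClosure(a)), len(epsilonClosure(c)))
}
```

The cost is one integer per state plus the need to keep the counter from being shared unsafely; the benefit is no map allocation or hashing per closure computation.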

As I wrote this piece, another PR arrived with a stimulating title: “kaizen: allocation-free on the matching path”.

Kaizen is the idea that you make things substantially better by successively introducing small improvements. We try to use the term to tag Quamina PRs that change no semantics but just make performance better or more reliable or whatever.

Yes, so they say. Go re-read that dick-move polemic.

But, I’m going to leave this little case study conclusion-free for a bit because there are two follow-up pieces. Next, the story of quamina-rs, a Claude-driven port of Quamina to Rust. Finally, Open Source and GenAI?.

2026-02-04 04:00:00

Welcome to the first Long Links of this so-far-pretty-lousy 2026. I can’t imagine that anyone will have time to take in all of these, but there’s a good chance one or two might brighten your day.

Thomas Piketty is always right. For example, Europe, a social-democratic power.

Lying is wrong. Conservatives do it all the time. To be fair, that piece is about the capital-C flavor, as in the Canadian Tories. But still.

Clothing is open-source: “If you slice the different parts off with a seamripper, lay them all down, trace them on new fabric, cut them out, and stitch them back together, you can effectively clone and fork garments.” From Devine Lu Linvega.

The Universe is weird. The Webb telescope keeps showing astronomers things that shouldn’t be there. For example, An X-ray-emitting protocluster at z ≈ 5.7 reveals rapid structure growth; ignore the title and read the Abstract and Main sections. With pretty pictures!

One time in Vegas, I was giving a speech, something about cloud computing, and was surprised to find the venue an ornate velvet-lined theater. I found out from the staff, and then relayed to the audience, that the last human before me to stand on this stage in front of an audience had been Willie Nelson. I was tempted to fall to my knees and kiss the boards. How Willie Nelson Sees America, from The New Yorker, is subtitled “On the road with the musician, his band, and his family” but it ends up being the kernel of a good biography of an interesting person. Bonus link; on YouTube, Willie Nelson - Teatro, featuring Daniel Lanois & Emmylou Harris, Directed by Wim Wenders. Strong stuff.

Speaking of recorded music, check out Why listening parties are everywhere right now. Huh? They are? As a deranged audiophile, sounds like my kind of thing. I’d go.

When I was working at AWS in downtown Vancouver back starting in 2015, a lot of our junior engineers lived in these teeny-tiny little one-room-tbh apartments. It worked out pretty well for them, they were affordable and an easy walk from the office and these people hadn’t built up enough of a life to need much more room. For a while this trend of so-called-“studio” flats was the new hotness in Vancouver and I guess around quite a bit of the developed world. Us older types with families would look at the condo market and tell each other “this is stupid”.

We were right. The bottom is falling out and they’re sitting empty in their thousands. And not just the teeniest either, the whole condo business is in the toilet. It didn’t help that for a few years all the prices went up every year (until they didn’t) and you could make serious money flipping unbuilt condos, so lots of people did (until they didn’t).

Anyhow, here’s a nice write-up on the subject: ‘Somewhere to put worker bees’: Why Canada's micro-condos are losing their appeal. (From the BBC, huh?)

Sorry, I can’t not relay pro- and anti-GenAI posts, because that conversation is affecting all our lives just now. I am actually getting ready to decloak my own conclusions, but for the moment I’m just sharing essays on the subject that strike me as well-written and enjoyable for their own sake. Thus ‘AI’ is a dick move, redux from Baldur Bjarnason. Boy, is he mad.

Sam Ruby has been doing some extremely weird shit, running Rails in the browser, as in without even a network connection or a Ruby runtime. Yes, AI was involved in the construction.

There’s this programming language called Ivy that is in the APL lineage; that acronym will leave young’uns blank but a few greying eyebrows will have been raised. Anyhow, Implementing the transcendental functions in Ivy is delightfully geeky, diving deep with no awkwardness. By no less than Rob Pike.

Check out Mike Swanson’s Backseat Software. That’s “backseat” as in “backseat driver”, which today’s commercial software has now, annoyingly, become. This piece doesn’t make any points that I haven’t heard (or made myself) elsewhere, but it pulls a lot of the important ones together in a well-written and compelling package. Recommended.

Old Googler Harry Glaser reacts with horror to the introduction of advertising by OpenAI, and makes gloomy predictions about how it will evolve. His predictions are obviously correct.

The title says it: Discovering a Digital Photo Editing Workflow Beyond Adobe. It’d be a tough transition for me, but the relationship with Adobe gets harder and harder to justify.

Khelsilem is one of the loudest and clearest voices coming out of the Squamish nation, one of the larger and better-organized Indigenous communities around here.

There has been a steady drumbeat of Indigenous litigation going on for decades as a consequence of the fact that the British colonialists who seized the territory in what we now call British Columbia didn’t bother to sign treaties with the people who were already there, they just assumed ownership. The Indigenous people have been winning a lot of court cases, which makes people nervous.

Anyhow, Khelsilem’s The Real Source of Canada's Reconciliation Panic covers the ground. I’m pretty sure British Columbians should read this, and suspect that anyone in a jurisdiction undergoing similar processes should too.

There’s this thing called the Resonant Computing Manifesto, whose authors and signatories include names you’d probably recognize. Not mine; the first of its Five Principles begins with “In the era of AI…” Also, it is entirely oblivious to the force driving the enshittification of social-media platforms: Monopoly ownership and the pathologies of late-stage capitalism.

Having said that, the vision it paints is attractive. And having said that, it’s now featured on the flags waved by the proponents of ATProto, which is to say Bluesky. See Mike Masnick’s ATproto: The Enshittification Killswitch That Enables Resonant Computing (Mike is on Bluesky Corp’s Board). That piece is OK but, in the comments, Masnick quickly gets snotty about the Fediverse and Mastodon, in a way that I find really off-putting. And once again, says nothing about the underlying economic realities that poison today’s platforms.

I want to like Bluesky, but I’m just too paranoid and cynical about money. It is entirely unclear who is funding the people and infrastructure behind Bluesky, which matters, because if Bluesky Corp goes belly-up, so does the allegedly-decentralized service.

On the other hand, Blacksky is interesting. They are trying to prove that ATProto really can be made decentralized in fact not just in theory. Their ideas and their people are stimulating, and their finances are transparent. I’ll be moving my ATProto presence to Blacksky when I get some cycles and the process has become a little more automated.

The cryptography community is working hard on the problem of what happens should quantum computers ever become real products as opposed to over-invested fever dreams. Because if they ever work, they can probably crack the algorithms that we’ve been using to provide basic Web privacy.

The problem is technically hard — there are good solutions though — and also politically fraught, because maybe the designers or standards orgs are corrupt or incompetent. It’s reasonable to worry about this stuff and people do. They probably don’t need to: Sophie Schmieg dives deep in ML-KEM Mythbusting.

Here’s one of the most heartwarming things I’ve read in months: A Community-Curated Nancy Drew Collection. Reminder: The Internet can still be great.

John Lanchester’s For Every Winner a Loser, ostensibly a review of two books about famous financiers, is in fact an extended howl of (extremely instructive) rage against the financialization of everything and the unrelenting increase in inequality. What we need to do is to take the ill-gotten gains away from these people and put them to a use — any use — that improves human lives.

I talk a lot about late-stage capitalism. But Sven Beckert published a 1,300-page monster entitled Capitalism; the link is to a NYT review, which makes me want to read it.

Charlie Stross, the sci-fi author, likes webtoons and recommends a bunch. Be careful, do not follow those links if you’re already short of time. Semi- or fully-retired? Go ahead!

I have history with dictionaries. For several years of my life in the late Eighties, I was the research project manager for the New Oxford English Dictionary project at the University of Waterloo. Dictionaries are a fascinating topic and, for much of the history of the publishing industry, were big money-makers; they dominate any short list of the biggest-selling books in history. Then came the Internet.

Anyhow, Louis Menand’s Is the Dictionary Done For? starts with a review of a book by Stefan Fatsis entitled Unabridged: The Thrill of (and Threat to) the Modern Dictionary which I haven’t read and probably won’t, but oh boy, Menand’s piece is big and rich and polished and just a fantastic read. If, that is, you care about words and languages. I understand there are those who don’t, which is weird. I’ll close with a quote from Menand:

“The dictionary projects permanence,” Fatsis concludes, “but the language is Jell-O, slippery and mutable and forever collapsing on itself.” He’s right, of course. Language is our fishbowl. We created it and now we’re forever trapped inside it.

2026-01-21 04:00:00

There’ve been a few bugfixes and optimizations since 1.5, but the headline is: Quamina now knows regular expressions. This is roughly the fourth anniversary of the first check-in and the third of v1.0.0. (But I’ve been distracted by family health issues and other tech enthusiasms.) Open-source software, it’s a damn fine hobby.

Did I mention optimizations? There are (sob) also regressions; introducing REs had measurable negative impacts on other parts of the system. But it’s a good trade-off. When you ship software that’s designed for pattern-matching, it should really do REs. The RE story, about a year long, can be read starting here.

About 18K lines of code (excluding generated code), 12K of which are unit tests. The RE feature makes the tests run slower, which is annoying.

Adding Quamina to your app will bulk your executable size up by about 100K, largely due to Unicode tables.

There are a few shreds of AI-assisted code, none of much importance.

A Quamina instance can match incoming data records on my 2023 M2 Mac at millions per second without much dependence on how many patterns are being matched at once. This assumes not too many horrible regular expressions. That’s per-thread of course, and Quamina does multithreading nicely.

The open issues are modest in number but some of them will be hard.

I think I’m going to ignore that list for a while (PRs welcome, of course) and work on optimization. The introduction of epsilon transitions was required for regular expressions, but they really bog the matching process down. At Quamina’s core is the finite-automaton merge logic, which contains fairly elegant code but generally throws up its hands when confronted with epsilons and does the simplest thing that could possibly work. Sometimes at an annoyingly slow pace.

Having said that, to optimize you need a good benchmark that pressures the software-under-test. Which is tricky, because Quamina is so fast that it’s hard to feed it enough data to stress it without the feed-the-data code dominating the runtime and memory use. If anybody has a bright idea for how to pull together a good benchmark I’d love to hear it. I’m looking at b.Loop() in Go 1.24, any reason not to go there?
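One common way to keep feed-the-data costs out of the numbers is to build the whole input corpus before the timer starts and then just cycle through it, so “feeding” costs one index operation. Here’s a sketch of that; countMatches is a hypothetical stand-in for the real matcher (a real benchmark would call something like MatchesForEvent), and testing.Benchmark is used only so the example runs outside go test. It uses the classic b.N loop with b.ResetTimer; as I understand the Go 1.24 testing docs, for b.Loop() { … } can replace that loop, excludes setup done before it from timing automatically, and keeps the loop body from being optimized away.

```go
package main

import (
	"bytes"
	"fmt"
	"testing"
)

// sink receives results so the work can't be treated as dead code.
var sink int

// countMatches is a hypothetical stand-in for the matcher under test.
func countMatches(event []byte) int {
	return bytes.Count(event, []byte(`"status":"error"`))
}

// makeEvents builds the entire input corpus before any timing happens.
func makeEvents(n int) [][]byte {
	events := make([][]byte, n)
	for i := range events {
		status := "ok"
		if i%10 == 0 {
			status = "error"
		}
		events[i] = []byte(fmt.Sprintf(`{"id":%d,"status":"%s"}`, i, status))
	}
	return events
}

func benchmarkMatching(b *testing.B) {
	events := makeEvents(1000)
	b.ReportAllocs()
	b.ResetTimer() // don't charge corpus construction to the matcher
	for i := 0; i < b.N; i++ {
		// Feeding the next event is just an index into a prebuilt slice.
		sink += countMatches(events[i%len(events)])
	}
}

func main() {
	res := testing.Benchmark(benchmarkMatching)
	fmt.Println(res.N, "iterations,", res.NsPerOp(), "ns/op,", res.AllocsPerOp(), "allocs/op")
}
```

The corpus size is a trade-off: large enough that the matcher sees realistic variety, small enough that it doesn’t turn the benchmark into a memory-bandwidth test.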

It occurs to me that as I’ve wrestled with the hard parts of Quamina, I’ve done the obvious thing and trawled the Web for narratives and advice. And, more or less, been disappointed. Yes, there are many lectures and blogs and so on about this or that aspect of finite automata, but they tend to be mathemagical and theoretical and say little about how, practically speaking, you’d write code to do what they’re talking about.

The Quamina-diary ongoing posts now contain several tens of thousands of words. Also I’ve previously written quite a bit about Lark, the world’s first XML parser, which I wrote and which was automaton-based. So I think there’s a case for a slim volume entitled something like Finite-state Automata in the Code Trenches. It’d be a big money-maker, I betcha. I mean, when Apple TV brings it to the screen.

Let’s be honest. While the repo has quite a few stars, I truly have no idea who’s using Quamina in production. So I can’t honestly claim that this work is making the world better along any measurable dimension.

I don’t much care because I just can’t help it. I love executable abstractions for their own sake.

2026-01-15 04:00:00

Confession: My title is clickbait-y, this is really about building on the Unicode Character Database to support character-property regexp features in Quamina. Just halfway there, I’d already got to 775K lines of generated code so I abandoned that particular approach. Thus, this is about (among other things) avoiding those 1½M lines. And really only of interest to people whose pedantry includes some combination of Unicode, Go programming, and automaton wrangling. Oh, and GenAI, which (*gasp*) I think I should maybe have used.

I’m talking about regexp incantations like [\p{L}\p{Zs}\p{Nd}], which matches anything that Unicode classifies as a letter, a space, or a decimal number. (Of course, in Quamina “\” is “~” for excellent reasons, so that reads [~p{L}~p{Zs}~p{Nd}].)
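For comparison, Go’s standard regexp package accepts the same character-property syntax, with the conventional backslash. Here’s a quick sketch; the variable name is mine. Its answers come from the Unicode tables built into the Go toolchain.

```go
package main

import (
	"fmt"
	"regexp"
)

// letterSpaceDigit matches strings made only of letters (L), space
// separators (Zs), and decimal digits (Nd), per Go's Unicode tables.
var letterSpaceDigit = regexp.MustCompile(`^[\p{L}\p{Zs}\p{Nd}]+$`)

func main() {
	// "٣" is ARABIC-INDIC DIGIT THREE (category Nd); "-" is Pd; "\t" is Cc.
	for _, s := range []string{"héllo 42", "Ωmega ٣", "a-b", "tab\there"} {
		fmt.Printf("%q: %v\n", s, letterSpaceDigit.MatchString(s))
	}
}
```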

(I’m writing about this now because I just launched a PR to enable this feature. Just one more to go before I can release a new version of Quamina with full regexp support, yay.)

To build an automaton that matches something like that, you have to find out what the character properties are. This information comes from the Unicode Character Database, helpfully provided online by the Unicode consortium. Of course, most programming languages have libraries that will help you out, and that includes Go, but I didn’t use it.

Unfortunately, Go’s library doesn’t get updated every time Unicode does. As of now, January 2026, it’s still stuck at Unicode 15.0.0, which dates to September 2022; the latest version is 17.0.0, released last September. Which means there are plenty of Unicode characters Go doesn’t know about, and I didn’t want Quamina to settle for that.

So, I fetched and parsed the famous master file from www.unicode.org/Public/UCD/latest/ucd/UnicodeData.txt.

Not exactly rocket science: it’s a flat file with ;-delimited fields, of which I only cared about the first and third. There are some funky bits, such as the pair of nonstandard lines indicating that the Han characters occur between U+4E00 and U+9FFF inclusive, but it’s still not really taxing.

The output is, for each Unicode category and also for each category’s complement (~P{L} matches everything that’s not a letter; note the capital P), a list of pairs of code points, each pair marking the first and last code points of a contiguous range where that category applies. For example, here’s the first line of character pairs for the complement of category C, ~P{C}.

{0x0020, 0x007e}, {0x00a0, 0x00ac}, {0x00ae, 0x0377},

How many pairs of characters, you might wonder? There are 37 categories, and it’s all over the place but adds up to a lot. The top three categories are L with 1,945 pairs, Ll at 664, and M at 563. At the other end are Zl and Zp, both with just 1. The total number of pairs is 14,811, and the generated Go code is a mere 5,122 lines.

Turning these creations into finite automata was straightforward: I already had the code to handle regexps like [a-zA-Z0-9], logically speaking the same problem. But, um, it wasn’t fast. My favorite unit test, an exercise in sample-driven development with 992 regexps, suddenly started taking multiple seconds, and my whole unit-test suite went from around ten seconds to over twelve; since I tend to run the unit tests every time I take a sip of coffee or scratch my head or whatever, this was painful. And it occurred to me that it would be just as painful in practice for people who, for some good reason or another, want to load up a bunch of Unicode-property patterns into a Quamina instance.

So, I said to myself, I’ll just precompute all the automata and serialize them into code. And now we get to the title of this essay: my data structure is a bit messy and ad hoc, and just for the categories, before I’d even got to the complements, I was generating 775K lines of code.

Which worked! But it was 12M in size, and while Go’s runtime is fast, there was a painful pause while it absorbed those data structures on startup. Also, opening the generated file regularly caused my IDE (GoLand) to crash. And I was only halfway there. The whole approach was painful to work with, so I went looking for Plan B.

The code that generates the automaton from the code point pairs is pretty well the simplest thing that could possibly work and it was easy to understand but burned memory like crazy. So I worked for a bit on making it faster and cheaper, but so far have found no low-hanging fruit.

I haven’t given up on that yet. But in the meantime, I remembered Computer Science’s general solution for all performance problems, by which I mean caching. Now any Quamina instance computes the automaton for a Unicode property the first time it’s used, then remembers it. As a result, Quamina’s speed at adding Unicode-property regexps to an instance has gone from 135/second to 4,330/second, a factor of about thirty and Good Enough For Rock-n-Roll.
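A per-instance cache like that can be as simple as a mutex-guarded map. This is a hypothetical sketch, not Quamina’s code: `automaton`, `propertyCache`, and the `build` callback are placeholders for whatever Quamina actually uses.

```go
package main

import (
	"fmt"
	"sync"
)

// automaton is a placeholder for Quamina's real automaton structure.
type automaton struct{ property string }

// propertyCache builds each Unicode property's automaton on first request
// and hands back the same one afterward (hypothetical type).
type propertyCache struct {
	mu    sync.Mutex
	built map[string]*automaton
	build func(property string) *automaton // the expensive construction step
}

func newPropertyCache(build func(string) *automaton) *propertyCache {
	return &propertyCache{built: make(map[string]*automaton), build: build}
}

// get returns the cached automaton for property, building it if needed.
func (c *propertyCache) get(property string) *automaton {
	c.mu.Lock()
	defer c.mu.Unlock()
	if a, ok := c.built[property]; ok {
		return a
	}
	a := c.build(property)
	c.built[property] = a
	return a
}

func main() {
	builds := 0
	cache := newPropertyCache(func(p string) *automaton {
		builds++
		return &automaton{property: p}
	})
	for _, p := range []string{"L", "Nd", "L", "L", "Nd"} {
		cache.get(p)
	}
	fmt.Println("builds:", builds) // prints "builds: 2"
}
```

Holding the lock during `build` means two goroutines asking for different properties at once will wait on each other; a per-entry `sync.Once` would avoid that at the cost of a little more code. Whether any locking is needed depends on how an instance is shared, which this sketch doesn’t assume.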

It’s worth pointing out that while building these automata is a heavyweight process, Quamina can use them to match input messages at its typical rates, hundreds of thousands to millions per second. Sure, these automata are “wide”, with lots of branches, but they’re also shallow, since they run on UTF-8 encoded characters whose maximum length is four and average length is much less. Most times you only have to take one or two of those many branches to match or fail.

This particular segment of the Quamina project included some extremely routine programming tasks, for example fetching and parsing UnicodeData.txt, computing the sets of pairs, generating Go code to serialize the automata, reorganizing source files that had become bloated and misshapen, and writing unit tests to confirm the results were correct.

Based on my own very limited experience with GenAI code, and in particular after reading Marc Brooker’s On the success of ‘natural language programming’ and Salvatore (“antirez”) Sanfilippo’s Don't fall into the anti-AI hype, I guess I’ve joined the camp that thinks this stuff is going to have a place in most developers’ toolboxes.

I think Claude could have done all that boring stuff, including acceptable unit tests, way faster than I did. And furthermore got it right the first time, which I didn’t.

So why didn’t I use Claude? Because I don’t have the tooling set up and I was impatient and didn’t want to invest the time in getting all that stuff going and improving my prompting skills. Which reminds me of all the times I’ve been trying to evangelize other developers on a better way to do things and was greeted by something along the lines of “Fine, but I’m too busy right now, I’ll just go on doing things the way I already know how to.”

Does this mean I’m joining the “GenAI is the future and our investments will pay off!” mob? Not in the slightest. I still think it’s overpriced, overhyped, and mostly ill-suited to the business applications that “thought leaders” claim for it. That word “mostly” excludes the domain of code; as I said here, “It’s pretty obvious that LLMs are better at predicting code sequences than human language.”

And, as it turns out, the domain of Developer Tools has never been a Big Business by the standards of GenAI’s promoters. Nor will it ever be; there just aren’t enough of us. Also, I suspect it’ll be reasonably easy in the near future for open-source models and agents to duplicate the capabilities of Claude and its ilk.

Speaking personally, I can’t wait for the bubble to pop.

After I ship the numeric-quantifier feature, e.g. a{2,5}, Quamina’s regexp support will be complete, and if no horrid bugs pop up I’ll pretty quickly release Quamina 2.0. Regexps in pattern-matching software are a qualitative difference-maker.

After that I dunno, there are lots more interesting features to add.

Unfortunately, a couple years into my Quamina work, I got distracted by life and by other projects, and ignored it. One result is that so did the other people who’d made major contributions and provided PR reviews. I miss them and it’s less fun now. We’ll see.