Tableau puts self-service visual analytics within reach of everyone, from small businesses with just a few licenses to massive enterprises deploying it across their entire organization. But as adoption grows, activity increases. Users generate large volumes of content. More dashboards are rendered, and more databases are queried. Groups and permissions become more complex. Inevitably, a point comes when someone is needed to lead, organize, and shepherd these efforts, lest they devolve into an ungoverned mess that does more harm than good.

Having spent a good portion of my career as a Tableau administrator myself, I believe that every admin has three core jobs: keeping the platform safe, keeping it functional, and making it valuable to its users. This means securing the platform from bad actors (external or internal), complying with regulatory requirements, monitoring for operational issues and performance degradations, and ensuring everyone gets the most value by using the platform efficiently.

Observability for enterprises

Doing all those things is easier said than done, especially for large deployments with hundreds, or even thousands, of users working across time zones, geographies, and sites. When the platform you administer reaches this scale, the traditional built-in tools and processes that worked well at the beginning often just aren’t fit for purpose anymore. Working one-on-one with users and clicking through pages manually can work when you have hundreds of users, but it’s not sustainable when you have thousands. It’s at this point that admins need true observability: access to data about what is happening inside their application at scale. For many admins, this data-based approach means integrating event logs into SIEM tools for monitoring, loading them into data warehouses for usage analytics, and building automation to execute tasks autonomously.

For some time, the Activity Log feature of Tableau Cloud has helped meet some of those needs. With Tableau 2025.3, we're taking a huge step forward in giving Tableau Cloud admins more observability data than ever before: the Platform Data API!

Introducing the Tableau Cloud Platform Data API

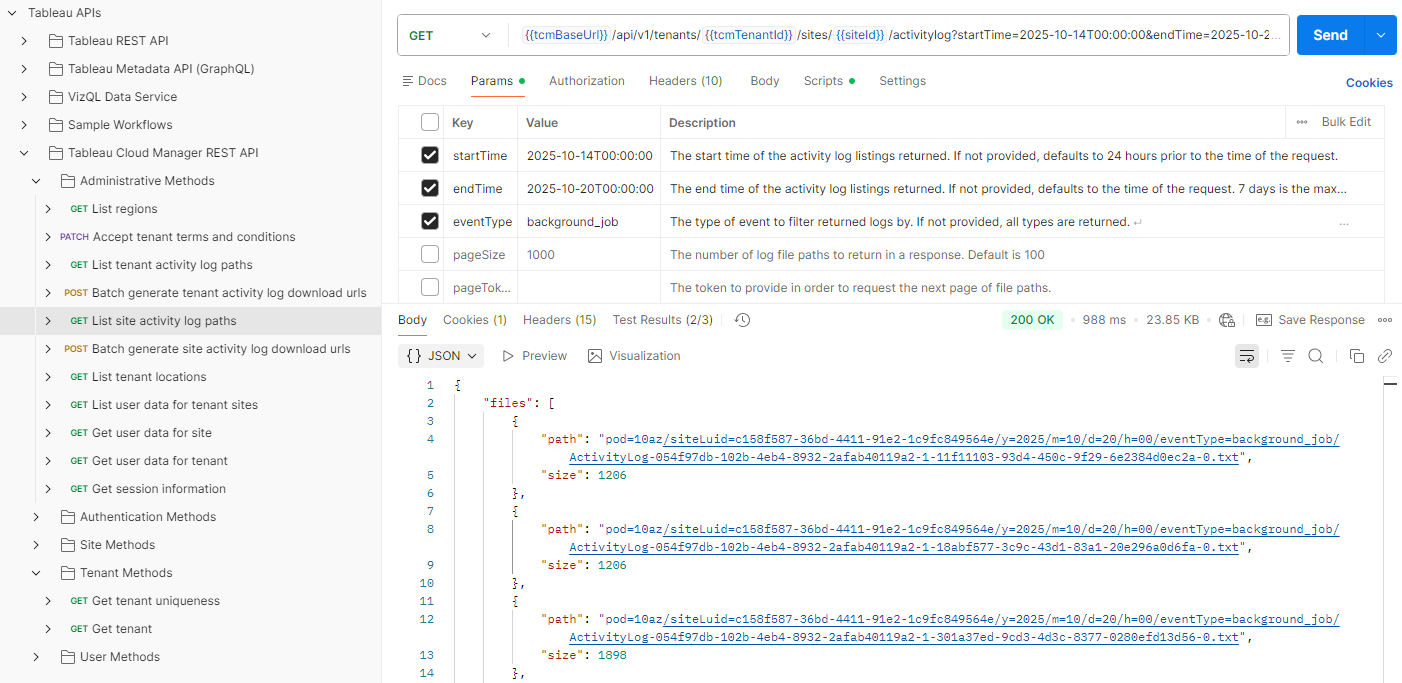

Accessible via new endpoints in Tableau Cloud Manager (TCM), the Platform Data API provides a central, unified way to retrieve event log data from your entire Tableau Cloud deployment. Want to see who logged into TCM to create a new site? There’s auditing for that. How about a permissions change someone made to their workbook? You can pull that data, too. What about monitoring extract refreshes? We’ve got you covered (including the ones that run on Bridge)!

Unlike "push" integrations that only support specific platform vendors, our API allows you to "pull" data down to store, integrate, and analyze it using the systems you prefer. This platform-agnostic approach means more customers can benefit from this observability data. It also gives our partners and DataDev community a standardized way to create new tools and products that enrich the Tableau ecosystem for everyone.
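To make the "pull" idea concrete, here is a minimal Python sketch of a cursor-based polling loop. It is an illustration only, not the Platform Data API itself: the page shape (`events`, `next_cursor`), the event type names, and the `fake_fetch` stand-in are all hypothetical, so check the TCM REST API reference for the real endpoints, parameters, and response fields.

```python
from typing import Callable, Iterator, Optional

# Hypothetical page shape (an assumption, not the documented response):
#   {"events": [...], "next_cursor": "<token>" or None}
Page = dict


def pull_events(
    fetch_page: Callable[[Optional[str]], Page],
    start_cursor: Optional[str] = None,
) -> Iterator[dict]:
    """Yield events page by page until the source returns no further cursor."""
    cursor = start_cursor
    while True:
        page = fetch_page(cursor)
        yield from page.get("events", [])
        cursor = page.get("next_cursor")
        if cursor is None:
            break


# Stand-in for a real HTTP call. A real client would send an authenticated
# request to the TCM endpoints here; the event types below are made up.
_FAKE_PAGES = {
    None: {"events": [{"id": 1, "type": "login"}], "next_cursor": "p2"},
    "p2": {
        "events": [
            {"id": 2, "type": "permission_change"},
            {"id": 3, "type": "extract_refresh"},
        ],
        "next_cursor": None,
    },
}


def fake_fetch(cursor: Optional[str]) -> Page:
    return _FAKE_PAGES[cursor]


events = list(pull_events(fake_fetch))
```

Because the loop only depends on a `fetch_page` callable, the same code can feed a SIEM forwarder, a warehouse loader, or a local file, which is the point of a platform-agnostic pull model.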

I'm excited to share that this feature is available for all customers of Tableau Cloud! This means that for the first time ever, any customer, regardless of edition, can access the same observability data using the new API. We know how important and valuable this data is, and we want to maximize its benefit for you. If you're a Tableau Enterprise or Tableau+ edition customer with strict monitoring and resiliency requirements, you’ll enjoy access to near real-time data, so you’re always on top of anything that needs immediate attention, plus extended retention periods that keep the data available for a full year, just in case.

What's next for enterprise observability

The Platform Data API is just the first step in our journey to provide you with a comprehensive set of tools for managing your Tableau Cloud deployment. We are actively working on new features that will further expand your deployment's observability options:

- Entity Snapshots: While event data can tell you what has happened, it can’t tell you what things are like now. We’re working to supplement the event data with "snapshots" of your deployment's entities, such as workbooks, data sources, users, and groups. This will allow you to answer questions like, "Which workbooks haven't been used in the last six months?" and "Which users are no longer active?"

- Accelerated Admin Insights: As we build this robust data foundation, we are committed to improving our existing admin features. We plan to rebase Admin Insights on this new, more efficient data pipeline, which will allow us to shorten the data refresh time and give you more timely information about your deployment.
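As a rough illustration of how snapshots and events could work together, the Python sketch below answers the "unused workbooks" question by combining a list of current workbooks with view events. Entity snapshots are not available yet, so every field name here (`id`, `workbook_id`, `timestamp`) is a hypothetical placeholder, and "six months" is approximated as 180 days.

```python
from datetime import datetime, timedelta, timezone


def stale_workbooks(snapshot, view_events, now, max_age_days=180):
    """Return sorted ids of workbooks with no view event inside the window."""
    cutoff = now - timedelta(days=max_age_days)

    # Most recent view timestamp per workbook, taken from the event data.
    last_viewed = {}
    for event in view_events:
        wb_id = event["workbook_id"]
        ts = event["timestamp"]
        if wb_id not in last_viewed or ts > last_viewed[wb_id]:
            last_viewed[wb_id] = ts

    # The snapshot supplies workbooks that exist now, including ones with
    # no events at all, which event data alone could never reveal.
    return sorted(
        wb["id"]
        for wb in snapshot
        if wb["id"] not in last_viewed or last_viewed[wb["id"]] < cutoff
    )


# Made-up sample data for illustration only.
now = datetime(2025, 10, 1, tzinfo=timezone.utc)
snapshot = [{"id": "wb-a"}, {"id": "wb-b"}, {"id": "wb-c"}]
view_events = [
    {"workbook_id": "wb-a", "timestamp": datetime(2025, 9, 15, tzinfo=timezone.utc)},
    {"workbook_id": "wb-b", "timestamp": datetime(2025, 1, 10, tzinfo=timezone.utc)},
]

stale = stale_workbooks(snapshot, view_events, now)
```

In this sample, `wb-b` was last viewed before the cutoff and `wb-c` was never viewed, so both are flagged while `wb-a` is not.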

The Tableau Cloud Platform Data API is the foundation for a new level of control and insight. It's available to all Tableau Cloud customers, helping everyone—from individual teams to large enterprises—build a more secure, efficient, and data-driven analytics environment. It's the key to truly understanding your deployment at scale.

Get started with the Platform Data API today

You can learn more about how to use the new Platform Data API by reading our Activity Log overview in the Help documentation. Detailed technical specs for the API calls can be found in the Platform Data Methods section of the TCM REST API, and our official Postman collection will help you get up and running quickly. And as always, you can join us in the DataDev Slack workspace if you need some help!