2026-01-25 12:03:00

文 | 本原财经

80年代,赴日交流回到北京的外公,一落地就被亲友簇拥着:“有外汇券吗?”这张人人都想要的券,是用来去友谊商店购买日本进口家电的。

那时候,日本电视几乎垄断了全球高端电视市场,谁家拥有一台上千元的日立、索尼、松下牌电视,谁才是“真正过上了城里的生活”。一个稀罕的归国人员,几乎是一大家子亲友凑齐“三大件”的希望。

三十年河东,三十年河西,更何况是四十多年。

据产业咨询机构Sigmaintell最新统计,2025年全球电视品牌总出货量预计为2.2亿台,前十电视品牌为三星、TCL、海信、LG、小米、创维、Vizio、飞利浦+AOC、海尔、索尼。

三星蝉联销量冠军20年,中国TCL、海信等品牌强势崛起,日系电视品牌仅剩下索尼这根独苗还具有较高市占率。

2026年1月,消费电子产业格局再迎新变:中国TCL宣布与日本索尼成立合资公司,以51%控股比例接手其家庭娱乐业务,其中包括电视机和家庭音响等产品。索尼持有49%的股份,合资公司的相关产品将继续沿用SONY及BRAVIA等核心品牌。

网友们戏称:TCL+SONY=TONY。

值得注意的是,Sigmaintell数据显示,TCL以3041万台位居第二,市场占有率为13.8%;索尼以410万台的出货量排名第十,市占率1.9%;第一名的三星出货量3530万台,市占率达16%。

若将索尼品牌纳入TCL系,或将直接挑战三星的市场份额,拿下“全球电视第一”的王座指日可待。
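As a quick arithmetic check on the Sigmaintell figures quoted above, the short Python sketch below adds Sony's shipments and share to TCL's and compares the total with Samsung. It assumes the two brands' volumes are simply additive, which ignores any change in sales after the deal; the variable and function names are illustrative only.

```python
# Shipments in units of 10,000 sets and shares in percent, as quoted from Sigmaintell.
shipments = {"Samsung": 3530, "TCL": 3041, "Sony": 410}
share_pct = {"Samsung": 16.0, "TCL": 13.8, "Sony": 1.9}


def combine(brand_a, brand_b):
    """Return (combined shipments, combined share), assuming simple addition."""
    units = shipments[brand_a] + shipments[brand_b]
    share = round(share_pct[brand_a] + share_pct[brand_b], 1)
    return units, share


combined_units, combined_share = combine("TCL", "Sony")
gap_units = shipments["Samsung"] - combined_units
gap_share = round(share_pct["Samsung"] - combined_share, 1)

print(f"TCL + Sony: {combined_units} (x10,000 sets), {combined_share}% share")
print(f"Gap to Samsung: {gap_units} (x10,000 sets), {gap_share} percentage points")
```

On these numbers the combined TCL and Sony volume would sit within about 0.8 million sets of Samsung: close to the leader, but not yet above it.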

这又将是一个中国制造业转型升级直至巅峰的典型样本。

全球电视产业的主导权在过去60年经历了三次重大更替:日本黄金时代、韩国崛起、中国逆袭,每次权力转移都伴随着技术路线变革、产业链重构与市场格局重塑。

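In the CRT color-TV era, Japan was the undisputed leader. Sony's Trinitron, a single-gun, three-beam design, was twice as bright as ordinary sets and reproduced color with very high fidelity; Panasonic and Toshiba also led the world in CRT manufacturing.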

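In the 21st century, slim flat-panel TVs became the new trend, and liquid-crystal display (LCD) technology gradually displaced the bulky cathode-ray tube. Yet almost every Japanese manufacturer regarded LCD as merely a transitional technology: Sony stuck with CRT, and Panasonic bet heavily on plasma.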

技术路线选择失误,让日企普遍错失转型窗口。

而韩国厂商却在亚洲金融危机中,进行大规模投资布局,押注液晶面板,最终实现反超。

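Samsung and LG, the two Korean twin stars, were the first to build fifth- and sixth-generation LCD panel lines and achieved a breakthrough in display panels. Another turning point came when Lee Kun-hee set out a strategy summed up as "work yourself to death to wear your rivals out" and transferred 500 engineers from Samsung's semiconductor unit to the TV division. They developed a system-on-chip known as the "超现实引擎" ("surreal engine"), which closed the picture-quality gap with plasma TVs.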

2007年,三星LCD电视出货量已超越索尼,开启韩系主导的时代。

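As power changed hands, the Japanese brands retreated en masse, and the big electronics groups exited the TV panel business one after another.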

松下,2013年停止等离子显示面板生产,2023年退出LCD业务,工厂转产新能源电池。

夏普,2016年被鸿海(富士康)收购66%股权;2024年前后关闭大阪府堺市电视面板工厂,彻底退出面板制造。

东芝,在2017年将电视业务出售给了中国海信,成立合资公司TVS REGZA,海信控股95%。其家电业务主体也卖给了中国美的。

日立,2018年宣布停止销售自有品牌电视;三菱电机公司,也在2021年停止向主要零售商发货。双双退出了彩电大战。

其实还有不少消费者坚信“索尼大法好”,索尼已经算日企电视坚持最久的一个。但从2005开始,其电视业务经历连续13年的亏损,陆续出售海外工厂,缩减规模。

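China, standing outside the Japan-Korea contest, missed the first two shifts because it "lacked chips and screens". By the time Chinese manufacturers grasped the importance of LCD technology, the LCD war was already in its second half.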

中国追赶LCD的难,可以酣畅淋漓地写本书了。

前期忙着“市场换技术”,直到2010年时,中国液晶面板进口额还高达460亿美元以上,仅次于芯片(1569亿美元)、石油(1351亿美元)和铁矿石(794亿美元)。

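One example: in 2005 the Shenzhen government planned to invest in BOE (京东方) to build a high-generation production line, but Japan's Sharp immediately lobbied against the plan and BOE was squeezed out. BOE later intended to partner with Shanghai's SVA (上广电), only for a foreign company to make the same move again, and BOE was out once more.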

直到合肥这个伯乐出现,2009年拿出当年财政收入的三分之一支持京东方,我国第一条高世代LCD生产线才落地,结束了中国液晶电视屏100%依赖进口的历史。

同年,TCL决定投资245亿元建设华星光电第8.5代液晶面板生产线。董事长李东生力排众议:“我们必须进入面板行业,否则永远受制于人。”这个大胆的决定也为TCL日后在全球市场的竞争奠定了坚实基础。

LG、三星闻讯就变脸了,集体来大陆建设高世代液晶面板生产线,说白了,就是想打压中国大陆的面板产业,封死技术上升通道。

中国企业挺了过来,这里再省略100万字。

根据Omdia年度报告,中国品牌在2016年总出货量首次超过韩国,全球占有率达到33.9%,跃居世界第一。

2017年,中国面板产能首次超过韩国,全球占比达35%。

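Today the global TV industry has shifted from a contest between Japan and South Korea to one between China and South Korea, and the technology has moved on from conventional LED-backlit LCD to a new landscape in which Mini LED, OLED and laser TV coexist and compete.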

在OLED市场,韩国具有早一步转型的先发优势。

LG是全球唯一的WOLED大尺寸面板供应商,子公司LG Display掌握全球90%以上WOLED面板产能,形成技术与产能双重壁垒,已连续13年位列全球第一,尤其在超高端市场优势显著。

三星则在QD-OLED技术上领先,QD-OLED通过量子点增强OLED,亮度达2000-4500nits(2026款峰值),解决了传统OLED亮度不足和烧屏隐患。

三星QD-OLED与LG WOLED在全球高端市场形成双寡头格局。

有意思的是,2007年,索尼也曾推出全球首台11英寸的OLED电视产品——XEL-1。这比三星、LG等竞争对手推出消费级OLED电视产品早了近10年,却因价格高昂、尺寸过小、销量有限,中途被放弃了。

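It was not until 2017 that Sony returned to the fight with large-screen OLED TVs, by then relying on LG for its WOLED panels. In 2018 Sony's premium-positioned 4K/8K OLED sets returned the business to profit, but its market position gradually slipped.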

如今索尼在OLED、QD-OLED、Mini LED、RGB高密度LED四大高端技术路线方面均有布局。

高端市场,索尼还是具有较高溢价的,尤其是XR认知芯片让其在画质调教方面独树一帜。同时,索尼还掌握着从拍摄到播放的全链路影音生态。

中国厂商没有死磕韩国擅长的OLED,在Mini LED和激光电视领域实现了弯道超车。

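Hisense leads in laser TV. It is also among the top brands by shipments in China's TV market and continues to expand globally. At CES 2026, Hisense unveiled its RGB Mini LED evo technology, which adds a fourth, "sky-cyan" LED light source to push the screen's color gamut to a new high.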

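TCL was the first manufacturer to mass-produce Mini LED TVs. It accounts for more than 40% of global Mini LED TV shipments, so roughly two of every five Mini LED TVs sold come from TCL, and it ranks first in the industry for related patents. TCL CSOT (TCL华星) is also the world's second-largest TV panel maker, which means TCL TVs draw on a vertically integrated supply chain running from panel R&D and production to final assembly. The resulting cost advantage lays the groundwork for making the technology widely affordable.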

近日TCL动作频频。TCL电子已发布业绩预喜公告,2025年,公司预计实现经调整归母净利润23.3亿-25.7亿港元,同比增长45%至60%。

此外,TCL创始人李东生卸任CEO,交棒给部下王成,王成是推动TCL全球化的重要操盘手。TCL电子又宣布与索尼合作,这也是日本最后一家独立运营电视业务的巨头与中国企业深度合作。

对于索尼来说,电视业务多年不振,改组早在其安排中。高层也早已将公司未来押注在“创意娱乐愿景”上,游戏、音乐、影视和图像传感器业务才是利润的主要贡献者。

索尼电视带着自己的高品质画面、音频技术、品牌价值向中企投诚,可借势TCL的制造能力和供应链优势以降低成本。在日系家电时代落幕之时,索尼以放弃主导权换取活下去的机会,留住了49%的灵魂。

对于TCL来说,它拥有先进显示技术、全球规模优势、垂直供应链和端到端的成本效率,再获得SONY、Bravia等品牌的加持,可加速切入1500美元以上高端市场,改善“规模领先、高端偏弱”的结构性短板,进一步夯实其全球终端影响力,缩小与三星的差距。

若交易顺利,新的合资公司预计在2027年4月开始运营。

日企时代落幕,以后全球消费者能不能用TCL的价格,享受索尼的调色技术呢?

更多精彩内容,关注钛媒体微信号(ID:taimeiti),或者下载钛媒体App

2026-01-25 10:40:01

文 | ICT解读者—老解

近期,国内商业航天领域呈现加速爆发态势。2025年底,我国一次性向国际电联申报20.3万颗低轨卫星,规模超星链计划近5倍;2026年初,低轨卫星互联网星座连续两次在海南发射升空,保持密集组网节奏;蓝箭航天、中科宇航等民营火箭公司集体进入IPO辅导期,带火资本市场相关题材板块。

在政策扶持、资本追捧、技术突破三重加持下,商业航天的市场热度被推向顶峰,万亿级市场的畅想不绝于耳。看好者认为,卫星互联网实现全球无缝覆盖、构建空天地一体化网络的战略价值,将为商业航天带来万亿级市场的巨大想象空间。

但热闹背后,商业航天的商业前景究竟是触手可及的风口,还是资本催生的泡沫?答案或许藏在产业链各环节的价值分布与回报逻辑中。

要看清问题,需先明确界定讨论范围:纯粹商业化运作的航天卫星业务不包括出于国防军工和国家安全需要的航天工程,专业领域的遥感卫星和由政府控制的导航卫星也不在讨论范围内,本文将主要聚焦于市场高关注度的通信卫星产业。

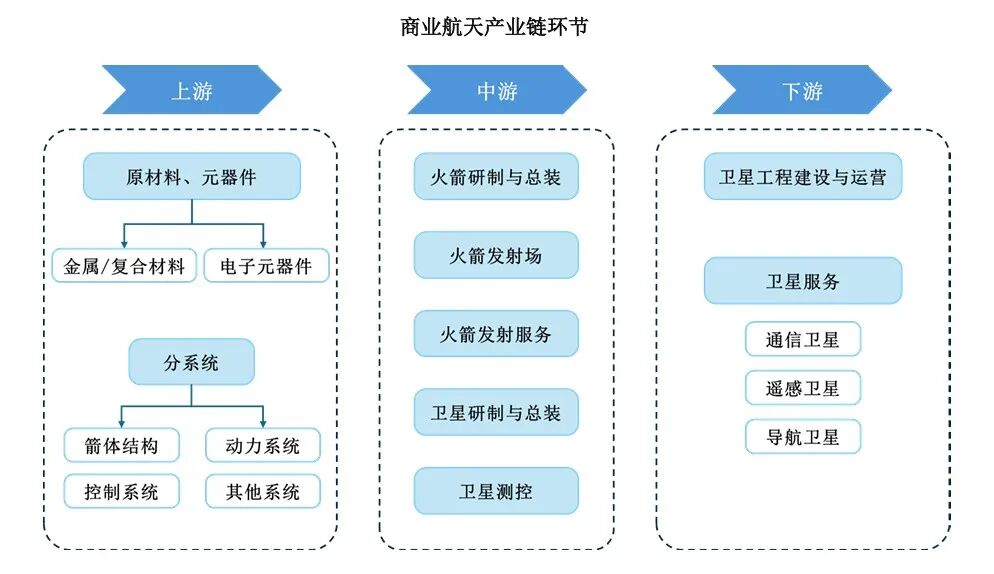

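A complete commercial space industry chain can be divided into three core segments: upstream basic equipment (materials, components and so on), midstream satellite manufacturing and rocket launch, and downstream satellite engineering, construction and operation. Demand propagates along the chain: downstream pulls midstream, and midstream pulls upstream.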

从当前行业动态来看,全产业链的热度均由下游通信卫星的规模化建设需求所拉动。无论是20.3万颗卫星的频轨资源占位,还是中国星网、垣信卫星等企业的星座组网计划,都直接催生了中游卫星制造与火箭发射的订单爆发。国家航天局的最新报告显示,2025年,我国商业航天完成发射50次,全年入轨商业卫星311颗,占比高达84%。

火箭密集升空、卫星大规模入轨,无疑在短期内令中游的卫星制造与火箭发射企业及上游的配套供应商受益。

卫星制造端,作为国家队核心平台的中国卫星2025年前3季度营收同比增长了85%,股价也从年初的29元到年底飙升至95元,市值突破千亿规模。

火箭发射端,以蓝箭航天为代表的民营航天企业发射密度大增,其仅在2025年就进行了11次发射,上半年营收超过3500万元,是2024年全年的12倍。

上游基础装备环节也同步受益,主营火箭系统集成与发射场配套的航天工程2025年前三季度营收同比暴涨 79.16%;火箭核心锻件配套企业航宇科技、主营测控系统的航天电子等业绩也大幅增长,充分体现出商业航天产业发展对产业链的拉动作用。

但繁荣的表象下,隐藏着一个核心矛盾:作为需求源头的下游卫星运营环节,商业回报逻辑至今仍不清晰。当资本和产能持续向中游聚集,若下游无法形成稳定且可观的盈利模式,中期必将反噬中游的成长空间,整个产业链的繁荣也可能沦为无源之水。

蓝箭航天在其最新招股书中也明确提示了“市场需求”方面的风险:“倘若相关星座组网进度延迟或规模调整,将对公司产品商业化节奏及经济效应产生不利影响”,这无疑是对整个产业链的预警。

通信卫星运营是商业航天产业链的价值终端,其盈利能力直接决定了全产业链的商业可行性。当前国内通信卫星运营市场主要包括传统的高通量卫星互联网和新兴的低轨卫星互联网两种格局,但均面临各自的发展瓶颈。

中国卫通作为国家队代表,运营着我国首张覆盖国土全境及 “一带一路” 重点区域的高轨Ka高通量卫星互联网。作为拥有自主可控通信广播卫星资源的基础电信运营企业,其聚焦广电、军政、行业、国际、海空五大领域,在国内高轨通信卫星的G端和B端市场近乎垄断。

业务层面,“海星通” 全球网覆盖超 95% 海上航线,广泛应用于海上能源项目;航空卫星互联网覆盖国内外航线,已部署于国内主要大中型航司。海外市场方面,其在印尼签约农村普遍服务项目,在老挝部署卫星终端,在泰国推进高通量卫星落地。

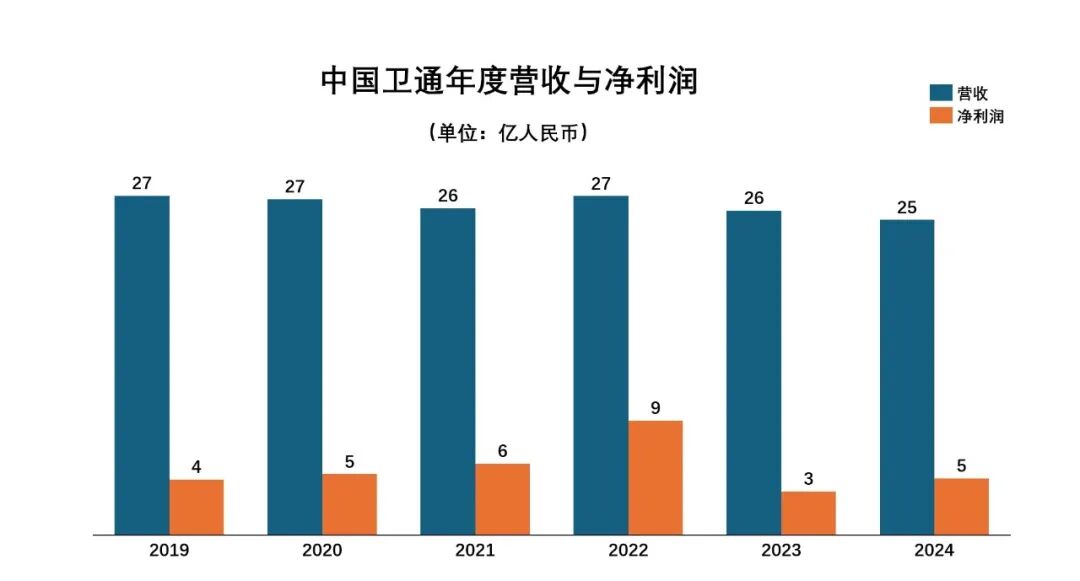

但是,尽管中国卫通在资质、资源、运营能力上优势显著,其近6年的业绩表现却一直平平:年度营收长期稳定在26亿元左右,从未突破30亿元,盈利水平低于10 亿元,与垄断地位严重不符。

具体分析其背后原因,一方面是因为其核心的军政领域营收占比超 60%,但该领域年度政府采购总额的限制制约着营收增长空间,另一方面是其服务的广播电视、应急通信、远洋航运等专业领域的市场容量依然有限,难以支撑大规模营收增长,30亿的营收规模或许正是通信卫星在G端和B端市场的天花板。

正因如此,中国卫通在新卫星发射上也非常谨慎,仅在2023年和2025年陆续增加了3颗高轨卫星,使其运营管理的商用通信广播卫星数量达到18颗。由此可见中国卫通作为传统高轨卫星运营龙头,对于商业航天中下游产业链的拉动作用非常有限。

当前商业航天领域的热度主要由低轨卫星互联网星座庞大的建设需求来激发。

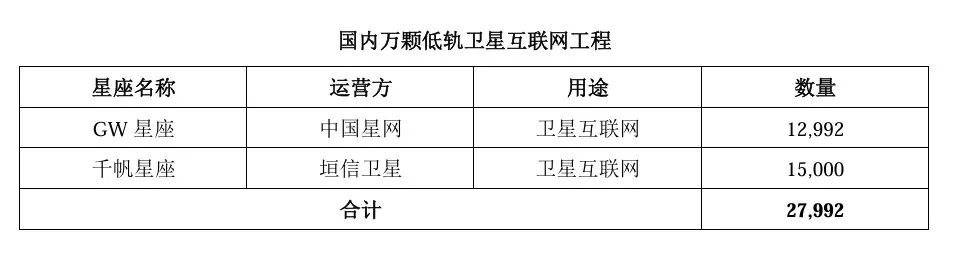

2020年,卫星互联网被纳入“新型基础设施”,低轨卫星互联网星座建设和运营成为商业航天的主要方向,各地和相关企业纷纷加快布局。央企中国星网运营的“GW星座”计划发射约1.3万颗卫星,由上海国资牵头的垣信卫星规划超1.5万颗卫星的“千帆星座”,均将SpaceX星链(Starlink)视为赶超目标,因此备受市场关注。截至2025年底,GW星座已完成17次组网发射,在轨组网卫星136颗;“千帆星座”的在轨卫星数量也达到108颗。

相较于央企中国星网的低调,垣信卫星凭借着市场化运作效率更获资本追捧,累计募资超110亿元,投前估值突破400亿元人民币,被资本市场冠以“中国版星链”标签,给予极高预期。

对照SpaceX星链的实际经营数据,国内市场对于低轨卫星互联网的追捧或许存在过度乐观。

马斯克带领SpaceX在全球范围布局星链多年,累计在轨卫星已超8600颗,但截至2025年底的用户规模才刚突破900万,预计全年营收为100-120亿美元,无论是用户规模还是收入规模均难与传统的电信运营商相匹敌;并且由于星链组网卫星数量庞大,其业务规模化盈利仍遥遥无期。
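To put the Starlink figures above in proportion, the Python sketch below divides the estimated annual revenue by the reported user count to get a blended monthly revenue per user. This is a rough illustration under a loud assumption: it attributes all revenue evenly to the 9 million users, although the total also includes enterprise, aviation and government contracts, so it overstates what a typical consumer pays. The function name is hypothetical.

```python
def monthly_revenue_per_user(annual_revenue_usd, users):
    """Blended revenue per user per month (assumes revenue is spread evenly)."""
    return annual_revenue_usd / users / 12


USERS = 9_000_000          # "just over 9 million" users at end of 2025
REVENUE_LOW = 10e9         # lower bound of the USD 10-12 billion estimate
REVENUE_HIGH = 12e9        # upper bound of the estimate

low = monthly_revenue_per_user(REVENUE_LOW, USERS)
high = monthly_revenue_per_user(REVENUE_HIGH, USERS)

print(f"Blended revenue: {low:.0f} to {high:.0f} USD per user per month")
```

The blended figure comes out at roughly USD 93 to 111 per user per month, which helps explain why price-sensitive users outside existing network coverage are hard for the service to reach.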

从技术角度看,低轨卫星互联网虽然在覆盖范围上存在优势,但在连接能力和连接速度上天然与传统电信运营商的4/5G通信网络存在差距;从市场角度看,星链服务对于5G网络就绪的高收入人群并不具备吸引力,而未被5G网络覆盖的广大人口显然缺乏星链所预期的支付能力,因此星链在C端市场的发展并不理想。

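Starting in 2024, Starlink shifted the focus of its revenue growth to in-flight Wi-Fi, signing with a number of major airlines worldwide, including Qatar Airways and United Airlines. Deployments are expected to peak in 2026, which may help lift its revenue.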

相较于SpaceX星链的全球布局和运营,GW星座和千帆星座在国内面临的市场环境更为复杂。

我国C端个人用户市场留给低轨卫星互联网的空间更为有限。工信部最新数据显示,截止到2025年底我国已建成5G基站483.8万座,全国所有乡镇以及95%的行政村已通5G,5G用户已达12.4亿,远高于全球其他市场;因此国内的卫星互联网在C端的应用场景将主要服务于户外探险、偏远地区工作者等小众群体。

虽然手机直连卫星技术的成熟开始推动卫星通信向大众消费市场的渗透,但应用场景并不普遍,且其入口仍然掌握在电信运营商手中,在手机用户ARPU值不足50元/月的市场环境下,卫星互联网服务商能分到的利润空间非常有限。

由此低轨卫星互联网普遍将B端市场视为商业变现的核心阵地。但B端的连接市场同样也是5G赋能千行百业的使命所在,电信运营商依托5G网络大带宽、低时延和海量连接的技术优势抢占工业互联网、政企专网、智慧城市等高价值的应用场景;低轨卫星互联网的用武之地仍被局限在航空、海洋、高原、荒漠等地面网络的盲区场景。

垣信卫星高级副总裁陆犇在接受媒体采访时,曾明确指出:“未来千帆星座建设完成后,将面向全球市场、以商业化方式运营,集低轨宽带、手机直连、VDES(甚高频数据交换系统)等应用功能于一体,解决沙漠、海洋、戈壁、高空、山区等地面通信网络无法覆盖或断续覆盖的互联网接入问题”。可见,纵使垣信卫星怀揣宏大组网目标,亦认可低轨卫星互联网仅能作为地面通信网络补充的定位。

但在地面通信网络无法覆盖的盲区里,如航空机载、远洋船舶、偏远地区宽带、应急通信等卫星通信的刚需场景,同样也是高通量卫星运营商中国卫通的传统势力范围,作为新兴势力的低轨卫星互联网运营商的进入无疑将进一步加剧卫星通信市场的激烈竞争。

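To sum up, in terms of market capacity, China's terrestrial communications market can accommodate three or even four telecom operators because it is a trillion-scale market. The domestic market for LEO satellite internet is far smaller, and its limited capacity cannot support several profitable players at once. For constellation operators such as China SatNet (中国星网) and Yuanxin Satellite (垣信卫星), pouring capital into constellation construction needs to be matched by more careful validation of the operating business model. A clear path to differentiated competition and a sustainable profit logic are the only way to avoid the bubble trap of "heavy on construction, light on operation".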

卫星互联网的本质仍是通信基础设施,与传统通信网络共享 “重资产、长回报周期” 的核心特征,但资本市场却以高科技企业的估值体系对其过度炒作,完全偏离通信运营商的行业本质,这无疑是泡沫化的表现。正因如此,更需清醒认知低轨卫星互联网所面临的商业化困境。

低轨卫星的组网特性决定了需部署数千至上万颗卫星才能实现有效覆盖,制造、发射、运维全流程需持续投入千亿级资本,且运营过程中的卫星补网、轨道维持等仍需不断消耗资金。这与电信运营商对5G等地面通信网络的固定投资和运维支出模式毫无二致,因此其商业化也必然遵循“设备投资-网络覆盖-发展用户-商业回报”的正向循环。

但与地面5G网络部署可以依托人口密集区优先覆盖,先城镇后乡村的分步投资策略不同,低轨卫星的星座必须具备一定卫星在轨规模才能开始商业运营:如垣信卫星的千帆星座建设,第一阶段完成648颗卫星的发射才能实现区域网络覆盖,第二阶段发射648颗卫星,累计达到1296颗在轨才能实现全球网络覆盖。因此低轨卫星互联网的前置投入更大,回收周期更长。

反观其营收端,作为地面通信网络补充的定位已经限定了低轨卫星互联网的市场空间,盲区场景的个人用户ARPU值普遍偏低,难以快速实现营收对成本的覆盖;而航空、海事等核心B端赛道的用户基数相对固定,市场格局已趋于稳定。

B端市场的决策逻辑,往往更看重长期合作稳定性与全场景服务保障能力。中国卫通等高通量卫星运营主体,已与航空、海事、能源等行业龙头企业形成深度绑定的合作生态,构建起极高的行业准入壁垒。低轨卫星运营商若想在B端市场打破现有格局争抢份额,不仅需要构建显著差异化优势,还需承担额外的替换成本,极易陷入“低价换市场”的恶性竞争,大幅摊薄盈利空间。

说到底,低轨卫星互联网虽然是新技术,但解决的依然是连接的老问题,所以必然要在有限市场空间内与高轨卫星、5G、Wi-Fi等既有连接方案展开存量争夺。作为地面通信新一代技术的5G已经商用6年时间,但电信运营商在B端市场推进5G专网业务的进展仍不及预期,传统Wi-Fi连接仍然占据绝大多数流量份额;殷鉴不远,低轨卫星互联网在技术、成本、生态链等多个环节仍然不成熟,其商业化变现之路无疑更为漫长和曲折。

不可否认,商业航天是承载国家战略价值的新兴产业 —— 政策托底筑牢发展根基,技术突破打开想象空间,上游核心器件与中游制造发射环节已涌现出具备国际竞争力的企业,短期订单爆发也带来营收规模的增长。但判断一个产业是否为真风口,终究要看商业回报的闭环能力,而非单纯的技术突破或资本堆砌的虚假繁荣。

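The most glaring paradox in the industry today is the irrational exuberance of "inverted valuations". Downstream operators face murky profit prospects, with high-orbit satellites hitting a revenue ceiling and LEO satellites mired in commercialization difficulties, yet midstream companies are propping up valuations detached from the nature of the telecom business on nothing more than expected short-term orders from constellation build-out. This "heavy on construction, light on operation" logic cannot last. After all, if operating thousands upon thousands of satellites cannot generate sustained revenue, upstream component supply and midstream satellite manufacturing and rocket launch are a river without a source and a tree without roots, and the short-lived order windfall will eventually run dry.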

商业航天的未来,从来不是单一技术或资本的独奏,而是技术、资本与商业模式的三重共振。卫星互联网作为通信基础设施的 “延伸者”,其长期价值在于打通全域通信 “最后一公里”,与 5G 互补共生,为 6G 时代空天地一体化网络筑牢底座,这一战略意义毋庸置疑。但战略价值的兑现,必须建立在可持续的商业基础之上,唯有让 “发射升空” 的卫星转化为 “持续造血” 的资产,产业链才能形成正向循环。

在此之前,行业更需要的是理性布局而非盲目扩张,资本更需要的是审慎研判而非狂热追捧。商业航天不该是资本催生的泡沫游戏,而应是久久为功的产业深耕,唯有褪去概念炒作的浮躁,聚焦商业化本质的突破,才能真正从 “火爆” 走向 “长红”,让战略价值真正落地为产业价值与商业价值。


2026-01-25 10:39:55

文 | 字母AI

“我和黄仁勋的共同点找到了——都爱吃耙耙柑啊!”

1月24日下午,黄仁勋突然一个闪现,出现在上海浦东陆家嘴附近一个很普通的社区菜市场,逛逛吃吃买买。

大口吃着耙耙柑,还买了一大袋,黄仁勋看起来极其松弛。现场迅速围上来很多人,大家热热闹闹,黄仁勋给人签名、给水果摊老板发了个签名红包。

而人们高高举起的手机拍摄到的画面,也很快传遍了社交媒体。要说最懂得拿捏流量,还得是黄仁勋。

以后就知道了,春节之前哪怕逛菜市场都要随身带着纸和笔,说不定就和黄仁勋偶遇了。

春节前来中国,尤其是北京、上海、深圳和台湾,已经是黄仁勋每年的例行活动。大家都知道他要来,但还是没想到这次会这么“接地气”。

去年春节前的中国行,黄仁勋是真低调:整个行程严格保密,聚焦在和员工、供应商、合作伙伴的交流上。今年则看似低调,实则“偷偷高调”。

背后,是黄仁勋2026年的一场硬仗。

黄仁勋知道自己在做什么。

他的每一次公开露面,往往都像精心策划的流量事件,精准捕捉公众注意力。相比埃隆·马斯克(Elon Musk)那种高调张扬、常常引发争议的风格,黄仁勋更懂得“低调中带点高明”的艺术。

马斯克是硬刚,黄仁勋是软着陆:穿黑夹克、爱吃中国菜、懂发红包、会说几句中文梗。

其实每年春节前他都会来中国参加公司年会(尾牙),这是固定节目。

跟员工吃顿饭、发红包、见见供应商、聊聊业务,顺便感受一下中国过年的氛围。

但每次来的“味道”都不尽相同,基本能看出英伟达当时过得怎么样。

去年他来中国的时候,基本是全隐身状态。行程高度保密,几乎未在公共场合露过脸,就是深圳、北京、上海三个城市的分公司转一圈,以内部活动为主。

当时中美芯片管制正闹得凶,特朗普即将上任,英伟达的游说还在进行中,不稳定因素颇多。

彼时黄仁勋的中国行,主要任务在于“稳定军心”,任何高调的行为都有可能影响在华盛顿的游说效果。换句话说,他必须低调。

今年明显不同。

1月23日下午刚落地上海,黄仁勋先去张江的新办公室转了转,第二天下午直接“杀”到菜市场。

AI芯片H200的出口管制已经有松动的可能性。此前特朗普明确在社交媒体表示,将允许英伟达向中国出售H200人工智能芯片,但是要收25%的费用。

虽然前方不确定因素犹在,但已经不是去年那种必须极致小心的状态了。

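At this point, a measure of visibility on his China trip probably no longer harms the lobbying effort in Washington, while it helps reassure employees, suppliers, customers and partners more broadly.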

纷纷扰扰的几年间,黄仁勋早已反复用行动表态:中国市场,英伟达是不会轻易言弃的。

去年10月,黄仁勋曾在一次采访中透露:“中国市场此前(在出口管制前)占英伟达数据中心业务收入的20%到25%。”

黄仁勋也已经做好两手准备,在H200外,新一代Blackwell架构下的“中国特供版”芯片很可能已经在路上。多家媒体曾报道,新“特供版”芯片为B30A,性能是上一代“特供版”芯片H20的六倍,定价也远高于H20,高达2.4万美元。

来上海之前,黄仁勋刚在达沃斯世界经济论坛跟黑石的CEO进行了一场重磅对话。

这场对话是今年达沃斯最受关注的环节之一,黄仁勋说的内容也可谓大开大合,猛猛给市场来了一针强心剂。

他提出AI的“五层蛋糕”理论,从最底下往上分别是:

· 能源(power)——AI太吃电了,得先解决电从哪来;

· 芯片 + 计算基础设施(chips & compute)——英伟达的核心地盘;

· 云数据中心(cloud data centers)——亚马逊、微软、谷歌这些在建的巨型仓库;

· AI模型(AI models)——像ChatGPT、Claude、Grok这样的“大脑”;

· 应用层(applications)——最后落到大家手机、工厂、汽车里的各种AI工具。

黄仁勋表示,人工智能基础设施在未来几年需要数万亿美元的额外投资。

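Building plants, running power lines and laying fiber takes a great many electricians, plumbers and construction workers, and their pay will be pushed into six figures a year (that is, USD 100,000 or more). AI is not here to take people's jobs; it creates jobs.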

至于AI泡沫论,黄仁勋也再次表达了不同意见:

“之所以出现所谓AI泡沫(的争议),是因为投资规模巨大。而投资规模之所以巨大,是因为我们必须为上方所有的AI层级构建必要的基础设施。”

换句话说,需求都是实打实的,何来泡沫?

去年的春节中国行之前,黄仁勋也参与过一次重要活动——CES消费电子展。

彼时黄仁勋的keynote演讲时长约90分钟,更像一场产品发布会,焦点集中在具体的技术创新和硬件落地,强调AI从概念向实际应用的转变。

黄仁勋一贯的幽默风格贯穿全程,他穿着标志性黑皮夹克,现场互动频繁,整体调性是“秀肌肉”——通过密集的产品发布和演示,展示英伟达在消费级和企业级领域的领先地位,吸引开发者、消费者和投资者。

达沃斯的对话与去年CES演讲的调性不同,固然有活动本身的影响,但同时也传达出黄仁勋在当下、站在2026年的开端,最需要给世界传达的讯息——整个AI行业大有可为,新的一年仍然需要大力投入,英伟达依然凶猛。

达沃斯论坛的发言结束后,英伟达股价出现明显上涨,从1月20日收盘的178.07美元上涨到1月23日收盘的187.67美元,累计涨幅约5.38%。
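The cumulative gain quoted above follows from the two closing prices. The minimal Python sketch below recomputes it as a simple percentage change between the January 20 and January 23 closes; the function name is illustrative, and the calculation ignores anything other than the two prices given in the text.

```python
def pct_change(start_price, end_price):
    """Simple percentage change from start_price to end_price."""
    return (end_price - start_price) / start_price * 100


close_jan_20 = 178.07   # USD, closing price on January 20
close_jan_23 = 187.67   # USD, closing price on January 23

gain = pct_change(close_jan_20, close_jan_23)
print(f"Cumulative gain: {gain:.2f}%")
```

The result is about 5.39%, which matches the article's figure to within rounding of the last digit.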

全球芯片板块也随之走高,1月22日,韩国综合股价指数(KOSPI)盘中首次突破5000点大关,创历史新高。半导体龙头三星电子和SK海力士盘中涨幅分别高达4.48%和4.19%。

黄仁勋也在一档播客节目中回应了“英伟达在台积电的优先级高于苹果”的传闻,承认英伟达目前确实已经成为了台积电的最大客户。

据财联社,有业内人士估计称,英伟达现在占到台积电总营收的13%,位居第一。

然而,这并不意味着压力已经消失。相反,2026年对黄仁勋和英伟达而言,更像是一场必须落地兑现的考验。

2025年,业界讨论的焦点集中在“AI泡沫是否已经形成”。

大量资金涌入,估值快速膨胀,但实际回报路径仍不清晰。进入2026年,争论的中心已经转向另一个问题:这么多钱砸下去,基础设施能否真正建起来,产出能否匹配预期。

今年有几项关键事件会让这场仗变得格外艰难。

首先,大规模基础设施建设必须从纸面走向现实。

五层蛋糕的底层——能源供应、芯片工厂、数据中心——需要在2026年看到实质性进展。

如果项目延期、成本超支,或者终端需求没有如期跟上,泡沫质疑就会迅速放大。

其次,明星AI公司的IPO窗口正在临近。

Anthropic最早可能在下半年上市,估值传闻在3500亿美元左右;OpenAI的估值更高,可能接近5000亿美元。

这些公司一旦进入公开市场,盈利能力、现金流消耗速度、竞争格局都会被置于放大镜下审视。

英伟达作为上游芯片供应商,也不可避免地会被一同评估。

如果这些头部玩家上市后表现疲软,整个AI生态的信心都会受到连锁影响。

最后,中国市场仍需努力。

尽管H200芯片已获部分放行,但英伟达在中国市场的份额已经大幅受挫(去年初黄仁勋曾表示,英伟达在中国的市场份额从95%降到0%),本土厂商正在加速追赶。同时,审批流程、关税成本等问题依然存在。

中国市场份额的进一步流失将直接冲击英伟达的收入结构。

菜市场吃耙耙柑因此有了更深的意味。表面上是黄仁勋个人风格的延续——亲民、接地气、喜欢中国市井生活。

但放在2026年的背景下,它更像一场提前布局的公关动作。

黄仁勋依然穿着那件黑夹克,笑容温和,但每个人都清楚,这一年真正要面对的考验才刚刚开始。


2026-01-25 09:19:08

文 | 不慌实验室

继摩尔线程、沐曦股份、壁仞科技之后,“国产GPU四小龙”中最后一家未上市公司——燧原科技,于2026年1月22日正式向科创板递交了IPO申请,国产高端芯片核心阵营终于在资本市场聚首。

此次科创板IPO,燧原科技拟募集资金60亿元,将全部用于五代、六代AI芯片研发及产业化与软硬件协同创新项目,持续加码云端AI芯片核心赛道。

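Founded in 2018, Enflame (燧原科技) was set up jointly by Zhao Lidong and Zhang Yalin, both of whom previously held core roles at AMD. Over nearly eight years the company has stayed focused on cloud AI chips, completing four rounds of iteration covering five cloud AI chips and building a complete product line spanning chips, accelerator cards, intelligent-computing systems and a software platform.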

相较于其他“四小龙”,燧原科技的核心优势在于“差异化卡位+全栈落地能力+产业深度绑定”。

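While domestic peers such as Moore Threads, MetaX and Biren Technology chose to follow Nvidia's GPGPU route, Enflame resolutely opted for a non-GPGPU architecture.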

不同于GPGPU架构,非GPGPU架构具有更高效能、更大数据吞吐量和更低功耗特性,在推理场景下展现出显著优势,开始加速部署。

燧原科技预计,随着大模型行业逐渐从大规模训练阶段走向推理落地阶段,2026年全球AI推理对AI加速卡需求将超过AI训练场景,中国市场这一趋势更加明显。而推理场景对于CUDA生态的依赖在持续减弱,非GPGPU架构AI加速卡需求占比将逐步提高。

行业趋势印证了这一判断。谷歌于2025年11月发布的Gemini 3大模型,正是基于自研TPU(非GPGPU架构)训练完成,打破了英伟达在大模型训练领域的垄断格局。

高盛全球投资研究部的模型预测,在AI服务器的AI芯片中非GPGPU芯片的出货占比将呈现明确上升趋势,预计将从2024年的36%,逐步增长至2027年的45%。

在技术层面,燧原科技构建了完整的软硬件协同体系。其自研的“驭算TopsRider”软件平台,大幅降低了大模型开发与迁移成本;在系统层面,ESL超节点产品可支持单节点32卡或64卡的高密度部署,精准匹配千亿参数大模型的训练与推理需求。

这种从芯片到系统的全栈能力,使燧原科技不仅提供算力硬件,更能交付端到端的解决方案,在国产AI芯片厂商中形成了独特的竞争优势。

燧原科技与腾讯的深度绑定,同样构成了其商业模式的另一大支柱。

自燧原科技Pre-A轮起,腾讯便相继参与了多轮融资,目前已成为燧原科技最大的机构股东,与一致行动人合计持股约20.26%。

这种资本纽带迅速转化为业务协同。2022年至2025年前三季度,燧原科技对腾讯科技(深圳)有限公司的销售金额(包括直接销售和AVAP模式销售)占总营收比重由8.53%飙升至71.84%,为第一大客户。

凭借差异化的技术路径、深度的产业协同和完整的产品体系,燧原科技展现出令人瞩目的成长动能。2022年至 2024年,燧原科技营业收入从0.9亿元增长至7.22亿元,复合增长率高达183.15%。
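The compound growth rate cited above can be reproduced with the standard CAGR formula. The Python sketch below applies it to the rounded revenue figures in the text (RMB 0.9 hundred million in 2022 and RMB 7.22 hundred million in 2024, two compounding years); the function name is illustrative, and because the 2022 figure is rounded, the result is expected to differ slightly from the 183.15% reported.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


revenue_2022 = 0.90   # RMB hundred million, rounded as quoted
revenue_2024 = 7.22   # RMB hundred million

growth = cagr(revenue_2022, revenue_2024, years=2)
print(f"CAGR 2022-2024: {growth:.1%}")
```

With the rounded inputs this gives roughly 183.2%, consistent with the reported 183.15% once unrounded revenue is used.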

2024年燧原科技AI加速卡及模组销量达3.88万张,以1.4%的市场份额在国内厂商中名列前茅,在“国产GPU四小龙”中,其营收与净利润规模均位居第二。

然而,光鲜的增长背后是“高投入、长周期、慢回报”的成长痛点。自2022年起,燧原科技已累计研发投入近45亿元,期间研发费用率虽有所下滑,但截至2025年三季度仍在164.77%高位,导致公司尚未转盈。

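For 2022, 2023, 2024 and the first nine months of 2025, Enflame recorded net losses of RMB 1.116 billion, RMB 1.665 billion, RMB 1.510 billion and RMB 880 million respectively. The losses have narrowed, but the road to profitability remains challenging.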

值得注意的是,燧原科技预计最早于2026年达到盈亏平衡点,但该测算不构成盈利预测或业绩承诺。这意味着,公司至少还需要承受一年的亏损压力,而能否如期实现盈利,仍取决于产品商业化进展、研发投入控制等多重不确定因素。

但在硬科技的漫长赛道上,亏损已是常态,资本的耐力往往决定着谁能走到最后。

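First, the change in inventory is already cause for caution. From 2022 to the end of September 2025, the carrying value of Enflame's inventory rose from RMB 275 million to RMB 958 million, which the company attributes to "strategic stockpiling".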

然而在半导体行业,技术的迭代速度堪称残酷,"战略储备"很可能随时面临价值缩水的风险。期间公司存货跌价准备金额也同步攀升,由3596.50万元增至1.90亿元。

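Second, receivables also deserve attention. From 2022 to the end of September 2025, Enflame's accounts receivable rose from RMB 82 million to RMB 385 million, mainly because major customers such as Tencent were slow to pay.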

从客户集中度整体情况来看,燧原科技常年超9成收入来自五大客户,其中7成来自腾讯,客户结构极度集中,代表着议价能力薄弱。从公司应收账款坏账准备计提比例来看,已从2022年的1.01%升到2025年9月的9.18%。

截至2025年 9月末,燧原科技货币资金27.34亿元,同期短期借款1.76亿元、一年内到期非流动负债1.42亿元、长期借款4.01亿元。

尽管从静态财务数据看,公司当前并无迫在眉睫的短期偿债压力,但若以当前的亏损速度持续消耗现金储备,其资金安全边际将面临持续收紧的考验。未来的经营持续性,将在很大程度上取决于此次IPO能否成功募资,以及后续商业化进程能否加速兑现。

在现金流与存货等财务压力之外,燧原科技更深层的挑战来自于技术、生态与市场之间的系统性矛盾。

首先,技术迭代的节奏已超越单一产品突破的维度。云端AI芯片的竞争已进入全栈能力的比拼阶段。燧原科技虽在非GPGPU架构上确立了差异化优势,但这一优势的维持需要持续的代际升级与生态适配。公司的五代、六代芯片研发不仅需要追赶摩尔定律的物理极限,还需在软件栈、系统集成及能效优化上实现同步突破。在行业标准快速演进、算法模型持续迭代的背景下,任何技术路线的领先窗口期都在不断收窄。

与此同时,产业生态的博弈正成为新的竞争壁垒。当前国内AI算力市场呈现多元竞合格局:华为昇腾依托全栈自主生态持续扩大场景落地;其他国产GPU厂商加速向推理市场渗透;而英伟达凭借CUDA生态的长期积累,仍主导着开发者心智与高端市场。燧原科技自研的“驭算”平台虽在特定场景验证了可行性,但要构建具有广泛吸引力的开发生态,仍需在工具链完整性、框架兼容性及社区运营上投入长期资源。生态建设的滞后可能使硬件优势难以充分转化为市场占有率。

更宏观的挑战在于供应链安全与技术自主的平衡。尽管国内半导体制造能力不断提升,但高端AI芯片所需的先进封装、存储及互联技术仍面临供给约束。而在全球科技竞争动态变化的背景下,如何确保技术路线的持续演进不受外部制约,已成为所有国产芯片企业必须面对的战略课题。

总体而言,燧原科技的科创板IPO,既是企业自身近8年技术积累和商业化探索的成果,也是国产非GPGPU架构AI芯片企业走向资本市场的重要标志。

在AI推理场景爆发的行业背景下,公司凭借差异化的技术路线、深度的客户绑定和完整的产品体系,迎来了良好的发展机遇,但同时也面临着客户集中、研发投入、市场竞争等多重挑战。

未来随着60亿元募资的落地和五代、六代芯片的研发推进,燧原科技能否顺利实现2026年盈亏平衡的目标,能否在激烈的市场竞争中进一步扩大市场份额,能否成功实现客户多元化布局,值得市场持续关注。

而燧原科技的发展之路,也将为国产AI芯片产业的多元化发展提供重要参考,推动国产算力在AI推理赛道实现更大的突破,成为中国算力自主化版图中的重要拼图。


2026-01-25 09:03:29

文 | 一点财经编辑部

“跟大家重新建立连接,之前被骂惨了。”

最近,王小川又密集跟媒体见面,这样解释重新回归公众的原因。

去年4月,这位前搜狗输入法创始人、现在的百川智能创始人,经历了一场“至暗时刻”:业务线收缩、团队目标摇摆、高管陆续离职......质疑声潮水般涌来。后来,他公开反思错误,承认战线拉得过长,以后将重点押注医疗AI。

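After that, the star CEO who had once declared that Baichuan would be "one step behind OpenAI in ideals, three steps ahead in deployment" dropped out of public view for a while.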

如今9个月过去,王小川又频繁出现并且密集发声。账上约30亿现金,聚焦医疗AI赛道,2027年启动上市——这是王小川现在反复强调的三件事。

医疗AI的热度最近确实在上升:OpenAI、Claude、蚂蚁阿福都有新动作。但对于王小川来说,巨头入局往往意味着竞争加剧,而非机会增多。不过,选择这条赛道本身已是一种勇气——医疗AI门槛高、周期长、监管严,敢于All in的创业者并不多。

只是,当智谱、MiniMax等同属“AI六小龙”的玩家已经登陆资本市场,百川的路径还不太明朗。

还有一年时间,它真的能成功上市吗?

王小川喜欢用“回归初心”来解释百川的转型,但事实可能更残酷。

看看时间线就明白了。2023年百川成立时,王小川画了一张大饼:底层模型要对标OpenAI,C端做中国的ChatGPT,B端要横扫金融、教育、法律,医疗领域还要造出“AI医生”。

一年后,这幅画面就彻底变了。

深层的业务调整发生在2024年下半年。百川的To B团队被裁撤,金融业务组解散。说好的现金奶牛,转眼成了需要砍掉的负担。

更直接的压力来自竞争对手。2025年初DeepSeek的爆火,让百川停止预训练新的超大规模通用大模型。一些潜在客户直接转向DeepSeek,已经准备合作的客户甚至直接“反悔”。高管的接连离职,更是折射出团队的不稳定状态。

王小川把这一切归结为“战线过长、过早商业化”。他在全员信中反思,这极大增加了组织的复杂度,要进行战略收缩,押注医疗AI。

然而,AI战场瞬息万变,一步错就会大变天。当百川收缩战线时,其他玩家正在加速前进:智谱AI和MiniMax已经上市、月之暗面又拿到新融资.......

医疗AI赛道正变得越来越拥挤。国际方面,OpenAI上线Health能力;国内方面,蚂蚁集团推出AI健康应用阿福,短期内月活用户突破3000万。

巨头入场往往意味着竞争加剧,他们有更丰富的生态资源、更雄厚的资金实力、更庞大的用户基础。百川作为创业公司,如何在这些巨头夹击中找到生存空间?

王小川对竞争对手的看法很直接,他说蚂蚁阿福广告太多,而且主要是给普通消费者用的,医生端的决策感受不强。

谈到国外的OpenEvidence,王小川觉得只是服务医生的工具。他说百川的产品不一样,能让患者听懂、能帮患者做决定、还能告诉患者该怎么做,是直接服务患者的。他认为,自家这样的产品定位在全球是独一无二的。

对那些一窝蜂做医疗模型的公司,王小川更不客气,直言现在市面上有500多个垂直医疗模型,但他们根本不懂什么叫模型。

王小川的确擅长技术,但百川自己真的能在医疗AI这个赛道,找到全部的未来吗?

王小川最近抛出了个新概念,叫“权力让渡”。说白了,就是想让AI把医疗决策权从医生手里分一点给患者。

他认为医疗的核心问题是优质医生供给不够、医患权力不对等。这话没错,谁看病没遇到过三言两语就被打发走的经历?患者确实比较被动,医生说什么就是什么。

王小川的想法是,让AI当翻译官,帮患者理解病情、比较方案、参与决策。这种理念让人想起《超能陆战队》中的“大白”——一个温暖、贴心的家庭医疗机器人,但现实中要打造这样的AI,远不止技术那么简单。

第一,患者是否真的愿意并能够承接这份“权力”?

医疗决策不是买菜。买贵了买错了,顶多亏点钱。医疗决策错了,可能直接影响病情甚至生命。让一个没学过医的普通人,去决定用A方案还是B方案,这责任他恐怕不敢担,也担不起。

王小川举了个例子,说医生可能给出保守和激进两种方案。AI可以帮患者分析利弊。听起来挺好,但问题在于,医疗决策中有大量灰色地带。连专家都会吵起来的病例,AI能否帮普通人做出更好选择是个大问题。

第二,医生是否会心甘情愿地“让渡”权力?

王小川反复强调,这不是动医生的蛋糕。但是,中国的医疗体系里,医生不仅仅是技术提供者,还是风险过滤器、资源分配者。突然插进来一个AI,说要帮患者做决定,势必会打破现有的体系格局。

现在用AI的多数是年轻医生,拿它当工具用。真正的话事人,那些主任、专家,他们对AI的态度可没那么热情。现在说要“权力让渡”,可能很多医生不会同意。

一个有三十多年丰富经验的医生告诉我,他并不相信AI,更相信自己的知识和经验。另一个刚进入医院的年轻医生则表示,自己刚开始会用AI查病情,但有时会给出错误答案,所以现在自己还是偏向查阅权威资料。

在技术层面,百川的医疗大模型Baichuan-M3在评测中的确表现不错,幻觉率降到3.5%。但这也意味着每100次问诊,可能有3到4次给出错误建议。

在别的领域,3.5%的错误率也许能接受。但在医疗领域,一次错误就可能酿成大祸。

王小川与张文宏的隔空对话,更能反映医疗AI落地的现实困境。

张文宏担心年轻医生过度依赖AI,王小川回应:“医生的成长不能以当下患者作为成本。”但是,如果AI真的深度介入医疗决策,医生该怎么培养?现在的医学教育体系要不要推倒重来?这些都是问题。

在回应张文宏“拒绝把AI引入医院病历系统”的言论时,王小川还表示:“他有他的道理,只是说屁股会决定脑袋的位置。”

最实在的问题还是钱。

王小川表示,百川将在今年上半年发布两款To C的新产品,重点服务于患者的辅助决策。上线初期免费开放,之后探索付费模式。

但是,医疗行业的付费逻辑没那么简单。中国老百姓为医疗服务付费的习惯还没完全养成,为AI医疗建议付费更是新鲜事。这块市场有多大?能撑起一家公司的估值吗?

目前中国医疗AI赚钱主要靠两条路:要么医院采购,要么医保支付。百川选了一条最难的路——直接向患者收费,但直接向患者收费的产品没几个活得好。健康管理、在线问诊这些轻量服务还行,真要涉及严肃医疗决策,患者愿意掏多少钱?

王小川还盯上了院外市场,说未来增量在院外不在院内。

院外健康咨询市场确实大,但从商业角度看,头疼脑热上网查查,这种服务的商业化空间并不大。真正值钱的都在院内。大病手术、重症治疗、慢性病管理,这些才是医疗行业赚钱的地方。但这些场景,AI能进去吗?患者敢完全依靠AI做决定吗?

王小川的“权力让渡”听起来很美好,但医疗体系是个复杂的利益网络,牵一发而动全身。AI想在这里面分一杯羹,光有技术不够,光有理念也不够。它需要面对医生的疑虑、患者的恐惧、监管的审慎、商业的残酷。

当然了,世界也需要王小川这样的理想主义者。如果真的能够解决这些难题,那么将创造出巨大的社会红利。

百川的目标是在2027年启动上市。时间看似还有两年多,但资本市场的耐心是有限的,留给医疗AI的窗口期不会一直敞开。

根据天眼查的信息,百川上一轮融资停在2024年7月,拿了50亿,估值200亿。之后就没再有公开的融资消息。


现在的环境和前两年不一样了,钱不好拿了。投资人更谨慎,都要看实际的东西:用户有多少、收入从哪来、增长稳不稳定。通用模型公司尚且被追问盈利,医疗AI周期更长、门槛更高、支付环节更复杂,投资人的等待自然会更慎重。

王小川常提账上那30亿现金,这是百川的底气。但医疗AI确实是个高投入的领域,每一分钱都得算着花。

光是医疗数据的合规处理,就是一大块固定支出。清洗、标注、脱敏,每一步都得花钱,还得紧跟越来越严的隐私法规。这还不包括临床试验——做医疗AI不能只靠论文和实验室数据,得进医院、跑流程、跟踪病例,这些都需要真金白银的投入。

用户获取也不轻松。做面向消费者的医疗产品,不打广告很难触达用户,但营销投入一上去,成本压力就来了。医疗产品建立信任比普通产品慢,用户尝试门槛高,转化起来自然更费劲。

这么看,30亿要支撑到2027年,每一步都得走得扎实。

王小川的策略是走差异化,聚焦“严肃医疗”,但做起来并不容易。

严肃医疗场景价值高,门槛也高。想进去,需要行业里的积累和信任,这不是技术参数好就能解决的。百川和北京儿童医院、肿瘤医院这些机构合作,方向是对的,但合作能带来多少收入验证、产品能否真的跑通,还需要时间。

出海是另一条路。王小川说“不能出海的医疗公司不是好公司”,决心有,难度也不小。各个国家的医疗体系差别很大,监管要求各不相同,从FDA到CE认证,每一关都要时间和资源。

2027年不远,时间其实挺紧。百川得在这期间完成产品验证、用户积累、收入提升和合规建设,每一项都是硬任务。另外,到了2027年,资本市场对医疗AI还有多少热情,现在谁也说不好。

这条路注定不容易,但方向如果能走通,机会还是有的。

王小川最近在采访中分享了一个细节:“生命比天气预报还复杂,凭什么背后有规律?所以我花时间去研究,总想找到背后的数学模型。”

这种对底层规律的执着,是技术人的浪漫,也是创业者的挑战。医疗体系的复杂性,可能远超天气预报和基因组学。它不仅涉及技术问题,更涉及人性、制度、文化等多重因素。

百川的未来,不仅取决于王小川的技术洞察,更取决于他对商业现实的理解和把握。2027年启动上市是一个目标,但能否实现还要看接下来每一步走得是否扎实。

在AI医疗这条漫长而艰难的路上,理想主义是必要的灯塔,但只有同时握紧技术、商业与现实的舵,才有可能穿越风浪。


2026-01-25 08:57:25

文 | 雷达财经,作者 | 丁禹,编辑 | 孟帅

中国工程院院士李立浧、智元机器人CMO邱恒、演员黄景瑜、探路者品牌创始人王静、啟赋资本董事长傅哲宽等业界名流,甚至还有硅基生物众擎机器人PM01,即将成为国内首批太空游客。

而将前述游客送“上天”的创业者,正是90后湘妹子、穿越者创始人兼CEO——雷诗情。

1月22日,雷诗情身着干练西装,亮相穿越者太空旅游全球发布会,向外界公布了穿越者的商业载人航天产品线规划。

按照穿越者规划的蓝图,其将在2028年前后实现首飞,将游客送至距地面100公里的“卡门线”;2032年挺进距地400公里的轨道;2038年进一步突破至月球轨道,开启地月空间探索之旅。

可以作为参照的是,杨利伟2003年乘坐神舟五号进入的轨道高度为343km,中国空间站轨道高度为400-450km。

而就在发布会召开前的1月18日,穿越者刚刚完成了中国商业航天首次载人飞船全尺寸试验舱着陆缓冲试验,成功跻身全球第三家研发并验证载人飞船着陆缓冲技术的商业航天企业。

值得一提的是,不久前IPO申请获正式受理的蓝箭航天,其创始人张昌武从金融行业跨界而来。而穿越者的掌舵人雷诗情,同样有着跨度极大的跨界履历。

雷达财经了解到,本科就读于暨南大学新闻专业的雷诗情,曾担任中央电视台记者、主持人,后在中国人民大学读研期间由文转理,将研究方向锁定在载人航天人因工程。2023年,雷诗情正式创办穿越者,借此开启充满未知与挑战的创业征程。

如今,点开穿越者官网,即可预定这张价值300万元的航天门票。有媒体透露,预付10%即可锁定名额。

从官网简介来看,承接本次太空之旅的飞船是穿越者壹号(CYZ1)亚轨道载人飞船,其重量不超过8吨,最大直径5m,舱内空间为25立方米,可重复使用率高达99%。

据悉,穿越者壹号单次可携带最多七名旅客,抵达距离地球表面100km高的“卡门线”(地球大气层与外太空的分界线),让旅客享受3-6分钟的失重体验。

目前,穿越者公布了其三步走的技术路线。第一步在第3-4年,实现亚轨道可重复使用商业载人飞船(CYZ1)研制,2028年实现中国乃至亚洲的太空旅游,开启普通人、商业载荷低成本、常态化上太空新纪元。

第二步是在第6-10年,实现入轨级可重复使用商业载人飞船(CYZ2)研制和目标飞行器交会对接,以及点对点运输,实现普通人、商业载荷上太空的层次升级,为国家送人送货,开展太空酒店常态化运营,开启太空商业综合体建设,全球1小时到达洲际运输,颠覆长旅行交通运输方式。

第三步是在第12-15年,实现登月级、深空探测可重复使用商业载人飞船(CYZ3)研制,开展商业载人登月旅行,打造地月经济圈。

2023年10月10日,穿越者迎来首位太空游客签约,智元机器人CMO邱恒自费购买中国首张商业航天“太空船票”,成为“中国001号商业航天员”。

据穿越者壹号技术团队介绍,截至目前,穿越者2028年的商业太空旅行已预订超三艘船,合计20余位太空游客,单张船票价格为300万元。

在发布会上,雷诗情指出,中国商业航天正从技术验证迈向“技术+运营”双轮驱动,太空旅游作为唯一面向全球消费端的赛道,将成为撬动在轨服务与地月经济的战略支点。

同时,雷诗情表示,穿越者是目前国内唯一获得了国家级商业载人航天项目立项批复的民营企业,计划在2028年前后实现亚轨道首飞。

穿越者正在打造的中国首艘全可重复使用的商业载人飞船计划通过复用,首先将票价从亿元级推向百万级,最终走向所期待的亲民性。

此外,穿越者还将联合多方打造覆盖科技、医疗、文旅、教育等领域的“太空经济生态树”,推动中国太空产业实现商业闭环。

作为中国首家商业载人航天民营企业,穿越者致力于打造中国的“龙飞船”,实现为国家和商业低成本送人送货。

天眼查显示,自2023年成立以来,穿越者已顺利完成4轮融资,累计融资金额至少达数千万人民币级别。

The investors behind 穿越者 form an impressive lineup that includes 启迪之星创投, 中天汇富, 启榕创投, 彬复资本, 啟赋资本 and 南粤基金, among other well-known institutions.

至于身为穿越者创始人、CEO的雷诗情,则是公司的实际控制人,其目前持有公司34.66%的股份。

回溯穿越者的发展历程,公司成立第二年,穿越者壹号(CYZ1)亚轨道载人飞船技术方案可行性研究报告通过国内载人航天领域院士、专家的评审,正式进入工程研制阶段,开始初样飞船的投产研制。

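Reportedly, 穿越者 had originally planned to carry out China's first commercial full-scale crewed-spacecraft landing-buffer test in the second half of 2025, to validate the landing system design and deliver what it calls the "云感着陆" ("cloud-feel landing") experience.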

雷达财经了解到,本次试验最终于2026年1月18日圆满完成,该试验完全模拟飞船再入返回后的真实着陆工况。

试验结果表明,在试验舱初始速度约7米/秒的条件下,“云感着陆”系统能够将舱体整体触地冲击过载稳定控制在5g以内,这一关键指标表现超出试验预期。

同时,试验后经检查确认,缓冲过程平稳,舱体结构完好,船载设备正常,充分验证了“云感着陆”系统设计的先进性、可靠性与适应性。

此外,穿越者还计划在2026年开展载人飞船零高度逃逸试验,验证载人飞船总体方案及逃逸救生关键技术。

尽管穿越者前进的步伐从未停歇,但和国外相对更为成熟的商业航天巨头相比,穿越者目前仍处于起步探索的阶段,存在明显的差距。

以SpaceX的载人龙飞船为例,其早在2019年3月便完成首次无人试验飞行任务,并成功实现与国际空间站的对接任务。

一年之后,SpaceX又顺利完成全球首次商业载人试飞任务,将NASA宇航员Bob Behnken和Doug Hurley安全送回地球,开启了商业载人航天的新纪元。NASA计划每隔几个月用SpaceX公司的航天器将宇航员送入太空,每个座位的费用估计为5500万美元。

据外媒统计,截至去年6月底,SpaceX已发射18次载人太空飞行,其中11次为NASA发射,7次为商业客户,共将68人送上太空。

若将亚轨道载人飞行也纳入其中,2021年7月,蓝色起源在西德克萨斯一号发射场利用“新谢泼德”系统成功实施首次载人亚轨道飞行,将公司创始人贝佐斯等4人送入亚轨道。

而在前述飞行完成的几天前,维珍银河抢先一步将公司创始人布兰森送入太空。2023年6月底,维珍银河又完成首次太空船搭载普通游客的亚轨道飞行。

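According to Jiemian News (界面新闻), Virgin Galactic's newest vehicle is due to enter service in mid-to-late 2026, with a planned capacity of about 750 passengers a year and six to eight seats per flight. The total cost of a trip is USD 600,000 (about RMB 4.35 million).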

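In China, CAS Space (中科宇航), Deep Blue Aerospace (深蓝航天) and others are also actively pursuing suborbital flight. In October 2024, Deep Blue Aerospace sold two tickets for a suborbital crewed spacecraft flight through a Taobao livestream, each with a deposit of RMB 50,000 and a livestream discount price of RMB 1 million.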

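Unlike "space forerunners" such as Musk and Bezos, who carry hard-tech credentials, Lei Shiqing (雷诗情) is a post-90s woman from Hunan with a liberal-arts background, and her cross-disciplinary pursuit of the dream gives 穿越者 a distinctive character.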

高中时期,雷诗情就读于湖南衡阳顶尖的衡阳市第八中学,还曾担任学生会主席,早早展现出出众的组织与领导力。

之后,雷诗情成功考入暨南大学新闻与传播学院学习。从暨南大学毕业后,雷诗情曾在央视中文国际频道(CCTV-4)工作,主持过多档聚焦大国重器、科技前沿与国家名片的大型系列专题节目。

在此期间,雷诗情曾参与录制央视大型商业航天专题系列片《逐梦太空》,这成为她与商业航天结缘的契机,也彻底点燃了她对这一领域的探索热情。

为了更深入地深耕航天领域,雷诗情在中国人民大学读研期间,毅然做出由文转理的大胆抉择,将研究方向锁定在载人航天人因工程,师从著名航天专家陈善广院士。

为了研究商业载人航天,雷诗情曾特意前往世界最大的航天发射场肯尼迪航天中心调研,并希望购买一张太空旅游的船票体验飞行全过程,却四处碰壁,均遭到婉拒。

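The disappointment only strengthened her resolve. Lei recalls: "In 2021 I watched Bezos and Branson fly to space in their own spacecraft, opening the first year of space tourism. I thought, someone in China has to do this."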

随着相关支持政策的相继出台,商业航天迎来发展风口,雷诗情于2023年与志同道合者携手创办穿越者公司,正式踏上跨界创业之路。

创业初期,雷诗情倾尽心力组建了国内唯一具备商业载人航天全方位、全流程研制、开发和运营能力的团队,其中不乏国家载人航天工程领导专家、航天五院载人飞船技术专家以及国家航天员中心专家。

战略与愿景的契合,是雷诗情打动各方技术专家的关键。而她自身则化身技术团队与运营团队之间的桥梁纽带,在商业载人航天安全、舒适与经济性的“不可能三角”中探寻最优解。

此外,身为文科生,雷诗情为“太空旅游”这一硬核IP赋予了独有的浪漫内核。在她看来,让硬科技“潮玩”起来,是中国亟待填补的空白。

“由于载人飞船跟火箭卫星相比有天然的互动性和文旅属性,所以我们落地的每一个项目都是集研制、展示于一体的。如何基于地面体验+俱乐部社群,利用名人效应来吸引各层次的人群,达到航天文旅先行变现,是我们一直在探索的。我们的产品其实是服务和体验,它是一段科技文化之旅。”
