2026-03-03 06:40:45

Jason and Myke try to predict what Apple will be announcing this week, except for the stuff that was announced Monday. But they discuss the new iPad Air and iPhone 17e too! Also: Apple’s F1 plans and some Report Card follow-up.

2026-03-03 01:45:19

A reader of my book Take Control of iOS 26 and iPadOS 26 had a perplexing problem. I had written of the menus available in iPadOS 26’s Windowed Apps and Stage Manager modes in Settings: Multitasking & Gestures:

With a mouse or touchpad, pushing the cursor to the top edge above the status bar reveals the menu bar.

Yet this reader couldn’t get the menu bar to appear that way with a pointing device. The cursor sometimes disappeared, and clicking didn’t help. They had to swipe, like some kind of animal, to make the menus appear. That’s less than ideal when you’re using a pointing device and a keyboard with an iPad, because you typically position the iPad differently than when you’re using it with touch input.

We went through some troubleshooting steps, but then it occurred to me that the culprit might be their Mac. That’s right: Universal Control could be the issue! Universal Control is Apple’s name for using the keyboard and mouse or other input devices on a Mac with one or two nearby Macs or iPads. (Apple’s documentation lists the minimum system and hardware requirements.)

You configure Universal Control on your Mac in System Settings: Displays. Click Advanced, and three Link to Mac or iPad options appear if the feature is available:

With the first setting enabled, the second is the key issue: Push through. I asked my email correspondent whether they had this feature enabled and, more crucially, whether they saw their iPad positioned below their Mac when they clicked the Arrange button at the bottom of the Displays settings (see figure).

The answer was yes, which is why they couldn’t move their pointer to the top of the iPad and have the menus appear: when they tried, the pointer slid through onto the bottom of their Mac’s display. I was able to reproduce this, and with some fine motor control, I could sometimes get the menu to appear before the pointer slid onto my Mac display.

They moved the iPad to one side of their Mac in Arrange, and the problem went away.

[Got a question for the column? You can email [email protected] or use /glenn in our subscriber-only Discord community.]

2026-03-02 22:42:52

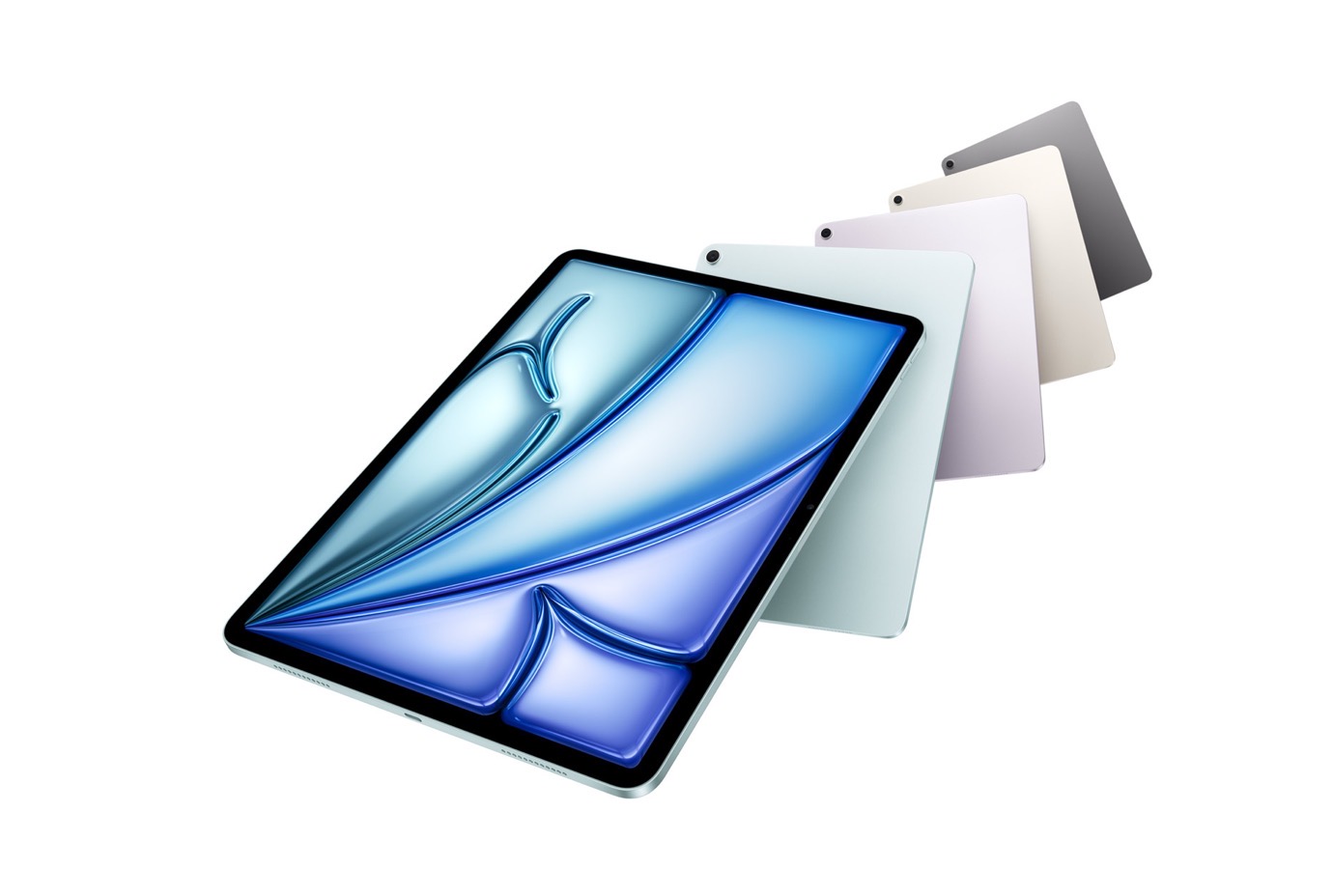

Continuing its cavalcade of product announcements, Apple also rolled out an updated iPad Air on Monday, upping the tablet’s processor to the M4 and increasing its RAM and memory bandwidth.

Don’t expect the iPad Air to look much different from its predecessor: not only does it have the same dimensions, but it comes in the same Space Gray, Blue, Purple, and Starlight colors as the M3 model and the M2 before it. Battery life is unchanged as well, at up to 10 hours. The new models remain compatible with all the accessories of the previous versions, including the Apple Pencil Pro, the Apple Pencil (USB-C), and the 11-inch and 13-inch Magic Keyboards.

All the major improvements are under the hood. In addition to the M4 processor’s 30-percent performance improvement, there’s now 12GB of unified RAM—up from 8GB of memory in the M3 Air—and memory bandwidth of 120GB/s, compared to the 100GB/s offered by the earlier model. The M4 also unlocks hardware acceleration for 8K in more formats, including H.264, ProRes, and ProRes RAW.

There are some improvements on the connectivity side as well. The M4 Air also gets Apple’s own N1 wireless chip, adding support for Wi-Fi 7 and Bluetooth 6 as well as better performance on 5GHz Wi-Fi networks. Cellular models use Apple’s C1X, the same chip inside the new iPhone 17e, which Apple says offers more energy-efficient performance.

As with the iPhone 17e, Apple continues to tout the device’s environmentally friendly construction. The new iPad Air is made with 30 percent recycled content, including 100 percent recycled aluminum in the exterior and 100 percent recycled cobalt in the battery.

The new iPad Air will be available for pre-order on Wednesday, March 4, and will arrive on Wednesday, March 11. The 11-inch model starts at the same $599 price point, with the 13-inch beginning at $799.

2026-03-02 22:25:24

Apple on Monday kicked off its week of announcements by rolling out the new iPhone 17e, the successor to last year’s lower-cost 16e.

The 17e boasts the same A19 chip that powers the iPhone 17, a step up over the A18 in the 16e, including a 4-core GPU enhanced with Neural Accelerators. The 17e also has the same C1X Apple cellular chip as last year’s iPhone Air, the successor to the C1 that debuted in the 16e; Apple says the C1X provides up to 2x better performance and uses 30 percent less energy.

Apple’s also bumped the storage this year, with the 17e now starting at 256GB, twice the 16e’s base level, at the same $599 price point. Upgrading to 512GB will raise the price to $799.

Perhaps the biggest addition to the 17e is the inclusion of MagSafe, a feature that was strangely missing from the 16e. That includes charging up to 15W with a compatible charger, and full compatibility with MagSafe accessories as well as support for Qi2 wireless charging.

In addition, the 17e’s front display has been updated with Ceramic Shield 2 technology to help protect against cracks and breaking as well as reduce glare.

Otherwise, the specs remain largely unchanged, including the same 6.1-inch Super Retina XDR display, up to 26 hours of battery life, and support for both Emergency SOS features and Apple Intelligence.

Apple also says that the 17e has a 48MP Fusion camera system. On the face of it, that seems identical to last year’s “2-in-1 camera system,” although Apple touts the 17e’s “next-generation” portrait mode, which can recognize people, dogs, and cats and can apply portrait effects after the fact. The 12MP TrueDepth front camera likewise has the same specs as last year, with the same addition of “next-generation portraits.” Apple attributes these capabilities to improvements in its image pipeline.

And in case you thought Apple’s environmental promises were out the window in this day and age, the company does say that the 17e is made with 30 percent recycled content. That includes 85 percent of its enclosure, made with recycled aluminum, and 100 percent recycled cobalt in its battery.

The iPhone 17e is available in three colors: black, white, and what Apple is calling “soft pink.” It goes up for pre-order this Wednesday, March 4, and will be available for sale next Wednesday, March 11. There are also six colors of Silicone Case with MagSafe—black, anchor blue, light moss, vanilla, bright guava, and soft pink—as well as a clear case, each retailing for $49.

2026-03-01 11:45:43

Jason Snell returns to John Gruber’s talk show to discuss the 2025 Six Colors Apple Report Card, macOS 26 Tahoe, and Apple Creator Studio, along with what we expect (and hope) to see in next week’s Apple product announcements.

2026-03-01 02:20:13

I’m a Six Colors Subscriber who likes to draw pictures of data. As in previous years, Jason Snell kindly asked me if I wanted to try drawing some additional graphs based on the 2025 Report Card. In prior years, I’ve looked at the questionnaire data in ways that a social scientist might, mostly focusing on how the answers cluster together across respondents.

This year, Jason’s discussion of the results here at Six Colors and on Upgrade highlighted not just this or that question but a more general feature of the data: the bad vibes. The vibes around Apple seem worse this year. Naturally, we want to know: what can … (here you should imagine me turning my head dramatically while the camera suddenly zooms in) … science … tell us about these vibes?

Well, if we were just relying on the survey, not that much. But when your panel of fifty or more also write tens of thousands of additional words of commentary, your polite attempts to dissuade them from doing so notwithstanding … Well, maybe that can be grist for our mill. Of science. It’s a science mill, OK? One that can be made to do a little sentiment analysis of the 2025 commentary to see how it compares to the vibes from 2024.

First, let’s just take a quick look at the survey questions. Non-response patterns are always worth looking at. Here’s a chart showing which questions were most likely not to be answered by panelists.

Everyone on the panel, or almost everyone, has an opinion on the Mac, Hardware reliability, and OS Quality. Last year, everyone had an opinion on the iPhone, too. In 2025, even more people than in 2023 had no opinion on the Vision Pro (over 35 percent of respondents). This is plausible, given that no one who doesn’t have a podcast owns a Vision Pro. Twenty of the 57 respondents have no views on Developer Relations, because they are not developers. This is also a consistent divide in the panel. While its membership shifts a little from year to year, it has a constituency of developers who have somewhat different preoccupations from their non-developer co-panelists. There’s also a steady group of people who have little to no interest in Home-related things.
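A non-response chart like this one boils down to a simple tally per question. Here is a minimal Python sketch, assuming (hypothetically) that each panelist’s answers are stored as a dict mapping question names to a score from 1 to 5, with `None` marking a skipped question. The function name `nonresponse_rates` and the sample panel are illustrative, not the author’s actual code or data.

```python
from typing import Optional

# Assumed layout (hypothetical): question name -> score 1..5, or None if skipped.
Response = dict[str, Optional[int]]


def nonresponse_rates(responses: list[Response]) -> list[tuple[str, float]]:
    """Share of respondents who skipped each question, highest share first."""
    questions = sorted({q for r in responses for q in r})
    rates = []
    for q in questions:
        # A question absent from a respondent's dict also counts as skipped.
        skipped = sum(1 for r in responses if r.get(q) is None)
        rates.append((q, skipped / len(responses)))
    # Highest non-response first; ties broken alphabetically for stable output.
    return sorted(rates, key=lambda pair: (-pair[1], pair[0]))


# Made-up example panel of three respondents.
panel = [
    {"Mac": 4, "Vision Pro": None, "Developer Relations": 2},
    {"Mac": 3, "Vision Pro": 3, "Developer Relations": None},
    {"Mac": 5, "Vision Pro": None, "Developer Relations": None},
]
for question, rate in nonresponse_rates(panel):
    print(f"{question:<20} {rate:.0%}")
```

Whether a blank cell and a missing column both mean “no opinion” is a data-cleaning decision; this sketch treats them the same way.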

The fact that the panel is not that big presents some appealing possibilities for visualization. Normally, when it comes to data, more is better. But the Report Card panel is, at its core, fifty or so people answering twenty or so questions. You can very nearly take it all in at once. Just not quite. Visualizations can help here. Here’s one of my favorite ways to try to see everything at the same time. The data is just a spreadsheet where the rows are the respondents and the columns are the questions. Each cell contains a particular respondent’s score for a particular question. It may be missing, but otherwise every score is on the same scale from 1 to 5. Now imagine you have some method for shuffling the rows and the columns in a systematic way until similar respondents sit next to one another and similar questions sit next to one another. This is a way to see patterns of correspondence between the rows and the columns, i.e., between your cases and your variables.

One of my favorite ways to do this for data of this size is to make a Bertin Plot. Named for the French geographer Jacques Bertin, who developed it in the 1960s, plots like this involve permuting or “seriating” both the rows and columns of your table. They were originally done by hand using a matrix of Lego-like blocks that could be skewered in the rows and columns. Now we can make the computer do it for us. In this case, the result is easier to see if we flip the spreadsheet on its side and put the respondents in the columns and the questions in the rows.
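The article doesn’t say which seriation algorithm produced the plot, and there are many. As a rough illustration only, here is a simple greedy nearest-neighbor heuristic in Python (a swapped-in technique, not necessarily the author’s): starting from the first row, it repeatedly appends the most similar unused row, measuring similarity as the mean absolute score difference over the questions both rows answered. Running the same routine on the transposed matrix orders the columns. All function names are hypothetical.

```python
import math
from typing import Optional

Matrix = list[list[Optional[int]]]  # rows = respondents, columns = questions


def distance(a: list[Optional[int]], b: list[Optional[int]]) -> float:
    """Mean absolute difference over positions where both rows have a score."""
    pairs = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if not pairs:
        return math.inf  # nothing in common to compare
    return sum(abs(x - y) for x, y in pairs) / len(pairs)


def greedy_order(rows: Matrix) -> list[int]:
    """Order row indices so each row is followed by its most similar unused row."""
    if not rows:
        return []
    remaining = set(range(len(rows)))
    order = [0]  # arbitrary starting point: the first row
    remaining.remove(0)
    while remaining:
        last = rows[order[-1]]
        # Closest remaining row; ties go to the lower index.
        nxt = min(remaining, key=lambda i: (distance(last, rows[i]), i))
        order.append(nxt)
        remaining.remove(nxt)
    return order


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def seriate(m: Matrix) -> tuple[list[int], list[int]]:
    """Return (row order, column order) for a respondents-by-questions matrix."""
    return greedy_order(m), greedy_order(transpose(m))


scores = [
    [5, 4, None, 2],
    [1, 2, 3, 5],
    [5, 5, 2, 1],
    [2, 1, 3, 4],
]
rows, cols = seriate(scores)
print("row order:", rows, "column order:", cols)
```

Greedy ordering is quick but can leave an awkward jump near the end; methods that minimize the total distance between neighbors tend to produce cleaner blocks.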

The nice thing about this representation is that by coloring in only the “good” scores (4s and 5s), while still showing the “bad” ones (3s and lower) and keeping the non-responses visible, we get an immediate sense of the entire dataset at a glance, and we can see which questions and which groups of panelists tend to hang together.
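To make that coloring rule concrete, here is a minimal text-only sketch in Python, with plain characters standing in for colored cells (the function name is hypothetical). Questions become lines and respondents become columns, as in the flipped layout: `#` marks a 4 or 5, `.` marks a 3 or lower, and a blank marks a non-response. It takes the row and column orders as inputs, such as those produced by any seriation method.

```python
from typing import Optional


def render_bertin(
    scores: list[list[Optional[int]]],  # rows = respondents, columns = questions
    questions: list[str],
    row_order: list[int],
    col_order: list[int],
) -> str:
    """Render a text Bertin plot with questions as lines, respondents as columns."""
    def cell(score: Optional[int]) -> str:
        if score is None:
            return " "  # non-response stays visible as a gap
        return "#" if score >= 4 else "."  # fill in only the "good" scores

    width = max(len(q) for q in questions)
    lines = []
    for q_idx in col_order:  # each question becomes one line
        marks = "".join(cell(scores[r_idx][q_idx]) for r_idx in row_order)
        lines.append(f"{questions[q_idx]:<{width}} |{marks}|")
    return "\n".join(lines)


scores = [[5, None, 2], [2, 4, 1], [4, 5, None]]
questions = ["Mac", "Home", "Vision Pro"]
print(render_bertin(scores, questions, row_order=[0, 2, 1], col_order=[0, 1, 2]))
```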

So much for the questionnaire. What about the long-form textual responses? Now, perhaps, like Jason, you dutifully read every word of the complete commentary. But maybe your reaction was, as per the meme, “i ain’t reading all that; i’m happy for you tho; or sorry that happened”. For this bit of the analysis, I took everyone’s full-length responses (which Jason very helpfully labels and categorizes) for both this year and last year. The question at hand is: have the vibes shifted? And if so, how?

You already know the answer. The vibes are, in a word, bad. With about sixty thousand words of increasingly bad vibes to play with, we can do a little text mining to contrast how panelists felt in 2025 and 2024. First, let’s get some overall sense of the keywords. To do that, we’ll construct TF-IDF (term frequency-inverse document frequency) scores for every word in the data. Some words appear often in the Report Card commentary just because they appear often everywhere: “a,” “the,” “really,” etc. Those aren’t very informative. Net of those, we want to pick out words that are important to a particular part of the corpus relative to the corpus as a whole. TF-IDF downweights words that are common across our text groupings (e.g., if we divide the text by year, by question, or by both at once) and upweights words that are concentrated in particular groups. Here’s a picture of the most distinctive words (those with the highest TF-IDF scores) across all responses in 2024 as compared to 2025:
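Here’s a minimal Python sketch of the TF-IDF idea, treating each group (say, a year’s worth of responses concatenated together) as one “document.” It uses one common variant: term frequency is a word’s share of its group’s words, and inverse document frequency is the natural log of the number of groups divided by the number of groups containing the word, so a word that appears in every group scores zero. The tokenizer and function names are assumptions for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Simplifying assumption: lowercase, keep runs of letters, digits, apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())


def tf_idf(groups: dict[str, str]) -> dict[str, dict[str, float]]:
    """TF-IDF for every word in every group, treating each group as one document."""
    counts = {name: Counter(tokenize(text)) for name, text in groups.items()}
    n_groups = len(counts)
    # Document frequency: how many groups contain each word at least once.
    df = Counter(word for c in counts.values() for word in c)
    scores: dict[str, dict[str, float]] = {}
    for name, c in counts.items():
        total = sum(c.values())
        scores[name] = {
            word: (n / total) * math.log(n_groups / df[word])  # tf * idf
            for word, n in c.items()
        }
    return scores


def top_terms(word_scores: dict[str, float], k: int = 5) -> list[str]:
    """Highest-scoring words, ties broken alphabetically."""
    ranked = sorted(word_scores.items(), key=lambda p: (-p[1], p[0]))
    return [word for word, _ in ranked[:k]]


# Toy stand-ins for a year's worth of commentary.
years = {
    "2024": "the carbon neutral goal is really the story",
    "2025": "the gold plaque is really the story the gold",
}
scores = tf_idf(years)
for year, word_scores in scores.items():
    print(year, top_terms(word_scores, 3))
```

With only two groups, the zeroing is blunt: anything mentioned in both years drops out entirely, which is one reason splitting by question as well as by year gives a more graded picture.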

This gives us a very rough sense of how the focal topics have shifted from 2024 to 2025. We can do this by question, too, because that’s how Jason organizes the responses. Within the categories, many of the distinctive terms are what one would expect, like “MacBook” under Hardware Reliability or “HomeKit” under Home. We also don’t have to restrict ourselves to single words in an analysis like this. For instance, we can count up the most distinctive two-word phrases, or bigrams. Now, for many of the sub-categories, the most common bigrams are just the ones you’d expect, like “Liquid Glass”, “Mac Mini” or “Apple Watch”. So let’s just look at the open-ended “Anything else to say?” question and the “World Impact” question to get a sense of topical shifts from 2024 to 2025.
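Counting bigrams is mostly a matter of pairing each word with the one after it. Here’s a minimal Python sketch with hypothetical helper names and no stop-word filtering. It counts raw frequency; for the “most distinctive” phrases, the bigram strings would take the place of single words in the TF-IDF weighting described earlier.

```python
import re
from collections import Counter


def bigrams(text: str) -> list[str]:
    """Adjacent word pairs, lowercased (pairs may span sentence breaks; a simplification)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [f"{a} {b}" for a, b in zip(words, words[1:])]


def top_bigrams(texts: list[str], k: int = 3) -> list[tuple[str, int]]:
    """Most frequent bigrams across a list of responses."""
    counts = Counter()
    for text in texts:
        counts.update(bigrams(text))
    return counts.most_common(k)


responses = [
    "Liquid Glass made me miss the old look. Tim Cook and the 24k gold plaque.",
    "Tim Cook again. Liquid Glass again.",
]
print(top_bigrams(responses))
```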

Tim Cook dominates both these categories in both years, as one might expect. One thing worth noting is that, because of the timing of the Report Card survey, the Trump administration was already very much on panelists’ minds when they were answering the 2024 version. By the time they were reflecting on Apple’s 2024, it was already early 2025: the U.S. presidential election had happened, Tim Cook had attended Trump’s inauguration, and he had personally donated a million dollars to Trump’s inaugural fund. Still, the additional shift from 2024’s “carbon neutral” and “environmental impact” to “24k gold” and “bottom line” is notable.

Now, what about people’s feelings around these terms? I used two tools from computational text analysis to characterize the tone and emotional content of the long-form responses. Both work on the same principle: they match individual words in each response against a pre-built dictionary (or “lexicon”) of words that have been scored or tagged by human raters. The results are statistical summaries. They capture broad patterns across many responses rather than close-reading any single one. On a corpus this size (too big for someone to immediately digest, but in the grand scheme of things really rather small), they’re not going to do a whole lot better than the sense you’d get from using your own ability to read, one of many remarkable capacities that the lump of watery cholesterol sitting between your ears somehow possesses.

First, the AFINN lexicon is a list of about 2,500 English words, each rated on a scale from -5 (very negative) to +5 (very positive). The ratings were originally compiled by Finn Årup Nielsen. Words like “outstanding” or “love” score positively; words like “terrible” or “hate” score negatively. Most everyday words are not in the list at all and are simply skipped. To score a response, I find every word in it that appears in the AFINN list and take the average of those words’ scores. A response whose matched words average out to, say, +1.2 is mildly positive in tone; one averaging -0.5 is mildly negative. I then aggregate these scores by topic and compare them between the 2024 and 2025 reports. Here’s what that looks like:
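Here’s a minimal Python sketch of that scoring and aggregation. The six-word lexicon is a stand-in with made-up values in the AFINN style; the real AFINN word list would replace it. The function names and the (topic, year, text) layout are assumptions.

```python
import re
from statistics import mean
from typing import Optional

# Tiny illustrative lexicon in the AFINN style (integers from -5 to +5).
# Entries and values here are assumptions for the example, not quoted from AFINN.
LEXICON = {"outstanding": 5, "love": 3, "great": 3, "terrible": -3, "hate": -3, "buggy": -2}


def afinn_average(text: str, lexicon: dict[str, int] = LEXICON) -> Optional[float]:
    """Mean score of the words found in the lexicon; None if nothing matches."""
    words = re.findall(r"[a-z']+", text.lower())
    matched = [lexicon[w] for w in words if w in lexicon]  # unlisted words are skipped
    return mean(matched) if matched else None


def by_topic_and_year(rows: list[tuple[str, str, str]]) -> dict[tuple[str, str], float]:
    """Average the per-response scores within each (topic, year) pair."""
    groups: dict[tuple[str, str], list[float]] = {}
    for topic, year, text in rows:
        score = afinn_average(text)
        if score is not None:  # responses with no matched words are left out
            groups.setdefault((topic, year), []).append(score)
    return {key: mean(values) for key, values in groups.items()}


print(afinn_average("I love the Mac, but Tahoe is buggy"))
print(afinn_average("Not great, Bob"))  # word-level only: "not" is ignored, so this scores positive
```

Returning `None` rather than 0 for responses with no matched words keeps unscored text from masquerading as neutral text in the averages.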

Again, this method works purely at the word-level. It does not understand sarcasm, or even simple negation (“Not great, Bob”), let alone more sophisticated things like context or irony. A sentence like “I can’t believe how great every new Mac is” will score positively because of “great,” even though “can’t believe” might signal surprise more than straightforward praise. Averaging the scores has its costs, too. Scores near zero can mean either genuinely neutral language or a mix of positive and negative words that just cancel out.

Let’s try a different approach. The NRC lexicon, developed by Saif Mohammad and Peter Turney at the National Research Council of Canada, tags about 14,000 English words with the emotions they tend to be associated with. The system uses eight categories of emotion based on Plutchik’s wheel of emotions. This is a model of general emotional responses, not a game show, relationship experience, or torture device. The emotions are anger, anticipation, disgust, fear, joy, sadness, surprise, and trust. A single word in the NRC lexicon can have more than one tag. “Abandoned,” for instance, is tagged with both fear and sadness.

As with AFINN, we match every word in the panelists’ long-form responses against the NRC list, count how many times each emotion category appears, and convert those counts to proportions. If 20% of all emotion-tagged words in the 2025 responses are tagged “trust” and 8% are tagged “anger,” that tells us something about the overall emotional texture of the commentary that year. Here’s an overall comparison of the vibes in 2024 and 2025:
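A minimal Python sketch of that counting step follows. The lexicon excerpt is illustrative: the “abandoned” entry comes from the example above, and the other tags are assumptions rather than quotations from the NRC list. The denominator here is the total number of tags matched, so a multi-tag word like “abandoned” counts once for each tag; dividing by the number of tagged words instead is an equally plausible reading, and the article doesn’t specify which was used.

```python
import re
from collections import Counter

# Illustrative excerpt in the style of the NRC Emotion Lexicon (word -> emotion tags).
# "abandoned" -> fear, sadness comes from the article; the other tags are assumptions.
NRC_SAMPLE = {
    "abandoned": {"fear", "sadness"},
    "reliable": {"trust"},
    "expect": {"anticipation"},
    "furious": {"anger"},
    "delight": {"joy"},
}


def emotion_shares(text: str, lexicon: dict[str, set[str]] = NRC_SAMPLE) -> dict[str, float]:
    """Each emotion's share of all emotion tags matched in the text."""
    counts = Counter()
    for word in re.findall(r"[a-z']+", text.lower()):
        counts.update(lexicon.get(word, ()))  # a multi-tag word adds to every tag
    total = sum(counts.values())
    return {emotion: n / total for emotion, n in counts.items()} if total else {}


print(emotion_shares("I felt abandoned, but I expect the Mac to stay reliable"))
```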

The same caveats apply here as with AFINN: the method is wholly context-free and works at the word level only. It is better at pinning down vibes from a largeish body of text. Its context-free character can also pollute the analysis in unexpected ways. For example, “trust” and “anticipation” tend to appear as “emotions” in English-language writing about technology, but that is because the relevant vocabulary (words like “support,” “reliable,” “expect,” and “update”) is prevalent in this domain for reasons that often have little to do with the emotion of trust as such. Relative differences between years or topics are probably more informative than absolute proportions. We can see that joy and fear were relatively more prominent in 2024, while anger and disgust were relatively more prominent in 2025. We can also break this out by topic area. Let’s look at four:
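Because relative differences are the more trustworthy signal, a year-over-year comparison reduces to subtracting one year’s emotion shares from the other’s. Here is a minimal Python sketch, assuming (hypothetically) that each year’s proportions are already in a dict keyed by emotion; an emotion missing from one year counts as zero there. The function name and the numbers are made up for illustration, not the Report Card results.

```python
def share_shift(before: dict[str, float], after: dict[str, float]) -> list[tuple[str, float]]:
    """Change in each emotion's share (after minus before), biggest gain first."""
    emotions = set(before) | set(after)
    shifts = [(e, after.get(e, 0.0) - before.get(e, 0.0)) for e in emotions]
    # Largest increase first; ties broken alphabetically.
    return sorted(shifts, key=lambda pair: (-pair[1], pair[0]))


# Made-up proportions, not the Report Card results.
y2024 = {"joy": 0.25, "fear": 0.125, "anger": 0.125}
y2025 = {"joy": 0.125, "fear": 0.0625, "anger": 0.25, "disgust": 0.125}
for emotion, delta in share_shift(y2024, y2025):
    print(f"{emotion:<8} {delta:+.3f}")
```

With a corpus this small, a shift of a few points can come from a handful of words, so these differences are better read as direction than as magnitude.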

We can see distinct shifts in emotional valence within the categories. In the open-ended “Anything else to say?” prompt, joy is notably more prominent in 2024 than in 2025. Sadness, anger, and disgust move in the opposite direction, with relatively more of them in 2025 than in 2024. Once again, it’s quite tricky to quantify the scope and meaning of these shifts with tools as crude as these. Breaking things out by category makes it clear that the nature or meaning of one’s anger may be quite different across contexts. Panelists in 2025 are comparatively much angrier about Apple Software than they were in 2024, for example. But this anger might not have the same character as that expressed in the “Anything else to say?” category. Still, crude as our vibesometer is, it does seem to register the shifts Jason was feeling in the responses.

Finally, on Upgrade this past week, Jason wondered if being angry about Apple’s World Impact might cause people to downgrade the company on other dimensions. It’s a good question. There are several more and less complicated ways to assess it, but with data like this, none of them is really decisive. Here is a very simple way to just look at the association between the two.

A low impact score is reasonably strongly associated with lower scores on other questions, on average, but we’re not really in a position to say that it caused people to assign those lower scores.
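One simple way to put a number on that association is a correlation between each panelist’s World Impact score and the average of their other answers. The sketch below is a hypothetical Python illustration: the “mean of other answers” summary and the function names are assumptions, since the article doesn’t specify how its chart was built. Like the chart, it describes an association, not a cause.

```python
import math
from statistics import mean
from typing import Optional

Response = dict[str, Optional[int]]  # question -> score 1..5, or None if skipped


def impact_vs_rest(responses: list[Response], impact_key: str = "World Impact") -> list[tuple[float, float]]:
    """(World Impact score, mean of that respondent's other answered scores) pairs."""
    pairs = []
    for r in responses:
        impact = r.get(impact_key)
        others = [v for q, v in r.items() if q != impact_key and v is not None]
        if impact is not None and others:  # skip anyone missing either side
            pairs.append((float(impact), float(mean(others))))
    return pairs


def pearson(pairs: list[tuple[float, float]]) -> float:
    """Pearson correlation coefficient of the (x, y) pairs."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when either variable has no spread")
    return sxy / math.sqrt(sxx * syy)


panel = [
    {"World Impact": 1, "Mac": 3, "Software Quality": 2},
    {"World Impact": 3, "Mac": 4, "Software Quality": None},
    {"World Impact": 5, "Mac": 4, "Software Quality": 4},
    {"World Impact": 2, "Mac": 3, "Software Quality": 3},
]
print(round(pearson(impact_vs_rest(panel)), 2))
```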

So there you have it. You had a sense that the vibes were bad; now you know that the numbers maybe kinda confirm it. Pinning down exactly how people feel using only descriptive numerical methods is quite tricky. But that, I suppose, is the nature of vibes.