2025-09-04 00:38:57

One of the questions I hear a lot is "will AI be as big of a catalyst for a consumer AI wave as mobile?"

It’s a narrow question, but still an interesting one to think through. There were three elements at play with mobile:

1. Mobile enabled a dramatic expansion in internet minutes that were up for grabs. Rather than the zero-sum game of fighting for the limited time people spent in front of a desktop computer, suddenly there were all these new minutes up for grabs -- when someone was commuting, waiting in line, on the toilet, etc. This is why the consumer mobile wave was biased towards a particular set of players: those that offered more dopamine per minute by connecting to the internet than staying in the analog world.

2. Mobile introduced new ingredients with which a consumer founder could build (multiple cameras, GPS chip, accelerometer), creating greenfield opportunities for startups.

3. The AppStore dramatically reduced the friction of installing a new application, making it easier for people to download apps to their heart's content.

Now let's do a thought experiment:

If we didn't have (2), and we had these powerful computers in our pockets *without* any cameras/GPS/etc, would mobile have still been a huge catalyst for consumer startups? Definitely. True, we wouldn't have had Uber, Instagram, Snap, or TikTok, but apps like FB, WhatsApp, Twitter, LinkedIn, Pinterest, Reddit, Airbnb, YouTube, etc. would still be the dominant players they are today.

What if we didn't have (3)? I think consumer internet would have been fine -- the motivation was so strong. And the friction would have gotten ironed out over time.

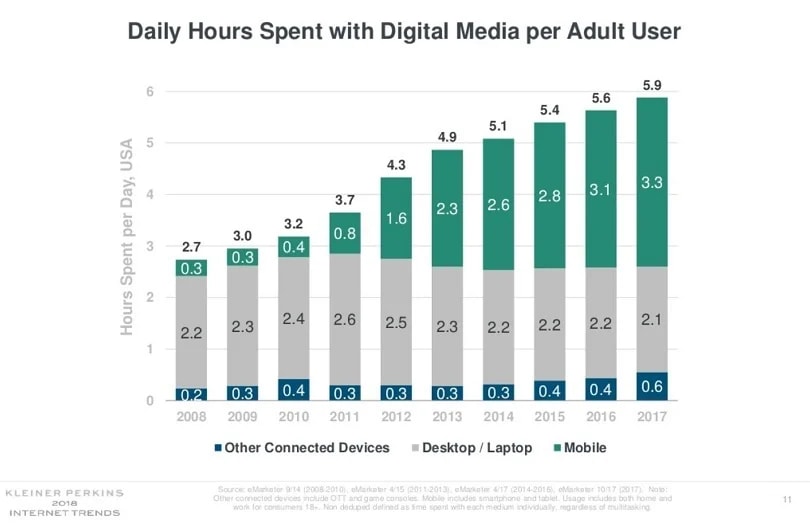

But for (1), what if we held the # of minutes a person could spend online constant pre-mobile vs post? Instead of the almost seven hours we now spend on our devices, you’d have companies fighting for ~2hrs of daily internet time. This would definitely have tempered the magnitude of the mobile consumer internet wave.

If we take this thought experiment back to AI consumer apps, there are broadly two categories of AI consumer apps: There are going to be those that will orient towards consumer attention, and those that will use AI as more of an enabling technology for a utility (think Google Maps or Uber in the mobile era). The mobile era saw an explosion of startups going after both categories. How about the consumer AI era?

Let’s start with the first category: AI apps that orient towards consumer attention. Here, the question comes down to how much AI is able to expand the # of minutes we spend connected digitally, and then what % of existing digital minutes AI can cannibalize.

For example:

You can engage with an AI friend or romantic partner instead of a real life friends / romantic partners.

You can talk to your AI / AI friend / AI therapist / AI cooking coach / etc while you are cleaning the house, prepping dinner, walking your dog, commuting, instead of listening to music / podcast / radio, watching TV, etc. (Related post: A framework for consumer attention. Voice AI is a “liquid”.)

The education use cases are obvious for younger people — AI will be a constant companion through school work.

You might have more leisure time in a world where AI means you need to work less. etc.

(As an aside: Yes of course, there are COUNTLESS use cases where you will use AI during the day to accomplish work, but I’m focused here on the strictly consumer use cases.)

In a way, the success of the consumer internet (including gaming) has created the conditions for one of the biggest AI consumer opportunities: loneliness.

Still, this isn’t like the mobile era where the dopamine maximizing drive of consumers made changing behavior easy. An AI friend has to compete for time against the dopamine optimized torrent of TikTok et al (which will all be taking advantage of LLMs in their own way to further optimize engagement), whereas when Instagram was ascendent, it was mostly competing with the unoptimized analog world. Earning a minute today is a way harder battle to win than it was in the early days of mobile.

Don’t get me wrong: AI will win a lot of consumer minutes (maybe too many, if you think about the importance of human-to-human connection), but it won’t be the same magnitude as the minute land-grab of the mobile era… until we have new AI-native devices or neural implants that will once again dramatically expand the amount of time we connect digitally. So by this measure, the consumer AI wave should be smaller than the mobile wave.

But consumer minutes is not the only battleground. There will be countless consumer AI companies that emerge that are more akin to Uber or Google Maps: companies that take advantage of AI to make consumer’s lives easier or give consumers new superpowers (think companies like Manus* or Lovable). In other words, consumer AI companies that are more about (2) than (1). I think this will be more of the battleground for consumer AI startups, at least in terms of quantity of companies.

It won’t be easy, and as I’ll write about next, we’re early in the wave. But get ready. We’re in for a lot of change.

If you are building on the frontier of consumer AI, I'd love to hear from you. My email remains Sarah at benchmark dot com.

*A Benchmark portfolio company.

2025-08-20 23:02:16

[I'm embarrassed how long this post took me to sit down to write. Got distracted by a couple other drafts, vibing, and a lot of reading this summer. Hopefully better late than never!]

As I mentioned in my last post, An AI Metamorphosis, I love Borislav’s perspective on AI because I feel like he has fully immersed himself in it, he thinks deeply about the craft of coding, and he has a similar philosophical perspective on things. This is the second half of our discussion, which contains three sections:

Why Rekki’s CTO, Borislav, still codes two days a week without any help from an LLM.

The theory of mind, and reviewing code generated by an LLM.

What the future holds as more and more code is written by LLMs.

One of the things that I hadn't heard before is how Borislav insists on coding two days per week in a blank terminal, with no AI help.

"I have days where I don't code with any AI.... I open a blank terminal and then I just code. I don't do any syntax highlighting or autocomplete or anything, just code... Because you start to forget... If you just use language models all the time, so much code gets written. You start losing touch with what is a lot of code, because [ideally] you want zero code. The more you use an AI, the more you forget. It gives you a bunch of code — you would never have written code like that, but.. the code is in front of you, in just a few seconds, and it works. You accept it and feel kind of ok because you don’t consider it ‘my’ code, ‘I’ didn't write this code. You have no empathy towards it. You just read it and accept it like some alien code."

There are two things embedded here: first, is this "slow boiling frog" element that Borislav elaborates on below, where you lose touch with what great code looks like. Second is how an engineer can get abstracted from the prior pride of authorship of the code they create. They didn’t create the code and don’t feel the same level of ownership of it, so now, it's less about the code itself, and more "does it get the job done"? But as we all know, the test "does it get the job done" can be short term gain, long term pain.

Back to Borislav:

"On one of the AI-free days, as you are writing a bunch of boilerplate yourself, you start hating it. Why, why am I writing this? This code is just nonsense. So your nonsense filter stays sharp. The next day, when the LLM spits out 500 lines of code, I tell it 'that's too complex, I don't want it’, I can still feel the spidey sense. Otherwise, it's slow boiling the frog. When you're pushed little by little, your tolerance changes so much. And you forget what is good or not."

This comment made me think of how so many engineering teams now report on what percentage of their code is written by AI, but this can be a bit of a vanity metric (and long time readers know how much I hate vanity metrics!). I get the desire to show how much they are embracing AI by reporting on this, but if you optimize for this metric, you won’t be motivated to edit the extreme amounts of code the AI creates, creating challenges in the future. Something to watch out for. Back to Borislav:

"The key is you have to grow your capacity to make the system do what you want it to do, for it to be a way to express yourself. How easy is it for you to tell the computers what to do? For example in 1980 you just poke an address and a pixel appears, the distance between the code, the wires and the display is in the order of a few hundred lines of machine and micro code, now, there are easily 10 million lines of code. It is easier to add new abstractions and new indirections than to fix anything, and in the last 15-20 years we have been doing that, and this is what language models learned from. Why do we even add layers of indirection and abstractions? Because we are humans, and this is our way to manage complexity. The language model is a stochastic ghost of a human, so it also abstracts. When there are 10 billion lines, I won’t even try to comprehend what is going on, I will have to settle for a summary of the code. It's just going to be too much if you don't try to take control of it... You want to conserve complexity, not let accidental complexity bubble up. But it's going to be really hard to distinguish accidental complexity from actual complexity when you are 100k lines of alien code deep."

One of the challenges with editing LLM-generated code Borislav talks about is the "theory of mind" -- basically, how humans have an intuition for how other humans think through a problem, but that that intuition doesn't map to how a LLM thinks.

Borislav gives the classic example of being in the room with Sally. Sally leaves her keys on the table and then leaves the room. You take those keys and put them in a box on the table. When Sally comes back to the room, she knows to look in the box because she can pretend to be you in her mind, and know that that is probably where you would hide the keys.

Back to code, "when I read somebody else's code, there are all kinds of reasons for this code's existence, for example historical. At Booking.com, there was a column in a table that was called country code, and inside of it, it had currency. You read code that seems like nonsense, but you know there is a human reason for it. You ask yourself ‘why did they do it like that?’ But when you have this stochastic machine that produces tokens, this is just not true. You don't have a theory of mind for the symbols. Imagine it produces a comment ‘this is a clean version of the function’, you read it and read the function and ask ‘clean for who?’. You can't reason about it in the same way. You can't think about it in the same way. You can’t understand the reason for their existence. It did it because vectors aligned like that, that’s it."

And so you end up "disconnecting" from the code. Instead of refactoring it to a way that makes sense in your brain, many engineers instead act more like a product owner of the code -- "you don't want to read the code, you just want it to work."

"That's a completely valid option, I think, and many people do it and that's fine. And they just have to recognize what exactly we are doing. But the other option is to treat it as your code. And you have to ask yourself, okay, is this what I would have written? And that's what your critique should be, what your bar should be. You must still have to have a strong sense of responsibility and ownership."

I put that part in bold because it really resonated. I wonder if it will stand the test of time?

FWIW, I asked Borislav what percentage of his code is written by an LLM, he wasn't sure but guessed 70%.

"Imagine your life depending on something and it's like 20 million tokens of code. This thing drives your car or whatever it does. The fact that we tested a black box doesn't really help us, we have to really think carefully about what we are doing. What is this doing? How are we building on top of these layers, because the layers are also going to increase.

“If a language model can just write the program for all kinds of architectures, maybe we don't even have a compatibility layer anymore. And now humans cannot even read or write the programs that the language models can. And that's like a very disturbing thought because imagine, well, maybe the next instruction set is actually designed by just AI. A compiler takes our code and compiles it for this thing, which we don't even know how it works. It's just ten times faster than our thing. Now we can't even step through it, it's just a complete black box. So we move the black box deeper and deeper into the silicon, and then we completely lose touch with how this is working."

One of the risks Borislav highlights here, is that basically our ability to build programs with true complexity is going to get harder and harder, because just accepting what code an LLM produces will introduce "fake" complexity and force us to completely "dissociate" from the code itself, making it harder to build real complexity over time.

Part of the journey Borislav has gone on is learning when to use an LLM.

"You must think of the pressure of the tokens. Imagine you ask it to do sentiment analysis, and you expect a single Positive or Negative from it. Now, that's too much pressure, you must allow it to get to the answer. For example, make a plan of how it would do the analysis and maybe instead of asking it to do positive or negative, ask it to give you a score for each, so if it samples ‘negative’ it can still recover. I don’t think people realize how much is lost when a token collapses. Think of how much is lost when a word is written. Imagine you write the word ‘positive’ on a piece of paper, and then 1 year later, you read the piece of paper and you have to add one more word, you think for a second and write ‘attitude’, then 2 years later you see ‘positive attitude’ what do you write next? All the high dimensional nuance is lost after the collapse.

Another good way to think of it is imagine a dark room with a document and a note, the note says ‘do sentiment analysis on the document, answer only positive/negative’, so you pick a random person from the street, drop them in the room, you see the result, you don’t like it, you change the instructions on the note, and then you pick another random person. Each document will get a random person, your instructions better be good.

I really advise anybody just to watch Karpathy's lectures. It's like 10 hours. I watched them like four times. Watch them, pause them, and it will help you to kind of have a mental model. Because it's a machine that we have never experienced. And you try to think of it either as a human or a computer, and it's neither."

Thank you Borislav for your insights!

2025-05-19 23:17:25

What does it mean to become an AI-native company?

For too many company leaders, I’ve noticed they think this means having your engineers “tab” their way through their coding tasks with a tool like Cursor, for their employees to be able to ask ChatGPT questions of their internal data, and for them to adopt AI vertical tools.

But these things, while important, are all on the surface. To become AI-native, it takes, what one company I’m lucky to work with describes as a full “metamorphosis.”

What follows is an interview I did with Borislav Nikolov, the CTO of Rekki. The transcript for our conversation was 50+ pages, so I did my best to edit for conciseness and clarity. Still, I consider Borislav as AI-native as you can get so in order not to cut some of the nuance out, I am going to publish this in two posts.

As background, Rekki has a marketplace for restaurants and their wholesale suppliers. They deal with thousands of restaurants making thousands of orders every day. This creates a stream of nitty-gritty engineering tasks to glue things together like payments, risk management, and complicated business logic. Every time the operations team had a new need or a tweak to the system, they’d create a task for the engineering team. Until one day Borislav realized: what if I can empower everyone on the team to “solve their own problems?”

I’m not sure what the company of the future looks like once AI fully permeates, but I think we all have something to learn from how Borislav approached this question.

In this post, we get into:

The “ah ha” moment of realizing that every single person in the company would soon be able to code, and how this would let him push tasks back to the domain experts in the company and have everyone “solve their own problems”.

How this changed his job as CTO from leading a team that executes on tasks to one that creates the internal infrastructure almost like a PaaS provider, where the whole company is full of developers he “can’t trust”.

His realization that if an employee can’t do something themselves, it is his fault for not giving them the right primitives.

How he pushed the behavior change internally. This started by first, having everyone in the company deeply understand large language models, and then challenging the conditioning everyone had of assuming they couldn’t solve their own problems because they couldn’t code.

In the second post, we’ll explore:

Why he still codes two days a week without any help from an LLM.

The theory of mind, and reviewing code generated by an LLM.

What the future holds as more and more code is written by LLMs.

“As they say, necessity is the mother of invention.”

Shortly after GPT 3.5 came out, Borislav, was staring down a massive backlog of engineering requests from the company’s operations team. The backlog was daunting, both in its count, and also because each task itself, while potentially trivial from an engineering perspective, required real time to ramp up on the problem before any code could even be written (let alone tested). Then it hit him: literally everyone in Rekki could now write SQL.

“So I was like, look, why can't I just enable the people that are actually asking for the tasks to do the task? If a restaurant orders three times very close together, that's probably a fraud thing. So instead of me making a system that has to check the data, has to have some state, etc., they can just do that themselves… This was the first real moment where I realized that everybody would soon be able to code and solve the tasks in whatever domain they are in.”

This was something that wasn’t possible with other no-code tools he’d tried, because inevitably the no-code platforms were not expressive enough.

“Previously what was always missing and why the low code and no code solution would fall flat was because at some point, it's just not expressive enough. Somebody has to write the code. There is this law of irreducible complexity.” But he realized that if he could give his team the right infrastructure for their code to run somewhere safely, with things like privacy considerations, security, and notifications if there is a problem, he could empower everyone in the company to code and solve their own problems.

Rather than divide the company into developers and non-developers, if everyone could write SQL, Borislav realized that the jump to building automations on a service like Zapier or Make.com was finally possible.

“Now, if we could just make this SQL into API primitive, someone can create the rest of the workflow at Zapier or Make.com, and of course the testing they can do herself by placing orders with a testing restaurant.”

So, his thought was "how can I make it possible for everyone to do their job, without me defining what they can and cannot do? What primitives are needed? How can I make it reliable and as safe as possible?”

By primitives he meant things like load/store/search, turn a SQL query into an API, or SQL query into a trigger that pushes to a webhook when there is new data, monitoring, alerting, etc.

If an employee gets stuck not being able to do something they want, Borislav considers it his fault for not giving them the right primitives.

“I almost think of myself as an internal PaaS provider, the whole company is full of developers that I cannot trust, kind of how AWS engineers think about REKKI's engineers, they have to run our code, but they don't trust any of their users, but at the same time they have to empower us giving us the right primitives to express ourselves and do what we want to do with as little friction as possible… In the future I hope I can empower everyone to use Cursor and just deploy code, but we have a long way to go to make it safe and secure.”

Depending on the company, the primitives will be different. But to Borislav, “the important part is to think of the whole company as people who can truly build anything they put their mind to, and the only limit is you, your attitude, and your infrastructure.”

“You will be surprised how much you can do with a workflow with SQL to API, ChatGPT classifier and few API calls.”

Now, rather than a company divided into developers and non-developers, the company has developers, semi-developers, and a small (~10%) non-developer make-up. Yes, the developers are still needed to unblock or enable the semi-developers to do their jobs, but that work is amortized because the developers are giving the semi-developers the “fishing rod, not the fish”.

I asked Borislav what advice he’d give other companies to make this transition from pre-AI to AI-native.

“If you put a company in front of me and I can ask them one question, it would just be ‘do you understand this technology? Can you use this machine?’” In Borislav’s experience, most people are quick to point out the AI’s limitations – hallucinations, producing bad code. But they haven’t taken the time to truly understand what the machine is and how it works.

Borislav himself studied Andrej Karpathy’s videos and others on LLMs for close to 50 hours until he could back-propagate by hand on pen and paper, and deeply understood “what a plus means and what a multiplication means and what the collapse of the vector space into a token means.” As he said, “it’s a machine we’ve never experienced.” To use it, first understand it. Once he could understand “the way the transformer programs itself to emit the token” and therefore its limitations, he understood how to take full advantage of a LLM to code, and transformed Rekki’s culture to be AI-first.

“You have to understand that by talking to it you're programming it. That's what you're doing.”

At Rekki, Borislav had the entire team watch Karaphy’s Zero to Hero series, as well as a bunch of videos from other teachers like Justin Johnson, Fei-Fei Li, and 3Blue1Brown. They did Learning Fridays where they’d watch lectures together and discuss the content.

Then, he started pushing all the requests back to the teams that had the domain expertise. His default changed from doing the tasks for people, to teaching them how to do it themselves, and making sure that they had the infrastructure that empowered them.

At first, this was hard.

“You have to believe in the capability of your non-tech people. They are conditioned to think they cannot code, to think that only engineers can not solve their technical problems. So the very first step is for you to believe in them. Then the second step is having the infrastructure to support them, and the third is to teach them to solve their problems themselves.”

For example, someone on the operations team needed to get a bunch of data. Borislav could have written a scraper for him that gets the data, parses it, and analyzes it. Instead, he told the employee to download Cursor, and just “type the request in.” “Now, he has 20 scrapers that he just uses nonstop.”

“So again, first believe that non-coders can code, then figure out where the code lives, because that is a massive problem, e.g. how do they deploy and monitor it, make.com, zapier, bolt, their own laptops etc.? And then the real issues with security, PII and GDPR, anonymization, etc. How many outages are you willing to tolerate because ChatGPT wrote a stupid infinitely recursive query?”

What Borislav demonstrates so clearly is that becoming AI-native isn't about layering AI features onto existing processes. It requires a fundamental restructuring of how we think about work, capability, and organizational design. This is the "metamorphosis" he references.

Three critical insights emerge from Rekki's transformation:

1. The democratization of technical capability is real and imminent. When everyone can code, the traditional bottlenecks between technical and non-technical teams dissolve. Rekki transformed from an engineering backlog to hundreds of automation workflows built by the people who understand the problems best.

2. Leadership roles fundamentally change. Technical leaders shift from task executors to platform builders. They create secure, reliable primitives that enable domain experts to safely solve their own problems. The parallels to cloud computing's transformation of infrastructure are striking: CTOs become an internal PaaS provider to the whole company rather than gatekeepers.

3. Organizational psychology is the biggest barrier. Both engineers who are used to being the exclusive "problem solvers" and non-technical staff who've been conditioned to believe they "can't code" must undergo significant mental shifts. The limitation isn't the technology - it's our deeply ingrained beliefs about who can do what.

The question for founders isn't whether this transformation happens, but how quickly your organizations adapt. The companies that drive this change aren't just adding AI features - they're fundamentally rethinking what it means to be an organization in the AI era.

More soon.

2025-04-30 05:02:27

Hi All -

After eight years at Benchmark, I’m shifting to a Venture Partner role. In this new role, I'll continue to make new investments on behalf of Benchmark, serve on my existing boards, and contribute to Benchmark and our portfolio. What's changing is my focus and time allocation.

When I joined Benchmark, I was drawn to its distinctive model in venture capital—a small equal partnership of no more than six general partners, all generalists, deeply committed to partnering with the most ambitious founders of our generation. With no junior people or platform team, the structure demands total dedication from each partner. It's a model that has proven remarkably successful, and also one that demands a certain approach to venture.

AI isn’t just another technology wave – it’s a transformational force that will reshape every corner of our lives. As I've watched the space evolve, I've increasingly realized that for me to best support founders building in AI, I need more space. More space to go AI-native by fully immersing myself in AI tools at the edge, more space to reflect on the last ten years and try to understand what the next ten might bring, and maybe even (don't hold me to this!) more space to think through it all by writing.

After reflecting on this with my partners, it became clear that transitioning to a Venture Partner role would give me the flexibility I need while maintaining my connection to Benchmark's core mission. I remain fully committed to Benchmark and our craft of deep partnership with the founders we back, just now with more room for curiosity and exploration (and yes… vibe coding).

For the extraordinary founders I currently work with: my commitment remains unchanged. I’m grateful for our partnership.

For founders building at the edge of AI: I'll now have more bandwidth to engage deeply with your vision. Whether you're just beginning your founder journey or scaling a team that's already found product-market fit, my email remains sarah at benchmark com.

Sarah

2025-02-03 21:45:12

James Raybould came recommended to me by multiple people when I posted about asking for AI savants, and he did not disappoint. Thank you Maisy Samuelson for the introduction!

There are so many tidbits he shared on his mindset which he describes as “AI-Forward”. I am going to include a bunch of quotes on his thinking (slightly edited for brevity). And then he shared a few fun examples on how he uses AI day-to-day.

"I just assume anything I'm doing, like almost every decision I'm making is better with AI. At this point, it's been built into almost every single thing. If I'm trying to name my team. I'm trying to write my LinkedIn post. I'm trying to write a strategy. I'm trying to think of anything I do. I go to Claude, my favorite, or my default tab is Perplexity."

"I'd say if you look at my podcast time, it's probably gone from three to five hours a week to probably one hour a week because for the other three to four hours advanced voice mode has taken over.... I think that my biggest realization is again, AI knows more about everything than we know about anything. And so therefore, why wouldn't I be curious? And so therefore if you have a choice of talking to the most knowledgeable person who has ever lived, why wouldn't I do that on just about everything?"

"I think that you and I grew up with this idea that 'Effort equals output'. I'm like, oh cool, you must have put so much time into this Sarah. Oh, what an amazing dossier you wrote. What an amazing briefing. Now we're moving to this world where the connection between effort and output is completely disappearing." To this point, James mentioned the example Ethan Mollick gives in his book Co-Intelligence on writing recommendation letters for his students. It used to be a signal when a Professor would take the time to write a recommendation letter for a student, because that effort would create scarcity. The professor isn't going to say yes to everyone who asks. What happens to the value of a recommendation letter when AI can do it in three minutes?

"I keep on reminding myself, like Ethan Mollick says, AI is the worst it will ever be today."

The three big themes for James are about 1) brainstorming and writing partner, 2) synthesizing information for him, and 3) scouring the web and other sources for information that is relevant to him (vs him having to go out and get it himself).

Three examples he gives:

"Anything, LinkedIn posts, LinkedIn long form articles, and internal stuff, like documents internally, phrasing's a lot. I find there's a lot of like, what should I call this team? What should I call this? Is there a really pithy phrase? The key there is that you ask for 25 ideas. And then say, I only like two and four. Give me 25 more. And you say, I only like one, two, and three. You just keep going.”

"Each average idea for any human is probably better than each average idea for an AI. But the AI can give you 200 of them in 10 seconds and the human can give you like 13 in like two hours. So Claude can give you 200 taglines, 150 are absolute garbage. But 50 are pretty good and 10 are magic."

He often prompts Claude after it's written something, "Let's remove the jargon and make it more like me."

James has started experimenting with OpenAI’s Operator. "It's not that each individual task is amazing. My mind was blown because I'm watching TV with with my kids, and in parallel, I'm preparing a dossier on you, and I'm finding some candidates for a search, and I'm researching our dishwasher. The average person doesn't have an EA or a Chief of Staff to give work to, but now they do."

To show how simple it was for James to get value out of Operator, here is the prompt he used:

"I'm preparing to meet Sarah Tavel from Benchmark on Monday. Can you please create a full Google document dossier style that tells me as much as you can about her. Please start with broader information and then narrow down to anything that she's written about AI on LinkedIn, X, her blog, or other public sources in the past 12 months."You can watch Operator in action here. And the output here (with some formatting from James). Definitely not perfect, but as James reminds us, “worst it will ever be.”

One of the core use cases James uses AI for is synthesizing content. A great example of this is for newsletters. Like all of us, he is subscribed to a lot of email newsletters (make this one more!), and often doesn’t read everything or know what to focus on. So he built a tool using Relay that ingests the newsletters in his Gmail, and then synthesizes the content so he gets an easy-to-glance summary every morning, and he can then dive in on the posts he’s curious about.

This use case is a classic example to me of something I’m noticing the “AI Savants” I’ve spoken to all have in common — they are willing to do the extra upfront effort to get the downstream benefits.

James kindly put together 10 slides to show how he does it here: Getting a Daily Digest of the So Whats from a Series of Newsletters using relay.app.

Here’s an example of what the output is. Check it out!!

2025-01-23 23:59:36

One thing that’s hit me lately: Google trained us all to be self-sufficient information hunters and synthesizers. When we need to diagnose a health issue, fix a coding bug, choose a vacation destination, or write a paper, we've learned to search, read, compare sources, and synthesize into personalized informed decisions or work. All the world’s knowledge at our fingertips. Better learn how to leverage it. “Let me Google that for you?” captured this ethos. How dare you ask a question that you could find or figure out yourself!

Well… thanks Google. The more I find myself taking advantage of AI, the more I realize I’ve been unlearning this trained self-sufficiency. What we all have now is an expert available to us to ask any question or even delegate many tasks. Yes, sometimes we need to give it some back-up documents, or clearer instructions, and sometimes it has no idea what it’s talking about (not that it will tell you). But if you can move from a mindset of self-sufficiency by default, to asking the LLM expert at your fingertips by default, a world starts to open.

This isn’t about moving queries off of Google into ChatGPT. It’s also a shift in the kind of problems we can tackle, and how we’d approach them:

Google Era: I’m stuck on a bug. Let me search and read a bunch of StackOverflow threads to debug this.

AI Era: Pull request to an AI developer who has access to your entire codebase.

Google Era: I wonder what diet changes or supplements I should be taking given my latest blood test results. [Does a lot of searching.]

AI Era: Uploads latest blood test result into a ChatGPT Project, “You are a medical analysis expert specializing in interpreting blood work results and creating personalized health recommendations….” [full prompt instructions here].

Google Era: I wonder how good our team is at communicating together. [Bookmarks a lot of articles on team communication to read later.]

AI Era: "Tell us something we don't know, or wouldn't want to know about ourselves" [uploads file of team Slack conversations]. (-idea and instructions from Tom Lawrence)

Google Era: My wife just did an embryo transfer. Let me search for articles on what she should be eating to maximize the changes of a healthy pregnancy.

AI Era: "You are the world’s foremost expert on maternal and pediatric nutrition like Dr. Lily Nichols. You’ve studied the nutrition studies and lore from every culture in the world that best promote maternal and baby health and brain development. Help me put together a week by week menu — breakfasts, lunches, dinner, snacks — for my wife from embryo transfer to end of fourth trimester.

My wife is a pescatarian but will do bone broths, butter, dairy, etc. She just doesn’t like eating meat, though maybe that may change with pregnancy. We have a worldly palate.” (-instructions from Aike Ho)

Are the experts perfect for every question? No, of course not. (I like how Ethan Mollick describes the “jagged frontier.”) Is this the worse they will ever be? Yes.

This isn’t just about an AI doing the work we once did of finding/synthesizing information. One thing that hits me is how much more personalized an AI result can be than a Google search will ever be. The paradigm is just so different. When I do a Google search, we all have some expectation of objectivity in the results. We critique Google when two people do the same search (e.g., on a news event) and get different results. And of course the means by which Google personalizes results has been more implicit - inferring from my searches, geo, etc. So personalization gets pushed to the edges to sites that are also stuck in a Google / search paradigm. Take TripAdvisor as an example. I have to figure out the best hotel for me by looking for clues in reviews that are relevant to me personally. Whereas with an AI, I can just tell it about my family and vacation preferences. I can store those preferences in a project, add my own reviews on hotels or trips we’ve taken, and get personalized recommendations.

This transition won't happen automatically. At least for everyone older than GenZ, self-sufficiency is deeply ingrained. Unlearning it will take conscious effort. But once you start, you realize how different the future will be. Have fun.