2026-03-05 23:14:24

Welcome to the 727 newly Not Boring people who have joined us since our last essay! Join 259,712 smart, curious folks by subscribing here:

Hi friends 👋,

Happy Thursday! It’s late in the week and late in the morning, so…

Let’s get to it.

Every week, at the bottom of the Weekly Dose, I thank my teammates, Aman and Sehaj, for their contributions. Thanks isn’t enough though. They do great work, for which they deserve to get paid. Challenge is: they’re based in India. Or, challenge was. Now, I pay them with Deel.

Deel is payroll for global teams, like not boring and many of our portfolio companies. I grabbed coffee with a founder in our portfolio the other day, and unprompted, he brought up how easy Deel made it to expand his team globally. He’d tried the alternatives and switched, and he was gushing, which is rare for a payroll provider.

Hiring talent outside your home country can open up new growth, but it also comes with unfamiliar rules, local labor laws, and compliance risks. That’s where an Employer of Record (EOR) comes in. If you’re a startup exploring global hiring for the first time, Deel’s free guide walks you through the basics. Whether you’re hiring your first international employee or planning your next market expansion, Deel can help.

The Old Testament’s 2 Samuel tells the tale of King David as he unifies the tribes of Israel and establishes Jerusalem as the nation’s capital. The world was slower then; there were only like 50 to 75 million people around at the time, and so God could be more actively involved in the day-to-day management of human affairs.

Take, for example, the time King David ordered a census of Israel to put a number on its military strength. He angered God, who expected his servant to rely not on soldiers but on Him. God sent down a plague that killed 70,000 men, then instructed David, through the prophet Gad, to build an altar on the threshing floor of Araunah the Jebusite in order to stop it.

When David arrived, Araunah offered to give him the land, the oxen, and the wood with which to sacrifice them, free, as a gift to the king.

“But the king replied to Araunah, ‘No, I insist on paying you for it. I will not sacrifice to the Lord my God burnt offerings that cost me nothing.’ So David bought the threshing floor and the oxen and paid fifty shekels of silver for them.”

Costless sacrifice is not sacrifice. And we, like the Gods, demand sacrifice.

I read an essay yesterday in The Argument by the economist Darling: The Tinder-ization of the Job Market.

The job market is stuck, Darling argues. It’s not bad: unemployment was relatively low in January at 4.3%, and prime-age (25-54) employment, at 80.9%, is higher than it was at any point in the Obama or first Trump presidencies. Just stuck. The hiring rate averaged 3.3% in H2 2025, a level previously touched only during COVID and the Global Financial Crisis.

BLS Hires Data

The weird part is… we’re not in the middle of a crisis, financial or biological. Employment is high, remember! Unemployment is low! “Generally, a high employment rate and a ‘tight’ labor market are associated with high hiring rates,” Darling writes, “not low ones.”

Darling’s theory is that LLMs have made it easier for people to apply for a ton of jobs, with custom-written cover letters and everything.

He cites Greenhouse data which “showed recruiting workload rose 26% in the third quarter of 2024 and that 38% of job seekers reported ‘mass applying,’ flooding firms with far more resumes than before.” Per Business Insider, “the applications-to-recruiter ratio is now about 500-1, four times what it was just four years ago.” He links to a Kelsey Piper essay from last year that provides more data to back this up.

Because it’s easier to apply, the volume of applications is up. Because AI is writing cover letters, their quality is no longer a useful way to pull signal from all that noise. “A recent paper showed that after Freelancer.com introduced an AI-generated cover letter tool, the correlation between cover letter customization and offers dropped 79%.”

You used to get rewarded for customizing your cover letter, when it cost you something. Now it doesn’t, so you don’t, but you still gotta customize, because everyone else is.

We find ourselves running an incredibly stupid Red Queen’s Race.

“Here’s the hard thing about easy things: if everyone can do something, there’s no advantage to doing it, but you still have to do it anyway just to keep up.” I wrote that way back in August 2020, about DTC brands and Shopify. “When every rebel is armed, none really is. It’s like when you played GoldenEye 007 as a kid. Getting the Golden Gun the hard way was dope. Everyone getting the Golden Gun with a cheat code made the game suck.”

This is an idea I’ve been obsessed with for a long time: the asymmetric ability of the laggards to make the leaders’ lives a little bit worse.

You might produce the very best widget by far, but your potential customers will undoubtedly be bombarded by inferior alternatives claiming that they make the very best widget. At worst, your potential customer will fall for it; at best, your cost to acquire that customer goes up a little bit.

You spend hour after quiet hour handcrafting your widgets, smoothing their edges, polishing their faces, giving each one a little kiss before sending it out. You sacrifice. Your competitors do none of that. They yeet millions of these suckers out in Yiwu. Then they stamp HANDCRAFTED WITH LOVE IN USA on their website and who’s to say?

Some people really want a particular job. It’s their dream job. They spend hour after quiet hour poring over resume details, crafting a heartfelt cover letter, and saying a little prayer before hitting “Submit.” They sacrifice. Their sacrifice gets lost among the 1,732 less-lovingly-crafted (but who’s to say? who has the time to read them all) applications that came in that same day.

The burnt offerings that cost nothing and the burnt offerings that cost everything smell the same, because we make our offerings not to an omniscient God, but to fallible, overwhelmed humans.
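To make the cover-letter logic concrete, here is a deliberately crude toy model. It is a rough illustration of what economists call costly signaling, not anything from Darling’s essay or the Freelancer.com paper. Everything in it is a hypothetical assumption: a job is worth 10 to someone whose dream job it is and 1 to everyone else, people customize only when the job is worth more to them than the effort, and the pile holds 500 applications, 10 of them from dream-job seekers.

```python
def customizes(wants_it: bool, cost: float,
               dream_value: float = 10.0, other_value: float = 1.0) -> bool:
    """Hypothetical rule: write a custom cover letter only if the job is
    worth more to you than the effort of customizing it."""
    value = dream_value if wants_it else other_value
    return value > cost


def signal_precision(pool: list[bool], cost: float) -> float:
    """Share of customized letters that come from people who really want the job."""
    custom = [wants_it for wants_it in pool if customizes(wants_it, cost)]
    return sum(custom) / len(custom) if custom else 0.0


# Hypothetical pile: 500 applications, 10 from people whose dream job this is.
pool = [True] * 10 + [False] * 490

print(signal_precision(pool, cost=5.0))  # costly letters: 1.0
print(signal_precision(pool, cost=0.0))  # free letters: 0.02
```

When customizing is expensive, only the people who really want the job pay for it, so a custom letter is a reliable tell. When it’s free, everyone customizes, and a custom letter tells the recruiter nothing beyond the base rate of 10 in 500.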

This is happening more and more with writing, too. Claude in particular has gotten much better at writing, which means that more people are publishing essays.

There will be times when there’s an interesting topic to write about, and by the time I’ve thought about what path I might take through the argument, there are dozens of versions of the essay on X. Some of them are even pretty good!

But I’ve noticed something happening in my brain that’s worth sharing, because I would imagine a lot of people are thinking similar things about whatever it is that they do.

I’ll be interested in a topic, start chewing on it, start thinking through unique ways to present it, to shape the essay, the research I might need to do, the people I might need to talk to, all this stuff I’d need to do to make something great. Then I see a handful of pretty good versions on similar-ish topics, and I think, “Well, I guess they beat me to it. On to the next one.”

And who knows if the version I would have written would have ended up being better than what these AI-human teams whipped up in a couple hours. Who knows if it would have been great, or even good.

What I do know is that if I’d written it, I would have put in a lot more effort, agonized over it, sweat the details, tried to present the ideas in unexpected ways. I would have sacrificed something that cost me something, hours and hours, sometimes weeks and weeks of my life.

And half of the value of the post would have been the result of that effort, but half would have been the effort itself, my pointing to an idea and saying, “THIS IS AN IDEA THAT I WAS WILLING TO SPEND FIFTY HOURS WRESTLING WITH.”

And maybe I’ll still spend the time to write it, and maybe many someone elses will spend the time making the full and beautiful version of the things they want to create despite the easy-come existence of their bloodless simulacra, but a lot of the time, we won’t, and the world will be left with many hollow versions of a thing filling up the place where the one full one should have gone.

The pretty good is the enemy of the potentially great.

Right, like someone will somehow, eventually, once the recruiters have gathered themselves up and faced the never-ending application pile, basically just win the lottery and get offered the job, and they’ll be happy that they got it, because a paycheck is a paycheck, but two things will probably be true: 1) it wasn’t their dream job; they applied to 347 of them, this was the one they got, and 2) the person whose dream job it actually was, who would have been overjoyed to get to work in this specific job, who actually did write the cover letter the old-fashioned way for this one because they reallllllllly wanted it… no human ever even saw that person’s application. They’re still looking. Dream crushed, they’ve now applied to 123 jobs themselves, none of which they really care about, but all of which someone really wants and now is a little bit less likely to get.

I don’t know code as well as I know words, but it looks like the same story is playing out there. SemiAnalysis has started publishing this chart of the number of Claude Code GitHub Commits Over Time. The number, let me tell you, is going up. It’s going up big time.

“4% of GitHub public commits are being authored by Claude Code right now. At the current trajectory, we believe that Claude Code will be 20%+ of all daily commits by the end of 2026. While you blinked, AI consumed all of software development.” Claude Code Is The Inflection Point, is what this means.

And again, I am not a coding expert, but like…

Of course the machines that can happily print tokens at high speed 24/7 are going to produce more code than us meatbags. I can’t imagine what a similar graph of “% of Words Committed to X” would look like. We have to be approaching 50%; we might be way past it. That does not mean the Singularity is Near.

I think if you asked me how I’d position myself on an AI trade, it would be something like: short ASI, long tokens.

As so perfectly captured in Tool Shaped Objects:

The market for feeling productive is orders of magnitude larger than the market for being productive. Most people, most of the time, want to click and watch the number go up. They do not want to be told the number is fake. They will pay— in time, in attention, in actual money— to keep the number going up.

But this is a “come for the tool” view of token demand, and it misses the “stay for the network” part. It’s not just that we want to watch our own number go up. It’s that if everyone else’s numbers are going up, we need ours to go up just to keep up.

More words, more applications, more code, MORE, in the world’s stupidest Red Queen’s Race.

I don’t blame the tools. We do this all the time. Venkatesh Rao wrote about Premium Mediocre just two months after Attention Is All You Need and years before the transformer’s significance became apparent. Kylie Jenner made her face look a certain way because she could afford it, then everyone started doing it, and it got more affordable, and now Instagram Face has become middle class, a costless sacrifice to the gods of vanity, signifying nothing.

Like, what does the number of Claude Code GitHub Commits signify? What does the number of words written by an LLM signify? What do thousands of applications for every single low-wage job signify?

If they cost nothing, they signify nothing.

I recently started reading The Control Revolution by James R. Beniger. It was written in 1986 and, from what I’ve seen so far, is criminally underread today. I haven’t gotten far yet, so I’ll need to save a deeper analysis for a future essay, but Beniger has an idea that is relevant to our conversation.

He believes that modern information technology was built in response to the Industrial Revolution, a direct response necessitated by industrial scale and complexity:

Until the Industrial Revolution, even the largest and most developed economies ran literally at a human pace, with processing speeds enhanced only slightly by draft animals and by wind and water power, and with system control increased correspondingly by modest bureaucratic structures. By far the greatest effect of industrialization, from this perspective, was to speed up a society’s entire material processing system, thereby precipitating what I call a crisis of control, a period in which innovations in information-processing and communication technologies lagged behind those of energy and its application to manufacturing and transportation.

Modern bureaucracy, the telegraph and telephone, mass media, computing, sensors… all of this, Beniger says, fell out of the crisis of control precipitated by the Industrial Revolution. The Industrial Revolution massively accelerated production. We could make things faster than ever. But then we had a distribution crisis: how do we move all this stuff? Railroads and logistics solved that. But then we had a communication crisis: how do all of those far-flung hubs coordinate with each other? Enter telegraphs, telephones, train schedules, standardized clocks, and the bureaucracy to manage it all. And a consumption crisis: how do we get people to want and buy all this stuff we’re producing, all over the world? That’s where mass marketing, advertising, and eventually consumer culture come from.

I bring all of this up because we seem to be living through the opposite crisis. Beniger’s Crisis of Control came from not having enough information technology to deal with the material abundance we were creating. Now, information has outstripped the very things it was born to control.

Like going off the gold standard, but for information. We can mindlessly and costlessly print information with no reference to the underlying stuff about which it was meant to inform.

This is the fast takeoff, the runaway scenario: information has reached escape velocity. Claude Code GitHub commits are going stratospheric. But what is the relationship between GitHub Commits and Total Factor Productivity? Between GitHub Commits and actual economic output?

A sacrifice needs to cost something.

The bureaucracy was established on the backs of the hard, provable work of making things. Today, the bureaucracy is the thing, the simulacrum of productivity that has become more real than the thing itself, and it lets society make things occasionally, if slowly.

An essay is valuable insofar as it costs the writer something. They may have paid the costs in the years of experience that seasoned the piece that took hours to write, like that Picasso story where he asks for $100,000 for a picture that took him 30 seconds to draw. They may pay the costs in hours and hours, weeks and weeks of researching and writing and editing and re-writing and crumpling and throwing into a wastebasket overflowing with previous drafts and re-writing again, and again, until it’s good enough.

Even if a machine wrote the same essay, word for word, it wouldn’t have paid the same cost. You can read the costlessness of it, feel it, even as the prose improves. We demand a cost.

This is what King David realized. Fifty shekels wasn’t much money to him, and God, being God, could have divined KD’s sincerity with or without the coin. But a sacrifice has got to cost something.

The bad news is: it seems that we’re in a downward spiral. Systemically, I don’t know how we pull out of this. There will be many, many more Claude Code GitHub Commits and Claude-written words in 2026 than there were in 2025, many more in 2027 than 2026, etc.

Anything can look like the Singularity with a dumb enough y-axis.

The good news is: you don’t need to play this game. You can make yourself pay a cost and you will be rewarded: externally, maybe. Internally, for sure. Eternally, I think so.

There is a school of thought that believes humans were brought into this universe to create: to live and struggle and love and sin and pay all of the costs required to create new things. The reason we are here, according to this belief, is precisely to experience the limitations and frictions that God cannot. We are here to pay the cost.

There is a joy that comes from conviction, and meaning in doing something only you can do, well. And somehow, that soul shines through. People can feel its presence as strongly as they can feel its absence.

I find that whenever I go comically over the top in the amount of work I do on something, spending a month to write an essay that AI could write ~75% as well in minutes, for example, that sacrifice is rewarded. People want to see that you care, that the work cost you something, that you had to make a choice, in order for them to pay a cost to you.

Pay the shekels.

That’s all for today. If reading this made you want to hire real humans, check out Deel.

We’ll be back in your inbox tomorrow with a Weekly Dose.

Thanks for reading,

Packy

2026-02-27 21:37:12

Hi friends 👋,

Happy Friday! It’s quasi-warm in New York City, America won two golds in ice hockey (sorry, Sean, Dan didn’t have anything funny to say but we’re pumped), and the good guys just keep on doing things that make us optimistic.

Let’s get to it.

(1) Form Gets $1 Billion Google Order for 30 Gigawatt-Hour Battery System

Steve Levine for The Information

The Decade of the Battery is charging ahead, now with American batteries.

Earlier this week, Google announced a deal with Xcel Energy to provide 1.9 GW of wind, solar, and battery power for a planned data center in Pine Island, Minnesota, part of a push for hyperscalers to Bring Your Own Electricity (BYOE). Wind and solar are clean, and cheap when the sun shines and the wind blows, but the sun doesn’t always shine, and the wind doesn’t always blow, so the deal includes $1 billion for nine-year-old startup Form Energy to provide backup.

Form will be providing two kinds of batteries: standard lithium-ion batteries for instant surges of power, and iron-air batteries for longer-term backup.

Iron-air batteries work through a process that’s like reverse rusting. To discharge, the battery breathes in oxygen from the air. Iron metal at the anode reacts with that oxygen and water-based electrolyte to form iron rust (iron hydroxide/iron oxide). This oxidation reaction releases electrons, which flow through an external circuit as electricity. To charge and store electricity, you apply electricity (say, from solar or wind), and the process reverses. The rust is electrochemically reduced back into metallic iron, and oxygen is released back into the air. The iron is “de-rusted” and ready to discharge again.
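For readers who want the chemistry, here is a simplified version of the discharge reactions. It assumes an alkaline (hydroxide) electrolyte and stops at the first rust product, iron(II) hydroxide; the article doesn’t specify Form’s exact cell chemistry, so treat this as the textbook picture rather than its design. Strictly speaking, the iron is oxidized at the anode while the oxygen is reduced at a separate air electrode:

$$
\begin{aligned}
\text{Anode (discharge):}\quad & \mathrm{Fe} + 2\,\mathrm{OH^-} \rightarrow \mathrm{Fe(OH)_2} + 2e^- \\
\text{Air electrode (discharge):}\quad & \mathrm{O_2} + 2\,\mathrm{H_2O} + 4e^- \rightarrow 4\,\mathrm{OH^-} \\
\text{Overall:}\quad & 2\,\mathrm{Fe} + \mathrm{O_2} + 2\,\mathrm{H_2O} \rightarrow 2\,\mathrm{Fe(OH)_2}
\end{aligned}
$$

Charging runs these reactions in reverse: applied electricity reduces the iron hydroxide back to metallic iron and releases oxygen.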

Iron-air batteries have low power density and slow response, but they’re extraordinarily cheap on a per-kWh basis, roughly 10% of the cost of lithium-ion. That makes them perfect for longer-term storage, up to 100 hours, covering multi-day lulls in sun and wind. These lulls are whimsically named “Dunkelflaute” events.

Form will provide a 300 MW iron-air battery system for the project. The batteries can discharge for 100 hours, making it a 30 GWh system. When delivered, it will be the biggest battery system in the world by energy capacity.
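The 30 GWh figure is just power multiplied by duration. A minimal sketch, assuming the system discharges at its full 300 MW rating for the whole 100 hours, and ignoring conversion losses and the lithium-ion portion of the project (whose size isn’t given here):

```python
def energy_gwh(power_mw: float, duration_h: float) -> float:
    """Energy capacity (GWh) of a storage system that can deliver
    `power_mw` megawatts continuously for `duration_h` hours."""
    return power_mw * duration_h / 1_000  # MWh -> GWh


form_iron_air = energy_gwh(power_mw=300, duration_h=100)
days_of_backup = 100 / 24  # hours -> days at full power

print(f"{form_iron_air:.0f} GWh, about {days_of_backup:.1f} days at full power")
# 30 GWh, about 4.2 days at full power
```

A bit over four days at full output is what lets the system ride through a multi-day Dunkelflaute.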

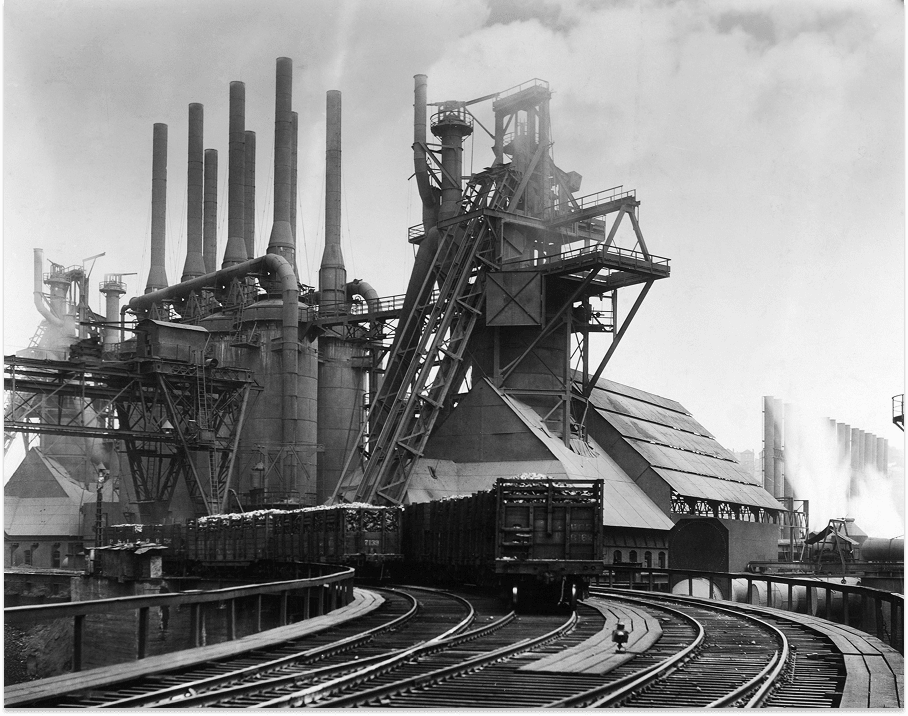

This is good news because we love batteries here at Not Boring, and because it’s a rare win for a western battery company in a category that’s been dominated by China. That also means good US jobs; the batteries for this project will be manufactured at Form Factory 1 in Weirton, West Virginia, on the site of a historic former steel mill.

So yeah, maybe AI is going to take all of our white-collar jobs (kidding, we’re on Team Thompson), but powering all that AI is going to create new ones, too.

(2) Proxima Fusion Signs MoU to Build Stellarator Fusion Power Plant

Proxima Fusion, a German startup out of the Max Planck Institute, signed an MoU with the Free State of Bavaria, RWE, and Max Planck Institute for Plasma Physics (IPP) to put the world’s first commercial stellarator fusion power plant on the grid in Europe.

It will need €2 billion to build a demonstration facility, Alpha, prior to the commercial plant, Stellaris, €400 million of which will come from Bavaria and up to €1.2 billion of which may come from the German government. It’s an expensive way to say “Es tut mir leid” for shutting down all of the country’s nuclear power in favor of coal, but a very cool way to get German energy back on track.

Of course, it’s just an MoU. The plant still needs to be built, and then there’s the tricky matter of achieving Q > 1 and, eventually, whatever Q it takes to be economically viable. But we love the stellarator.
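For reference, Q is the fusion gain factor: the fusion power released by the plasma divided by the external heating power put into it.

$$
Q = \frac{P_{\text{fusion}}}{P_{\text{heating}}}
$$

Q = 1 is scientific breakeven, where the plasma gives back as much power as it takes in. A power plant needs Q well above 1, because converting fusion heat into electricity and running the heating systems both lose energy; exactly how high depends on the plant design.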

Stellarators are a type of fusion reactor. In The Fusion Race, we wrote:

Designed by Lyman Spitzer at the Princeton Plasma Physics Laboratory, Stellarators are devices designed to confine hot plasma within magnetic fields in a twisted, torus-shaped configuration to sustain nuclear fusion reactions.

Stellarators, while promising, were difficult to design and build due to their complex magnetic field configurations.

But that was the 1950s! We have better technology today. On Age of Miracles, Julia and I interviewed Proxima CEO Francesco Sciortino, who told us something that’s stuck with us: we made Tokamaks first not because they were best, but because they were the easiest to design and manufacture. Stellarators, with their weird twists, were harder to design and manufacture, but closer to the platonic ideal of a fusion generator. Now, with better software and manufacturing capabilities, we can make reactors closer to that platonic ideal.

Proxima has a long way to go before it’s putting electrons on the grid, but we’re happy to see progress towards that goal. Gut gemacht, Germany.

(3) Magical Month for Psilocybin

Psilocybin, the psychoactive compound in magic mushrooms, has been classified as a Schedule I drug under the Controlled Substances Act since 1970. The classification means that it has “no accepted medical use and a high potential for abuse.”

That “no accepted medical use” part is coming under intense pressure from reality. Last week, Compass Pathways announced that it had achieved its primary endpoint in a second Phase 3 trial evaluating COMP360 psilocybin for treatment-resistant depression. Two doses of COMP360 25mg demonstrated a highly statistically significant reduction in depression symptoms versus control (p<0.001, -3.8 point MADRS difference). This makes Compass 3-for-3 on trials. The company plans to meet with the FDA to discuss a rolling approval submission between October and December 2026, which would make COMP360 the first “classic” psychedelic cleared in the U.S.

The week prior, a Johns Hopkins pilot study of 20 adults with well-documented post-treatment Lyme disease found that psilocybin, given with psychological support, produced significant and lasting reductions in multi-system symptom burden, including improved mood, fatigue, sleep, pain, and quality of life, that persisted for up to six months. The numbers are striking: general symptom scores dropped roughly 40% and mental/physical quality of life scores rose about 13% from baseline, with benefits maintained through the six-month follow-up. The durability is noteworthy, because placebo effects typically fade over six months.

The Lyme study is especially interesting because it extends the psilocybin evidence base beyond the depression/anxiety lane into a chronic neuroimmunological condition where there are essentially no accepted treatments. If psilocybin is doing something meaningful for post-treatment Lyme, it starts to suggest mechanisms (anti-inflammatory, neural connectivity remodeling) that go well beyond the “it helps you process emotions in therapy” framing.

From a pharma business perspective, one of the biggest challenges with psilocybin is that it just works. There’s no opportunity to keep patients on (and paying for) meds for the rest of their lives like there is with SSRIs. That’s all the more reason we should be taking psilocybin very seriously. If this same logic applies to other conditions, like Lyme, it could be a major win for humanity.

A miracle drug on the scale of GLP-1s, but with side effects like euphoria and spiritual experiences instead of muscle loss and upset stomach, would be magical indeed.

(4) Stripe’s 2025 Annual Letter

Patrick and John Collison for Stripe

Stripe published its annual letter this week. It’s one of the best pieces of economic writing you’ll read all year, because Stripe has some of the world’s best data on the internet economy, and because those Irishmen can write.

Last week, in Power in the Age of Intelligence, I made the case that tech-native category leaders like Stripe are more valuable because they’re going to eat more of their categories than second-best. Stripe is eating. They shared that they did $1.9 trillion in total payment volume in 2025, up 34% year-over-year, and that roughly 1.6% of global GDP is flowing through the company's pipes.
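Two quick sanity checks fall out of those numbers. A minimal sketch, treating the letter’s rounded figures as exact; note that payment volume and GDP aren’t strictly comparable measures, so the implied GDP figure is a scale check, not an economic estimate:

```python
tpv_2025 = 1.9e12     # total payment volume in 2025, USD (from the letter)
growth = 0.34         # year-over-year TPV growth
share_of_gdp = 0.016  # "roughly 1.6% of global GDP"

# Back out last year's volume from this year's volume and the growth rate.
tpv_2024 = tpv_2025 / (1 + growth)

# Back out the global GDP figure that the 1.6% share implies.
implied_global_gdp = tpv_2025 / share_of_gdp

print(f"Implied 2024 TPV: ${tpv_2024 / 1e12:.2f}T")              # $1.42T
print(f"Implied global GDP: ${implied_global_gdp / 1e12:.0f}T")  # $119T
```

So Stripe added roughly half a trillion dollars of volume in a single year.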

More interesting is the data they have on companies using Stripe. Their 2025 cohort of new businesses is growing 50% faster than the 2024 cohort. The number of companies hitting $10 million ARR within three months of launch doubled. iOS app releases jumped 60% year-over-year in December. GitHub pushes surged 41% between Q3 2024 and Q3 2025. All of their indicators suggest that the pace is accelerating, and the Collisons’ best guess is that it isn’t an anomaly.

The whole letter is worth a read, but three sections stand out.

First, their framework for “agentic commerce” lays out five levels, from agents that just fill out checkout forms for you all the way up to agents that anticipate what you need and buy it before you ask. They’re honest that we’re hovering between levels 1 and 2, but the comparison to the mid-90s — when HTTP, HTML, and DNS were being hashed out — feels apt.

Second, crypto is happening, even if crypto prices aren’t. Bitcoin is down 50% from its October peak, but stablecoin payments volume doubled to around $400 billion last year, with 60% estimated to be B2B payments. Bridge, the Not Boring Capital portfolio company acquired by Stripe, quadrupled its volume. Stripe is betting hard on stablecoin payments infrastructure with Tempo, a blockchain built for payments that it’s developing with Paradigm. Visa, Nubank, Shopify, and even Klarna (whose CEO was once a self-proclaimed crypto skeptic) are already testing it. Mainnet is launching soon.

Third, the letter closes with what might be its most important idea: we live in a “Republic of Permissions.” Technologies succeed or fail not just on their merits but on whether the web of regulators, committees, and courts lets them through. The Collisons cite Joel Mokyr’s Nobel-winning work on how culture, not just capital or technology, drives progress. They argue that AI could transform drug discovery, nuclear could deliver energy abundance, and drones could slash logistics costs, but only if we don’t let “a slurry of local ordinances harden into a blockade.” Hear hear.

It’s a letter about payments that’s really a letter about whether civilization can keep up with its own tools, co-written by a guy whose website has the canonical list of projects from a time when we were able to build fast. They think we can.

“We’re reminded of the phenomenon of falling into a large black hole… We write this letter at what may well turn out to be the advent of a different and hopefully much more beneficent singularity. While much around us in 2026 feels similar to prior years, it is also clear that the next decade will look very different to those just gone by.”

(5) Vitamin B2 and B3 nutrigenomics reveals a therapy for NAXD disease

Arc Institute in Cell

Pulling one of the most interesting entries from Ulkar Aghayeva’s Scientific Breakthroughs this week above the paywall. Here’s Ulkar on a fresh finding out of Arc Institute:

It may seem that vitamin biology was figured out decades ago (for reference, all 13 classical vitamins were discovered by 1948). But this paper shows that there’s still a lot of unexplored territory in nutritional genomics.

Instead of starting out with a target disease and trying to find a drug that treats it, they started with two well-known vitamins, B2 and B3, and ran a genome-wide CRISPR screen in K562 cancer cells to identify genetic diseases responsive to vitamin supplementation. NAXD, a repair enzyme essential in redox biology, emerged as the top hit for vitamin B3. Mutations in NAXD are known to be lethal in early childhood. The team generated knockout mice with a disease profile very similar to the human one, and adding vitamin B3 to their food from birth increased their lifespan more than 40-fold.

How many other diseases are out there that could be so easily cured with various vitamin supplementations?

Additional sources: Arc Institute blogpost; twitter thread
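For the curious, here is roughly how a screen like this can surface vitamin-responsive genes. The article doesn’t describe the Arc team’s analysis, so this is a generic toy, not their pipeline. The idea: knock out genes across a pooled population of cells, grow one arm with the vitamin and one without, then count each guide RNA. If a knockout hurts cells and the vitamin rescues them, that gene’s guides end up relatively more abundant in the vitamin arm. The gene names and read counts below are invented for illustration.

```python
import math
from statistics import mean

# Hypothetical sgRNA read counts per gene: (without vitamin, with vitamin).
# Invented numbers for illustration; not data from the paper.
counts = {
    "GENE_A": [(120, 480), (90, 400)],
    "GENE_B": [(300, 310), (280, 290)],
    "GENE_C": [(200, 150), (220, 170)],
}


def rescue_scores(counts, pseudocount=1.0):
    """Mean log2 fold change (with vs. without vitamin) per gene, after scaling
    each condition by its total read count. Higher = knockout cells fare
    relatively better when the vitamin is added."""
    total_without = sum(wo for guides in counts.values() for wo, _ in guides)
    total_with = sum(w for guides in counts.values() for _, w in guides)
    scores = {}
    for gene, guides in counts.items():
        scores[gene] = mean(
            math.log2(((w + pseudocount) / total_with)
                      / ((wo + pseudocount) / total_without))
            for wo, w in guides
        )
    return scores


scores = rescue_scores(counts)
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)  # ['GENE_A', 'GENE_B', 'GENE_C']
```

Real screens use many more guides per gene and proper statistical testing, but the core comparison is a fold change between conditions.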

Scientific Breakthroughs from Ulkar

Jeremy Stern on Shyam Shankar

Alex Konrad on William Hockey

Mario joins Hummingbird

2026-02-20 21:55:19

Hi friends 👋,

Happy Friday and welcome back to our 181st Weekly Dose of Optimism!

This week has it all, from transformers to aliens.

We are also introducing a new segment for not boring world members: Scientific Breakthroughs from Ulkar Aghayeva. In December, we shared Frontier of the Year 2025, in which Ulkar, Gavin Leech, and Lauren Gilbert reviewed 202 pieces of scientific news from the year and scored each one based on the probability that it generalizes and how big it would be if it did. We loved it, so we asked Ulkar to do a roundup of the most important stories in science, which she did this week.

It’s our attempt to bring you even closer to the frontier. I hope you enjoy it.

Let’s get to it.

Today’s Weekly Dose is brought to you by… not boring world

The Dose is for everyone, but we’re adding more and more great stuff behind the paywall for not boring world members. We also have a deep slate of co-written essays in flight and going out to members over the next couple months. If you want to support not boring, help us make it as great as it can be, and stay at the cutting edge of technology, science, and business…

(1) Heron Power Raises $140M to Build 40GW Solid State Transformer Factory

Everything that can go electric economically will, including the grid itself.

Heron Power, founded by former Tesla SVP of Powertrain and Energy Drew Baglino, raised $140 million in a Series B led by a16z American Dynamism to build solid state transformers. This is pure not boring catnip: power electronics from the guy in charge of a lot of the Tesla power electronics stuff we wrote about in The Electric Slide, a16z putting its new funds to work, and fixing the grid by attacking one of its key constraints.

The constraint is transformers. They take electricity at one voltage and convert it to a different voltage (stepping it up or down) using coiled wires, and they are outdated (the fundamental design hasn't really changed since the late 1800s and assumes a one-way grid), passive (electricity goes in, gets stepped up or down, and comes out), massive (built with 10 tons of grain-oriented electrical steel and copper submerged in oil), and, worst of all, in desperately short supply. Lead times stretch up to 24 months, U.S. manufacturing meets less than 20% of demand, manufacturing has been moving to China, prices have spiked 60-80% since 2020, and demand is projected to double in the next decade.
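The “coiled wires” part is the whole trick. In an idealized, lossless transformer, the voltage ratio equals the ratio of turns in the secondary and primary coils, and power in roughly equals power out:

$$
\frac{V_s}{V_p} = \frac{N_s}{N_p}, \qquad V_p I_p \approx V_s I_s
$$

So stepping a hypothetical 12,000 V line down to 480 V takes a 25:1 turns ratio, with current rising by the same factor of 25. Because the coupling depends on a changing magnetic field, this only works with alternating current, which is why a conventional transformer can’t handle DC on its own.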

So Heron is building Heron Link, a modular solid-state transformer that uses wide-bandgap semiconductors instead of scarce electrical steel and copper. It’s starting by selling to solar and battery farm operators and data centers, who represent huge and growing demand and for whom the solid state transformer eliminates the need for inverters. Traditional transformers can’t handle direct current, so an inverter turns DC from a solar panel or battery into AC. Heron Link can do that conversion itself, so customers can skip the inverter. HL is software-defined, meaning it can use software to regulate voltage and frequency to actively manage grid stability. And it’s modular, meaning that if one of the device’s tens of power conversion modules fails, it can be swapped out in ten minutes. Each unit also contains lithium-ion batteries that can discharge quickly to provide 30 seconds of power, smoothing the transition to backup power sources.

The funding will go to building a factory that can produce 40GW worth of Heron Links per year. At 5MW per Link, that’s 8,000 transformers.

Heron isn’t the only company making solid state transformers. I hope they all do great. But I do want to call out one competitor: Raleigh-based DG Matrix, whose co-founder and CTO is Subhashish Bhattacharya, a professor at NC State University who is a long-time collaborator of… B. Jayant Baliga, the inventor of the IGBT, The GATEway of India, the hero of the Power Electronics section of The Electric Slide!

Anyway, if we have a lot of smart people using wide bandgap semiconductors to fix the grid, the future of American energy is going to be SiC.

(2) DoW Transports a Valar Atomics Nuclear Reactor to Utah on a C-17

Isaiah Taylor and Valar Atomics

The title pretty much says it all here: the Department of War transported a Valar Atomics Ward nuclear reactor from California to Utah on a C-17. It’s another sign that the government is serious about turning on reactors by the United States’ 250th birthday on July 4, 2026. Not much more for me to add here other than to watch the video and the others in Valar’s Operation Windlord series.

In other nuclear news, Lockheed Martin is investing in Radiant Nuclear, which we wrote about in 2024, as it prepares to turn on its first reactor at the DOME this summer. Defense is starting to play offense on nuclear.

(3) Single vaccine could protect against all coughs, colds and flus, researchers say

James Gallagher for the BBC h/t Simon Taylor for the find

Researchers at Stanford have built a vaccine that protects against… everything. Like, everything they’ve tested, it works against. The nasal spray works by putting immune cells in the lungs on permanent “amber alert,” ready to fight whatever shows up. In animal tests, it reduced viral breakthrough by 100-to-1,000-fold and worked against flu, Covid, common cold viruses, two species of bacteria, and even house dust mite allergens. Prof Bali Pulendran calls it “a radical departure from the principle by which all vaccines have worked” since Edward Jenner figured out the originals in the 1790s.

It’s early (animal studies, not human trials) but the team is planning deliberate infection studies in people next. The big questions are whether the effect translates to human lungs, how long the protection lasts (about three months in mice), and whether keeping the immune system dialed up causes friendly fire. The researchers don’t think it should be permanent; they envision a seasonal spray at the start of winter or a rapid-deployment tool at the start of a pandemic to buy time while a targeted vaccine is developed.

That pandemic use case alone is worth getting excited about. One of the hardest lessons of early 2020 was how long it took to develop, test, and distribute a vaccine while people died waiting. A universal nasal spray sitting on the shelf, ready to deploy on day one, could change the math on the next pandemic entirely.

And if the seasonal version works, imagine a world where “cold and flu season” just no longer exists. As a parent of little kids, please for the love of God work in humans.

(4) DeepMind Veteran David Silver Raises $1B for Ineffable Intelligence

George Hammond for Financial Times

When Richard Sutton, the father of Reinforcement Learning (RL) and author of The Bitter Lesson, was interviewed last year, he said, basically, that LLMs are a dead end and that the real Bitter Lesson-pilled approach would be to just let AI learn from experience. That resonated with me. Even with all of the recent coding and agent advances, these things are still missing something ineffably intelligent to me.

David Silver wants to fix that. Silver, who joined DeepMind in 2010, was one of the team’s star researchers. He worked on AlphaGo, AlphaStar, and the Gemini family of models. And last year, with Sutton, he wrote a paper titled Welcome to the Era of Experience.

Silver and Sutton argue that we’re moving from an era where AI mainly learns by imitating static human data (like text on the internet) to an “Era of Experience,” where the most powerful systems will learn predominantly from their own ongoing interaction with environments. Agents will improve by generating vast streams of experiential data, acting over long time horizons, grounded in real environments and rewards, which will unlock truly superhuman capabilities beyond the limits of human-written data. Which makes sense intuitively!

Sequoia is leading a $1 billion investment at a $4 billion valuation. I’m excited for this one. And it couldn’t come at a better time because…

(5) The US Government is Going to Release the ET, UAP, and UFO Files

Maybe the greatest proof that we’re in some other beings’ simulation is the fact that just as we are about to figure out how to create intelligence ourselves, we finally begin to get the truth about other intelligent life in the universe.

This is just one Truth post, and the government has a spotty (redacted) record releasing the important details in important files recently, but it seems that disclosure might finally be upon us.

It’s too early to know or speculate when we’ll get more information, what’s in the files, and how ontologically shocking it will be; it could be anything from “we’ve been holding a few craft that we don’t quite understand” to “we’ve reverse-engineered the craft and understand antigravity” to “humanity is just an experiment run by far more intelligent beings” to … anything, really.

But it’s funny and kind of beautiful that just as so many people are worrying about humanity’s place in a world with AI, we may get proof that we’re part of something much larger, weirder, and more wondrous than we could ever imagine.

EXTRA DOSE: Scientific Breakthroughs, Two Podcasts, and Research Revival Fund

Subscribe to learn about 8 scientific breakthroughs this week, including gene drive-like systems to fight antibacterial resistance, potential early cancer diagnosis method from Arc Institute, DMT for major depressive disorder, and using laser writing in glass for long-term storage. Let us know what you think!

2026-02-18 21:33:05

Welcome to the 737 newly Not Boring people who have joined us since our last essay! Join 258,985 smart, curious folks by subscribing here:

Hi friends 👋,

Happy Wednesday!

Émile Borel once said that given enough time, a bunch of monkeys banging on typewriters would come up with Shakespeare. And yet, despite the innumerable X Articles on software moats in the face of AI, I haven’t read a single one that’s satisfying.

I think it’s because thinking about software moats, how a software company might protect itself from abundant code, is the wrong frame altogether, and that the more interesting and relevant frame is which companies, SaaS, hardware, or otherwise, stand to benefit the most from newly abundant inputs.

Those companies, not vibe coders, are the ones that point solutions should be worried about. They will win enormous market shares and fortunes. They will come to dominate large industries by using new technology to compete, capturing the High Ground, and expanding further outward than companies with lesser technological tools could have ever dreamed of. They will be the Standard Oils of this era.

This essay is about those companies.

Let’s get to it.

In 2025, crypto returned to the financial mainstream. It is, as they say, so back.

What’s ahead for 2026? I’m glad you asked. Silicon Valley Bank is out with their annual crypto outlook, featuring proprietary insights and data from more than 500 crypto clients. We love a bank that banks crypto.

Silicon Valley Bank makes five predictions for the year ahead, including:

Institutional capital goes vertical with increased VC investment and corporate adoption.

M&A posts another banner year after the highest-ever deal count in 2025.

Real-World Asset (RWA) tokenization goes mainstream on prediction market strength.

Last year, Silicon Valley Bank predicted that stablecoins would be the big breakout use case. That was correct. They think that will continue this year, too. Read their take on what comes next, free:

One of the more head-scratching anomalies in the market is the valuation gap between Stripe and Adyen. The two payments companies handle similar amounts of Total Payment Volume. Stripe is growing faster. Adyen reports exceptional margins and cash conversion. Stripe is reportedly doing a tender offer at a $140 billion valuation in the private markets. Adyen is valued at $34 billion in the public markets. There are a number of theories for why this is the case, most of which boil down to: VCs are idiots, as they’ll find out if Stripe ever goes public.

Ramp versus Brex is another example of the same idea. Ramp was most recently valued at $32 billion in the private markets. Brex, which had been valued at $12 billion in the private markets, sold to Capital One for $5.15 billion. Ramp is doing more revenue and growing faster, but not 6x more revenue or 6x faster. Once again, the actual market disagrees with the VCs.

Or does it?

I have a different theory, one that neatly fits those two cases, the SaaSpocalypse, SpaceX’s $1.25 trillion valuation, and even the evolving structure of venture capital itself: winner takes more.

The history of business is basically the history of increasing concentration of value, accelerated in spurts by technological change. Centuries ago, firms operated within cart-hauling distance of their customers, creating a system of local monopolies. A brewer in 1800 served a single town, if that. Canning, railroads, telegraphs, mass production, electrification, containerization, planes, and the internet, among other technologies, expanded companies’ available market, and winners captured a greater and greater share of value.

Economic data backs this up.

In a 2020 paper, Jan De Loecker, Jan Eeckhout, and Gabriel Unger found that aggregate markups rose from 21% above marginal cost in 1980 to 61% by the late 2010s, and that this increase was driven almost entirely by the upper tail of the distribution: median firm markups barely changed, while 90th-percentile markups surged.
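A worked restatement of those headline numbers, under the paper's convention that a firm's markup is its price divided by its marginal cost. This only restates the reported averages; it is not the paper's estimation method.

```latex
\mu \equiv \frac{P}{MC}
\qquad
\mu_{1980} \approx 1.21 \;\Rightarrow\; P \approx 1.21\,MC
\qquad
\mu_{\text{late 2010s}} \approx 1.61 \;\Rightarrow\; P \approx 1.61\,MC
\qquad
\frac{0.61}{0.21} \approx 2.9
```

In other words, the average premium of price over marginal cost nearly tripled, from 21 cents to 61 cents on every dollar of marginal cost.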

In The Fall of the Labor Share and the Rise of Superstar Firms, Autor et al. find that industry sales concentration trends up over time across measures, and it rises more in sales than in employment, what Brynjolfsson et al. call “scale without mass.” In their account, this shift reflects reallocation toward superstar firms with high markups and profits and low labor shares.

A 2023 paper by Spencer Y. Kwon, Yueran Ma, and Kaspar Zimmermann at UChicago, 100 Years of Rising Corporate Concentration, uses IRS Statistics of Income to show that the top 1% of U.S. corporations by assets accounted for about 72% of total corporate assets in the 1930s and about 97% in the 2010s. The top 0.1% of U.S. corporations by assets increased its share of total corporate assets from 47% to 88% over the same period. Power laws within power laws.
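“Power laws within power laws” has a precise flavor. For a Pareto tail with shape α > 1, the top fraction p of firms holds roughly p^(1 − 1/α) of the total, so the very top of the top keeps a large slice of what the top holds. The sketch below is purely illustrative: it draws hypothetical firm sizes from a Pareto distribution with assumed shape values and measures top-share concentration. It does not use or reproduce the IRS data behind the paper, and `top_share` and `simulate_firm_sizes` are hypothetical helper names.

```python
import random


def top_share(sizes, fraction):
    """Share of the total held by the largest `fraction` of items (0.01 = top 1%)."""
    ranked = sorted(sizes, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)


def simulate_firm_sizes(n, alpha, seed=0):
    """Hypothetical firm sizes drawn from a Pareto distribution with shape `alpha`.

    A smaller alpha means a heavier tail, i.e. more concentration at the top.
    """
    rng = random.Random(seed)
    return [rng.paretovariate(alpha) for _ in range(n)]


if __name__ == "__main__":
    for alpha in (1.5, 1.1):  # assumed tail parameters, for illustration only
        firms = simulate_firm_sizes(200_000, alpha)
        print(f"alpha={alpha}: top 1% share = {top_share(firms, 0.01):.0%}, "
              f"top 0.1% share = {top_share(firms, 0.001):.0%}")
```

Lowering α (a heavier tail) pushes both shares up, which is the shape of the trend the paper documents over a century.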

Today, the Magnificent 7 accounts for one-third of the market cap of the S&P 500, and Apollo showed that those seven companies are driving the vast majority of equity returns.

Venture capital is responding to this information as you would expect. Per Pitchbook, as of August last year, 41% of all VC dollars deployed in the US in 2025 went to just ten companies. Per Axios, more recent Pitchbook data shows that “The estimated aggregate valuation of unicorns hasn’t actually changed too much — $4.4 trillion vs. $4.7 trillion at the end of 2025 — because the top 10 companies account for around 52% of value (up from only 18.5% in 2022 and the highest such figure in a decade).”

Venture capital firms themselves are concentrating. As I wrote in a16z: The Power Brokers, “a16z accounted for over 18% of all US VC funds raised in 2025.” Just yesterday, Thrive announced that it raised $10 billion: $1 billion for early-stage investments, and $9 billion for late-stage investments, which is the kind of split you put in place if you believe your winners are going to keep winning.

The theory for investing in very large funds like a16z, Founders Fund, Thrive, General Catalyst, and Greenoaks is that they are best positioned to win large allocations in the handful of companies that matter, and that those companies will capture most of the value in this vintage. Notably, all five of the firms I just mentioned are investors in Stripe, and three of the five (Founders Fund, Thrive, and General Catalyst) are investors in Ramp.

All of that data suggests increasing concentration. Through this lens, the SaaSpocalypse (the violent sell-off in software stocks) is less about software writ large dying, and more about point solution software finally facing economic gravity. They are no longer getting a free pass simply for having a good business model.

The past few decades of software exceptionalism have been an exception based on a business model so sweet and capabilities so universally useful that the rules of strategy, while not wholly unimportant, were less consequential than normal. A venture capitalist could look at a standard set of SaaS metrics (ARR, growth rate, net retention rate, gross margin, LTV:CAC, Rule of 40, etc…) and underwrite whatever new business they encountered against them. This is why you hear things like “Here’s how much ARR you need to raise a Series A.” The companies are basically all different flavors of the same thing.
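For readers who haven't lived in SaaS spreadsheets, here is a minimal sketch of how a few of those standard metrics are commonly computed. These are simplified textbook definitions (conventions vary by investor and company), `SaaSSnapshot` and its fields are hypothetical names, and every number in the usage example is made up rather than taken from any company mentioned here.

```python
from dataclasses import dataclass


@dataclass
class SaaSSnapshot:
    """Hypothetical inputs for one company-year; rates are fractions (0.30 = 30%)."""
    starting_arr: float       # ARR from the existing customer cohort at the start of the year
    expansion_arr: float      # upsells and price increases from that cohort
    contraction_arr: float    # downgrades from that cohort
    churned_arr: float        # ARR lost from customers who left
    revenue_growth: float     # year-over-year revenue growth rate
    profit_margin: float      # e.g. free-cash-flow or EBITDA margin (conventions vary)
    gross_margin: float
    arpa: float               # annual revenue per account
    annual_churn_rate: float  # fraction of accounts lost per year
    cac: float                # customer acquisition cost per new account

    def net_retention(self) -> float:
        """Net revenue retention: the cohort's ARR a year later over its starting ARR."""
        ending = (self.starting_arr + self.expansion_arr
                  - self.contraction_arr - self.churned_arr)
        return ending / self.starting_arr

    def rule_of_40(self) -> float:
        """Growth rate plus profit margin, in percentage points (40+ passes the rule)."""
        return 100 * (self.revenue_growth + self.profit_margin)

    def ltv_to_cac(self) -> float:
        """Simple lifetime value (gross profit per account / churn rate) over CAC."""
        ltv = self.arpa * self.gross_margin / self.annual_churn_rate
        return ltv / self.cac


if __name__ == "__main__":
    example = SaaSSnapshot(
        starting_arr=10_000_000, expansion_arr=2_000_000, contraction_arr=300_000,
        churned_arr=700_000, revenue_growth=0.35, profit_margin=0.10,
        gross_margin=0.80, arpa=24_000, annual_churn_rate=0.10, cac=40_000,
    )
    print(f"NRR: {example.net_retention():.0%}")
    print(f"Rule of 40 score: {example.rule_of_40():.0f}")
    print(f"LTV:CAC: {example.ltv_to_cac():.1f}x")
```

The point of the sketch is how mechanical this is: the same handful of formulas applies to almost any SaaS company, which is exactly why underwriting to them was so easy.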

There are, of course, idiosyncrasies between selling software to professional services firms and selling software to energy companies, for example, but the basic model is the same. Invest upfront to hire engineers, write software to make someone’s job easier, and sell the software to as many customers as possible at high margins. Different industries may need software to do different things, have different buyers, be larger or smaller, be more or less willing to pay, and be more or less expensive to acquire. A bigger, less crowded market with a strong need and a high willingness to pay is better than the opposite. But figuring that out is relatively straightforward. Since software operates at the edge of value in most industries, and does not attempt to strike at its core or compete with it, it doesn’t require thorough competitive analysis.

Since the SaaSpocalypse, people have gotten AI to write tens of thousands of words on which types of software companies have moats given AI and posted the resulting essays to X. They’ve gotten a little more specific than “SaaS is good.” Data is a moat, or a particular type of data at least. Or it isn’t, maybe, because The Agent Will Eat Your System of Record. Certainly, dealing with regulatory hair earns you a moat. No?

Most of the takes I’ve seen miss what matters.

On paper, Stripe and Adyen have basically the same moats, as do Ramp and Brex. I love a good hardware moat more than the next guy, as I’ve been writing since The Good Thing About Hard Things in July 2022, before ChatGPT or Claude Code but when it was clear that good software alone would offer no moat. I was too unspecific in that piece, too. Some hardware businesses will get very large, and others will fail. Hardware itself isn’t a moat. Good luck making LFP cells in America.

No, what matters is becoming the leader in your industry in a way that is incredibly specific to that industry and in such a way that your business benefits from, instead of being threatened by, abundant improvements in general purpose technologies like AI and batteries.

What matters now is the same stuff that has always mattered but that software forgave for a while: own the scarce, defensible asset in an industry and use it as the High Ground from which to dominate. Ricardo said this.

If you’re a startup, and you don’t already own the scarce asset, then you need to identify the constraint holding the industry back, focus everything on breaking it, and expand from there.

History’s most influential military strategist, Carl von Clausewitz, said this. He called it Schwerpunkt, the center of gravity. “The first task, then, in planning for a war is to identify the enemy’s center of gravity, and if possible trace it back to a single one,” he wrote in On War. “The second task is to ensure that the forces to be used against that point are concentrated for a main offensive.”

For our purposes, the Schwerpunkt is the constraint you attack. The High Ground is the scarce and valuable position you win by breaking it. Moats are what keep others from taking it.

Even while forces must be concentrated against the Schwerpunkt, our attackers must plan for victory before it is won. The company that breaks the constraint needs to build the complementary assets (the distribution, the manufacturing, the customer relationships) to capture the value from its own innovation. Otherwise, its competitors will.

David Teece argued this in 1986, in Profiting from Technological Innovation. The paper, he wrote, “Demonstrates that when imitation is easy, markets don’t work well, and the profits from innovation may accrue to the owners of certain complementary assets, rather than to the developers of the intellectual property. This speaks to the need, in certain cases, for the innovating firm to establish a prior position in these complementary assets.” Which is the point I am making: innovation alone, software or hardware, isn’t enough.

Figuring out which companies might capture the Schwerpunkt and use it as a High Ground from which to expand is an entirely different kind of underwriting, impossible to do in a spreadsheet alone, even with Claude in Excel.

Companies that don’t own the High Ground face existential risk from technological progress. If you’re just selling point solution software, then software abundance is a threat. Hardware isn’t necessarily the moat people think it is, either, even if it’s less susceptible to AI, because AI isn’t the only technology improving rapidly. “Hardware is a moat” is the same kind of lazy thinking that “SaaS is the greatest business of all time” is. If you’re selling better mouse traps, you’re at risk every time someone builds a slightly better mouse trap.

Similarly, incumbents that currently own the High Ground but can’t wield modern technology face existential risk from those who can. This is why there is such a large opportunity for startups today. New technologies mean old constraints are finally attackable, and it’s likely to be newer companies doing the attacking.

Companies that do own the High Ground, on the other hand, and are tech-native, benefit from technological progress, just as landowners captured the gains from more farming labor and better farming tools, only more so, because these modern landowners will corner the best talent, the most capital, and the richest veins of distribution. A glib way to put it is that a Ramp engineer with an AI will build something better than a CFO with an AI, no matter how good the AI gets. It’s not the vibe coders you should be worried about.

Newly abundant resources can have opposite effects on your business depending on your position, and it is likely to be the company with the High Ground wielding those resources that dooms the companies in weaker positions. Ask Slack how it felt to compete with Microsoft Teams; companies like Microsoft can now build a lot more “Teams.”

The game on the field is all about understanding who can own the High Ground in a given industry.

The moats are the same as they’ve always been. Study 7 Powers. When you’re starting out, you need to understand what your moats might be, but in order for moats to matter, you need to have something worth protecting. You need to own the High Ground.

If we really are living through the most consequential technology revolution in history, why are you spending so much time hand-wringing about protecting small, old castles when you could be thinking about how to build history’s most magnificent businesses?

The abundant inputs keep getting cheaper. The scarce asset keeps getting more valuable. The companies that own the latter and leverage the former will become larger than ever before.

These businesses themselves are scarce assets, valued on their strategic importance and industry size, because the opportunity is no longer to sell software into industries in order to marginally improve them, but to win those industries and capture their economics.

If your aim is to build or invest in these companies, old heuristics will do you no good. You need a brain of your own, some sweat on your brow, and some good ol’ fashioned strategy frameworks to help you reason about the opportunity at hand.

This essay is a guide to thinking through where power might concentrate, for those willing to think. If winner takes more, it’s about what it takes to build, or invest in, the companies that have a shot at winning large industries. And it’s about how to position yourself to gain strength from technological progress instead of running from it while throwing weak “moats” in your wake.

It is about Power in the Age of Intelligence.

Or, it’s about Power in any age of rapid technological change, really.

While the advances we are experiencing today feel unprecedented, my thesis has been that we are going through a modern version of the Industrial Revolution.

Then, machines did what only human muscles could previously. Now, machines are doing what only human brains could previously, in new bodies built to house those brains. This is The Techno-Industrial Revolution.

So it is useful to study Rockefeller, Carnegie, Swift, and Ford. None created an industry from scratch. They all fit this pattern: identify the Schwerpunkt in an existing industry, break it, seize High Ground, integrate outward, dominate.

When John D. Rockefeller met the oil industry, it was young, valuable, and incredibly volatile. Oil itself was abundant. There were a lot of refineries – roughly 30 in his hometown of Cleveland alone when he got to work – but their quality was inconsistent, and their processes were inefficient. Per Austin Vernon, “Refining methods were so inefficient in the mid-1860s that a barrel of oil (42 gallons) sold for almost the same price as a gallon of refined kerosene. Today, the price ratio of refined products to crude oil is ~1.25x instead of 42x.”

There was one big constraint to the profitable growth of the oil industry – the volatility – which could only be broken through scale and control. To get to scale and control, Rockefeller needed to drive down costs to capture the market. Refining was the place to get scale, given its inefficiency and the fact that, per Vernon, “A typical rule of thumb in chemical engineering is that capital costs increase sublinearly with capacity, usually by (capacity ratio)^0.6. A plant with double the output is only 50% more expensive to build, and operating costs tend to follow similar trends.”
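The rule of thumb Vernon cites is usually called the six-tenths rule. A minimal sketch, assuming only the 0.6 exponent from the quote (no learning-curve or yield effects), shows what it implies for a plant's total capital cost and for capital cost per unit of capacity. The function names are hypothetical.

```python
def capital_cost_ratio(capacity_ratio: float, exponent: float = 0.6) -> float:
    """Total capital cost of a plant relative to a baseline plant, using the
    six-tenths rule: cost scales as capacity_ratio ** exponent."""
    return capacity_ratio ** exponent


def unit_cost_ratio(capacity_ratio: float, exponent: float = 0.6) -> float:
    """Capital cost per unit of capacity relative to the baseline.
    Dividing total cost by capacity gives capacity_ratio ** (exponent - 1)."""
    return capital_cost_ratio(capacity_ratio, exponent) / capacity_ratio


if __name__ == "__main__":
    for scale in (2, 4, 8):
        print(f"{scale}x capacity: total capital cost x{capital_cost_ratio(scale):.2f}, "
              f"cost per unit of capacity x{unit_cost_ratio(scale):.2f}")
```

Doubling capacity raises total cost by about 52% (2^0.6 ≈ 1.52), which is the “only 50% more expensive” in the quote, while cutting capital cost per unit of capacity by roughly a quarter. Every further doubling repeats the same discount, which is why the biggest refiner kept pulling away.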

So in partnership with chemist Samuel Andrews, one of the first people to distill kerosene from oil, Rockefeller continued to improve the kerosene yield. At the same time, he aggressively grew revenue and lowered costs by eating the whole cow, so to speak. He sold the non-kerosene byproducts others threw out (paraffin wax, naphtha, and gasoline) and used some of the fuel oil to power his own plants. He also integrated into barrels (by buying an oak tree forest and a barrel-making shop).

As it lowered costs and delivered a more consistent product, Rockefeller’s refinery (the predecessor to Standard Oil) grew, and as it grew, it lowered costs. Vernon again:

Standard Oil and its predecessor firms increased production ~20x between 1865 and the end of 1872, meaning their costs could have fallen more than 85%. At that point, they were the largest refiner in the world with a double-digit share of capacity, and it was their game to lose. If we understand this short period, then we know how the company eventually won.

The company that would become Standard Oil won the High Ground by breaking the constraint. Then it integrated outward, horizontally and vertically.

By 1870, Standard Oil was a joint stock company capitalized with $1 million that owned 10% of the oil trade in the United States. Rockefeller got busy acquiring struggling refineries or putting them out of business, increasing scale and efficiency in the process. Rockefeller also did favorable deals with the railroads, which Vernon argues actually had less to do with Standard Oil’s success than did its growing scale and efficiency. He kept growing, acquiring refiners in Pennsylvania, New York, and New England. He vertically integrated into pipelines (which replaced railroads), into distribution, into retail (ExxonMobil and Chevron are Standard successors), and into production itself.

By the late 1880s, Standard Oil controlled 90% of American refining, and it remained the dominant refiner until it was broken up in 1911. At the time of the breakup, its $1.1 billion market cap represented 6.6% of the entire US stock market. To hear Vernon explain it, the outcome was a fait accompli by the time Rockefeller had attacked the Schwerpunkt and gained the High Ground in the 1860s.

Andrew Carnegie’s story is so similar it’s almost suspicious. Like Rockefeller, Carnegie didn’t invent his product (steel); Bessemer did. Like Rockefeller, Carnegie realized the constraint to steel’s growth was inefficiency and inconsistency. Like Rockefeller, Carnegie hired a chemist (in his case, to measure what was happening inside the furnaces) and obsessed over cost, which he knew was the only thing he could control:

Show me your cost sheets. It is more interesting to know how well and how cheaply you have done this thing than how much money you have made, because the one is a temporary result, due possibly to special conditions of trade, but the other means a permanency that will go on with the works as long as they last.

His chemical knowledge allowed Carnegie to run his furnaces hotter and longer than anyone else, producing more steel at lower cost. His cost obsession lowered costs further. Low cost, high quality steel was the High Ground.

And from there, he integrated outward. Backward into coke (Frick) and iron ore (the Mesabi Range), into railroads to transport raw materials, and forward into finished products. He certainly didn’t sell his services and know-how to incumbents; he used them to destroy competitors on price until JP Morgan bought him out for $480 million in 1901 (roughly $18 billion today) and folded Carnegie Steel into US Steel, the first billion-dollar corporation in history.

“Congratulations, Mr. Carnegie,” JP Morgan told him upon closing, “you are now the richest man in the world.”

Gustavus Swift, like Rockefeller, would also “eat the whole cow.” He just did it later in his arc, and more literally.

The constraint was this: only about 60% of a live animal’s mass is edible, and meat goes bad. Which meant that, prior to the 1870s, the meat industry shipped 1,000-pound live cattle by rail from wherever they were raised to wherever they were going to be eaten. Shippers paid freight by the pound, including on the roughly 40% that would never be eaten, had to feed the animals to keep them alive and healthy, and lost some to death in transit anyway.
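The arithmetic behind that penalty is simple. A minimal sketch using the 1,000-pound animal and roughly 60% edible yield from the text, and a hypothetical freight rate of one unit per pound (actual rates aren't given here), compares freight per edible pound for live cattle against dressed beef. It treats dressed beef as entirely edible, a simplification, and ignores feed, deaths in transit, ice, and car costs. `freight_per_edible_pound` is a hypothetical helper name.

```python
def freight_per_edible_pound(shipped_weight_lb: float, edible_fraction: float,
                             rate_per_lb: float = 1.0) -> float:
    """Freight cost per pound of meat that actually gets eaten, when paying by
    the pound for everything shipped. rate_per_lb is a hypothetical unit rate."""
    edible_lb = shipped_weight_lb * edible_fraction
    return shipped_weight_lb * rate_per_lb / edible_lb


if __name__ == "__main__":
    steer_lb, edible = 1_000, 0.60  # figures from the text
    live = freight_per_edible_pound(steer_lb, edible)
    # Dressed beef: ship only the edible portion (simplification: all of it is edible).
    dressed = freight_per_edible_pound(steer_lb * edible, 1.0)
    print(f"live cattle: {live:.2f} units of freight per edible pound")
    print(f"dressed beef: {dressed:.2f} units of freight per edible pound")
    print(f"share of the live-cattle freight bill spent on inedible weight: {1 - dressed / live:.0%}")
```

Under these assumptions, shipping live cattle costs about 1.67x as much freight per edible pound, with 40% of the bill going to weight nobody eats, before counting feed or animals lost on the way.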

So Swift, building on early experiments by Detroiter George Hammond, hired an engineer to design him a refrigerated railcar. Then, he could slaughter the beef in Chicago and ship the cuts to their final table much more efficiently. Railroads, not wanting to lose their livestock-shipping cash cow, refused to pull his cars, so Swift leased his own and partnered with smaller lines to move them. Then, he built icing stations along the routes and stocked them with ice he bought under contract directly from ice harvesters in Wisconsin and other cold midwestern states. By necessity, he built the whole cold chain from scratch.

This combination of centralized slaughter in Chicago and cold chain to the coast was his High Ground. He was forced into vertical integration because none of the pieces made sense on their own, but once he had it, he used it to drive down costs.

Like Rockefeller, Swift was appalled by waste, and because he controlled his own slaughterhouses, he could do something about it: he turned cow byproducts into soap, glue, fertilizer, sundries, even medical products, which allowed him to increase revenue and lower prices. He also maximized his refrigerated cars by stacking butter, eggs, and cheese beneath the swinging carcasses of dressed beef heading East.

By 1884, after only six years in operation as a slaughterer, Swift had become the second-largest meatpacking firm in the US. By 1900, the meatpacking industry, unconstrained, had grown to become the second-largest industry in the country, behind only iron and steel.

It was a visit to a Chicago slaughterhouse that inspired Henry Ford’s assembly line. “Along about April 1, 1913, we first tried the experiment of an assembly line,” Ford writes in My Life and Work. “We tried it on assembling the flywheel magneto. I believe that this was the first moving line ever installed. The idea came in a general way from the overhead trolley that the Chicago packers use in dressing beef.”

Ford didn’t invent the automobile. By 1908, there were hundreds of American car companies selling expensive, hand-built machines to the wealthy. A typical car cost $2,000-$3,000, or roughly two and a half years’ wages for an average worker. Manufacturing costs were the constraint on the nascent automobile industry’s growth, so manufacturing costs were Ford’s Schwerpunkt.

Ford broke the constraint with the moving assembly line. Before it, a single worker assembled a complete flywheel magneto in about 20 minutes. Ford split the work across 29 operations, cutting the time to 13 minutes. Then he raised the line eight inches and cut it to seven minutes. Then he adjusted the speed of the line and cut it to five. The same progression played out across the whole car: total assembly time fell from over 12 hours per chassis to 93 minutes.

That manufacturing capability was the High Ground. The Model T launched in 1908 at $850, already half the price of the competition. As the assembly line improved, Ford kept cutting: $550 by 1913, $360 by 1916, below $300 by 1924. What had been 18 months’ wages for an average worker became four months’.

From that High Ground, Ford integrated ferociously. Rubber plantations in Brazil. Iron mines and timberland in Michigan. A glass plant, a railroad, a steel mill, even soybean farms for plastic components. All of it flowed into the Rouge River complex, where raw materials entered one end and half of the finished cars on the world’s roads rolled out the other.

The result was that Ford’s sales went from 12,000 in 1909 to half a million in 1916 to over two million in 1923. At its peak, more than half the cars in the world were Fords.
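To put those sales figures on a common footing, here is a compound annual growth rate (CAGR) calculation. It is a minimal sketch using only the three data points in the text; it says nothing about the year-to-year path in between, and it treats “over two million” in 1923 as exactly 2,000,000, so the later rates are lower bounds. `cagr` and `ford_sales` are hypothetical names.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Ford unit sales from the text; 1923 is a lower bound ("over two million").
ford_sales = {1909: 12_000, 1916: 500_000, 1923: 2_000_000}

if __name__ == "__main__":
    years = sorted(ford_sales)
    for start, end in zip(years, years[1:]):
        rate = cagr(ford_sales[start], ford_sales[end], end - start)
        print(f"{start}-{end}: {rate:.0%} per year")
    overall = cagr(ford_sales[years[0]], ford_sales[years[-1]], years[-1] - years[0])
    print(f"{years[0]}-{years[-1]}: {overall:.0%} per year")
```

That works out to roughly 70% a year from 1909 to 1916 and roughly 22% a year from 1916 to 1923, or about 44% a year compounded across the whole stretch.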

Across the Industrial Revolution’s most successful entrepreneurs, there was a clear pattern that looks almost nothing like how you’d think about scaling a SaaS business: identify the Schwerpunkt in an existing industry, break it, seize High Ground, integrate outward, dominate.

Pause for a second. Think about how people are telling you to analyze businesses today. Would those AI-generated moat lists, or the equivalent for their time, have given you any advantage whatsoever in identifying Rockefeller, Carnegie, Swift, or Ford, let alone becoming one of them? It is never that easy, and it always takes work.

I want you to feel those examples, because what’s old is new again. The biggest companies in the world today are executing against the same framework, in ways that are specific to their industry.

SpaceX Goes Vertical

The funny thing about today’s biggest software companies is just how much they spend on hardware. This year, the world’s four largest companies that started as software companies plan to spend an estimated $600-700 billion on data center buildouts, equivalent to roughly 2% of US GDP, a level of infrastructure buildout comparable to laying America’s railroads in the 1850s.

Amazon, an online bookseller, will spend $200 billion. Google, the search engine giant, will spend $175-185 billion. Meta, the social network for college students, will spend $115-135 billion. And Microsoft, which makes operating systems and office applications, will spend $100-150 billion.

Except, of course, that’s not what those businesses are. They are technology conglomerates that used the early internet to break the Schwerpunkt in their respective industries, gain their respective High Grounds, and integrate outward so far that they’re all running into each other at this new frontier. And despite their best efforts and hundreds of billions of dollars spent on terrestrial data centers, Elon Musk still thinks we’re going to need to put them in space.

Before SpaceX, the constraint in the space industry was cost to orbit. SpaceX broke the constraint with reusable rockets, drove costs down an order of magnitude, and quite literally gained the High Ground. From there, it integrated outward into Starlink communications satellites, which it can launch more cheaply than competitors because it owns the rockets and which fund the development of even bigger Starship rockets, which bring the cost per kg to launch things into orbit down even further. SpaceX used vertical integration the same way Rockefeller did: it is simultaneously its own largest customer and its own cheapest supplier. Casey Handmer’s The SpaceX Starship is a very big deal is an excellent read on the topic.

In 2023, Elon Musk founded xAI to build maximally truth-seeking AI. He then merged it with X (née Twitter). xAI got a late start, and it doesn’t have the best models yet, but it is the best in the world at building data centers very fast. So the world took note when Elon said that we’d never be able to build enough data centers on earth to meet demand for AI, and that we will need to start building them in space.

So on February 2nd, 2026, SpaceX announced that “SpaceX has acquired xAI to form the most ambitious, vertically-integrated innovation engine on (and off) Earth, with AI, rockets, space-based internet, direct-to-mobile device communications and the world’s foremost real-time information and free speech platform.” According to Musk, SpaceX will get the data centers it needs in space via ~10,000 Starship launches per year, or roughly one per hour, every hour. It will also build a self-growing Moon city, from which it plans to build a mass driver in order to make a terawatt or more per year worth of AI satellites. That is far more energy than Rockefeller could have conceived of, en route to eventually colonizing Mars and fulfilling SpaceX’s mission to “extend consciousness and life as we know it to the stars.”

It remains to be seen whether the High Ground will also give SpaceX a decisive advantage in the AI race, but it certainly demonstrates that the stakes have grown since the Industrial Revolution, even as the strategy has remained the same.

But no matter how that plays out, SpaceX (and Google, Microsoft, Amazon, Meta, Apple, Tesla, NVIDIA, OpenAI, and Anthropic) aren’t going to eat everything, or else I wouldn’t be investing in startups.

Boulton and Watt did not capture the entire value of the Industrial Revolution they steam-powered, although they did vertically integrate: Boulton into the Soho Manufactory, the steam engine-based Gigafactory of its day, and Watt, via his son, into steamships. Nor did Rockefeller eat everything in the internal combustion era of the Revolution, despite owning the oil on which it all ran.

In addition to Rockefeller (oil), Boulton and Watt (steam engine), Carnegie (steel), Swift (meatpacking), and Ford (automobiles), the Industrial Revolution gushed multi-generational wealth for the Vanderbilts (railroads), Morgans (finance), Sears and Roebucks (retail), Havemeyers (sugar), McCormicks (agricultural equipment, sadly unrelated), Westinghouses (power), Otises (elevators), Pullmans (luxury rail cars), Bells and Vails (telecommunications), Pulitzers and Hearsts (publishing), Eastmans (photography), Kelloggs (processed food), Pillsburys (milling), Singers (sewing machines), Nobels (dynamite), DuPonts (chemicals), and Dukes (tobacco). This list is incomplete.

What’s notable is the diversity of industries that produced these fortunes. Machines made “labor” more abundant, and the companies that seized upon the technological innovation to break the Schwerpunkt in their specific industry, gain the High Ground, and expand were all wildly successful. Far from simply defending against mechanization, they seized the complementary assets to which value flowed as key inputs became abundant.

There are clear differences between AI, developed by huge labs and distributed at the speed of bits, and Industrial Era machine-filled factories, but I expect the Techno-Industrial Era to play out similarly. Each industry has unique constraints and resulting High Grounds, very few of which can be cracked and captured with digital intelligence alone.

The diversity that creates unique opportunities in each industry, however, makes underwriting those opportunities a different and more difficult beast than underwriting SaaS companies, which are more homogenous. There is no list, no spreadsheet, no agreed-upon metrics that will tell you which will become today’s Standard Oils. There is only the evaluation of constraints and hunt for High Grounds.

Instead of a list, then, let me give you my favorite example: Base Power Company.

Base Power Company doesn’t just make batteries. It buys cells (the commoditized piece of the value chain), manufactures battery packs, installs them on homes (starting in Texas), writes software to coordinate them, trades in the power market, and partners with utilities to help balance the grid. Base is built on the type of logic companies (and their investors) will need to exercise if they want to compete in the modern era, and it goes something like this.

We want to fix power. What’s the bottleneck? The grid. Companies are competing to build power generation and the electric machines that consume that power, and the better they do, the more strain there will be on the grid. The grid is the chokepoint. So how do you fix the grid? Laying new transmission and distribution is slow and expensive, and the grid we have is already structurally underutilized because it’s built to serve peak demand, so to smooth it out, you need batteries. Where should you put the batteries? Centralized battery farms are helpful, but they need to wait in interconnect queues, which makes them slower to turn on, and those batteries still need to distribute power to end users when demand peaks, which means they don’t fully solve the bottleneck. So you need to put the batteries right next to demand. Fill them up when the grid has capacity, and use them to smooth demand when demand is high. And if you want to put batteries next to demand (homes, to start), where is the best place in the country to do that? Texas, which operates its own deregulated grid (ERCOT), is volatile (which means potential for higher trading profits and greater need on the part of customers and utilities), and is regulatorily friendly. So you start by putting batteries on the homes of early adopters within Texas. Those slots are scarce: it would take a lot for a customer to rip and replace their batteries, and no one is installing two companies’ batteries. Then, connect them with software, improve the grid and each customer’s experience with more batteries on the network, and use the richest source of demand available in the country to begin to scale. Bring manufacturing in-house, continue to improve the batteries, decrease their costs, get more efficient at installing them, connect more of them, sign more early-adopter utilities, and get more scale. At that point, it’s hard to imagine a viable way to beat Base at its own game. Then, expand. Integrate upstream into grid hardware and generation and downstream into electronic devices to sell to customers with whom you’ve built trust. Expand geographically, leveraging scale, experience, and software to offer a better product than a potential competitor attempting to grab a foothold by starting in the next-best market. Keep expanding. Dominate. Expand some more.

There are a couple of things I want you to take away from that paragraph.

First, it is a very long paragraph. This is not simple or easy. I think investors bemoaning the Death of SaaS are in part sad that the era of underwriting software businesses on known, straightforward metrics is over. Underwriting the biggest companies of this generation will be a much more bespoke process. The time has come to move from simple analysis to strategy. It is not a coincidence that my first Deep Dive on Base was structured as a walk through the evolution of the strategy memos that Zach and Justin wrote before touching a single atom.

Second, as technology improves – from AI to the Electric Stack – the vast majority of the returns will accrue to the companies that figure out the right place to attack and execute violently against their conviction. A simple way to think about this is that better software is more valuable to Base than it is to a smaller competitor, to a battery farm operator, or to a power generation company, as is better hardware. Better robots for manufacturing and logistics would make Base faster and more profitable, and making the game more CapEx-intensive would give it an advantage over would-be competitors.

The lesson from Base is not that hardware is a moat, or that you should put your product next to Texans’ homes.

It’s that you need to deeply understand the problem you’re trying to solve, the constraint that’s bottlenecking it, how you’re going to unblock it with technology (and why now?), and how you might expand to capture the market once you do. It applies differently in every industry.

For airlines, the constraint is the engine: today’s turbofan engines carry planes as fast and efficiently as they can. Everything bad in air travel is downstream of that. So Astro Mechanica is building a new engine that is both faster and efficient at every speed. But certifying a commercial airliner is a long and expensive process, so Astro plans to sell into Defense first, then build private supersonic planes (which are cheaper to certify and can be cost-competitive with first-class tickets immediately), then build larger supersonic planes that are cost-competitive with commercial air travel, and use the advantage in speed and cost to build its own full-stack airline, from booking to flight.

For internet, the constraint is the architecture: incumbent telcos froze their architectures around early-2000s assumptions about what was expensive, locked themselves into passive optical networks and vendor dependence, and now spend billions every few years on upgrades that still deliver shared, degraded bandwidth with no redundancy. They do zero R&D. So Somos Internet is rebuilding the full stack from scratch: an Active Ethernet architecture borrowed from data centers, physically simple with complexity pushed to software, that delivers dedicated bandwidth to every home at a fraction of the CapEx. As it grows, Somos eats more of its supply chain: “It’s been this never-ending game of doing something janky, getting credibility, doing crazier stuff, getting more resources, getting smarter people so that we can fix the things that were messed up in the janky past iteration,” Forrest explained. “Then gaining credibility to get more resources to get cooler people to do crazier stuff. It’s like this self-sustaining fission process.” Somos is expanding geographically, into new markets; vertically, by making its own hardware and laying its own fiber; and horizontally, by building hydro-powered data centers. From the position of delivering one of the few home utilities everyone pays for, better, faster, and cheaper than incumbents, it plans to expand what it offers the customers with whom it’s built trust and loyalty. Maybe one day, it will offer batteries and power. Maybe one day, it will use its growing cash position to enter the United States.

There are a lot of similarities between Base and Somos: both own a core home utility and deliver it better than incumbents, which earns them the right to expand. But there are differences, too. Base is starting in the very best market for its technology, because that’s where the need is greatest and the regulatory environment is friendliest. If Somos started somewhere like New York City, it would be caught up in red tape and slow, expensive telco lawsuits for years; so it’s starting in a high-need, regulatorily friendly environment and building up cash for bigger battles. And Astro’s approach is almost entirely different from both Base’s and Somos’, apart from using better technology, now feasible thanks to Curve Convergence, to break a constraint and capture the High Ground. For one thing, people go to planes, so Astro can’t capture their real estate in the same way that Base or Somos can.

There I go with the long paragraphs again. Fine. There is endless nuance to this.

I am talking my own book here, not because I think my portfolio companies are the only businesses that will succeed in the Age of Intelligence, but because I understand their strategies much more thoroughly. Very smart people will disagree with me on each industry’s Schwerpunkts and potential High Grounds. And even once you’ve done all this work on paper, so much comes down to execution. Will the team that identified the right strategy be the same one that can build against it to capture the opportunity? Only time will tell. That’s what makes this so much fun: it’s not obvious!

What is obvious, and I hope clear at this point, is that there is no one answer, no handy guide that will tell you how to win in the Age of Intelligence. Which means that there is also not one business model.

While the “Death of SaaS” is overblown, what I hope this freak-out does is end the default investor assumption that every business should try to be a SaaS business.