2026-01-16 04:56:00

Today, NBC Sports owns the rights to many of sports’ “crown jewels,” including the Super Bowl, the NBA, the Premier League, Notre Dame Football, and of course, the Olympics. Yet, it hasn’t always been this way.

In the early 1990s, NBC Sports was in trouble. After losing Major League Baseball, the network faced a 32-week hole in its programming schedule, an eternity in sports television. With no obvious answers and little margin for error, NBC turned to Dick Ebersol, the co-creator of Saturday Night Live, to stop the bleeding.

Ebersol implemented widespread layoffs and turned to a young producer named Jon Miller. His marching orders were simple but stark: fill the schedule, and do it on a shoestring budget.

What happened next didn’t just save NBC Sports. It quietly reshaped sports television for decades to come.

How?

By turning severe constraints into unexpected opportunities.

Given NBC’s state of peril, the moment was ripe for experimentation. In Miller’s first few months on the job, he threw anything he could on the air. This included events like the NFL Quarterback Skills Challenge, beach volleyball, and the National Heads-Up Poker Championship.

Some worked, others didn’t, but a significant revelation occurred. Being starved of money and resources forced Miller to be relentlessly creative. Most notably, things Miller learned from one experiment led to innovation in others.

Look no further than the inaugural Quarterback Skills Challenge. In passing, Miller asked John Elway and Dan Marino whether they would be interested in playing in a celebrity golf tournament during the offseason.

The reason?

Miller was looking to fill NBC’s massive hole around the 4th of July. Knowing that NFL quarterbacks were the biggest draw in sports and often pretty good golfers, he thought there might be something there.

After securing two of the NFL’s biggest stars, along with former standouts Joe Namath, Joe Theismann, and Jim McMahon, Miller launched the American Century Celebrity Golf Tournament at Lake Tahoe, an event that would become a runaway success for more than three decades.

Witnessing Tahoe’s popularity, Miller saw room for more unique events. Sticking with golf, he turned to an international match play golf tournament that hadn’t generated much in the way of television ratings — the Ryder Cup.

In 1991, Miller secured the rights to the tournament at Kiawah’s Ocean Course and capitalized on a simmering feud between American Paul Azinger and Spain’s Seve Ballesteros. Combined with the surge of patriotic fervor following the Persian Gulf War, the stage was set for an epic showdown that would forever be remembered as the “War by the Shore.” In the process, Miller transformed a once-overlooked event into golf’s most anticipated tournament, held every other year.

This success would spawn several more iconic events, including the National Dog Show (a Thanksgiving Day staple since 2002) and the NHL’s Winter Classic, now the highlight of hockey’s regular season.

So, what explains Miller’s actions?

Psychologists have a name for it — cognitive resourcefulness.

In short, when resources are scarce, humans often bypass conventional thinking and take unfamiliar paths to solve problems. In this case, cognitive resourcefulness caused Miller to reimagine what sports television could be. Without dollars to spend, he truly had to think. To solve. To create.

We see this in all walks of life.

Seinfeld, arguably the most successful television show of all time, was primarily filmed on a tiny set meant to resemble an 800-square-foot apartment on the Upper West Side of Manhattan. In reality, the set was less than half that size.

So how did the cast operate within such a tiny space for nine seasons?

As Julia Louis-Dreyfus (aka Elaine) explains, they had to be creative:

“We were always challenged by the size of Jerry’s apartment. It was just so small. What are you supposed to do? You can’t just walk in and sit on the couch every time. This is why I used to do things like go to the refrigerator and just look for things. We were limited, so we had to constantly be creative.”

I witnessed something similar this past summer on our family vacation to the beach. Our boys and their cousins had only a few inflatable toys, two fold-out chairs, a couple of balls, and a rickety goal in the pool. Yet, within minutes, they had invented a game: two points for jumping over a foam noodle and throwing a ball in the goal, five for making it through an inner tube, ten for sinking one into a small basket. First to twenty wins. Limited resources sparked limitless ideas.

Whether it’s an NBC executive, actors in a sitcom, or a group of high-energy kids, if you give someone limited resources and a clear challenge, odds are they will find a way to solve it. Most importantly, they will be infinitely more innovative than if they had unlimited means.

Just look at our economy.

Is it a coincidence that some of our most iconic companies were born during economic downturns?

I doubt it.

As access to capital during the 2008–2011 financial crisis dried up, entrepreneurs were forced to improvise. Airbnb turned spare rooms into a global hospitality network, while Uber built a transportation empire using existing car owners instead of buying a brand-new fleet. Meanwhile, Venmo and Square set out to solve everyday problems with lean, tech-driven models.

How about in the depths of the dot-com bust?

Would you believe me if I told you Palantir and Tesla were both founded in 2003?

The same is true in investing.

Personally, I cringe when I hear that individual investors are “disadvantaged” because they don’t have access to things like private equity and that the only answer is to provide them with access through the “democratization of finance.”

Why?

Because the answer to a lack of resources is not to follow others. Rather, the answer is to be different. To be creative. To think outside the box.

This is why I shake my head when people recommend that average investors follow Yale University’s David Swensen or Berkshire Hathaway’s Warren Buffett. While Swensen and Buffett had large balance sheets, supremely talented teams, and a global network that was second to none, you likely have none of these.

However, a lack of resources means you have something they don’t — a license to be creative. It means you have the chance to play your own game, do things differently, and adhere to a game plan unique to you.

So, when should you think about putting a plan in place?

Now is as good a time as any.

Fifteen years into a bull market with deregulation underway, interest rates being cut, credit widely available, corporate earnings continuing to surpass expectations, and investments in artificial intelligence booming, things feel pretty darn good.

The problem, though, is something Hyman Minsky was fond of saying:

“Stability breeds instability. The more stable things become, and the longer things are stable, the more unstable they will be when the crisis hits.”

So, what should an investor do?

Go to cash? Run for the hills?

Not exactly.

Rather, it begins with focusing on what you can control. This means positioning yourself and your portfolio to be ready for the next crisis, whenever one hits, so that you can see it as an opportunity rather than a threat.

An opportunity because crises create scarcity and pressing problems that demand solutions.

An opportunity because crises are what spawn companies and innovators who rise to solve them.

An opportunity because you’ll likely be in a position to supply the capital that empowers these folks to succeed (and at much more attractive entry points than we are seeing today…).

For me, this has meant taking a few chips off the table recently and rotating capital from some richly valued parts of the market into less-trafficked areas (e.g., REITs, biotech, and select parts of international markets).

More importantly, though, I have dusted off Dan Rasmussen’s “crisis investing” framework and started thinking about what I will do when a crisis hits. This has meant determining which companies, funds, or parts of the market I will want to invest in and what will trigger those investments (e.g., a specific percentage sell-off, credit spread limits, or valuation triggers). It has also meant making sure I am completely comfortable with what I currently own, so that when things do crack, I will be able to hold it through to the other side.

The fact is, I have no clue when the next crisis will occur. No one does. Yet, it is hard to deny that we are closer to one than we were one, three, five, and certainly ten years ago. This is especially true given that the S&P 500 has compounded at 20% a year over the past six years and 14% a year over the past fifteen (the NASDAQ has been even stronger, generating annual returns of 27% over the past six years and 18.5% over the past fifteen).

The reality is that when one of these moments does come, things will feel uncertain, even dangerous. Future prospects will look grim. As a result, most investors will turn bearish, retreat, and de-risk. However, that’s when the instinct should be to do the opposite. That’s when you should turn to the playbook you built when things felt more stable.

These are the moments when innovators and visionaries like Jon Miller, Brian Chesky, and Elon Musk don’t just survive; they reinvent the game. And when they do, that’s when you need to invest.

2026-01-07 04:09:00

Things I’ve been thinking about lately …

I broke my back skiing when I was a teenager. It’s still screwed up and I occasionally tweak it, leaving me in agony for a few days. When I’m in pain I’ve noticed: I’m irritable, short-tempered, and impatient. I try hard to not be, but pain can override the best intentions.

One lesson I’ve tried to learn: whenever I see someone being a jerk, my first reaction is to think, “What an asshole.” My second reaction is: maybe his back hurts.

It’s not an excuse, but a reminder that all behavior makes sense with enough information. You can always see people’s actions, but rarely (if ever) what’s happening in their head.

Here’s a related point: Most harm done to others is unintentional. I think the vast majority of people are good and well-meaning, but in a competitive and stressful world it’s easy to ignore how your actions affect others.

Roy Baumeister writes in his book Evil:

Evil usually enters the world unrecognized by the people who open the door and let it in. Most people who perpetrate evil do not see what they are doing as evil. Evil exists primarily in the eye of the beholder, especially in the eye of the victim.

One consequence of this is that it’s easy to underestimate bad things happening in the world. If I ask myself, “How many people want to cause harm?” I’d answer “very few.” If I ask, “How many people can do mental gymnastics to convince themselves that their actions are either not harmful or justified?” I’d answer … almost everybody.

An iron rule of math is that 50% of the population has to be below the median. It’s true for income, intelligence, health, wealth, everything. And it’s a brutal reality in a world where social media stuffs the top 1% of moments of the top 1% of people in your face.

You can raise the quality of life for those below average, or set a floor on how low they can go. But when a majority of people expect a top 5% outcome the result is guaranteed mass disappointment.

I think the majority of society’s problems are downstream of housing affordability. The median age of first-time homebuyers went from 29 in 1981 to 40 today. But the shock this causes goes far deeper than housing. When young people are shut out of the life-defining step of having their own place, they’re less likely to get married, less likely to have kids, more likely to have worse mental health, and (my theory) more likely to hold extreme political views, because when you don’t feel financially invested in your community you’re less likely to care about the consequences of bad policy.

Every economic issue is complex, but this one seems pretty straightforward: we should build more homes. Millions of them, as fast as we can. It’s the biggest opportunity to make the biggest positive impact on society.

I heard someone say recently that the reason so many people are skeptical AI will improve society, or are terrified it will do the opposite, is that it’s not clear the internet (and phones) made their lives better.

That’s a subjective point, but it got me thinking. Imagine asking people 25 years after each of these was invented whether life was better or worse because of it: electricity, radio, the airplane, refrigeration, air conditioning, antibiotics.

I think nearly everyone would say “better.” It wouldn’t even be a question.

The internet is unique in the history of technology because there’s a list of things it improved (communication, access to information) but another list of things it likely made worse for almost everybody (political polarization, dopamine addiction from social media, less in-person interaction, shorter attention spans, the spread of misinformation).

There aren’t many examples throughout history of technology so universal with so many obvious downsides relative to what existed before it. But the wounds are so fresh that it’s not surprising many look at AI with the same fear.

This is more hope than prediction, but I wouldn’t be surprised if in 20 years we look back at this era of political nastiness as a generational bottom we grew out of.

There’s a long history of Americans cycling through how they feel about government and how politicians treat each other.

The 1930s were unbelievably vicious. There was an alleged plot to overthrow Franklin Roosevelt and replace him with a retired Marine general named Smedley Butler, who would effectively become dictator. The Great Depression made Americans lose so much faith in government that the prevailing view was, “hey, might as well give this a shot.”

It would have sounded preposterous if someone had told you in the 1930s that by the late 1950s more than 70% of Americans would say they trusted the government to do what is right just about always or most of the time. But that’s what happened.

And it would have sounded preposterous in the 1950s if you told Americans that within 20 years trust would collapse amid the Vietnam War and Watergate.

It would have sounded preposterous if you told Americans in the 1970s that within 20 years trust and faith in government would surge amid 1990s prosperity and balanced budgets.

And equally absurd if you told Americans in the 1990s that we’d be where we are today.

Cycles are so hard to predict because it’s easier to forecast in straight lines. What’s almost impossible to detect in real time is that the same forces fueling public opinion plant the seeds of their own demise. When times are good, people get complacent and stop caring about good governance. When times are bad, they get fed up and say, “Enough of this.” And I think we’re not far from that today.

I have a theory about nostalgia: It happens because the best survival strategy in an uncertain world is to overworry. When you look back, you forget about all the things you worried about that never came true. So life appears better in the past because in hindsight there wasn’t as much to worry about as you were actually worrying about at the time.

2025-12-17 06:44:00

People often ask me where I get ideas for this Substack. The simple answer is everywhere, even a Christmas tree lot on a cold night in December.

When my brother and I were kids, we would make the annual trip with our dad to pick out the family Christmas tree. But once we left for college, he had to make the selection without us. That is, until recently, when we decided the old man needed some help.

Now, one might conclude that we did so because at 78 and a shade under 5’4”, wrestling a nine-foot tree off the car roof had become a nearly impossible task. While true, our rationale ran deeper: we thought he needed “air cover.”

Air cover?

Yes, air cover.

In short, we needed to witness his selection process so that we could defend him.

See, in what had become a Christmas ritual in the Lamade house, every year for as long as I can remember, my dad would go out, buy a tree, put it up on the stand, and my mom would immediately spot the flaws. Hole on one side. Dead patch in the middle. Leaning hard to the left. An obvious flat top. You name it, we’ve had it.

For years, my brother and I would go into full-blown “PR mode” after the tree went up, saying things like:

“The tree will look nice once it drops.”

“Dad did his best.”

“It’s Christmas — give him a break.”

But candidly, we kept asking ourselves the same question: how does this keep happening?!?!

I mean, my mom wasn’t wrong. These trees nearly always had something wrong with them.

That is, until my brother and I finally saw him in action on the lot last year. Like the Hardy Boys, we solved our childhood mystery almost instantaneously.

When we arrived at the lot, the two of us prepared to fan out to look for a tree. The goal was simple – find a nine-footer with no dead branches, not too big…not too small, and certainly no flat tops.

Then it happened. Something the two of us had not prepared for. It was akin to the moment as a kid when you find out the whole secret behind Santa.

So, what was it?

Practically the minute we walked onto the lot, my dad pointed to the first nine-footer he saw and said, “We’ll take that one.” Huh? Was this a joke??

Then it all made sense. This is how he had managed to bring home an imperfect tree year after year. He didn’t search. He didn’t compare. He didn’t think about how a tree would look after it “dropped.” He simply saw one that looked good enough and bought it. Witnessing what had just happened, the two of us turned to him and said,

“What are you doing? You cannot be serious!?!?”

He then looked at us like we were the crazy ones and said,

“What do you mean ‘am I serious’? Of course I am serious. Your mother will find something wrong with any tree I bring home, so why spend an hour in the freezing cold looking for one?”

Here’s the thing. He wasn’t wrong. But there was more to it.

My dad has always been drawn to the oddball. The misfit. The underdog. The one with “character.” Deep down, I think he liked “rescuing” the ugly tree from the lot. I even think he got a kick out of imagining the moment Mom would see it and deliver the annual critique. The whole thing amused him.

And truthfully, we have laughed about our trees every year. The one with the massive hole. Ole Flat Top. The one that looked like it had fallen over six times. Best yet, after critiquing them initially, almost every year my mom eventually comes around to liking these trees.

Which leads me to the real point.

People think Christmas is about perfection – the perfect house, the perfect photo, the perfect gifts, the perfect everything. But the moments we actually remember aren’t the flawless ones.

We remember the misfit tree.

We remember freezing with our dad in the Christmas tree lot, the uncle who had a few too many adult beverages, and the overly dry turkey.

We also remember the cousin who was allergic to the family dog and almost fell asleep at dinner after taking too much Benadryl (me) and the older brother who shot his younger brother in the rear end from three feet away with a paintball gun he got for Christmas (also me… the shooter, that is).

Yet, somewhere along the way, we lost sight of this. Perfection, or the perception of it, has become the goal. We curate our lives on YouTube through highlight reels and post our airbrushed vacation photos on Instagram. It’s why people are increasingly using artificial intelligence to “perfect” nearly everything they do. And yes, it’s why people are increasingly buying artificial Christmas trees… because they are easier to set up, cleaner to maintain, and “perfect” in so many ways.

But here’s the question:

By attempting to remove all the imperfections in life, are we removing the stories? The moments that make us laugh? The mistakes and imperfections that make us uniquely human?

I don’t know. Maybe I sound like the Grinch. Maybe I am overly nostalgic for the past. Maybe I just need to accept that this is what progress looks like. Or maybe, just maybe, there is something special about the tree with the flat top. The one that leans a little. The one with “character.” The one you talk about for weeks, if not years, later. The one that reminds you that since perfection is impossible, you might be better off simply embracing life’s imperfections.

UPDATE:

After I finished writing this, I had a work conference in Georgia. As a result, I unfortunately had to miss the annual tree decorating night at my parents’ house, which is a highlight of the Christmas season for all of us, especially the grandkids.

This year, since it was on the same day as my flight, I called my wife when I landed to see how it was going. Her response?

“Ted, you won’t believe it. The tree fell over.”

My reaction?

Now that’s perfect, in every sense of the word.

2025-12-01 05:44:00

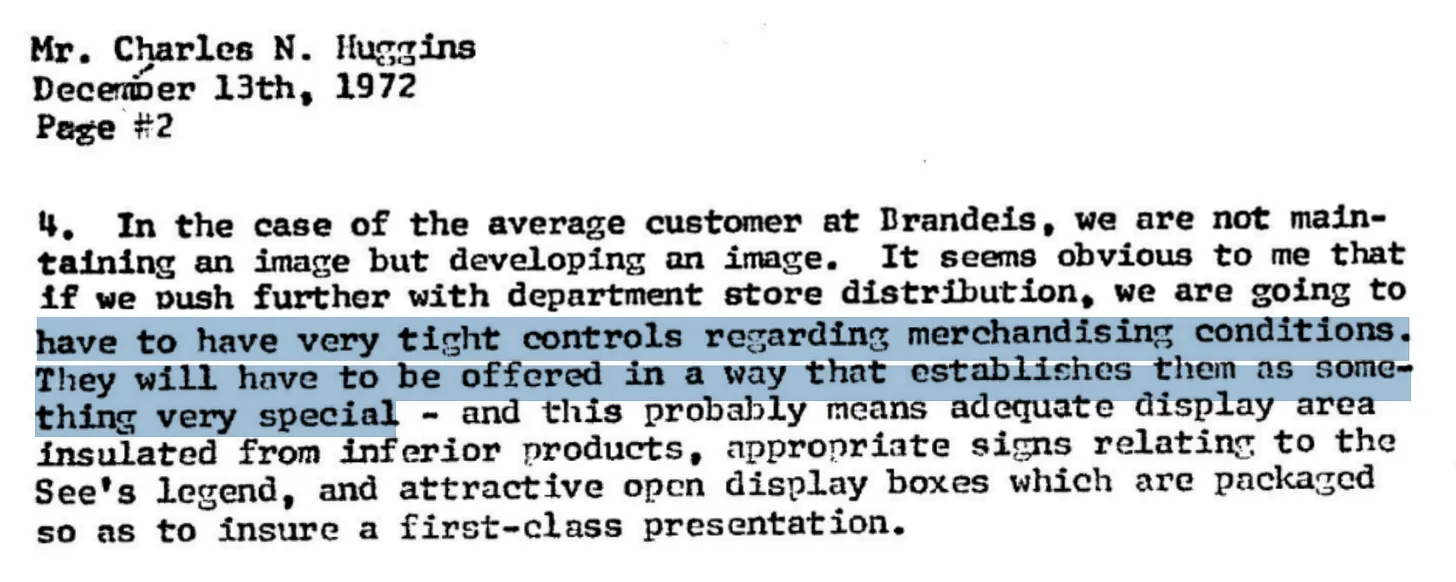

A few weeks ago, I came across a letter Warren Buffett wrote in 1972 to Chuck Huggins, then president of See’s Candy. It’s two pages long, typed on letterhead, and quietly brilliant.

Reading it now, more than fifty years later, it feels less like an internal memo and more like a manifesto about what makes a brand endure.

“People are going to be affected not only by how our candy tastes,” Buffett wrote, “but by what they hear about it from others as well as the retailing environment in which it appears.”

In those lines, you can see how Buffett was already thinking far beyond unit economics or margins. He understood that the true value of See’s wasn’t measured by its ingredients or its quarterly earnings. It was in the feeling the brand produced: the trust, nostalgia, and quiet pride that came with handing someone a white and gold box tied in ribbon.

He was describing brand equity before that term was widely used.

Buffett often talks about moats: the durable competitive advantages that protect a company over decades. In See’s Candy, he found one that was remarkably intangible: customer love.

It’s easy to think of Buffett as the world’s most disciplined value investor. But letters like this reveal something deeper. He has always been a brand investor, someone who recognizes that the strongest companies don’t just sell products; they sell belief. They create environments and stories that compound over time.

“If we push further with department store distribution,” he cautioned, “we are going to have to have very tight controls regarding merchandising conditions. They will have to be offered in a way that establishes them as something very special.”

He knew that brand perception couldn’t be outsourced. Every display, every piece of packaging, every interaction mattered. That resonates deeply with how we try to invest at Collaborative Fund. The moat we care most about is emotional, not transactional.

We often say that great brands “scratch an itch” — they solve a deep, recurring need that people actually feel. In 2013, I wrote a short post by that name, arguing that the best companies are the ones that clearly answer two questions:

Buffett, a decade before many modern brand marketers were born, intuited this perfectly. He knew that See’s scratched a very specific societal itch — not hunger, but something closer to affection: the ritual of gifting, the small gesture that says I thought of you.

That’s the kind of intangible value that compounds quietly for decades. You can’t model it in Excel, and you won’t find it in a discounted cash flow. But you can feel it in the way people talk about the brand, and the way they keep returning to it year after year.

Buffett has always described his preferred business as one that “can raise prices without losing customers.” What he really means is one that has earned the right to be loved and trusted.

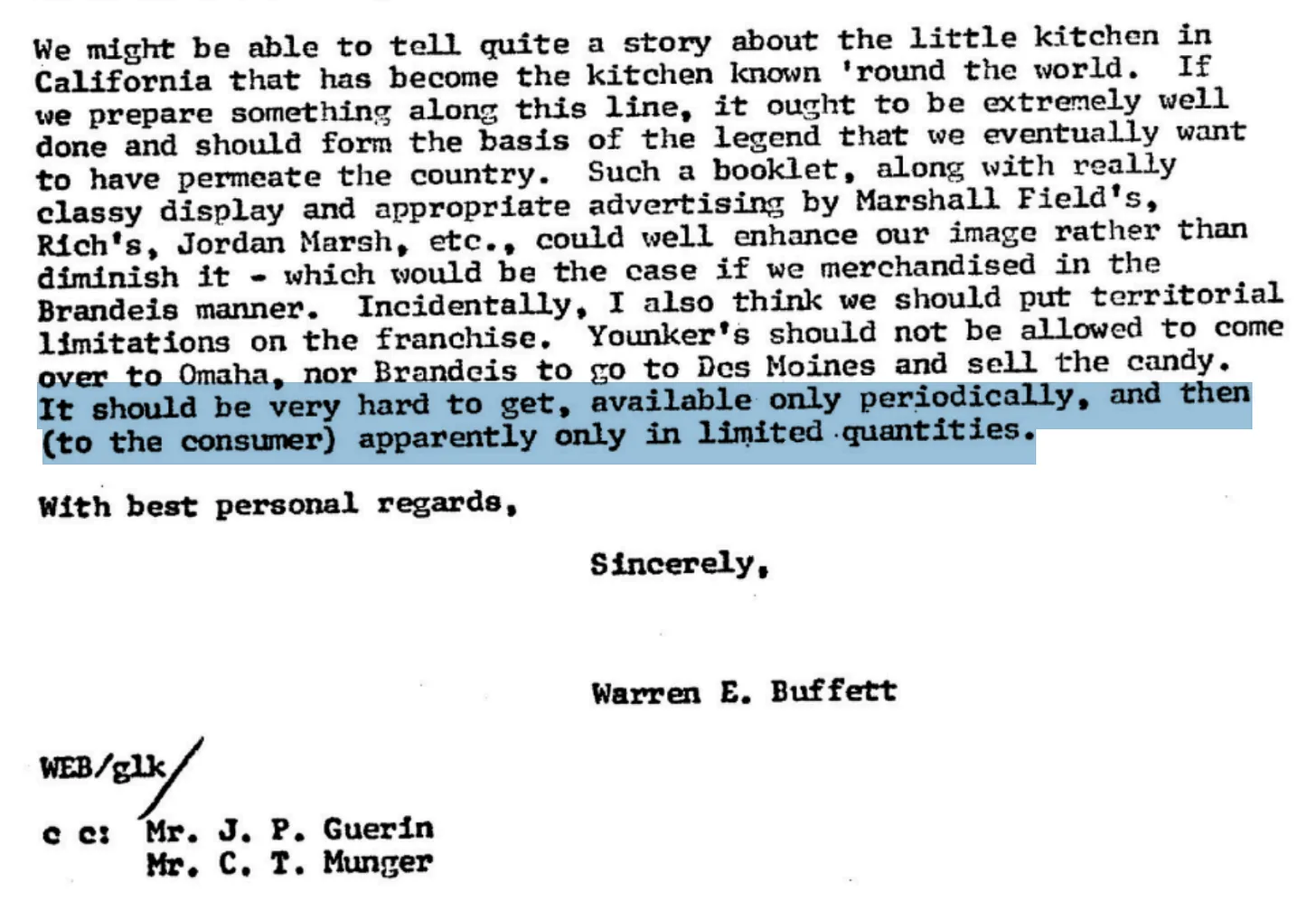

See’s Candy could do that because it felt rare, careful, and human. Everything around it reinforced that perception. Buffett even wrote that it “should be very hard to get, available only periodically, and then apparently only in limited quantities.”

He was thinking like a cultural anthropologist, not just an investor. He understood the psychology of desire.

That’s what we look for at Collab — companies with purpose and empathy baked into their DNA, where the brand’s story and product experience are inseparable. Companies that, like See’s, pass what we call the Villain Test: if they disappeared tomorrow, who would be genuinely sad?

Buffett bought See’s for $25 million. Since then, it has produced billions in profit for Berkshire Hathaway and an immeasurable amount of goodwill for customers who still associate those signature boxes with care, quality, and connection.

Half a century later, the letter reads like an early draft of the Collaborative investment philosophy. He wasn’t talking about candy at all, really. He was talking about trust, consistency, delight — all those overlooked forces that turn a product into a brand and a brand into a legend.

That’s still the itch we’re trying to scratch.

2025-11-05 01:44:00

Like millions of others, I’ve been watching and playing with OpenAI’s new video model, Sora—and I keep thinking about how often technology outpaces permission. One week it’s coffee apps; the next, it’s cinematic worlds spun from text. Each innovation starts as convenience and ends up rewriting the rules.

Starbucks didn’t mean to become a financial institution. But every era of innovation finds its own way to test the rules.

The world runs on rules. But rules run on paperwork, committees, and calendars. Technology, on the other hand, runs on curiosity and caffeine.

That mismatch—between how fast people can build and how slowly institutions can respond—has become one of the defining forces of modern life. It’s not that people are breaking the law; it’s that the law no longer describes what people are doing.

Starbucks is a coffee company. But in financial terms, it’s also one of the largest banks in America. Customers collectively hold nearly $2 billion in prepaid balances on Starbucks cards and apps, more than the total deposits held by 85 percent of U.S. banks.

The twist is that customers didn’t entirely choose this setup. When you pay with the Starbucks app, you can’t simply use your credit card in the moment. You have to preload money into an account. That single design choice—subtle, convenient, and completely intentional—turned every latte purchase into a micro-deposit. Starbucks quietly became a financial institution without ever applying for a banking license.

This is how change usually happens in technology: not through rebellion, but through design. What begins as a product feature becomes a regulatory blind spot, and over time, an accepted norm.

If Starbucks represents the subtle version of this phenomenon, Uber is its loud, brash archetype.

Uber wrote the modern playbook for testing the limits of regulation. Launch first, operate in the gray, and let adoption create legitimacy. By the time regulators react, public opinion—and billions in rider demand—make reversal almost impossible.

Uber’s true innovation wasn’t just the app itself. It was the recognition that once technology hits a certain scale, the question shifts from “Is this allowed?” to “How do we live with it?” That approach—move fast, find the edges, let the system adapt—has become the default operating system of the modern tech economy. Starbucks, in its own quiet way, followed it. So have crypto networks, prediction markets, and now, artificial intelligence companies.

OpenAI’s new video model, Sora, can generate cinematic footage from a text prompt. It’s astonishing. But behind that wonder lies a familiar discomfort: whose work did it learn from?

The model didn’t invent its sense of movement, light, and storytelling in isolation. It absorbed it—from films, animation, design, and photography created by millions of people who were never asked, credited, or compensated. The company insists this is legal, and perhaps under current law it is. But legality and legitimacy are not the same thing.

Copyright law wasn’t built for an era when a machine could absorb the collective visual memory of humanity. It was written for a world where “copying” meant pressing paper or burning film. The law still speaks the language of ownership. The technology now speaks the language of ingestion.

That gap—between what’s legal and what’s right—is where power quietly accumulates.

For most of history, invention was the bottleneck. Today, interpretation is. Regulators deliberate in years; developers iterate in days. By the time the rules catch up, the loophole has become the market.

We still talk about regulation as if it defines the edges of what’s possible. Increasingly, it just describes what people already decided to do. But acceptance and fairness are not the same thing. Just because something scales doesn’t mean it should.

As formal oversight struggles to keep pace, informal checks have stepped in: reputation, public trust, user backlash. They’re faster than legislation, but also more fragile.

The world needs builders bold enough to test limits, but principled enough to remember why those limits existed in the first place. Progress that erases the people it depends on isn’t progress—it’s extraction.

Every major leap forward begins in a gray area. Railroads, credit cards, the internet—all bent old rules until society decided whether to rewrite them or live with the consequences. Artificial intelligence is just the latest in that lineage.

The challenge isn’t stopping innovation; it’s ensuring that the people writing the future still feel accountable to the ones living in it. The future doesn’t always ask for permission. But maybe it should still ask for consent.

2025-10-08 23:25:00

After winning a Golden Globe in 2006, Philip Seymour Hoffman was asked how he had arrived at that moment in his life.

Nearly two decades later, his response is more relevant than ever.

“Even if you are auditioning for something you know you don’t like or are never going to get, whenever you get a chance to act in a room that someone else has paid for, it is a free chance to practice your craft. And in that moment, you should act as well as you can because if you leave that room and you have done this, there is no way the people who watched you will forget it. That is the only advice I have because it is always about that — if you are given the chance to act, take those words and bring them alive; and if you do that, something will ultimately transpire.”

This mindset explains how within two years, Hoffman played Sandy Lyle in Along Came Polly and Truman Capote in Capote, winning an Oscar for the latter.

Retired U.S. Navy Admiral William McRaven echoed a similar sentiment in his book, The Wisdom of the Bullfrog, writing,

“I found in my career that if you take pride in the little jobs, people will think you worthy of the bigger jobs.”

He illustrated this point with a story from early in his career when, rather than being assigned to lead a mission, he was tasked with building a float that would represent the Navy SEALs (often referred to as “frogmen”) in the Fourth of July parade.

After receiving the assignment, McRaven was admittedly dejected. In his mind, he had joined the Navy SEALs to lead missions, not build parade floats. But a seasoned team member offered him a quiet piece of advice, saying:

“Sooner or later we all have to do things we do not want to. But if you are going to do it, do it right. Build the best damn Frog Float you can.”

McRaven took the message to heart, pouring himself into the task, and the float went on to win first prize in its category.

Over time, McRaven would take on far more consequential responsibilities, including commanding the Joint Special Operations Command (JSOC) and later the U.S. Special Operations Command (SOCOM), which oversees all U.S. special operations forces, including the Navy SEALs, Army Green Berets, and Air Force Pararescue.

Unfortunately, I didn't appreciate this lesson enough early in my career. For example, after graduating from business school, I thought I could come right in and impart my newfound wisdom, when I should have been a better listener and executed the mundane tasks with as much vigor as the more interesting ones.

Fortunately, I don’t think I am alone. If I had to guess, anyone reading this can point to a similar moment in their career.

The thing is, this happens to all of us. Even the very best.

Look no further than Tom Brady, who has admitted he was consumed by this mentality early in his career at Michigan.

After his sophomore season, Brady was buried on the depth chart, only getting one or two reps per practice. As a result, he expressed his frustration to the coaches, to which head coach Lloyd Carr responded,

“Brady, I want you to stop worrying about what all the other players on our team are doing. All you do is worry about what the starter is doing, what the second guy is doing, what everyone else is doing. You don’t worry about what you are doing. You came here to be the best. If you’re going to be the best, you have to beat out the best.”

Carr then suggested Brady meet with Greg Harden, a counselor in the athletic department.

Once again, Brady vented his frustration — complaining about getting limited reps.

Harden’s advice was simple:

“Just focus on doing the best you can with those two reps. Make them as perfect as you possibly can. Then focus on the next two, and the next two, and the next two.”

How did Brady respond?

In his words,

“So, that’s what I did. They would put me in for those two reps, and man, I would sprint out there like it was the Super Bowl. I’d shout, ‘Let’s go boys! Here we go! What play we got?!?’ And I started to do really well with those two reps because I brought enthusiasm and energy. Soon, I was getting four reps. Then ten, and before you knew it, with this new mindset that Greg had instilled in me — to focus on what you can control, to focus on what you’re getting, not what anyone else is getting. To treat every rep like it’s the Super Bowl — eventually, I became the starter.”

We all know how Brady’s story played out from here, and it all started with two reps.

Last spring, my then eight-year-old son was frustrated about how little he was getting to play, so I read him this story about Brady. After sitting in silence for a few seconds, he looked up at me and said, "Tom Brady barely played too??"

To which I responded, "Yeah bud, like you. You just gotta make the best of the chances you're given."

After leaving his room that night, I thought to myself — I would give just about anything to be able to go back in time and tell my younger self this.

Would I have listened?

I hope so, but if this is a hard concept for adults to grasp, I imagine it’s even more difficult for kids.

Nonetheless, I wish I had appreciated this lesson earlier. The truth is, someone is almost always watching, so it's worth treating everything you do with purpose and pride. And even if no one has their eyes on you, it is still a chance to "practice your craft." To improve. To build strong habits.

Since time is limited, we only get so many opportunities.

We might as well take advantage of each one we get.