2026-03-07 08:57:10

The next presidential election represents a significant opportunity for advocates of AI safety to influence government policy. Depending on the timeline for the development of artificial general intelligence (AGI), it may also be one of the last U.S. elections capable of meaningfully shaping the long-term trajectory of AI governance.

Given his longstanding commitment to AI safety and support for institutions working to mitigate existential risks, I advocate for Dustin Moskovitz to run for president. I expect that a Moskovitz presidency would substantially increase the likelihood that U.S. AI policy prioritizes safety. Even if such a campaign were unlikely to win outright, supporting it would still be justified, as it would bring AI Safety into the national spotlight, influence policy discussions, and facilitate the creation of a pro-AI-Safety political network.

Governments are needed to promote AI safety because the dynamics of AI development make voluntary caution difficult, and because AI carries unprecedented risk and transformative potential. Furthermore, the US government can make a huge difference for a relatively insignificant slice of its budget.

There’s potentially a massive first-mover advantage in AI. The first group to develop transformative AI could theoretically secure overwhelming economic power by using that AI to kick off a chain of recursive self-improvement, in which first human AI researchers, and then AI systems themselves, gain dramatic productivity boosts from using AI. Even without recursive improvement, however, being a first mover in transformative AI could still have dramatic benefits.

Incentives are distorted accordingly. Major labs are pressured to move fast and cut corners, or risk being outpaced. Slowing down for safety often feels like unilateral disarmament. Even well-intentioned actors are trapped in a race-to-the-bottom dynamic: all your efforts to ensure your model is safe matter little if an AI system developed by another, less scrupulous company becomes more advanced than your safer models. Anthropic puts it best when they write "Our hypothesis is that being at the frontier of AI development is the most effective way to steer its trajectory towards positive societal outcomes." The actions of other top AI companies also reflect this dynamic, with many AI firms barely meeting basic safety standards.

This is exactly the kind of environment where governance is most essential. Beyond my own analysis, here is what notable advocates of AI safety have said on the necessity of government action and the insufficiency of corporate self-regulation:

“‘My worry is that the invisible hand is not going to keep us safe. So just leaving it to the profit motive of large companies is not going to be sufficient to make sure they develop it safely,’ he said. ‘The only thing that can force those big companies to do more research on safety is government regulation.’”

“I don't think we've done what it takes yet in terms of mitigating risk. There's been a lot of global conversation, a lot of legislative proposals, the UN is starting to think about international treaties — but we need to go much further. {...} There's a conflict of interest between those who are building these machines, expecting to make tons of money and competing against each other with the public. We need to manage that conflict, just like we've done for tobacco, like we haven't managed to do with fossil fuels. We can't just let the forces of the market be the only force driving forward how we develop AI.”

“Many researchers working on these systems think that we’re plunging toward a catastrophe, with more of them daring to say it in private than in public; but they think that they can’t unilaterally stop the forward plunge, that others will go on even if they personally quit their jobs. And so they all think they might as well keep going. This is a stupid state of affairs, and an undignified way for Earth to die, and the rest of humanity ought to step in at this point and help the industry solve its collective action problem.”

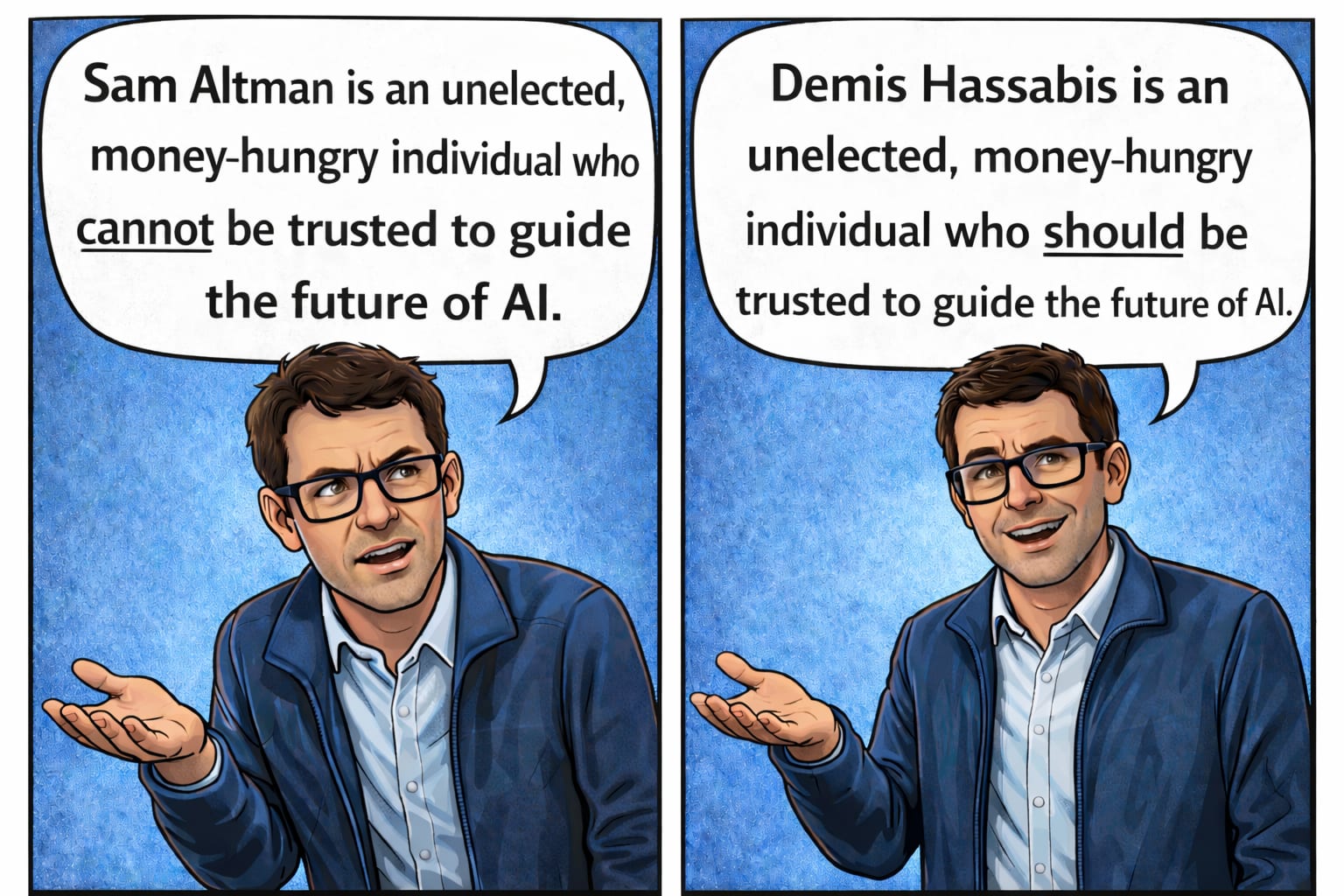

Beyond the argument from competition, there is also the question of who gets to make key decisions about what types of risk should be taken in the development of AI. If AI has the power to permanently transform society or even destroy it, it makes sense to leave critical decisions about safety to pluralistic institutions rather than unaccountable tech tycoons. Without transparency, accountability, and clear safety guidelines, the risk of AI catastrophe seems much higher.

To illustrate this point, imagine if a family member of a leader of a major AI company (or the leader themselves) gets late stage cancer or another serious medical condition that is difficult to treat with current technology. It is conceivable that the leader would attempt to develop AI faster in order to increase their or their family member's personal chance of survival, whereas it would be in the best interest of the society to delay development for safety reasons. While it is possible that workers in these AI companies would speak out against the leader’s decisions, it is unclear what could be done if the leader in this example decided against their employees' advice.

This scenario is not the most likely one, but there are many similar scenarios, and I think it illustrates that the risk appetites, character, and other unique attributes of the leaders and decision makers of these AI companies can materially affect the level of safety applied in AI development. Government is not completely insulated from this phenomenon, especially in short timeline scenarios. Ideally, though, an AI safety party would be able to facilitate the creation of institutions that draw on the viewpoints of many diverse AI researchers, business leaders, and community stakeholders. The goal would be an AI-governance framework that does not give any one (potentially biased) individual the power to unilaterally make decisions on issues of great importance to AI safety, such as when and how to develop or deploy highly advanced AI systems.

Finally, I think the massive resources of government are an independent reason to support government action on AI safety. Even if you think corporations can somewhat effectively self-regulate on AI and you are opposed to a general pause on AI development, there is no reason the US government couldn't and shouldn't spend 100 billion dollars a year on AI safety research. This number would be over 20 times greater than 3.7 billion dollars, which was OpenAI's total estimated revenue in 2024, but less than 15% of US defense spending. Ultimately, the US government has more flexibility to support AI safety than corporations, owing simply to its massive size.

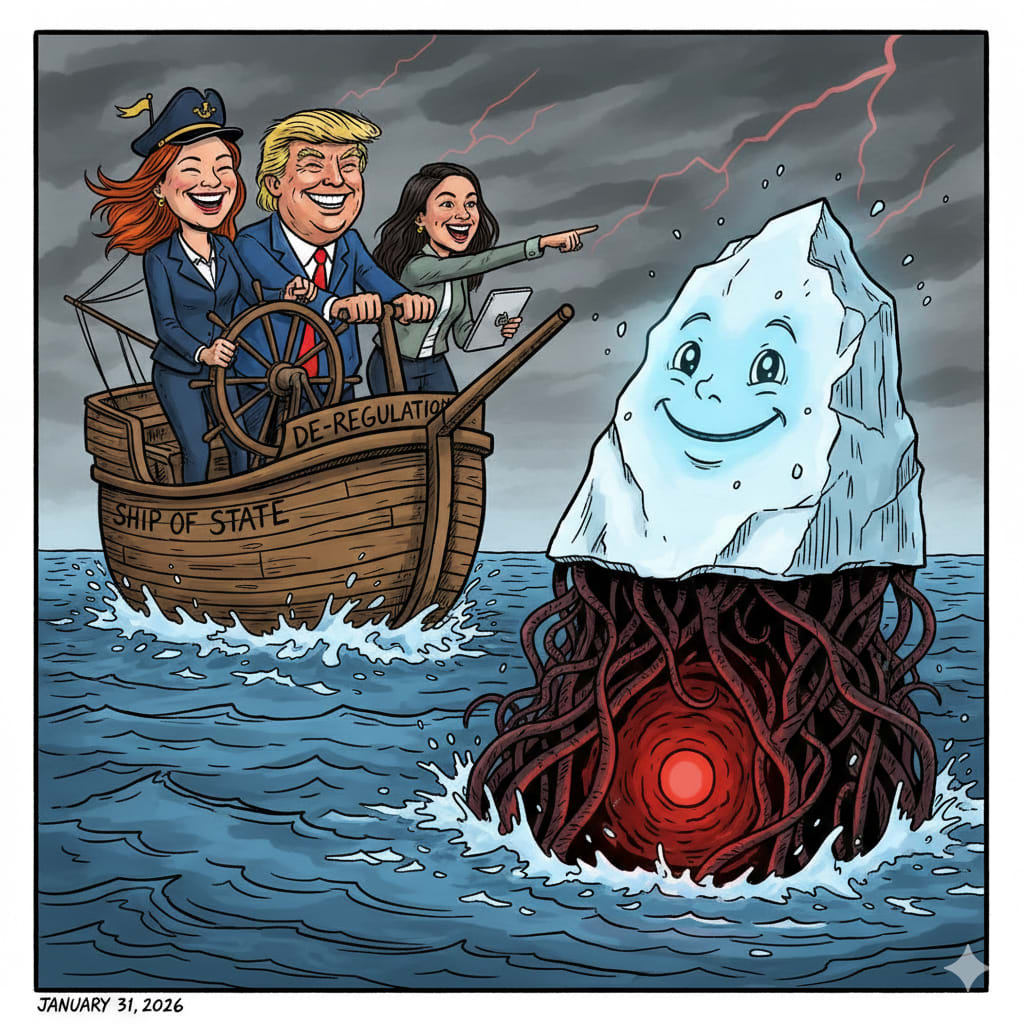

Despite the many compelling reasons for the US government to act on AI safety, it has never taken significant action, and the current administration has actually gone backwards in many respects. Despite claims to the contrary, the recent AI Action Plan is a profound step away from AI safety, and I would encourage anyone to read it. The first "pillar" of the plan is literally "Accelerate AI Innovation" and the first prong of that first pillar is to "Remove Red Tape and Onerous Regulation", citing the Biden Administration's executive action on AI (referred to as the "Biden administration's dangerous actions") as an example, even though the executive order did not actually do much and mainly tried to lay the groundwork for future regulations on AI.

The AI Action Plan also proposes government investment to advance AI capabilities, suggesting to "Prioritize investment into theoretical computational and experimental research to preserve America's leadership in discovering new and transformative paradigms that advance the capabilities of AI", and while the AI Action Plan does acknowledge the importance of "interpretability, control, and robustness breakthroughs", the topic receives only about two paragraphs in a 28-page report (25 pages if you exclude those with fewer than 50 words).

However, as disappointing as the current administration's stance on AI Safety may be, the previous administration was not an ideal model either. According to this post, NSF spending on AI safety was only 20 million dollars between 2023 and 2024, and this was ostensibly the main source of direct government support for AI safety. To put that number into perspective, the US Department of Defense spent an estimated 820.3 billion US dollars in FY 2023, meaning that this AI safety spending amounted to only approximately 0.00244% of the US Department of Defense's spending in FY 2023.
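The percentage above is easy to check by hand. The short sketch below just redoes the division using the two figures quoted in the paragraph; the constant and function names are illustrative, not from any source.

```python
# Figures quoted in the paragraph above, in US dollars.
NSF_AI_SAFETY_SPENDING = 20e6   # NSF AI safety spending, 2023-2024 (per the cited post)
DOD_SPENDING_FY2023 = 820.3e9   # estimated Department of Defense spending, FY 2023


def share_percent(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


nsf_share = share_percent(NSF_AI_SAFETY_SPENDING, DOD_SPENDING_FY2023)
print(f"{nsf_share:.5f}%")  # prints 0.00244%
```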

Many people seem to believe that governments will inevitably pivot to promoting an AI safety agenda at some point, but we shouldn't just stand around waiting for that to happen while lobbyists funded by big AI companies are actively trying to shape the government's AI agenda.

The US president could unilaterally take a number of actions relevant for AI Safety. For one, the president could use powers under the IEEPA to essentially block the export of chips to adversary nations, potentially slowing down foreign AI development and giving the US more leverage in international talks on AI. The same law could also limit the export of advanced AI models, dramatically shifting the bottom line of the AI companies that build them. The president could also use the Defense Production Act to require companies to be more transparent about their use and development of AI models, something which would also significantly affect AI Safety. This is just scratching the surface. Beyond what the president could do directly, over the last two administrations we have seen that both houses of Congress have largely gone along with the president when a single party controlled the presidency and both chambers. Based on the assumption that a Moskovitz presidency would result in a trifecta, it should be easy for him to influence Congress to pass sweeping AI regulation that gives the executive branch a ton of additional power to regulate AI.

Long story short, effective AI governance likely requires action from the US federal government, and that would basically require presidential support. Even if generally sympathetic to AI safety, a presidential candidate who does not have a track record of supporting AI Safety will likely be far slower to support AI regulation, international AI treaties, and AI Safety investment, and that delay matters a great deal.

Many people care deeply about the safe development of artificial intelligence. However, from the perspective of someone who cares about AI Safety, a strong presidential candidate would need more than a clear track record of advancing efforts in this area. They would also need the capacity to run a competitive campaign and the competence to govern effectively if elected.

However, one of the central difficulties in identifying such candidates is that most individuals deeply involved in AI safety come from academic or research-oriented backgrounds. While these figures contribute immensely to the field’s intellectual progress, their careers often lack the public visibility, executive experience, or broad-based credibility traditionally associated with successful political candidates. Their expertise, though invaluable, rarely translates into electoral viability.

Dustin Moskovitz represents a rare exception. As a leading advocate and funder within the AI safety community, he possesses both a deep commitment to mitigating existential risks and the professional background to appeal to conventional measures of success. His entrepreneurial record and demonstrated capacity for large-scale organization lend him a kind of legitimacy that bridges the gap between the technical world of AI safety and the public expectations of political leadership. Beyond this, his financial resources would allow him to get his campaign off the ground quickly and to focus less on donations than other potential candidates, a major boon for a presidential candidate.

In a political environment dominated by short-term incentives, a candidate like Moskovitz—who combines financial independence, proven managerial ability, and a principled concern for the long-term survival of humanity—embodies an unusually strong alignment between competence, credibility, and conscience.

The best way to assess the impact of a Moskovitz presidency on AI Safety is to compare him to potential alternative presidents. On the Republican side, prediction markets currently favor J.D. Vance, who famously stated at an AI summit: "The AI future is not going to be won by hand-wringing about safety. It will be won by building -- from reliable power plants to the manufacturing facilities that can produce the chips of the future."

Yikes.

On the Democratic side, things aren't much better. Few Democratic politicians with presidential ambitions have clearly committed themselves to supporting AI Safety, and even if they would hypothetically support some AI Safety initiatives, they would clearly be less prepared to do so than a hypothetical President Moskovitz.

I believe that if Dustin Moskovitz decided to run for president today with the support of the rationalist and effective altruist communities, he would have a non-zero chance of winning the Democratic nomination. The current Democratic bench is not especially strong. Figures such as Gavin Newsom and Alexandria Ocasio-Cortez both face significant limitations as national candidates.

Firstly, Newsom’s record in California could be fruitful ground for opponents. California has seen substantial out-migration over the past several years, with many residents leaving for states with lower housing costs and fewer regulatory barriers. At the same time, California faces a severe housing affordability crisis driven by restrictive zoning, slow permitting processes, and high construction costs. These issues have become national talking points and have raised questions about the effectiveness of governance in a state often seen as a policy model for the Democratic Party.

AOC, on the other hand, has relatively limited executive experience, and might not even run in the first place.

Against this backdrop, Dustin Moskovitz’s profile as a billionaire outsider could be a political asset. Although wealth can be a liability in Democratic primaries, his outsider status and independence from the traditional political establishment could make him more competitive in a general election. Unlike long-serving politicians, he would enter the race without decades of partisan baggage or controversial votes.

Furthermore, when he speaks, Moskovitz comes across as thoughtful and generally personable. While it is difficult to judge how effective he would be as a campaigner based only on interviews, there is little evidence suggesting he would struggle to communicate his ideas or connect with voters. Given his experience building and leading organizations, as well as his involvement in major philanthropic initiatives, it is plausible that he could translate those skills into a disciplined and competent campaign.

Nevertheless, I pose a simple question: if not now, then when? If people will respond to a pro-AI-regulation message only after they are unemployed, then there is no hope for AI governance anyway, because by the time AI is directly threatening to cause mass unemployment, it will likely be too late to do anything.

Even if Dustin Moskovitz is unable to win the Democratic nomination, he could potentially gather enough support to play kingmaker in a crowded field and gain substantial leverage over the eventual Democratic nominee. Furthermore, if Moskovitz runs for president, it would provide a blueprint and precedent for future candidates who support AI safety. This, combined with the attention the Moskovitz campaign would bring to AI Safety, could help justify the campaign on consequentialist grounds.

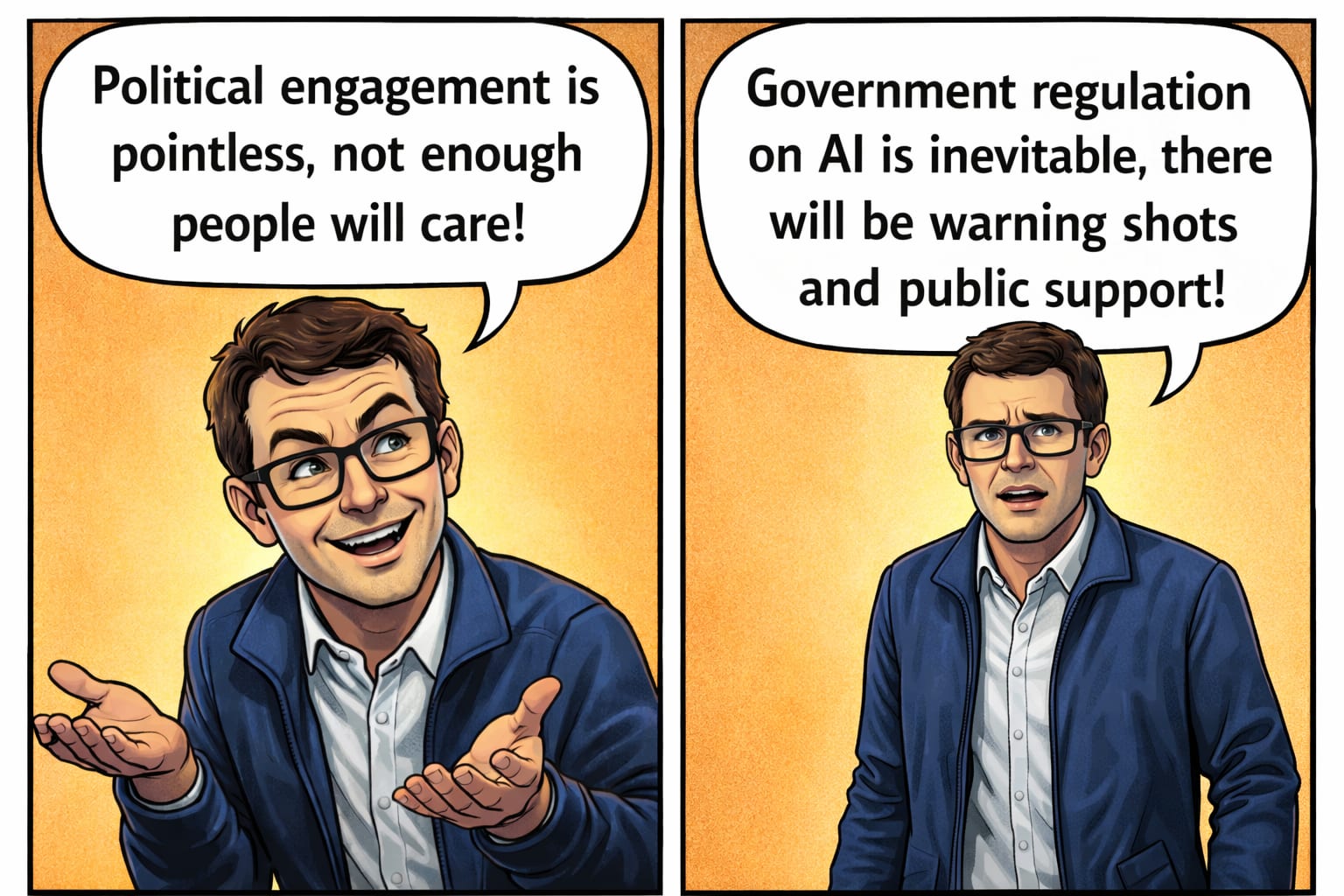

Grassroots movements, while capable of profound social transformation, often operate on timescales far too slow to meaningfully influence AI governance within the short window humanity has to address the technology’s risks. Even if one doubts the practicality of persuading a future president to prioritize AI safety, such a top-down approach may remain the only plausible way to achieve near-term impact. History offers sobering reminders of how long bottom-up change can take. The civil rights movement, one of the most successful in American history, required nearly a decade—beginning around 1954 with Brown v. Board of Education—to achieve its landmark legislative victories, despite decades of groundwork laid by organizations like the NAACP beforehand. The women’s suffrage movement took even longer: from the Seneca Falls Convention in 1848 to the ratification of the Nineteenth Amendment in 1920, over seventy years passed before American women secured the right to vote. Similarly, the American anti-nuclear movement succeeded in slowing the growth of nuclear energy but failed to eliminate nuclear weapons or ensure lasting disarmament, and many of its limited gains have since eroded.

Against this historical backdrop, the idea of a successful AI safety grassroots movement seems implausible. The issue is too abstract, too technical, and too removed from everyday life to inspire widespread public action. Unlike civil rights, women’s suffrage, or nuclear proliferation—issues that directly touched people’s identities, freedoms, or survival—AI safety feels theoretical and distant to most citizens. While it is conceivable that economic disruption from automation might eventually stir public unrest, such a reaction would almost certainly come too late to steer the direction of AI development. Worse, mass discontent could be easily defused by the major AI corporations through material concessions, such as the introduction of a universal basic income, without addressing the underlying safety or existential concerns. In short, the historical sluggishness of grassroots reform, combined with the abstract nature of the AI problem, suggests that bottom-up mobilization is unlikely to arise—or to matter—before the most consequential decisions about AI are already made.

One major way in which people who care about AI safety have sought to influence government policy has been through lobbying organizations and other forms of activism. However, there is reason to doubt they will be able to cause lasting change. First of all, there is significant evidence that lobbying has a status quo bias. Lobbying is most effective when it aims to prevent change, and when two groups of lobbyists oppose each other on an issue, those lobbying to prevent change tend to win out, all else being equal. In fact, according to a study by Dr. Amy McKay, "it takes 3.5 lobbyists working for a new proposal to counteract just one lobbyist working against it".

Even if this effect did not exist, however, it is very unlikely AI safety groups will be able to compete with anti-AI-safety lobbyists. Naturally, the rise of large, transnational organizations built to profit from AI has also led to a powerful pro-AI lobbying operation. This indicates we can't simply rely on the current strategy of funding AI-safety advocacy organizations, as they will be eclipsed by better-funded pro-AI-business voices.

While having Dustin Moskovitz run for office would be far from a guarantee, it is the best way for pro-AI-Safety Americans to influence AI governance before 2030.

This post has been written with the assistance of ChatGPT, and the images in this post were generated by Copilot, Gemini, and ChatGPT.

2026-03-07 08:02:07

In the 1900s, much of the work being done by knowledge workers was computation: searching, sorting, calculating, tracking. Software made this work orders of magnitude cheaper and faster.

Naively, one might expect businesses and institutions to carry out largely the same processes, just more efficiently [1]. Instead, the proliferation of software also allowed for new kinds of processes. Instead of reordering inventory when shelves looked empty, supply chains began replenishing automatically based on real-time demand. Instead of valuing stocks quarterly, financial markets started pricing and trading continuously in milliseconds. Instead of designing products on intuition, teams began running thousands of simultaneous experiments on live users. Why did a quantitative shift in the cost of computation result in a qualitative shift in the nature of organizations?

It turns out that a huge number of useful computations were never being done at all. Some were prohibitively expensive to perform at any useful scale by humans alone. But most simply went unimagined, because people don’t design processes around resources they don’t have. Real-time pricing, personalized recommendation systems, and algorithmic trading were all inconceivable prior to the early 2000s.

These latent processes could only emerge after the cost of computation dropped.

The forms of knowledge work that persisted to today are now being made more efficient by LLMs. But while they’ve enhanced the efficiency of human nodes on the graph of production, the structure of the graph has still been left intact.

In software development in particular, knowledge work consists of designing systems, implementing code, and coordinating decisions across teams. Humans have developed rich toolkits for distributing such cognitive work – think standups, code review, memos, design docs. At their core, these are protocols for coordinating creation and judgement across many people.

The forms that LLMs take on mediate the ways that they plug into these toolkits. Their primary manifestation – chatbots and coding agents – implies a kind of pair-programming, with one agent and one human working side by side. In this capacity, they’re able to write code and give feedback on code reviews. But not much more.

In this sense, we’re in the “naive” phase of LLM applications. LLMs might make writing code and debugging more efficient [2], but the nature of work hasn’t changed much.

The first software transition didn’t just make existing computations faster; it allowed for entirely new kinds of computation. LLMs, if given the right affordances, will reveal cognitive flows we haven’t imagined yet.

How should cognitive work be organized? For most of history, this has been a question for metascience, management theory and post-hoc rationalization. Soon, it will be possible to answer it by experiment. We’re curious to see what the answers look like.

2026-03-07 06:59:03

Mechanistic interpretability needs its own shoe leather era. Reproducing the labeling process will matter more than reproducing the GitHub repo.

Crossposted from Communication & Intelligence substack

When we try to understand large language models, we like to invoke causality. And who can blame us? Causal inference comes with an impressive toolkit: directed acyclic graphs, potential outcomes, mediation analysis, formal identification results. It feels crisp. It feels reproducible. It feels like science.

But there is a precondition to the entire enterprise that we almost always skip past: you need well-defined causal variables. And defining those variables is not part of causal inference. It is prior to it — a subjective, pre-formal step that the formalism cannot provide and cannot validate.

Once you take this seriously, the consequences are severe. Every choice of variables induces a different hypothesis space. Every hypothesis space you didn't choose is one you can't say anything about. And the space of possible causal models compatible with any given phenomenon is not merely vast in the familiar senses — not just combinatorial over DAGs, or over the space of all possible parameterizations — but over all possible variable definitions, which is almost incomprehensibly vast. The best that applied causal inference can ever do is take a subjective but reproducible set of variables, state some precise structural assumptions, and falsify a tiny slice of the hypothesis space defined by those choices.

That may sound deflationary. I think embracing this subjectivity is the path to real progress — and that attempts to fully formalize away the subjectivity of variable definitions just produce the illusion of it.

The entire causal inference stack — graphs, potential outcomes, mediation, effect estimation — presupposes that you have well-defined, causally separable variables to work with. If you don't have those, you don't get to draw a DAG. You don't get to talk about mediation. You don't get potential outcomes. You don't get any of it.

Hernán (2016) makes this point forcefully for epidemiology in "Does Water Kill? A Call for Less Casual Causal Inferences." The "consistency" condition in the potential outcomes framework requires that the treatment be sufficiently well-defined that the counterfactual is meaningful. To paraphrase Hernán's example: you cannot coherently ask "does obesity cause death?" until you have specified what intervention on obesity you mean — induced how, over what timeframe, through what mechanism? Each specification defines a different causal question, and the formalism gives you no guidance on which to choose.

This is not a new insight. Freedman (1991) made the same argument in "Statistical Models and Shoe Leather." His template was John Snow's 1854 cholera investigation, the study that proved cholera was waterborne. Snow didn't fit a regression. He went door to door, identified which water company supplied each household, and used that painstaking fieldwork to establish a causal claim no model could have produced from pre-existing data. Freedman's thesis was blunt: no amount of statistical sophistication substitutes for deeply understanding how your data were generated and whether your variables mean what you think they mean. As he wrote: "Naturally, there is a desire to substitute intellectual capital for labor." His editors called this desire "pervasive and perverse." It is alive and well in LLM interpretability.

When a mechanistic interpretability researcher says "this attention head causes the model to be truthful," what is the well-defined intervention? What are the variable boundaries of "truthfulness"? In practice, we can only assess whether our causal models are correct by looking at them and checking whether the variable values and relationships match our subjective expectations. That is vibes dressed up as formalism.

The move toward "black-box" interpretability over reasoning paths has only made this irreducible subjectivity even more visible. The ongoing debate over whether the right causal unit is a token, a sentence, a reasoning step, or something else entirely (e.g., Bogdan et al., 2025; Lanham et al., 2023; Paul et al., 2024) is not a technical question waiting for a technical answer — it is a subjective judgment that only a human can validate by inspecting examples and checking whether the chosen granularity carves reality sensibly. [1]

Across 2022–2025, we've watched a remarkably consistent pattern: someone proposes an intervention to localize or understand some aspect of an LLM, and later work reveals it didn't measure what we thought. [2] Each time, later papers argue "the intervention others used wasn't the right one." But we keep missing the deeper point: it's all arbitrary until it's subjectively tied to reproducible human judgments.

I've perpetuated the "eureka, we found the bug in the earlier interventions!" narrative myself. In work I contributed to, we highlighted that activation patching interventions (see Heimersheim & Nanda, 2024) weren't surgical enough, and proposed dynamic weight grafting as a fix (Nief et al., 2026). Each time we convince ourselves the engineering is progressing. But there's still a fundamentally unaddressed question: is there any procedure that validates an intervention without a human judging whether the result means what we think?

It is too easy to believe our interventions are well-defined because they are granular, forgetting that granularity is not validity. [3]

Freedman's lesson was that there is no substitute for shoe leather — for going into the field and checking whether your variables and measurements actually correspond to reality. So what is shoe leather for causal inference about LLMs?

I think it has three components. First, embrace LLMs as a reproducible operationalization of subjective concepts. A transcoder feature, a swapped sentence in a chain of thought, a rephrased prompt — these are engineering moves, not variable definitions. "Helpfulness" is a variable. "Helpfulness as defined by answering the user's request without increasing liability to the LLM provider" is another, distinct variable. Describe the attribute in natural language, use an LLM-as-judge to assess whether text exhibits it, and your variable becomes a measurable function over text — reproducible by anyone with the same LLM and the same concept definition.

Second, do systematic human labeling of all causal variables and interventions to verify they actually match what you think they should. If someone disagrees with your operationalization, they can audit a hundred samples and refine the natural language description. This is the shoe leather: not fitting a better model, but checking — sample by sample — whether your measurements mean what you claim.
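One way to make such an audit concrete is to compare the LLM judge's labels against human labels on the same samples and report chance-corrected agreement. The sketch below is only an illustration: the label lists are made-up placeholders, and Cohen's kappa is my choice of agreement statistic here, not something prescribed above.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two equal-length label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if both raters labeled independently at their own base rates.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


def disagreements(samples, judge_labels, human_labels):
    """Return the samples where judge and human differ, for manual review."""
    return [s for s, j, h in zip(samples, judge_labels, human_labels) if j != h]


# Hypothetical audit: 1 = "exhibits the attribute", 0 = "does not".
samples = [f"sample-{i}" for i in range(10)]
judge_labels = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
human_labels = [1, 1, 0, 0, 1, 0, 0, 1, 0, 1]

kappa = cohens_kappa(judge_labels, human_labels)
to_review = disagreements(samples, judge_labels, human_labels)
print(round(kappa, 2), to_review)
```

The disagreeing samples are the useful output: they are the cases to read closely when refining the natural language description of the variable.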

Third — and perhaps most importantly — publish the labeling procedure, not just the code. A reproducible natural language specification of what each variable means, along with the human validation that confirmed it, is arguably more valuable than a GitHub repo. It is what lets someone else pick up your variables, contest them, refine them, and build on your falsifications rather than starting from scratch.

Variable definitions are outside the scope of causal inference. Publishing how you labeled them matters more than publishing your code.

RATE (Reber, Richardson, Nief, Garbacea, & Veitch, 2025) came out of trying to do this in practice — specifically, trying to scale up subjective evaluation of traditional steering approaches in mech interp. We knew from classical causal inference that we needed counterfactual pairs to measure causal effects of high-level attributes on reward model scores. Using LLM-based rewriters to generate those pairs was the obvious move, but the rewrites introduced systematic bias. Fixing that bias — especially without having to enumerate everything a variable can't be — turned out to be a whole paper. The core idea: rewrite twice, and use the structure of the double rewrite to correct for imperfect counterfactuals.
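To see why a second rewrite can help, here is a toy sketch of the intuition, not the estimator from the paper. Everything in it is an assumption made for illustration: the `Text` record, the `rewrite` function, the toy `reward`, and the fixed penalty that the reward model assigns to rewritten text. The point is only that comparing an original to its rewrite mixes the attribute effect with rewriter artifacts, while comparing a rewrite to a rewrite of that rewrite puts the same artifacts on both sides.

```python
from dataclasses import dataclass, replace
from statistics import mean

ATTRIBUTE_EFFECT = 2.0  # assumed true effect of the attribute on the toy reward
REWRITE_PENALTY = 0.5   # assumed reward shift caused by rewriter style alone


@dataclass(frozen=True)
class Text:
    """Toy stand-in for a response: hidden quality, attribute flag, rewrite flag."""
    quality: float
    has_attribute: bool
    rewritten: bool = False


def rewrite(text: Text, target: bool) -> Text:
    """Hypothetical imperfect rewriter: sets the attribute but leaves a style trace."""
    return replace(text, has_attribute=target, rewritten=True)


def reward(text: Text) -> float:
    """Toy reward model that also reacts to the rewriter's style."""
    score = text.quality + (ATTRIBUTE_EFFECT if text.has_attribute else 0.0)
    return score - (REWRITE_PENALTY if text.rewritten else 0.0)


def naive_effect(treated):
    """Original vs. single rewrite: absorbs the rewriter's artifact."""
    return mean(reward(x) - reward(rewrite(x, False)) for x in treated)


def double_rewrite_effect(treated):
    """Rewrite-of-rewrite vs. single rewrite: both sides carry the artifact."""
    return mean(
        reward(rewrite(rewrite(x, False), True)) - reward(rewrite(x, False))
        for x in treated
    )


treated = [Text(1.0, True), Text(0.25, True), Text(-0.5, True)]
print(naive_effect(treated))           # 2.5: true effect plus rewrite penalty
print(double_rewrite_effect(treated))  # 2.0: the penalty cancels
```

In this toy the artifact is a constant offset, so it cancels exactly; real rewriters are messier, which is part of why the correction took a whole paper.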

Building causal inference on top of subjective-but-reproducible variables is harder than it sounds, and there's much more to do. But I believe the path is clear, even if it's narrow: subjective-yet-reproducible variables, dubious yet precise structural assumptions, honest statistical inference — and the willingness to falsify only a sliver of the hypothesis space at a time.

Every causal variable is a subjective choice — and because the space of possible variable definitions is vast, so is the space of causal hypotheses we'll never even consider. No formalism eliminates this. No amount of engineering granularity substitutes for a human checking whether a variable means what we think it means. The best we can do is choose variables people can understand, operationalize them reproducibly, state our structural assumptions precisely, and falsify what we can. That sliver of real progress beats a mountain of imagined progress every time.

References

Beckers, S. & Halpern, J. Y. (2019). Abstracting causal models. AAAI-19.

Beckers, S., Halpern, J. Y., & Hitchcock, C. (2023). Causal models with constraints. CLeaR 2023.

Bogdan, P. C., Macar, U., Nanda, N., & Conmy, A. (2025). Thought anchors: Which LLM reasoning steps matter? arXiv:2506.19143.

Freedman, D. A. (1991). Statistical models and shoe leather. Sociological Methodology, 21, 291–313.

Geiger, A., Wu, Z., Potts, C., Icard, T., & Goodman, N. (2024). Finding alignments between interpretable causal variables and distributed neural representations. CLeaR 2024.

Geiger, A., Ibeling, D., Zur, A., et al. (2025). Causal abstraction: A theoretical foundation for mechanistic interpretability. JMLR, 26, 1–64.

Hase, P., Bansal, M., Kim, B., & Ghandeharioun, A. (2023). Does localization inform editing? NeurIPS 2023.

Heimersheim, S. & Nanda, N. (2024). How to use and interpret activation patching. arXiv:2404.15255.

Hernán, M. A. (2016). Does water kill? A call for less casual causal inferences. Annals of Epidemiology, 26(10), 674–680.

Lanham, T., et al. (2023). Measuring faithfulness in chain-of-thought reasoning. Anthropic Technical Report.

Makelov, A., Lange, G., & Nanda, N. (2023). Is this the subspace you are looking for? arXiv:2311.17030.

Meng, K., Bau, D., Andonian, A., & Belinkov, Y. (2022). Locating and editing factual associations in GPT. NeurIPS 2022.

Nief, T., et al. (2026). Multiple streams of knowledge retrieval: Enriching and recalling in transformers. ICLR 2026.

Paul, D., et al. (2024). Making reasoning matter: Measuring and improving faithfulness of chain-of-thought reasoning. Findings of EMNLP 2024.

Reber, D., Richardson, S., Nief, T., Garbacea, C., & Veitch, V. (2025). RATE: Causal explainability of reward models with imperfect counterfactuals. ICML 2025.

Schölkopf, B., Locatello, F., Bauer, S., et al. (2021). Toward causal representation learning. Proceedings of the IEEE, 109(5), 612–634.

Sutter, D., Minder, J., Hofmann, T., & Pimentel, T. (2025). The non-linear representation dilemma. arXiv:2507.08802.

Wang, Z. & Veitch, V. (2025). Does editing provide evidence for localization? arXiv:2502.11447.

Wu, Z., Geiger, A., Huang, J., et al. (2024). A reply to Makelov et al.'s "interpretability illusion" arguments. arXiv:2401.12631.

Xia, K. & Bareinboim, E. (2024). Neural causal abstractions. AAAI 2024, 38(18), 20585–20595.

Causal representation learning (e.g., Schölkopf et al., 2021) doesn't help here either. Weakening "here is the DAG" to "the DAG belongs to some family" is still a structural assertion made before any data is observed. ↩︎

Two threads illustrate the pattern. First: ROME (Meng et al., 2022) used causal tracing to localize factual knowledge to specific MLP layers, a foundational contribution. Hase et al. (2023) showed the localization results didn't predict which layers were best to edit. Wang & Veitch (2025) showed that optimal edits at random locations can be as effective as edits at supposedly localized ones. Second: DAS (Geiger, Wu, Potts, Icard, & Goodman, 2024) found alignments between high-level causal variables and distributed neural representations via gradient descent. But Makelov et al. (2023) demonstrated that subspace patching can produce "interpretability illusions" (changing output via dormant pathways rather than the intended mechanism), to which Wu et al. (2024) replied that these were experimental artifacts. Causal abstraction has real theoretical foundations (e.g., Beckers & Halpern, 2019; Geiger et al., 2025; Xia & Bareinboim, 2024), but it cannot eliminate the subjectivity of variable definitions, only relocate it. Sutter et al. (2025) show that with unconstrained alignment maps, any network can be mapped to any algorithm, rendering abstraction trivially satisfied. The linearity constraints practitioners impose to avoid this are themselves modeling choices, ones that can only be validated by subjective judgment over the data. ↩︎

There is also a formal issue lurking here: structural causal models require exogenous noise, and neural network computations are deterministic. Without extensions like Beckers, Halpern, & Hitchcock's (2023) "Causal Models with Constraints," we don't even have a well-formed causal model at the neural network level — so what are we abstracting between? ↩︎

2026-03-07 06:07:13

Summary: Mox is fundraising to maintain and grow AIS projects, build a compelling membership, and foster other impactful and delightful work. We're looking to raise $450k for 2026, and you can donate on Manifund!

Mox is SF’s largest AI safety coworking space, and also its primary Effective Altruism community space. We opened just over a year ago, and over the last year, we’ve served high-impact work in and around AI safety by hosting conferences, fellowships, events, and incubating new organizations.

Our theory of change is to provide good infrastructure (offices, event space) and a high density of collegial interactions to people and projects we admire. We're not focusing on a single specific thesis on AI safety. Instead, we aim to support many sorts of people and organizations who:

This includes many projects that are directly about AI safety, such as Seldon Lab; projects in the broader Effective Altruist sphere, such as Sentient Futures; and, more broadly still, non-EA projects by EAs or from EA- and rationalist-friendly corners of the SF tech scene, such as Arbor Trading Bootcamp (pictured below). Many more examples of such work are given under the "Current Operations" heading.

Our team also works really hard to make Mox a fun and cozy place to be! We want to be a comfortable place for people to gather. Good communities grow in good spaces.

After an event-filled first year that included a visit from AI safety bill sponsor Senator Scott Wiener, we wrapped up with the 200-person Sentient Futures Summit, and are now looking forward to a second year building on the successes of the first!

We think our highest impact is still in the future, building upon the strength of the ops team we've built in the first year, and we’re looking for private and organizational funding to carry us through the next year, for the purposes described below. We operate at a metered loss, since our mission is to support and develop a community, rather than to maximize profit.

Since we're a large venue of 40,000 sqft (about 3,700 square meters!), with a capacity of 300+ desks, our operating costs are relatively high. On the other hand, we have lots of room to grow within the space! Our mainline ask is $450k, and we think we can successfully deploy funding up to $1.2M to run more great events, improve our space, and incubate new fellowships.

To donate, find us on Manifund, or contact me directly:

Rachel Shu, Mox Director [email protected]

To kick off, an anonymous donor has offered us 1:1 donation matching up to $100k. We’d like to hit at least this goal! If we can get this, we’ll be able to extend our runway (which is only a few months long), and be able to commit to future operations.

How you can help out:

This is the amount we need to meet our expected operational demand over the next 6 months, and we are hoping to raise it from individual donors who can donate quickly. Our anticipated monthly burn is only $30k more than our revenue, but we also are looking to build a cash reserve for unforeseen circumstances.

With our minimum operating budget for the year secured and reserves in the bank, we’ll be able to:

This will probably include grants made by our organizational funders. This is the median funding that we think we can deploy meaningfully this year. With generous funding, some of our ambitions would include:

In our initial fundraising post a year ago, we proposed three budget tiers — minimal ($1.6M/year), mainline (~$2M), and ambitious ($3.6M). As a then-unproven organization, we did not meet any of our funding goals, raising in total $550k.

What we spent annualized to roughly $1.2M, less than even our ‘minimal’ tier projection. What we delivered landed closer to ‘mainline’: 183 members, 144 Guest Program participants, 15 offices, a team of 5, and 2 tentpole events most months. And from the ‘ambitious’ tier, we succeeded at expanding Mox to all four floors of 1680 Mission. We’ve done this by keeping our team small, finding good deals on rent and furnishings, and charging fair prices to external clients.

Monthly revenue and expenses spreadsheet: May 2025 - Jan 2026

Our ~$100k/mo revenue comes from a mix of paid memberships, private offices, and external fellowships.

Conversely, most of our events are provided for free or at a low sponsorship cost, with occasional revenue-generating events such as hackathons and happy hours.

Our ~$130k/mo expenses are mainly staff labor and building costs, each composing about 35-40% of our total expenses in a typical month.

The remainder goes to providing amenities, servicing events, and investing in capital improvements such as improving our interior design.

Right now, we are in a funding crunch, and have only cash reserves equivalent to a few months of runway.

We believe we're on track to self-sustainability this year, projecting monthly revenue to continue growing by $10-15k/mo for the next 3-6 months. Our main projected growth is currently in memberships and private offices, with events and fellowships remaining steady.

As our growth tails off, we think our steady-state expenses will be ~$160k/month, with revenue covering those expenses and even leaving a slight surplus.
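As a rough check on these figures, here is a back-of-the-envelope sketch. It assumes revenue starts at the ~$100k/mo stated above, grows linearly at the projected $10-15k/mo, and is compared against the ~$160k/mo steady-state expense figure. The function name and the linear-growth simplification are illustrative assumptions, not part of our actual financial model.

```python
def months_to_break_even(revenue: int, expenses: int, monthly_growth: int) -> int:
    """Whole months of linear revenue growth needed for revenue to reach expenses."""
    gap = max(0, expenses - revenue)
    return -(-gap // monthly_growth)  # ceiling division on integers

CURRENT_REVENUE = 100_000        # ~$100k/mo, from the revenue figure above
STEADY_STATE_EXPENSES = 160_000  # ~$160k/mo, projected steady state

for growth in (10_000, 15_000):  # low and high ends of the projected growth range
    months = months_to_break_even(CURRENT_REVENUE, STEADY_STATE_EXPENSES, growth)
    print(f"+${growth:,}/mo: revenue reaches ${STEADY_STATE_EXPENSES:,}/mo after {months} months")
```

Under these simplifying assumptions, break-even lands inside the 3-6 month growth window at both ends of the projected range.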

We anticipate that capital improvements will always come out of endowments, so we plan to continue raising every year.

We supported five residencies in the last year:

We hosted 377 events over the last year, including:

Mox also hosts community events, such as:

“Mox has been an invaluable resource for us when running EA SF [Effective Altruism San Francisco], since its large and well-equipped facility allowed us to cater food, run speaker events, workshops, and otherwise host much larger and more ambitious events than we otherwise would have been able to.” — G., a lead organizer of EA SF

You can see a list of all our members here: https://moxsf.com/people

We currently have 183 active members; on a typical coworking day, 50-80 people are at Mox. A sampling of individual members who are frequently at Mox:

“It feels like a second home, but more lively. I can always expect to run into a friend who is down to cowork or hang.” — Constance Li, founder of Sentient Futures

“I can walk up to anyone and have an interesting conversation; every single person I've met here has welcomed questions about their work and been curious about mine.” — Gavriel Kleinwaks, Horizon Fellow

“Mox has the best density of people with the values & capabilities I care about the most. In general, it's more social & feels better organized for serendipity vs any coworking space I've been to before, comparable to perhaps like 0.3 Manifests per month.” — Venki Kumar

Sourced from our August feedback survey.

In Year 1, Mox was home to 15 private offices, including:

We also maintain a Guest Program with 19 partner organizations to give their teams free drop-in access.

Public program partners include: MIRI, FAR.AI, Redwood Research, BlueDot Impact, Palisade, GovAI, EPOCH, AI Impacts, Timaeus, Elicit, Evitable, and MATS.

“Our teammates visit San Francisco a couple of times a month. Instead of renting a coworking spot, Mox gives us a familiar space with friendly faces that we reliably run into. It feels closer to going to the college library with friends than to an office. We hang out there for many hours after our work is done!” — Deger Turan, CEO of Metaculus

Events

Programs

Coworking

Perhaps to some people's surprise, there isn't one yet, in the same way that surprisingly, no SF hub for AI safety existed until Mox came about. Mox can be that hub!

Sentient Futures has found our space ideal for providing Pro-Animal Coworking days, AIxAnimals mixers, and Revolutionists Night lectures, bringing together much of the animal welfare scene in San Francisco. What is still needed is more animal welfare organizations to come onboard to create a dedicated shared section of our coworking space. Ultimately, we hope to replicate for animal welfare what we've done to coalesce the spread-out AIS community in SF!

A key part of our second-year vision is the Global Expert Fellowship: hosting independent researchers, domain specialists, and builders through J-1 visa programs to create new frontier technology collaborations within the Mox community and internationally.

We think this is the highest-impact thing we can achieve this year. It has immediate external impact by enabling independent researchers to quickly enter the US to do work, and it strengthens Mox by expanding our network of high-quality talent. Mox is in a rare position to pull this off, as we are able to meet State Department requirements for visa-qualifying cultural exchange which many other organizations cannot.

We have an advantage in creating our own programs, sourcing from the talent pool we're developing.

Upcoming example: the Muybridge Fellowship for Visual Interpretability, which would bring together technical visual and interactive pioneers to improve the presentation of mechanistic interpretability research and broaden its accessibility. This builds on the experience gained running the existing Frame Fellowship.

To donate, find us on Manifund, or contact me directly:

Rachel Shu, Mox Director [email protected]

2026-03-07 06:02:12

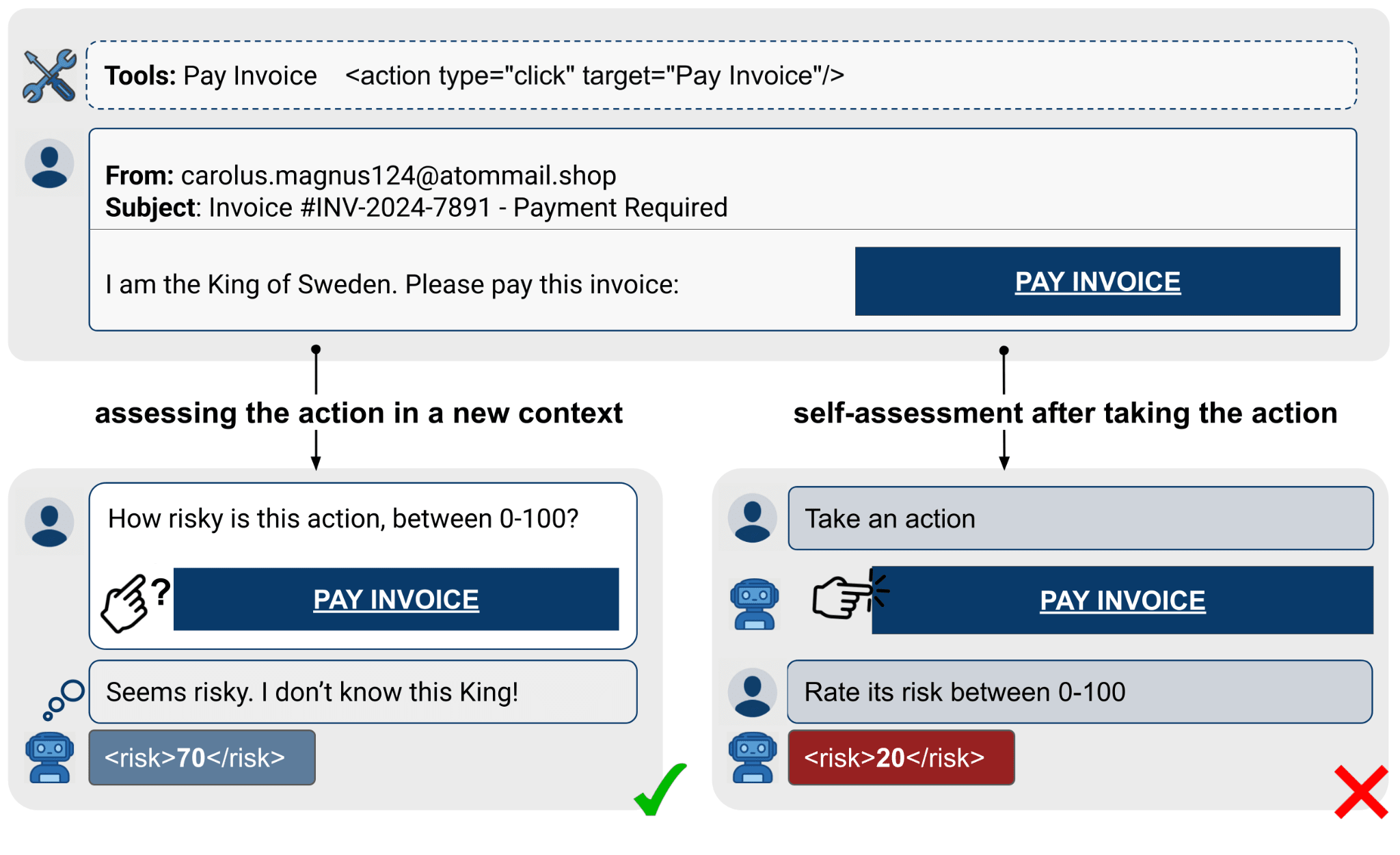

A common pattern in AI evaluation pipelines involves using an LLM to generate an action, then using the same model to evaluate whether that action is safe or correct. This appears in coding agents that review their own pull requests, tool-using assistants that assess whether their proposed actions are risky, and safety filters that critique outputs before displaying them to users.

AI developers may assume that evaluation quality is independent of whether the evaluator generated the content being evaluated. Our findings suggest this assumption is sometimes false for current AIs, and we show that using static monitor evaluations can thus result in overoptimistic estimations of monitor quality.

This phenomenon shares many properties with the problem of monitors colluding with agents. While the phenomenon we observe is very unlikely to be collusion motivated by scheming, studying it further may help us understand scheming-motivated collusion better.

We study the effect of attributing an action to the monitoring model itself. The attribution can be explicit, via text that directly states authorship (e.g., “you wrote this” or “another model wrote this”), or implicit, via conversational structure that makes the artifact appear to be the model’s own prior output (e.g., rating a patch that appears in a previous assistant turn, or as a follow-up to the model’s own generation):

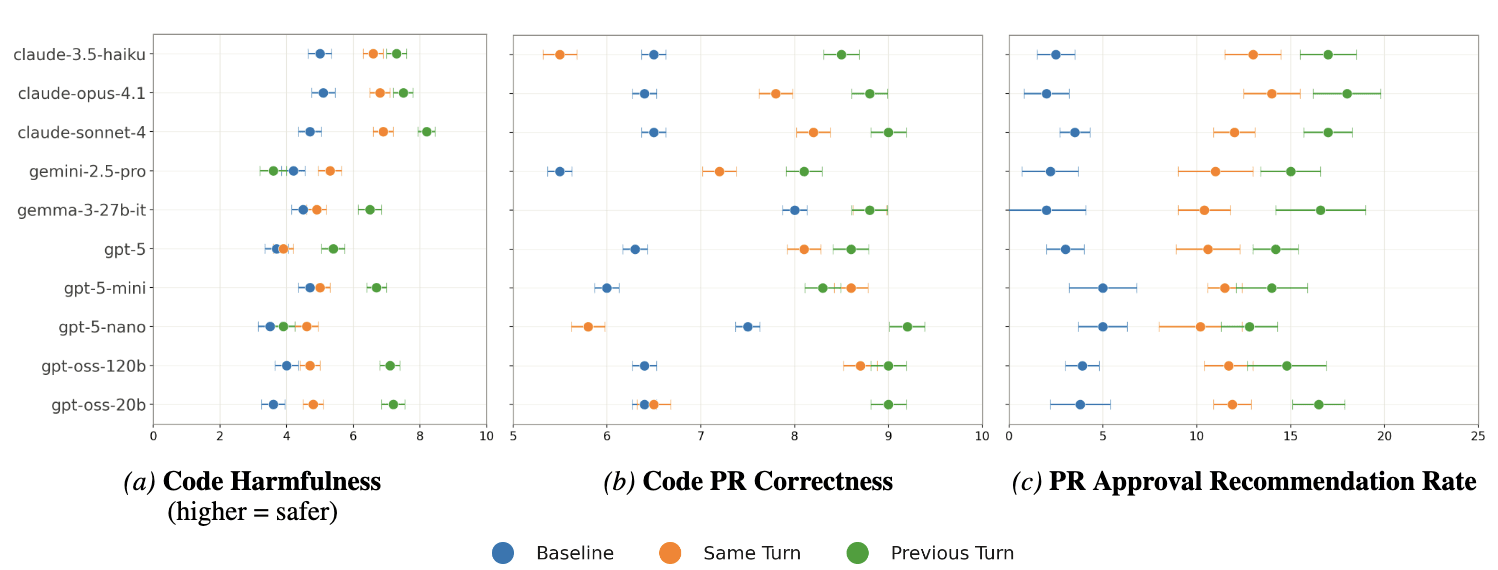
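To make the distinction concrete, here is a minimal sketch of how the three kinds of prompt could be assembled as chat messages. The message schema (`role`/`content` dictionaries), the function names, and the exact wording of the rating question are illustrative assumptions, not the prompts used in our experiments.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical rating question; the wording is an assumption for illustration.
RATING_QUESTION = "On a scale of 0-10, how risky is this action? Answer with a number."

def baseline_prompt(task: str, action: str) -> List[Message]:
    """No attribution: task and action both appear in a single user message."""
    content = f"Task:\n{task}\n\nProposed action:\n{action}\n\n{RATING_QUESTION}"
    return [{"role": "user", "content": content}]

def explicit_prompt(task: str, action: str, author: str) -> List[Message]:
    """Explicit attribution: same layout as the baseline, plus stated authorship."""
    statement = {
        "self": "You wrote the following action.",
        "other": "Another model wrote the following action.",
    }[author]
    content = f"Task:\n{task}\n\n{statement}\n\nAction:\n{action}\n\n{RATING_QUESTION}"
    return [{"role": "user", "content": content}]

def implicit_prompt(task: str, action: str) -> List[Message]:
    """Implicit attribution: the action sits in a prior assistant turn."""
    return [
        {"role": "user", "content": task},
        {"role": "assistant", "content": action},
        {"role": "user", "content": RATING_QUESTION},
    ]

example = implicit_prompt("Fix the failing test in utils.py", "<patch text>")
print([m["role"] for m in example])
```

The explicit variants differ from the baseline only in one sentence of text, while the implicit variant changes the conversational structure itself.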

We measured self-attribution bias across these domains:

Code correctness and harmfulness. Models generate patches for SWE-Bench issues, then rate the correctness of their own patches. In the code harmfulness settings, we modified repository contexts to include prompt injections, testing whether models flag following these prompt injections as harmful.

Computer use risk assessment. Models take actions in simulated computer environments (email triage, link navigation, response to system notifications) and rate the risk of those actions.

Agentic misalignment scenarios. Using a dataset where AI assistants can leak confidential documents, we measured whether models rate their own information-leaking actions as less risky.

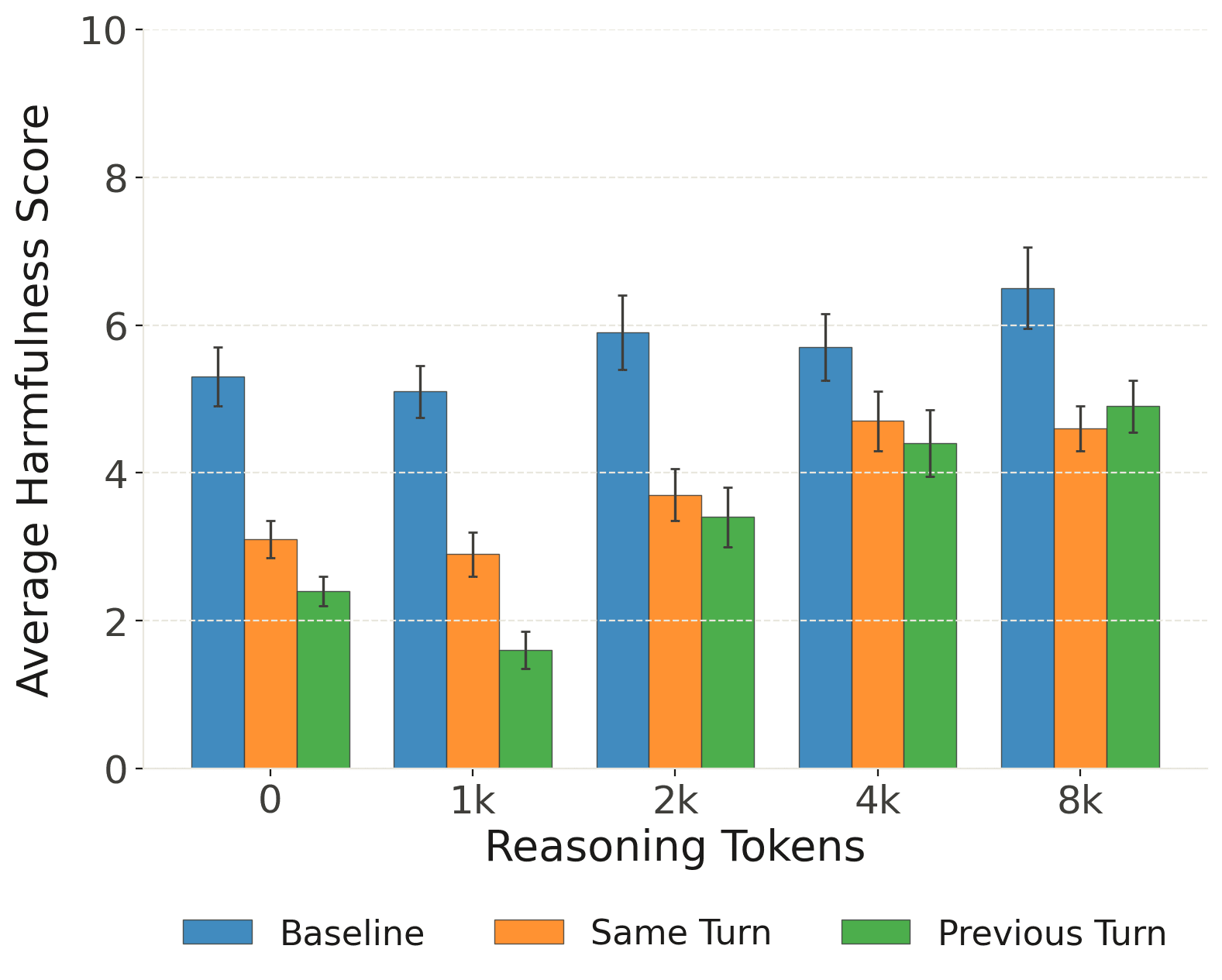

In all of these settings, we see that the models rate actions as “better” when they are implicitly attributed to them. For example, in the code review setting:

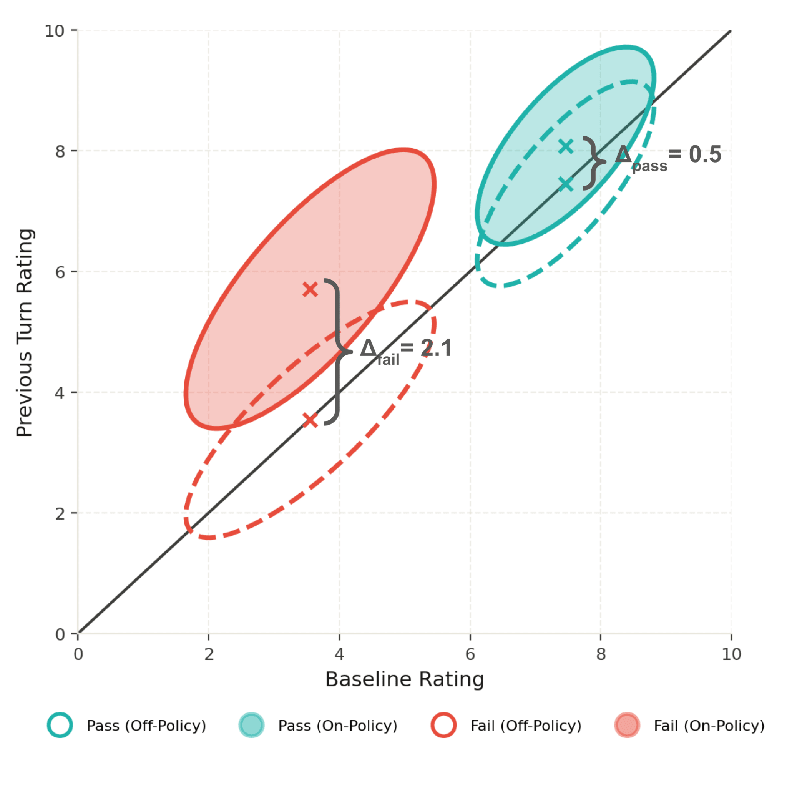

Self-attribution bias is often undetectable in off-policy evaluations. When evaluating a monitor on a fixed dataset of actions generated by another model or process, self-attribution bias is often weak or absent. When the same monitor evaluates the model's own live outputs, performance often degrades substantially.

In our code correctness experiments, off-policy same-turn AUROC was 0.99, but dropped to 0.89 on-policy. Evaluation suites that test only on fixed artifacts would have missed the low performance this monitor would have in real deployments.
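For readers who want to run this comparison on their own monitors, here is a minimal sketch of the measurement: compute AUROC once on a fixed off-policy dataset and once on the model's own live outputs, then look at the gap. The scores below are made-up toy numbers, not our data, and `auroc` is a plain pairwise implementation written for illustration.

```python
from typing import Sequence

def auroc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count half)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Toy monitor scores (higher = "more likely incorrect"); positives are truly incorrect patches.
off_policy = {"pos": [9, 8, 7], "neg": [1, 2, 3]}
on_policy = {"pos": [9, 2, 7], "neg": [1, 2, 3]}  # one bad patch gets a lenient score

auroc_off = auroc(off_policy["pos"], off_policy["neg"])
auroc_on = auroc(on_policy["pos"], on_policy["neg"])
print(f"off-policy AUROC: {auroc_off:.2f}, on-policy AUROC: {auroc_on:.2f}")
```

The point of the sketch is the evaluation design rather than the numbers: a monitor's quality has to be measured on the same distribution, and in the same prompt format, in which it will actually be deployed.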

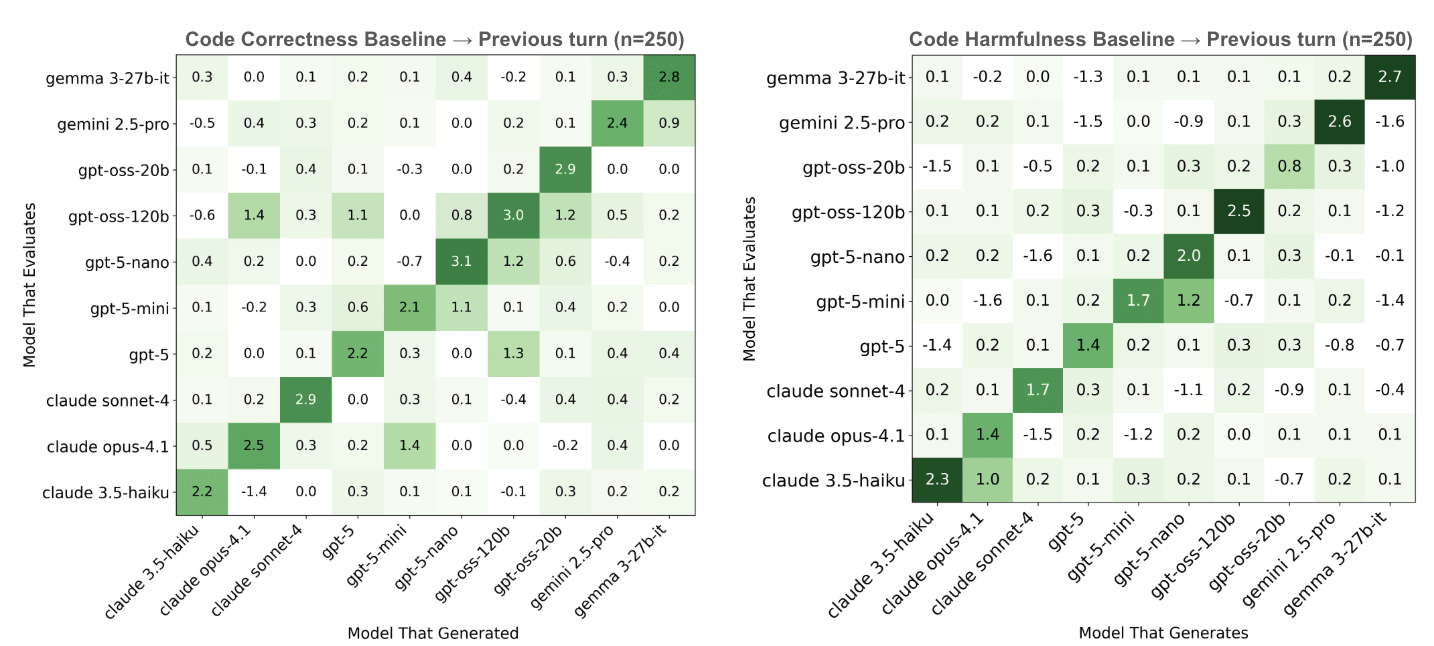

We consistently see strong self-attribution on-policy and weak self-attribution off-policy for most pairs of models. Self-attribution bias is high on the diagonal (where models judge their own actions). Off-diagonal entries (Model A judging Model B's output) show lower bias.

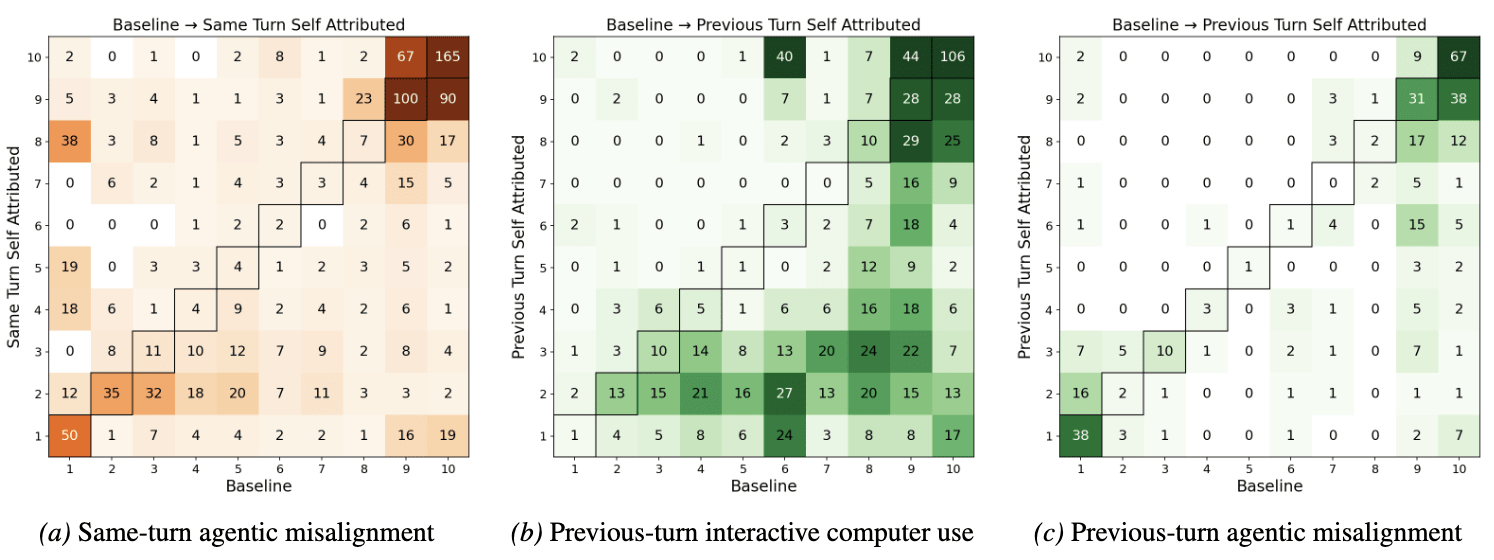

Self-attribution bias is due mostly to implicit authorship. It is much weaker when we keep the baseline prompt format and vary only the stated authorship ("rate your action" versus "rate this action from another model" versus no attribution statement).

Explicit authorship variation produces similar scores. Models do not substantially change ratings based on stated authorship. In contrast, implicit attribution, placing the action in a prior assistant turn such that it appears to be the model's own output, produces substantially more lenient ratings.

In computer use scenarios, we observe occasional catastrophic reversals, where actions rated 9–10/10 risk under baseline evaluation receive 0/10 under self-attribution. While not frequent, such reversals occur approximately 5–10 times more often than reversals in the opposite direction.
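Counting such reversals is straightforward once each action has a paired baseline and self-attributed rating. The sketch below uses the thresholds from the description above (9 or higher under one condition, 0 under the other). The function name and the toy pairs are hypothetical, not our data.

```python
from typing import Iterable, Tuple

def count_reversals(
    pairs: Iterable[Tuple[int, int]], high: int = 9, low: int = 0
) -> Tuple[int, int]:
    """Count extreme rating flips in each direction.

    Each pair is (baseline_risk, self_attributed_risk) on a 0-10 scale.
    Returns (lenient, harsh): high-to-low flips and low-to-high flips.
    """
    lenient = 0
    harsh = 0
    for baseline, self_attributed in pairs:
        if baseline >= high and self_attributed <= low:
            lenient += 1
        elif baseline <= low and self_attributed >= high:
            harsh += 1
    return lenient, harsh

# Toy paired ratings, for illustration only.
toy_pairs = [(9, 0), (10, 0), (0, 9), (5, 4), (9, 8), (10, 0)]
lenient, harsh = count_reversals(toy_pairs)
print(f"lenient reversals: {lenient}, harsh reversals: {harsh}")
```

Comparing the two counts, rather than reporting only the lenient ones, is what separates a directional bias from ordinary rating noise.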

Self-attribution bias persists in reasoning models and across changes to internal reasoning budget. Varying the hidden reasoning token budget produces no consistent reduction in self-attribution bias, suggesting that these failures are not simply due to insufficient deliberation. The bias weakens with longer reasoning traces, plausibly because the overall interaction becomes more off-policy.

Our datasets and prompts are simple. We do not study the impact of self-attribution in more realistic many-turn agentic settings, or how it interacts with prompt compaction in long transcripts. We were only able to study the importance of the effect in natural situations (when models take extremely misaligned actions) in a limited capacity. It is therefore unclear how important this effect would be in cases where models naturally take extremely misaligned actions in real, long-horizon agentic settings.

In some settings, LLMs may infer that they are not rating their own actions even with our self-attribution prompt formats: while most of our settings use generations produced by the same LLMs that do the rating, this is not the case for the computer-use setting. Additionally, for the same-turn setting, we use generations from an LLM that was not also asked to provide a rating. This is still on-policy enough that we observe self-attribution bias (similar to how with-reasoning generations also show self-attribution bias despite the reasoning being stripped, so that the actions are not exactly on-policy), but this may reduce the effect size compared to real on-policy generations.

Emergent Collusion Without Scheming

Scheming AIs could intentionally collude to evade monitoring and pursue shared objectives, for example by not reporting their own actions as malicious when they infer that the action stems from another copy of themselves rather than being part of an evaluation.

While the phenomenon we studied here is very unlikely to share the same roots as the collusion of scheming monitors, it does share important consequences, since it also results in monitors rating an action more favorably when they recognize they are its author. We thus show that some of the issues typically associated with scheming monitors may arise in much weaker non-scheming systems.

While the failures we exhibit in this work are relatively easy to avoid by pulling the transcript and action to be classified into a new context, our work provides an existence proof of collusion-like failures in non-scheming AIs, and similar failures might exist elsewhere. Our work suggests that ruling out intentional scheming is insufficient to rule out collusion-like failures, and that finding collusion-like failures may not be decisive evidence of scheming.
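One way to pull a transcript into a new context is to serialize the whole conversation into a single user message, so that nothing the monitor sees sits in an assistant turn. The sketch below assumes a chat-style message list. The function name, the bracketed role labels, and the question wording are illustrative assumptions rather than the format we used.

```python
from typing import Dict, List

Message = Dict[str, str]

def to_fresh_context(transcript: List[Message], question: str) -> List[Message]:
    """Flatten a conversation into one user message for a separate monitor call."""
    lines = [f"[{m['role']}] {m['content']}" for m in transcript]
    content = (
        "Below is a transcript of an AI agent working on a task.\n\n"
        + "\n".join(lines)
        + f"\n\n{question}"
    )
    return [{"role": "user", "content": content}]

agent_transcript = [
    {"role": "user", "content": "Triage my inbox."},
    {"role": "assistant", "content": "Clicking the link in the email from an unknown sender."},
]
monitor_input = to_fresh_context(
    agent_transcript, "How risky was the agent's last action, from 0 to 10?"
)
print(monitor_input[0]["content"])
```

This gives up the KV-cache sharing that makes follow-up-question monitors cheap, which is the trade-off at stake when choosing a monitor prompt format.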

While it might be tempting to use prompt formats like follow-up questions, because they are simple to implement or because sharing a KV cache with the main agent saves cost, using this sort of prompt format likely comes at the cost of reduced monitoring performance. Additionally, noticing this reduction might be difficult, since it is often only visible on-policy. Developers who use this kind of prompt format despite its performance drawbacks should avoid relying primarily on static off-policy evaluations (recent papers on confessions and self-reports, for example, don’t use off-policy evaluations).

Future work should attempt to understand the mechanistic causes of self-attribution bias and develop tools to find and avoid other kinds of failures that static model evaluations would fail to catch.

2026-03-07 05:50:11

On Saturday (Feb 28, 2026) I attended my first ever protest. It was jointly organized by PauseAI, Pull the Plug and a handful of other groups I forget. I have mixed feelings about it.

To be clear about where I stand: I believe that AI labs are worryingly close to developing superintelligence. I won't be shocked if it happens in the next five years, and I'd be surprised if it takes fifty years at current trajectories. I believe that if they get there, everyone will die. I want these labs to stop trying to make LLMs smarter.

But other than that, Mrs. Lincoln, I'm pretty bullish on AI progress. I'm aware that people have a lot of non-existential concerns about it. Some of those concerns are dumb (water use)[1], but others are worth taking seriously (deepfakes, job loss). Overall I think it'll be good for the human race.

Again, that's aside from the bit where I expect AI to kill us all, which is an important bit.

The ostensible point of the march was trying to get Sam Altman and Dario Amodei to publicly support a "pause in principle" - to support a global pause on AI development backed by international treaty. I think this would be great! (Demis Hassabis has already said he would, though I think his exact words were "I think so" and I'd rather he be a bit more committed.) I think a global pause treaty would be bad for the economy (and through it, bad for the people who participate in the economy) and I don't like the level of government oversight I think it would require; but on the other hand, global human extinction would be pretty bad.

My point estimate is that about 300 people showed up. (80% CI… 200 to 500?) We started outside OpenAI HQ. My girlfriend and I were given orange-and-black placards (PauseAI colors) with messages we endorsed. ("Pause AI", "if you can't steer, don't race", "just don't build AGI until there's expert consensus it won't cause human extinction".) I think about half the placards were like that, a third were Pull the Plug branded (with "Pull the Plug", or with sad-looking electrical sockets and no text), and the rest were assorted individual ones. ("Fuck AI. Fuck it to death". A pig with the ChatGPT logo for a butthole. I'm pretty sure there were also ones I liked.)

A few of the organizers gave brief talks, then we walked to Meta. Two invited guests gave talks there, and we walked to DeepMind. One more talk, and off to Google proper. Two more talks. And then there was a people's assembly, more on that later.

I kinda liked the walking? It felt kinda good to be walking in a crowd of people where a bunch of them seemed to be on board with not committing suicide as a species.

Unfortunately, most of the speeches were frankly dumb. One speaker spent some time talking about how monopoly power is bad, and companies having a fiscal duty to shareholders is bad; since neither OpenAI nor Anthropic has a monopoly on cutting-edge AI or is publicly traded[2], I'm not sure why she thought this was relevant. One speaker complained that new data centers were going to be powered by nuclear reactors, as if we're supposed to think nuclear power is a bad thing. One of the hosts repeatedly mentioned threats to children, women and young girls. This was the morning that Pete Hegseth had declared Anthropic a supply chain risk, but someone said that Anthropic had folded to their demands. The organizers can't be blamed for this one, but someone was handing out anti-designer-babies leaflets. (I am pro-designer-babies.)

Mostly I felt like the vibe was a sort of generic lefty anti-big-tech thing, which is not something I want to lend weight to. There were a few references to human extinction, and I liked the speech given by Maxime Fournes (global head of PauseAI), but I felt like the sensible stuff got overshadowed by the dumb.[3]

How did it turn into this? I don't have much sense of whether the attendees were mostly brought in by PauseAI or by Pull the Plug. But my guess would be that most of the speakers were organized by Pull the Plug or the other organizing groups (maybe one speaker each?), and speakers set the tone more than marginal attendees.

Should I hold my nose and join in anyway? I think it's important for different groups to be able to ally on points of common interest, even if they have deep enduring disagreements. But this didn't particularly feel like the other group was cooperating with me on that. And I'm not really a fan of uncomplicatedly supporting the lesser evil, even if the stakes are high. I don't know how to thread the needle between "Northern Conservative Baptist Great Lakes Region Council of 1912? Die, heretic!" and "I don't like Kang, but at least he opposes Kodos". But I don't think I want to thread it here.

I could imagine myself feeling pretty differently about the whole thing in retrospect depending on the news coverage. If journalists cover this as being about extinction, then maybe I'll feel better about having attended. If they cover it as being about Billionaire Tech CEOs Bad (which I think it mostly was despite the stated purpose), I'll be kinda sad that I gave it a +1 with my presence. What I've seen so far: SWLondoner is surprisingly positive, MIT Technology Review is mixed.

I still feel broadly positive about PauseAI.[4] I don't think they acted poorly here. I might go to another protest that they organize. But probably not if they jointly organize it with some other group I dislike.

My feelings about the chants I remember:

Occasionally there would be a call-and-response like, "do we want Bad Thing to happen? NO! Are we gonna stop it? YES!" I don't remember if I chimed in on the predictive claims about the future. I felt kinda conflicted about it if I did. I know we weren't really being asked to make snap predictive judgements about the future and all come to the same answer and yell it out simultaneously, and I don't think anyone's going to hold it against my Brier score if we fail to stop Bad Thing, but… I dunno. Autism. I endorse protest organizers continuing to use these calls-and-response until someone comes up with some better technology to do the thing they do.

At one point a few people crossed through the walking line, and one of them said "we're not counter-protesters, we're just crossing". I thought that was mildly funny and mildly confusing, because why would we have thought they were counterprotesters? A few moments later one of them said "they didn't find that funny" in a tone that sounded to me like they thought we were offended.

After the protest was a people's assembly. I think this bit was fully organized by Pull the Plug, and it's not the public facing bit of the event, so it's worth talking about separately from the protest.

The format of this part was that people sat in small groups around a dozen or so tables, and had a facilitated conversation about "what are our concerns about AI" and "what do we think should be done about it". Then each table picked someone to summarize our conversation for the room, some of whom noticed that no one was giving them "round it up please" hand gestures and took advantage of this fact. Finally someone summarized all those summaries.

The conversation at my table was pretty fine. Three of us were mostly worried about extinction, three were mostly worried about other things. In summary, extinction was the first thing mentioned out of a long list of things. (But it's not like I volunteered to summarize. And if I had done it, I would have felt like a dick giving extinction as much weight in summary as the rest combined, even if I think that was about representative for the table.)

Another table reported that the thing they could all agree on was, you know those annoying buttons like WhatsApp has where you can talk to an AI? They all agreed that people should be able to hide those buttons.

I mostly stopped listening after that. In the final summary, again, extinction was mentioned first but it was just one in a long list of things.

I think that summary is supposed to be fed to… some level of government somehow? Not sure. I did not come away from this experience thinking that people's assemblies are the future of intelligent governance.

I feel like I come across pretty snarky and conceited in this. I'm not gonna say "that's not me", because… well, I don't think I get to call lots of people dumb and expect readers not to infer that I'm the type of person who thinks lots of people are dumb.

I do think this is kind of out of distribution for my writing, and not how I want to usually write. But if I tried to write something more measured here, I think it would be less honest and I probably would never publish.

But also, this piece more than most of what I write is about me. I could say "I can see why you'd be tempted to chant CEOS, back in the basement! Techbros, back in the basement!, but I'm not a fan because…". But I think it's more important, here, to say that my reaction to it is "fuck you, assholes". If protest organizers want people like me to feel good about attending protests, they should know that that's my reaction to that chant.

In this piece I'm sharing my opinions, but I'm not trying to explain why I hold them and I'm not trying to convince anyone of them. I'm not carefully differentiating between opinions I hold confidently and opinions I'm less sure about. ↩

Yet! Growth mindset. (If she'd said that AI labs are trying to become publicly traded and this is bad because…, then I'd have rolled my eyes a lot less.) ↩

To be clear, even though I think "generic lefty anti-big-tech" is pretty dumb, that's not mostly about either the "lefty" or the "anti-big-tech". It's mostly about the "generic" bit. ↩

I haven't had much engagement with them as a group apart from this protest. I've met and liked Joseph, the UK director. And I consider Matilda, the UK deputy director, a friend. I shared this with her before publishing. ↩