2026-03-02 19:00:00

Intelligence is not elemental. Neither is artificial intelligence. Both are complex compounds composed of more primitive cognitive elements, some of which we are only now discovering. We don’t yet have a periodic table of cognition (see my post The Periodic Table of Cognition), so we have not finished identifying what the fundamental elements of intelligence are.

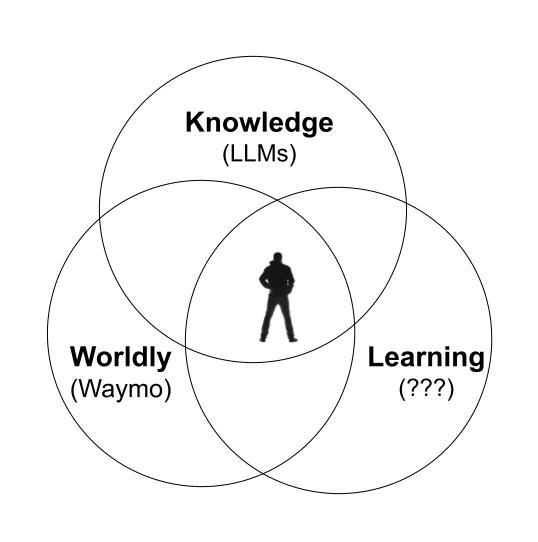

In the interim I propose three general classes of cognition that together can make something like a human intelligence. The three modes are: 1) Knowledge reasoning, 2) World sense, and 3) Continuous memory and learning.

Knowledge Reasoning is the kind of cognition generated by LLMs. It is a type of super-smartness that comes from reading (and remembering) every book ever written, and ingesting every written message posted. This knowledge-based intelligence is incredibly useful in answering questions, doing research, figuring out intellectual problems, accomplishing digital tasks, and perhaps even coming up with novel ideas. One LLM can deliver a whole country of PhD experts. Already in 2026 this book-smartness greatly exceeds the capabilities of humans.

World Sense is a kind of intelligence trained on the real world, instead of being trained on text descriptions of the real world. These are sometimes called world models, or Spatial Intelligence, because this kind of cognition is based on (and trained on) how physical objects behave in the 3-dimensional world of space and time, and not just the immaterial world of words talking about the world. This species of cognition knows how things bounce, or flow, or how proteins fold, or molecules vibrate, or light bends. It incorporates a recognition of gravity, an awareness of continuity, a sense of matter’s physicality, an intimate knowledge of how mass and energy are conserved. This is the cognition that drives Waymo cars better than humans drive. We don’t yet have a flood of robots in 2026 because this kind of cognition relies upon more than LLMs. It requires layers of other cognitive elements working along with neural nets, such as vision algorithms, and World Models such as Genie 3, which was trained on hundreds of thousands, perhaps millions, of YouTube videos. The videos of real life teach the lessons of operating in the real world. Tesla’s self-driving intelligence was trained on its billions of hours of driving videos grabbed from its human-driven cars, that taught it how cars and pedestrians and environments behave in the real world. Central to this type of physical smartness is a common sense, the kind of common sense that a human child of 5 years would have, but most AIs to date do not. For instance, the awareness that objects don’t vanish just because you can’t see them. For robots to take over many of our more tedious tasks, this kind of world sense and spatial intelligence will be needed.

Continuous Learning is essential to the compound of human intelligence, but absent right now in artificial intelligence. Some even define AGI as continuous learning intelligence. When we are awake, we are constantly learning, trying to recover from mistakes (don’t do that again!), to figure out new ways based on what we already know. A major reason why AI agents have not replaced human workers in 2026 is that the former never learn from their mistakes while the latter, even if not as smart, can learn on the job, and can get better each day. Despite our expectations, current LLMs do not learn from each other, nor do they learn when you correct them again and again. They currently do not have a robust way to remember their mistakes or corrections, nor to get smarter more than once a year when they are retrained from 4.0 to 5.0. Every time you correct ChatGPT’s mistake, it forgets by the next conversation. Every time a robot fails at a task, it will fail the exact same way tomorrow. This is why AIs can’t hold a real job in 2026. At this moment we lack the software genius to install continuous learning (at scale) in the machines. This quest is a major area of research; it is unknown whether the current neural net models will be capable of evolving this, or whether new model architectures are needed. Continuous learning requires a continuous persistent memory, which is computationally taxing, among other problems. When AI experiences another sudden quantum jump in capabilities, it will likely be when someone cracks the solution for a continuous learning function. Human employees are unlikely to lose their jobs to AIs that cannot continuously learn because a lot of the work we need done requires continuous learning on the job.

There may be other elemental particles of cognition in the mixture of our human intelligence, but I am confident it includes these three as primary components. For manufacturing artificial intelligence we have an ample supply of Knowledge IQ, and we have some preliminary amounts of World IQ, but we seriously lack Learning IQ at scale.

It is important to acknowledge that for many jobs we do not need all three modes. To drive our cars, we chiefly need world sense. To answer questions, smart LLM book knowledge is most of what we need. There may be use cases for an AI that only learns but does not have a world sense or even that much knowledge. And of course, there will be many hybrid versions with two parts, or only a bit of two or three.

In brief, while current (February 2026) LLMs greatly exceed humans in their knowledge-based reasoning, they lack two other significant cognitive skills before they can actually replace humans: they don’t have a flawless grasp of the real world (thus no robots), and they don’t learn. I expect the mainstream adoption of AI in the next 2 years will depend hugely on how much of the other two modes of cognition can be implemented into AIs.

2026-02-14 04:11:00

2026-02-04 02:11:35

Until the sale of contraception pills in 1960, no one needed a reason to have children. It was the biological consequence of sex, so it was also the cultural default. There were only reasons NOT to have children.

Now after only two generations of contraception use, the settings have flipped and people don’t need reasons to not have children: Rather, no children is the default. Now we need good reasons to have kids.

There are good altruistic arguments to have kids, and there are very fine religious and societal arguments to having kids, but there should also be selfish reasons to have kids. Those would be the optimal motivations.

I am fully aware of the long list of very good arguments as to why having children is hard, expensive, unfair to women, anti-environmental, egotistical, undesirable, and/or undoable. I don’t dispute them; they are all true to some extent. Because not having children is the default, this long list is everywhere, including in the comments here.

I simply offer here my six selfish reasons why I had children, with the hope others might find them useful.

1) Having children is a good – perhaps the best – way to disseminate your values to the next generation. It is a solid way to extend your influence on the world beyond your own lifespan. If you think your values should be disseminated, then you should have kids who will have kids. While there is no guarantee your children will carry your set of values, you have a much higher chance of passing them on to your children than to anyone else. And while you could write a book, or start a foundation, with the hope of passing on your values through time, having children is a much more feasible option for most normal people.

2) Children are entertaining, much better than any other streaming option you might pay for. The questions they ask, their antics, watching them play, witnessing or being the recipient of their creativity, sometimes on a daily basis, is the best streaming there is. Their creativity is often inspiring. They can be creative in negative ways, too, but in all ways they will not be boring, and they are right there in your presence.

3) There is a profound and primeval joy in helping a helpless infant become a functioning adult. It is very clear they cannot do this on their own, so the role of teacher, trainer, coach, parent is essential and this need is felt deep. The singular bond that arises from this dependency also entails worry, as well as joy, but for most parents the joy outweighs the worry. But for a long while, they depend on you, and if you provide, the rewards of giving, of helping, are poured upon you.

4) A primeval and foundational need of all humans everywhere is to belong, and to be loved. For at least the first decade of their lives, your children will love you to a degree adults do not experience otherwise. This unconditional love is so potent, that humans will often surrender their own lives to maintain and culture it. It is so potent, it can change lives, change the behavior and even world views of parents. The joy of being loved, admired, and needed to such a degree is unmatched in the rest of our lives.

5) It is exceedingly rare for anyone born to later regret having been born, so the gift of birth is huge. There is a real sense of accomplishment and satisfaction in bringing a human being into existence and nurturing it to independence. For women, this miracle is especially gratifying, because of their literal gift of life and the physical price they pay. A lot of the pride of parenthood is having participated in this immense and precious gift.

6) If it all works out through adolescence, you will have friends for life. As your children age, they will keep surprising you. Even strained times can’t dissolve your relationship, and as they reach the age that you were when you had them, they often become more than just your children. They are special, unique people, worthy of attention, with abilities you do not have, and they will also know you very well. It is a deep pleasure to have people who know you so well. Of course, as you get much older they will help move furniture, maybe drive you to appointments, and eventually they will decide which affordable nursing home to put you into (who else do you want to decide?), so they forever remain your allies.

I’ve heard other selfish reasons to have kids mentioned by others that did not resonate with me, but might work for some. One was that having kids was a way to redo a childhood they felt they had messed up or missed out on. Another popular reason with very young parents was that having children was a way to be taken seriously by their peers or parents, or a way to be accepted by their family-oriented community.

There is a decent list of reasons why it would be good for the world to have children, and why it would be better if you specifically have children, but while that is a worthy list, it is different from this one, which focuses on the selfish benefits you gain when you have children.

If I have missed a selfish reason let me know.

2026-01-27 00:21:12

In the modern world we measure things a lot. Even betterment is given a number so we can measure quality and progress. For instance we can designate our water tank as 90% full after a rain, or a powder 99% pure. We grade tests, performances, purity, occupancies and all kinds of qualities as a percentage of what we think is perfect. As things improve their metric will go from say 90% to 99% (pretty good out of 100% perfection). To get better we could increase the purity of a material, or the availability of electricity, from 99% to 99.9% which is even better. If we keep adding nines, we keep significantly improving as we reach, say 99.999%. With advanced knowledge and the best practices, we could keep going forward further, adding up to 6 nines or even 9 nines!

This is called the “march of nines”, and it has been very common in high tech for many years. The companies making silicon wafers for chips, for example, have been engaged in a long struggle to add nines to the purity of their crystals. Premier web hosting companies brag about their 5 nines of uptime, hoping to reach 6 nines someday.

This lift is tremendously hard for very mundane reasons. The addition of a nine in the march of nines is not linear. It seems as if we are adding only a tiny amount with each nine, smaller and smaller, but it is the opposite. The difference between having no electricity for 1 hour a year (99.99%) versus missing one whole working day a year (99.9%) is significant, and not just a little more.
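To make the downtime arithmetic concrete, here is a minimal sketch that converts a count of nines into expected hours of outage per year. It assumes a 365-day year and treats availability as a simple fraction of total hours; the function name is my own, for illustration only.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years (an assumption)


def downtime_hours_per_year(nines: int) -> float:
    """Expected yearly downtime for an availability with the given number of nines."""
    unavailability = 10 ** (-nines)  # e.g. 3 nines (99.9%) -> 0.001
    return HOURS_PER_YEAR * unavailability


if __name__ == "__main__":
    for n in range(1, 7):
        availability = 100 * (1 - 10 ** (-n))
        decimals = max(n - 2, 0)  # 99.9% needs one decimal place, 99.99% two, and so on
        hours = downtime_hours_per_year(n)
        print(f"{n} nines ({availability:.{decimals}f}%): {hours:.4f} hours/year")
```

Three nines works out to roughly 8.8 hours a year, about one working day, and four nines to a bit under one hour, which matches the comparison above.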

But each additional nine requires an extraordinary increase in effort. Workplace folklore suggests that each additional nine requires just as much work as the one previous, so going from 99% to 99.9% requires as much time and money as going from 90% to 99%. Some technologists claim that for some cases it is even more severe and that you need an order of magnitude more effort to achieve an additional nine. To go from two nines to three, or three to four, requires 10 times the time and money of the previous step. This would imply that each step in the march of nines needs more resources than all the previous steps together, which is a very sobering thought.
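The two folklore models can be compared with a few lines of arithmetic. This is only a sketch: the unit cost of the first step and the function names are hypothetical, chosen to illustrate the claim that under the tenfold model each step costs more than all earlier steps combined.

```python
def step_efforts(steps: int, growth: float) -> list[float]:
    """Effort for each step, where step 1 costs 1 unit and each later step costs `growth` times the previous."""
    return [growth ** i for i in range(steps)]


def exceeds_all_previous(efforts: list[float]) -> list[bool]:
    """For each step after the first, is its cost greater than the sum of all earlier steps?"""
    return [efforts[i] > sum(efforts[:i]) for i in range(1, len(efforts))]


if __name__ == "__main__":
    for label, growth in [("equal effort per nine", 1.0), ("10x effort per nine", 10.0)]:
        efforts = step_efforts(4, growth)
        print(label, efforts, "total:", sum(efforts), exceeds_all_previous(efforts))
```

Under the equal-effort model, four nines cost four units in total. Under the tenfold model they cost 1,111 units, and the last step alone accounts for 1,000 of them.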

Whether each step in the march of nines takes just as much effort as the previous one or 10 times as much, the reason for this expanding input is that you cannot reach the next nine simply by doing more of what you have been doing. Extrapolation doesn’t work. The only way to reach the next nine is to do something in a new way, or to re-organize what you are doing, or to invent a new thing. And that is expensive. And easy to resist because what you are currently doing is working great! If you want to move your uptime from 99.9% to 99.99% you need whole new levels of redundancy, new work flows, new degrees of monitoring, new kinds of devices, new work habits, and a new company organization. The next nine will require the same degree of effort.

Recently Andrej Karpathy, the AI superstar who worked on self-driving cars, noted that we are still stuck at a level of nines way below what we really need for self-driving cars to become mainstream. When an SDV (self-driving vehicle) is 90% accurate in its driving, it will have a human emergency minder sitting in the car, a 1:1 ratio. After tons of new research, billions of dollars, and radical innovation the accuracy reaches 99% and that co-pilot minder will move to a remote service center, as they do in Waymos. The minders are no longer in the car but they still operate at a 1:1 ratio of humans to cars, at a distance. Spend some more billions and the innovations get the SDV to 99.9%, and now one minder can mind 6 cars. As SDVs march up the nines, human minders spread and dilute their attention, till eventually only a few humans are needed for tens of thousands of cars. Only then would the average citizen be able to afford an SDV.

But each of these steps of nine requires at least as much work and ingenuity as the previous work. Today human drivers are actually very good. They create a collision causing an injury only about once every 1 million miles, and they cause a fatality only about once per 100 million miles driven. In terms of injuries human drivers operate at 99.9999% safety, and for fatal collisions their performance is 99.999999% if measured per mile. That is an astounding 8 nines!
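The conversion from "one event per so many miles" to a count of nines is just a base-10 logarithm. The sketch below uses the two rough rates quoted above; the function names are mine, and measuring safety per mile is the same simplifying assumption the text makes.

```python
import math


def nines_from_rate(miles_per_event: float) -> float:
    """Number of nines of per-mile safety if one event occurs every `miles_per_event` miles."""
    return math.log10(miles_per_event)


def safety_percent(miles_per_event: float) -> float:
    """Share of miles driven without the event, as a percentage."""
    return 100 * (1 - 1 / miles_per_event)


if __name__ == "__main__":
    for label, miles in [("injury collision", 1_000_000), ("fatal collision", 100_000_000)]:
        print(f"{label}: {safety_percent(miles):.6f}% safe per mile, "
              f"about {nines_from_rate(miles):.0f} nines")
```

One event per million miles corresponds to 6 nines, and one per 100 million miles to 8 nines.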

But the far side of the march of nines is a weird domain. When you reach beyond 5 nines, the events you must account for become so improbable as to literally defy description. You are designing for things that have never happened or been seen. An event might happen only once in 100 billion samples, so rarely that it is way outside human experience. The design process starts to veer to the meaningless.

This zone of extremity at the far tail of the march of nines is yet another reason why trying to lift a system up to another step is so hard. You enter a territory governed by rare and black swan occurrences, where uncertainty is rampant, and ignorance reigns.

Yet Waymo today is actually 90% safer than humans, but that safety still hinges on some humans in the loop. It is probable that today’s tech without those humans would be less safe than human drivers, but we don’t know. And in fact, despite billions of miles driven in some form of self-driving mode with human assistance, those SDVs still have not driven enough miles to give us reliable safety measurements compared to human drivers.

The feeling among some observers of SDVs is that taking humans out of the loop would expose the technology’s true level of safety, and that despite appearances, self-driving is not a solved problem. Tesla’s FSD is not genuine autonomy. As long as the driver can grab the wheel to steer, a human is in the loop. In other words, full autonomy is several nines away. Which means that it will require just as much time and money and effort to solve this next step as it has taken to get SDVs to where they are today. That is worth repeating: to reach full autonomy may take as much effort as has been spent getting to Waymo today.

Waymo was founded as a Google Self Driving Car Project 16 years ago and was recognized as Waymo 10 years ago, and has so far spent about $25 billion getting to their current level of nines. I believe it will take at least another decade and another $25 billion for Waymo to step up to the point where one human can facilitate 100,000 cars, while the SDV achieves all the nines they need to be genuinely autonomous and still safer than humans.

It seems we are so close to fully human-free autonomous driving – all we need is a few more nines! – but in the march of nines, those additional nines will require as much investment as we’ve spent so far. To a rough order of magnitude, I don’t expect we will reach the state where even a third of the vehicles on the road will be truly SDV (no humans in the loop) until 2036, or later.

2026-01-24 03:05:00

2026-01-10 03:12:00