2026-03-03 10:40:22

I recently talked with a contact who repeated what he’d heard regarding agentic AI systems—namely, that they can greatly increase productivity in professional financial management tasks. However, I pointed out that though this is true, these tools do not guarantee correctness, so one has to be very careful letting them manage critical assets such as financial data.

It is widely recognized that AI models, even reasoning models and agentic systems, can make mistakes. One example is a case showing that one of the most recent and capable AI models made multiple factual mistakes in drawing together information for a single slide of a presentation. Sure, people can give examples where agentic systems perform amazing tasks. But it’s another question whether they can always do them reliably. Unfortunately, we do not yet have procedural frameworks that provide reliability guarantees comparable to those required in other high-stakes engineering domains.

Many leading researchers have acknowledged that current AI systems have a degree of technical unpredictability that has not been resolved. For example, one has recently said, “Anyone who has worked with AI models understands that there is a basic unpredictability to them, that in a purely technical way we have not solved.”

Manufacturing has the notion of Six Sigma quality, which aims to reduce manufacturing defects to an extremely low level. In computing, the correctness requirements are even higher, sometimes necessitating provable correctness. The Pentium FDIV bug in the 1990s caused actual errors in calculations to occur in the wild, even though the chance of error was supposedly “rare.” These were silent errors that might have occurred undetected in mission-critical applications, leading to failure. That said, the Pentium FDIV error modes were precisely definable, whereas AI models are probabilistic, making it much harder to bound the errors.

Exact correctness is at times considered so important that there is an entire discipline, known as formal verification, to prove specified correctness properties for critical hardware and software systems (for example, the manufacture of computing devices). These methods play a key role in multibillion-dollar industries.

When provable correctness is not available, having at least a rigorous certification process (see here for one effort) is a step in the right direction.

It has long been recognized that reliability is a fundamental challenge in modern AI systems. Despite dramatic advances in capability, strong correctness guarantees remain an open technical problem. The central question is how to build AI systems whose behavior can be bounded, verified, or certified at domain-appropriate levels. Until this is satisfactorily resolved, we should use these incredibly useful tools in smart ways that do not create unnecessary risks.

The post An AI Odyssey, Part 1: Correctness Conundrum first appeared on John D. Cook.

2026-03-03 00:08:49

In grad school I specialized in differential equations, but never worked with delay-differential equations, equations specifying that a solution depends not only on its derivatives but also on the state of the function at a previous time. The first time I worked with a delay-differential equation would come a couple decades later when I did some modeling work for a pharmaceutical company.

Large delays can change the qualitative behavior of a differential equation, but it seems plausible that sufficiently small delays should not. This is correct, and we will show just how small “sufficiently small” is in a simple special case. We’ll look at the equation

x′(t) = a x(t) + b x(t − τ)

where the coefficients a and b are non-zero real constants and the delay τ is a positive constant. Then [1] proves that the equation above has the same qualitative behavior as the same equation with the delay removed, i.e. with τ = 0, provided τ is small enough. Here “small enough” means

−1/e < bτ exp(−aτ) < e

and

aτ < 1.

There is a further hypothesis for the theorem cited above. The solution to a first order delay-differential equation like the one we’re looking at here is not determined by an initial condition x(0) = x0 alone. We have to specify the solution over the interval [−τ, 0]. This can be any function of t, subject only to a technical condition that holds on a nowhere dense set of initial conditions. See [1] for details.
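The two inequalities above are easy to check mechanically. Here is a minimal Python sketch; the function name `delay_is_small` is made up for illustration, and the sketch does not check the additional technical condition on the initial data described in [1].

```python
from math import exp, e

def delay_is_small(a, b, tau):
    """Return True when both inequalities quoted from [1] hold.

    Checks -1/e < b*tau*exp(-a*tau) < e and a*tau < 1.
    The technical condition on the initial function is NOT checked.
    """
    middle = b * tau * exp(-a * tau)
    return (-1 / e < middle < e) and (a * tau < 1)

print(delay_is_small(-3, 2, 0.4))  # delay used in the example later in the post
print(delay_is_small(-3, 2, 3.0))  # the larger delay used later in the post
```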

Let’s look at a specific example,

x′(t) = −3 x(t) + 2 x(t − τ)

with the initial condition x(1) = 1. If there were no delay, i.e. if τ = 0, the equation would reduce to x′(t) = −x(t) and the solution would be x(t) = exp(1 − t). In this case the solution monotonically decays to zero.

The theorem above says we should expect the same behavior as long as

−1/e < 2τ exp(3τ) < e

which holds as long as τ < 0.404218.
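The cutoff can be recovered numerically by solving 2τ exp(3τ) = e. The following Python sketch uses plain bisection; the function name `critical_delay` and the bracketing interval [0, 1] are assumptions chosen for this example, and the method relies on the left side increasing in τ on that interval, which is the case when a < 0 < b.

```python
from math import exp, e

def critical_delay(a, b, lo=0.0, hi=1.0, tol=1e-12):
    """Bisect for the tau in [lo, hi] where b*tau*exp(-a*tau) equals e.

    Assumes the expression is increasing on [lo, hi] and crosses e there.
    """
    def gap(tau):
        return b * tau * exp(-a * tau) - e

    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gap(mid) < 0:
            lo = mid   # still below e, so the root lies to the right
        else:
            hi = mid   # at or above e, so the root lies to the left
    return (lo + hi) / 2

tau_max = critical_delay(a=-3, b=2)
print(tau_max)
```

The other inequality in the theorem, aτ < 1, holds automatically here because a is negative.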

Let’s solve our equation for the case τ = 0.4 using Mathematica.

tau = 0.4
solution = NDSolveValue[
    {x'[t] == -3 x[t] + 2 x[t - tau], x[t /; t <= 1] == t },
    x, {t, 0, 10}]
Plot[solution[t], {t, 0, 10}, PlotRange -> All]

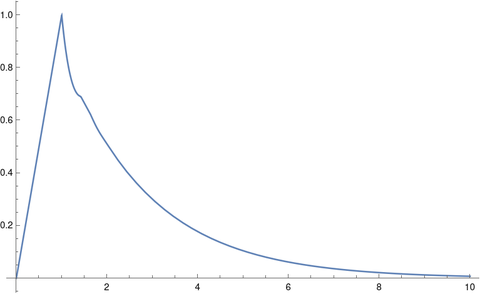

This produces the following plot.

The solution initially ramps up to 1, because that’s what we specified, but it seems that eventually the solution monotonically decays to 0, just as when τ = 0.

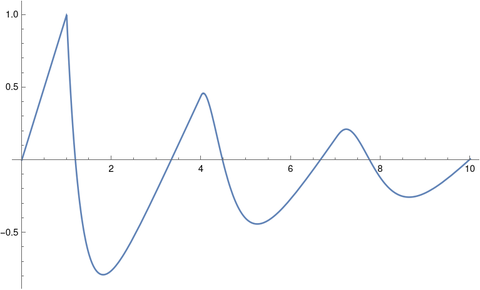

When we change the delay to τ = 3 and rerun the code we get oscillations.
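For readers without Mathematica, here is a rough Python sketch of the same experiment. It is not what NDSolveValue does internally: it swaps in a fixed-step forward Euler scheme with linear interpolation of the stored solution, and it assumes the integration starts at t = 1 with history x(t) = t for t ≤ 1. The function name `solve_delay_ode` and the step size are choices made for this illustration.

```python
def solve_delay_ode(a, b, tau, history, t0, t1, h=1e-3):
    """Forward Euler for x'(t) = a x(t) + b x(t - tau) on [t0, t1].

    history(t) supplies x(t) for t <= t0. Assumes tau >= h.
    """
    n = round((t1 - t0) / h)
    ts = [t0 + i * h for i in range(n + 1)]
    xs = [history(t0)]
    for i in range(n):
        s = ts[i] - tau                 # the lagged time
        if s <= t0:
            lagged = history(s)         # still inside the prescribed history
        else:
            # interpolate linearly between stored values of the solution
            j = min(int((s - t0) / h), len(xs) - 2)
            w = (s - t0) / h - j
            lagged = xs[j] + w * (xs[j + 1] - xs[j])
        xs.append(xs[i] + h * (a * xs[i] + b * lagged))
    return ts, xs

ts, small = solve_delay_ode(-3, 2, 0.4, lambda t: t, 1.0, 10.0)
ts, large = solve_delay_ode(-3, 2, 3.0, lambda t: t, 1.0, 10.0)
print(min(small) > 0)   # small delay: the solution never changes sign
print(min(large) < 0)   # large delay: the solution dips below zero
```

A sign change is only a crude proxy for oscillation, but it is enough to separate the two cases here.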

[1] R. D. Driver, D. W. Sasser, M. L. Slater. The Equation x′(t) = ax(t) + bx(t − τ) with “Small” Delay. The American Mathematical Monthly, Vol. 80, No. 9 (Nov., 1973), pp. 990–995.

The post Differential equation with a small delay first appeared on John D. Cook.

2026-03-02 02:15:16

After writing the previous post, I poked around in the bash shell documentation and found a handy feature I’d never seen before, the shortcut ~-.

I frequently use the command cd - to return to the previous working directory, but didn’t know about ~- as a shortcut for the shell variable $OLDPWD, which contains the name of the previous working directory.

Here’s how I will be using this feature now that I know about it. Fairly often I work in two directories, moving back and forth between them using cd -, and need to compare files in the two locations. If I have files in both directories with the same name, say notes.org, I can diff them by running

diff notes.org ~-/notes.org
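If you want to confirm that ~- and $OLDPWD agree on your system, the following Python sketch runs a short bash command in two throwaway directories and returns both values. The function name `tilde_dash_vs_oldpwd` is made up for this example, and the function returns None when no bash executable is found on the PATH.

```python
import shutil
import subprocess
import tempfile

def tilde_dash_vs_oldpwd():
    """Return (expansion of ~-, value of $OLDPWD) from bash, or None without bash."""
    bash = shutil.which("bash")
    if bash is None:
        return None
    with tempfile.TemporaryDirectory() as first, \
         tempfile.TemporaryDirectory() as second:
        # cd twice so that the first directory becomes the previous one
        script = 'cd "$1" && cd "$2" && printf "%s\\n%s\\n" ~- "$OLDPWD"'
        result = subprocess.run(
            [bash, "-c", script, "bash", first, second],
            capture_output=True, text=True, check=True,
        )
    tilde_dash, oldpwd = result.stdout.splitlines()
    return tilde_dash, oldpwd

print(tilde_dash_vs_oldpwd())
```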

I was curious why I’d never run into ~- before. Maybe it’s a relatively recent bash feature? No, it’s been there since bash was released in 1989. The feature was part of C shell before that, though not part of Bourne shell.

2026-03-01 02:20:37

I’ve never been good at shell scripting. I’d much rather write scripts in a general purpose language like Python. But occasionally a shell script can do something so simply that it’s worth writing a shell script.

Sometimes a shell scripting feature is terse and cryptic precisely because it solves a common problem succinctly. One example of this is working with file extensions.

For example, maybe you have a script that takes a source file name like foo.java and needs to do something with the class file foo.class. In my case, I had a script that takes a LaTeX file name and needs to create the corresponding DVI and SVG file names.

Here’s a little script to create an SVG file from a LaTeX file.

#!/bin/bash
latex "$1"
dvisvgm --no-fonts "${1%.tex}.dvi" -o "${1%.tex}.svg"

The pattern ${parameter%word} is a bash shell parameter expansion that removes the shortest match to the pattern word from the end of the expansion of parameter. So if $1 equals foo.tex, then

${1%.tex}

evaluates to

foo

and so

${1%.tex}.dvi

and

${1%.tex}.svg

expand to foo.dvi and foo.svg.
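For comparison, here is how the same name manipulation might look in Python. This is only a sketch: the function name `derived_names` is hypothetical, and it assumes Python 3.9 or later for `str.removesuffix`, which, like `${1%.tex}`, leaves the string unchanged when it does not end in .tex.

```python
def derived_names(tex_name):
    """Return the DVI and SVG file names corresponding to a LaTeX file name."""
    stem = tex_name.removesuffix(".tex")   # analog of ${1%.tex}
    return stem + ".dvi", stem + ".svg"

print(derived_names("foo.tex"))
```

The bash version is shorter, which is the point of the post, but the Python version makes the intent a little more explicit.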

You can get much fancier with shell parameter expansions if you’d like. See the documentation here.

The post Working with file extensions in bash scripts first appeared on John D. Cook.

2026-02-26 09:10:37

The post A curious trig identity contained the theorem that for real x and y,

This theorem also holds when sine is replaced with hyperbolic sine.

The post Trig of inverse trig contained a table summarizing trig functions applied to inverse trig functions. You can make a very similar table for the hyperbolic counterparts.

The following Python code doesn’t prove that the entries in the table are correct, but it likely would catch typos.

from math import *

# Print True when the two values agree to within 1e-12.
def compare(x, y):
    print(abs(x - y) < 1e-12)

# acosh requires x >= 1
for x in [2, 3]:
    compare(sinh(acosh(x)), sqrt(x**2 - 1))
    compare(cosh(asinh(x)), sqrt(x**2 + 1))
    compare(tanh(asinh(x)), x/sqrt(x**2 + 1))
    compare(tanh(acosh(x)), sqrt(x**2 - 1)/x)

# atanh requires |x| < 1
for x in [0.1, -0.2]:
    compare(sinh(atanh(x)), x/sqrt(1 - x**2))
    compare(cosh(atanh(x)), 1/sqrt(1 - x**2))

Related post: Rule for converting trig identities into hyperbolic identities

The post Hyperbolic versions of latest posts first appeared on John D. Cook.

2026-02-25 19:00:54

I ran across an old article [1] that gave a sort of multiplication table for trig functions and inverse trig functions. Here’s my version of the table.

I made a few changes from the original. First, I used LaTeX, which didn’t exist when the article was written in 1957. Second, I only include sin, cos, and tan; the original also included csc, sec, and cot. Third, I reversed the labels of the rows and columns. Each cell represents a trig function applied to an inverse trig function.

The third point requires a little elaboration. The table represents function composition, not multiplication, but is expressed in the format of a multiplication table. For the composition f( g(x) ), do you expect f to be on the side or top? It wouldn’t matter if the functions commuted under composition, but they don’t. I think it feels more conventional to put the outer function on the side; the author made the opposite choice.

The identities in the table are all easy to prove, so the results aren’t interesting so much as the arrangement. I’d never seen these identities arranged into a table before. The matrix of identities is not symmetric, but the 2 by 2 matrix in the upper left corner is because

sin(cos⁻¹(x)) = cos(sin⁻¹(x)).

The entries of the third row and third column are not symmetric, though they do have some similarities.

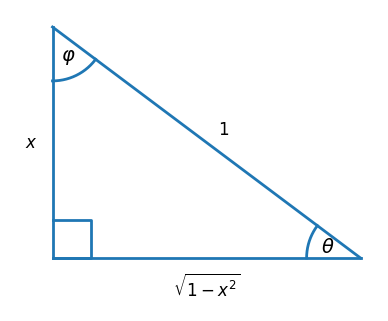

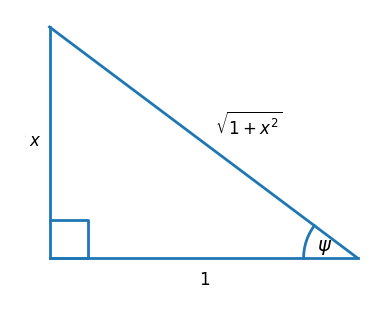

You can prove the identities in the sin, cos, and tan rows by focusing on the angles θ, φ, and ψ below respectively because θ = sin⁻¹(x), φ = cos⁻¹(x), and ψ = tan⁻¹(x). This shows that the square roots in the table above all fall out of the Pythagorean theorem.
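A quick numerical check can catch typos in identities like these. The Python sketch below tests the six off-diagonal compositions at a couple of points in (0, 1). The table itself is an image, so the right-hand sides here are the standard closed forms, assumed to match the table's entries; the function name `check_trig_table` is made up for this example.

```python
from math import sin, cos, tan, asin, acos, atan, sqrt, isclose

def check_trig_table(xs=(0.3, 0.7)):
    """Return True if each trig-of-inverse-trig identity holds at every x in xs."""
    ok = True
    for x in xs:
        r = sqrt(1 - x**2)   # leg of a right triangle with hypotenuse 1
        h = sqrt(1 + x**2)   # hypotenuse of a right triangle with legs 1 and x
        pairs = [
            (sin(acos(x)), r),
            (cos(asin(x)), r),
            (tan(asin(x)), x / r),
            (tan(acos(x)), r / x),
            (sin(atan(x)), x / h),
            (cos(atan(x)), 1 / h),
        ]
        ok = ok and all(isclose(lhs, rhs, rel_tol=1e-12) for lhs, rhs in pairs)
    return ok

print(check_trig_table())
```

As with any spot check, passing at a few points does not prove the identities; it only makes a transcription error unlikely.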

See the next post for the hyperbolic analog of the table above.

[1] G. A. Baker. Multiplication Tables for Trigonometric Operators. The American Mathematical Monthly, Vol. 64, No. 7 (Aug. – Sep., 1957), pp. 502–503.

The post Trig of inverse trig first appeared on John D. Cook.