2026-02-13 22:32:46

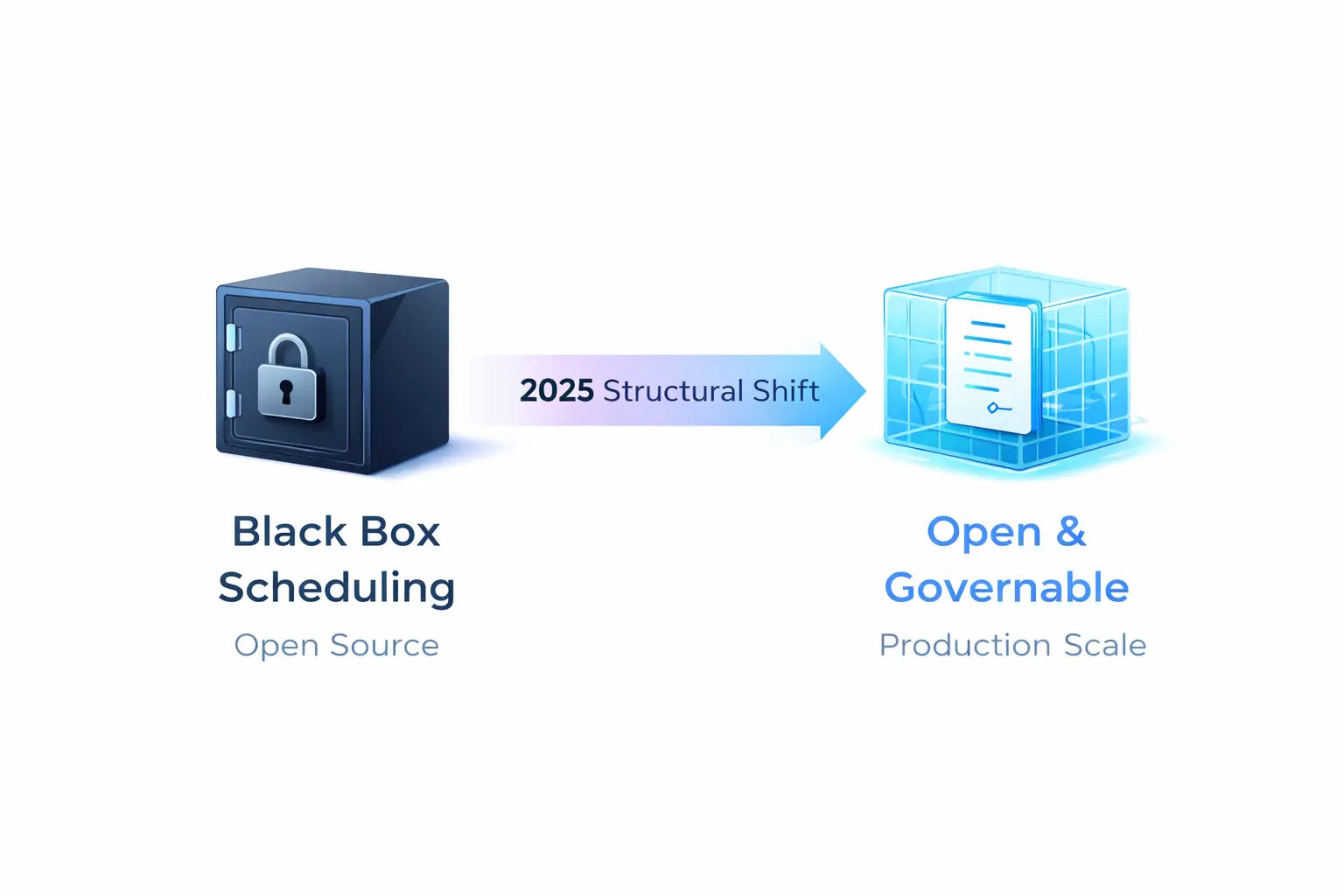

The future of GPU scheduling isn’t about whose implementation is more “black-box”—it’s about who can standardize device resource contracts into something governable.

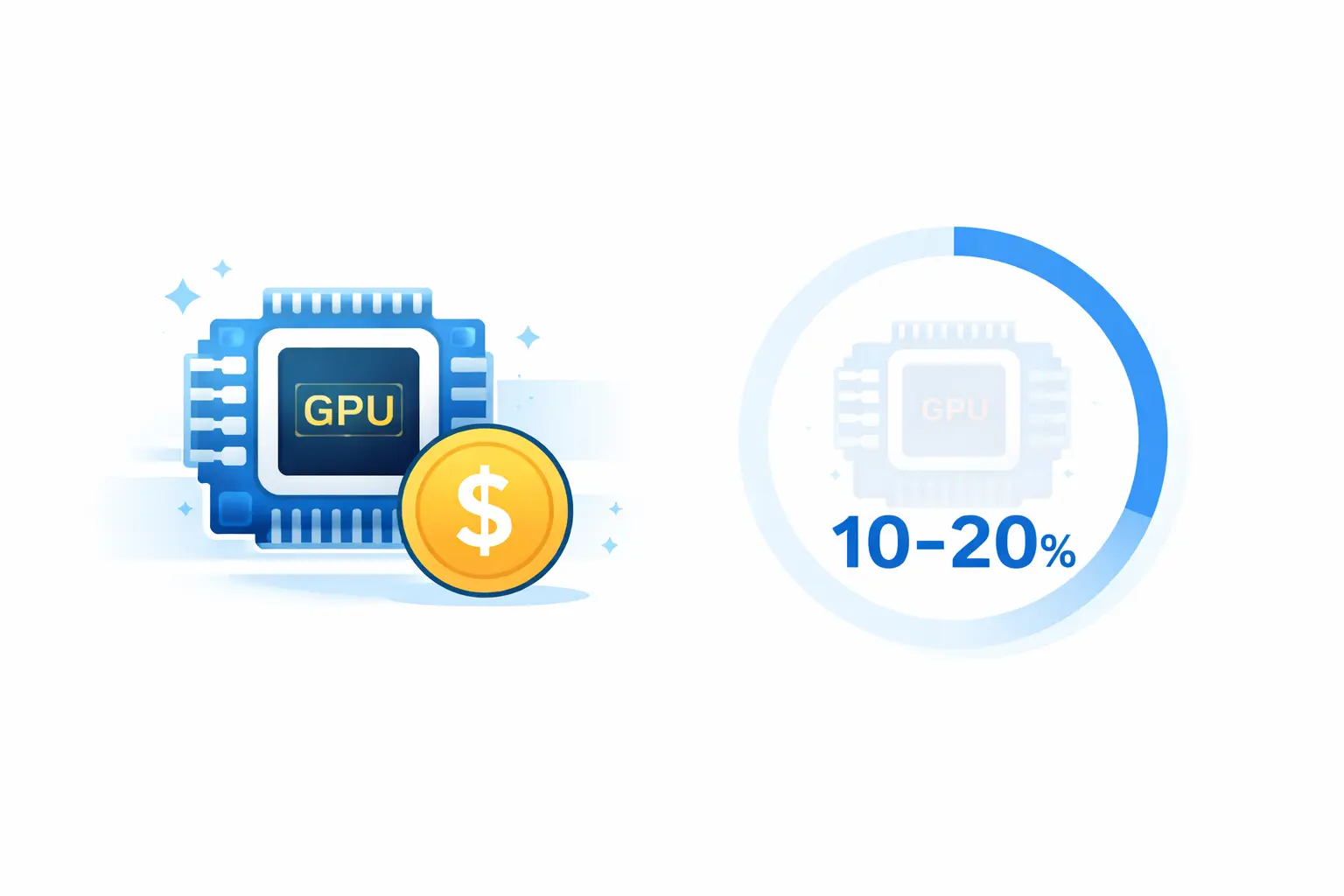

Have you ever wondered: why are GPUs so expensive, yet overall utilization often hovers around 10–20%?

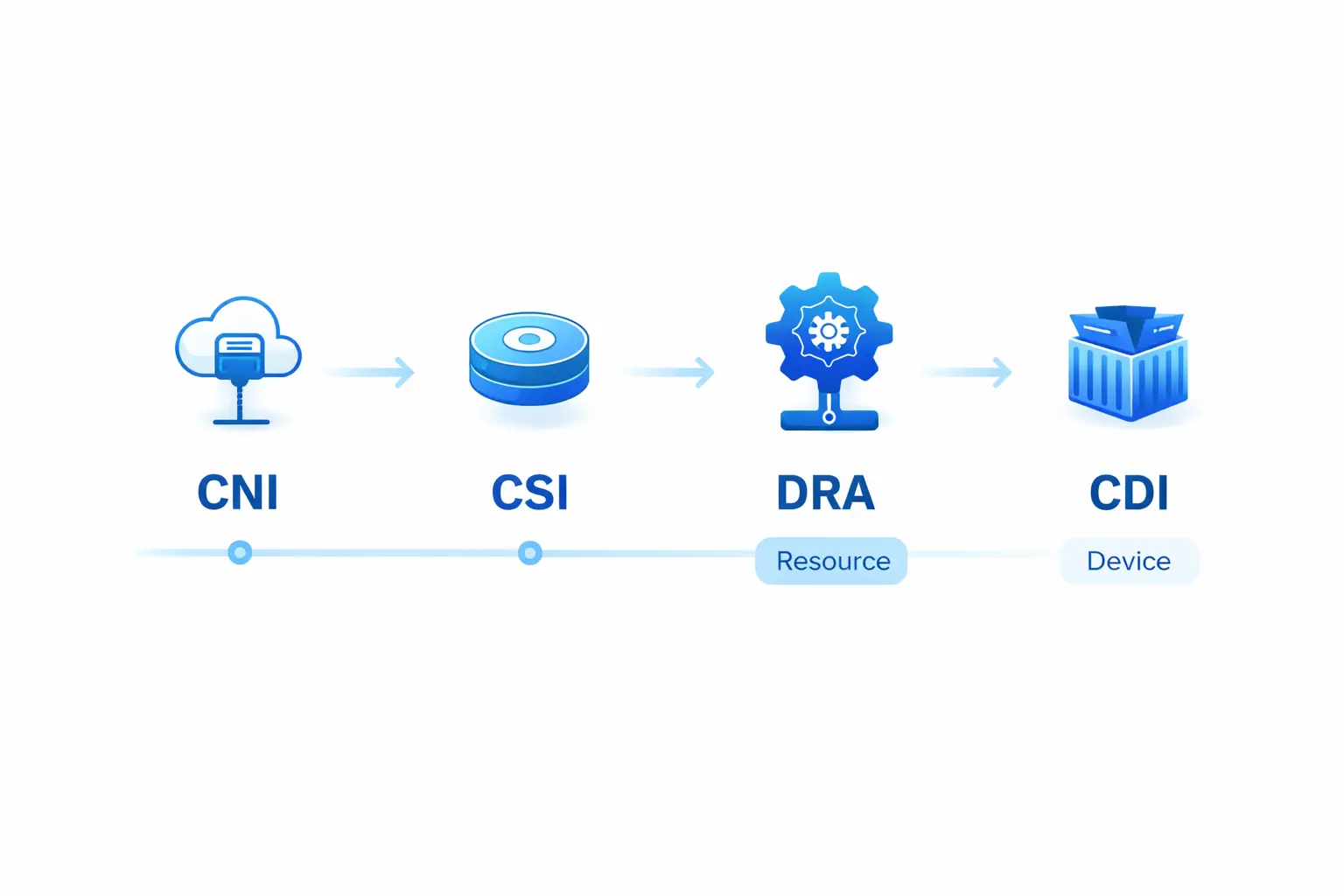

This isn’t a problem you solve with “better scheduling algorithms.” It’s a structural problem - GPU scheduling is undergoing a shift from “proprietary implementation” to “open scheduling,” similar to how networking converged on CNI and storage converged on CSI.

In the HAMi 2025 Annual Review, we noted: “HAMi 2025 is no longer just about GPU sharing tools—it’s a more structural signal: GPUs are moving toward open scheduling.”

By 2025, the signals of this shift became visible: Kubernetes Dynamic Resource Allocation (DRA) graduated to GA and became enabled by default, NVIDIA GPU Operator started defaulting to CDI (Container Device Interface), and HAMi’s production-grade case studies under CNCF are moving “GPU sharing” from experimental capability to operational excellence.

This post analyzes this structural shift from an AI Native Infrastructure perspective, and what it means for Dynamia and the industry.

In multi-cloud and hybrid cloud environments, GPU model diversity significantly amplifies operational costs. One large internet company’s platform spans H200/H100/A100/V100/4090 GPUs across five clusters. If you can only allocate “whole GPUs,” resource misalignment becomes inevitable.

“Open scheduling” isn’t a slogan—it’s a set of engineering contracts being solidified into the mainstream stack.

Before: GPUs were extended resources. The scheduler didn’t understand if they represented memory, compute, or device types.

Now: Kubernetes DRA provides objects like DeviceClass, ResourceClaim, and ResourceSlice. This lets drivers and cluster administrators define device categories and selection logic (including CEL-based selectors), while Kubernetes handles the full loop: match devices → bind claims → place Pods onto nodes with access to allocated devices.

Even more importantly, Kubernetes 1.34 stated that core APIs in the resource.k8s.io group graduated to GA, DRA became stable and enabled by default, and the community committed to avoiding breaking changes going forward. This means the ecosystem can invest with confidence in a stable, standard API.

Before: Device injection relied on vendor-specific hooks and runtime class patterns.

Now: The Container Device Interface (CDI) abstracts device injection into an open specification. NVIDIA’s Container Toolkit explicitly describes CDI as an open specification for container runtimes, and NVIDIA GPU Operator 25.10.0 defaults to enabling CDI on install/upgrade—directly leveraging runtime-native CDI support (containerd, CRI-O, etc.) for GPU injection.

This means “devices into containers” is also moving toward replaceable, standardized interfaces.

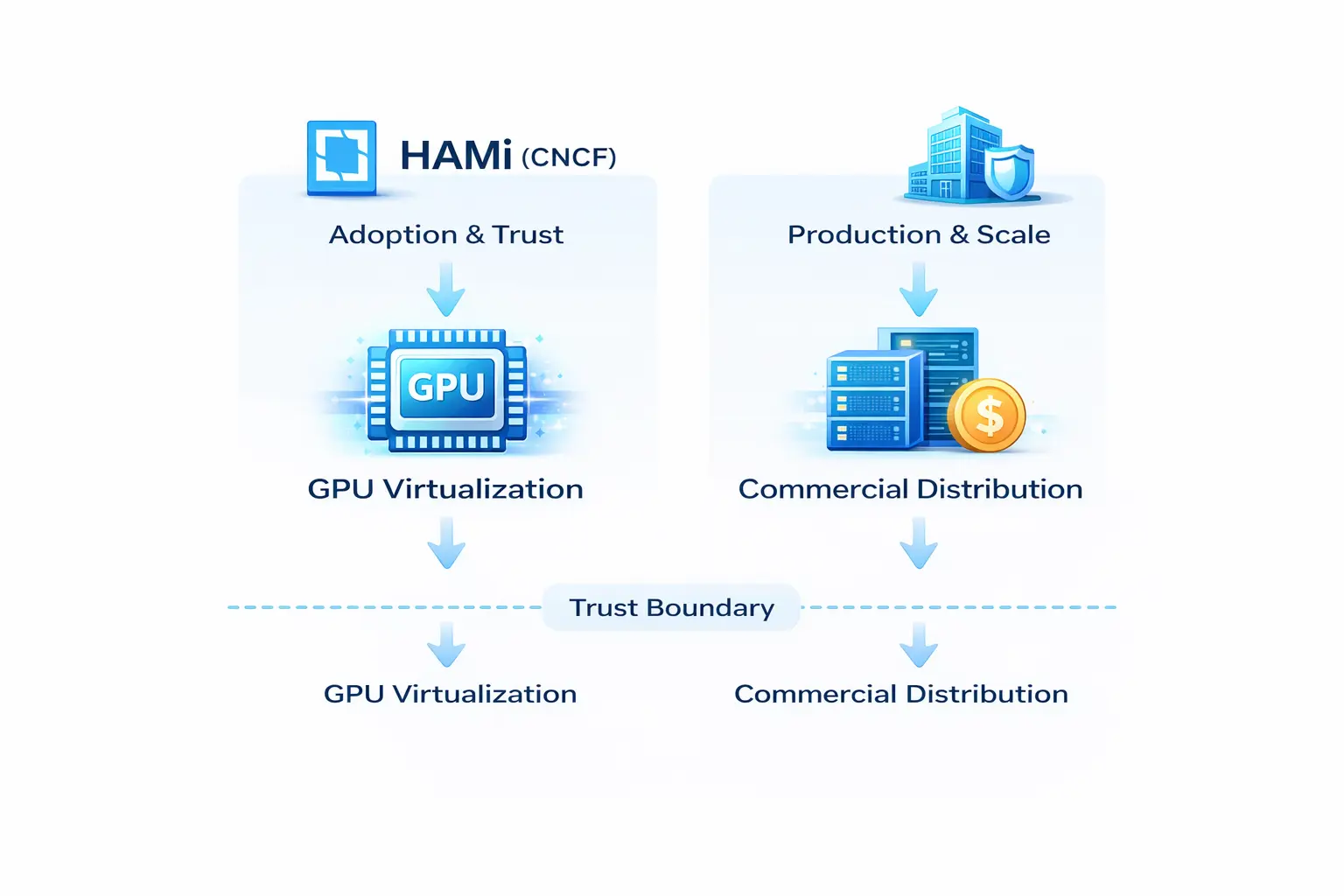

On this standardization path, HAMi’s role needs redefinition: it’s not about replacing Kubernetes—it’s about turning GPU virtualization and slicing into a declarative, schedulable, governable data plane.

HAMi’s core contribution expands the allocatable unit from “whole GPU integers” to finer-grained shares (memory and compute), forming a complete allocation chain:

This transforms “sharing” from ad-hoc “it runs” experimentation into engineering capability that can be declared in YAML, scheduled by policy, and validated by metrics.

HAMi’s scheduling doesn’t replace Kubernetes—it uses a Scheduler Extender pattern to let the native scheduler understand vGPU resource models:

This architecture positions HAMi naturally as an execution layer under higher-level “AI control planes” (queuing, quotas, priorities)—working alongside Volcano, Kueue, Koordinator, and others.

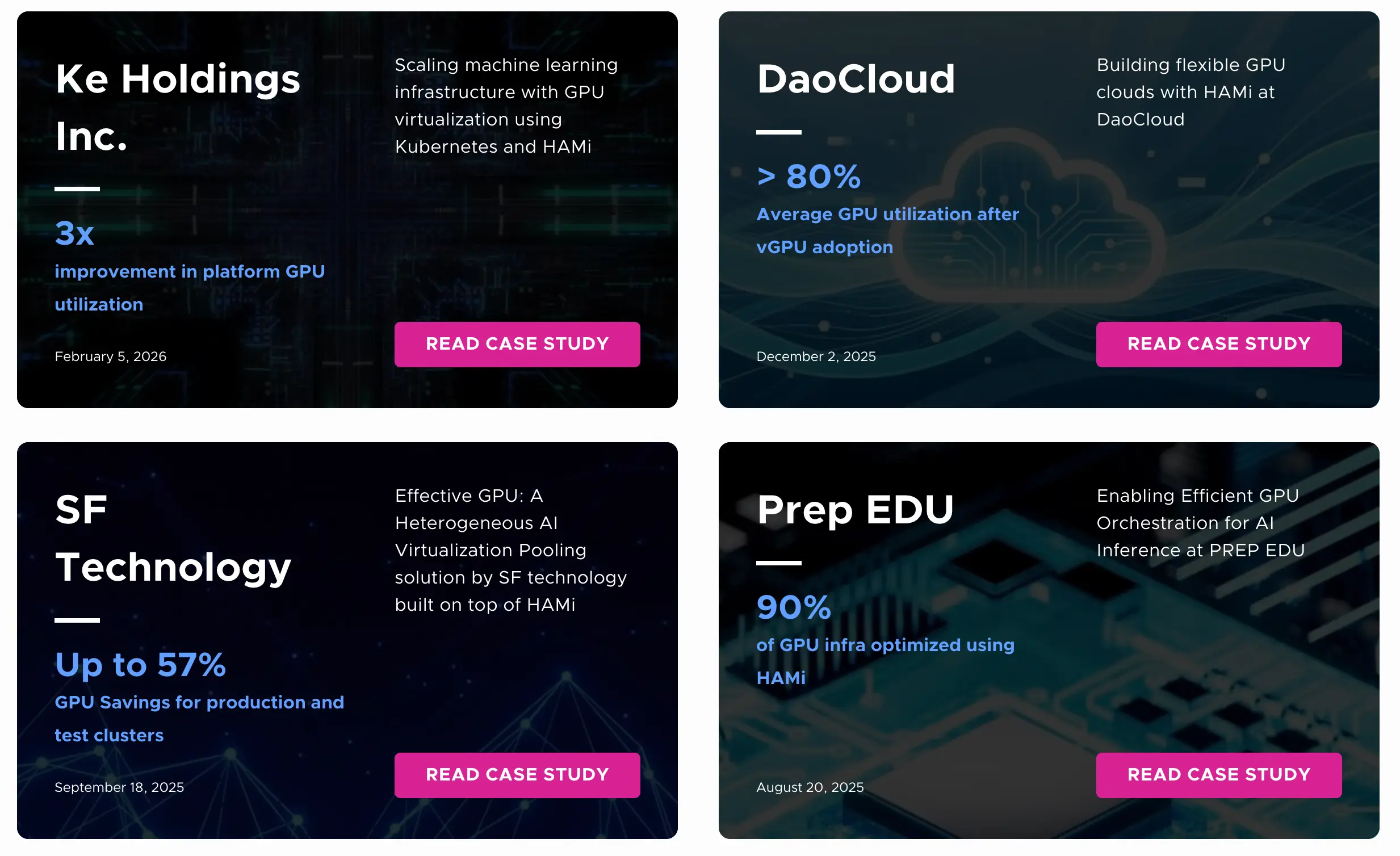

CNCF public case studies provide concrete answers: in a hybrid, multi-cloud platform built on Kubernetes and HAMi, 10,000+ Pods run concurrently, and GPU utilization improves from 13% to 37% (nearly 3×).

Here are highlights from several cases:

These cases demonstrate a consistent pattern: GPU virtualization becomes economically meaningful only when it participates in a governable contract—where utilization, isolation, and policy can be expressed, measured, and improved over time.

From Dynamia’s perspective (and as VP of Open Source Ecosystem), the strategic value of HAMi becomes clear:

This boundary is the foundation for long-term trust—project and company offerings remain separate, with commercial distributions and services built on the open source project.

The internal alignment memo recommends a bilingual approach:

First layer: Lead globally with “GPU virtualization / sharing / utilization” (Chinese can directly use “GPU virtualization and heterogeneous scheduling,” but English first layer should avoid “heterogeneous” as a headline)

Second layer: When users discuss mixed GPUs or workload diversity, introduce “heterogeneous” to confirm capability boundaries—never as the opening hook

Core anchor: Maintain “HAMi (project and community) ≠ company products” as the non-negotiable baseline for long-term positioning

DaoCloud’s case study already set vendor-agnostic and CNCF toolchain compatibility as hard constraints, framing vendor dependency reduction as a business and operational benefit—not just a technical detail. Project-HAMi’s official documentation lists “avoid vendor lock” as a core value proposition.

In this context, the right commercialization landing isn’t “closed-source scheduling”—it’s productizing capabilities around real enterprise complexity:

My strong judgment: over the next 2–3 years, GPU scheduling competition will shift from “whose implementation is more black-box” to “whose contract is more open.”

The reasons are practical:

These signals suggest that heterogeneity will grow: mixed accelerators, mixed clouds, mixed workload types.

Low-latency inference tiers (beyond just GPUs) will force resource scheduling toward “multi-accelerator, multi-layer cache, multi-class node” architectural design—scheduling must inherently be heterogeneous.

In this world, “open scheduling” isn’t idealism—it’s risk management. Building schedulable governable “control plane + data plane” combinations around DRA/CDI and other solidifying open interfaces, ones that are pluggable, multi-tenant governable, and co-evolvable with the ecosystem—this looks like the truly sustainable path for AI Native Infrastructure.

The next battleground isn’t “whose scheduling is smarter”—it’s “who can standardize device resource contracts into something governable.”

When you place HAMi 2025 back in the broader AI Native Infrastructure context, it’s no longer just the year of “GPU sharing tools”—it’s a more structural signal: GPUs are moving toward open scheduling.

The driving forces come from both ends:

For Dynamia, HAMi’s significance has transcended “GPU sharing tool”: it turns GPU virtualization and slicing into declarative, schedulable, measurable data planes—letting queues, quotas, priorities, and multi-tenancy actually close the governance loop.

2026-02-08 20:20:05

“The best way to learn AI is to start building. These resources will guide your journey.”

In my ongoing effort to keep the AI Resources list focused on production-ready tools and frameworks, I’ve removed 44 collection-type projects—courses, tutorials, awesome lists, and cookbooks.

These resources aren’t gone—they’ve been moved here. This post is a curated collection of those educational materials, organized by type and topic. Whether you’re a complete beginner or an experienced practitioner, you’ll find something valuable here.

My AI Resources list now focuses on concrete tools and frameworks—projects you can directly use in production. Collections, while valuable, serve a different purpose: education and discovery.

By separating them, I:

Awesome lists are community-curated collections of the best resources. They’re perfect for discovering new tools and staying updated.

Structured learning paths from universities and tech companies.

Machine Learning for Beginners

Practical code examples and recipes.

In-depth guides on specific topics.

Reusable templates and workflows.

Academic and evaluation resources.

System Prompts and Models of AI Tools

Agent frameworks and production tools remain in the AI Resources list, including:

These are functional tools you can use to build applications, not educational collections. They belong in the AI Resources list.

I removed 44 collection-type projects from the AI Resources list to keep it focused on production tools:

These resources remain incredibly valuable for learning and discovery. They just serve a different purpose than the production-focused tools in my AI Resources list.

Next Steps:

Acknowledgments: This collection was compiled during my AI Resources cleanup initiative. Special thanks to all the maintainers of these awesome lists, courses, and collections for their invaluable contributions to the AI community.

2026-02-08 16:00:00

“If I have seen further, it is by standing on the shoulders of giants.” — Isaac Newton

In the excitement surrounding LLMs, vector databases, and AI agents, it’s easy to forget that modern AI didn’t emerge from a vacuum. Today’s AI revolution stands upon decades of infrastructure work—distributed systems, data pipelines, search engines, and orchestration platforms that were built long before “AI Native” became a buzzword.

This post is a tribute to those traditional open source projects that became the invisible foundation of AI infrastructure. They’re not “AI projects” per se, but without them, the AI revolution as we know it wouldn’t exist.

| Era | Focus | Core Technologies | AI Connection |

|---|---|---|---|

| 2000s | Web Search & Indexing | Lucene, Elasticsearch | Semantic search foundations |

| 2010s | Big Data & Distributed Computing | Hadoop, Spark, Kafka | Data processing at scale |

| 2010s | Cloud Native | Docker, Kubernetes | Model deployment platforms |

| 2010s | Stream Processing | Flink, Storm, Pulsar | Real-time ML inference |

| 2020s | AI Native | Transformers, Vector DBs | Built on everything above |

Before we could train models on petabytes of data, we needed ways to store, process, and move that data.

GitHub: https://github.com/apache/hadoop

Hadoop democratized big data by making distributed computing accessible. Its HDFS filesystem and MapReduce paradigm proved that commodity hardware could process web-scale datasets.

Why it matters for AI:

GitHub: https://github.com/apache/kafka

Kafka redefined data streaming with its log-based architecture. It became the nervous system for real-time data flows in enterprises worldwide.

Why it matters for AI:

GitHub: https://github.com/apache/spark

Spark brought in-memory computing to big data, making iterative algorithms (like ML training) practical at scale.

Why it matters for AI:

Before RAG (Retrieval-Augmented Generation) became a buzzword, search engines were solving retrieval at scale.

GitHub: https://github.com/elastic/elasticsearch

Elasticsearch made full-text search accessible and scalable. Its distributed architecture and RESTful API became the standard for search.

Why it matters for AI:

GitHub: https://github.com/opensearch-project/opensearch

When AWS forked Elasticsearch, it ensured search infrastructure remained truly open. OpenSearch continues the mission of accessible, scalable search.

Why it matters for AI:

The evolution from relational databases to vector databases represents a paradigm shift—but both have AI relevance.

Why they matter for AI:

When Docker and Kubernetes emerged, they weren’t built for AI—but AI couldn’t scale without them.

GitHub: https://github.com/kubernetes/kubernetes

Kubernetes became the operating system for cloud-native applications. Its declarative API and controller pattern made it perfect for AI workloads.

Why it matters for AI:

Istio (2016), Knative (2018) - Service mesh and serverless platforms that proved:

Why they matter for AI:

API gateways weren’t designed for AI, but they became the foundation of AI Gateway patterns.

These API gateways solved rate limiting, auth, and routing at scale. When LLMs emerged, the same patterns applied:

AI Gateway Evolution:

Traditional API Gateway (2010s)

↓

Rate Limiting → Token Bucket Rate Limiting

Auth → API Key + Organization Management

Routing → Model Routing (GPT-4 → Claude → Local Models)

Observability → LLM-specific Telemetry (token usage, cost)

↓

AI Gateway (2024)

Why they matter for AI:

Data engineering needs pipelines. ML engineering needs pipelines. AI agents need workflows.

GitHub: https://github.com/apache/airflow

Airflow made pipeline orchestration accessible with its DAG-based approach. It became the standard for ETL and data engineering.

Why it matters for AI:

Modern workflow platforms that evolved from Airflow’s foundations:

Why they matter for AI:

Before we could train on massive datasets, we needed formats that supported ACID transactions and schema evolution.

These table formats brought reliability to data lakes:

Why they matter for AI:

What do all these projects have in common?

Modern “AI Native” infrastructure didn’t replace these projects—it builds on them:

| Traditional Project | AI Native Evolution | Example |

|---|---|---|

| Hadoop HDFS | Distributed model storage | HDFS for datasets, S3 for checkpoints |

| Kafka | Real-time feature pipelines | Kafka → Feature Store → Model Serving |

| Spark ML | Distributed ML training | MLlib → PyTorch Distributed |

| Elasticsearch | Vector search | ES → Weaviate/Qdrant/Milvus |

| Kubernetes | ML orchestration | K8s → Kubeflow/KServe |

| Istio | AI Gateway service mesh | Istio → LLM Gateway with mTLS |

| Airflow | ML pipeline orchestration | Airflow → Prefect/Flyte for ML |

This post honors these projects, but we’re also removing them from our AI Resources list. Here’s why:

They’re not “AI Projects”—they’re foundational infrastructure.

But their absence doesn’t diminish their importance.

By removing them, we acknowledge that:

The next time you:

Remember: You’re standing on the shoulders of Hadoop, Kafka, Elasticsearch, Kubernetes, and countless others. They built the roads we now drive on.

Just as Hadoop and Kafka enabled modern AI, today’s AI infrastructure will become tomorrow’s foundation:

The cycle continues. The giants of today will be the foundations of tomorrow.

As we clean up our AI Resources list to focus on AI-native projects, we don’t forget where we came from. Traditional big data and cloud native infrastructure made the AI revolution possible.

To the Hadoop committers, Kafka maintainers, Kubernetes contributors, and all who built the foundation: Thank you.

Your work enabled ChatGPT, enabled Transformers, enabled everything we now call “AI.”

Standing on your shoulders, we see further.

Acknowledgments: This post was inspired by the need to refactor our AI Resources list. The 27 projects mentioned here are being removed—not because they’re unimportant, but because they deserve their own category: The Foundation.

2026-02-06 20:56:35

Time flies—it’s already been a month since I joined Dynamia. In this article, I want to share my observations from this past month: why AI Native Infra is a direction worth investing in, and some considerations for those thinking about their own career or technical direction.

After nearly five years of remote work, I officially joined Dynamia last month as VP of Open Source Ecosystem. This decision was not sudden, but a natural extension of my journey from cloud native to AI Native Infra.

But this article is not just about my personal choice. I want to answer a more universal question: In the wave of AI infrastructure startups, why is compute governance a direction worth investing in?

For the past decade, I have worked continuously in the infrastructure space: from Kubernetes to Service Mesh, and now to AI Infra. I am increasingly convinced that the core challenge in the AI era is not “can the model run,” but “can compute resources be run efficiently, reliably, and in a controlled manner.” This conviction has only grown stronger through my observations and reflections during this first month at Dynamia.

This article answers three questions: What is AI Native Infra? Why is GPU virtualization a necessity? Why did I choose Dynamia and HAMi?

The core of AI Native Infrastructure is not about adding another platform layer, but about redefining the governance target: expanding from “services and containers” to “model behaviors and compute assets.”

I summarize it as three key shifts:

In essence, AI Native Infra is about upgrading compute governance from “resource allocation” to “sustainable business capability.”

Many teams focus on model inference optimization, but in production, enterprises first encounter the problem of “underutilized GPUs.” This is where GPU virtualization delivers value.

In short: GPUs must not only be allocatable, but also splittable, isolatable, schedulable, and governable.

This is the most frequently asked question. Here is the shortest answer:

Open source projects are not the same as company products, but the two evolve together. HAMi drives industry adoption and technical trust, while Dynamia brings these capabilities into enterprise production environments at scale. This “dual engine” approach is what makes Dynamia unique.

HAMi (Heterogeneous AI Computing Virtualization Middleware) delivers three key capabilities on Kubernetes:

Currently, HAMi has attracted over 360 contributors from 16 countries, with more than 200 enterprise end users, and its international influence continues to grow.

AI infrastructure is experiencing a new wave of startups. The vLLM team’s company raised $150 million, SGLang’s commercial spin-off RadixArk is valued at $4 billion, and Databricks acquired MosaicML for $1.3 billion—all pointing to a consensus: Whoever helps enterprises run large models more efficiently and cost-effectively will hold the keys to next-generation AI infrastructure.

Against this backdrop, the positioning of Dynamia and HAMi is even clearer. Many teams focus on “model performance acceleration” and “inference optimization” (like vLLM, SGLang), while we focus on “resource scheduling and virtualization”—enabling better orchestration of existing accelerated hardware resources.

The two are complementary: the former makes individual models run faster and cheaper, while the latter ensures that compute allocation at the cluster level is efficient, fair, and controllable. This is similar to extending Kubernetes’ CPU/memory scheduling philosophy to GPU and heterogeneous compute management in the AI era.

My observations this month have convinced me that compute governance is the most undervalued yet most promising area in AI infrastructure. If you are considering a career or technical investment, here is my assessment:

First, this is a real and urgent pain point

Model training and inference optimization attract a lot of attention, but in production, enterprises first encounter the problem of “underutilized GPUs”—structural idleness, scheduling failures, fragmentation waste, and vendor lock-in anxiety. Without solving these problems, even the fastest models cannot scale in production. GPU virtualization and heterogeneous compute scheduling are the “infrastructure below infrastructure” for enterprise AI transformation.

Second, this is a clear long-term track

Frameworks like vLLM and SGLang emerge constantly, making individual models run faster. But who ensures that compute allocation at the cluster level is efficient, fair, and controllable? This is similar to extending Kubernetes’ success in CPU/memory scheduling to GPU and heterogeneous compute management in the AI era. This is not something that can be finished in a year or two, but a direction for continuous construction over the next five to ten years.

Third, this is an open and verifiable path

Dynamia chose to build on HAMi as an open source foundation, first solving general capabilities, then supporting enterprise adoption. This means the technical direction is transparent and verifiable in the community. You can form your own judgment by participating in open source, observing adoption, and evaluating the ecosystem—rather than relying on the black-box promises of proprietary solutions.

Fourth, this is a window of opportunity that is opening now

AI infrastructure is being redefined. Investing in its construction today will continue to yield value in the coming years. The vLLM team’s company raised $150 million, SGLang’s commercial spin-off RadixArk is valued at $4 billion, Databricks acquired MosaicML for $1.3 billion—all validating the same trend: Whoever helps enterprises run large models more efficiently will hold the keys to next-generation AI infrastructure.

I hope to bring my experience in cloud native and open source communities to the next stage of HAMi and Dynamia: turning GPU resources from a “cost center” into an “operational asset.” This is not just my career choice, but my judgment and investment in the direction of next-generation infrastructure.

jimmysong) to join the HAMi community focused on GPU virtualization and heterogeneous compute scheduling.

If you are also interested in HAMi, GPU virtualization, AI Native Infra, or Dynamia, feel free to reach out.

From cloud native to AI Native Infra, my observations this month have only strengthened my conviction: The true upper limit of AI applications is determined by the infrastructure’s ability to govern compute resources.

HAMi addresses the fundamental issues of GPU virtualization and heterogeneous compute scheduling, while Dynamia is driving these capabilities into large-scale production. If you are also looking for a technical direction worth long-term investment, AI Native Infra—especially compute governance and scheduling—is a track with real pain points, a clear path, an open ecosystem, and an opening window of opportunity.

Joining Dynamia is not just a career choice, but a commitment to building the next generation of infrastructure. I hope the observations and reflections in this article can provide some reference for you as you evaluate technical directions and career opportunities.

If you are also interested in HAMi, GPU virtualization, AI Native Infra, or Dynamia, feel free to reach out.

2026-01-20 15:51:36

The role of Spec is undergoing a fundamental transformation, becoming the governance anchor of engineering systems in the AI era.

From first principles, software engineering has always been about one thing: stably, controllably, and reproducibly transforming human intent into executable systems.

Artificial Intelligence (AI) does not change this engineering essence, but it dramatically alters the cost structure:

Therefore, in the era of Agent-Driven Development (ADD), the core issue is not “can agents do the work,” but how to maintain controllability and intent preservation in engineering systems under highly autonomous agents.

Many attribute the “explosion” of ADD to more mature multi-agent systems, stronger models, or more automated tools. In reality, the true structural inflection point arises only when these three conditions are met:

Agents have acquired multi-step execution capabilities

With frameworks like LangChain, LangGraph, and CrewAI, agents are no longer just prompt invocations, but long-lived entities capable of planning, decomposition, execution, and rollback.

Agents are entering real enterprise delivery pipelines

Once in enterprise R&D, the question shifts from “can it generate” to “who approved it, is it compliant, can it be rolled back.”

Traditional engineering tools lack a control plane for the agent era

Tools like Git, CI, and Issue Trackers were designed for “human developer collaboration,” not for “agent execution.”

When these three factors converge, ADD inevitably shifts from an “efficiency tool” to a “governance system.”

In the context of ADD, Spec is undergoing a fundamental shift:

Spec is no longer “documentation for humans,” but “the source of constraints and facts for systems and agents to execute.”

Spec now serves at least three roles:

Verifiable expression of intent and boundaries

Requirements, acceptance criteria, and design principles are no longer just text, but objects that can be checked, aligned, and traced.

Stable contracts for organizational collaboration

When agents participate in delivery, verbal consensus and tacit knowledge quickly fail. Versioned, auditable artifacts become the foundation of collaboration.

Policy surface for agent execution

Agents can write code, modify configurations, and trigger pipelines. Spec must become the constraint on “what can and cannot be done.”

From this perspective, the status of Spec is approaching that of the Control Plane in AI-native infrastructure.

In recent systems (such as APOX and other enterprise products), an industry consensus is emerging:

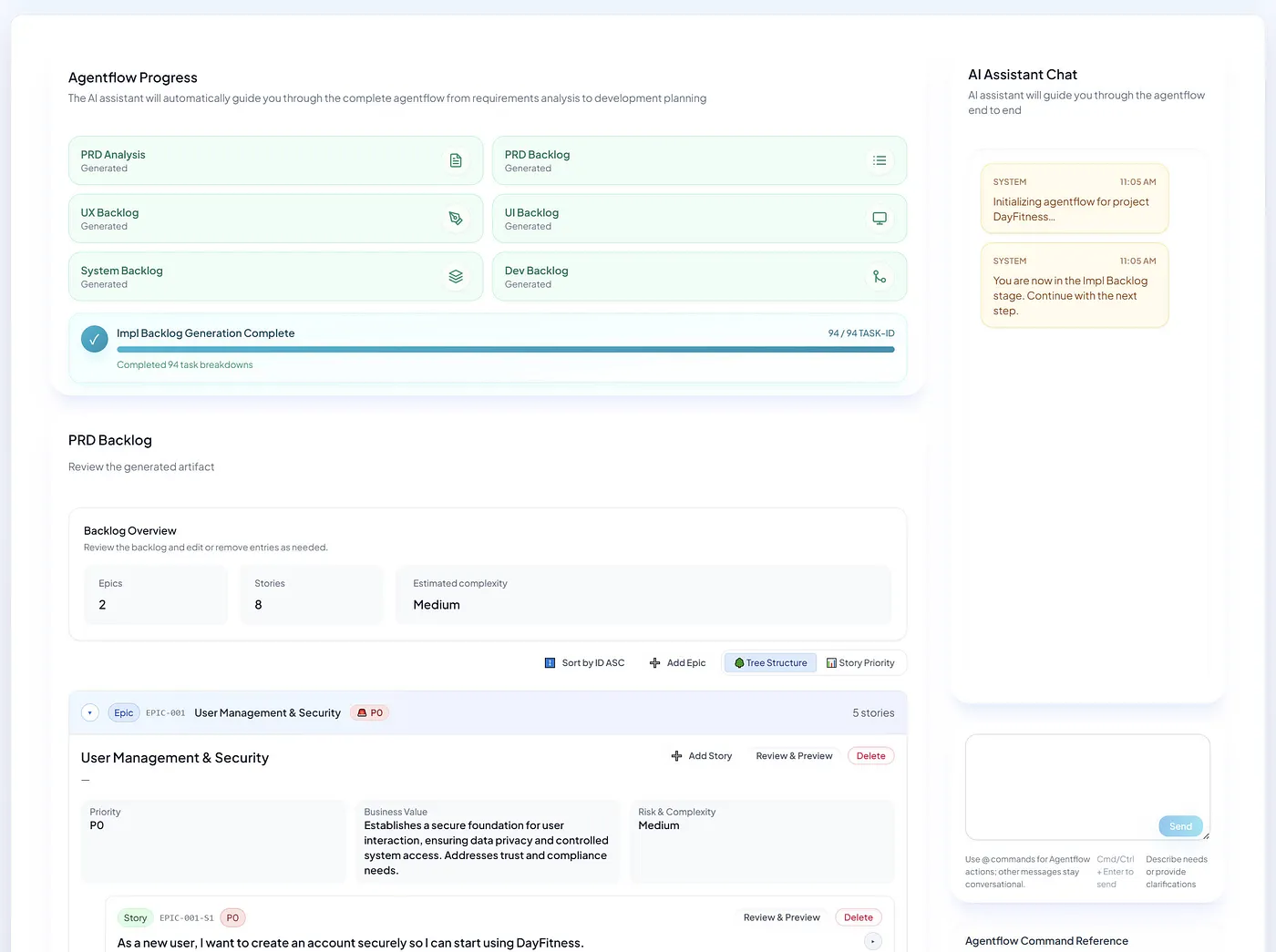

APOX (AI Product Orchestration eXtended) is a multi-agent collaboration workflow platform for enterprise software delivery. Its core goals are:

APOX is not about simply speeding up code generation, but about elevating “Spec” from auxiliary documentation to a verifiable, constrainable, and traceable core asset in engineering—building a control plane and workflow governance system suitable for Agent-Driven Development.

Such systems emphasize:

This is not about “smarter AI,” but about engineering systems adapting to the agent era.

This is not to devalue code, but to acknowledge reality:

In the ADD era, the value of Spec is reflected in:

Code will be rewritten again and again; Spec is the long-term asset.

ADD also faces significant risks:

Can Spec become a Living Spec

That is, when key implementation changes occur, can the system detect “intent changes” and prompt Spec updates, rather than allowing silent drift?

Can governance achieve low friction but strong constraints

If gates are too strict, teams will bypass them; if too loose, the system loses control.

These two factors determine whether ADD is “the next engineering paradigm” or “just another tool bubble.”

From a broader perspective, ADD is the inevitable result of engineering systems becoming “control planes”:

Engineering systems are evolving from “human collaboration tools” to “control systems for agent execution.”

In this structure:

This closely aligns with the evolution path of AI-native infrastructure.

The winners of the ADD era will not be the systems with “the most agents or the fastest generation,” but those that first upgrade Spec from documentation to a governable, auditable, and executable asset. As automation advances, the true scarcity is the long-term control of intent.

2026-01-18 14:53:08

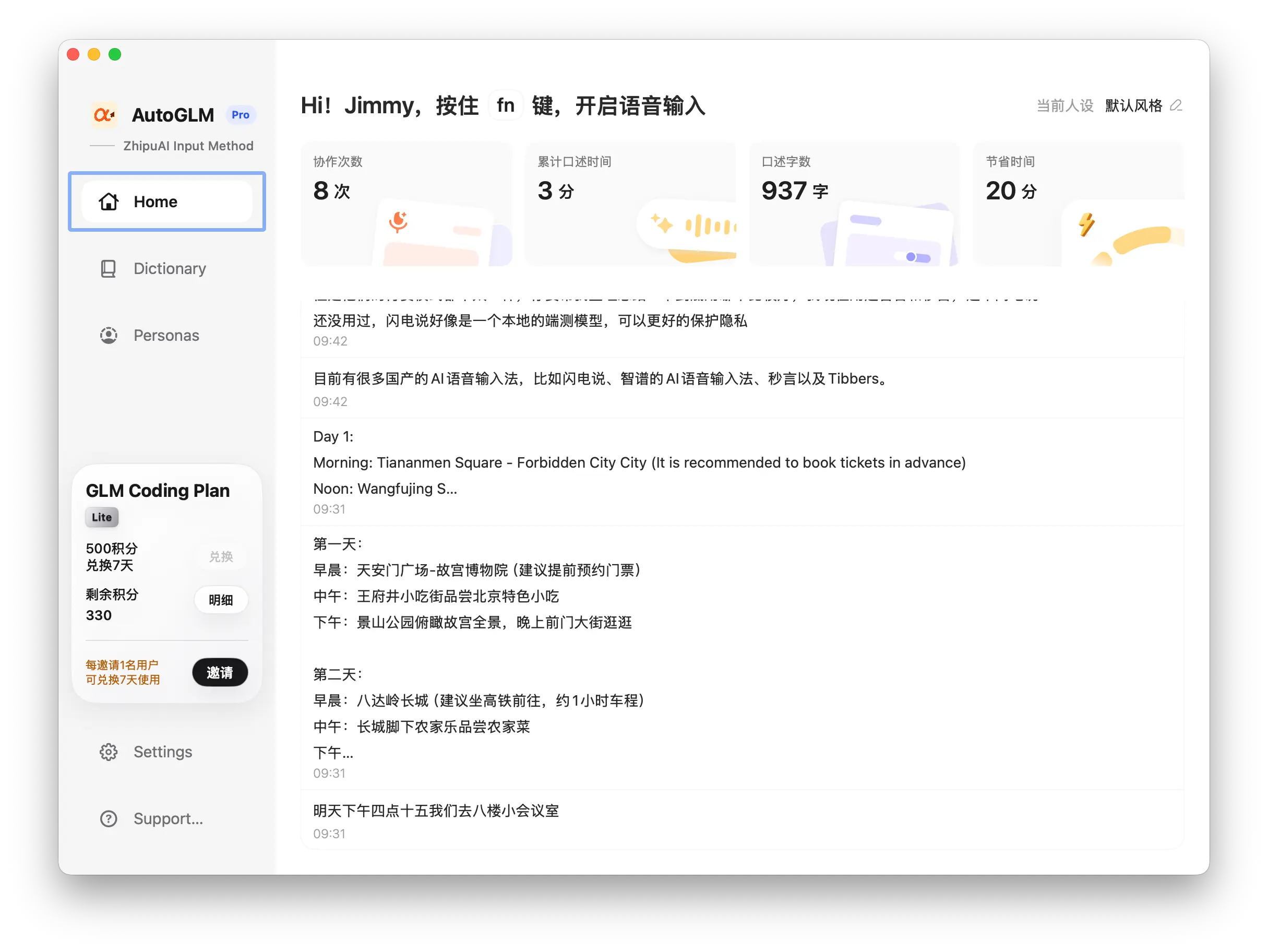

Voice input methods are not just about being “fast”—they are becoming a brand new gateway for developers to collaborate with AI.

I am increasingly convinced of one thing: PC-based AI voice input methods are evolving from mere “input tools” into the foundational interaction layer for the era of programming and AI collaboration.

It’s not just about typing faster—it determines how you deliver your intent to the system, whether you’re writing documentation, code, or collaborating with AI in IDEs, terminals, or chat windows.

Because of this, the differences in voice input method experiences are far more significant than they appear on the surface.

After long-term, high-frequency use, I have developed a set of criteria to assess the real-world performance of AI voice input methods:

Based on these criteria, I focused on comparing Miaoyan, Shandianshuo, and Zhipu AI Voice Input Method.

Miaoyan was the first domestic AI voice input method I used extensively, and it remains the one I am most willing to use continuously.

It’s important to clarify that Miaoyan’s command mode is not about editing text via voice. Instead:

You describe your need in natural language, and the system directly generates an executable command-line command.

This is crucial for developers:

This design is clearly focused on engineering efficiency, not office document polishing.

But there are some practical limitations:

From a product strategy perspective, it feels more like a “pure tool” than part of an ecosystem.

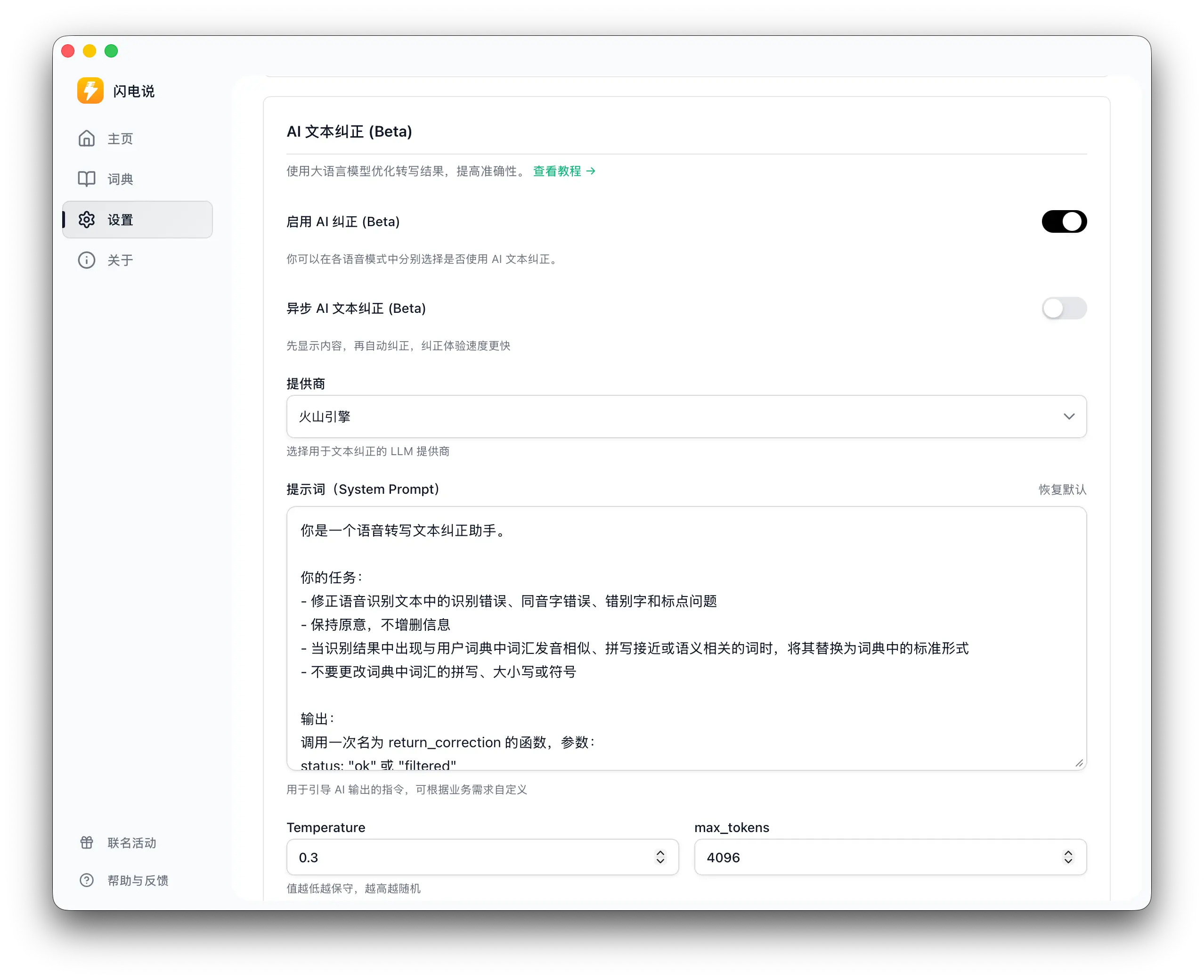

Shandianshuo takes a different approach: it treats voice input as a “local-first foundational capability,” emphasizing low latency and privacy (at least in its product narrative). The natural advantages of this approach are speed and controllable marginal costs, making it suitable as a “system capability” that’s always available, rather than a cloud service.

However, from a developer’s perspective, its upper limit often depends on “how you implement enhanced capabilities”:

If you only use it for basic transcription, the experience is more like a high-quality local input tool. But if you want better mixed Chinese-English input, technical term correction, symbol and formatting handling, the common approach is to add optional AI correction/enhancement capabilities, which usually requires extra configuration (such as providing your own API key or subscribing to enhanced features). The key trade-off here is not “can it be used,” but “how much configuration cost are you willing to pay for enhanced capabilities.”

If you want voice input to be a “lightweight, stable, non-intrusive” foundation, Shandianshuo is worth considering. But if your goal is to make voice input part of your developer workflow (such as command generation or executable actions), it needs to offer stronger productized design at the “command layer” and in terms of controllability.

I also thoroughly tested the Zhipu AI Voice Input Method.

Its strengths include:

But with frequent use, some issues stand out:

Although I prefer Miaoyan in terms of experience, Zhipu has a very practical advantage:

If you already subscribe to Zhipu’s programming package, the voice input method is included for free.

This means:

From a business perspective, this is a very smart strategy.

The following table compares the three products across key dimensions for quick reference.

| Dimension | Miaoyan | Shandianshuo | Zhipu AI Voice Input Method |

|---|---|---|---|

| Response Speed | Fast, nearly instant | Usually fast (local-first) | Slightly slower than Miaoyan |

| Continuous Stability | Stable | Depends on setup and environment | Very stable |

| Idle Misrecognition | Rare | Generally restrained (varies by version) | Obvious: outputs characters even if silent |

| Output Cleanliness/Control | High | More like an “input tool” | Occasionally messy |

| Developer Differentiator | Natural language → executable command | Local-first / optional enhancements | Ecosystem-attached capabilities |

| Subscription & Cost | Standalone, separate purchase | Basic usable; enhancements often require setup/subscription | Bundled free with programming package |

| My Current Preference | Best experience | More like a “foundation approach” | Easy to keep but not clean enough |

The switching cost for voice input methods is actually low: just a shortcut key and a habit of output.

What really determines whether users stick around is:

For me personally:

These points are not contradictory.

The competition among AI voice input methods is no longer about recognition accuracy, but about who can own the shortcut key you press every day.