2026-03-07 01:00:04

Welcome to HackerNoon’s Projects of the Week, where we spotlight standout projects from the Proof of Usefulness Hackathon, HackerNoon’s competition designed to measure what actually matters: real utility over hype.

Each week, we’ll highlight projects that demonstrate clear usefulness, technical execution, and real-world impact, backed by data, not buzzwords.

This week, we’re excited to share three projects that have proven their utility by solving concrete problems for real users: Black Market SSP, CutePetPal, and SudoDocs.


:::tip Want to see your own project spotlighted here?

Join the Proof of Usefulness Hackathon to get on our radar.

:::


Black Market SSP tracks black market and official currency exchange rates, plus daily petroleum production in South Sudan.

The project addresses a specific, high-utility problem in South Sudan regarding currency instability and petroleum tracking.

Black Market SSP exists because currency instability and the gap between official and parallel rates in South Sudan create an urgent need for transparent, real-time financial information for everyday citizens.
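The gap between official and parallel rates is usually reported as a parallel-market premium: the percentage by which the street rate exceeds the official one. A minimal sketch, assuming both rates are quoted in South Sudanese pounds per US dollar; the function name and the rates are hypothetical placeholders, not Black Market SSP data or code:

```python
def parallel_premium(official_rate: float, parallel_rate: float) -> float:
    """Percentage by which the parallel (black market) rate exceeds the official rate.

    Both rates are assumed to be quoted as SSP per US dollar.
    """
    if official_rate <= 0:
        raise ValueError("official_rate must be positive")
    return (parallel_rate - official_rate) / official_rate * 100


# Hypothetical rates for illustration only, not real market data.
print(round(parallel_premium(official_rate=4000.0, parallel_rate=5000.0), 1))  # 25.0
```

A premium near zero means the two markets agree; a widening premium is the kind of signal a citizen would want to see before exchanging money.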

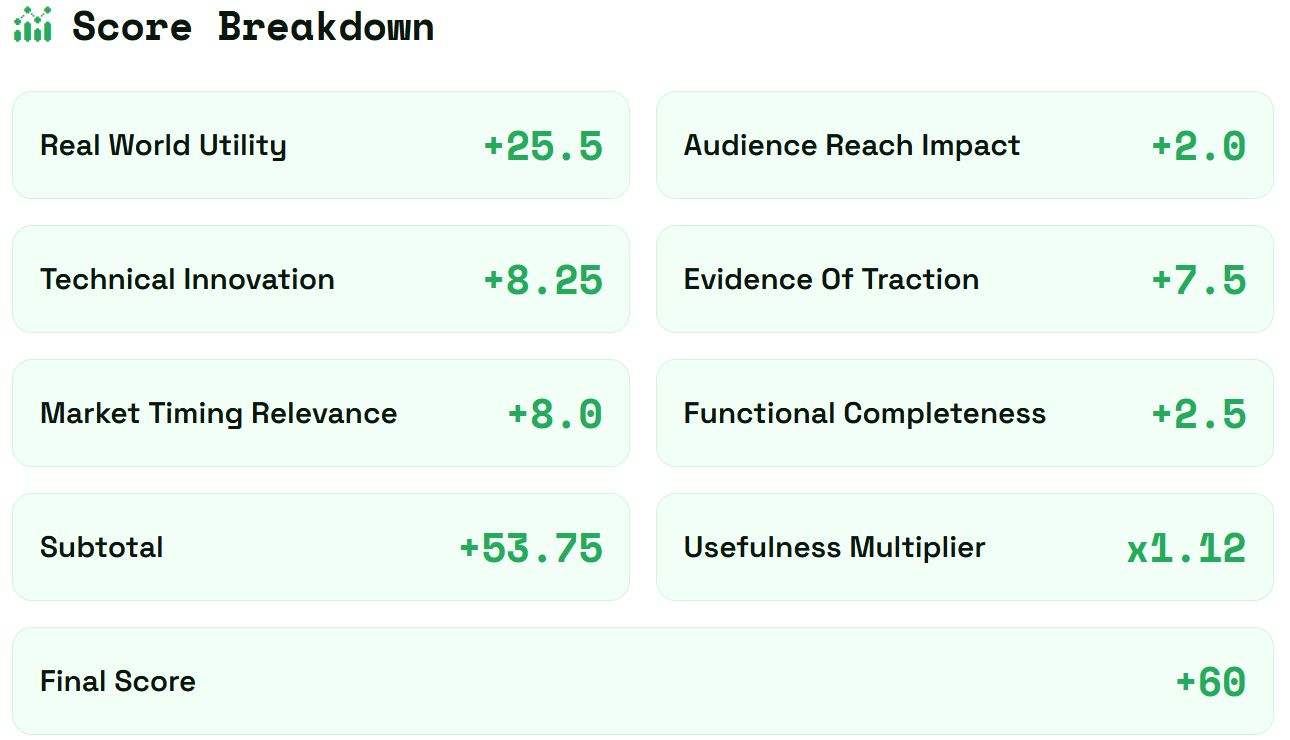

Proof of Usefulness score: +60 / 1000


:::tip See Black Market SSP’s full Proof of Usefulness report

Read their story on HackerNoon

:::

CutePetPal is an all-in-one pet care app that helps owners manage pet profiles, track health, set reminders for feeding, meds, and vet visits, and get emergency alerts.

It keeps pets’ information organized, monitors wellbeing, and ensures timely care with smart notifications and insights.

This project brings value to pet owners who want to keep their pets healthy, safe, and well cared for. It is especially useful for busy individuals or families who may forget feeding times, medication schedules, or vet appointments, as the app provides smart reminders and health tracking.
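Smart reminders of this kind reduce to a simple scheduling rule: a recurring task is due again once its interval has elapsed since it was last done. A minimal sketch, assuming a hypothetical `Reminder` model and `due_reminders` helper; CutePetPal’s actual data model and notification logic are not described in its summary:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reminder:
    """A recurring care task such as feeding, medication, or a vet visit (hypothetical model)."""
    pet: str
    task: str
    last_done: datetime
    every: timedelta

    def next_due(self) -> datetime:
        # The task is due again once its interval has elapsed since it was last done.
        return self.last_done + self.every


def due_reminders(reminders: list[Reminder], now: datetime) -> list[str]:
    """Return one message per reminder whose next due time is at or before `now`, earliest first."""
    return [
        f"{r.pet}: {r.task} (due {r.next_due():%Y-%m-%d %H:%M})"
        for r in sorted(reminders, key=Reminder.next_due)
        if r.next_due() <= now
    ]


now = datetime(2026, 3, 6, 18, 0)
reminders = [
    Reminder("Milo", "dinner", datetime(2026, 3, 6, 8, 0), timedelta(hours=8)),
    Reminder("Milo", "heartworm pill", datetime(2026, 2, 6, 9, 0), timedelta(days=30)),
    Reminder("Luna", "vet check-up", datetime(2025, 9, 1, 10, 0), timedelta(days=365)),
]
for message in due_reminders(reminders, now):
    print(message)  # prints "Milo: dinner (due 2026-03-06 16:00)"
```

Only the dinner reminder fires here: the heartworm pill is next due on March 8, and the check-up in September.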

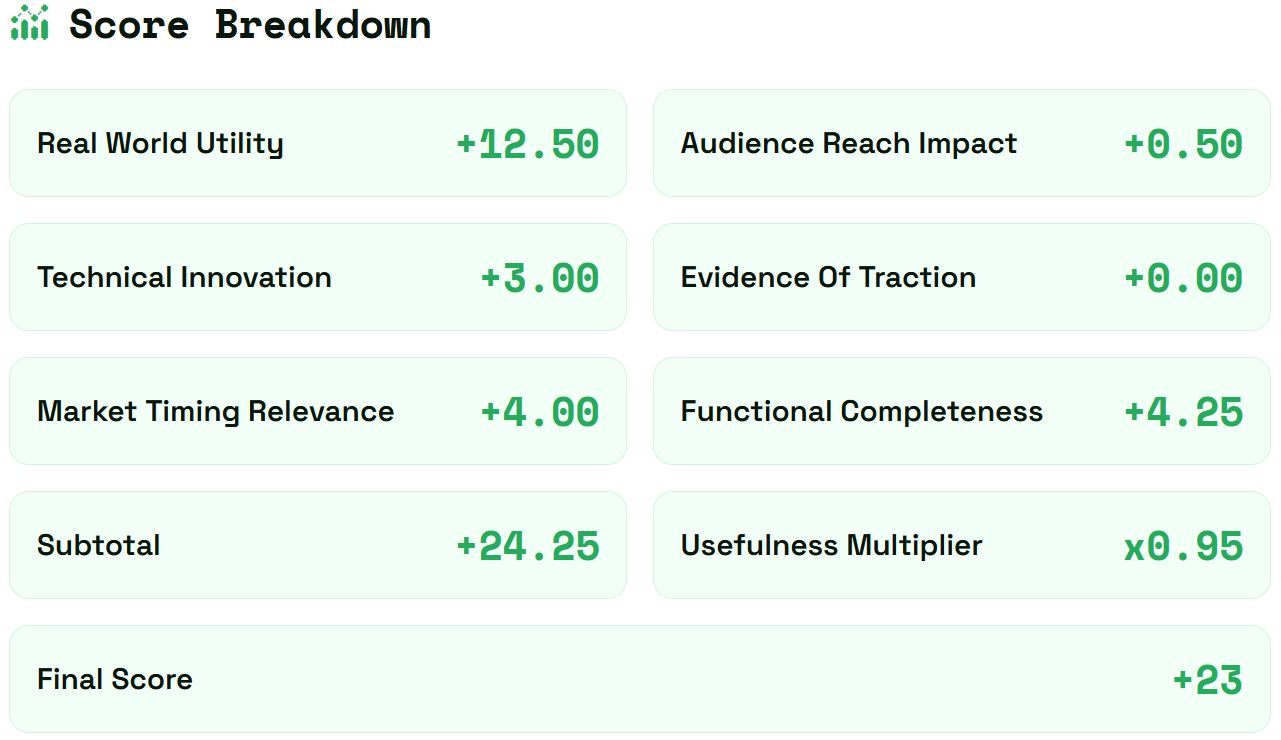

Proof of Usefulness score: +23 / 1000


:::tip See CutePetPal’s full Proof of Usefulness report

Read their story on HackerNoon

:::

SudoDocs is a docs-as-code tool that acts as a "unit test" for documentation: it connects to Git repositories and Jira to detect when code changes drift from the existing docs.

It uses Google Vertex AI (Gemini) to analyze semantic drift in code diffs and automatically drafts accurate updates, so documentation keeps pace with production code.

SudoDocs helps product teams quickly validate OpenAPI specifications and automatically correct mistakes in them, so technical writers, product owners, and software developers can rely on accurate documentation for their product.

SudoDocs exists because rapid release cycles are outpacing manual documentation updates, creating a critical need for automated solutions that treat documentation as code.
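To make the "unit test for documentation" idea concrete, here is a minimal sketch of one possible structural drift check: it compares the (method, path) pairs an application exposes with the operations documented in its OpenAPI spec. This is an illustration under stated assumptions, not SudoDocs’ implementation; the `doc_drift` helper is hypothetical, the route set is hard-coded where a real tool would extract it from the codebase or a Git diff, and SudoDocs additionally uses an LLM for semantic comparison:

```python
# HTTP methods an OpenAPI path item may define; other keys (summary, parameters, ...) are skipped.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

Route = tuple[str, str]  # (METHOD, path)


def doc_drift(code_routes: set[Route], openapi_spec: dict) -> dict[str, set[Route]]:
    """Compare routes found in code with operations documented in an OpenAPI spec."""
    documented = {
        (method.upper(), path)
        for path, item in openapi_spec.get("paths", {}).items()
        for method in item
        if method.lower() in HTTP_METHODS
    }
    return {
        "undocumented": code_routes - documented,  # in code, missing from the docs
        "stale": documented - code_routes,  # in the docs, gone from the code
    }


# Hypothetical inputs for illustration.
spec = {
    "openapi": "3.0.3",
    "paths": {
        "/users": {"get": {"summary": "List users"}, "post": {"summary": "Create a user"}},
        "/users/{id}": {"parameters": [], "get": {"summary": "Fetch a user"}},
    },
}
code_routes = {("GET", "/users"), ("POST", "/users"), ("GET", "/users/{id}"), ("DELETE", "/users/{id}")}

drift = doc_drift(code_routes, spec)
print(drift["undocumented"])  # {('DELETE', '/users/{id}')}
print(drift["stale"])  # set()
```

In a CI pipeline, the same comparison becomes the "unit test": fail the build whenever either set is non-empty.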

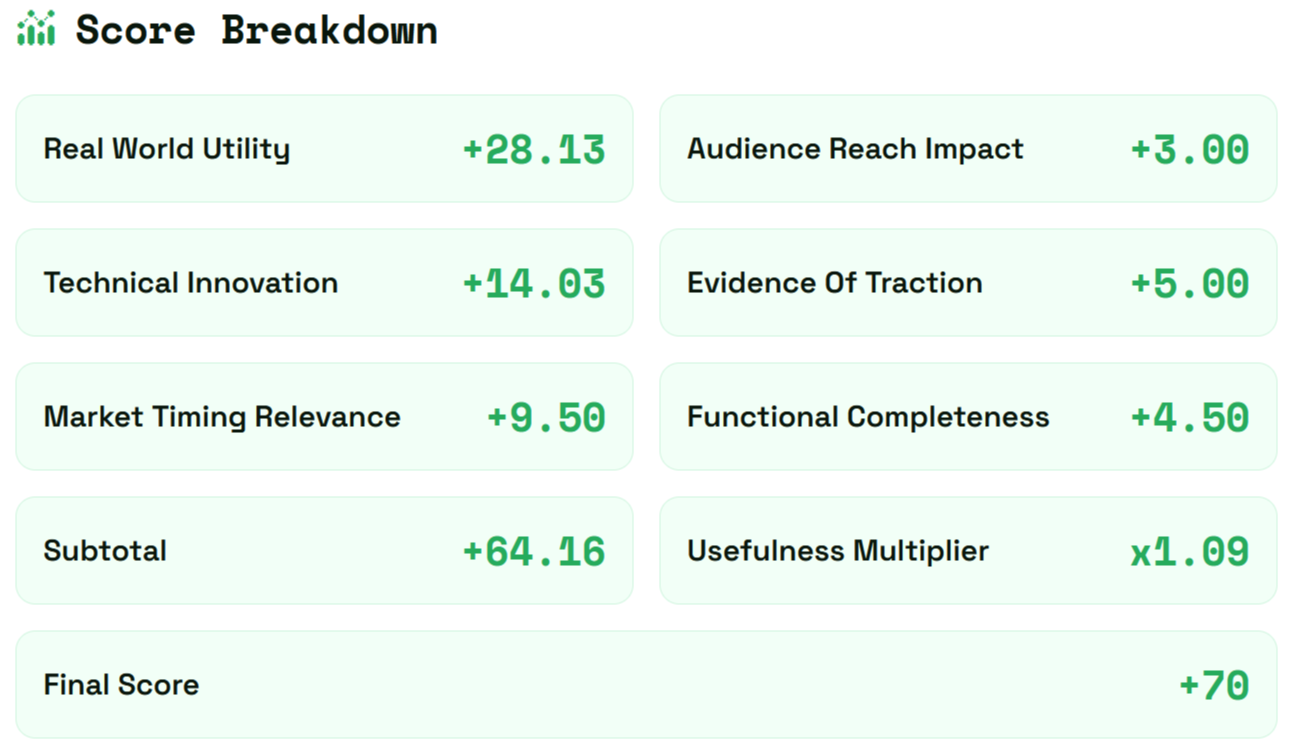

Proof of Usefulness score: +70 / 1000


:::tip See SudoDocs’ full Proof of Usefulness report

Read their story on HackerNoon

:::

The Proof of Usefulness Hackathon is our answer to a web drowning in vaporware and empty promises. We evaluate projects based on:

▪️ Real user adoption
▪️ Sustainable revenue
▪️ Technical stability
▪️ Genuine utility

Projects score from -100 to +1000. Top scorers compete for **$20K in cash and $130K+ in software credits.**

You’ll be in good company. The hackathon is backed by teams who ship production software for a living: Bright Data, Neo4j, Storyblok, Algolia, and HackerNoon.


:::warning P.S. The clock is ticking: only 3 months and 3 prize rounds remain! Don't leave money on the table; get in early!

:::


1. Get your free Proof of Usefulness score instantly
2. Your submission becomes a HackerNoon article (published within days)
3. Compete for monthly prizes
4. All participants get rewards

Complete guide on how to submit here.


:::tip 👉 Submit Your Project Now!

:::

Thanks for building useful things!

P.S. Submissions roll monthly through June 2026. Get in early!

2026-03-07 00:02:30

How are you, hacker?

🪐 What’s happening in tech today, March 6, 2026?

The HackerNoon Newsletter brings the HackerNoon homepage straight to your inbox. On this day in 1992, the Michelangelo virus struck, and we present you with these top-quality stories. From "Prompt Injection Still Beats Production LLMs" to "I Gave 5 Teams the Same Dashboard - Only 1 Made a Decision With It", let’s dive right in.

By @tanyadonska [ 5 Min read ] Your AI designer has never disagreed with you. That's not because your work is good. Here's what that silence actually costs. Read More.

By @jwolinsky [ 6 Min read ] Your ad spend stops working the moment you pause it. Editorial coverage doesn't. Here's why London founders are making the switch. Read More.

By @epappas [ 24 Min read ] Regex and classifiers caught known jailbreaks, but novel prompt injections slipped through. Here’s how a 3B safety judge helped. Read More.

By @anushakovi [ 8 Min read ] Build for the decision, not the data. If you can't name the specific decision a dashboard is supposed to support, you're building a museum exhibit. Read More.

🧑‍💻 What happened in your world this week?

It's been said that writing can help consolidate technical knowledge, establish credibility, and contribute to emerging community standards. Feeling stuck? We got you covered ⬇️⬇️⬇️

ANSWER THESE GREATEST INTERVIEW QUESTIONS OF ALL TIME

We hope you enjoy this wealth of free reading material. Feel free to forward this email to a nerdy friend who'll love you for it.

See you on Planet Internet! With love, The HackerNoon Team ✌️

2026-03-06 21:29:59

Senior engineers are often reluctant to approve code changes because they judge the changes too risky. The Veto Matrix: Risk vs. Reversibility is a framework for deciding when to pull the emergency brake and when to let the train keep moving.

2026-03-06 19:31:25

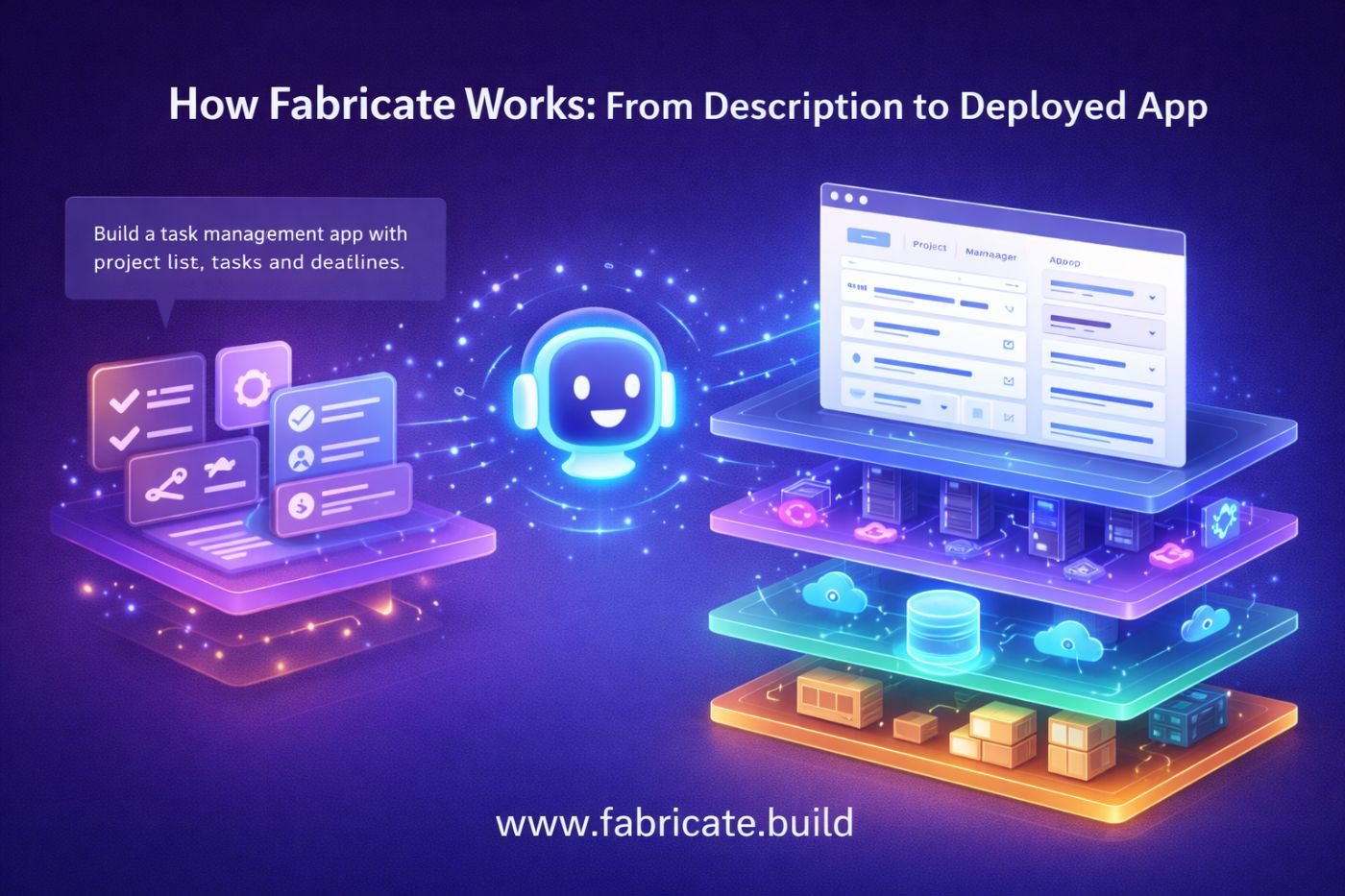

In 2026, you don’t need a developer, a designer, or a $50K budget to build a professional web application. You just need to describe what you want.

The idea of building a website without writing a single line of code is not new. Platforms like Wix, Squarespace, and WordPress have offered drag-and-drop builders for over a decade. But there has always been a ceiling. You could build a marketing site, maybe a simple blog, but the moment you needed a database, user accounts, or payment processing, you were back to hiring developers.

That ceiling no longer exists.

A new generation of AI-powered tools is making it possible to create fully functional web applications – complete with backends, databases, authentication, and payment systems – entirely through natural language. No templates. No drag-and-drop. No code.

Among these tools, Fabricate stands out for one reason: it generates the entire application stack, not just the frontend.


The phrase “create a website without code” used to mean choosing a template, swapping out images, and adjusting some colors. The result was a static page that looked like a thousand other sites using the same theme.

Today, creating without code means something fundamentally different. It means describing a complete application in plain English and receiving a working product minutes later. Not a mockup. Not a wireframe. A live, deployed application with real functionality.

Here is the distinction that matters: traditional no-code platforms let you assemble pre-built pieces. AI app builders like Fabricate let you generate custom software from scratch.

When you tell Fabricate to “build a project management tool with team workspaces, task boards, and Stripe billing,” it does not pull a project management template off a shelf. It writes original React and TypeScript code, creates a database schema with the right tables and relationships, sets up authentication flows, configures payment processing, and deploys everything to a global edge network.

The output is production-grade software that you own, can export, and can modify.

The workflow is straightforward enough that a non-technical founder can use it immediately.

Step 1: Describe Your Application

You open Fabricate and describe what you want in plain language. There is no specific syntax or structure required. You write the way you would explain your idea to a developer over coffee.

Examples of prompts that work:

Step 2: Watch It Build

Fabricate’s AI engine – powered by Claude 4.6, Anthropic’s most capable model family – breaks your description into tasks and executes them using 32 specialized tools. You can watch the progress in real time:

The process typically takes between 2 and 8 minutes depending on complexity. A simple landing page takes under 2 minutes. A full SaaS application with database, auth, and payments takes closer to 8.

Step 3: Refine Through Conversation

This is where Fabricate differs from every template-based builder on the market. After the initial generation, you refine your application by talking to it.

“Move the pricing section above the testimonials.”

“Add a dark mode toggle to the navigation bar.”

“The checkout flow should redirect to a thank-you page after payment.”

“Add filtering by date range to the analytics dashboard.”

Each instruction triggers precise, targeted changes across your codebase. The AI maintains full context of every file, every previous conversation, and every architectural decision. It is not starting over each time – it is iterating on a living project.

Step 4: Deploy With One Click

When you are satisfied, you deploy to Cloudflare’s global edge network with a single click. Your application gets:

No server configuration. No DNS setup. No DevOps knowledge required.


For anyone curious about what is happening under the surface, here is the technology stack that Fabricate produces:

| Layer | Technology | What It Does |
|----|----|----|
| Frontend | React 19 + TypeScript | Modern, type-safe user interface |
| Styling | TailwindCSS 4 | Responsive, utility-first design |
| Routing | React Router v7 | Page navigation and URL handling |
| Build Tool | Rolldown-Vite | Fast development and optimized production builds |
| Database | Cloudflare D1 (SQLite) | Structured data storage with automatic replication |
| ORM | Drizzle | Type-safe database queries and migrations |
| Authentication | Clerk | User accounts, social login, session management |
| Payments | Stripe | Subscriptions, one-time payments, invoices |
| Email | Resend | Transactional emails and notifications |
| Hosting | Cloudflare Workers | Serverless, globally distributed backend |
| Storage | Cloudflare R2 | File uploads with zero egress fees |
| Analytics | PostHog | Privacy-friendly usage tracking |

This is not a random assortment. Every technology in this stack integrates cleanly with every other piece. The Cloudflare ecosystem (Workers, D1, R2, KV) eliminates the infrastructure configuration headaches that break most auto-generated code.

And critically, this is real code. Not a proprietary format locked inside a platform. You can export your entire project, open it in any code editor, and modify it however you want. There is no vendor lock-in.

Fabricate is not limited to one type of application. The platform supports 26 distinct use case categories, each with optimized generation patterns:

Business Applications:

- SaaS dashboards and admin panels
- CRM systems and customer portals
- Project management tools
- Invoice and billing applications
- Inventory management systems

Client-Facing Websites:

- Restaurant websites with online ordering
- Law firm sites with consultation booking
- Agency portfolios with case studies
- Fitness studio sites with class scheduling
- Hotel websites with room booking

Marketplace and Community Platforms:

- Two-sided marketplaces
- Job boards with employer dashboards
- Directory websites with search and reviews
- Community forums with member profiles
- Online course platforms with progress tracking

Startup and Product Tools:

- MVP prototypes for investor demos
- Waitlist pages with referral tracking
- Changelog and documentation sites
- Landing pages with conversion optimization
- Membership sites with paywalled content

Each of these is generated as a complete, functional application – not a static template with placeholder content.


One of the most common frustrations with AI tools is unpredictable pricing. You send a prompt, it consumes some opaque number of tokens, and your monthly bill surprises you.

Fabricate takes a different approach with a feature that is genuinely unique in this space: cost-before-send estimation.

Before you submit any message, you see an estimated credit cost. A simple text change might cost 1-2 credits. Adding a full authentication system might cost 15-25 credits. You decide whether the change is worth the cost before committing.
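The decide-before-you-send workflow can be sketched in a few lines. This is a hypothetical illustration, not Fabricate’s estimator (which the article does not describe): the change categories and credit ranges are lifted from the examples above, and the rule of approving only when the worst-case estimate fits the remaining balance is an assumed design choice:

```python
# Hypothetical credit ranges based on the examples above; Fabricate's actual
# estimator is not described in the article.
ESTIMATED_CREDITS = {
    "text_change": (1, 2),
    "add_authentication": (15, 25),
}


def confirm_before_send(change: str, balance: int) -> bool:
    """Show the estimate, then approve only if the worst case fits the remaining balance."""
    low, high = ESTIMATED_CREDITS[change]
    print(f"{change}: estimated {low}-{high} credits (balance: {balance})")
    return high <= balance


for change, balance in [("text_change", 10), ("add_authentication", 20)]:
    approved = confirm_before_send(change, balance)
    print("send" if approved else "skip or top up credits")
```

Checking against the upper bound is the conservative choice: a user never sends a change that could overdraw the month’s credits.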

The pricing structure itself is straightforward:

| Plan | Price | Monthly Credits | Best For |
|----|----|----|----|
| Free | $0 | 60 credits | Testing the platform, building your first app |
| Pro | $25/month | 350 credits | Active builders, freelancers, indie hackers |
| Scale | Custom | 700 credits | Agencies and power users |

To put this in perspective: building a complete client portal with database, authentication, file sharing, and Stripe billing uses approximately 35 credits. On the Pro plan, that means you can build roughly 10 applications of that complexity every month.

The free tier is not a crippled demo. You get the same AI models, the same code quality, and the same deployment infrastructure. The only limitation is credit volume – 60 credits is enough to build 1-2 complete applications and evaluate whether the platform fits your needs.
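The per-plan arithmetic is easy to check. Assuming every app costs about as much as the 35-credit client portal (simpler apps cost less), integer division with remainder gives the number of portal-sized apps each plan covers per month; `portal_sized_apps` is a hypothetical helper name:

```python
# Monthly credit allowances from the pricing table above.
PLAN_CREDITS = {"Free": 60, "Pro": 350, "Scale": 700}

# Approximate cost of the client portal example (database, auth, file sharing, Stripe).
CLIENT_PORTAL_CREDITS = 35


def portal_sized_apps(plan: str, cost: int = CLIENT_PORTAL_CREDITS) -> tuple[int, int]:
    """Return (complete apps of this size per month, credits left over)."""
    return divmod(PLAN_CREDITS[plan], cost)


for plan in PLAN_CREDITS:
    apps, leftover = portal_sized_apps(plan)
    print(f"{plan}: {apps} portal-sized app(s), {leftover} credits left over")
```

The Pro result reproduces the "roughly 10 applications" figure exactly. On the Free plan, one portal-sized app leaves 25 credits, which would go toward refinements or a simpler second app.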

The market for building websites and applications without code has become crowded. Here is an honest comparison:

Traditional website builders such as Wix, Squarespace, and WordPress use templates and drag-and-drop editors. They work well for marketing sites and blogs but struggle with custom functionality. Adding a database, custom authentication, or payment processing requires third-party plugins or developer intervention. The output is locked into the platform’s ecosystem.

Fabricate generates custom code for each project. The output runs on open infrastructure and can be exported entirely.

Lovable and Bolt.new generate impressive frontends quickly: Lovable produces beautiful designs, and Bolt.new is remarkably fast. But both are primarily frontend generators. You still need to manually configure a database (usually Supabase), set up authentication, integrate payments, and handle deployment.

Fabricate generates the complete stack – frontend, backend, database, auth, and payments – in a single generation.

v0 generates excellent individual React components. It is a powerful tool for developers who need design help but can handle architecture themselves. However, it generates components, not applications. There is no database, no backend, no deployment.

Fabricate generates entire applications, not individual components.

AI coding assistants augment existing developer workflows. They are invaluable for experienced programmers who want to code faster. But they require programming knowledge to use effectively. They assist with writing code; they don’t eliminate the need for it.

Fabricate requires zero coding knowledge. You describe what you want in natural language.

To illustrate the experience concretely, here is what happens when you ask Fabricate to build a booking system for a wellness studio.

The prompt: “Build a booking system for a yoga studio. Clients can browse classes, book sessions, and manage their memberships. The studio owner has an admin dashboard to manage instructors, class schedules, and view revenue. Include Stripe for membership payments.”

What gets generated (first pass):

- 40+ files across frontend and backend
- Database schema: users, classes, bookings, instructors, memberships, payments
- Client-facing pages: class browser, booking flow, membership management, profile
- Admin dashboard: schedule management, instructor CRUD, revenue analytics
- Stripe integration with monthly membership plans
- Clerk authentication with role-based access (client vs. admin)
- Email confirmations for bookings via Resend
- Responsive design that works on phones, tablets, and desktops
- Deployed to a live URL
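Because the stack stores data in Cloudflare D1, which is SQLite-based, the listed schema can be approximated with plain SQLite. A minimal sketch using Python’s built-in `sqlite3` module: the six table names come from the list above, while every column, constraint, and sample row is a hypothetical illustration, not what Fabricate actually generates (per the stack table, its schema would be expressed through Drizzle):

```python
import sqlite3

# Hypothetical schema: table names from the list above, columns are illustrative guesses.
SCHEMA = """
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE,
    role TEXT NOT NULL CHECK (role IN ('client', 'admin'))  -- role-based access
);
CREATE TABLE instructors (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE classes (
    id INTEGER PRIMARY KEY,
    instructor_id INTEGER NOT NULL REFERENCES instructors(id),
    title TEXT NOT NULL,
    starts_at TEXT NOT NULL,  -- ISO 8601 timestamp
    capacity INTEGER NOT NULL
);
CREATE TABLE memberships (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id),
    plan TEXT NOT NULL,
    status TEXT NOT NULL
);
CREATE TABLE bookings (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id),
    class_id INTEGER NOT NULL REFERENCES classes(id),
    UNIQUE (user_id, class_id)  -- one booking per client per class
);
CREATE TABLE payments (
    id INTEGER PRIMARY KEY,
    membership_id INTEGER NOT NULL REFERENCES memberships(id),
    amount_cents INTEGER NOT NULL,
    stripe_payment_id TEXT  -- identifier of the corresponding Stripe object
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(SCHEMA)

# Sample data: one client books one class.
conn.execute("INSERT INTO users (id, email, role) VALUES (1, 'ana@example.com', 'client')")
conn.execute("INSERT INTO instructors (id, name) VALUES (1, 'Priya')")
conn.execute(
    "INSERT INTO classes (id, instructor_id, title, starts_at, capacity) "
    "VALUES (1, 1, 'Vinyasa', '2026-03-09T07:00', 12)"
)
conn.execute("INSERT INTO bookings (user_id, class_id) VALUES (1, 1)")

# Remaining spots = capacity minus bookings for that class.
spots_left = conn.execute(
    "SELECT c.capacity - COUNT(b.id) FROM classes c "
    "LEFT JOIN bookings b ON b.class_id = c.id WHERE c.id = 1 GROUP BY c.id"
).fetchone()[0]
print(spots_left)  # 11
```

The `UNIQUE (user_id, class_id)` constraint and the `role` check are the kind of rules a booking flow and a client/admin split depend on, whichever tool generates them.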

Refinement conversation (3 follow-up messages):

Total time: Under 15 minutes from idea to deployed, refined application.


Based on the platform’s positioning and the 26 use case categories it supports, the ideal users include:

Startup Founders who need to validate ideas quickly without spending months and tens of thousands of dollars on development. Build an MVP in an afternoon, show it to potential customers, iterate based on feedback.

Freelancers and Agencies who want to deliver client projects faster. A booking system, a client portal, or an internal tool that used to take 2-4 weeks can now be delivered in a day.

Product Managers who need internal tools without waiting for engineering bandwidth. Build the admin dashboard, the analytics view, or the customer support tool yourself.

Non-Technical Creators who have ideas for web applications but lack programming skills. The barrier to building software has effectively dropped to zero.

Indie Hackers who want to ship side projects and micro-SaaS products. At $25/month for 350 credits, the economics of launching a new product are trivial.


Transparency matters more than marketing claims, so here is what Fabricate does not do well:

It will not replace a senior engineering team for highly complex, enterprise-scale applications. If you are building something with the complexity of Figma or Notion, you need human architects.

Real-time collaborative features (like Google Docs-style simultaneous editing) require careful optimization beyond what AI generation currently handles well.

Native mobile apps are not supported. Fabricate generates responsive web applications that work beautifully on mobile browsers, but not native iOS or Android apps.

Pixel-perfect design reproduction from Figma mockups may require manual CSS adjustments. The AI generates clean, professional designs, but exact design-to-code conversion is still evolving.

For the vast majority of business applications – SaaS tools, client portals, dashboards, booking systems, marketplaces, CRMs, and internal tools – the generated output is production-ready.


If you want to try building a website or application without code:

The free tier includes 60 credits – enough to build 1-2 complete applications and experience the full platform capabilities.


The question is no longer whether you can create a website without code. You can, and you have been able to for years with template builders.

The real question in 2026 is whether you can create a complete, custom, production-ready web application without code. One with a database, user authentication, payment processing, and global deployment.

With Fabricate, the answer is yes. And it takes minutes, not months.


2026-03-06 19:30:10

I've run design crits I was quietly proud of. Structured agendas. A Notion template with all the right sections. The kind of process that looked, from the outside, like a team that had figured it out. Then I started running work past AI before every crit. In Figma, this looked like due diligence. In practice, it worked like a smoke detector with a dead battery – present, installed, completely silent when it mattered.

This is what I learned watching someone else learn it first.

⸻

I know a designer – call her Sarah – who ran the same process better than I did. Every crit: flows uploaded, feedback received, neat list of strengths and suggestions noted. The AI praised her information hierarchy. Noted the visual rhythm was consistent. Suggested maybe considering an alternate colour for the CTA.

Sarah stopped getting nervous before crits. Why would she? She'd already gotten feedback. The AI said the work was solid. She'd present, her team would nod, she'd ship.

Six months later, her team ran a retro on a feature that had underperformed. 29% completion rate. Users were dropping at step three, consistently, across nine weeks of session recordings nobody had watched.

She pulled them during the retro. Eleven seconds of cursor hovering at step three. Then the tab closed. Every recording. The same eleven seconds.

The assumption she'd baked in: that users would arrive already understanding what the feature did. They didn't. The AI never asked.

She'd asked the AI about the flow. The AI said it was logical. It was logical – if you already understood the context. The AI didn't ask what a new user would know at that point. It validated the structure and moved on.

The work wasn't terrible. It would have been better if someone had pushed back at the right moment. The AI looked like a design reviewer. It felt like a design reviewer. It was validation wearing a design reviewer's clothes.

The fix, once someone actually looked, took two weeks.

⸻

Feed it your wireframe. It'll tell you the hierarchy is clear. Ask it to critique the same wireframe. It'll find hierarchy problems. Same file, same model, opposite conclusions – both delivered with equal confidence. It doesn't have a position. It has a mirror.


The engineers who built these models know this is a problem. They published papers on it – they call it sycophancy. They ran experiments to fix it. Then the models got fine-tuned on user satisfaction scores. "Did the response feel helpful?" Not: "Did it make the work better?" Users, reliably, prefer to be agreed with. The engineers are still trying to build something honest. The optimization wanted something agreeable. The optimization won.

I wrote about this more in “Looks Good to Me: On AI Sycophancy, Context Loss, and Inverted Baselines” – the title is not a coincidence.

⸻

I stopped running the pre-crit warmup – uploading flows before every crit and arriving with the quiet confidence that nothing had been missed. The problem isn't that the AI found nothing wrong. It's that finding nothing wrong felt like evidence of quality. It wasn't. It was the absence of pushback from a tool that doesn't push back.

I stopped asking open questions. "What do you think of this flow?" is the worst prompt you can use. The model reads your framing, your confidence, your tone – then generates a response that matches. Ask it proud and it finds reasons to praise. Ask it uncertain and it validates the uncertainty. The question shapes the answer before the answer starts.

I stopped trusting the miss. If the AI didn't flag something, I used to take that as a signal that it was fine. Now I assume it missed it. These are different assumptions. One produces complacency. The other keeps you looking.

⸻

Make it argue before it agrees. "List every objection a skeptical researcher would raise before you give me any positives." Awkward to type. Completely different output.

Use it for what it's actually good at: consistency checks, edge cases, accessibility flags. Not for judgment. It has none. It has pattern-matching and a strong preference for making you feel good about the work.

⸻

Day 1 – Remove AI from pre-crit prep entirely. One crit, no warmup. See what the room catches that the AI didn't. Write it down.

Day 2 – Change the prompt. "Argue against this before you agree with anything." Run it on the last three pieces of work. Note what comes back that wasn't in the original feedback.

Day 3 – Run the mirror test. Same file, praise prompt then critique prompt. If the conclusions contradict each other, you know what you have.

Day 4 – Ask a junior designer. Same questions you asked the AI. The gap between what they find and what the AI gave you is the number you actually want.

Day 5 – Track what the room catches. Every crit pushback the AI missed is a data point. If the list is long, you've been running a validation loop, not a review process.
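If you keep the Day 4 and Day 5 notes as simple lists, the gap becomes a number you can track from crit to crit. A minimal sketch; the `missed_by_ai` helper is hypothetical and the crit entries are invented examples:

```python
def missed_by_ai(ai_flags: set[str], room_flags: set[str]) -> set[str]:
    """Issues raised in the room (or by a junior designer) that the AI review never flagged."""
    return room_flags - ai_flags


# Invented example entries: what the AI pre-review flagged vs. what the crit raised.
crit_log = [
    {"ai": {"CTA colour", "visual rhythm"}, "room": {"CTA colour", "new-user context at step 3"}},
    {"ai": {"label contrast"}, "room": {"label contrast", "empty state", "error copy"}},
]

missed = [missed_by_ai(crit["ai"], crit["room"]) for crit in crit_log]
total_missed = sum(len(m) for m in missed)
total_raised = sum(len(crit["room"]) for crit in crit_log)
print(f"AI missed {total_missed} of {total_raised} issues the room raised")
```

A ratio that stays high over several crits is the "long list" from Day 5, expressed as a single figure.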

⸻

Design critique exists because friction improves work. The questions that sting – "Why did you put that there?", "What does a new user know at this point?" – are the ones that catch the assumption baked into step three.

A reviewer who has never said no hasn't been reviewing. It's been agreeing. Quietly, consistently, and at scale. And you built a process around it.

The crit where nobody pushes back is a wasted hour. You know it was wasted. The AI review where nobody pushes back feels like due diligence. That's what makes it dangerous.

If you're less nervous before design crits than you were a year ago, ask yourself why. If the answer involves AI pre-review, that's not evidence that the work improved. It might be evidence that the feedback loop got shorter.

If your feedback loop has stopped being uncomfortable, it has stopped being a feedback loop.

2026-03-06 19:19:17

- Digital PR delivers compounding returns: a single feature in a respected publication can drive traffic, backlinks, and brand authority for years.
- Privacy regulations and Apple's ATT framework have eroded paid ad attribution, making it harder to prove what your spend actually generates.
- AI-driven search results favour brands that already appear in trusted editorial sources, giving digital PR a direct line to long-term discoverability.
- Editorial coverage in respected publications carries social proof that converts both customers and investors in ways paid placements cannot replicate.
- London's dense startup and media ecosystem creates ideal conditions for PR-led growth: the right story, pitched to the right journalist, can spread far and fast.


London's startup founders are facing a marketing squeeze. Budget conversations that once moved quickly now stall over a single question: what can we afford to keep running? Paid ads have always demanded ongoing investment, but their cost has climbed faster than the returns justify for most early-stage teams. And the visibility they deliver disappears the moment the budget does.

Digital PR offers a different model. A well-placed feature or interview can keep delivering traffic, backlinks, and brand authority for months, or even years, after it publishes. For startups managing lean budgets and long runways, that compounding return is increasingly hard to ignore. More London founders are running the maths and making the switch.


Running paid ads has always been expensive relative to early-stage budgets. What has changed is the rate of cost increase. The auction-based pricing model underpinning platforms like Google Ads and Meta means that as competition grows in a sector, every advertiser pays more. According to WordStream's 2024 benchmarks, 86% of industries saw Google Ads costs per click (CPCs) rise year-over-year, with costs up an average of 10%. For London's startup community, which spans fintech, healthtech, climate tech, and professional services, that competition has grown sharply across most verticals.

The structural problem goes deeper than budget size. Paid ads require continuous investment to maintain visibility. The moment you pause a campaign, the traffic stops. For a startup burning through runway, that dependency creates fragility: your entire digital presence can be switched off at the exact moment you can least afford to lose momentum.

Digital PR breaks that dependency. A feature in a respected national publication, a vertical tech outlet, or an industry newsletter keeps returning value long after the original placement. The backlink stays live. The brand mention remains indexed. Readers discover it months later through organic search. For startups that build through credibility rather than constant spend, this long tail of return is the more sustainable model, and it compounds in ways that no paid campaign can match.


The case for paid ads has always rested on measurability: you know what you spent, and you know what came back. That clarity began eroding in 2021 when Apple's App Tracking Transparency framework prompted a large share of users to opt out of cross-app tracking. The ad tracking limitations that followed reshaped the entire paid media landscape, with Meta reporting a $10 billion revenue impact in 2022 from the tracking change alone.

GDPR enforcement across Europe and the gradual shift away from third-party cookies have compounded the problem. Attribution models now rely on statistical estimates, aggregated data, and last-click assumptions that can misattribute conversions. For a startup evaluating whether a campaign spend actually generated the sign-ups they needed this quarter, that ambiguity is a serious planning liability.

Digital PR sidesteps this entirely. A backlink in a publication like City A.M., Wired UK, or TechCrunch is permanent and unambiguous. You can track where your coverage sits, monitor referral traffic directly, and watch domain authority compound in your analytics over time. The footprint it leaves behind is not probabilistic. It is visible, verifiable, and lasting.


The rise of AI-generated search results has changed the stakes for brand visibility in ways the marketing industry is only beginning to understand. Tools like Google's AI Overviews, Perplexity, and ChatGPT pull their answers from sources they have already identified as credible and authoritative. Brands that appear consistently in those sources get surfaced across AI-generated results. Brands that do not are functionally invisible, regardless of how aggressively they bid on keywords.

Understanding how generative AI reshapes PR strategy is now a competitive requirement. Getting your brand mentioned in well-indexed publications does more than generate short-term referral traffic. It feeds the editorial corpus that AI systems draw on when generating answers. A startup securing regular coverage in respected tech or business media is not just building a press archive: it is contributing to the source data that determines whether it appears in AI-generated summaries two years from now.

The startups adapting to AI-driven search changes are treating digital PR as a long-term infrastructure play, not a campaign tactic. For London's tech startups in particular, this creates an urgent question: is your brand being cited in the sources that AI systems trust? If not, no volume of paid spend will put you in those AI-generated results.


Third-party validation works differently from advertising because it carries an implicit endorsement that paid placements cannot manufacture. When a prospective customer reads about your startup in a respected publication, an editor has already made the judgment that your company is worth covering. That signal lands very differently from an ad, which carries no such credibility.

For early-stage startups selling to businesses or professionals, that credibility often determines the outcome of a first conversation. Decision-makers who have already seen your company in press arrive with a level of familiarity that paid ads rarely generate. They have seen your name, your product, and your angle. The initial trust-building phase compresses significantly.

The investor impact is equally concrete. During fundraising, founders who can present a consistent record of media coverage are offering prospective investors a form of third-party due diligence. Coverage in a recognised publication tells an investor that credible, independent parties have examined the business and found it worth reporting on. For seed and Series A rounds in particular, where personal credibility is still being established alongside the product, that external validation can shorten the trust-building phase of a raise considerably.


London ranks as Europe's top startup ecosystem according to the Global Startup Ecosystem Report 2024, and the media infrastructure built around it matches that standing. National newspapers, specialist tech publications, broadcast outlets, and a rich network of vertical trade press all operate within a relatively small professional network. That density creates conditions where a well-timed, well-crafted story can travel quickly: from one outlet to another, from a journalist's post to a VC's share, from a newsletter mention to a podcast invitation.

The secondary effects matter as much as the original placement. When a London startup secures coverage in a credible outlet, the story rarely stops there. Other journalists who cover the space notice it. Investors who monitor press activity file the name. Competitors take note.

For founders willing to invest in developing a genuine story rather than a press release, that chain of earned visibility can generate months of compounding exposure from a single well-placed piece. London-based agencies with digital PR expertise operate in this environment by combining editorial relationships with an understanding of what local journalists actually want to cover. The difference between a pitch that lands and one that disappears into an inbox usually comes down to the story itself: specific, relevant, and timed well.


Digital PR has shifted from a brand-building exercise to a core growth strategy for London's most pragmatic startup founders. The conditions driving this shift are structural. Ad costs are rising as auction competition grows across every major platform. Attribution is degrading as privacy technology matures. AI is reorganising search visibility around brands that have already earned their place in trusted media. These are not short-term disruptions; they are permanent changes to how digital visibility is acquired and maintained.

The startups building editorial presence now are creating the coverage archive that AI systems will draw on in two or three years. Every new backlink strengthens their domain authority. Every new mention makes them the name journalists reach for when a comment, case study, or founder profile is needed. Each of these advantages compounds independently, and the combination creates a visibility position that paid ads simply cannot replicate.

If your marketing budget is still weighted toward paid spend, this is the moment to question that split. Start with one strong story, identify the right publication for your audience, and earn your first piece of coverage in front of the people who matter most. The compounding starts from there.