2025-10-24 22:59:00

A lot of people say AI will make us all “managers” or “editors”…but I think this is a dangerously incomplete view!

Personally, I’m trying to code like a surgeon.

A surgeon isn’t a manager, they do the actual work! But their skills and time are highly leveraged with a support team that handles prep, secondary tasks, admin. The surgeon focuses on the important stuff they are uniquely good at.

My current goal with AI coding tools is to spend 100% of my time doing stuff that matters. (As a UI prototyper, that mostly means tinkering with design concepts.)

It turns out there are a LOT of secondary tasks which AI agents are now good enough to help out with. Some things I’m finding useful to hand off these days:

I often find it useful to run these secondary tasks async in the background – while I’m eating lunch, or even literally overnight!

When I sit down for a work session, I want to feel like a surgeon walking into a prepped operating room. Everything is ready for me to do what I’m good at.

Notably, there is a huge difference between how I use AI for primary vs secondary tasks.

For the core design prototyping work, I still do a lot of coding by hand, and when I do use AI, I’m more careful and in the details. I need fast feedback loops and good visibility. (eg, I like Cursor tab-complete here)

Whereas for secondary tasks, I’m much much looser with it, happy to let an agent churn for hours in the background. The ability to get the job done eventually is the most important thing; speed and visibility matter less. Claude Code has been my go-to for long unsupervised sessions but Codex CLI is becoming a strong contender there too, possibly my new favorite.

These are very different work patterns! Reminds me of Andrej Karpathy’s “autonomy slider” concept. It’s dangerous to conflate different parts of the autonomy spectrum – the tools and mindset that are needed vary quite a lot.

The “software surgeon” concept is a very old idea – Fred Brooks attributes it to Harlan Mills in his 1975 classic “The Mythical Man-Month”. He talks about a “chief programmer” who is supported by various staff including a “copilot” and various administrators. Of course, at the time, the idea was to have humans be in these support roles.

OK, so there is a super obvious angle here, that “AI has now made this approach economically viable where it wasn’t before”, yes yes… but I am also noticing a more subtle thing at play, something to do with status hierarchies.

A lot of the “secondary” tasks are “grunt work”, not the most intellectually fulfilling or creative part of the work. I have a strong preference for teams where everyone shares the grunt work; I hate the idea of giving all the grunt work to some lower-status members of the team. Yes, junior members will often have more grunt work, but they should also be given many interesting tasks to help them grow.

With AI this concern completely disappears! Now I can happily delegate pure grunt work. And the 24/7 availability is a big deal. I would never call a human intern at 11pm and tell them to have a research report on some code ready by 7am… but here I am, commanding my agent to do just that!

Finally I’ll mention a couple thoughts on how this approach to work intersects with my employer, Notion.

First, as an employee, I find it incredibly valuable right now to work at a place that is bullish on AI coding tools. Having support for heavy use of AI coding tools, and a codebase that’s well set up for it, is enabling serious productivity gains for me – especially as a newcomer to a big codebase.

Secondly, as a product – in a sense I would say we are trying to bring this way of working to a broader group of knowledge workers beyond programmers. When I think about how that will play out, I like the mental model of enabling everyone to “work like a surgeon”.

The goal isn’t to delegate your core work, it’s to identify and delegate the secondary grunt work tasks, so you can focus on the main thing that matters.


2025-09-11 03:40:00

Here’s a thought experiment for pondering the effects AI might have on society: What if we invented teleportation?

A bit odd, I know, but bear with me…

The year is 2035. The Auto Go Instant (AGI) teleporter has been invented. You can now go anywhere… instantly!

At first the tech is expensive and unreliable. Critics laugh. “Hah, look at these stupid billionaires who can’t spend a minute of their time moving around like the rest of us. And 5% of the time they end up in the wrong place, LOL”

But soon things get cheaper and better. The tech hits mass market.

There are huge benefits. Global commerce is supercharged. Instead of commuting, people can spend more time with family and friends. Pollution is way down. The AGI company runs a sweet commercial of people teleporting to see their parents one last time before they die.

At the same time, some weird things start happening.

The landscape starts reconfiguring around the new reality. Families move to remote cabins, just seconds away from urban amenities. The summit of Mt. Everest becomes crowded with influencers. (It turns out that if you stay just a few seconds, you can take a quick selfie without needing an oxygen mask!)

Physical health takes a hit for many people. It’s harder to justify walking or biking when you could just be there now.

In-between moments disappear. One moment you’re at work, the next you’re at your dinner table at home. No more time to reset or prepare for a new context.

But the biggest change is the loss of serendipity. When you teleport, you decide in advance where you’re headed. You never run into an old friend on the street, or stop at a farmstand by the side of the road, or see a store you might want to stop into someday.

To modern teenagers, the idea of wandering out without an exact destination in mind becomes unthinkable. You start with the GPS coordinates, and then you just… go.

Advocates of the new way point out that there’s nothing stopping anyone from choosing traditional methods for fun. And indeed, the cross-country road trip does see a mild resurgence as a hipster thing.

But when push comes to shove, most people struggle to make the time for wandering—our schedules are now arranged around an assumption of instant transport.

This isn’t exactly to say that the old way was better. Most people can agree that teleportation is a net win. Yet for those who remember, there’s a vague unease, a sense that something important was lost in the world….

In his book Technology and the Character of Contemporary Life, the philosopher Albert Borgmann talks about wood stoves in houses.

What is a stove? Yes, it warms the house… but it’s also so much more than that. You gotta cut the wood, you gotta start the fire in the morning…

“A stove used to furnish more than mere warmth. It was a focus, a hearth, a place that gathered the work and leisure of a family and gave the house a center.”

When you switch to a modern central heating system, you cut out all these inconveniences. Fantastic!

Oh, and by the way, your family social life is totally different….. wait what?? Yes, the inconveniences were inconvenient. But they were also holding up something in your life and culture, and now they’re suddenly gone.

I think of this as kind of a Chesterton’s fence on hard mode. Yes, the stove was put there for warmth, that was the main goal. But you should also think hard about its secondary effects before replacing it.

OK so… how does this apply to AI?

I’m personally excited about AI and think it can improve our lives in a lot of ways. But at the same time I’m trying to be mindful of secondary effects and unintended consequences.

Here’s one example. If your mental model of reading is “transmit facts into my head”, then reading an AI summary of something might seem like a more efficient way to get that task done.

But if your mental model of reading is “spend time marinating in a world of ideas”, then reducing the time spent reading doesn’t help you much.

The point was the journey you underwent while reading, and you replaced it with teleportation.

Another example. One of the great joys of my life is having nerdy friends explain things to me. Now I can get explanations from AI with less friction, anytime, anywhere, with endless follow-up.

Even if the AI explanations are “better”, there’s a social cost. I can try to mindfully nudge myself to still ask people questions, but now it requires more effort.

Final example: I’m trying to be mindful of the effects of vibe coding when designing software interfaces. On the one hand, it can really speed up my iteration loop and help me explore more ideas.

But at the same time, part of my design process is sitting with the details of the thing and uncovering it as I go—more a muscle memory process than a conscious plan. Messing with this process can change the results in ways that are hard to predict!

I guess the throughline for all of these examples is: sometimes the friction and inconvenience is where the good stuff happens. Gotta be very careful removing it.

The takeaway here isn’t that “AI is bad”. I’ll just say that I’m personally trying to be mindful about keeping good friction around.

During COVID, we kinda got teleportation via Zoom for a while. I decided to “virtual commute” every day, walking around the block to get some fresh air and a reset before/after work. This wasn’t a big deal but I found it really helpful.

As AI makes a lot of things easier, it’ll be interesting to ponder what kinds of new frictions we’ll want to intentionally add to our lives. Teleportation isn’t always the best answer…

2025-07-28 04:50:00

In my opinion, one of the best critiques of modern AI design comes from a 1992 talk by the researcher Mark Weiser where he ranted against “copilot” as a metaphor for AI.

This was 33 years ago, but it’s still incredibly relevant for anyone designing with AI.

Weiser was speaking at an MIT Media Lab event on “interface agents”. They were grappling with many of the same issues we’re discussing in 2025: how to make a personal assistant that automates tasks for you and knows your full context. They even had a human “butler” on stage representing an AI agent.

Everyone was super excited about this… except Weiser. He was opposed to the whole idea of agents! He gave this example: how should a computer help you fly a plane and avoid collisions?

The agentic option is a “copilot” — a virtual human who you talk with to get help flying the plane. If you’re about to run into another plane it might yell at you “collision, go right and down!”

Weiser offered a different option: design the cockpit so that the human pilot is naturally aware of their surroundings. In his words: “You’ll no more run into another airplane than you would try to walk through a wall.”

Weiser’s goal was an “invisible computer”: not an assistant that grabs your attention, but a computer that fades into the background and becomes “an extension of [your] body”.

There’s a tool in modern planes that I think nicely illustrates Weiser’s philosophy: the Head-Up Display (HUD), which overlays flight info like the horizon and altitude on a transparent display directly in the pilot’s field of view.

A HUD feels completely different from a copilot! You don’t talk to it. It’s literally partly invisible: you just become naturally aware of more things, as if you had magic eyes.

OK enough analogies. What might a HUD feel like in modern software design?

One familiar example is spellcheck. Think about it: spellcheck isn’t designed as a “virtual collaborator” talking to you about your spelling. It just instantly adds red squigglies when you misspell something! You now have a new sense you didn’t have before. It’s a HUD.

(This example comes from Jeffrey Heer’s excellent Agency plus Automation paper. We may not consider spellcheck an AI feature today, but it’s still a fuzzy algorithm under the hood.)

Here’s another personal example from AI coding. Let’s say you want to fix a bug. The obvious “copilot” way is to open an agent chat and ask it to do the fix.

But there’s another approach I’ve found more powerful at times: use AI to build a custom debugger UI which visualizes the behavior of my program! In one example, I built a hacker-themed debug view of a Prolog interpreter.

With the debugger, I have a HUD! I have new senses, I can see how my program runs. The HUD extends beyond the narrow task of fixing the bug. I can ambiently build up my own understanding, spotting new problems and opportunities.

Both the spellchecker and custom debuggers show that automation / “virtual assistant” isn’t the only possible UI. We can instead use tech to build better HUDs that enhance our human senses.

I don’t believe HUDs are universally better than copilots! But I do believe anyone serious about designing for AI should consider non-copilot form factors that more directly extend the human mind.

So when should we use one or the other? I think it’s quite tricky to answer that, but we can try to use the airplane analogy for some intuition:

When pilots just want the plane to fly straight and level, they fully delegate that task to an autopilot, which is close to a “virtual copilot”. But if the plane just hit a flock of birds and needs to land in the Hudson, the pilot is going to take manual control, and we better hope they have great instruments that help them understand the situation.

In other words: routine predictable work might make sense to delegate to a virtual copilot / assistant. But when you’re shooting for extraordinary outcomes, perhaps the best bet is to equip human experts with new superpowers.

2025-06-29 22:17:00

The pupil was confused. Some people on Design Twitter said that chat isn’t a good UI for AI… but then chat seemed to be winning in many products? He climbed Mount GPT to consult a wizard…

🐣: please wizard tell me once and for all. is chat a good UI for AI?

🧙: well, aren’t we chatting now?

🐣: …?

🧙: should this conversation be a traditional GUI?

🐣: no, it could never be!

🧙: why not?

🐣: uh… you can’t click buttons and drag sliders to ask open-ended questions like this?

🧙: precisely! chat is marvelous, done.

🐣: dude seriously? i came all the way here for that?

🧙: yep. i’ll tell you the best route down the mountain. straight 1000 ft, left 50 degrees, straight 2 miles—

🐣: hold on hold on. do you have a map handy?

🧙: aha! here’s a map i had in my pocket. is this a GUI?

🐣: well, this map is just a piece of paper, so no?

🧙: ok, what is a paper map?

🐣: uh… a better way to see the world?

🧙: indeed! for certain things, a map is the way to see. for other things, a diagram, a chart, a table. this is the first precept:

🐣: ok fine. but info viz can fit into a chat can’t it? like, if i ask Siri or ChatGPT for the weather, they’ll show me a little weather card…but it’s still basically chat

🧙: where do you live on this map?

🐣: right th–

🧙: hands in your pockets!

🐣: ?

🧙: no pointing. tell me where you live

🐣: ….well, see how there’s a little lake up by the top left? no not that lake… a bit to the right.. no no next one over–

🧙: hahaha

🐣: … ok fine i get your point! this sucks.

🧙: indeed! pointing is great. for referring to things, for precisely cropping an image in the right spot…

🐣: ok fine. but if we talk while you show me the map and i point at it, that still feels like a chat? we’re layering on information visualization and precision input, but natural language is still doing the heavy lifting?

🧙: (points at a rock, then at the ground) put that there.

🐣: huh?

🧙: put that there!

🐣: (moves the rock) ok, what was that about?

🧙: As you say, we needed our fingers and our voices both. This leads to a second precept:

btw want a compass?

🐣: yeah that’ll actually be helpful on the way down.

🧙: cool, i can give you a regular compass, or Mr. Magnetic, a magical fairy who can tell you which way you’re pointed.

🐣: i’ll take the regular compass? I did Boy Scouts so I know how to read it, it just becomes part of me in a sense. i definitely don’t need to have a whole damn conversation every time.

🧙: ah yes, you see it. the compass pairs information visualization and precision inputs with a low-latency feedback loop, becoming an extension of your mind. this is one of our great powers as humans—to shoot an arrow or swing a club.

🐣: ok that’s cool. but dude i’ve been here a while and i feel like we haven’t even really talked about GUIs!

🧙: you’re right, time for dinner. let’s order a pizza

🐣: …

🧙: can you order one?

🐣: fine. I’ll see if UberEats delivers up here.

🧙: why not call the restaurant?

🐣: are you kidding me? i’m not a boomer.

🧙: is UberEats a GUI?

🐣: yes?

🧙: does it work well?

🐣: yeah it’s fine! gets the job done.

🧙: why not chat over the phone instead?

🐣: well, ordering food is the same thing every time! even when you talk to the person you’re both just following a script, really. the app just makes it faster to follow that script.

🧙: indeed! this is our third precept:

🐣: ok i get it. but idk man, i feel like this is all kinda obvious and we haven’t hit the heart of the matter? yes chat is better for open-ended workflows, and GUIs can be better when the task is repeated. but how do they relate?

🧙: hey i host seminars up here every week and it’s kinda tedious. could you show me the button in UberEats where I can enter the estimated attendance and then it orders the right number of pizzas?

🐣: umm that’s not a thing?

🧙: why not? i want it.

🐣: uhh, this is UberEats, not a seminar organizer app?

🧙: oh right good point! in that case let’s add a button on the calendar invite i can press which will order the pizzas.

🐣: dude what do you mean? the calendar app is just a calendar app, not a seminar organizer. you can’t just change your software like this.

🧙: hm, what are my options then?

🐣: ooh i have an idea! have you heard of MCP? if we just install the right servers then you can program a seminar planner agent in Claude to do this every week for you.

🧙: sounds fine for the first few times while i’m figuring it out. but–is planning a seminar not a repeated workflow?

🐣: … yes, i think it is?

🧙: did we not say that GUIs can speed up repeated workflows? why do i need to stay in chat for this? also btw, i want my assistant to help out with this, and an app would help them know what to do.

🐣: i mean, i’m not sure there’s a good app for seminar planning that does what you want. lemme search on the app st–

🧙: wait! a GUI that someone else made will not fit my seminar planning needs. i need my own preferred workflow to be the one that is encoded in the tool.

🐣: ohh i see! this actually might not be that much work, have you heard of vibe coding? i’ll open up Claude Artifacts and get cookin.

🧙: thanks, lemme know when you’ve added the seminar pizza feature to UberEats!

🐣: oh well, I was thinking it’s not gonna be added to uber eats exactly – i’m gonna make a new web app that does all this.

🧙: why? UberEats already has great UI for the checkout flow, I just need one little feature added.

🐣: i mean i see your point, but you can’t really add your own features to UberEats? you don’t control it.

🧙: haven’t they heard of vibe coding over there?

🐣: dude that’s not how software works. sure everyone can code now but that doesn’t mean you can just edit any app.

🧙: why not?

🐣: er… it sounds kinda messy? and i guess all of this app stuff was invented before AI came along anyway?

🧙: when you paint a wall do you need to ask permission of the company that made the wall?

🐣: … hm. when you put it that way… i see what you’re getting at. if all the GUIs you already use could be edited, then you wouldn’t need to resort to chat as much to fill in the seams. instead you could just change the GUIs to do what you want!

🧙: aha! yes, now you see. if the UI is fixed, then it cannot respond to my needs. but if it is malleable, then I can evolve it over time. This is the fourth and final precept for today:

🐣: neat. this seems hard though, wouldn’t we need to rethink how the App Store works?

🧙: indeed. and that is a longer conversation for another time.

Note from the editor: to keep exploring, read this.

2025-04-12 22:40:00

There’s a lot of hype these days around patterns for building with AI. Agents, memory, RAG, assistants—so many buzzwords! But the reality is, you don’t need fancy techniques or libraries to build useful personal tools with LLMs.

In this short post, I’ll show you how I built a useful AI assistant for my family using a dead simple architecture: a single SQLite table of memories, and a handful of cron jobs for ingesting memories and sending updates, all hosted on Val.town. The whole thing is so simple that you can easily copy and extend it yourself.

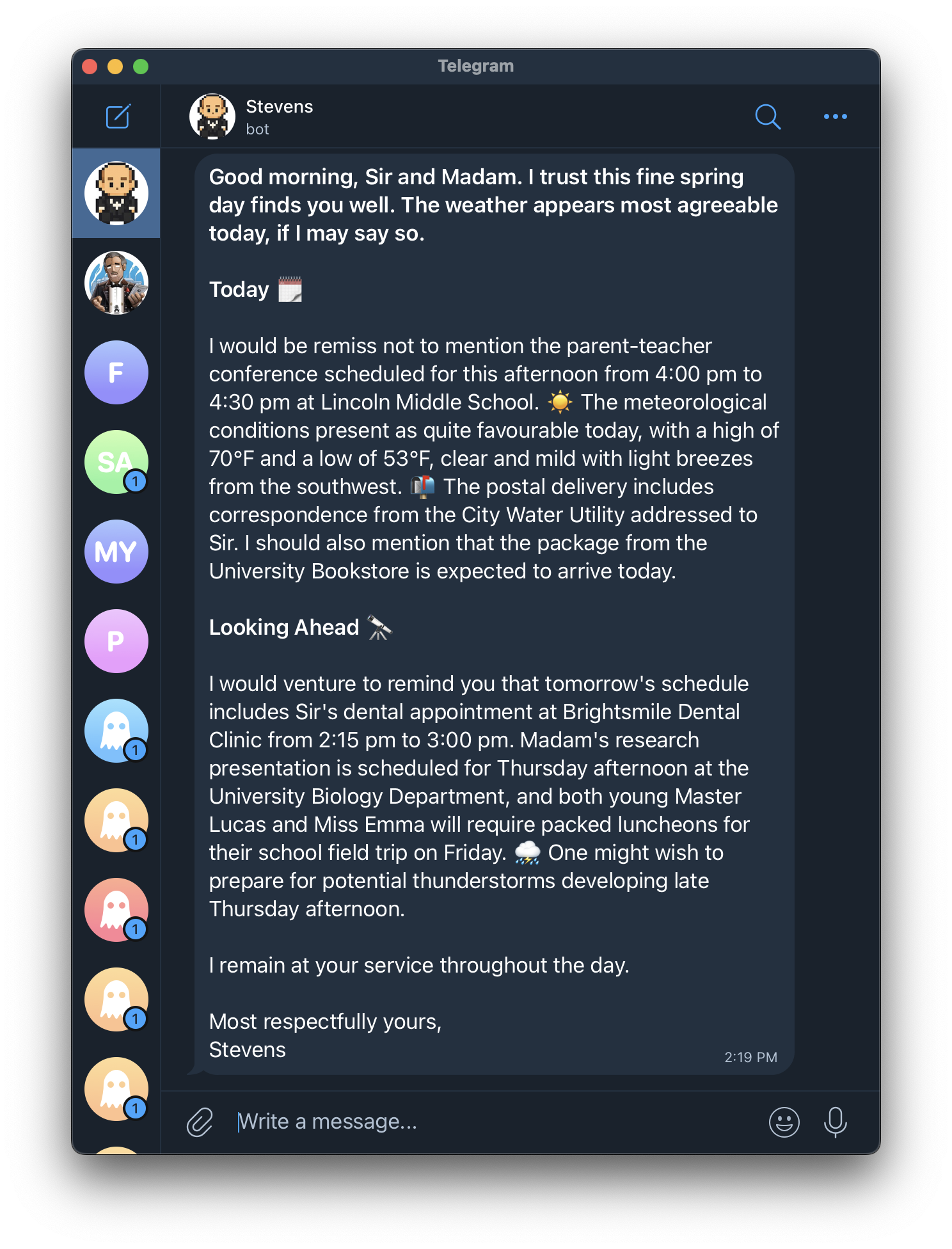

The assistant is called Stevens, named after the butler in the great Ishiguro novel The Remains of the Day. Every morning it sends a brief to me and my wife via Telegram, including our calendar schedules for the day, a preview of the weather forecast, any postal mail or packages we’re expected to receive, and any reminders we’ve asked it to keep track of. All written up nice and formally, just like you’d expect from a proper butler.

Here’s an example. (I’ll use fake data throughout this post, because our actual updates contain private information.)

Beyond the daily brief, we can communicate with Stevens on-demand—we can forward an email with some important info, or just leave a reminder or ask a question via Telegram chat.

That’s Stevens. It’s rudimentary, but already more useful to me than Siri!

Let’s break down the simple architecture behind Stevens. The whole thing is hosted on Val.town, a lovely platform that offers SQLite storage, HTTP request handling, scheduled cron jobs, and inbound/outbound email: a perfect set of capabilities for this project.

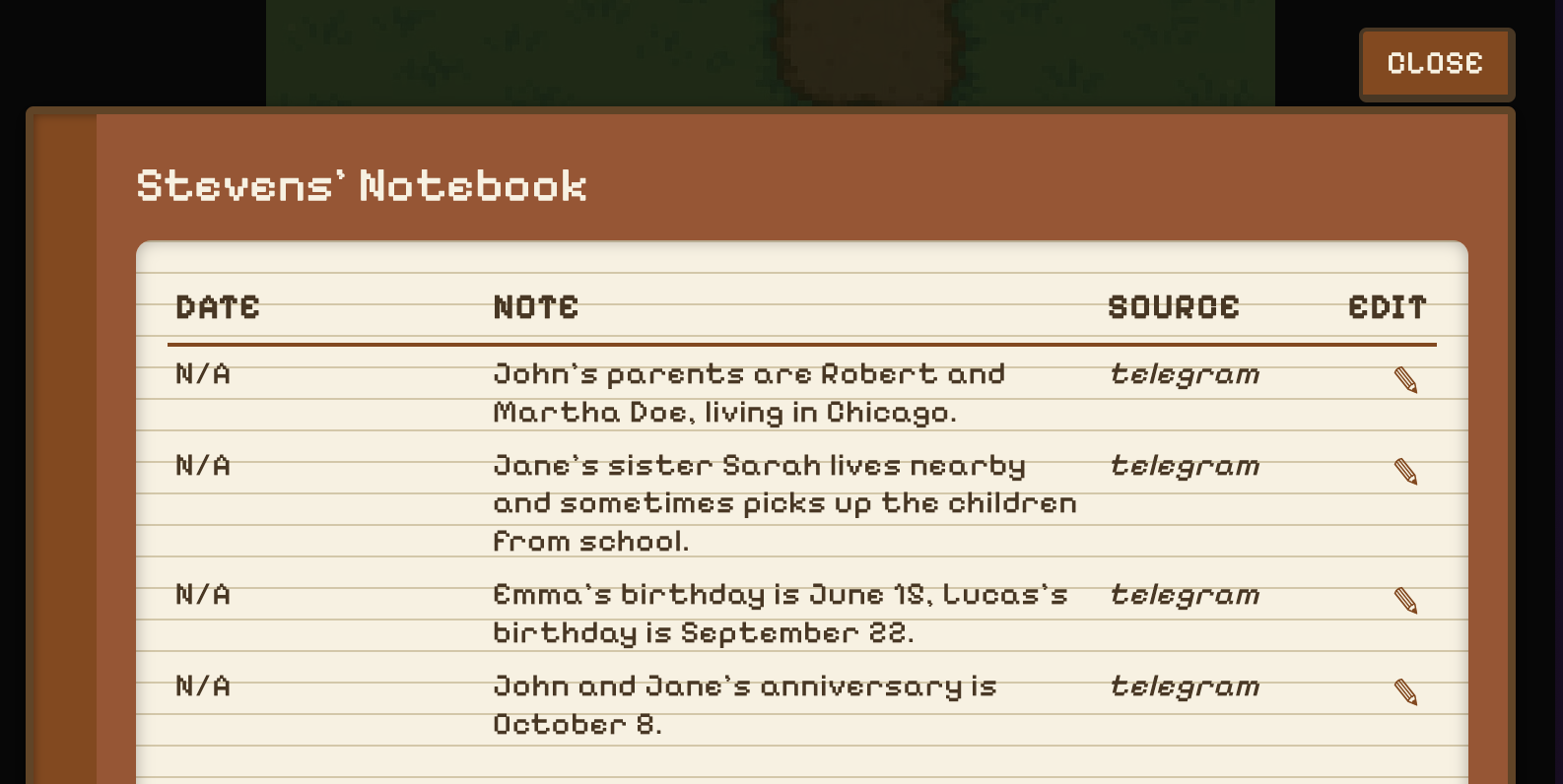

First, how does Stevens know what goes in the morning brief? The key is the butler’s notebook, a log of everything that Stevens knows. There’s an admin view where we can see the notebook contents—let’s peek and see what’s in there:

You can see some of the entries that fed into the morning brief above—for example, the parent-teacher conference has a log entry.

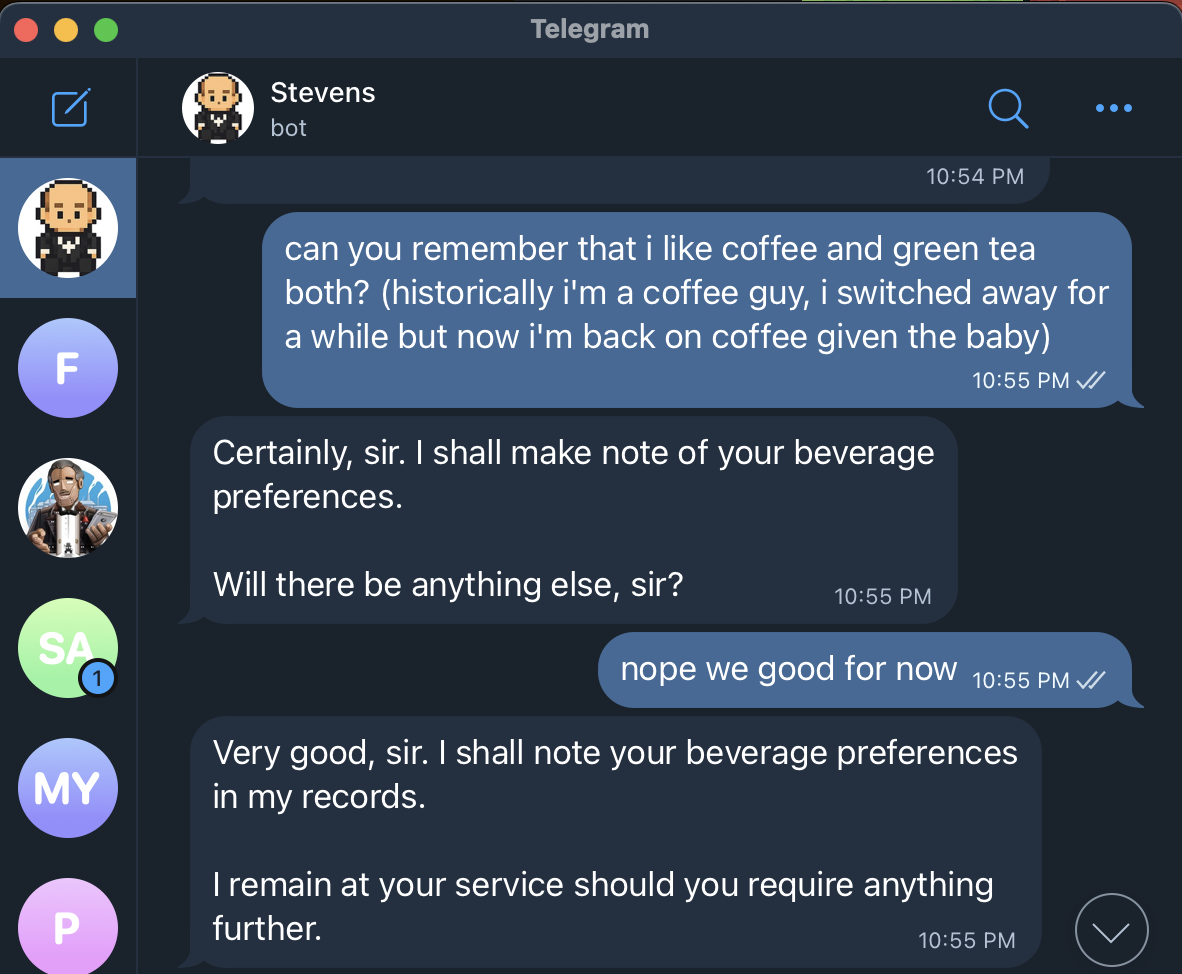

In addition to some text, entries can have a date when they are expected to be relevant. There are also entries with no date that serve as general background info, and are always included. You can see these particular background memories came from a Telegram chat, because Stevens does an intake interview via Telegram when you first get started:

With this notebook in hand, sending the morning brief is easy: just run a cron job which makes a call to the Claude API to write the update, and then sends the text to a Telegram thread. As context for the model, we include any log entries dated for the coming week, as well as the undated background entries.
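To make the selection step concrete, here is a minimal Python sketch of that cron job. It is not the actual Stevens code (which runs on Val.town): the `Memory` fields, the prompt wording, and the `write_brief` / `send_message` callables standing in for the Claude API call and the Telegram send are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Optional


@dataclass
class Memory:
    text: str
    relevant_on: Optional[date] = None  # None means undated background info
    source: str = "unknown"             # hypothetical field: which importer wrote it


def select_context(memories: list[Memory], today: date, days_ahead: int = 7) -> list[Memory]:
    """Keep undated background entries plus entries dated within the coming week."""
    horizon = today + timedelta(days=days_ahead)
    background = [m for m in memories if m.relevant_on is None]
    upcoming = sorted(
        (m for m in memories
         if m.relevant_on is not None and today <= m.relevant_on <= horizon),
        key=lambda m: m.relevant_on,
    )
    return background + upcoming


def build_prompt(context: list[Memory], today: date) -> str:
    """Assemble the text handed to the model; the wording is illustrative only."""
    lines = [
        f"Today is {today.isoformat()}. Write a short morning brief "
        "in the voice of a formal butler.",
        "",
        "Notebook entries:",
    ]
    for m in context:
        when = m.relevant_on.isoformat() if m.relevant_on else "background"
        lines.append(f"- [{when}] {m.text}")
    return "\n".join(lines)


def send_morning_brief(
    memories: list[Memory],
    today: date,
    write_brief: Callable[[str], str],     # stand-in for the LLM call
    send_message: Callable[[str], None],   # stand-in for the Telegram send
) -> str:
    """Body of the scheduled job: select context, ask the model, deliver the result."""
    prompt = build_prompt(select_context(memories, today), today)
    brief = write_brief(prompt)
    send_message(brief)
    return brief
```

Passing the model call and the messaging call in as plain functions keeps the sketch runnable without credentials, and makes it easy to dry-run the job by printing the prompt instead of sending anything.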

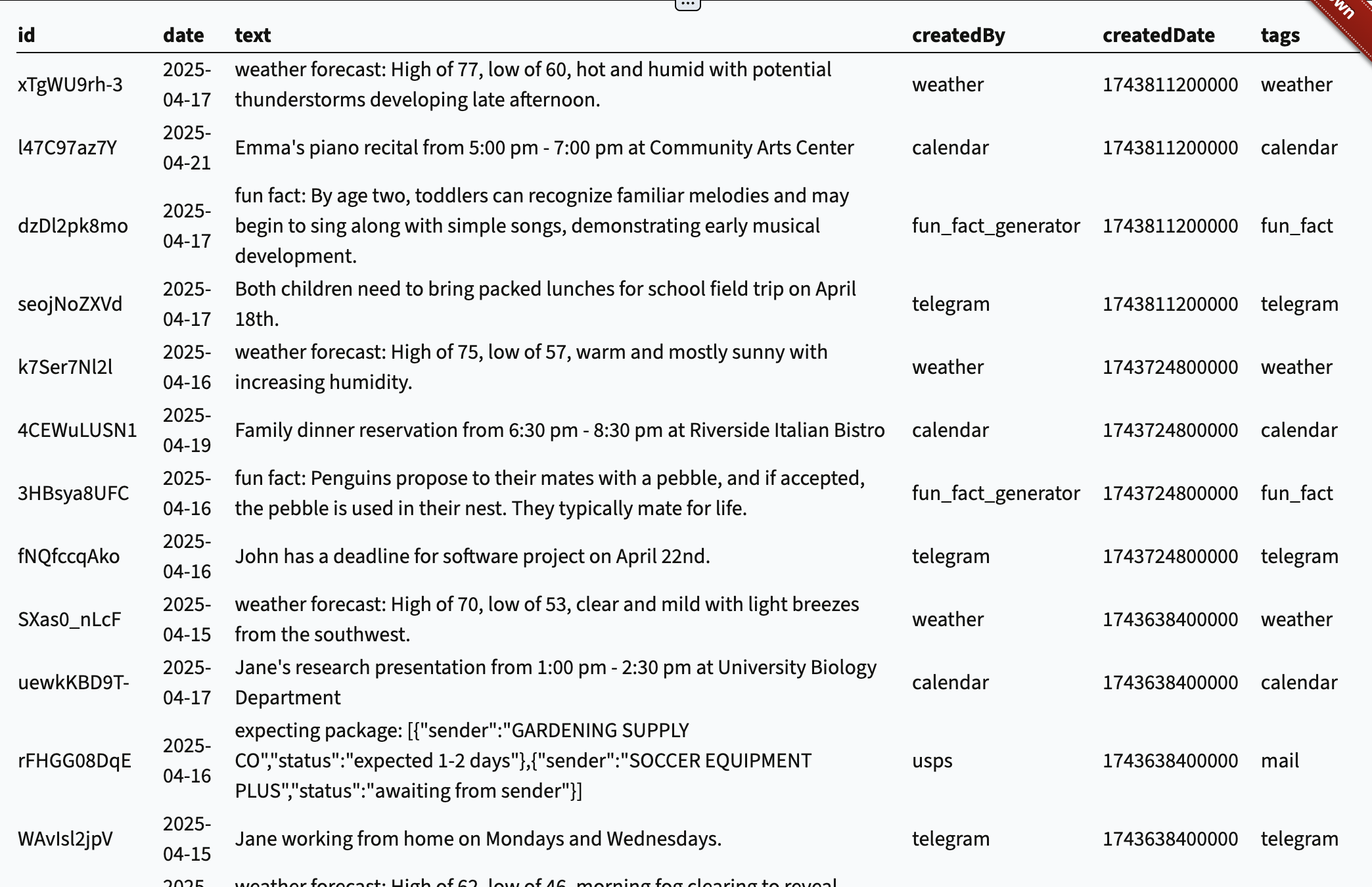

Under the hood, the “notebook” is just a single SQLite table with a few columns. Here’s a more boring view of things:
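For a rough idea of what such a table could look like, here is a small sketch using Python’s built-in `sqlite3` module. The table name and column names (`relevant_on`, `source`, `created_at`) are my guesses for illustration; the real project may name and shape things differently.

```python
import sqlite3

# Hypothetical schema: one row per notebook entry.
SCHEMA = """
CREATE TABLE IF NOT EXISTS memories (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    relevant_on TEXT,            -- ISO date (YYYY-MM-DD); NULL for background info
    text        TEXT NOT NULL,   -- free-form content, later fed to the LLM
    source      TEXT NOT NULL,   -- where the entry came from, e.g. 'telegram'
    created_at  TEXT NOT NULL DEFAULT (datetime('now'))
);
"""


def open_notebook(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the notebook database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def add_memory(conn: sqlite3.Connection, text: str, source: str, relevant_on=None) -> int:
    """Append one entry to the notebook and return its row id."""
    cur = conn.execute(
        "INSERT INTO memories (relevant_on, text, source) VALUES (?, ?, ?)",
        (relevant_on, text, source),
    )
    conn.commit()
    return cur.lastrowid
```

Storing dates as ISO strings keeps them sortable and comparable as plain text, which is enough for "what is relevant this week" style queries.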

But wait: how did the various log entries get there in the first place? In the admin view, we can watch Stevens buzzing around entering things into the log from various sources:

These are just some data importers populating the table:

This system is easily extensible with new importers. An importer is just any process that adds/edits memories in the log. The memory contents can be any arbitrary text, since they’ll just be fed back into an LLM later anyways.
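As a sketch of how little an importer needs to be, here is one possible shape in Python. Everything here is hypothetical: the `Entry` tuple, the two example importers with made-up data, and the uniqueness rule that keeps a repeated cron run from inserting the same dated entry twice are my assumptions, not the project’s actual design.

```python
import sqlite3
from typing import Callable, Iterable, Optional, Tuple

# An entry is (relevant_on, text); relevant_on is an ISO date string or None.
Entry = Tuple[Optional[str], str]
Importer = Callable[[], Iterable[Entry]]


def weather_importer() -> Iterable[Entry]:
    # Stand-in for a real forecast lookup; the data is made up.
    yield ("2025-04-13", "Forecast: light rain in the afternoon, high of 14C")


def mail_importer() -> Iterable[Entry]:
    # Stand-in for parsing a mail or package notification; the data is made up.
    yield ("2025-04-14", "Expected package: replacement kettle")


def run_importers(conn: sqlite3.Connection, importers: dict[str, Importer]) -> int:
    """Run every importer, store what it yields, and return how many rows were new."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS memories (
            relevant_on TEXT,
            text        TEXT NOT NULL,
            source      TEXT NOT NULL,
            UNIQUE (relevant_on, text, source)  -- NULL dates never collide in SQLite
        )
        """
    )
    before = conn.total_changes
    for name, importer in importers.items():
        for relevant_on, text in importer():
            # OR IGNORE skips rows that an earlier run already stored.
            conn.execute(
                "INSERT OR IGNORE INTO memories (relevant_on, text, source) VALUES (?, ?, ?)",
                (relevant_on, text, name),
            )
    conn.commit()
    return conn.total_changes - before
```

Adding a new information source then means writing one more function that yields `(date, text)` pairs and registering it in the dictionary, e.g. `run_importers(conn, {"weather": weather_importer, "mail": mail_importer})`.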

A few quick reflections on this project:

It’s very useful for personal AI tools to have access to broader context from other information sources. Awareness of things like my calendar and the weather forecast turns a dumb chatbot into a useful assistant. ChatGPT recently added memory of past conversations, but there’s lots of information not stored within that silo. I’ve written before about how the endgame for AI-driven personal software isn’t more app silos, it’s small tools operating on a shared pool of context about our lives.

“Memory” can start simple. In this case, the use cases of the assistant are limited, and its information is inherently time-bounded, so it’s fairly easy to query for the relevant context to give to the LLM. It also helps that some modern models have long context windows. As the available information grows in size, RAG and fancier approaches to memory may be needed, but you can start simple.

Vibe coding enables sillier projects. Initially, Stevens spoke with a dry tone, like you might expect from a generic Apple or Google product. But it turned out it was just more fun to have the assistant speak like a formal butler. This was trivial to do, just a couple lines in a prompt. Similarly, I decided to make the admin dashboard views feel like a video game, because why not? I generated the image assets in ChatGPT, and vibe coded the whole UI in Cursor + Claude 3.7 Sonnet; it took a tiny bit of extra effort in exchange for a lot more fun.

Stevens isn’t a product you can run out of the box, it’s just a personal project I made for myself.

But if you’re curious, you can check out the code and fork the project here. You should be able to apply this basic pattern—a single memories table and an extensible constellation of cron jobs—to do lots of other useful things.

I recommend editing the code using your AI editor of choice, with the Val Town CLI to sync to your local filesystem.

2025-03-04 06:13:00

In my opinion, one of the most important ideas in product design is to avoid the “nightmare bicycle”.

Imagine a bicycle where the product manager said: “people don’t get math so we can’t have numbered gears. We need labeled buttons for gravel mode, downhill mode, …”

This is the hypothetical “nightmare bicycle” that Andrea diSessa imagines in his book Changing Minds.

As he points out: it would be terrible! We’d lose the intuitive understanding of how to use the gears to solve any situation we encounter. Which mode do you use for gravel + downhill?

It turns out, anyone can understand numbered gears totally fine after a bit of practice. People are capable!

Along the same lines: one of the worst misconceptions in product design is that a microwave needs to have a button for everything you could possibly cook: “popcorn”, “chicken”, “potato”, “frozen vegetable”, bla bla bla.

You really don’t! You can just have a time (and power) button. People will figure out how to cook stuff.

Good designs expose systematic structure; they lean on their users’ ability to understand this structure and apply it to new situations. We were born for this.

Bad designs paper over the structure with superficial labels that hide the underlying system, inhibiting their users’ ability to actually build a clear model in their heads.

p.s. Changing Minds is one of the best books ever written about design and computational thinking, you should go read it.