2025-03-20 08:00:00

My fiancée and I were on the lookout for a piece of land to build our future home. We had some criteria in mind, such as the distance to certain places and family members, a minimum and a maximum size, and a few other things.

Each country has its own real estate platforms. In the US, listing metadata is usually public and well-structured, allowing for more advanced searches and more transparency in general.

In Austria, we mainly have willhaben.at, immowelt.at, immobilienscout24.at, all of which have no publicly available API.

The first step was to set up email alerts on each platform with our search criteria. Each day, we got emails with all the new listings.

Using the above approach, we quickly got overwhelmed with keeping track of the listings and finding the relevant information. Below are the main problems we encountered:

Many listings were posted on multiple platforms as duplicates, and we had to remember which ones we’d already looked at. Once we investigated a listing, there was no good way to add notes.

Most listings had a lot of unnecessary text, and it took a lot of time to find the relevant information.

[DE] Eine Kindheit wie im Bilderbuch. Am Wochenende aufs Radl schwingen und direkt von zu Hause die Natur entdecken, alte Donau, Lobau, Donauinsel, alles ums Eck. Blumig auch die Straßennamen: Zinienweg, Fuchsienweg, Palargonienweg, Oleanderweg, Azaleengasse, Ginsterweg und AGAVENWEG …. duftiger geht’s wohl nicht.

Which loosely translates to:

[EN] Experience a picture-perfect childhood! Imagine weekends spent effortlessly hopping on your bike to explore nature’s wonders right from your doorstep. With the enchanting Old Danube just a stone’s throw away, adventure is always within reach. Even the street names are a floral delight: Zinienweg, Fuchsienweg, Oleanderweg, Azaleengasse, Ginsterweg, and the exquisite AGAVENWEG… can you imagine a more fragrant and idyllic setting?

Although the real estate agent’s poetic flair is impressive, we’re more interested in practical details such as building regulations, noise level and how steep the lot is.

In Austria, the listings usually show you the distances like this:

Children / Schools

- Kindergarten <500 m

- School <1,500 m

Local Amenities

- Supermarket <1,000 m

- Bakery <2,500 m

However, I personally get very limited information from this. Instead, we have our own list of POIs that we care about, for example the distance to relatives and to our workplace. Also, just showing the straight-line distance is not helpful, as it’s really about how long it takes to get somewhere by car, bike, public transit, or by foot.

99% of the listings in Austria don’t have any address information available, not even the street name. As you can imagine, within a village, being on the main street makes a huge difference in noise levels and traffic compared to being on a side street. Based on the listing alone, it’s impossible to find that information.

The reason for this is that the real estate agents want you to first sign a contract with them, before they give you the address. This is a common practice in Austria, and it’s a way for them to make sure they get their commission.

Living in Vienna but searching for plots about 45 minutes away made scheduling viewings a challenge. Even if we clustered appointments, the process was still time-intensive and stressful. In many cases, just seeing the village was enough to eliminate a lot: highway noise, noticeable power lines, or a steep slope could instantly rule it out.

Additionally, real estate agents tend to have limited information on empty lots—especially compared to houses or condos—so arranging and driving to each appointment wasn’t efficient. We needed a way to explore and filter potential locations before committing to in-person visits.

It became clear that we needed a way to properly automate and manage this process.

I wanted a system that allows me to keep track of all the listings we’re interested in, in a flexible manner:

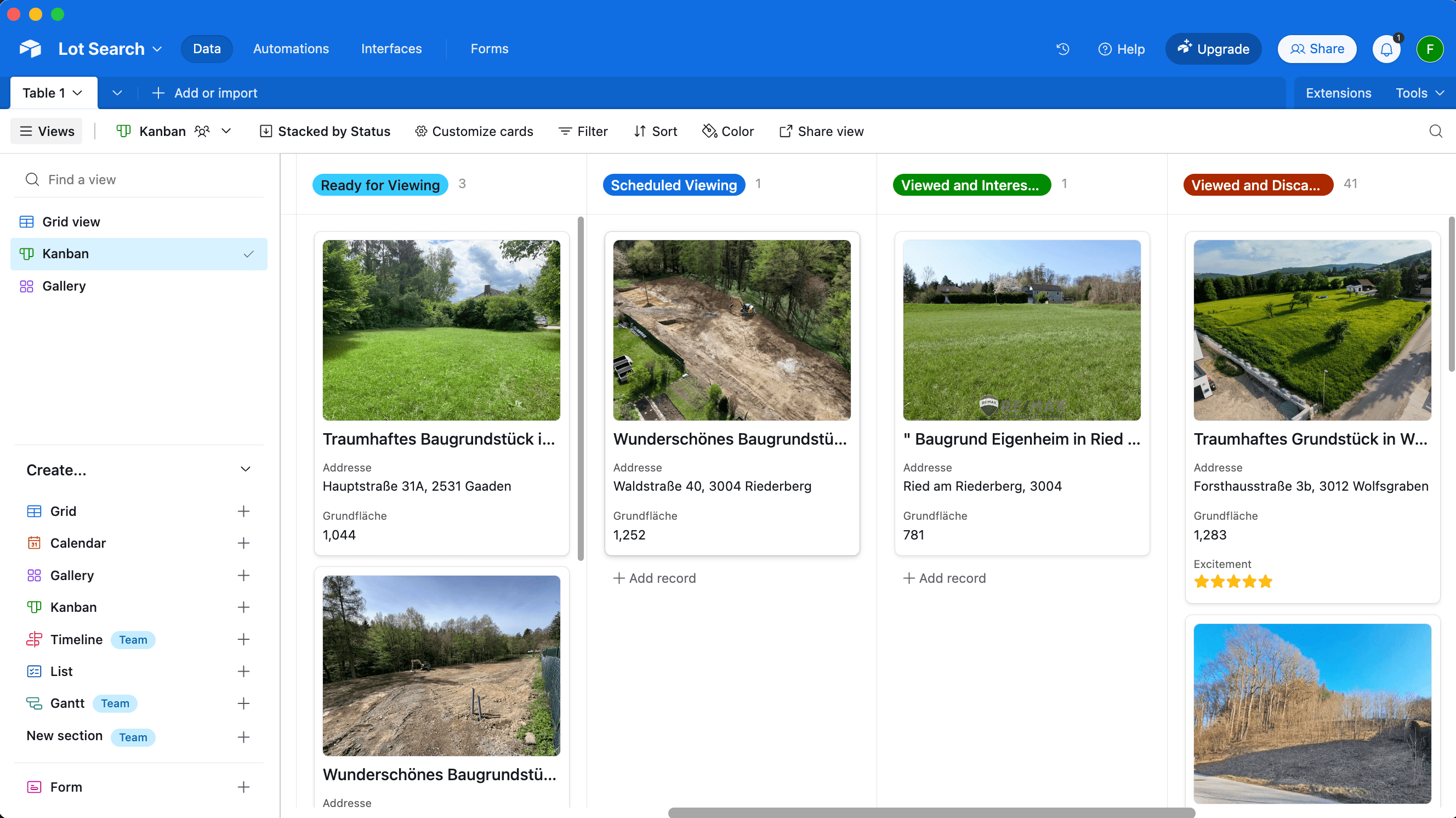

We quickly found Airtable to check all the boxes (Map View is a paid feature):

Whenever we received new listings by email, we manually went through each one and did a first check on overall vibe, price and village location. Only if we were genuinely interested did we add it to our Airtable.

So I wrote a simple Telegram bot to which we could send a link to a listing and it’d process it for us.

The simplest and most straightforward way to keep a copy of the listings was to use a headless browser to access the listing’s description and its images. For that, I used the ferrum Ruby gem, but any similar tech would work. First, we open the page and prepare the website for a screenshot:

browser = Ferrum::Browser.new

browser.goto("https://immowelt.at/expose/123456789") # Open the listing

# Prepare the website: Depending on the page, you might want to remove some elements to see the full content

if browser.current_url.include?("immowelt.at")

browser.execute("document.getElementById('usercentrics-root').remove()") rescue nil

browser.execute("document.querySelectorAll('.link--read-more').forEach(function(el) { el.click() })") # Press all links with the class ".link--read-more", trigger via js as it doesn't work with the driver

elsif browser.current_url.include?("immobilienscout24.at")

browser.execute("document.querySelectorAll('button').forEach(function(el) { if (el.innerText.includes('Beschreibung lesen')) { el.click() } })")

end

Once the website is ready, we take a screenshot of the full page and save the HTML to have access to it later:

# Take a screenshot of the full page

browser.screenshot(path: screenshot_path, full: true)

# Save the HTML to have access later

File.write("listing.html", browser.body)

# Find all images referenced on the page

all_images = image_links = browser.css("img").map do |img|

{ name: img["alt"], src: img["src"] }

end

# The above `all_images` will contain a lot of non-relevant images, such as logos, etc.

# Below some messy code to get rid of the majority

image_links = image_links.select do |node|

node[:src].to_s.start_with?("http") && !node[:src].include?(".svg") && !node[:src].include?("facebook.com") # `to_s` guards against <img> tags without a src

end

Important Note: All data processing was done manually on a case-by-case basis for listings we were genuinely interested in. We processed a total of 55 listings over several months across 3 different websites, never engaging in automated scraping or violating any platforms’ terms of service.

One of the main problems with the listings was the amount of irrelevant text, which made it hard to find the information we cared about, like noise levels, building regulations, etc.

Hence, we prepared a list of questions for the AI to answer, based on the listing’s description:

generic_context = "You are helping a customer search for a property. The customer has shown you a listing for a property they want to buy. You want to help them find the most important information about this property. For each bullet point, please use the specified JSON key. Please answer the following questions:"

prompts = [

"title: The title of the listing",

"price: How much does this property cost? Please only provide the number, without any currency or other symbols.",

"size: The total plot area (Gesamtgrundfläche) of the property in m². If multiple areas are provided, please specify '-1'.",

"building_size: The buildable area or developable area—or the building site—in m². If percentages for buildability are mentioned, please provide those. If no information is available, please provide '-1'.",

"address: The address, or the street + locality. Please format it in the customary Austrian way. If no exact street or street number is available, please only provide the locality.",

"other_fees: Any additional fees or costs (excluding broker’s fees) that arise either upon purchase or afterward. Please answer in text form. If no information is available, please respond with an empty string ''.",

"connected: Is the property already connected (for example, electricity, water, road)? If no information is available, please respond with an empty string ''.",

"noise: Please describe how quiet or how loud the property is. Additionally, please mention if the property is located on a cul-de-sac. If no details are provided, please use an empty string ''. Please use the exact wording from the advertisement.",

"accessible: Please reproduce, word-for-word, how the listing describes the accessibility of the property. Include information on how well public facilities can be reached, whether by public transport, by car, or on foot. If available, please include the distance to the nearest bus or train station.",

"nature: Please describe whether the property is near nature—whether there is a forest or green space nearby, or if it is located in a development, etc. If no information is available, respond with an empty string ''.",

"orientation: Please describe the orientation of the property. Is it facing south, north, east, west, or a combination? If no information is available, respond with an empty string ''.",

"slope: Please describe whether the property is situated on a slope or is flat. If it is on a slope, please include details on how steep it is. If no information is available, respond with an empty string ''.",

"existingBuilding: Please describe whether there is an existing old building on the property. If there is, please include details. If no information is available, respond with an empty string ''.",

"summary: A summary of this property’s advertisement in bullet points. Please include all important and relevant information that would help a buyer make a decision, specifically regarding price, other costs, zoning, building restrictions, any old building, a location description, public transport accessibility, proximity to Vienna, neighborhood information, advantages or special features, and other standout aspects. Do not mention any brokerage commission or broker’s fee. Provide the information as a bullet-point list. If there is no information about a specific topic, please omit that bullet point entirely. Never say 'not specified' or 'not mentioned' or anything similar. Please do not use Markdown."

]

Now we need the full text of the listing. The ferrum gem does a good amount of magic to easily access the text without the need to parse the HTML yourself.

full_text = browser.at_css("body").text

All that’s left is to actually access the OpenAI API (or similar) to get the answers to the questions:

ai_responses = ai.ask(prompts: prompts, context: full_text)
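The `ai.ask` call above is a thin wrapper whose internals are not shown here. As a rough sketch of what such a wrapper might do around the actual API call, the snippet below builds one prompt from the question list and keeps only the requested keys from a JSON reply. `build_prompt` and `parse_ai_response` are hypothetical names, and the sketch assumes the model was instructed to reply with a single JSON object:

```ruby
require "json"

# Hypothetical helper: joins the shared context, the question list and the
# listing text into a single prompt string.
def build_prompt(generic_context:, prompts:, listing_text:)
  questions = prompts.map { |prompt| "- #{prompt}" }.join("\n")
  "#{generic_context}\n#{questions}\n\nListing:\n#{listing_text}"
end

# Hypothetical helper: parses the reply as JSON and keeps only the keys we
# asked for (the part of each prompt before the first colon).
def parse_ai_response(raw_json, prompts:)
  expected_keys = prompts.map { |prompt| prompt.split(":", 2).first }
  parsed = JSON.parse(raw_json)
  expected_keys.to_h { |key| [key, parsed[key]] }
end
```

Dropping unexpected keys keeps stray fields out of the Airtable record, and a missing key simply shows up as `nil`.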

To upload the resulting listing to Airtable I used the airrecord gem.

create_hash = {

"Title" => ai_responses["title"],

"Price" => ai_responses["price"].to_i,

"Noise" => ai_responses["noise"],

"URL" => browser.url,

"Summary" => ("- " + Array(ai_responses["summary"]).join("\n- "))

}

new_entry = MyEntry.create(create_hash)

For the screenshots, you’ll need some additional boilerplate code to first download the images, then upload them to a temporary S3 bucket, and from there to Airtable using the Airtable API.

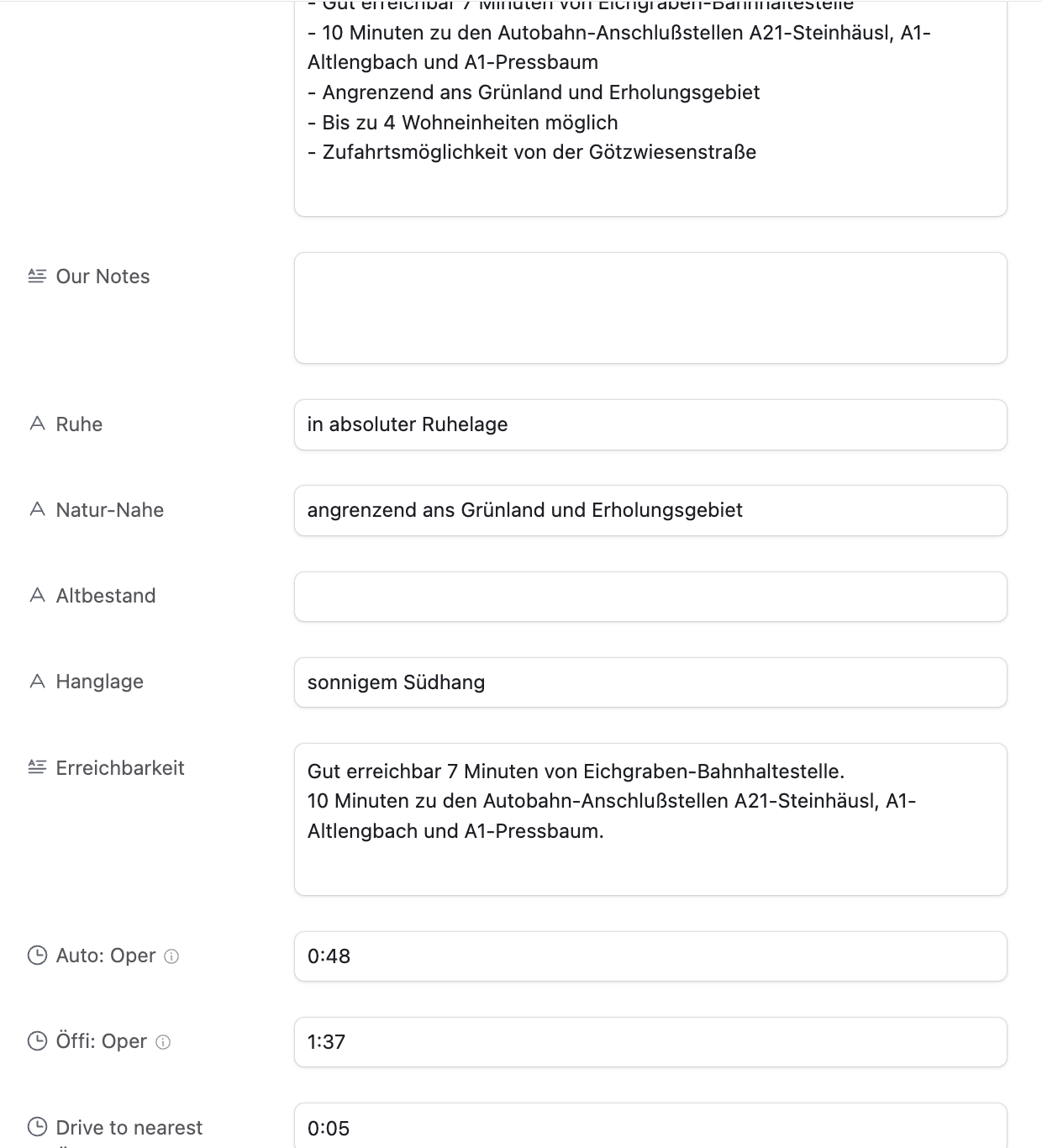

Below you can see the beautifully structured data in Airtable (in German), already including the public transit times:

The real estate agents usually actively blur any street names or other indicators if there is a map in the listing. There is likely no good automated way to recover the address. Since this project only ever parsed listings I was already interested in, I only had a total of 55 listings to manually find the address for.

Turns out, for around 80% of the listings I was able to find the exact address using one of the following approaches:

Variant A: Using geoland.at

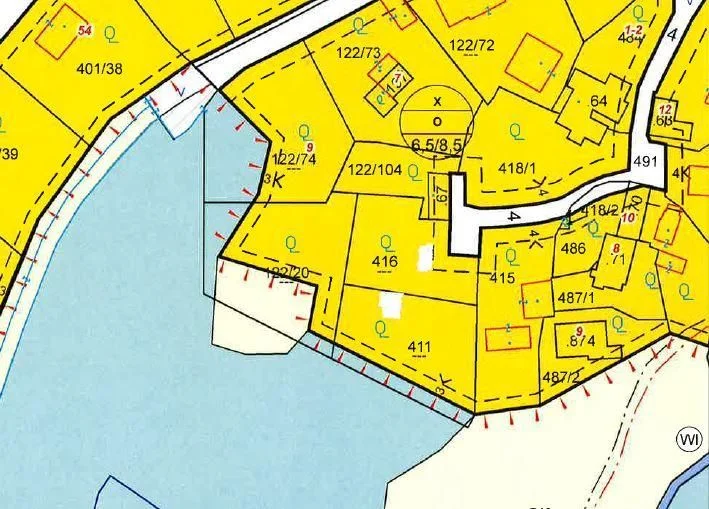

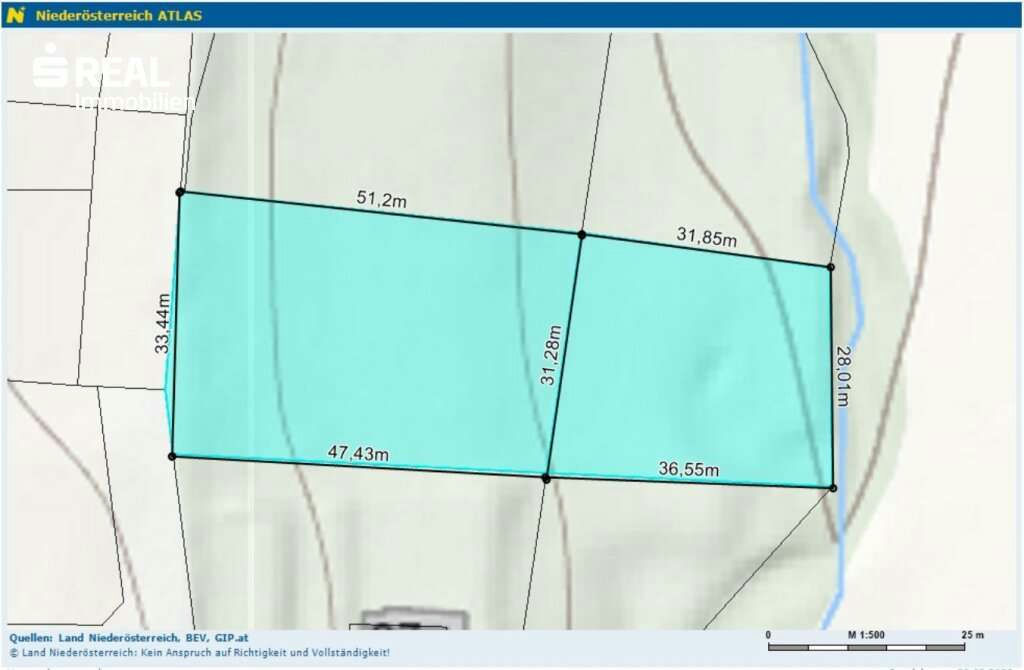

This approach is Austria-specific, but I could imagine other countries have similar systems in place. I noticed many listings had a map that looks like this:

There are no street names, street numbers or river names. But you can see some numbers printed on each lot. Turns out, those are the “Grundstücksnummern” (lot numbers). The number tied together with the village name is unique, so you’ll be able to find that area of the village within a minute.

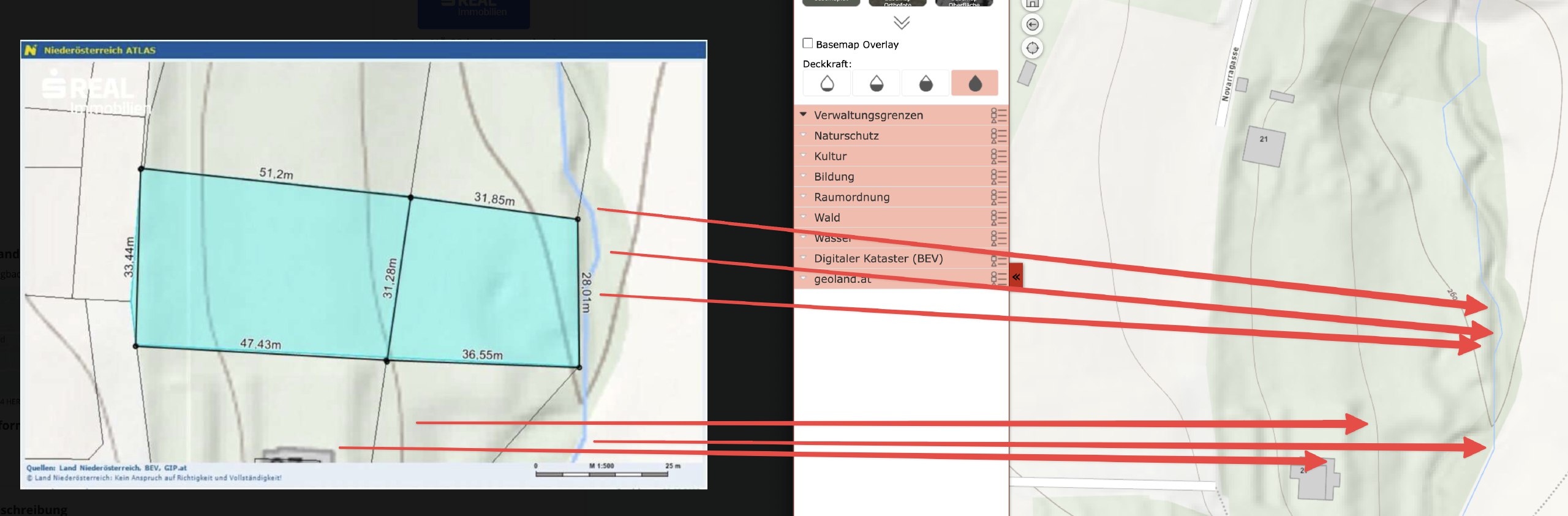

Variant B: By analysing the angles of the roads and rivers

The above map was a tricky one: It’s zoomed in so much that you can’t really see any surroundings. Also, the real estate agent hid the lot numbers and switched to a terrain view.

The only orientation I had was the river. This village had a few rivers, but only 2 of them went in roughly the direction shown. So I went through those rivers manually to see where the shape of the river matched the map, together with the light green background in the center, and the gray outsides. After around 30 minutes, I was able to find the exact spot (left: listing, right: my map).

Variant C: Requesting the address from the real estate agent

As a last resort, we contacted the real estate agent and asked for the address.

I want to emphasize: this system isn’t about avoiding real estate agents, but optimizing our search efficiency (like getting critical details same-day, and not having to jump on a call). For any property that passed our vetting, we contacted the agent and went through the purchase process as usual.

Once the address was manually entered, the Ruby script would pick up that info, and calculate the commute times to a pre-defined list of places using the Google Maps API. This part of the code is mostly boilerplate to interact with the API, and parse its responses.

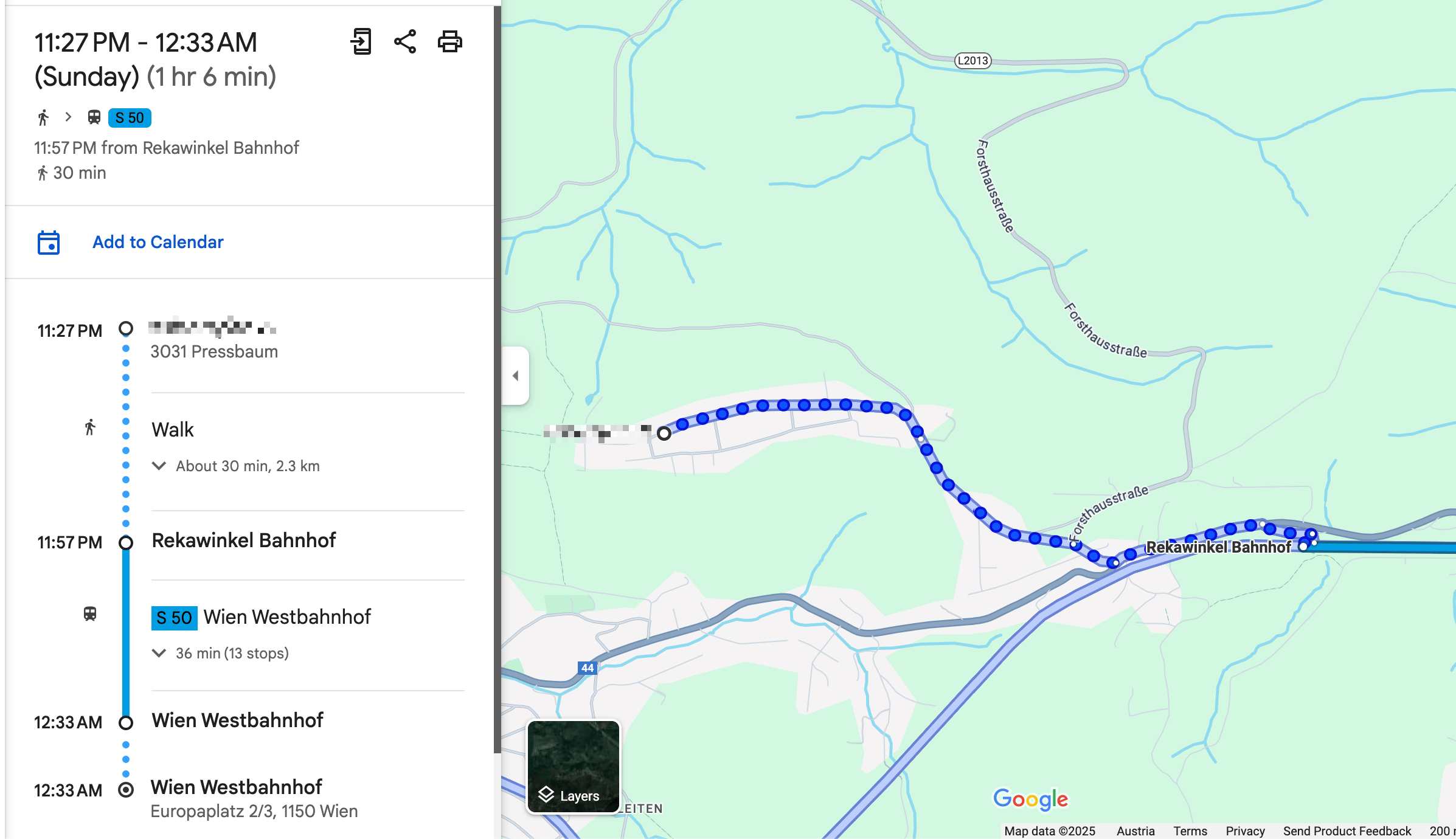

For each destination we were interested in, we calculated the commute time by car, bike, public transit, and by foot.
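As a minimal sketch of that boilerplate, assuming the Google Maps Directions API JSON endpoint with its `origin`, `destination`, `mode` and `key` parameters: `directions_uri` and `parse_commute` are hypothetical helper names, and the actual HTTP call is left out.

```ruby
require "json"
require "uri"

DIRECTIONS_ENDPOINT = "https://maps.googleapis.com/maps/api/directions/json"
# The four travel modes the Directions API accepts
TRAVEL_MODES = %w[driving bicycling transit walking].freeze

# Hypothetical helper: builds the request URI for one origin/destination/mode.
def directions_uri(from:, to:, mode:, api_key:)
  uri = URI(DIRECTIONS_ENDPOINT)
  uri.query = URI.encode_www_form(origin: from, destination: to, mode: mode, key: api_key)
  uri
end

# Hypothetical helper: extracts duration and distance of the first leg of the
# first route from a parsed response, or nil if no route was found.
def parse_commute(data)
  leg = data.dig("routes", 0, "legs", 0)
  return nil if leg.nil?

  {
    total_duration_seconds: leg.dig("duration", "value"),
    total_distance_meters: leg.dig("distance", "value")
  }
end

# Usage (network call not executed here):
#   data = JSON.parse(Net::HTTP.get(directions_uri(from: a, to: b, mode: "transit", api_key: key)))
#   parse_commute(data)
```

Looping over `TRAVEL_MODES` for every destination gives one row of commute times per listing.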

One key aspect that I was able to solve was the “getting to the train station” part. In most cases, we want to be able to take public transit, but with Google Maps it’s an “all or nothing”, as in, you either use public transit for the whole route, or you don’t.

More realistically, we wanted to get to the train station by bike or car, and then take the train from there.

The code below shows a simple way I was able to achieve this. I’m well aware that this may not work for all the cases, but it worked well for all the 55 places I used it for.

if mode == "transit"

# For all routes calculated for public transit, first extract the "walking to the train station" part

# In the above screenshot, this would be 30mins and 2.3km

res[:walking_to_closest_station_time_seconds] = data["routes"][0]["legs"][0]["steps"][0]["duration"]["value"]

res[:walking_to_closest_station_distance_meters] = data["routes"][0]["legs"][0]["steps"][0]["distance"]["value"]

# Get the start and end location of the walking part

start_location = data["routes"][0]["legs"][0]["steps"][0]["start_location"]

end_location = data["routes"][0]["legs"][0]["steps"][0]["end_location"]

# Now calculate the driving distance to the nearest station

res[:drive_to_nearest_station_duration_seconds] = self.calculate_commute_duration(

from: "#{start_location["lat"]},#{start_location["lng"]}",

to: "#{end_location["lat"]},#{end_location["lng"]}",

mode: "driving")[:total_duration_seconds]

end
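One way to turn those values into a single "drive, then train" estimate is sketched below. `park_and_ride_duration_seconds` is a hypothetical helper, and it assumes `res` also holds the full transit duration under `:total_duration_seconds`. It ignores parking time and the chance of catching a different train:

```ruby
# Hypothetical helper: swaps the initial walk of a transit route for a drive
# to the same station. Assumes the first step of the route was the walk.
def park_and_ride_duration_seconds(res)
  transit_total = res[:total_duration_seconds]
  walk = res[:walking_to_closest_station_time_seconds]
  drive = res[:drive_to_nearest_station_duration_seconds]
  return nil if [transit_total, walk, drive].any?(&:nil?)

  transit_total - walk + drive
end
```

For a 60-minute transit route that starts with a 30-minute walk, a 5-minute drive to the station would bring the estimate down to 35 minutes.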

Once we had a list of around 15 lots we were interested in, we planned a day to visit them all. Because we had the exact address, there was no need for an appointment.

To find the most efficient route I used RouteXL. You can upload a list of addresses you need to visit, and define precise rules, and it will calculate the most (fuel & time) efficient route, which you can directly import to Google Maps for navigation.

While driving to the next stop, my fiancée read the summary notes from the Airtable app, so we already knew the price, description, size and other characteristics of the lot by the time we arrived.

This approach was a huge time saver for us. Around 75% of the lots we could immediately rule out as we arrived. Sometimes there was a loud road, a steep slope, a power line, a noisy factory nearby, or most importantly: it just didn’t feel right. There were huge differences in vibes when you stand in front of a lot.

We always respected property boundaries - it was completely sufficient to stand in front of the lot, and walk around the area a bit to get a very clear picture.

After viewing 42 lots in-person on 3 full-day driving trips, we found the perfect one for us and contacted the real estate agent to do a proper viewing. We immediately knew it was the right one, met the owner, and signed the contract a few weeks later.

The system we built was a huge time saver for us, and allowed us to smoothly integrate the search process into our daily lives. I loved being able to easily access all the information we needed, and take notes on the go, while exploring the different villages of the Austrian countryside.

If you’re interested in getting access to the code, please reach out to me. I’m happy to share more info, but I want to make sure it’s used responsibly and in a way that doesn’t violate any terms of service of the platforms we used. Also, it’s quite specific to our use case, so it may need some adjustments to work for you.

2024-08-07 08:00:00

Note: This is a cross-post of the original publication on contextsdk.com.

This is the third post of our machine learning (ML) for iOS apps series. Be sure to read part 1 and part 2 first. So far we’ve received incredibly positive feedback. We always read about the latest advancements in the space of Machine Learning and Artificial Intelligence, but at the same time, we mostly use external APIs that abstract out the ML aspect, without us knowing what’s happening under the hood. This blog post series helps us fully understand the basic concepts of how a model comes to be, how it’s maintained and improved, and how to leverage it in real-life applications.

One critical aspect of machine learning is to constantly improve and iterate your model. There are many reasons for that, from ongoing changes in user-behavior, other changes in your app, all the way to simply getting more data that allows your model to be more precise.

In this article we will cover:

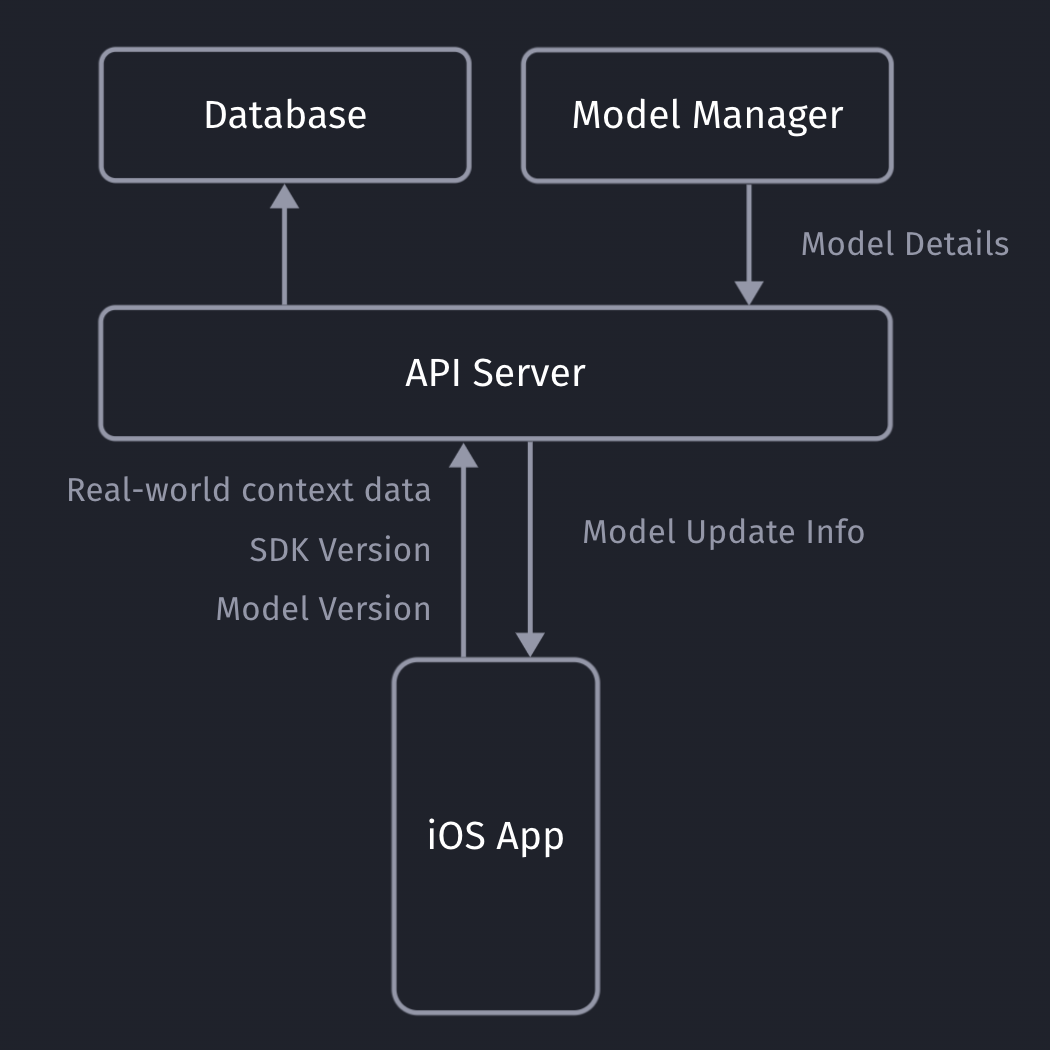

Our iOS app sends non-PII real-world context data to our API server, which will store the collected data in our database (full details here).

Our API servers respond with the latest model details so the client can decide if it needs to download an update or not.

It’s important for you to be able to remotely calibrate & fine-tune your models and their metadata, with the random upsell chance being one of those values. Since our SDK already communicates with our API server to get the download info for the most recent ML model, we can provide those details to the client together with the download URL.

private struct SaveableCustomModelInfo: Codable {

let modelVersion: String

let upsellThreshold: Double

let randomUpsellChance: Double

let contextSDKSpecificMetadataExample: Int

}

At ContextSDK, we generate and use more than 180 on-device signals to evaluate how good a moment is to show a certain type of content. With machine learning for this use-case, you don’t want a model to have 180 inputs: training such a model would require enormous amounts of data, because the training classifier wouldn’t know which columns to start with. Without going into too much data science detail, you’d want the ratio between columns (inputs) and rows (data entries) to meet certain requirements.

Hence, we have multiple levels of data processing and preparations when training our Machine Learning model. One step is responsible for finding the context signals that contribute the highest amount of weight in the model, and focus on those. The signals used vary heavily depending on the app.

It was easy to dynamically pass in the signals that are used by a given model in our architecture. We’ve published a blog post on how our stack enforces matching signals across all our components.

For simple models, you can use the pre-generated Swift classes for your model. Apple recommends using the MLFeatureProvider for more complicated cases, like when your data is collected asynchronously, to reduce the amounts of data you’d need to copy, or for other more complicated data sources.

func featureValue(for featureName: String) -> MLFeatureValue? {

// Fetch your value here based on the `featureName`

let stringValue = self.signalsManager.signal(byString: featureName) // Simplified example

return MLFeatureValue(string: stringValue.string())

}

We won’t go into full detail on how we implemented the mapping of the various different types. We’ve created a subclass of MLFeatureProvider and implemented the featureValue method to dynamically get the right values for each input.

As part of the MLFeatureProvider subclass, you need to provide a list of all featureNames. You can easily query the required parameters for a given CoreML file using the following code:

featureNames = Set(mlModel.modelDescription.inputDescriptionsByName.map({$0.value.name}))

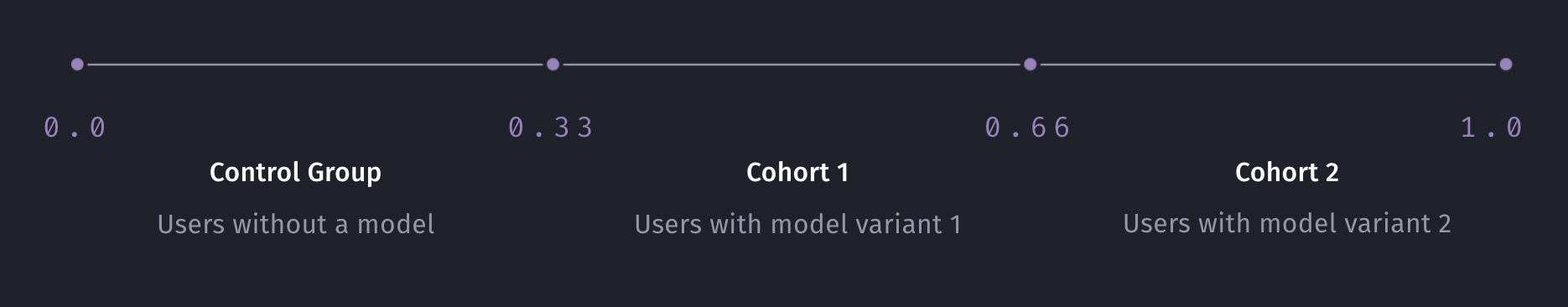

Most of us have used AB tests with different cohorts, so you’re most likely already familiar with this concept. We wanted something basic, with little complexity, that works on-device, and doesn’t rely on any external infrastructure to assign the cohort.

For that, we created ControlGrouper, a class that takes in any type of identifier that we only use locally to assign a control group:

import CommonCrypto

class ControlGrouper {

/***

The groups are defined as ranges between the upperBoundInclusive of groups.

The first group will go from 0 to upperBoundInclusive[0]

The next group from upperBoundInclusive[0] to upperBoundInclusive[1]

The last group will be returned if no other group matches, though for clarity the upperBoundInclusive should be set to 0.

If there is only 1 group regardless of specified bounds it is always used. Any upperBoundInclusive higher than 1 acts just like 1.

Groups will be automatically sorted so do not need to be passed in in the correct order.

An arbitrary number of groups can be supplied and given the same userIdentifier and modelName the same assignment will always be made.

*/

class func getGroupAssignment<T>(userIdentifier: String, modelName: String, groups: [ControlGroup<T>]) -> T {

if (groups.count <= 1) {

return groups[0].value

}

// We create a string we can hash using all components that should affect the result of the group assignment.

let assignmentString = "\(userIdentifier)\(modelName)".data(using: String.Encoding.utf8)

// Using SHA256 we can map the arbitrary assignment string on to a 256bit space and due to the nature of hashing:

// The distribution of input string will be even across this space.

// Any tiny change in the assignment string will be massive difference in the output.

var digest = [UInt8](repeating: 0, count: Int(CC_SHA256_DIGEST_LENGTH))

if let value = (assignmentString as? NSData) {

CC_SHA256(value.bytes, CC_LONG(value.count), &digest)

}

// We slice off the first few bytes and map them to an integer, then we can check from 0-1 where this integer lies in the range of all possible buckets.

if let bucket = UInt32(data: Data(digest).subdata(in: 0..<4)) {

let position = Double(bucket) / Double(UInt32.max)

// Finally knowing the position of the installation in our distribution we can assign a group based on the requested groups by the caller.

// We sort here in case the caller does not provide the groups from lowest to highest.

let sortedGroups = groups.sorted(by: {$0.upperBoundInclusive < $1.upperBoundInclusive})

for group in sortedGroups {

if (position <= group.upperBoundInclusive) {

return group.value

}

}

}

// If no group matches, we use the last one as we can just imagine its upperBoundInclusive extending to the end.

return groups[groups.count - 1].value

}

}

struct ControlGroup<T> {

let value: T

let upperBoundInclusive: Double

}

For example, this allows us to split the user-base into 3 equally sized groups, one of which being the control group.
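To illustrate the three-way split, here is a Ruby sketch of the same hashing idea, for instance to reason about the distribution in an analysis script. It is not the on-device implementation. Reading the first four digest bytes as big-endian is an assumption, so the assignments are not guaranteed to match the Swift class, and all names are illustrative:

```ruby
require "digest"

ControlGroup = Struct.new(:value, :upper_bound_inclusive, keyword_init: true)

# Maps (user_identifier, model_name) to a stable position in 0..1 via SHA256
# and returns the first group whose upper bound covers that position.
def group_assignment(user_identifier:, model_name:, groups:)
  return groups.first.value if groups.size <= 1

  digest = Digest::SHA256.digest("#{user_identifier}#{model_name}")
  bucket = digest[0, 4].unpack1("N") # first 4 bytes as big-endian uint32 (assumption)
  position = bucket.to_f / 0xFFFFFFFF
  sorted = groups.sort_by(&:upper_bound_inclusive)
  match = sorted.find { |group| position <= group.upper_bound_inclusive }
  (match || groups.last).value
end

# Three equally sized groups, the first one being the control group
THREE_WAY_SPLIT = [
  ControlGroup.new(value: :control, upper_bound_inclusive: 1.0 / 3),
  ControlGroup.new(value: :variant_a, upper_bound_inclusive: 2.0 / 3),
  ControlGroup.new(value: :variant_b, upper_bound_inclusive: 1.0)
].freeze
```

Because the position depends only on the hashed string, the same identifier and model name always land in the same group, without any server round trip.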

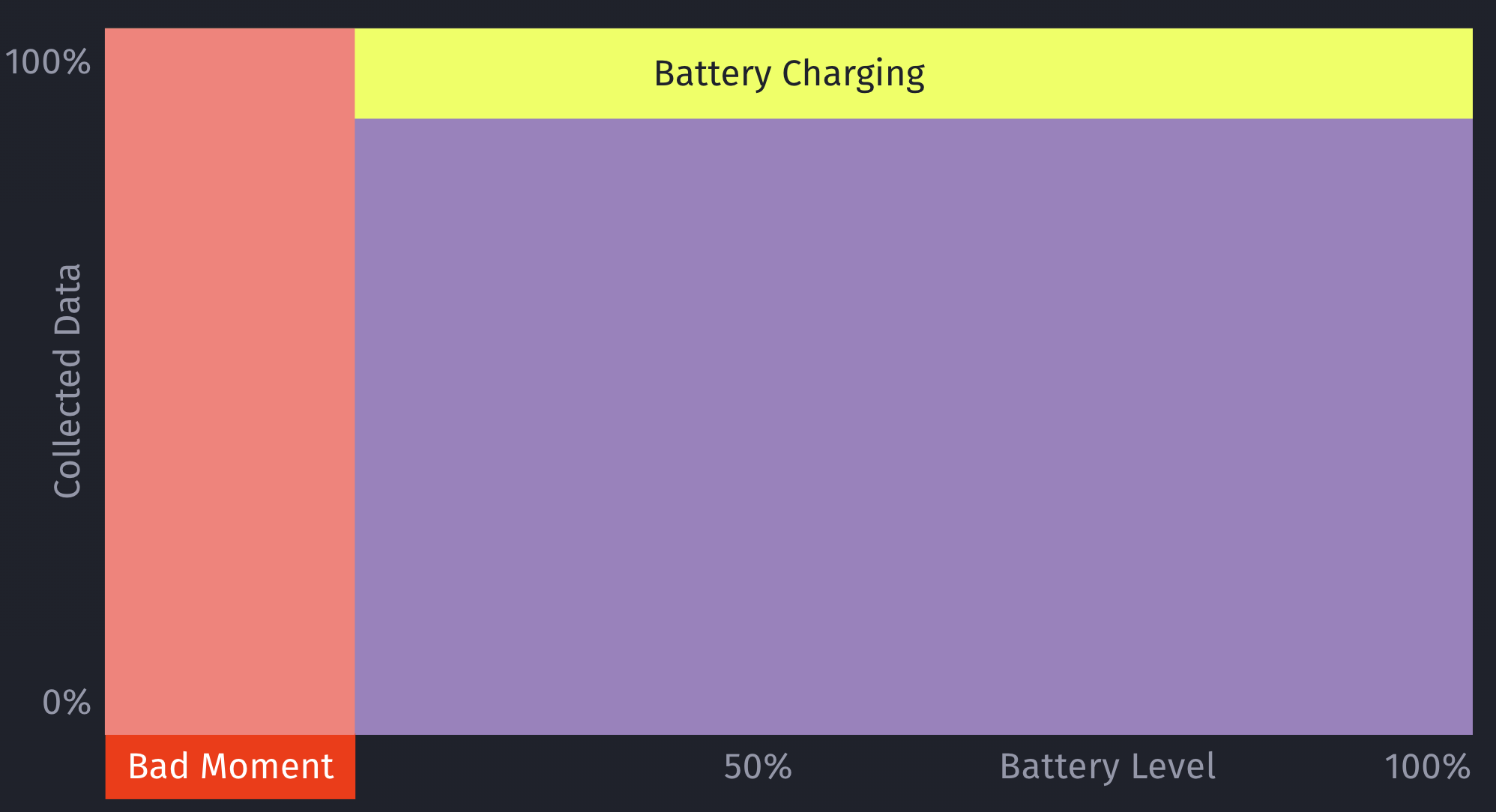

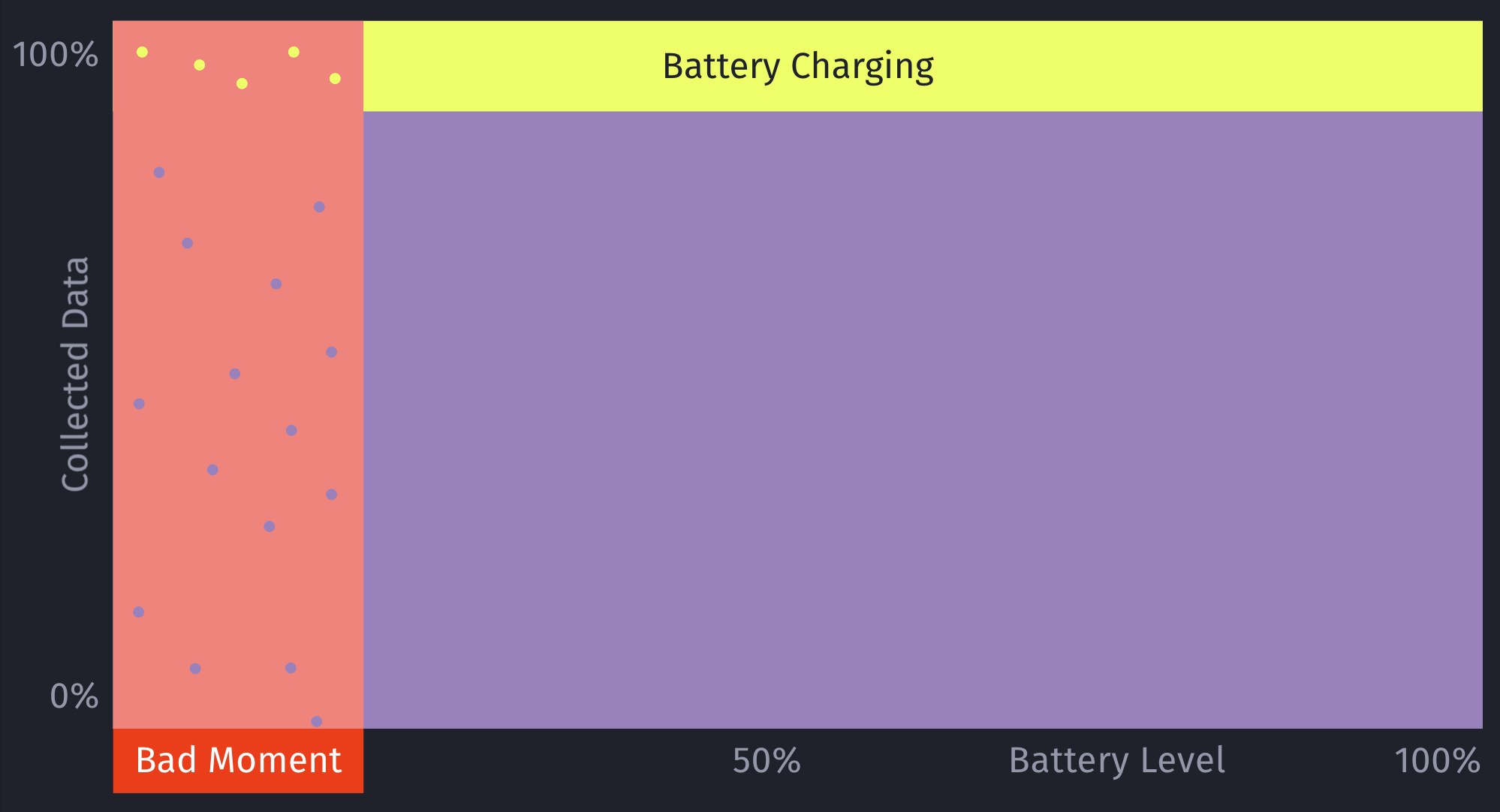

Depending on what you use the model for, it is easy to end up in some type of data blindness once you start using your model.

For example, let’s say your model decides it’s a really bad time to show a certain type of prompt if the battery is below 7%. While this may be statistically correct based on real data, this would mean you’re not showing any prompts for those cases (< 7% battery level) anymore.

However, what if there are certain exceptions for those cases, that you’ll only learn about once you’ve collected more data? For example, maybe that <7% battery level rule doesn’t apply, if the phone is currently plugged in?

This is an important issue to consider when working with machine learning: Once you start making decisions based on your model, you’ll create blind-spots in your learning data.

The only way to get additional, real-world data for those blind spots is to still sometimes decide to show a certain prompt even if the ML model deems it to be a bad moment to do so. This should be optimized to a small enough percentage that it doesn’t meaningfully reduce your conversion rates, but at the same time enough that you’ll get meaningful, real-world data to train and improve your machine learning model over time. Once we train the initial ML model, we look into the absolute numbers of prompts & sales, and determine an individual value for what the percentage should be.

Additionally, by introducing this concept of still randomly showing a prompt even if the model deems it to be a bad moment, it can help to prevent situations where a user may never see a prompt, due to the rules of the model. For example, a model may learn that there are hardly any sales in a certain region, and therefore decide to always skip showing prompts.

This is something we prevent on multiple levels for ContextSDK, and this one is the very last resort (on-device) to be sure this won’t happen. We continuously analyze, and evaluate our final model weights, as well as the incoming upsell data, to ensure our models leverage enough different types of signals.

let hasInvalidResult = upsellProbability == -1

let coreMLUpsellResult = (upsellProbability >= executionInformation.upsellThreshold || hasInvalidResult)

// In order to prevent cases where users never see an upsell this allows us to still show an upsell even if the model thinks it's a bad time.

let randomUpsellResult = Double.random(in: 0...1) < executionInformation.randomUpsellChance

let upsellResult = (coreMLUpsellResult || randomUpsellResult) ? UpsellResult.shouldUpsell : .shouldSkip

// We track if this prompt was shown as part of our random upsells, this way we can track performance.

modelBasedSignals.append(SignalBool(id: .wasRandomUpsell, value: randomUpsellResult && !coreMLUpsellResult))

As an additional layer, we also have a control group (with varying sizes) that we generate and use locally.

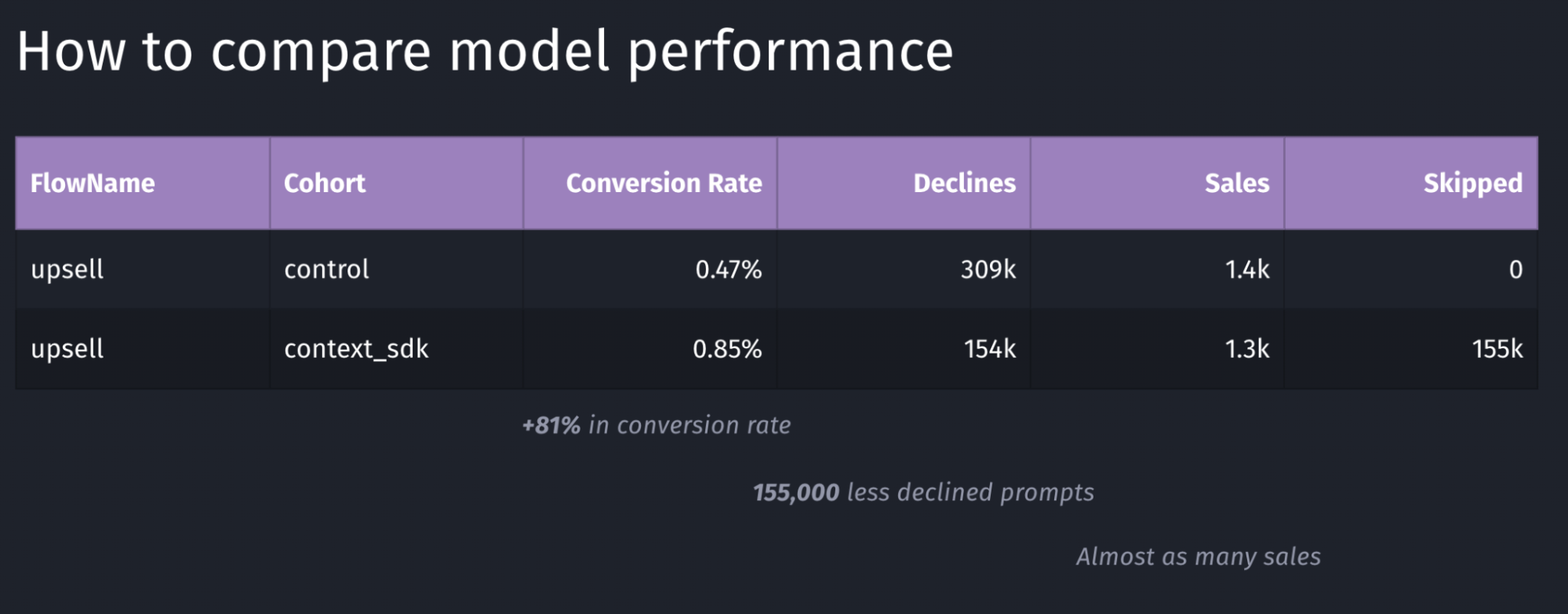

We’re working with a customer who’s currently aggressively pushing prompts onto users. They learned that those prompts lead to churn in their user-base, so their number one goal was to reduce the number of prompts, while keeping as much of the sales as possible.

We decided on a 50/50 split for their user-base to have two large enough buckets to evaluate the model’s performance.

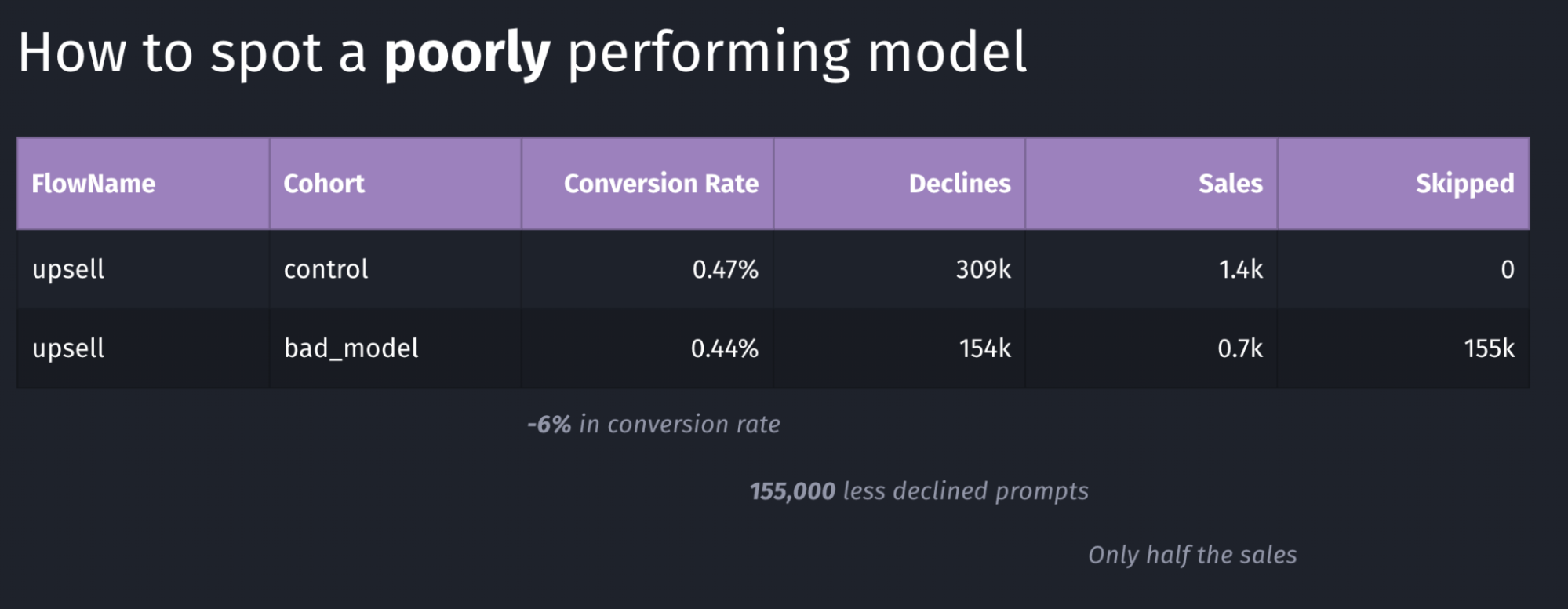

Depending on the goal of your model, you may want to target other key metrics to evaluate the performance of your model. In the table above, the main metric we looked for was the conversion rate, which in this case has a performance of +81%.

Above is an example of a model with poor performance: the conversion rate went down by 6% and the total number of sales dropped by half. Again, in our case we were looking for an increase in conversion rate, and here that goal is clearly not achieved.

Our systems continuously monitor whatever key metric we want to push (usually sales or conversion rate, depending on the client’s preference). As soon as a meaningful number of sales has been made in both buckets, the performance is compared, and if it doesn’t meet our desired outcomes, the rollout is immediately stopped and rolled back, thanks to the over-the-air update system described in this article.
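A minimal sketch of such a check, written in Ruby purely for illustration. The function names, the `min_sales` and `min_uplift` thresholds and the three outcomes are assumptions, not the actual monitoring code:

```ruby
# Conversion rate = sales per prompt shown
def conversion_rate(sales:, prompts:)
  return 0.0 if prompts.zero?

  sales.to_f / prompts
end

# Relative change of the model bucket versus the control bucket (0.81 = +81%)
def relative_uplift(control:, model:)
  base = conversion_rate(**control)
  return nil if base.zero?

  (conversion_rate(**model) - base) / base
end

# Hypothetical decision rule: wait until both buckets have enough sales,
# then roll back if the uplift is below the desired minimum.
def rollout_decision(control:, model:, min_sales: 100, min_uplift: 0.0)
  return :keep_collecting if control[:sales] < min_sales || model[:sales] < min_sales

  uplift = relative_uplift(control: control, model: model)
  return :keep_collecting if uplift.nil?

  uplift >= min_uplift ? :continue_rollout : :roll_back
end
```

A real system would likely add a statistical significance test on top of the raw sales threshold. The sketch only shows the shape of the decision.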

In this article we’ve learned about the complexity of deploying machine learning models, and measuring and comparing their performance. It’s imperative to continuously monitor how well a model is working, and have automatic safeguards and corrections in place.

Overall, Apple has built excellent machine learning tools around CoreML, which have been built into iOS for many years, making it easy to build intelligent, offline-first mobile apps that nicely blend into the user’s real-world environment.

2024-05-22 08:00:00

Note: This is a cross-post of the original publication on contextsdk.com.

This is the second blog post covering various machine learning (ML) concepts of iOS apps. Be sure to read part 1 first. Initially this was supposed to be a 2-piece series, but thanks to the incredible feedback on the first one, we’ve decided to cover even more on this topic, and go into more detail.

For some apps it may be sufficient to train an ML (machine learning) model once, and ship it with the app itself. However, most mobile apps are way more dynamic than that, constantly changing and evolving. It is therefore important to be able to quickly adapt and improve your machine learning models, without having to do a full app release, and go through the whole App Store release & review process.

In this series, we will explore how to operate machine learning models directly on your device instead of relying on external servers via network requests. Running models on-device enables immediate decision-making, eliminates the need for an active internet connection, and can significantly lower infrastructure expenses.

In the example of this series, we’re using a model to make a decision on when to prompt the user to upgrade to the paid plan based on a set of device-signals, to reduce user annoyances, while increasing our paid subscribers.

We believe in the craft of beautiful, reliable and fast mobile apps. Running machine learning models on-device makes your app responsive, snappy and reliable. One aspect to consider is the first app launch, which is critical to prevent churn and get the user hooked to your app.

To ensure your app works out of the box right after its installation, we recommend shipping your pre-trained CoreML file with your app. Our part 1 covers how to easily achieve this with Xcode.

Your iOS app needs to know when a new version of the machine learning file is available. This is as simple as regularly sending an empty network request to your server. Your server doesn’t need to be sophisticated: we initially started with a static file host (like S3 or similar) that we update whenever we have a new model ready.

The response could use whatever versioning you prefer:

The iOS client would then compare the version number of the most recently downloaded model with whatever the server responds with. Which approach you choose is up to you and depends on how you want to roll out, monitor and version your machine learning models.

Over time, you most likely want to optimize the number of network requests. Our approach combines the outcome collection we use to train our machine learning models with the model update checks, while also leveraging a flushing technique to batch many events together to minimize overhead and increase efficiency.

Ideally, the server’s response already contains the download URL of the latest model. Here is an example response:

{

"url": "https://krausefx.github.io/CoreMLDemo/models/80a2-82d1-bcf8-4ab5-9d35-d7f257c4c31e.mlmodel"

}

The above example is a little simplified, and we’re using the model’s file name as our version to identify each model.

You’ll also need to consider which app version is supported. In our case, a new ContextSDK version may implement additional signals that are used as part of our model. Therefore we provide the SDK version as part of our initial polling request, and our server responds with the latest model version that’s supported.
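On the server side, picking the right model for a given SDK version could look like the sketch below. The catalog layout, the `min_sdk` and `released` fields and the function names are assumptions made for this example, not the format our backend uses.

```python
from typing import List, Optional, Tuple

# Hypothetical catalog: each model states the minimum SDK version it needs
MODELS = [
    {"file": "model-a.mlmodel", "min_sdk": "3.0.0", "released": 1},
    {"file": "model-b.mlmodel", "min_sdk": "3.2.0", "released": 2},
    {"file": "model-c.mlmodel", "min_sdk": "4.0.0", "released": 3},
]

def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '3.2.0' into (3, 2, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.split("."))

def latest_supported_model(models: List[dict], sdk_version: str) -> Optional[dict]:
    """Return the most recently released model the given SDK version can run."""
    client = parse_version(sdk_version)
    supported = [m for m in models if parse_version(m["min_sdk"]) <= client]
    return max(supported, key=lambda m: m["released"], default=None)

print(latest_supported_model(MODELS, "3.2.0")["file"])   # model-b.mlmodel
print(latest_supported_model(MODELS, "3.10.1")["file"])  # model-b.mlmodel
print(latest_supported_model(MODELS, "2.9.0"))           # None
```

Comparing parsed tuples instead of raw strings matters here: as plain strings, "3.10.1" would sort before "3.2.0".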

First, we’re doing some basic scaffolding, creating a new ModelDownloadManager class:

import Foundation

import CoreML

class ModelDownloadManager {

private let fileManager: FileManager

private let modelsFolder: URL

private let modelUpdateCheckURL = "https://krausefx.github.io/CoreMLDemo/latest_model_details.json"

init(fileManager: FileManager = .default) {

self.fileManager = fileManager

if let folder = fileManager.urls(for: .applicationSupportDirectory, in: .userDomainMask).first?.appendingPathComponent("context_sdk_models") {

self.modelsFolder = folder

try? fileManager.createDirectory(at: folder, withIntermediateDirectories: true)

} else {

fatalError("Unable to find or create models folder.") // Handle this more gracefully

}

}

}

And now to the actual code: Downloading the model details to check if a new model is available:

internal func checkForModelUpdates() async throws {

guard let url = URL(string: modelUpdateCheckURL) else {

throw URLError(.badURL)

}

let (data, _) = try await URLSession.shared.data(from: url)

guard let jsonObject = try JSONSerialization.jsonObject(with: data) as? [String: Any],

let modelDownloadURLString = jsonObject["url"] as? String,

let modelDownloadURL = URL(string: modelDownloadURLString) else {

throw URLError(.cannotParseResponse)

}

try await downloadIfNeeded(from: modelDownloadURL)

}

If a new CoreML model is available, your iOS app now needs to download the latest version. You can use any method of downloading the static file from your server:

// It's important to immediately move the downloaded CoreML file into a permanent location

private func downloadCoreMLFile(from url: URL) async throws -> URL {

let (tempLocalURL, _) = try await URLSession.shared.download(for: URLRequest(url: url))

let destinationURL = modelsFolder.appendingPathComponent(url.lastPathComponent) // Keep the server-side file name (our version identifier) instead of the random temporary file name

try fileManager.moveItem(at: tempLocalURL, to: destinationURL)

return destinationURL

}

Considering Costs

Depending on your user base, infrastructure costs will be a big factor in how you implement the on-the-fly update mechanism.

For example, an app with 5 million active users and a CoreML file size of 1 megabyte would generate a total data transfer of 5 terabytes per rollout. If you were to serve the file directly from a simple AWS S3 bucket at $0.09 per GB of egress, this would cost about $450 for each model rollout (not including the free tier).
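The arithmetic behind that estimate, written out as a small Python snippet. It only uses the numbers quoted above and assumes decimal units (1 GB = 1000 MB) and that every active user downloads the model exactly once per rollout.

```python
active_users = 5_000_000
model_size_mb = 1
egress_usd_per_gb = 0.09   # S3 egress price quoted above

total_gb = active_users * model_size_mb / 1000  # 1 GB = 1000 MB
total_tb = total_gb / 1000
cost_usd = total_gb * egress_usd_per_gb

print(f"{total_tb:.0f} TB transferred, ${cost_usd:.2f} per rollout")
# 5 TB transferred, $450.00 per rollout
```

Plug in your own user count and model size to get a feeling for what each rollout would cost you.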

As part of this series, we will talk about constantly rolling out new, improved challenger models, running various models in parallel, and iterating quickly. With that many rollouts, paying this amount each time isn’t feasible.

One easy fix for us was to leverage Cloudflare R2, which is faster and significantly cheaper. The same numbers as above cost us less than $2, and would be completely free if we include the free tier.

After successfully downloading the CoreML file, you need to compile it on-device. While this sounds scary, Apple made it a seamless, easy and safe experience. Compiling the CoreML file on-device is a requirement, and ensures that the file is optimized for the specific hardware it runs on.

private func compileCoreMLFile(at localFilePath: URL) throws -> URL {

let compiledModelURL = try MLModel.compileModel(at: localFilePath)

let destinationCompiledURL = modelsFolder.appendingPathComponent(compiledModelURL.lastPathComponent)

try fileManager.moveItem(at: compiledModelURL, to: destinationCompiledURL)

try fileManager.removeItem(at: localFilePath)

return destinationCompiledURL

}

You are responsible for the file management, including storing the compiled model in a permanent location. In general, file management on iOS can be a little tedious, as you need to cover all the various edge cases.

You can also find the official Apple Docs on Downloading and Compiling a Model on the User’s Device.

We don’t yet have any logic to decide whether we want to download the new model. In this example, we’ll do something very basic: each model’s file name is a unique UUID. All we need to do is to check if a model under the exact file name is available locally:

private func downloadIfNeeded(from url: URL) async throws {

// Compare names without the extension: the download is a .mlmodel file, while the compiled model stored locally is a .mlmodelc bundle

let modelName = url.deletingPathExtension().lastPathComponent

// Check if the model already exists (for this sample project we use the unique file name as identifier)

if let localFiles = try? fileManager.contentsOfDirectory(at: modelsFolder, includingPropertiesForKeys: nil),

localFiles.contains(where: { $0.deletingPathExtension().lastPathComponent == modelName }) {

// File exists, you could add a version check here if versions are part of the file name or metadata

print("Model already exists locally. No need to download.")

} else {

let downloadedURL = try await downloadCoreMLFile(from: url) // File does not exist, download it

let compiledURL = try compileCoreMLFile(at: downloadedURL)

try deleteAllOutdatedModels(keeping: compiledURL.lastPathComponent)

print("Model downloaded, compiled, and old models cleaned up successfully.")

}

}

Of course we want to be a good citizen, and delete all older models from the local storage. For this sample project, this is also required, as we’re using UUIDs for versioning, meaning the iOS client doesn’t know which version is newer. For sophisticated systems it’s quite common to not have this transparency on the client, as the backend may be running multiple experiments and challenger models in parallel across all clients.

private func deleteAllOutdatedModels(keeping recentModelFileName: String) throws {

let urlContent = try fileManager.contentsOfDirectory(at: modelsFolder, includingPropertiesForKeys: nil, options: .skipsHiddenFiles)

for fileURL in urlContent where fileURL.lastPathComponent != recentModelFileName {

try fileManager.removeItem(at: fileURL)

}

}

Now all that’s left is to automatically switch between the CoreML file that we bundled with our app and the file we downloaded from our servers, always preferring the downloaded one.

In our ModelDownloadManager, we want an additional function that exposes the model we want to use. This can either be the bundled CoreML model, or the CoreML model downloaded most recently over-the-air:

internal func latestModel() -> MyFirstCustomModel? {

let fileManagerContents = (try? fileManager.contentsOfDirectory(at: modelsFolder, includingPropertiesForKeys: nil)) ?? []

if let latestFileURL = fileManagerContents.sorted(by: { $0.lastPathComponent > $1.lastPathComponent }).first,

let otaModel = try? MyFirstCustomModel(contentsOf: latestFileURL) {

return otaModel

} else if let bundledModel = try? MyFirstCustomModel(configuration: MLModelConfiguration()) {

return bundledModel // Fallback to the bundled model if no downloaded model exists

}

return nil

}

There are almost no changes needed to our code base from part 1.

Instead of using the MyFirstCustomModel initializer directly, we now need to use the newly created .latestModel() method.

let batteryLevel = UIDevice.current.batteryLevel

let batteryCharging = UIDevice.current.batteryState == .charging || UIDevice.current.batteryState == .full

do {

let modelInput = MyFirstCustomModelInput(input: [

Double(batteryLevel),

Double(batteryCharging ? 1.0 : 0.0)

])

if let currentModel = modelDownloadManager.latestModel(),

let modelMetadata = currentModel.model.modelDescription.metadata[.description] {

let result = try currentModel.prediction(input: modelInput)

let classProbabilities = result.featureValue(for: "classProbability")?.dictionaryValue

let upsellProbability = classProbabilities?["Purchased"]?.doubleValue ?? -1

showAlertDialog(message:("Chances of Upsell: \(upsellProbability), executed through model \(modelMetadata)"))

} else {

showAlertDialog(message:("Could not run CoreML model"))

}

} catch {

showAlertDialog(message:("Error running CoreML file: \(error)"))

}

The only code that’s left is triggering the update check. When you do that will highly depend on your app, and on how urgently you want to update your models.

Task {

do {

try await modelDownloadManager.checkForModelUpdates()

showAlertDialog(message:("Model update completed successfully."))

} catch {

// Handle possible errors here

showAlertDialog(message:("Failed to update model: \(error.localizedDescription)"))

}

}

As part of this series, we’ve built out a demo app that shows all of this end-to-end in action. You can find it on GitHub: https://github.com/KrauseFx/CoreMLDemo.

Today we’ve covered how you can roll out new machine learning models directly to your users’ iPhones, running them directly on their ML-optimized hardware. Using this approach you can make decisions on what type of content or prompts you show based on the user’s context, powered by on-device machine learning execution. Updating CoreML files quickly and on-the-fly, without going through the full App Store release cycle, is critical to react to changing user behavior, to introduce new offers in your app, and to constantly improve your app, be it by increasing your conversion rates, reducing annoyances and churn, or optimizing other parts of your app.

This is just the beginning: next up, we will talk about how to manage the rollout of new ML models.

We’re excited to share more of what we’ve learned while building ContextSDK to power hundreds of machine learning models distributed across more than 25 million devices.

Update: Head over to the third post of the ML series

2024-05-06 08:00:00

Note: This is a cross-post of the original publication on contextsdk.com.

Machine Learning (ML) in the context of mobile apps is a wide topic, with different types of implementations and requirements. At the highest level, you can distinguish between:

As part of this blog series, we will be talking about variant 2: We start out by training a new ML model on your server infrastructure based on real-life data, and then distributing and using that model within your app. Thanks to Apple’s CoreML technology, this process has become extremely efficient & streamlined.

We wrote this guide for all developers, even if you don’t have any prior data science or backend experience.

To train your first machine learning model, you’ll need some data you want to train the model on. In our example, we want to optimize when to show certain prompts or messages in iOS apps.

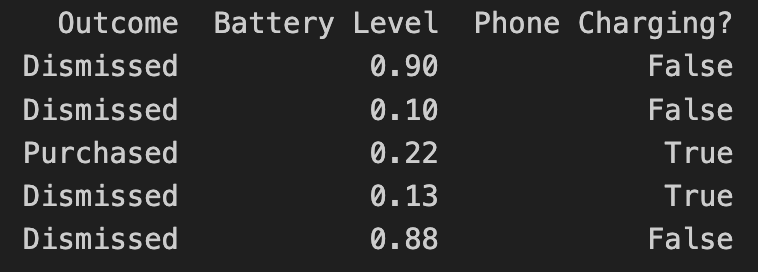

Let’s assume we have your data in the following format:

In the above example, the “label” of the dataset is the outcome. In machine learning, a label for training data refers to the output or answer for a specific instance in a dataset. The label is used to train a supervised model, guiding it to understand how to classify new, unseen examples or predict outcomes.

How you get the data to train your model is up to you. In our case, we’d collect non-PII data just like the above example, to train models based on real-life user behavior. For that we’ve built out our own backend infrastructure, which we’ve already covered on our blog.

There are different technologies available to train your ML model. In our case, we chose Python, together with pandas and sklearn.

Load the recorded data into a pandas DataFrame:

import pandas as pd

rows = [

['Dismissed', 0.90, False],

['Dismissed', 0.10, False],

['Purchased', 0.24, True],

['Dismissed', 0.13, True]

]

data = pd.DataFrame(rows, columns=['Outcome', 'Battery Level', 'Phone Charging?'])

print(data)

Instead of hard-coded data like above, you’d access your database with the real-world data you’ve already collected.

To train a machine learning model, you need to split your data into a training set and a test set. We won’t go into detail about why that’s needed, since there are many great resources out there that explain the reasoning, like this excellent CGP Video.

from sklearn.model_selection import train_test_split

X = data.drop("Outcome", axis=1)

Y = data["Outcome"]

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, shuffle=True)

The code above holds out 20% (⅕) of your data as the test set, and separates X from Y, which means separating the data (all remaining columns) from the label (“Outcome”).
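To make the split more tangible, here is a simplified stand-in written in plain Python, without pandas or sklearn. It is not how train_test_split is implemented internally; the `split_rows` helper and the fixed `seed` are assumptions for this illustration.

```python
import math
import random

rows = [
    ["Dismissed", 0.90, False],
    ["Dismissed", 0.10, False],
    ["Purchased", 0.24, True],
    ["Dismissed", 0.13, True],
]

def split_rows(rows, test_size=0.2, seed=0):
    """Shuffle the rows, then hold out a share of them as the test set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = math.ceil(len(shuffled) * test_size)  # round up so the test set is never empty
    return shuffled[n_test:], shuffled[:n_test]

train, test = split_rows(rows)

# Separate the label (first column) from the features (remaining columns)
X_train = [row[1:] for row in train]
Y_train = [row[0] for row in train]

print(len(train), len(test))  # 3 1
```

With only four rows, a single row ends up in the test set, which is why you want far more real-world data before trusting any evaluation.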

As part of this step, you’ll need to decide on what classifier you want to use. In our example, we will go with a basic RandomForest classifier:

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report

classifier = RandomForestClassifier()

classifier.fit(X_train, Y_train)

Y_pred = classifier.predict(X_test)

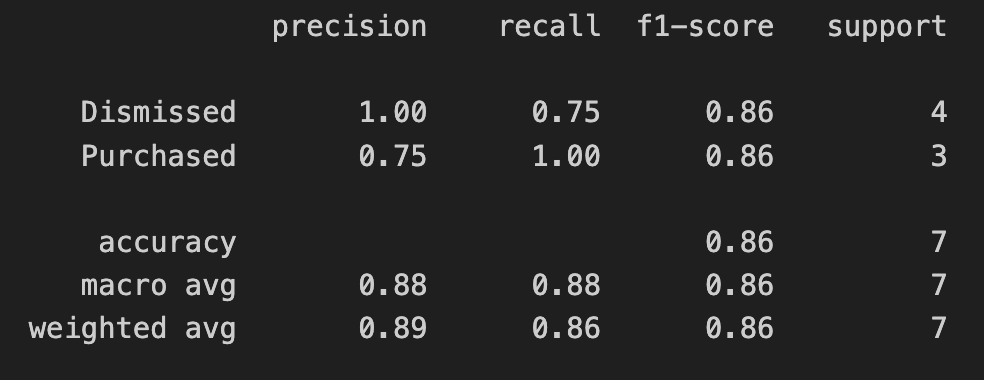

print(classification_report(Y_test, Y_pred, zero_division=1))

The above training code will output a classification report. Put simply, it tells you how accurate the trained model is.

Keep in mind that the screenshot above is based only on the small sample data set used in this blog series. If you’re interested in how to interpret and evaluate the classification report, check out this guide.
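Two of the numbers you will find in a classification report are precision and recall. The sketch below computes both by hand for a single class, so you can see what they mean. The labels are made up for illustration and are not results from a real model, and `precision_recall` is our own helper, not part of sklearn.

```python
def precision_recall(y_true, y_pred, label):
    """Compute precision and recall for one class from true and predicted labels."""
    pairs = list(zip(y_true, y_pred))
    true_pos = sum(1 for t, p in pairs if t == label and p == label)
    false_pos = sum(1 for t, p in pairs if t != label and p == label)
    false_neg = sum(1 for t, p in pairs if t == label and p != label)
    predicted = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall

# Made-up labels for illustration only
y_true = ["Purchased", "Dismissed", "Purchased", "Dismissed", "Dismissed"]
y_pred = ["Purchased", "Purchased", "Dismissed", "Dismissed", "Dismissed"]

precision, recall = precision_recall(y_true, y_pred, "Purchased")
print(precision, recall)  # 0.5 0.5
```

Precision answers "when the model predicts a purchase, how often is it right?", while recall answers "how many of the actual purchases did the model catch?".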

Apple’s official CoreMLTools make it extremely easy to export the classifier (in this case, our Random Forest) into a .mlmodel (CoreML) file, which we can run on Apple’s native ML chips. CoreMLTools supports a variety of classifiers, but not all of them, so be sure to verify that yours is supported first.

import coremltools

coreml_model = coremltools.converters.sklearn.convert(classifier, input_features="input")

coreml_model.short_description = "My first model"

coreml_model.save("MyFirstCustomModel.mlmodel")

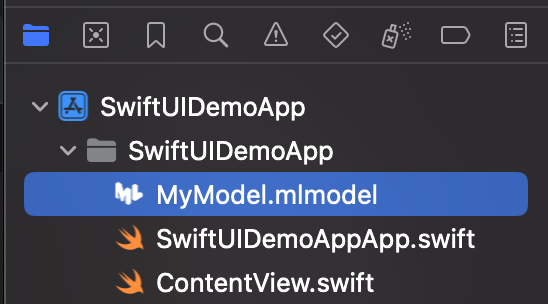

For now, we will simply drag & drop the CoreML file into our Xcode project. In a future blog post we will go into detail on how to deploy new ML models over-the-air.

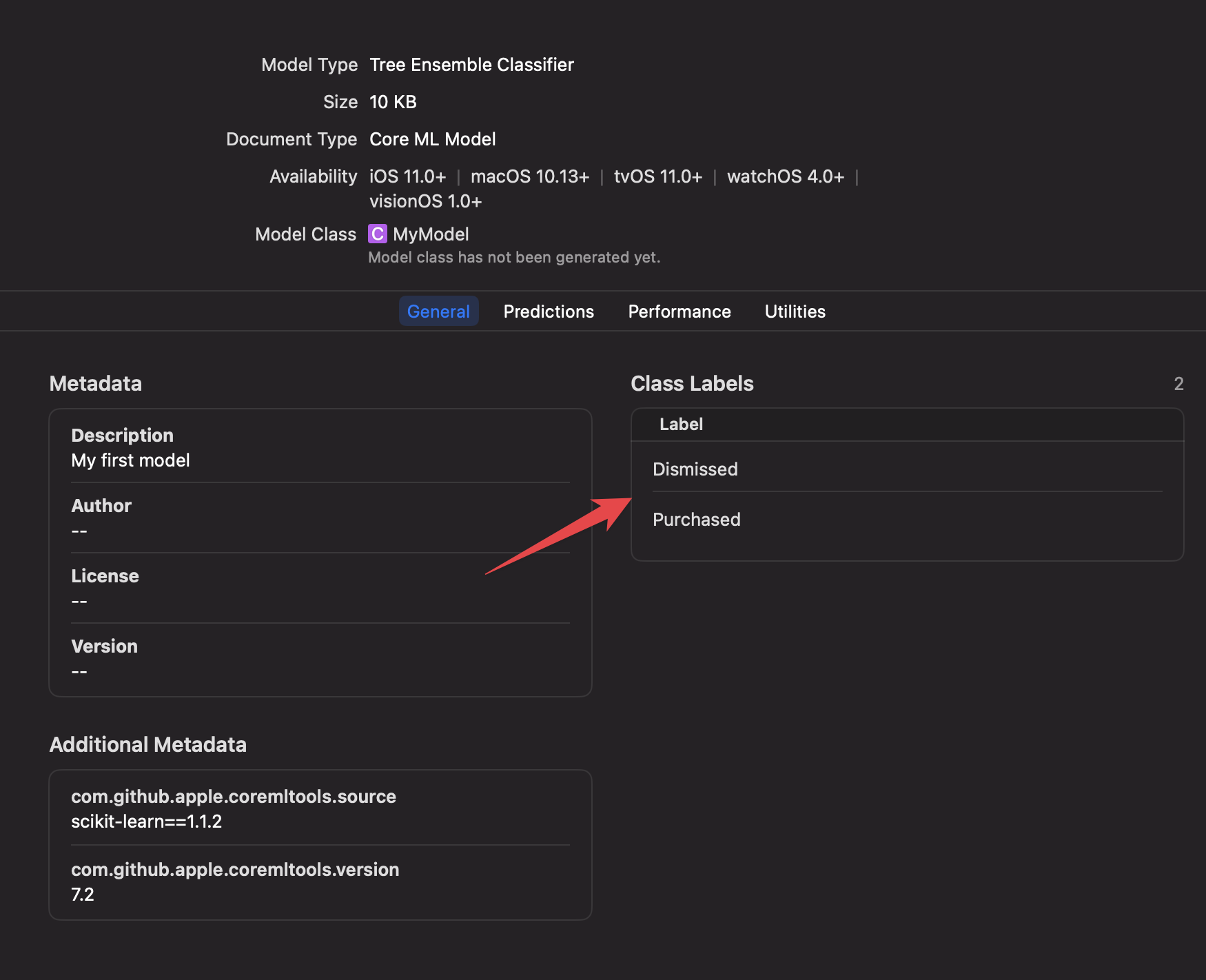

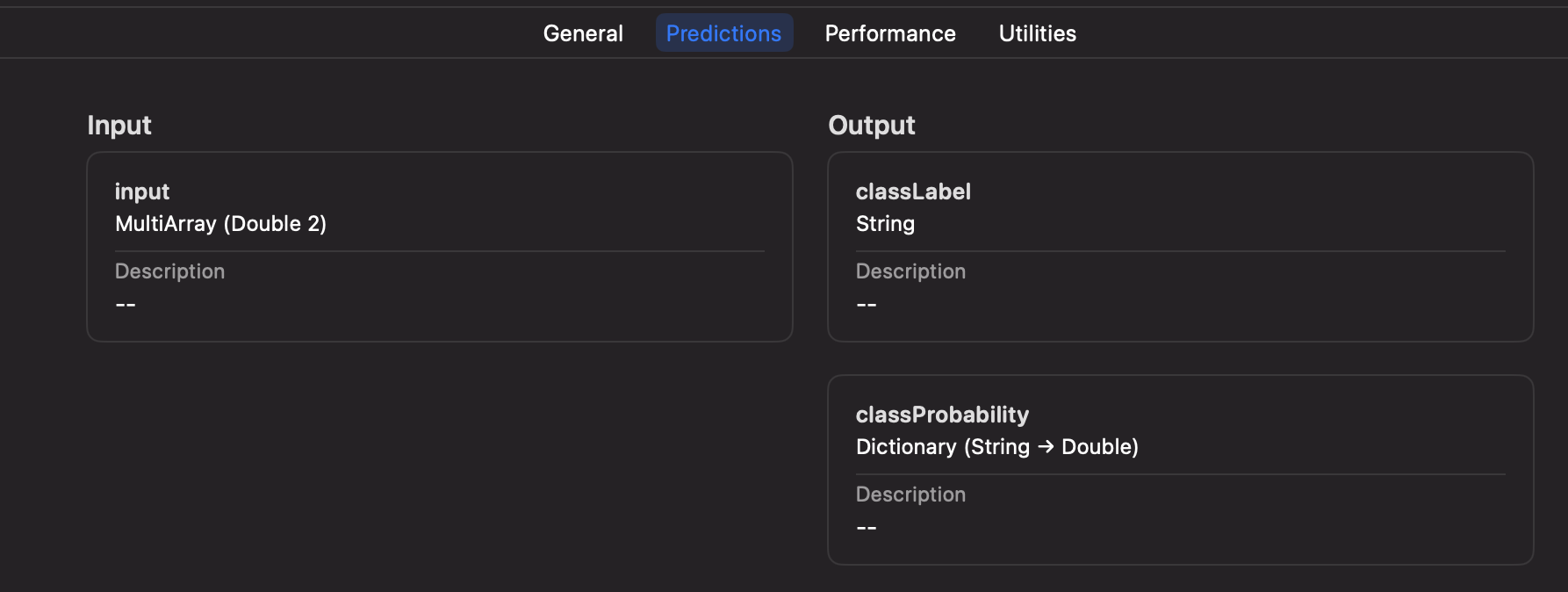

Once added to your project, you can inspect the inputs, labels, and other model information right within Xcode.

Xcode will automatically generate a new Swift class based on your mlmodel file, including the details about the inputs, and outputs.

let batteryLevel = UIDevice.current.batteryLevel

let batteryCharging = UIDevice.current.batteryState == .charging || UIDevice.current.batteryState == .full

do {

let modelInput = MyFirstCustomModelInput(input: [

Double(batteryLevel),

Double(batteryCharging ? 1.0 : 0.0)

])

let result = try MyFirstCustomModel(configuration: MLModelConfiguration()).prediction(input: modelInput)

let classProbabilities = result.featureValue(for: "classProbability")?.dictionaryValue

let upsellProbability = classProbabilities?["Purchased"]?.doubleValue ?? -1

print("Chances of Upsell: \(upsellProbability)")

} catch {

print("Error running CoreML file: \(error)")

}

In the above code you can see that we pass in the battery level and the charging status as an array of inputs, identified only by their index. This has the downside of not being mapped by an exact string, but the advantage of faster performance if you have hundreds of inputs.

Alternatively, during model training and export, you can switch to using a String-based input for your CoreML file if preferred.
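One way to keep index-based inputs safe is to define the column order in a single place and build the array from named values. Below is a minimal sketch of that idea in Python. `FEATURE_ORDER` and `to_input_vector` are hypothetical names, and the order is assumed to match the training columns from the DataFrame above after dropping "Outcome".

```python
# Column order used during training (after dropping the "Outcome" label)
FEATURE_ORDER = ["Battery Level", "Phone Charging?"]

def to_input_vector(signals: dict) -> list:
    """Convert named signals into the index-based array the model expects."""
    missing = [name for name in FEATURE_ORDER if name not in signals]
    if missing:
        raise KeyError(f"Missing signals: {missing}")
    return [float(signals[name]) for name in FEATURE_ORDER]

vector = to_input_vector({"Phone Charging?": True, "Battery Level": 0.24})
print(vector)  # [0.24, 1.0]
```

The same pattern works on the Swift side: the caller passes named values, and one function is responsible for putting them into the right order.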

We will talk more about how to best set up your iOS app to get the best of both worlds, while also supporting over-the-air updates, dynamic inputs based on new models, and how to properly handle errors, process the response, manage complex AB tests, safe rollouts, and more.

In this guide we went from collecting the data to feed into your Machine Learning model, to training the model, to running it on-device to make decisions within your app. As you can see, Python and its libraries, including Apple’s CoreMLTools, make it very easy to get started with your first ML model. Thanks to native support of CoreML files in Xcode, and executing them on-device, we have all the advantages of the Apple development platform, like inspecting model details within Xcode, strong types and safe error handling.

In your organization, you’ll likely have a Data Scientist who will be in charge of training, fine-tuning and providing the model. The above guide shows a simple example - with ContextSDK we take more than 180 different signals into account, of different types, patterns, and sources, allowing us to achieve the best results, while keeping the resulting models small and efficient.

Within the next few weeks, we will be publishing a second post on that topic, showcasing how you can deploy new CoreML files to Millions of iOS devices over-the-air within seconds, in a safe & cost-efficient manner, managing complicated AB tests, dynamic input parameters, and more.

Update: Head over to the second post of the ML series

2024-02-06 08:00:00

Note: This is a cross-post of the original publication on contextsdk.com.

This is a follow-up post to our original publication: How to compile and distribute your iOS SDK as a pre-compiled xcframework.

In this technical article we go into the depths of best practices around

Anyone who knows me knows that I love automating iOS app-development processes. Having built fastlane, I learned just how much time you can save and, most importantly, how many human errors you can prevent. With ContextSDK, we fully automated the release process.

For example, you need to properly update the version number across many points: your 2 podspec files (see our last blog post), your URLs, adding git tags, updating the docs, etc.
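As an illustration of one such step, the sketch below updates the version line of a podspec. It is a simplified example and not our actual release tooling: the `bump_podspec_version` helper is hypothetical and assumes the podspec contains exactly one `s.version = '...'` line.

```python
import re

def bump_podspec_version(podspec_text: str, new_version: str) -> str:
    """Replace the value of the `s.version = '...'` line in a podspec."""
    pattern = r"(s\.version\s*=\s*')[^']+(')"
    updated, count = re.subn(pattern, rf"\g<1>{new_version}\g<2>", podspec_text)
    if count != 1:
        raise ValueError(f"Expected exactly one s.version line, found {count}")
    return updated

podspec = "Pod::Spec.new do |s|\n  s.name = 'ContextSDK'\n  s.version = '3.2.0'\nend\n"
print(bump_podspec_version(podspec, "3.3.0"))
```

Failing loudly when the line isn't found exactly once is the important part: a release script that silently changes nothing is how version mismatches sneak in.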

With ContextSDK, we train and deploy custom machine learning models for every one of our customers. The easiest way most companies would solve this is by sending a network request the first time the app is launched, to download the latest custom model for that particular app. However, we believe in fast & robust on-device Machine Learning Model execution, that doesn’t rely on an active internet connection. In particular, many major use-cases of ContextSDK rely on reacting to the user’s context within 2 seconds after the app is first launched, to immediately optimize the onboarding flow, permission prompts and other aspects of your app.

We needed a way to distribute each customer’s custom model with the ContextSDK binary, without including any models from other customers. To do this, we fully automated the deployment of custom SDK binaries, each including the exact custom model, and features the customer needs.

Our customer management system provides the list of custom SDKs to build, tied together with the details of the custom models:

[

{

"bundle_identifiers": ["com.customer.app"],

"app_id": "c2d67cdb-e117-4c3e-acca-2ae7f1a42210",

"customModels": [

{

"flowId": 8362,

"flowName": "onboarding_upsell",

"modelVersion": 73

}, …

]

}, …

]

Our deployment scripts will then iterate over each app, and include all custom models for the given app. You can inject custom classes and custom code before each build through multiple approaches. One approach we took to include custom models dynamically depending on the app, is to update our internal podspec to dynamically add files:

# ...

source_files = Dir['Classes/**/*.swift']

if ENV["CUSTOM_MODEL_APP_ID"]

source_files += Dir["Classes/Models/Custom/#{ENV["CUSTOM_MODEL_APP_ID"]}/*.mlmodel"]

end

s.source_files = source_files

# ...

In the above example you can see how we leverage a simple environment variable to tell CocoaPods which custom model files to include.
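To show how the manifest and the environment variable fit together, here is a sketch of the loop in Python. Our actual deployment scripts differ. The `build_jobs` function and the job dictionary are hypothetical, while the manifest fields and the CUSTOM_MODEL_APP_ID variable are taken from the examples above.

```python
import json
import os

manifest_json = """
[
  {
    "bundle_identifiers": ["com.customer.app"],
    "app_id": "c2d67cdb-e117-4c3e-acca-2ae7f1a42210",
    "customModels": [
      {"flowId": 8362, "flowName": "onboarding_upsell", "modelVersion": 73}
    ]
  }
]
"""

def build_jobs(manifest: list) -> list:
    """Create one build job per app, each with its own environment."""
    jobs = []
    for app in manifest:
        env = dict(os.environ)                      # copy, so jobs don't affect each other
        env["CUSTOM_MODEL_APP_ID"] = app["app_id"]  # read by the podspec shown above
        models_dir = f"Classes/Models/Custom/{app['app_id']}"
        jobs.append({
            "env": env,
            "models_dir": models_dir,
            "flows": [model["flowName"] for model in app["customModels"]],
        })
    return jobs

jobs = build_jobs(json.loads(manifest_json))
for job in jobs:
    # A real pipeline would now run the build, e.g. via subprocess.run(..., env=job["env"])
    print(job["models_dir"], job["flows"])
```

Passing the app ID through a per-job environment keeps the builds independent, so one customer's models can never leak into another customer's binary.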

Thanks to iOS projects being compiled, we can guarantee integrity of the codebase itself. Additionally we have hundreds of automated tests (and manual tests) to guarantee alignment of the custom models, matching SDK versions, model versions and each customer’s integration in a separate, auto-generated Xcode project.

Side-note: ContextSDK also supports over-the-air updates of new CoreML files, to update the ones we bundle the app with. This allows us to continuously improve our machine learning models over-time, as we calibrate our context signals to each individual app. Under the hood we deploy new challenger-models to a subset of users, for which we compare the performance, and gradually roll them out more if it matches expectations.
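A common way to serve a challenger model to a stable subset of users is to hash a device identifier into a bucket. The sketch below shows that general technique and is not a description of how ContextSDK does it. The function names, the "challenger-v2" experiment name and the use of SHA-256 are assumptions for this example.

```python
import hashlib

def rollout_bucket(device_id: str, experiment: str) -> int:
    """Map a device to a stable bucket from 0 to 99 for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{device_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100

def model_for_device(device_id: str, rollout_percent: int) -> str:
    """Serve the challenger to `rollout_percent` percent of devices, the champion to the rest."""
    in_rollout = rollout_bucket(device_id, "challenger-v2") < rollout_percent
    return "challenger" if in_rollout else "champion"

devices = [f"device-{i}" for i in range(1000)]
share = sum(model_for_device(d, 10) == "challenger" for d in devices) / len(devices)
print(f"{share:.1%} of devices receive the challenger")  # roughly 10%
```

Because the bucket only depends on the device and the experiment name, raising the percentage from 10 to 25 keeps every device that already had the challenger, and simply adds more.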

Building and distributing a custom binary for each customer is easier than you may expect: once your SDK deployment is automated, taking the extra step to build custom binaries adds little complexity.

Having this architecture allows us to iterate and move quickly, while having a very robust development and deployment pipeline. Additionally, once we segment our paid features for ContextSDK more, we can automatically only include the subset of functionality each customer wants enabled. For example, we recently launched AppTrackingTransparency.ai, where a customer may only want to use the ATT-related features of ContextSDK, instead of using it to optimise their in-app conversions.

If you have any questions, feel free to reach out to us on Twitter or LinkedIn, or subscribe to our newsletter on contextsdk.com.


2024-02-01 08:00:00

Note: This is a cross-post of the original publication on contextsdk.com.

In this technical article we go into the depths and best practices around

At ContextSDK we have our whole iOS Swift codebase in a single local CocoaPod. This allows us to iterate quickly as a team, and have our SDK configuration defined in clean code in version control, instead of some plist Xcode settings.

ContextSDK.podspec

Pod::Spec.new do |s|

s.name = 'ContextSDK'

s.version = '3.2.0'

s.summary = 'Introducing the most intelligent way to know when and how to monetize your user'

s.swift_version = '5.7'

s.homepage = 'https://contextsdk.com'

s.author = { 'KrauseFx' => '[email protected]' }

s.ios.deployment_target = '14.0'

# via https://github.com/CocoaPods/cocoapods-packager/issues/216

s.source = { :git => "file://#{File.expand_path("..", __FILE__)}" }

s.pod_target_xcconfig = {

"SWIFT_SERIALIZE_DEBUGGING_OPTIONS" => "NO",

"OTHER_SWIFT_FLAGS" => "-Xfrontend -no-serialize-debugging-options",

"BUILD_LIBRARY_FOR_DISTRIBUTION" => "YES", # for swift Library Evolution

"SWIFT_REFLECTION_METADATA_LEVEL" => "none", # to include less metadata in the resulting binary

}

s.frameworks = 'AVFoundation'

s.public_header_files = 'Classes/**/*.h'

s.source_files = Dir['Classes/**/*.{swift}']

s.resource_bundles = { 'ContextSDK' => ['PrivacyInfo.xcprivacy'] }

s.test_spec 'Tests' do |test_spec|

test_spec.source_files = [

'Tests/*.{swift}',

'Tests/Resources/*.{plist}'

]

test_spec.dependency 'Quick', '7.2.0'

test_spec.dependency 'Nimble', '12.2.0'

end

end

During development, we want to easily edit our codebase, run the Demo app, and debug using Xcode. To do that, our Demo app has a simple Podfile referencing our local CocoaPod:

target 'ContextSDKDemo' do

use_frameworks!

pod 'ContextSDK', :path => '../ContextSDK', :testspecs => ['Tests']

end

Running pod install will then nicely set up your Xcode workspace, ready to run the local ContextSDK codebase:

Editing a ContextSDK source file (e.g. Context.swift) will then immediately be accessible and used by Xcode during the next compile. This makes development of SDKs extremely easy & efficient.

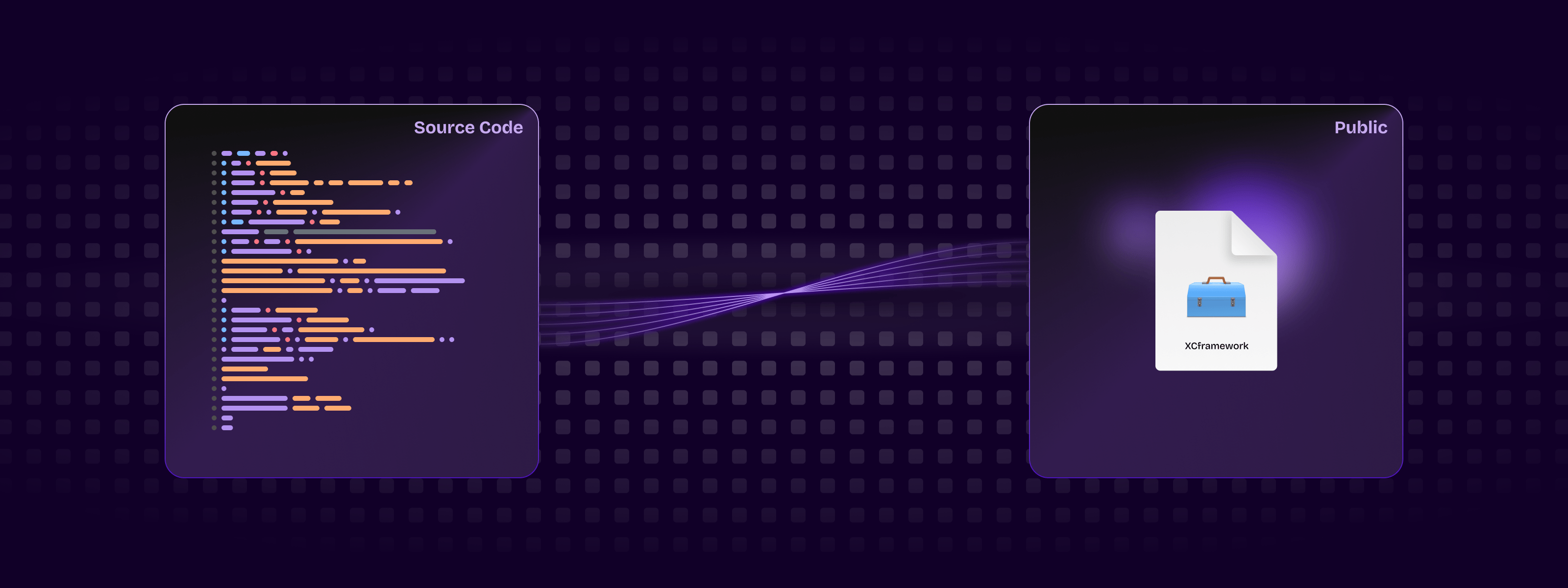

The requirement for commercial SDKs is often that their source code isn’t accessible to their users. To do that, you need to pre-compile your SDK into an .xcframework static binary, which can then be used by your customers.

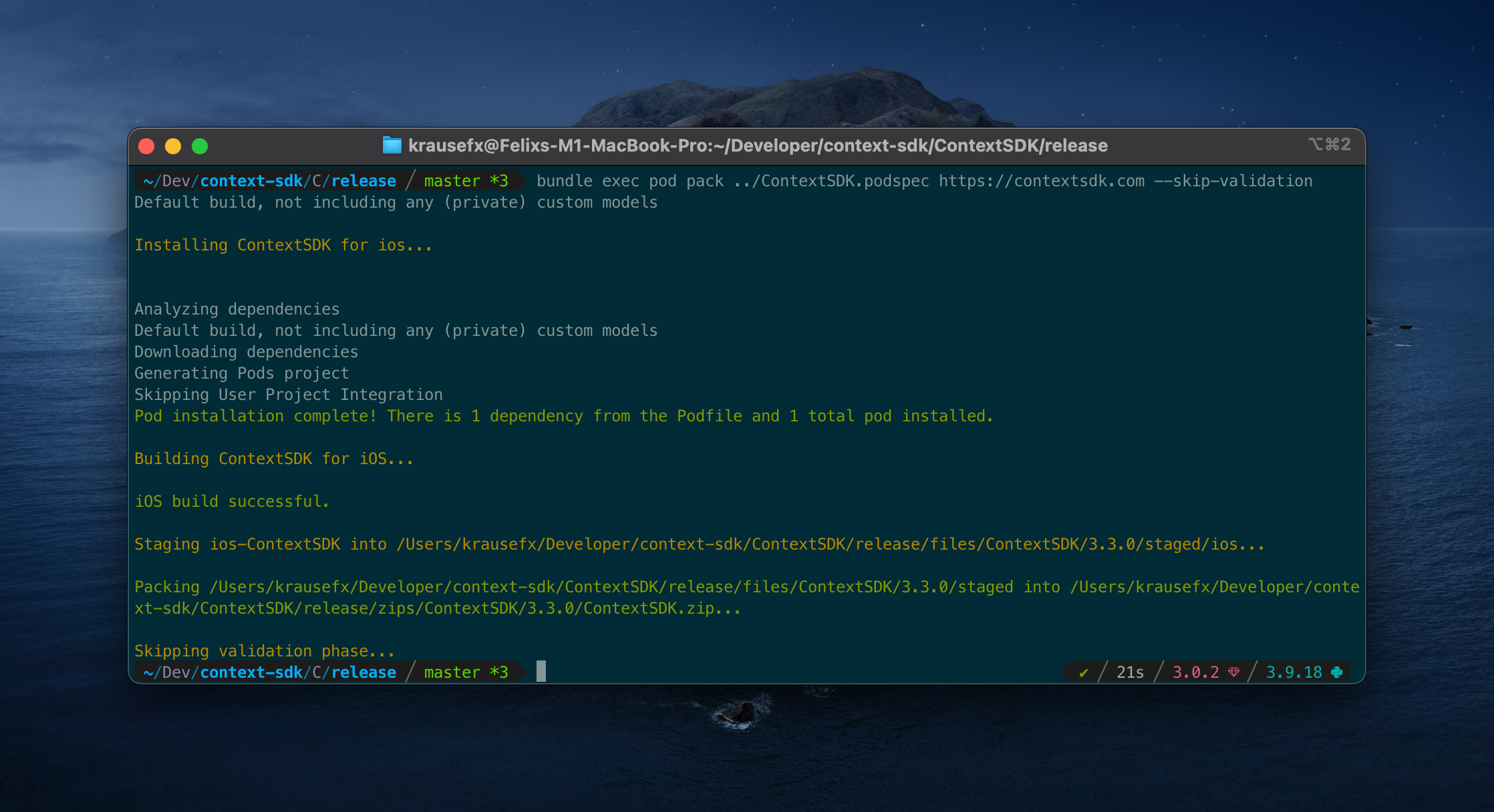

Thanks to the excellent cocoapods-pack project, started by Dimitris at Square, it’s easy to compile your SDK for distribution to your customers. After installing the gem, you can use the following command:

bundle exec pod pack ../ContextSDK.podspec https://contextsdk.com --skip-validation

Now open up the folder ./zips/ContextSDK/3.2.0/ and you will see a freshly prepared ContextSDK.zip. You can’t distribute that zip file right away, as it contains an additional subfolder called ios, which broke the distribution through CocoaPods when we tested it.

As part of our deployment pipeline, we run the following Ruby commands to remove the ios folder, and re-zip the file:

puts "Preparing ContextSDK framework for release..."

sh("rm -rf zips")

sh("bundle exec pod pack ../ContextSDK.podspec https://contextsdk.com --skip-validation") || exit(1)

sh("rm -rf files")

# Important: we need to unzip the zip file, and then zip it again without the "ios" top-level folder,

# which would break CocoaPods support, as CocoaPods only looks inside the root folder, not inside "ios"

zip_file_path = "zips/ContextSDK/#{@version_number}/ContextSDK.zip"

sh("unzip #{zip_file_path} -d zips/current")

sh("cd zips/current/ios && zip -r ../ContextSDK.zip ./*") # Now zip it again, but without the "ios" folder

return "zips/current/ContextSDK.zip"

ContextSDK.zip is now ready for distribution. If you unzip that file, you’ll see the ContextSDK.xcframework contained directly, which is what your users will add to their Xcode project, and will be picked up by CocoaPods.
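If you’d rather not shell out to unzip and zip, the same restructuring can be sketched with Python’s standard library. This is an alternative illustration, not the script we run. The `strip_top_level_folder` helper is hypothetical, and it copies file contents only, without preserving file permissions or symlinks.

```python
import io
import zipfile

def strip_top_level_folder(source_zip: bytes, folder: str = "ios") -> bytes:
    """Return a new zip whose entries no longer sit inside `folder/`."""
    prefix = folder.rstrip("/") + "/"
    output = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(source_zip)) as src, \
         zipfile.ZipFile(output, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            if not info.filename.startswith(prefix) or info.filename == prefix:
                continue  # skip the folder entry itself and anything outside it
            dst.writestr(info.filename[len(prefix):], src.read(info))
    return output.getvalue()

# Build a tiny in-memory archive that mimics the layout described above
original = io.BytesIO()
with zipfile.ZipFile(original, "w") as archive:
    archive.writestr("ios/ContextSDK.xcframework/Info.plist", "<plist/>")

repacked = strip_top_level_folder(original.getvalue())
with zipfile.ZipFile(io.BytesIO(repacked)) as archive:
    print(archive.namelist())  # ['ContextSDK.xcframework/Info.plist']
```

Whichever approach you use, verify the result by unzipping it once and checking that ContextSDK.xcframework sits at the root.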

There are no extra steps needed: the ZIP file you created above is everything that’s needed. Now you can provide the following instructions to your users:

Drag & drop the ContextSDK.xcframework folder into the Xcode file list

In your project settings, under Frameworks, Libraries, and Embedded Content, add ContextSDK.xcframework, and select Embed & Sign

Distributing your pre-compiled .xcframework file through CocoaPods requires some extra steps.

You need a second ContextSDK.podspec file, that will be available to the public. That podspec will only point to your pre-compiled binary, instead of your source code, therefore it’s safe to distribute to the public.

Pod::Spec.new do |s|

s.name = 'ContextSDK'

s.version = '3.2.0'

s.homepage = 'https://contextsdk.com'

s.documentation_url = 'https://docs.contextsdk.com'

s.license = { :type => 'Commercial' }

s.author = { 'ContextSDK' => '[email protected]' }

s.summary = 'Introducing the most intelligent way to know when and how to monetize your user'

s.platform = :ios, '14.0'

s.source = { :http => '[URL to your ZIP file]' }

s.xcconfig = { 'FRAMEWORK_SEARCH_PATHS' => '"$(PODS_ROOT)/ContextSDK/**"' }

s.frameworks = 'AVFoundation'

s.requires_arc = true

s.swift_version = '5.7'

s.module_name = 'ContextSDK'

s.preserve_paths = 'ContextSDK.xcframework'

s.vendored_frameworks = 'ContextSDK.xcframework'

end

Make both your podspec, and your ZIP file available to the public. Once complete, you can provide the following instructions to your users:

Add the following dependency to your Podfile:

pod 'ContextSDK', podspec: '[URL to your public .podspec]'

Run pod install

Create a new git repo (we called it context-sdk-releases), which will contain all your historic and current releases, as well as a newly created Package.swift file:

// swift-tools-version:5.4

import PackageDescription

let package = Package(

name: "ContextSDK",

products: [

.library(

name: "ContextSDK",

targets: ["ContextSDK"]),

],

dependencies: [],

targets: [

.binaryTarget(

name: "ContextSDK",

path: "releases/ContextSDK.zip"

)

]

)

You can use the same zip file we’ve created with SPM as well. Additionally, you’ll need to make use of git tags for releases, so that your customers can pinpoint a specific release. You can either make this repo public, or you’ll need to manually grant read permission to everyone who wants to use SPM.

To your users, you can provide the following instructions:

Add https://github.com/context-sdk/context-sdk-releases as dependency

As we were building out our automated SDK distribution, we noticed there aren’t a lot of guides online around how to best develop, build and distribute your SDK as a pre-compiled binary, so we hope this article helps you to get started.

If you have any questions, feel free to reach out to us on Twitter or LinkedIn, or subscribe to our newsletter on contextsdk.com.
