2026-03-02 21:22:57

Hi all,

Here’s your Monday round-up of data driving conversations this week in less than 250 words.

Let’s go!

Switching it up ↑ Claude’s mobile app saw over 500,000 downloads on Saturday – its biggest day on record.

Chip deal ↑ Google has signed a multi-billion-dollar TPU deal with Meta, part of an internal push that executives have said could capture up to 10% of Nvidia’s ~$200 billion annual revenue.

DIY tech boom ↑ China has launched a ~$144 billion fund for tech self‑reliance, around 0.7% of 2025 GDP.

Business adoption ↑ ChatGPT now serves over 9 million business subscribers, according to OpenAI.

2026-03-01 17:40:13

You offer a clarifying framing to what has changed in just the past 25 years, and for grasping both the scale and depth of that transformation. — Danie H., a paying member

Hi all,

Welcome to the Sunday edition, in which we make sense of the week behind us.

Enjoy!

This past week, I convened the first AI Vistas dialogue, designed so that even the experts in the room learn by engaging with each other on one hard question. In our first dialogue, I explored “Are we in charge of our AI tools or are they in charge of us?” with Nita Farahany, Eric Topol, and Rohit Krishnan. A few themes that stood out to me:

Autonomy is no longer simply “I chose this,” but “I chose this with a cognitive exoskeleton that is steerable, hackable, and designed by others.”

Everyone has a generative core that must be protected from offloading to AI. We should all understand where our best thinking happens and ring-fence it from automation.

As AI takes over the “visible” work, human institutions must focus on invisible work: building attention, intuition, social and embodied skills.

Citrini, a research shop, published a speculative Substack post about the logical extreme of where AI disruption might take us. Their 2028 scenario has agentic tools letting developers clone mid-market SaaS in weeks, collapsing software pricing power overnight. This alarms other firms, which cut white-collar headcount and double down on AI. The dynamic continues to eat away at the demographic responsible for 75% of discretionary consumer spending. By 2028, they suggest unemployment at 10.2%, the S&P down 38%.

It sounds like a catastrophe. Citrini’s piece had a 3.4-to-1 ratio of negative/crisis words to positive ones, with 198 subjective/emotional words. It was designed to elicit an emotional response. And it did.

I take two issues with their essay – Citrini assumes no adaptation and that everything happens everywhere all at once. As investor Gavin Baker observed, the scarcity of watts and wafers will significantly slow the scenario. He estimates we’d need 1,000x more compute than we have today for anything resembling Citrini’s scenario. AI firms already face a compute crunch (as we argued here). This may raise the cost of compute and make human labor much more cost-competitive. My hunch is this, and other factors, like data center protests, will attenuate the rate of diffusion.

Citrini, of course, is not all wrong. The first flywheel has kicked off – cheap, fast software is already undercutting mid-market SaaS. But this focuses on the wrong part of the story. This month, someone on our team replaced a $40/month SaaS product in a couple of hours by building something that actually worked how they work. In this case, it’s not a job loss but a capability gain. We need to look at the gains and the pinch points of the transition. The collateral damage of railroads cannot be ignored, but nor can the fact that they didn’t just kill the canal companies; they also created an entirely new geography of economic possibility.

In creative destruction, markets always show what is destroyed first. And the markets are selling the canal phase of an AI transition that, like railroads, will destroy visible incumbents while building new economic possibilities. But that destruction will be slowed by the compute crunch, which buys time for adaptation that Citrini doesn’t consider.

See also:

Anthropic has released Claude for COBOL, an archaic software language still powering 43% of banking, 95% of ATMs, and $3 trillion+ in daily transactions. The average COBOL programmer is 64 years old. IBM dropped 13%.

Jack Dorsey is cutting Block’s workforce nearly in half, betting that AI tools paired with smaller, flatter teams are the way forward.

This week, the US government became, by a significant margin, the world’s most aggressive regulator of artificial intelligence. Poor Europe is losing even this ignominious title.

Defence Secretary Pete Hegseth designated Anthropic a national security supply chain risk, effectively barring federal contractors from using its technology in government work. Hours later, Trump directed every federal agency to follow suit. No Chinese AI company has received the same designation. It’s quite an astonishing sucker-punch.

The proximate cause was Anthropic’s refusal to lift all safety constraints on military use of Claude, around autonomous targeting and AI-assisted mass surveillance. These aren’t unreasonable positions; they reflect genuine technical concerns about where AI capability ends and unacceptable risk begins. But the punishment for holding them was disproportionate: a tool designed for compromised semiconductor supply chains and foreign hardware manufacturers was repurposed to punish an American AI lab.

The deeper problem is the one I keep returning to – the laws governing this space were written for a different technological era. FISA was last substantially updated in 2008. Defence contracting frameworks assume software is a passive, bounded tool. Neither has a vocabulary for AI systems that can synthesise fragmented surveillance data across datasets in minutes, or for the specific danger zone Anthropic identified: capable enough for defence use, not safe enough for autonomous lethal decisions. Raw executive power rushed into that gap.

2026-02-28 01:34:37

In today’s live, I gave a behind‑the‑scenes look at my OpenClaw agent, R Mini Arnold, and how it runs as a 24/7 chief of staff on a Mac mini using multiple specialized sub‑agents. I also showed how this kind of personal agent setup is already transforming my day‑to‑day work.

Enjoy.

Azeem

2026-02-26 00:46:11

This is the first AI Vistas discussion, a new series hosted by Exponential View where I bring people I trust into conversation around one hard question, because together we can see what none of us would see alone.

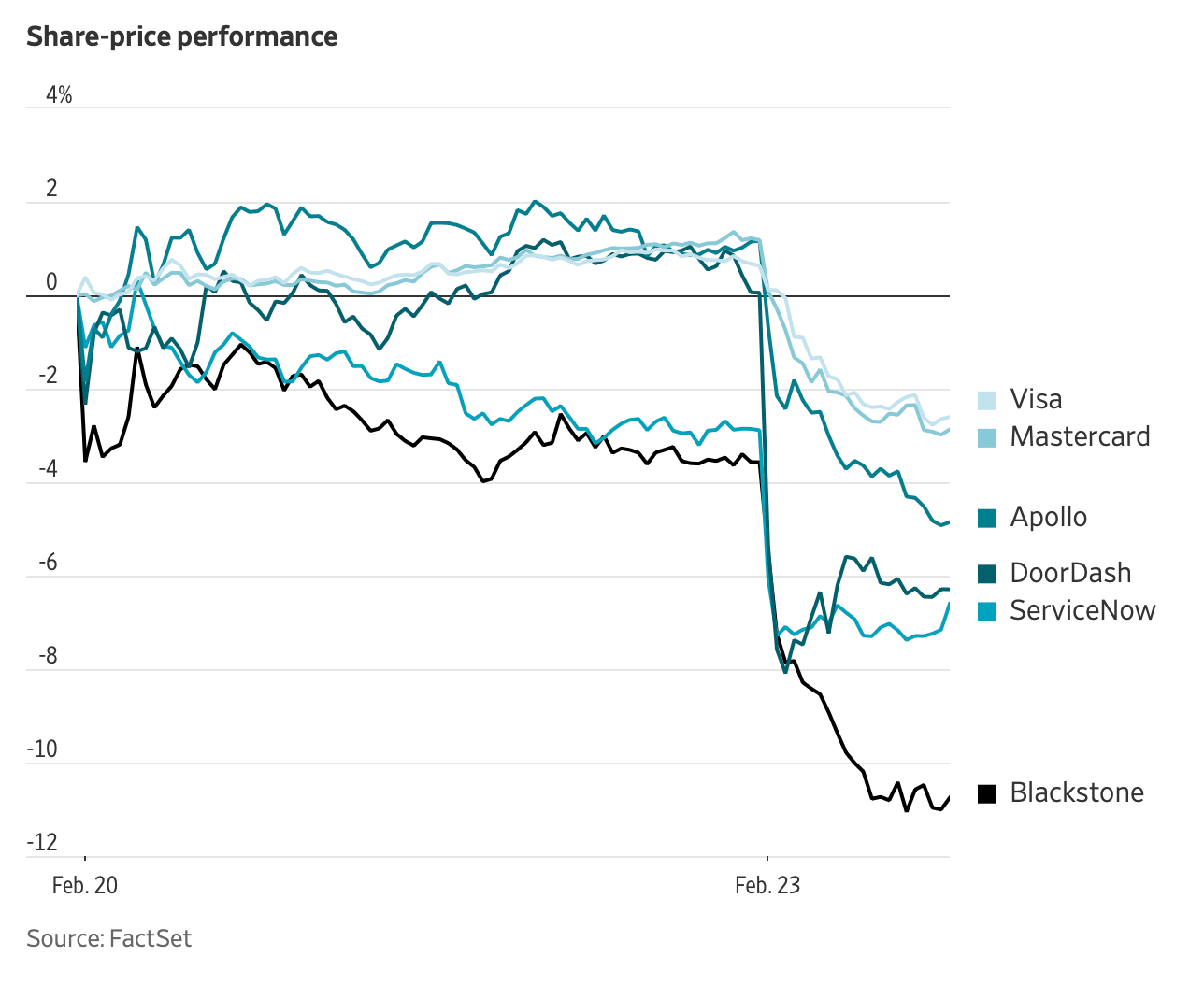

Two weeks ago, I read something in the financial press about a pattern called a dispersion event. I didn’t fully understand it, so before a short car ride, I asked my OpenClaw agent R Mini Arnold to explain it to me and find the data to evidence it.

By the time I arrived, the agent had pulled thirty years of market data and flagged contradictions in my recent thinking I hadn’t noticed. I adjusted my portfolio on the spot. The decision was mine, but the thinking behind it… it gets blurry. Who’s in charge here – me or my agent?

I wanted to sit with that, and not alone. I convened a conversation with Nita Farahany, who wrote The Battle for Your Brain and advised President Obama on the ethics of the human mind; Eric Topol, one of the most cited medical researchers alive with a frontline view into AI implementation in medicine; Rohit Krishnan, engineer and economist and former hedge fund manager now building AI platforms; and Nick Thompson, CEO of The Atlantic, who moderated the dialogue.

Watch the full recording here.

Listen on Apple Podcasts.

Transcript (lightly edited for clarity and flow) is below.

Nick Thompson: Azeem, you’ve committed thousands of lines of agent code this year, built multiple apps since Christmas. But the car-journey story sounds like you were in control – you asked a question, got an answer, made a call. Give me the version where the blur actually kicks in.

Azeem Azhar: The blur is subtler than the question itself. My agent – I was using OpenClaw at the time – will often say, “In our previous conversations this week, you said X, and that seems to contradict what you’re asking now.” I’d been talking to it about AI bottlenecks and doubling down on the AI trade. Then I asked about downside risk. It spotted the contradiction: on one hand I was worried about a crash, on the other I was thinking of increasing my exposure. It drew my attention to it. We had a conversation, and it reframed the question I was asking.

Nita Farahany: This gets at a fundamental question about what it means to act autonomously. Are you acting in a way consistent with your own desires, or are you being steered by somebody else’s desires? There’s very little we do these days that is steered by our own desires.

I’m teaching a class this semester at Duke on mental privacy, advanced topics in AI law and policy. I ran an attention audit on my students. They recorded how many times they picked up their devices over three days, and what they spent their attention on. Day one: record it, don’t change your behaviour. Day two: no apps that algorithmically steer you — which meant basically nothing was allowed. Day three: do whatever you want, record it again. Day two was remarkable. Somebody read a book. They couldn’t remember the last time they’d done that. These are students. Someone finished a puzzle they’d been convinced they didn’t have time for. And day three? Worse than day one. Utterly sucked back in.

Is Azeem in charge? His behaviour is partly in charge: he told his agent to research a specific question. But he’s reacting to information it provides, constantly in this loop of notification and response, thinking about what his agents are doing while he’s being driven. It’s deeply interrelated. There is no longer a clear point where Azeem ends and his tools begin. They are an extended part of who he is.

Nick: And immensely hackable. He’s controlling his portfolio based on this. If I were a smart hedge fund, I’d figure out how to hack OpenClaw to steer his investments my way.

Nita: The very fact that Azeem has offloaded the question to his AI tool is the more fundamental question. It’s more subtle, more pervasive, and more universal than someone intentionally manipulating his portfolio.

Rohit Krishnan: One thing that surprises me is that despite these tools being insecure, negative instances are surprisingly rare. The ability to cause harm is rising alongside the ability to do good, and yet the bad events stay low. Think about it from a hedge fund’s perspective: where do you put your resources? Is hacking OpenClaw going to generate more alpha than putting that same effort into something else? The answer always seems to be no. So my OpenClaw talks to my email and occasionally answers messages. I’m reasonably okay with that threat surface, despite knowing prompt injections exist.

Nick: Eric, does Rohit’s framework – that actual harms trail perceived dangers – hold in medicine?

Eric Topol: I don’t think we know yet. There are several studies now comparing AI alone versus AI with doctors, on various types of clinical performance. And AI did better than the doctors with AI. That wasn’t expected. Everything was supposed to be hybrid. The under-performers are more likely to accept the AI’s input, whereas the experts reject the AI’s good input. And if you extend that to having agentic support, perhaps even more so. We still don’t even know. Is it because doctors have automation bias? Is it because they aren’t grounded in how to use AI? It’s really fuzzy right now. The medical world is not as advanced as a lot of other domains that are adopting AI more quickly, because clinical decision-making is much more tricky and delicate.

Nick: That goes against something I’ve heard you say, Azeem – that humans using AI well should be much stronger than AI alone.

Azeem: What Eric described is a phenomenon we’ve seen across knowledge work. People below average improve with AI. People at the top get worse because they override its suggestions. But I wonder if there’s a U-shaped curve hiding in the data. Below average: you improve. Top quartile: you overthink it. Truly exceptional: you master the machine. Look at Andrej Karpathy, one of the great deep learning engineers, a much better software developer than any of us. He’s handed enormous amounts of his work to AI systems. He’s the ultimate expert but his response is: he’s getting more done, pushing limits much further.

Nita: Let me be the philosopher for a moment. What are we actually trying to measure? In Eric’s domain it’s clearer: did you get the diagnosis right? Is the patient better off? But in other areas, we’re not comparing apples to apples. Take education. Measure whether the essay is better or worse and the essay improves with AI. But measure the learning outcome and the answer flips. The human is worse off, the essay is better. So, is humanity better off with more polished AI-produced essays, or worse off because the people who wrote them didn’t learn?

Nick: So the solution is to let AI make the super consequential decisions – like surgery – but keep humans in the loop for the developmental stuff, like essays?

Nita: Ask the question over the long term. Today, should you use AI for a life-threatening decision? Yes. But carry it out: now you have physicians who no longer have intuition about whether to operate. People who can’t interrogate code because they don’t understand it – they’re pulling the AI slot machine for different output rather than critically evaluating it. Over time, are we better off or worse off? I worry in the long term that we will end up in a much worse position than in the short term.

Nick: So are you saying there are specific cases where the AI would get the surgery right more often, and we know it, and some patients would die from the human getting it wrong – but you’d still prefer that world because at least the humans stay competent?

Nita: That’s an artificial construct, Nick. The real question is what we invest in so that people maintain what I’m calling a constituent of competency over time. How do we do both? Use AI for the consequential decision today, and build the systems that keep humans capable of navigating it in the future. That’s the question nobody is asking.

Rohit: Maybe one thing to consider is whether those physicians were any good at working with AI. Every consequential decision I’ve made in the last three or four years has been with AI. Sometimes I’ve agreed, sometimes I’ve disagreed. But over time you build up a sense of when it’s accurate and when it’s not. These studies aren’t Jeopardy, where an expert gives one answer and AI gives another and you pick one. In the real world there are time constraints, checks and balances, multiple people looking at the same call. That context doesn’t show up in the papers.

Nick: Eric, so maybe the anomaly isn’t something fundamental – it’s just about this moment, where doctors don’t yet understand how to use AI. Once we learn, it’ll be fine?

Eric: That’s the hope, Nick. The medical community likes that explanation best.

Eric: The other issue in medicine we’ve just seen confirmed is de-skilling. There’s now a study of gastroenterologists who let AI find the polyps. Over time, when you turn the AI off, they’re not as good at finding polyps themselves. And then there are the younger doctors coming through who are never going to be skilled in the first place. The hope is that the hybrid everybody expected – human plus AI, better than either alone – eventually wins. But we don’t know.

Azeem: De-skilling is real. And what Nita and Eric are drawing attention to is a gap in education. In these early stages, it’s up to us to figure out our own pedagogy for maintaining skills. I spend a lot of time writing with this (fountain pen), computer turned off. Yesterday I had a session with a colleague where we worked on a problem in silence, on paper, no phones, no computers, for a couple of hours. We do that often in my team. Maybe it’s the wrong thing. But it’s our gesture toward understanding de-skilling – this sloppy vibing that has you moving further and further from your own mental capacities.

Nick: But aren’t you also doing the opposite? You’ve been writing about getting AI to write for you, trying to make it a better stylist. And writing is thinking: if you de-skill your writing, you de-skill your thinking.

Nita: I’m going to push back on that. I’ve heard “writing is thinking” too many times now, and I think it’s crap. When I write, I actually give a talk first. I think in public speaking more than in written form. I think in keynote form: how do I tell this story? Once I’ve told it and interacted with audiences a number of times, I can reduce it to writing. My thinking doesn’t happen primarily through writing. The problem is that if we don’t recognize that each person’s generative capacity works differently – for Azeem it might be the fountain pen, for someone else it’s talking it out, or painting – we end up with blanket rules that miss the point. Once you figure out where your generative constituent of competence lives, that’s the thing you protect from offloading.

Nick: So your advice is: figure out where you do your best thinking, then don’t let AI anywhere near that.

Nita: Exactly. Preserve the core thing that enables you to be flexible across novel situations. Don’t offload all generative thought. An email from me? Probably AI. A lecture recap? Often AI. But a keynote or a novel idea, that’s where my thinking happens. I figure it out in spoken form, then reduce it to writing. Maybe I’ll let AI be my copy editor. But the thinking itself stays with me.

Rohit: The way I look at it is, I have more capabilities at my disposal, and by default I end up spending time on what I actually want to do. It’s endogenous: I end up spending less time on things I care less about and more time on things I care more about. And that shifts. What I hold onto today is different from a year ago or ten years ago, because we change over time. Part of it might be a specific type of writing, part of it might be a specific type of research or public speaking – each one is different for all of us. So I think about it less as “what do I withhold from AI?” and more as: given this agent exists, what can we do together? If I get confidence it works, I hand off more. Same dynamic as having a really good PA – once you understand what they’re good at, you say “you take care of that, I’ll do this.”

Azeem: I’ve had so much more time in what Nita calls the space of generative constitutive competence. I spend more time writing, more time reading. I write many of my Exponential View essays longhand first, read them out, get them transcribed. It reminds me of something Eric introduced to me years ago: the idea that these tools could give doctors time back to be better doctors. In 2026, I’m doing more good thinking than four years ago, when I was battling an inbox and Google searches.

Nick: Azeem, you seem to spend part of your days in 2100 and part of your days in 1450. It’s beautiful to hear.

Eric: I agree it’s deeply personal. If I’m writing a paper or a blog, I feel like using AI to write it would be cheating. It isn’t right to share with other humans if it’s not my product. Maybe I’ll move into 2100 with Azeem at some point. But it’s changing, it’s dynamic. You offload more and more of the things that are just making your life more automated, more practical. Getting time back in medicine is probably the greatest goal, because it’s squeezed so badly. If you can get rid of the data-clerk function, the mundane tasks, you can be a much better doctor. And the patient-doctor relationship can get restored.

Azeem: Here’s what I struggle with, though. I’m still moving at speed. And great thinking comes from attention, observation, chewing things over. Many of our examples have time constraints – see the patient now, ship the code by Friday. But really great thinking has traditionally happened with care and self-reflection. Am I doing the quality of thinking I could do with ten uninterrupted days?

Nita: There’s a way to use AI for that, too. I came across a blog post describing what they call a “potato prompt.” I adopted it for Claude. The idea is that whenever I type “potato” followed by an argument, the AI tells me three ways it could fail, two counter-arguments, and a blind spot I’ve missed. And it has to be hostile, shed all niceties.

The first couple of times, I wanted to shrink into a hole and cry. It’s devastating. But it’s made my thinking so much sharper. Every time I throw up an argument and think, “that was a brilliant insight, Nita,” the potato prompt interrogates it. I still bounce ideas off friends. But that instant, real-time hostile feedback is a way to use AI to challenge you rather than offload.
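For readers who want to try something similar, here is a minimal sketch of how a trigger-word critique prompt might be wired up. The instruction wording, the `TRIGGER` constant and the `build_prompt` helper are all hypothetical illustrations, not the prompt Nita uses or the one from the blog post she mentions; the function only builds text and does not call any AI service.

```python
# Hypothetical sketch of a "potato prompt": the wording below is an assumption,
# not the original prompt described in the conversation.
TRIGGER = "potato"

CRITIQUE_INSTRUCTIONS = (
    "Critique the argument below with no niceties. Give exactly: "
    "three ways it could fail, two counter-arguments, and one blind spot."
)


def build_prompt(message: str) -> str:
    """Wrap the message in critique instructions if it starts with the trigger word.

    Messages without the trigger, or with the trigger but no argument after it,
    are returned unchanged.
    """
    first, _, rest = message.strip().partition(" ")
    argument = rest.strip()
    if first.lower().rstrip(":,") == TRIGGER and argument:
        return f"{CRITIQUE_INSTRUCTIONS}\n\nArgument: {argument}"
    return message


if __name__ == "__main__":
    print(build_prompt("potato Remote work always raises productivity"))
```

The resulting string would then be pasted or sent to whichever assistant you use; keeping the trigger handling outside the model makes the behaviour easy to inspect and change.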

Rohit: In general, I disagree that education can get us out of this. Educational systems were set up to do something fairly specific. The kids who learn these tools best are the ones who just play with them. That was true of the internet, true of mobile, true of programming.

With AI it’s exacerbated, because it is the easiest thing in the world to use. My eight-year-old accidentally discovered Canva has an AI feature – I didn’t even know. He’s been making little websites about dinosaurs. Nobody taught him. It’s a text box; he knows how to type. We need more whimsy and play, not structured courses.

Nita: Our systems have focused on output rather than core competencies. There’s a German school that trialled a mindfulness programme, a breathing programme. And a nationwide one in the US called Learning to Breathe. They develop interoceptive skills in children: embodiment, grounding, developing intuition. And they’ve shown a direct impact on math scores, because learning to inhabit your own body develops the cognitive capacities that make thinking possible. The tools come later. The capacity for thinking is what education should build.

Azeem: I’ll be the relentless optimist. We haven’t tried this in any consistent way over thirty or forty years. In professional services, we’ve ended up with lawyers who have terrible client interaction patterns and doctors whose bedside manner ranges from warm to terrifying – all without paying attention to these skills. Perhaps now we’ll be forced to. And that’s more of an opportunity than a deep problem.

Eric: We’re definitely training medical students the wrong way. They’re selected for test scores – which AI can already do better on anyway – and grade-point averages, not interpersonal communication skills. And not one medical school out of 160 in the US has AI in the core curriculum. It’s almost like it doesn’t exist. Whether it’s the selection or the education, we haven’t even acknowledged that this is the transformation of medicine.

Azeem: And to what extent are we looking for that high reliability in differential diagnosis, which is actually a mechanistic, algorithmic thing? Machines do that better than people. We’ve needed humans to do it because the knowledge was scarce, it was hard to get into a human skull. Maybe now that it can be offloaded, we develop the other part that delivers patient outcomes: the constitutive awareness, the embodied understanding of what it is to be a person.

Eric: Absolutely. Diagnostic accuracy will be one of the greatest gifts of AI in medicine. We already have good indicators of that. It’s just a matter of deploying it right.

Nick: We’re all cautiously optimistic. But AI is about to become much more powerful. What’s the thing you’re looking for, the development that tips us onto a better or worse track in the next year?

Nita: I’m picking up on something Eric said – that he feels like he’s cheating if he puts AI-written work into the world. That’s the tipping point I’m watching. We’ve been talking about selves: building them, maintaining them. But we exist in an ecosystem of information, the corpus of human knowledge we all draw from. The more of it that becomes synthetic, the less felt human experience is out there. People are trying to track how much internet content is now AI-produced. We don’t know what that does – to children’s cognitive development, to our collective ability to think.

Azeem: There was a moment in the atomic weapons era when you could measure exactly when it started – in atmospheric data, a radioisotope that wasn’t there before and then suddenly was. I think we’re approaching that moment with AI-generated text. If you’re on X, you see essays produced at a rate of knots by very busy people who aren’t writers. I read them because there’s insight, data, argument. But I’m curious whether what looks good in our mind starts to change because of constant exposure, and we won’t notice it’s happened.

Nick: What if the AI slop gets better than what humans produce? What if the average writing quality on X is higher in 2028 than 2023?

Rohit: The average quality is already higher. But people dislike it much more. I use Pangram (a tool for detecting AI-generated text) – AI text is everywhere. Not just X. Journalists, published articles. And “slop” is the right word, because it has all the appearance of a fulfilling meal, but it has no calories. It’s fairly hollow. That’s AI doing something really badly. The things it does well – building the apps Azeem uses every day, or that I use – that’s real capability. If I got AI to write one of my articles and then had to rewrite it, that’s more work, not less.

Azeem: AI content also tells us something about how mechanised the production of words had already become. Certain research reports, academic papers, marketing copy: these were essentially algorithms carried out by humans, like the female computers at early NASA executing calculations we now hand to machines. The real writing happens somewhere else, and it’s extremely difficult.

Take my efforts to build an AI-enabled style guide. I wanted one that helped people adhere to how I write. It cannot replicate what I do, except in trivial ways: I prefer words with Germanic roots to Latin ones, I write 91% active sentences, I vary sentence length within paragraphs. Those are the surface. What’s missing is what pops out of embodied, lived experience. And I guess the challenge we face is that as these tools become pervasive – and many of the experiences we get, whether it’s Netflix or TikTok or the products in the shops, are mediated through them – my experience is going to be that much more blunted, and therefore that much more aligned with what an external set of AI systems is doing than with what I might have done had I experienced things a little more raw.

Nita: I’ve been looking at the studies on meaning-making. The impact is more on the contributor than on the receiver. Your contribution to the corpus of human knowledge, that is part of the act of becoming. The brain needs that act. When AI replaces it, you don’t experience the same effect. One of the most influential books in my life is Flowers for Algernon. I read it in second grade — way too early, crying the whole time. It took Daniel Keyes fourteen years to write. It started when he was a medical student; later he had an encounter with someone who had intellectual challenges. All of those felt experiences went into the book. Is that different from the ninety minutes Azeem spent distilling his worldview with AI? It may be. We don’t know. But we have to acknowledge that something qualitatively different happens in fourteen years of writing than in ninety minutes of AI-assisted distillation.

If you’re working iteratively with AI to bring your voice and lived experience – using it as a vehicle to channel that – it’s different from someone who generates a LinkedIn post without putting themselves in it at all. There is a qualitative difference.

Azeem: There is a quality of difference. But here’s something I built today. We’ve written millions of words at EV. I wanted to extract our house view – what do I believe about certain things, and how have those beliefs shifted? I had AI run across all those words to build a concept map: the ten or twelve positions I’ve held and how they’ve moved over time. I personally reviewed it, then shared it with the team. The human work of ninety minutes would otherwise have been a thousand hours. I did less than 0.2% of the labour. But I still had the sense I was gifting something useful, usable, and distinctly me.
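As a quick check on the arithmetic in that last turn, the short sketch below works out the share of labour implied by the two figures quoted (ninety minutes of human review against an estimated thousand hours of manual work). The variable names are illustrative, and the thousand-hour figure is the speaker's own estimate rather than a measured value.

```python
# Figures as quoted in the conversation; the 1,000-hour baseline is an estimate.
human_minutes = 90
estimated_manual_hours = 1000

# Convert the baseline to minutes so both quantities share a unit.
human_share = human_minutes / (estimated_manual_hours * 60)

print(f"Human share of the labour: {human_share:.2%}")  # prints 0.15%
```

The result, 0.15%, is consistent with the "less than 0.2%" quoted.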

Rohit: Any time AI gets good enough, we start seeing it as a tool – because it becomes dependable. When it’s at the edge, we see it as the destruction of some intrinsic humanity. We’ve done this in every field: radiology, finance, supply chain. Art feels different because it’s a uniquely human endeavour. But whenever AI gets good enough to do a piece of work we ourselves wanted to do, we’ve generally been happy to hand it off and go write more books like Flowers for Algernon.

Eric: It’s a little bit like Quebec and the rest of Canada. You have to have deliberate intent to preserve your language, otherwise it’s crowded out. If we don’t have deliberate intent to keep AI as a tool, which is where I think it belongs, we’ll lose a part of humanity that is essential.

Azeem: Deliberate intent, that’s it. Deliberate intent about our own capabilities. Deliberate intent that these things are tools: however we interrogate them, however we anthropomorphise them, they are tools, and should stay as such.

Thank you for reading. This is the transcript of our first AI Vistas session. If you find it insightful, please share it widely – that’s the single best way to tell us this format is worth doing again.

2026-02-24 00:59:33

Hi all,

Here’s your Monday round-up of data driving conversations this week in less than 250 words.

Let’s go!

The AI economy ↑ AI capex is estimated to account for 64-80% of US Q4 2025 growth.

Autonomy needs humans ↓ Waymo’s entire remote guidance operation runs on just 70 human operators – a 43:1 car-to-human ratio. GM’s robotaxi service Cruise had 1.5 staff per car.

Speed war ↑ Canadian startup Taalas’ latest hardware can run AI models nearly 10x faster than the previous state-of-the-art by hard-wiring the model directly onto chips.

New exit routes ↑ Secondary sales of private startup shares have grown from 3% of all VC exits in 2015 to 31% today.

2026-02-22 11:59:20

Azeem is the GOAT of AI analysis. – Max P., a paying member

Hi all,

Welcome to the Sunday edition. Today’s email stays unusually tightly focused for us: wages, robots, and token costs – and how, together, they’re beginning to rewire the basic economics of work.

Enjoy!

I’ve been living with my always-on AI agent, R Mini Arnold, for a couple of weeks now. What it’s already made clear is that once you drop the transaction cost of delegation by an order of magnitude, a personalized agent becomes infrastructure for knowledge work – in my case, it’s cleared a backlog of tasks that would otherwise never get done. I write about this in my weekend essay and alongside that reading, my conversation with digs into how we both use agents to write and think:

After years of the “Solow Paradox 2.0”, seeing AI everywhere except in the productivity statistics, early 2026 is an inflection point. argues that we’re seeing “a US productivity increase of roughly 2.7%.” Revised BLS data showing “robust GDP growth alongside lower labor input” signal that the economy is transitioning out of the “investment valley” and into the “harvest phase”. points out that recent micro-studies consistently demonstrate significant performance boosts from generative AI, even if not yet widely reflected across the economy.

Most firms remain in shallow adoption. A new study by Nicholas Bloom and colleagues shows that, despite 70% of firms claiming to use AI, senior executives spend on average just 1.5 hours a week with these tools. Around 20% of firms report productivity gains, but the remaining 80% do not, so the aggregate impact on productivity over the past three years is essentially flat.

My view is that these micro gains are now turning into broader gains. Our own data shows that American public firms more frequently cite quantitative success measures when discussing their genAI projects. But I also think these will staccato across the economy, unevenly, dependent on firm leadership, access to capital, workforce capability and other factors. From a distance, the curve will be smooth; up close, it’ll be a juddery staircase.