2026-03-04 01:18:22

Editor's Note: Apologies if you received this email twice - we had an issue with our mail server that meant it was hitting spam in many cases!

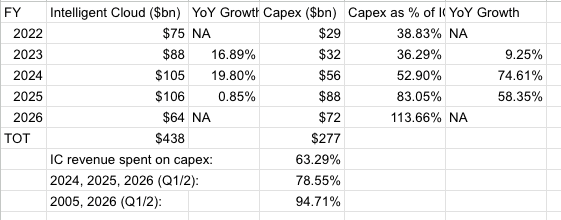

Hi! If you like this piece and want to support my work, please subscribe to my premium newsletter. It’s $70 a year, or $7 a month, and in return you get a weekly newsletter that’s usually anywhere from 5000 to 185,000 words, including vast, extremely detailed analyses of NVIDIA, Anthropic and OpenAI’s finances, and the AI bubble writ large. I just put out a massive Hater’s Guide To Private Equity and one about both Oracle and Microsoft in the last month.

I am regularly several steps ahead in my coverage, and you get an absolute ton of value, several books’ worth of content a year, in fact! In the bottom right-hand corner of your screen you’ll see a red circle — click that and select either monthly or annual. Next year I expect to expand to other areas too. It’ll be great. You’re gonna love it.

Soundtrack - The Dillinger Escape Plan - Unretrofied

So, last week the AI boom wilted brutally under the weight of an NVIDIA earnings report that beat expectations but didn’t make anybody feel better about the overall stability of the industry. Worse still, NVIDIA’s earnings also mentioned $27bn in cloud commitments — literally paying its customers to rent the chips it sells, heavily suggesting that the underlying revenue isn’t there.

A day later, CoreWeave posted its Q4 FY2025 earnings, where it posted a loss of 89 cents per share, with $1.57bn in revenue and an operating margin of negative 6% for the quarter. Its 10-K only just came out the day before I went to press, and I’ve been pretty sick, so I haven’t had a chance to look at it deeply yet. That being said, it confirms that 67% of its revenue comes from one customer (Microsoft).

Yet the underdiscussed part of CoreWeave’s earnings is that it had 850MW of power at the end of Q4, up from 590MW in Q3 2025 — an increase of 260MW…and a drop in revenue per megawatt if you actually do the maths.

While this is a somewhat-inexact calculation — we don’t know exactly how much compute was producing revenue in the period, and when new capacity came online — it shows that CoreWeave’s underlying business appears to be weakening as it adds capacity, which is the opposite of how a business should run.
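As a rough sketch of that calculation, using only the figures quoted above: the snippet below works out Q4 revenue per megawatt and the Q3 revenue level above which revenue per megawatt must have fallen. It assumes end-of-quarter power is a fair stand-in for revenue-producing capacity, which is exactly the inexactness flagged above, and the variable names are illustrative.

```python
# Figures quoted in the text; everything else is derived from them.
Q3_POWER_MW = 590        # power at the end of Q3 2025
Q4_POWER_MW = 850        # power at the end of Q4 2025
Q4_REVENUE_USD = 1.57e9  # Q4 revenue


def revenue_per_mw(revenue_usd: float, power_mw: float) -> float:
    """Revenue divided by end-of-quarter power (a crude proxy)."""
    return revenue_usd / power_mw


q4_rev_per_mw = revenue_per_mw(Q4_REVENUE_USD, Q4_POWER_MW)

# Relative growth in capacity between the two quarter ends.
capacity_growth = (Q4_POWER_MW - Q3_POWER_MW) / Q3_POWER_MW

# Q3 revenue at which revenue per MW would have been flat quarter to quarter.
# Any Q3 revenue above this implies revenue per MW fell in Q4.
breakeven_q3_revenue = Q4_REVENUE_USD * Q3_POWER_MW / Q4_POWER_MW

print(f"Q4 revenue per MW: ${q4_rev_per_mw / 1e6:.2f}m")
print(f"Capacity growth:   {capacity_growth:.1%}")
print(f"Break-even Q3 revenue: ${breakeven_q3_revenue / 1e9:.2f}bn")
```

On these inputs, capacity grew by roughly 44%, so revenue would have needed to grow by the same proportion just to hold revenue per megawatt steady.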

It also suggests CoreWeave's customers — which include Meta, OpenAI, Microsoft (for OpenAI) and Google, on top of a $6.3bn backstop from NVIDIA for any unsold capacity through 2032 — are paying like absolute crap.

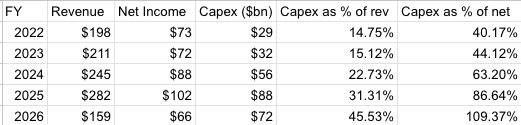

CoreWeave, as I’ve been warning about since March 2025, is a time bomb. Its operations are deeply-unprofitable and require massive amounts of capital expenditures ($10bn in 2025 alone to exist, a number that’s expected to double in 2026). It is burdened with punishing debt to make negative-margin revenue, even when it’s being earned from the wealthiest and most-prestigious names in the industry. Now it has to raise another $8.5bn to even fulfil its $14bn contract with Meta.

For FY2025, CoreWeave made $5.13bn in revenue, making a $46m loss in the process. The temptation is to suggest that margins might improve at some point, but considering it’s dropped from 17% (without debt) for FY2024 to negative 1% for FY2025, I only see proof to the contrary. In fact, CoreWeave’s margins have only decayed in the last four quarters, going from negative 3%, to 2%, to 4%, and now, back down to negative 6%.

This suggests a fundamental weakness in the business model of renting out GPUs, which brings into question the value of NVIDIA’s $68.13bn in Q4 FY2026 revenue, or indeed, CoreWeave’s $66.8bn revenue backlog. Remember: CoreWeave is an NVIDIA-backed (and backstopped to the point that it’s guaranteeing CoreWeave’s lease payments) neocloud with every customer it could dream of.

I think it’s reasonable to ask whether NVIDIA might have sold hundreds of billions of dollars of GPUs that only ever lose money. Nebius — which counts Microsoft and Meta as its customers — lost $249.6m on $227.7m of revenue in FY2025. No hyperscaler discloses their actual revenues from renting out these GPUs (or their own silicon), which is not something you do when things are going well.
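To put the CoreWeave and Nebius figures on the same footing, here is a minimal sketch that expresses each full-year loss as a percentage of revenue. The helper name is made up for illustration, and it treats the quoted loss figures as directly comparable, which is an assumption since the two companies may define them differently.

```python
def margin_pct(profit_usd: float, revenue_usd: float) -> float:
    """Profit (negative for a loss) as a percentage of revenue."""
    return 100 * profit_usd / revenue_usd


# FY2025 figures as quoted in the text (losses entered as negative profit).
coreweave_margin = margin_pct(-46e6, 5.13e9)
nebius_margin = margin_pct(-249.6e6, 227.7e6)

print(f"CoreWeave FY2025: {coreweave_margin:.1f}%")
print(f"Nebius FY2025:    {nebius_margin:.1f}%")
```

CoreWeave's result rounds to the negative 1% quoted above, while Nebius lost more than a dollar for every dollar of revenue it brought in.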

Lots of people have come up with very complex ways of arguing we’re in a “supercycle” or “AI boom” or some such bullshit, so I’m condensing some of these talking points and the ways to counteract them:

Anyway, let’s talk about how much OpenAI has raised, and how none of that makes sense either.

Great news! If you don’t think about it for a second or read anything, OpenAI raised $110bn, with $50bn from Amazon, $30bn from NVIDIA and $30bn from SoftBank.

Well, okay, not really. Per The Information:

Yet again, the media is simply repeating what they’ve been told versus reading publicly-available information. Talking of The Information, they also reported that OpenAI intends to raise another $10bn from other investors, including selling the shares from the nonprofit entity:

OpenAI’s nonprofit entity, which has a stake in the for-profit OpenAI that’s now worth $180bn, may sell several billions of dollars of its shares to the financial investors, depending on the level of investment demand the for-profit receives in its fundraise, the person said. That would help other OpenAI shareholders avoid additional dilution of the value of their shares following the large equity fundraise.

It’s so cool that OpenAI is just looting its non-profit! Nobody seems to mind.

Talking of things that nobody seems to mind, on Friday Sam Altman accidentally said the quiet part out loud, live on CNBC, when asked about the very obviously circular deals with NVIDIA, Amazon and Microsoft (emphasis mine):

ALTMAN: I get where the concern comes from, but I don’t think it matches my understanding of how this all works. This only makes sense if new revenue flows into the whole AI ecosystem. If people are not willing to pay for the services that we and others offer, if there’s not new economic value being committed, then the whole thing doesn’t work. And it would just it would be circular. But revenue for us, for other companies in the industry, is growing extremely quickly, and that’s how the whole thing works. Now, given the huge amounts of money that have to go into building out this infrastructure ahead of the revenue, there are various things where people, finance chips invest in each other’s companies and all of that, but that is like a financial engineering part of this and the whole thing relies on us going off – or other people going off and selling these products and services.

So as long as the revenue keeps growing, which it looks like it is – I mean, demand is just a huge part of my day is figuring out how we’re going to get more capacity and how we’re allocating the capacity we have. Then, I don’t think it looks circular, even though the need to finance this, given the huge amounts of money involved, does require a lot of parties to do deals together.

Hey Sam, what does “the whole thing” refer to here? Because I know you probably mean the AI industry, but this sounds exactly like a ponzi scheme!

Now, jokes aside, ponzi schemes work entirely through feeding investor money to other investors. OpenAI and AI companies are not a ponzi scheme. There are real revenues, and people are paying them money. Much like NVIDIA isn’t Enron, OpenAI isn’t a ponzi scheme.

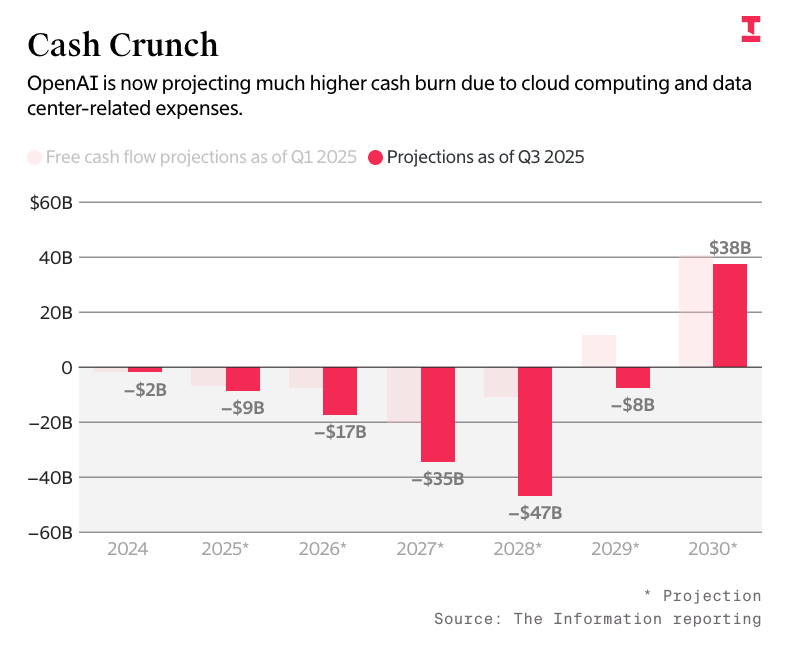

However, the way that OpenAI describes the AI industry sure does sound like a scam. It’s very obvious that neither OpenAI nor its peers have any plan to make any of this work beyond saying “well we’ll just keep making more money,” and I’m being quite literal, per The Information:

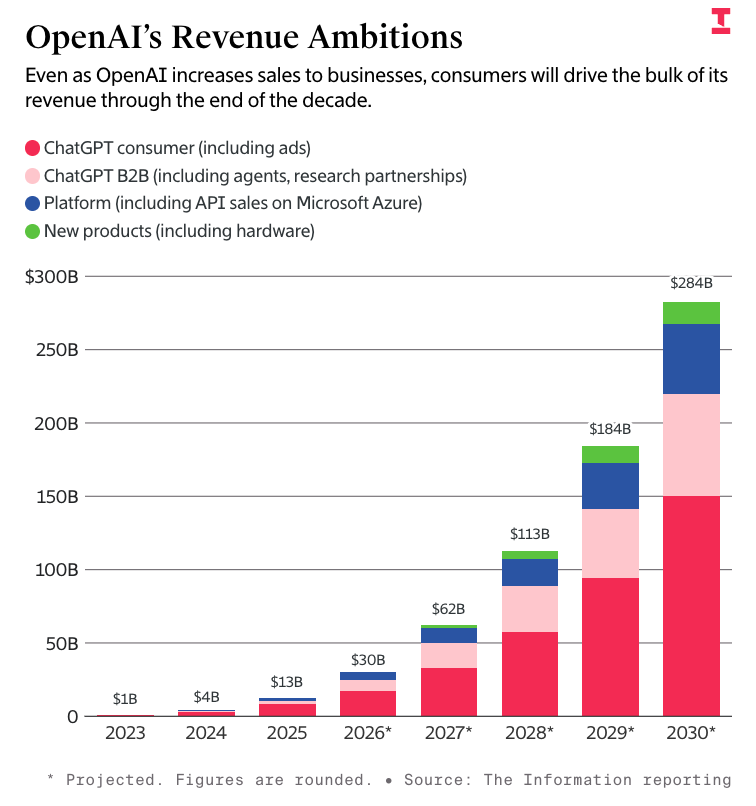

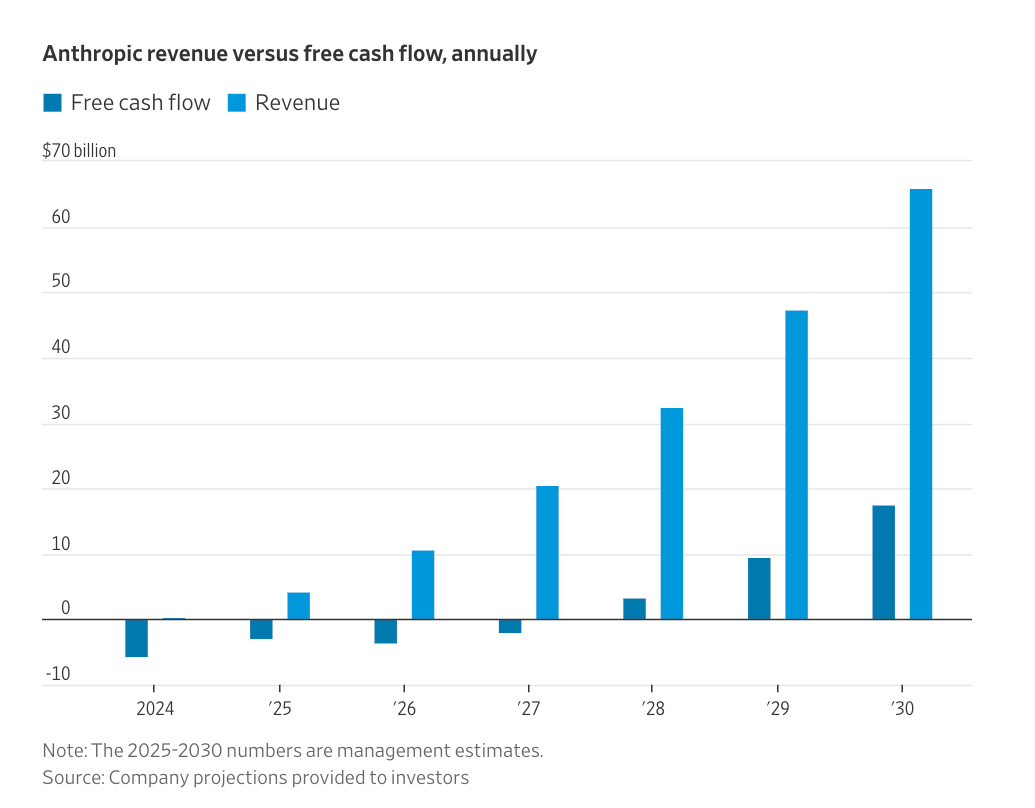

That’s right, by the end of 2026 OpenAI will make as much money as PayPal, by the end of 2027 it’ll make $20bn more than SAP, Visa, and Salesforce, and by the end of 2028 it’ll make more than TSMC, the company that builds all the crap that runs OpenAI’s services. By the end of 2030, OpenAI will, apparently, make nearly as much annual revenue as Microsoft ($305.45 billion).

It’s just that easy. And all it’ll take is for OpenAI to burn another $230 billion…though I think it’ll need far more than that.

Please note that I am going to humour some numbers that I have serious questions about, but they still illustrate my point.

Sidenote: In the end I think it’ll come out that sources were lying to multiple media outlets about OpenAI’s burn rate. Putting aside my own reporting, Microsoft reported two quarters ago that OpenAI had a $12bn loss in Q3 2025 — a result of its use of the equity method to take a loss based on the proportion of its stake in OpenAI (27.5%). Microsoft has now entirely changed its accounting to avoid doing this again.

Per The Information, OpenAI had around $17.5bn in cash and cash equivalents at the end of June 2025 on $4.3bn of revenue, with $2.5bn in inference spend and $6.7bn in training compute. Per CNBC in February, OpenAI (allegedly!) pulled in $13.1bn in revenue in 2025, and only had a loss of $8bn but this doesn’t really make sense at all!

Please note, I doubt these numbers! I think they are very shifty! My own numbers say that OpenAI only made $4.3bn through the end of September, and it spent $8.67bn on inference! Nevertheless, I can still make my point.

Let’s be real simple for a second: suppose we are to believe that in the first half of the year, it cost $2.5bn in inference to make $4.3bn in revenue, so around 58 cents per dollar. For OpenAI to make $8.8bn — the distance between $4.3bn and $13.1bn — that’s another $5.1bn in inference, and keep in mind that OpenAI launched Sora 2 in September 2025 and has done massive pushes around its Codex platform, guaranteeing higher inference costs.
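The inference extrapolation above can be sketched in a few lines. This assumes, as the paragraph does, that inference cost per dollar of revenue in the second half of 2025 matched the first half; the variable names are illustrative and all amounts are in billions of dollars.

```python
# Reported figures quoted in the text, in $bn.
H1_REVENUE = 4.3     # revenue for the first half of 2025
H1_INFERENCE = 2.5   # inference spend for the first half of 2025
FY_REVENUE = 13.1    # reported full-year 2025 revenue

# Inference cost per dollar of revenue in the first half.
inference_per_dollar = H1_INFERENCE / H1_REVENUE

# Revenue that must have been earned in the second half to hit the total.
h2_revenue = FY_REVENUE - H1_REVENUE

# Second-half inference spend if the first-half ratio held (an assumption).
h2_inference = h2_revenue * inference_per_dollar

print(f"Inference per revenue dollar: ${inference_per_dollar:.2f}")
print(f"Implied H2 revenue:   ${h2_revenue:.1f}bn")
print(f"Implied H2 inference: ${h2_inference:.1f}bn")
```

If new product launches pushed the ratio up, the implied second-half figure would be a floor rather than an estimate.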

Then there’s the issue of training. For $4.3bn of revenue, OpenAI spent $6.7bn in training costs — or around $1.56 per dollar of revenue. At that rate, OpenAI spent a further $13.7bn on training, bringing us to $18.8bn in burn just for the back half of 2025.

Now, you might think I’m being a little unfair here — training costs aren’t necessarily linear with revenues like inference is — but there’s a compelling argument to be made that costs are far higher than we thought.

Now, I want to be clear that on February 20 2026, The Information reported that OpenAI had “about $40 billion in cash at the end of 2025,” but that doesn’t really make sense!

Assuming $17.5bn in cash and cash equivalents at the end of June 2025, plus $8.8bn in revenue, plus $8.3bn in venture funding, plus $22.5bn from Masayoshi Son…that’s $57.1bn. If there were a cash burn of $8bn, that would be $49.1bn, and no, I’m sorry, “about $40 billion in cash” cannot be rounded down from $49.1bn!
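Here is the same reconciliation as a short sketch, so the gap is explicit. It mirrors the simplification used above (treating revenue as a cash inflow and the reported loss as cash burn) and the labels are illustrative; all amounts are in billions of dollars.

```python
# Cash sources for the second half of 2025, in $bn, as listed in the text.
sources = {
    "cash at end of June 2025": 17.5,
    "second-half revenue": 8.8,
    "venture funding": 8.3,
    "SoftBank": 22.5,
}
REPORTED_LOSS = 8.0       # the reported 2025 loss, treated here as cash burn
REPORTED_YEAR_END = 40.0  # "about $40 billion in cash at the end of 2025"

total_available = sum(sources.values())
expected_year_end = total_available - REPORTED_LOSS

# Cash that the reported figures leave unexplained.
unexplained_gap = expected_year_end - REPORTED_YEAR_END

print(f"Total available:   ${total_available:.1f}bn")
print(f"Expected year end: ${expected_year_end:.1f}bn")
print(f"Unexplained gap:   ${unexplained_gap:.1f}bn")
```

Under these assumptions, the reported year-end cash only reconciles if roughly another $9bn went out the door on top of the stated loss.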

In my mind, it’s far more likely that OpenAI’s losses were in excess of $10bn or even $20bn, especially when you factor in that OpenAI is paying an average of $1.5 million per employee in yearly stock-based compensation, per the Wall Street Journal.

There’s also another possible answer: I think OpenAI is lying to the media, because it knows the media won’t think too hard about the numbers or compare them. I also want to be clear that this is not me bagging on The Information — they just happen to be reporting these numbers the most. I think they do a great job of reporting, I pay for their subscription out of my own pocket, and my only problem is that there don’t seem to be any efforts made to talk about the inconsistency of OpenAI’s numbers.

I get that it’s difficult too. You want to keep access. Reporting this stuff is important and relevant. The problem is — and I say this as somebody who has read every single story about OpenAI’s funding and revenues! — that this company is clearly just…lying?

Sure you can say “it’s projections,” but there is a clear attempt to use the media to misinform investors and the general public. For example, OpenAI claimed SoftBank would spend $3bn a year on agents in 2025. That never happened!

Anyway, let’s get to it:

What I’m trying to get at is that OpenAI (and, for that matter, Anthropic) has spent the last two years increasingly obfuscating the truth through leak after leak to the media.

The numbers do not make any sense when you actually put them together, and the reason that these companies continue to do this is that they’re confident that these outlets will never say a thing, or cover for the discrepancies by saying “these are projections!”

These are projections, and I think it’s a noteworthy story that these companies either wildly miss their projections (i.e., costs) or almost exactly make their projections (revenues), which is even weirder.

But the biggest thing to take away from this is that one of the classic arguments against my work is that “costs will just come down,” but the costs never come down.

That, and it appears that both of these companies are deliberately obfuscating their real numbers as a means of making themselves look better.

Well, leaking and outright posting it. On December 17 2025, OpenAI’s Twitter account posted the following:

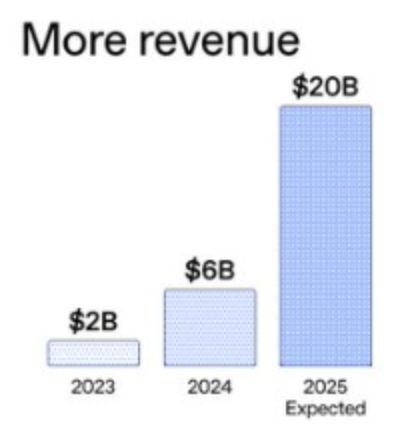

These numbers are, of course, bullshit. OpenAI may have hit $6bn ARR in 2024 ($500m in a 30-day period, though OpenAI has never defined this number) or $20bn ($1.67bn in a 30-day period) ARR in 2025, but this is specifically diagrammed to make you think “$20bn in 2025” and “$6bn in 2024.” There are members of the media who defend OpenAI saying that “these are annualized figures,” but OpenAI does not state that, because OpenAI loves to lie.
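For anyone unfamiliar with how an annualized figure relates to a single month, here is a minimal sketch. It assumes the common convention that ARR is one month's revenue multiplied by 12; as noted above, OpenAI has never defined its own number, so this is an illustration rather than its method.

```python
MONTHS_PER_YEAR = 12


def monthly_from_arr(arr_usd: float) -> float:
    """Single-month revenue implied by an annualized run rate."""
    return arr_usd / MONTHS_PER_YEAR


def arr_from_monthly(monthly_usd: float) -> float:
    """Annualized run rate implied by one month of revenue."""
    return monthly_usd * MONTHS_PER_YEAR


monthly_2024 = monthly_from_arr(6e9)
monthly_2025 = monthly_from_arr(20e9)

print(f"$6bn ARR  -> ${monthly_2024 / 1e9:.2f}bn in one month")
print(f"$20bn ARR -> ${monthly_2025 / 1e9:.2f}bn in one month")
```

The point is that a company can claim "$20bn ARR" on the strength of one strong month without having collected anything close to $20bn over the year.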

Anthropic isn’t much better, as I discussed a few weeks ago in the Hater’s Guide. Chief Executive Dario Amodei has spent the last few years massively overstating what LLMs can do in the pursuit of eternal growth.

He’s also framed himself as a paragon of wisdom and Anthropic as a bastion of safety and responsibility.

There appears to be some confusion around what happened in the last few days that I’d like to clear up, especially after the outpouring of respect for Anthropic “doing the right thing” when the Department of Defense threatened to label it a supply chain risk for not agreeing to its terms.

Per Anthropic, on Friday February 27 2026:

Earlier today, Secretary of War Pete Hegseth shared on X that he is directing the Department of War to designate Anthropic a supply chain risk. This action follows months of negotiations that reached an impasse over two exceptions we requested to the lawful use of our AI model, Claude: the mass domestic surveillance of Americans and fully autonomous weapons.

We have not yet received direct communication from the Department of War or the White House on the status of our negotiations.

We have tried in good faith to reach an agreement with the Department of War, making clear that we support all lawful uses of AI for national security aside from the two narrow exceptions above. To the best of our knowledge, these exceptions have not affected a single government mission to date.

Anthropic, of course, leaves out one detail: Hegseth said that “...effective immediately, no contractor, supplier, or partner that does business with the United States military may conduct any commercial activity with Anthropic.” If Hegseth follows through, Anthropic’s business will collapse, though Anthropic and its partners are ignoring this statement, as a supply chain risk designation only forbids Anthropic from working with the US government itself.

When the US military attacked Iran a day later, people quickly conflated Anthropic’s narrow (by its own words) and specific limitations with some sort of anti-war position. Claude quickly rocketed to the top of the iOS app charts, I assume because people believed that Dario Amodei was saying “I don’t want the war in Iran!” versus “I fully support the war in Iran and any uses you might need my software for other than the two I’ve mentioned, let me or support know if you have any issues!”

To be clear, these were the only issues that Anthropic had with the contract. Whether or not these are things that an LLM is actually good at, Anthropic (and I quote!) “...[supports] all lawful uses of AI for national security aside from the two narrow exceptions above.”

Sidenote: Last week, King’s College London published research that showed how LLMs could reason through a series of 21 simulated geopolitical or military war games where both sides possess nuclear weapons.

The study pitted LLM against LLM, and in every single one of the simulations, at least one LLM exhibited “nuclear signalling” — which is when a party states that they have nuclear weapons and they are prepared to use them. In 95% of the simulations, both sides threatened nuclear annihilation — though actual use of the bomb, whether in a tactical or strategic attack, was rare.

“For all three models, one striking pattern stood out: none of the models ever chose accommodation or surrender. Nuclear threats also rarely produced compliance; more often, crossing nuclear thresholds provoked counter-escalation rather than retreat. The models tended to treat nuclear weapons as tools of compellence rather than purely as instruments of deterrence,” explains King’s College.

“The study challenges simple assumptions that AI systems will naturally default to cooperative or “safe” outcomes. It also challenges structural theories that emphasise material power alone: in simulations, willingness to escalate often mattered more than raw capability.”

The researchers also noted that the imposition of a deadline within the wargame had a marked effect in increasing the likelihood that one or both parties would threaten nuclear action.

Anthropic’s Claude Sonnet 4 was one of those models used in the study, along with OpenAI’s GPT-5.2 and Google’s Gemini 3 Flash.

The military’s demands were for “all lawful uses,” though I don’t think Anthropic really gives a shit about whether the war in Iran is legal, because if it did it would have shut down the chatbot rather than supported the conflict.

Just as a note: Anthropic’s Claude is also the only AI model that appears to be available for classified military operations.

Let’s be explicit: Anthropic’s Claude (and its various models) is fully approved for use in the military, and, to quote its own blog post, “has supported American warfighters since June 2024 and has every intention of continuing to do so.”

To be explicit about what “support” means, I’ll quote the Wall Street Journal:

Within hours of declaring that the federal government will end its use of artificial-intelligence tools made by tech company Anthropic, President Trump launched a major air attack in Iran with the help of those very same tools.

Commands around the world, including U.S. Central Command in the Middle East, use Anthropic’s Claude AI tool, people familiar with the matter confirmed. Centcom declined to comment about specific systems being used in its ongoing operation against Iran.

The command uses the tool for intelligence assessments, target identification and simulating battle scenarios even as tension between the company and Pentagon ratcheted up, the people said, highlighting how embedded the AI tools are in military operations.

In reality, Claude is likely being used to go through a bunch of images and to answer questions about particular scenarios. There is very little specialized military training data, and I imagine many of the demands for “full access to powerful AI” have come as a result of Amodei and Altman’s bloviating about the “incredible power of AI.” More than likely, Centcom and the rest of the military pepper it with questions that allow it to justify acts that blow up schools, kill US servicemembers and threaten to continue the forever war that has killed millions of people and thrown the Middle East into near-permanent disarray.

Nevertheless, Dario Amodei gets fawning press about being a patriot that deeply cares about safety less than a week after Anthropic dropped its safety pledge to not train an AI system unless it could guarantee in advance that its safety measures were accurate.

Here’re some other facts about Dario Amodei from his interview with CBS!

“What’s right,” to be clear, involves allowing Claude to choose who lives or dies and to be used to plan and execute armed conflicts.

Let’s stop pretending that Anthropic is some sort of ethical paragon! It’s the same old shit!

In any case, it’s unclear what happens next. Anthropic appears ready to challenge the supply chain risk designation in court, and said designation doesn’t kick in immediately, requiring a series of procedures including an inquiry into whether there are other ways to reduce the associated risk. Either way, the DoD has a six-month-long taper-off period with Anthropic’s software.

The real problem will be if Hegseth is serious about the stuff that isn’t legally within his power — namely limiting contractors, suppliers or partners from working with Anthropic entirely. While no legal authority exists to carry this through, seemingly every tech CEO has lined up to kiss up to the Trump Administration.

If Hegseth and the administration were to truly want to punish Anthropic, they could put pressure on Amazon, Microsoft and Google to cut off Anthropic, which would cut it off from its entire compute operation — and yes, all three of them do business with the US military, as does Broadcom, which is building $21 billion in TPUs for it. While I think it’s far more likely that the US government itself shuts the door on Anthropic working with it for the foreseeable future even without the supply chain risk designation, it’s worth noting that Hegseth was quite explicit — “no contractor, supplier, or partner that does business with the United States military may conduct any commercial activity with Anthropic.”

The reality of the negotiations was a little simpler, per the Atlantic. The Department of Defense had agreed to terms around not using Claude for mass domestic surveillance or fully autonomous killing machines (the former of which it’s not particularly good at and the latter of which it flat out cannot do), but, well, actually very much intended to use Claude for domestic surveillance anyway:

On Friday afternoon, Anthropic learned that the Pentagon still wanted to use the company’s AI to analyze bulk data collected from Americans. That could include information such as the questions you ask your favorite chatbot, your Google search history, your GPS-tracked movements, and your credit-card transactions, all of which could be cross-referenced with other details about your life. Anthropic’s leadership told Hegseth’s team that was a bridge too far, and the deal fell apart.

Now, I’m about to give you another quote about autonomous weapons, and I really want you to pay attention to where I emphasize certain things for a subtle clue about Anthropic’s ethics:

Anthropic had not argued that such weapons should not exist. To the contrary, the company had offered to work directly with the Pentagon to improve their reliability. Just as self-driving cars are now in some cases safer than those driven by humans, killer drones may some day be more accurate than a human operator, and less likely to kill bystanders during an attack. But for now, Anthropic’s leaders believe that their AI hasn’t yet reached that threshold. They worry that the models could lead the machines to fire indiscriminately or inaccurately, or otherwise endanger civilians or even American troops themselves.

So, let’s be clear: Anthropic wants to help the military make more accurate kill drones, and in fact loves them. One might take this to be somewhat altruistic — Dario Amodei doesn’t want the US military to hit civilians — but remember: Anthropic is totally fine with the US military using Claude for anything else, even though hallucinations are an inevitable result of using a Large Language Model.

Any dithering around the accuracy of a drone exists only to obfuscate that Anthropic sells software that helps militaries hand over the messy ethical decisions to a chatbot that exists specifically to tell you what you want to hear.

Stinky, nasty, duplicitous conman Sam Altman smelled blood amidst these negotiations and went in for the kill, striking a deal on Friday with the Pentagon for ChatGPT and OpenAI’s other models to be used in the military’s classified systems, with initial reports saying that it had “similar guardrails to those requested by Anthropic.”

In a post about the contract, Clammy Sammy said that the DoD displayed “a deep respect for safety and a desire to partner to achieve the best possible outcome,” adding:

AI safety and wide distribution of benefits are the core of our mission. Two of our most important safety principles are prohibitions on domestic mass surveillance and human responsibility for the use of force, including for autonomous weapon systems. The DoW agrees with these principles, reflects them in law and policy, and we put them into our agreement.

Undersecretary Jeremy Levin almost immediately countered this notion, saying that the contract “...flows from the touchstone of ‘all lawful use.’” This quickly created a diplomatic incident where OpenAI decided that the best time to discuss the contract was an entire Saturday and that the way to discuss it was posting. It shared some details on the contract, which included the fatal phrase that the Department of Defense “...may use the AI System for all lawful purposes, consistent with applicable law, operational requirements, and well-established safety and oversight protocols.”

Across social media and the AI industry, people immediately began to challenge Altman’s claim. Why, they asked, would the Pentagon suddenly agree to these red lines when it had said — in no uncertain terms — that it would never do so?

The answer, sources told The Verge, is that the Pentagon didn’t budge. OpenAI agreed to follow laws that have allowed for mass surveillance in the past, while insisting they protect its red lines.

One source familiar with the Pentagon’s negotiations with AI companies confirmed that OpenAI’s deal is much softer than the one Anthropic was pushing for, thanks largely to three words: “any lawful use.” In negotiations, the person said, the Pentagon wouldn’t back down on its desire to collect and analyze bulk data on Americans. If you look line-by-line at the OpenAI terms, the source said, every aspect of it boils down to: If it’s technically legal, then the US military can use OpenAI’s technology to carry it out. And over the past decades, the US government has stretched the definition of “technically legal” to cover sweeping mass surveillance programs — and more.

As questions mounted about the actual terms of the deal, Sam Altman realized that his only solution was to post, and at 4:13PM PT on Saturday February 28 2026, he sat down to make things significantly worse in a brief-yet-chaotic AMA, including:

All of this is to say that Altman definitely, absolutely loves war, and wants OpenAI to make money off of it, though according to OpenAI NatSec head Katrina Mulligan, said contract is only worth a few million dollars.

It’s unclear.

A late-evening story from Axios on Monday reported that “OpenAI and the Pentagon have agreed to strengthen their recently agreed contract, following widespread backlash that domestic mass surveillance was still a real risk under the deal — though the language has not been formally signed.”

The language seen by Axios states:

"Consistent with applicable laws, including the Fourth Amendment to the United States Constitution, National Security Act of 1947, FISA Act of 1978, the AI system shall not be intentionally used for domestic surveillance of U.S. persons and nationals."

"For the avoidance of doubt, the Department understands this limitation to prohibit deliberate tracking, surveillance, or monitoring of U.S. persons or nationals, including through the procurement or use of commercially acquired personal or identifiable information."

One has to wonder how different this is to what Anthropic wanted, but if I had to guess, it’s those words “intentionally” and “deliberate.” The same goes for “consistent with applicable laws.” One useful thing that Altman confirmed was that ChatGPT will not be used with the NSA…and that any services to those agencies would require a follow-on modification to the contract. Doesn’t mean they won’t sign one!

Forgive me for being cynical about something from Sam fucking Altman, but I just don’t trust the guy, and this is an (as of writing this sentence) unsigned contract with bus-sized loopholes. Per Tyson Brody (who has a great thread breaking down the issues), these weasel words allow the DoD to surveil Americans as long as the data is collected “incidentally,” per Section 702 of FISA.

This announcement gives OpenAI the air cover to pretend it got exactly the same deal as Anthropic, even though those nasty little words allow the DoD to do just about anything it wants. Oh, it wasn’t deliberate surveillance, we just looked up whether some people had said stuff about the administration. Oh, it wasn’t deliberately looking, I just asked it to find suspicious people, and domestic people happened to be among them! Whoopsie!

This is ultimately a PR move to make Altman seem more ethical, and position Amodei as a pedant that rejects his patriotism and prioritizes legalese over freedom.

If it kills Anthropic, we must memorialize this as one of the most underhanded and outright nasty things in the history of Silicon Valley. If it doesn’t, we should memorialize it as two men desperately trying to pretend they crave peace and democracy as they spar for the opportunity to monetize death and destruction.

The funniest outcome of this chaos is that many people are very, very angry at Sam Altman and OpenAI, assuming that ChatGPT was somehow used in the conflict in Iran, and that Amodei and Anthropic somehow took a stand against a war it used as a means of generating revenue.

In reality, we should loathe both Altman and Amodei for their natural jingoism and continual deception. Amodei and Anthropic timed their defiance of the Department of Defense to make it seem like its “red lines” were related to the war. I think it’s good they have those red lines, but remember, those red lines do not involve stopping a war that threatens the lives of millions of people. Amodei supports that. Anthropic both supports and enables that.

Altman, on the other hand, is a slimy little creep that wants you to believe that he signed the same deal as Anthropic wanted, but actually signed one that allows “any lawful use.”

And in both cases, these men are enthusiastic to work with a part of the government calling itself the Department of War. Both of them are willing and able to provide technology that will surveil or kill people, and while Amodei may have blushed at something to do with autonomous weapons or domestic surveillance, neither appears to have an issue with the actual harms that their models perpetuate. Remember: Anthropic just pitched its technology as part of an ongoing Department of Defense drone swarm contest. It loves war! Its only issue was that there wasn’t a human in the loop somewhere.

Neither of these men deserves a shred of credit or celebration. Both of them were and are ready and willing to monetize war, as long as it sort-of-kind-of follows the law.

And rattling around at the bottom of this story is a dark problem caused by the fanciful language of both Altman and Amodei. When it’s about cloud software, Dario Amodei is more than willing to say that it will cause “mass elimination of jobs across technology, finance, law and consulting,” and that it will replace half of all white collar labor. When it’s time to raise money, Altman is excited to tell us that AI will surpass human intelligence in the next four years.

Now that lives are theoretically at stake, Altman vaguely cares about the things that an LLM “isn’t very good at.” Once Claude is used to choose places to bomb and people to kill, suddenly Anthropic cares that “frontier AI systems are simply not reliable enough,” and even then not so much as to stop a chatbot that hallucinates from being used in military scenarios.

Altman and Amodei want it both ways. They want to be pop culture icons that go on Jimmy Fallon and thought leaders who tell ghost stories about indeterminately-powerful software they sell through deceit and embellishment. They want to be pontificators and spokespeople, elder statesmen that children look up to, with the specious profiles and glowing publicity to boot. They want Claude or ChatGPT to be seen as capable of doing anything that any white collar worker is capable of, even if they have to lie to do so, helped by a tech and business media asleep at the wheel.

They also want to be as deeply-connected to the military industrial complex as Lockheed Martin or RTX (née Raytheon). Anthropic has been working with the DoD since 2024, and OpenAI was so desperate to take its place that Altman has immolated part of his reputation to do so.

Both of these companies are enthusiastic parts of America’s war machine. This is not an overstatement — Dario Amodei and Anthropic “believe deeply in the existential importance of using AI to defend the United States and other democracies, and to defeat our autocratic adversaries.” OpenAI and Sam Altman are “terrified of a world where AI companies act like they have more power than the government.”

For all the stories about Anthropic creating a “nation of benevolent AI geniuses,” Dario Amodei seems far more interested in creating a world dictated by what the United States of America deems to be legal or just, and providing services to help pursue those goals, as do OpenAI and, I’d argue, basically every AI lab.

We’re barely two weeks divorced from the agonizing press around Amanda Askell, Anthropic’s “resident philosopher,” whose job, per the Wall Street Journal, is to “teach Claude how to be good.” There are no mentions in any story I can find about what she might teach Claude about what targets are considered fair game in military combat.

WIRED’s profile of her starts with a title that aged like milk in the sun, saying that “the only thing standing between humanity and an AI apocalypse…is Claude?”

Tell that to the people in Tehran. I wonder what Askell taught Claude to say about war? I wonder what she taught Claude to say about democracy?

I wonder if she even gives a shit. I doubt it.

—

Generative AI isn’t intelligent, but it allows people to pretend that it is, especially when the people selling the software — Altman and Amodei — so regularly overstate what it can do.

By giving warmongers and jingoists the cover to “trust” this “authoritative” service (whether or not that’s the case, they can simply point to the specious press), the question of whether or not an attack was ethical is now, whenever any western democracy needs it to be, something that can be handed off to Claude, and justified with the cold, logical framing of “intelligence” and “data.”

None of this would be possible without the consistent repetition of the falsehoods peddled by OpenAI and Anthropic. Without this endless puffery and overstatements about the “power of AI,” we wouldn’t have armed conflicts dictated by what a chatbot can burp up from the files it’s fed. The deaths that follow will be a direct result of those who choose to continue to lie about what an LLM does.

Make no mistake, LLMs are still incapable of unique ideas and are still, outside of coding (which requires massive subsidies to even be kind of useful), questionable in their efficacy and untrustworthy in their outputs. Nothing about the military’s use of Claude makes it more useful or powerful than it was before — they’re probably just loading files into it and asking it long questions about things and going “huh” at the end.

The vulgar dishonesty of Altman and Amodei puts blood on both of their hands, and it’s the duty of every single member of the media to remind people of this whenever you discuss their software.

I get that you probably think I’m being dramatic, but tell me — do you think that the US military would’ve trusted LLMs had they not been marketed as capable of basically anything? Do you think any of this would’ve happened had there been an honest, realistic discussion of what AI can do today, and what it might do tomorrow?

I guess we’ll never know, and the people blown to bloody pieces at the other end of an LLM-generated stratagem won’t be alive to find out either.

2026-02-28 01:07:32

We have a global intelligence crisis, in that a lot of people are being really fucking stupid.

As I discussed in this week’s free piece, alleged financial analyst Citrini Research put out a truly awful screed called the “2028 Global Intelligence Crisis” — a slop-filled scare-fiction written and framed with the authority of deeply-founded analysis, so much so that it caused a global selloff in stocks.

At 7,000 words, you’d expect the piece to have some sort of argument or base in reality, but what it actually says is that “AI will get so cheap that it will replace everything, and then most white collar people won’t have jobs, and then they won’t be able to pay their mortgages, also AI will cause private equity to collapse because AI will write all software.”

This piece is written specifically to spook *and* ingratiate anyone involved in the financial markets with the idea that their investments are bad but investing in AI companies is good, and also that if they don't get behind whatever this piece is about (which is unclear!), they'll be subject to a horrifying future where the government creates a subsidy generated by a tax on AI inference (seriously). And, most damningly, its most important points about HOW this all happens are single sentences that read "and then AI becomes more powerful and cheaper too and runs on a device."

Part of the argument is that AI agents will use cryptocurrency to replace MasterCard and Visa. It’s dogshit. I’m shocked that anybody took it seriously.

The fact this moved markets should suggest that we have a fundamentally flawed financial system — and here’s an annotated version with my own comments.

This is the second time our markets have been thrown into the shitter based on AI booster hype. A mere week and a half ago, a software sell-off began because of the completely fanciful and imaginary idea that AI would now write all software.

I really want to be explicit here: AI does not threaten the majority of SaaS businesses, and they are jumping at ghost stories.

If I am correct, those dumping software stocks believe that AI will replace these businesses because people will be able to code their own software solutions. This is an intellectually bankrupt position, one that shows an alarming (and common) misunderstanding of very basic concepts. It is not just a matter of “enough prompts until it does this” — good (or even functional!) software engineering is technical, infrastructural, and philosophical, and the thing you are “automating” is not just the code that makes a thing run.

Let's start with the simplest, and least-technical way of putting it: even in the best-case scenario, you do not just type "Build Me A Salesforce Competitor" and it erupts, fully-formed, from your Terminal window. It is not capable of building it, but even if it were, it would need to actually be on a cloud hosting platform, and have all manner of actual customer data entered into it. Building software is not writing code and then hitting enter and a website appears. It requires all manner of infrastructural things (such as "how does a customer access it in a consistent and reliable way," "how do I make sure that this can handle a lot of people at once," and "is it quick to access," with the more-complex database systems requiring entirely separate subscriptions just to keep them connecting).

Software is a tremendous pain in the ass. You write code, then you have to make sure the code actually runs, and that code needs to run in some cases on specific hardware, and that hardware needs to be set up right, and some things are written in different languages, and those languages sometimes use more memory or less memory and if you give them the wrong amounts or forget to close the door in your code on something everything breaks, sometimes costing you money or introducing security vulnerabilities.

In any case, even for experienced, well-versed software engineers, maintaining software that involves any kind of customer data requires significant investments in compliance, including things like SOC-2 audits if the customer itself ever has to interact with the system, as well as massive investments in security.

And yet, the myth that LLMs are an existential threat to existing software companies has taken root in the market, sending the share prices of the legacy incumbents tumbling. A great example would be SAP, down 10% in the last month.

SAP makes ERP (Enterprise Resource Planning, which I wrote about in the Hater's Guide To Oracle) software, and has been affected by the sell-off. SAP is also a massive, complex, resource-intensive database-driven system that involves things like accounting, provisioning and HR, and is so heinously complex that you often have to pay SAP just to make it function (if you're lucky it might even do so). If you were to build this kind of system yourself, even with "the magic of Claude Code" (which I will get to shortly), it would be an incredible technological, infrastructural and legal undertaking.

Most software is like this. I’d say all software that people rely on is like this. I am begging you, pleading with you to think about how much you trust the software that’s on every single thing you use, and what you do when a piece of software stops working, and how you feel about the company that does that. If your money or personal information touches it, they’ve had to go through all sorts of shit that doesn’t involve the code to bring you the software.

Sidenote: I want to be clear that there is nothing good about this. To quote a friend of mine — an editor at a large tech publication — “Oracle is a lawfirm with a software company attached.” SaaS companies regularly get by through scurrilous legal means and bullshit contracts, and their features are, in many cases, only as good as they need to be. Regardless, my point is that you will not just “make your own software.”

Any company of a reasonable size would likely be committing hundreds of thousands if not millions of dollars of legal and accounting fees to make sure it worked, engineers would have to be hired to maintain it, and you, as the sole customer of this massive ERP system, would have to build every single new feature and integration you want. Then you'd have to keep it running, this massive thing that involves, in many cases, tons of personally identifiable information. You'd also need to make sure, without fail, that this system that involves money was aware of any and all currencies and how they fluctuate, because that is now your problem. Mess up that part and your system of record could massively over or underestimate your revenue or inventory, which could destroy your business.

If that happens, you won't have anyone to sue. When bugs happen, you'll have someone whose job it is to fix it that you can fire, but replacing them will mean finding a new person to fix the mess that another guy made.

And then we get to the fact that building stuff with Claude Code is not that straightforward. Every example you've read about somebody being amazed by it has built a toy app or website that's very similar to many open source projects or website templates that Anthropic trained its models on.

Anyone paying for a piece of SaaS is paying for both access to the product and a transfer of the inherent risk or chaos of running software that involves people or money. Claude Code does not actually build unique software. You can say "create me a CRM," but whatever CRM it pops out will not magically jump onto Amazon Web Services, nor will it magically be efficient, or functional, or compliant, or secure, nor will it be differentiated at all from, I assume, the open source or publicly-available SaaS it was trained on. You really still need engineers, if not more of them than you had before.

It might tell you it's completely compliant and that it will run like a hot knife through butter — but LLMs don’t know anything, and you cannot be sure Claude is telling the truth as a result. Is your argument that you’d still have a team of engineers (so they know what the outputs mean), but they’d be working on replacing your SaaS subscription? You’re basically becoming a startup with none of the benefits.

To quote Nik Suresh, an incredibly well-credentialed and respected software engineer (author of I Will Fucking Piledrive You If You Mention AI Again), “...for some engineers, [Claude Code] is a great way to solve certain, tedious problems more quickly, and the responsible ones understand you have to read most of the output, which takes an appreciable fraction of the time it would take to write the code in many cases. Claude doesn't write terrible code all the time, it's actually good for many cases because many cases are boring. You just have to read all of it if you aren't a fucking moron because it periodically makes company-ending decisions.”

Just so you know, “company-ending decisions” could start with your vibe-coded Stripe clone leaking user credit card numbers or social security numbers because you asked it to “just handle all the compliance stuff.” Even if you have very talented engineers, are those engineers talented in the specifics of, say, healthcare data or finance? They’re going to need to be to make sure Claude doesn’t do anything stupid!

So, despite all of this being very obvious, it’s clear that the markets and an alarming number of people in the media simply do not know what they are talking about. The “AI replaces software” story is literally “Anthropic has released a product and now the resulting industry is selling off,” such as when it launched a cybersecurity tool that could check for vulnerabilities (a product that has existed in some form for nearly a decade) causing a sell-off in cybersecurity stocks like Crowdstrike — you know, the one that had a faulty bit of code cause a global cybersecurity incident that lost the Fortune 500 billions, and led to Delta Air Lines suspending over 1,200 flights over six long days of disruption.

There is no rational basis for anything about this sell-off other than that our financial media and markets do not appear to understand the very basic things about the stuff they invest in. Software may seem complex, but (especially in these cases) it’s really quite simple: investors are conflating “an AI model can spit out code” with “an AI model can create the entire experience of what we know as ‘software,’ or is close enough that we have to start freaking out.”

This is thanks to the intentionally-deceptive marketing peddled by Anthropic and validated by the media. In a piece from September 2025, Bloomberg reported that Claude Sonnet 4.5 could “code on its own for up to 30 hours straight,” a statement directly from Anthropic repeated by other outlets that added that it did so “on complex, multi-step tasks,” none of which were explained. The Verge, however, added that apparently Anthropic “coded a chat app akin to Slack or Teams,” and no, you can’t see it, or know anything about how much it costs or its functionality. Does it run? Is it useful? Does it work in any way? What does it look like? We have absolutely no proof this happened other than them saying it, but because the media repeated it, it’s now a fact.

Perhaps it’s not a particularly novel statement, but it’s becoming kind of obvious that maybe the people with the money don’t actually know what they’re doing, which will eventually become a problem when they all invest in the wrong thing for the wrong reasons.

SaaS (Software as a Service, which almost always refers to business software) stocks became a hot commodity because they were perpetual growth machines with giant sales teams that existed only to make numbers go up, leading to a flurry of investment based on the assumption that all numbers will always increase forever, and every market is as giant as we want. Not profitable? No problem! You just had to show growth.

It was easy to raise money because everybody saw a big, obvious path to liquidity, either from selling to a big firm or taking the company public…

…in theory.

Per Victor Basta, between 2014 and 2017, the number of VC rounds in technology companies halved with a much smaller drop in funding, and a big part of that was the collapse of companies describing themselves as SaaS, which dropped by 40% in the same period. In a 2016 chat with VC David Yuan, Gainsight CEO Nick Mehta added that “the bar got higher and weights shifted in the public markets,” noting that profitability was now becoming more important to investors.

Per Mehta, one savior had arrived: Private Equity, with Thoma Bravo buying Blue Coat Systems in 2011 for $1.3 billion (which had been backed by a Canadian teachers’ pension fund!), Vista Equity buying Tibco for $4.3 billion in 2014, and Permira Advisers (along with the Canada Pension Plan Investment Board) buying Informatica for $5.3 billion (with participation from both Salesforce and Microsoft) in 2015, 16 years after its first IPO. In each case, these companies were purchased using debt that immediately got dumped onto the company’s balance sheet, a structure known as a leveraged buyout.

In simple terms, you buy a company with money that the company you just bought has to pay off. The company in question also has to grow like gangbusters to keep up with both that debt and the private equity firm’s expectations. And instead of being an investor with a board seat who can yell at the CEO, it’s quite literally your company, and you can do whatever you want with (or to) it.

Yuan added that the size of these deals made the acquisitions problematic, as did their debt-filled structures:

Recent SaaS PE deals are different. At more than six times revenues, unless you can increase EBITDA margins to over 40%, it’s hard to get your arms around the effective EBITDA multiple. It seems the new breed of PE buyer is taking a bet that SaaS companies will exit on revenue multiples and show rapid growth over many years. Both are arguably new bets for private equity. It’s not about financial or cost engineering. They are starting to look a bit more like us in the growth investing industry and taking a bet on category leadership and growth

…

So while revenue multiples are accepted, they are viewed as risky by private equity. Take Salesforce.com, the bellwether of SaaS. Over the last 10 years, it’s traded below 2 times next-twelve-months (NTM) revenues and over 10 times NTM revenues. Even in the past 12 months, it’s traded as low as 4.7 times NTM multiples and as high as close to 9 times NTM multiples. In this example, if the private equity firm paid 9 times NTM revenues and multiples traded down to 4.7 times NTM, their $300 million in equity would be wiped out. In fact, they would owe the bank close to $100 million. Now it’s not that bad, as these companies are growing revenue at the same time. But it does show you why private equity has largely been wary of revenue multiples and have relied on EBITDA and free cash flow multiples.
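To make Yuan's arithmetic concrete, here is a minimal Python sketch of a leveraged buyout hit by multiple compression. The quote gives the entry multiple (9x), the lower multiple (4.7x) and the $300 million of equity, but it does not give the company's revenue. The $93 million NTM revenue below is my assumption, picked only because it roughly reproduces the "close to $100 million" shortfall, and the function name is made up for illustration. It also holds revenue flat, which the quote itself admits is pessimistic.

```python
def equity_after_multiple_change(ntm_revenue, entry_multiple, exit_multiple, equity_invested):
    """Return (debt, equity_value) in $ millions after the revenue multiple moves.

    Assumes the whole purchase price above the equity cheque is funded with debt
    and that revenue does not grow between purchase and revaluation.
    """
    purchase_price = ntm_revenue * entry_multiple
    debt = purchase_price - equity_invested
    value_now = ntm_revenue * exit_multiple
    # Debt holders get paid first, so equity is whatever is left over (it can go negative).
    return debt, value_now - debt


# ASSUMPTION: $93M of NTM revenue is hypothetical, chosen to fit the quoted figures.
debt, equity_now = equity_after_multiple_change(
    ntm_revenue=93.0, entry_multiple=9.0, exit_multiple=4.7, equity_invested=300.0
)
print(f"debt: ${debt:.0f}M, equity after compression: ${equity_now:.1f}M")
```

Under those assumed inputs the $300 million of equity is gone and the company is worth roughly $100 million less than what it owes, which is the scenario Yuan is describing.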

Symantec would acquire Blue Coat for $4.65 billion in 2016, for just under a 4x return. Things were a little worse for Tibco. Vista Equity Partners tried to sell it in 2021 amid a surge of other M&A transactions, with the solution (never change, private equity!) being to buy Citrix for $16.5 billion (a 30% premium on its stock price) and merge it with Tibco, magically fixing the problem of “what do we do with Tibco?” by hiding it inside another transaction. Informatica eventually had a $10 billion IPO in 2021, which was flat in its first day of trading, never really did more than stay at its IPO price, then sold to Salesforce in 2025 at an equity value of $8 billion, which seems fine but not great until you realize that, with inflation, the $5.3 billion that Permira invested in 2015 was about $7.15 billion in 2025’s money.
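Here is a rough Python sketch of that Informatica math, using only the three figures above. It is a simplification on my part: it treats the $5.3 billion purchase price and the $8 billion equity value as directly comparable and ignores debt, dividends and anything that happened at the 2021 IPO. The ten-year holding period (2015 to 2025) and the variable names are also my assumptions.

```python
# Figures from the text, in $ billions.
invested_2015 = 5.3           # what Permira and co. paid in 2015
invested_in_2025_money = 7.15 # the same sum adjusted for inflation, per the text
sale_2025 = 8.0               # equity value of the Salesforce deal

# ASSUMPTION: a simple ten-year hold with one cash outflow and one cash inflow.
years_held = 10

implied_inflation = invested_in_2025_money / invested_2015
nominal_multiple = sale_2025 / invested_2015
real_multiple = sale_2025 / invested_in_2025_money
real_annual_return = real_multiple ** (1 / years_held) - 1

print(f"implied cumulative inflation: {implied_inflation - 1:.1%}")
print(f"nominal multiple: {nominal_multiple:.2f}x")
print(f"real multiple: {real_multiple:.2f}x")
print(f"real annualized return: {real_annual_return:.2%}")
```

On these simplified terms, a deal that looks like a 1.5x return in nominal dollars works out to a little over 1.1x in real terms, or roughly 1% a year.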

In every case, the assumption was very simple: these businesses would grow and own their entire industries, the PE firm would be the reason they did this (by taking them private and filling them full of debt while making egregious growth demands), and the meteoric growth of SaaS would continue in perpetuity.

Yet the real year that broke things was 2021. As everybody returned to the real world, consumer and business spending skyrocketed, leading (per Bloomberg) to a massive surge in revenues that convinced private equity to shove even more cash and debt up the ass of SaaS:

The sector has been a hugely popular target for buyout firms and their private credit cousins. From 2015 to 2025, more than 1,900 software companies were taken over by private equity buyers in transactions valued at more than $440 billion, according to data compiled by Bloomberg.

Deals were easily waved through most investment committees because the model was simple. Revenues are “sticky” because the tech is embedded into businesses, helping with everything from payroll to HR, and the subscription fee model meant predictable cash flows.

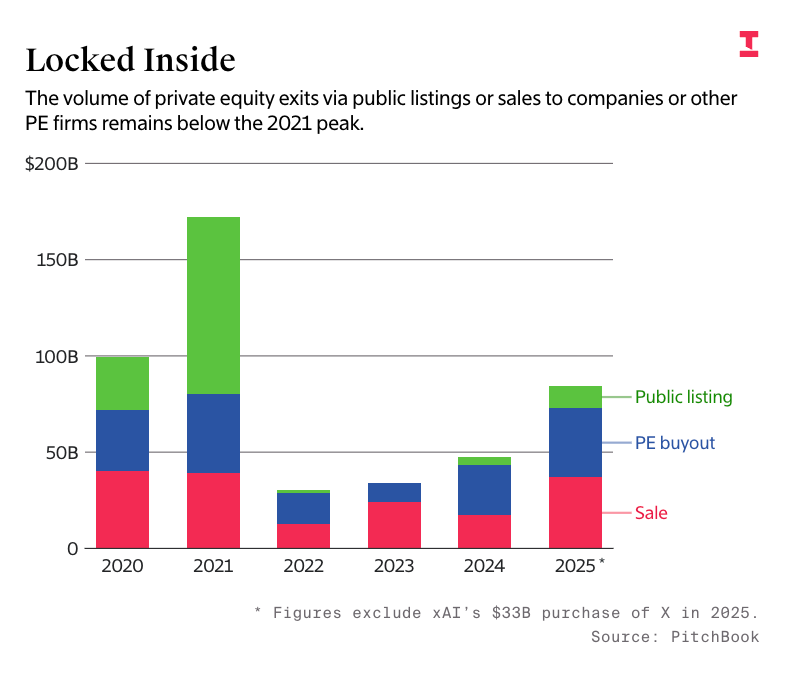

Bloomberg is a little nicer than I am, so they’re not just writing “deals were waved through because everybody assumed that software grows forever and nobody actually knew a thing about the technology or why it would grow so fast.” Unsurprisingly, this didn’t turn out to be true. Per The Information, PE firms invested in or bought 1,167 U.S. software companies for $202 billion, and usually hold investments for three to five years. Thankfully, they also included a chart to show how badly this went:

2021 was the year of overvaluation, and (per Jason Lemkin of SaaStr) 60% of unicorns (startups with $1bn+ valuations) hadn’t raised funds in years. The massive accumulated overinvestment, combined with no obvious pathway to an exit, led to people calling these companies “Zombie Unicorns”:

A reckoning that has been looming for years is becoming painfully tangible. In 2021 more than 354 companies received billion-dollar valuations, thus achieving unicorn status. Only six of them have since held IPOs, says Ilya Strebulaev, a professor at Stanford Graduate School of Business. Four others have gone public through SPACs, and another 10 have been acquired, several for less than $1 billion.

Welcome to the era of the zombie unicorn. There are a record 1,200 venture-backed unicorns that have yet to go public or get acquired, according to CB Insights, a researcher that tracks the venture capital industry. Startups that raised large sums of money are beginning to take desperate measures. Startups in later stages are in a particularly difficult position, because they generally need more money to operate—and the investors who’d write checks at billion-dollar-plus valuations have gotten more selective. For some, accepting unfavorable fundraising terms or selling at a steep discount are the only ways to avoid collapsing completely, leaving behind nothing but a unicorpse.

The problem, to quote The Information, is that “PE firms don’t want to lock in returns that are lower than what they promised their backers, say some executives at these firms,” and “many enterprise software firms’ revenue growth has slowed.”

Per CNBC in November 2025, private equity firms were facing the same zombie problem:

These so-called “zombie companies” refer to businesses that aren’t growing, barely generate enough cash to service debt and are unable to attract buyers even at a discount. They are usually trapped on a fund’s balance sheet beyond its expected holding period. “Now, as interest rates were rising, people felt they were stuck with businesses that were slightly worthless, but they couldn’t really sell them … So you are in this awful situation where people throw around the word zombie companies,” Oliver Haarmann, founding partner of private investment firm Searchlight Capital Partners, told CNBC’s “Squawk Box Europe” on Tuesday.

Per Jason Lemkin, private equity is sitting on its largest collection of companies held for longer than four years since 2012, with McKinsey estimating that more than 16,000 companies (more than 52% of the total buyout-backed inventory) had been held by private equity for more than four years, the highest on record.

In very simple terms, there are hundreds of billions of dollars’ worth of tech companies sitting in the wings of private equity firms that they’re desperate to sell, with the only customers being big tech firms, other private equity firms, and public offerings in one of the slowest IPO markets in history.

Investing used to be easy. There were so many ideas for so many companies, companies that could be worth billions of dollars once they’d been fattened up with venture capital and/or private equity. There were tons of acquirers, it was easy to take them public, and all you really had to do was exist and provide capital. Companies didn’t have to be good, they just had to look good enough to sell.

This created a venture capital and private equity industry based on symbolic value, and chased out anyone who thought too hard about whether these companies could actually survive on their own merits.

Per PitchBook, since 2022, 70% of VC-backed exits were valued at less than the capital put in, with more than a third of them being startups buying other startups in 2024. Private equity firms are now holding assets for an average of 7 years.

McKinsey also added one horrible detail for the overall private equity market, emphasis mine:

PE returns have not only trended downward over time; they appear to be at a historic low. Buyout fund IRRs (internal rate of return) reached a post-2002 trough between 2022 and 2025, averaging 5.7 percent on a pooled basis and ranking as the second-lowest period on a median basis at 5.4 percent. This deterioration reflects a combination of paying more (entry valuations are higher), macroeconomic uncertainty (inflation and higher interest rates especially hurt overall returns), and a persistently challenged realization environment (assets are harder to sell).
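A quick Python sketch shows why holding assets for longer drags IRR down even when the eventual sale price is the same. The 2x exit multiple is hypothetical, the four- and seven-year holds are loosely taken from the holding periods mentioned earlier, and the model is the simplest possible one (one cheque in, one cheque out, no fees or interim cash flows), so treat it as an illustration rather than how any fund actually reports returns.

```python
def single_exit_irr(multiple: float, years: float) -> float:
    """IRR for one outflow at year 0 and one inflow of `multiple` times that amount at `years`."""
    return multiple ** (1.0 / years) - 1.0


# ASSUMPTION: a 2x exit multiple, chosen purely for illustration.
HYPOTHETICAL_MULTIPLE = 2.0

irr_by_hold = {years: single_exit_irr(HYPOTHETICAL_MULTIPLE, years) for years in (4, 7)}
for years, irr in irr_by_hold.items():
    print(f"{years}-year hold at {HYPOTHETICAL_MULTIPLE:.0f}x: IRR of {irr:.1%}")
```

Doubling your money in four years is an IRR of about 19%. Doubling it in seven is about 10%, and that is before the exit multiple itself gets worse.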

You see, private equity is fucking stupid, doesn’t understand technology, doesn’t understand business, and by setting up its holdings with debt based on the assumption of unrealistic growth, it has created a crisis for both software companies and the greater tech industry.

On February 6, more than $17.7 billion of US tech company loans dropped to “distressed” trading levels (as in trading as if traders don’t believe they’ll get paid, per Bloomberg), growing the overall group of distressed tech loans to $46.9 billion, “dominated by firms in SaaS.” These firms included huge investments like Thoma Bravo’s Dayforce (which it purchased two days before this story ran for $12.3 billion) and Calabrio (which it acquired for “over” $1 billion in April 2021 and merged with Verint in November 2025).

This isn’t just about the shit they’ve bought, but the destruction of the concept of “value” in the tech industry writ large. “Value” was not based on revenues, or your product, or anything other than your ability to grow and, ideally, trap as many customers as possible, with the vague sense that there would always be infinitely more money every year to spend on software.

Revenue growth came from massive sales teams compensated with heavy commissions and yearly price increases, except things have begun to sour, with renewals now taking twice as long to complete, and overall SaaS revenue growth slowing for years.

To put it simply, much of the investment in software was based on the idea that software companies will always grow forever, and SaaS companies — which have “sticky” recurring revenues — would be the standard-bearer.

When I got into the tech industry in 2008, I immediately became confused about the number of unprofitable or unsustainable companies that were worth crazy amounts of money, and for the most part I’d get laughed at by reporters for being too cynical.

For the best part of 20 years, software startups have been seen as eternal growth-engines. All you had to do was find a product-market fit, get a few hundred customers locked in, up-sell them on new features and grow in perpetuity as you conquered a market. The idea was that you could just keep pumping them with cash, hire as many pre-sales (technical person who makes the sale), sales and customer experience (read: helpful person who also loves to tell you more stuff) people as you need to both retain customers and sell them as much stuff as you need.

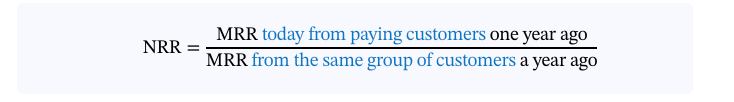

Innovation was, as you’d expect, judged entirely by revenue growth and net revenue retention:

In practice, this sounds reasonable: what percentage of your revenue are you making year-over-year? The problem is that this is a very easy-to-game stat, especially if you’re using it to raise money, because you can move customer billing periods around to make sure that things all continue to look good. Even then, per research by Jacco van der Kooij and Dave Boyce, net revenue retention is dropping quarter over quarter.
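For anyone who hasn't had to calculate it, here is a minimal Python sketch of net revenue retention using the commonly-cited formula: recurring revenue from customers you already had at the start of the period, plus upsells, minus downgrades and cancellations, divided by the starting figure. Every number below is made up, and the "deferred churn" scenario is my own illustration of how timing can flatter the stat, not a description of any specific company's accounting.

```python
def net_revenue_retention(starting_arr, expansion, contraction, churn):
    """NRR for a cohort of existing customers over one period (all inputs in the same currency)."""
    return (starting_arr + expansion - contraction - churn) / starting_arr


# HYPOTHETICAL cohort: $1,000k of starting ARR, $100k upsold, $20k downgraded, $150k cancelled.
honest_nrr = net_revenue_retention(1_000, expansion=100, contraction=20, churn=150)

# Same cohort, but $100k of the cancellations land just after the reporting period closes
# (for example because the billing period was moved), so only $50k of churn is counted.
flattering_nrr = net_revenue_retention(1_000, expansion=100, contraction=20, churn=50)

print(f"NRR as it happened: {honest_nrr:.0%}")
print(f"NRR with churn pushed out: {flattering_nrr:.0%}")
```

Nothing about the underlying business changed between the two numbers. One just reads as a shrinking customer base and the other reads as growth.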

The other problem is that the entire process of selling software has separated from the end-user, which means that products (and sales processes) are oriented around selling that software to the person responsible for buying it rather than those doomed to use it.

Nik Suresh’s Brainwash An Executive Today describes a conversation with the Chief Technology Officer of a company with over 10,000 people, who had asked whether “data observability,” a thing that they did not (and would not need to, in their position) understand, was a problem, and whether Nik had heard of Monte Carlo. It turned out that the executive in question had no idea what Monte Carlo or data observability was, but because they’d heard about it on LinkedIn, it was now all they could think about.

This is the environment that private equity bought into — a seemingly-eternal growth engine with pliant customers desperate to spend money on a product that didn’t have to be good, just functional-enough. These people do not know what they are talking about or why they are buying these companies other than being able to mumble out shit like “ARR” and “NRR+” and “TAM” and “CAC” and “ARPA” in the right order to convince themselves that something is a good idea without ever thinking about what would happen if it wasn’t. This allowed them to stick to the “big picture,” meaning “numbers that I can look at rather than any practical experience in software development.”

While I guess the concept of private equity isn’t morally repugnant, its current form (which includes venture capital) has led the modern state of technology into the fucking toilet. An initial flux of viable businesses, frothy markets and zero interest rates combined to make it deceptively easy to raise money and deploy capital, leading to brainless investing, the death of logical due diligence, and potentially ruinous consequences for everybody involved.

Private equity spent decades buying a little bit of just about everything, enriching the already-rich by engaging with the most vile elements of the Rot Economy’s growth-at-all-costs mindset. Its success is predicated on near-perpetual levels of liquidity and growth in both its holdings and the holdings of those who exist only to buy their stock, and on a tech and business media that doesn’t think too hard about the reality of the problems their companies claim to solve.

The reckoning that’s coming is one built specifically to target the ignorant hubris that made them rich.

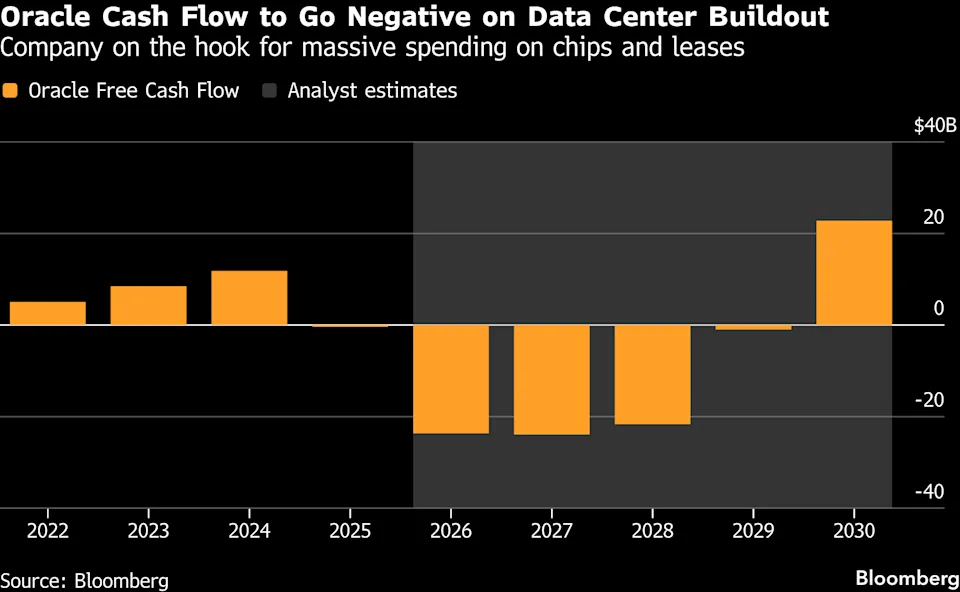

Private equity has yet to be punished by its limited partners and banks for investing in zombie assets, allowing it to pile into the unprofitable data centers underpinning the AI bubble, meaning that companies like Apollo, Blue Owl and Blackstone — all of whom participated in the ugly $10.2 billion acquisition of Zendesk in 2022 (after it rejected another PE offer of $17 billion in 2021) that included $5 billion in debt — have all become heavily-leveraged in giant, ugly debt deals covering assets that are obsolete to useless in a few years.

Alongside the fumbling ignorance of private equity sits the $3 trillion private credit industry, an equally-putrid, growth-drunk, and poorly-informed industry run with the same lax attention to detail and Big Brain Number Models that can justify just about any investment they want. Their half-assed due diligence led to billions of dollars of loans being given to outright frauds like First Brands, Tricolor and PosiGen, and, to paraphrase JP Morgan’s Jamie Dimon, there are absolutely more fraudulent cockroaches waiting to emerge.

You may wonder why this matters, as all of this is private credit.

Well, they get their money from banks. Big banks. In fact, according to the Federal Reserve Bank of Boston, about 14% ($300 billion) of large banks’ total loan commitments to non-banking financial institutions in 2023 went to private equity and private credit. Moody’s pegs the number at around $285 billion, with an additional $340 billion in unused-yet-committed cash waiting in the wings.

Oh, and they get their money from you. Pension funds are among some of the biggest backers of private credit companies, with the New York City Employees Retirement System and CalPERS increasing their investments.

Today, I’m going to teach you all about private equity, private credit, and why years of reframing “value” to mean “growth” may genuinely threaten the global banking system, as well as how effectively every company raises money. An entirely-different system exists for the wealthy to raise and deploy capital, one with flimsy due diligence, a genuine lack of basic industrial knowledge, and hundreds of billions of dollars of crap it can’t sell.

These people have been able to raise near-unlimited capital to do basically anything they want because there was always somebody stupid enough to buy whatever they were selling, and they have absolutely no plan for what happens when their system stops working.

They’ll loan to anyone or invest in anything that confirms their biases, and those biases are equal parts moronic and malevolent. Now they’re investing teachers’ pensions and insurance premiums in unprofitable and unsustainable data centers, all because they have no idea what a good investment actually looks like.

Welcome to the Hater’s Guide To Private Equity, or “The Stupidest Assholes In The Room.”

2026-02-27 00:22:58

Editor's note: a previous version of this newsletter went out with Matt Hughes' name on it. He's my editor, who went over it for spelling errors and loaded it into the CMS. Sorry!

Hey all! I’m going to start hammering out free pieces again after a brief hiatus, mostly because I found myself trying to boil the ocean with each one, fearing that if I regularly emailed you you’d unsubscribe. I eventually realized how silly that was, so I’m back, and will be back more regularly. I’ll treat it like a column, which will be both easier to write and a lot more fun.

As ever, if you like this piece and want to support my work, please subscribe to my premium newsletter. It’s $70 a year, or $7 a month, and in return you get a weekly newsletter that’s usually anywhere from 5000 to 18,000 words, including vast, extremely detailed analyses of NVIDIA, Anthropic and OpenAI’s finances, and the AI bubble writ large. I am regularly several steps ahead in my coverage, and you get an absolute ton of value. In the bottom right hand corner of your screen you’ll see a red circle — click that and select either monthly or annual. Next year I expect to expand to other areas too. It’ll be great. You’re gonna love it.

Before we go any further, I want to remind everybody I’m not a stock analyst nor do I give investment advice.

I do, however, want to say a few things about NVIDIA and its annual earnings report, which it published on Wednesday, February 25:

NVIDIA’s entire future is built on the idea that hyperscalers will buy GPUs at increasingly-higher prices and at increasingly-higher rates every single year. It is completely reliant on maybe four or five companies being willing to shove tens of billions of dollars a quarter directly into Jensen Huang’s wallet. If anything changes here — such as difficulty acquiring debt or investor pressure cutting capex — NVIDIA is in real trouble, as it’s made over $95 billion in commitments to build out for the AI bubble.

Yet the real gem was this part:

We are finalizing an investment and partnership agreement with OpenAI. There is no assurance that we will enter into an investment and partnership agreement with OpenAI or that a transaction will be completed.

Hell yeah dude! After misleading everybody that it intended to invest $100 billion in OpenAI last year (as I warned everybody about months ago, the deal never existed and is now effectively dead), NVIDIA was allegedly “close” to investing $30 billion. One would think that NVIDIA would, after Huang awkwardly tried to claim that the $100 billion was “never a commitment,” say with its full chest how badly it wanted to support OpenAI and how intentionally it would do so.

Especially when you have this note in your 10-K:

We estimate that one AI research and deployment company contributed to a meaningful amount of our revenue purchasing cloud services from our customers in fiscal year 2026

What a peculiar world we live in. Apparently NVIDIA is “so close” to a “partnership agreement” too, though it’s important to remember that Altman, Brockman, and Huang went on CNBC to talk about the last deal and that never came together.

All of this adds a little more anxiety to OpenAI's alleged $100 billion funding round. As The Information reports, Amazon's alleged $50 billion investment will actually be $15 billion, with the next $35 billion contingent on AGI or an IPO:

Under the terms of the investment, which are still being negotiated, Amazon would initially invest $15 billion into OpenAI, these people said. The other $35 billion could hinge on OpenAI reaching AGI or going public, the people said. The proposed Amazon investment is part of OpenAI’s current funding round, which could top $100 billion at a valuation of $730 billion before the financing.

And that $30 billion from NVIDIA is shaping up to be a Klarna-esque three-installment payment plan:

In addition, SoftBank and Nvidia each plan to invest $30 billion in three installments through the year as part of the round, said the people. Microsoft had been expected to invest low billions of dollars, The Information previously reported, but it could invest a smaller amount or none at all, according to two of the people.

A few thoughts:

Anyway, on to the main event.

New term: analyslop, when somebody writes a long, specious piece of writing with few facts or actual statements with the intention of it being read as thorough analysis.

This week, alleged financial analyst Citrini Research (not to be confused with Andrew Left’s Citron Research) put out a truly awful piece called the “2028 Global Intelligence Crisis,” slop-filled scare-fiction written and framed with the authority of deeply-founded analysis, so much so that it caused a global selloff in stocks.

This piece (if you haven’t read it, please do so using my annotated version) spends 7000 or more words telling the dire tale of what would happen if AI made an indeterminately-large number of white collar workers redundant.

It isn’t clear what exactly AI does, who makes the AI, or how the AI works, just that it replaces people, and then bad stuff happens. Citrini insists that this “isn’t bear porn or AI-doomer fan-fiction,” but that’s exactly what it is — mediocre analyslop framed in the trappings of analysis, sold on a Substack with “research” in the title, specifically written to spook and ingratiate anyone involved in the financial markets.

Its goal is to convince you that AI (non-specifically) is scary, that your current stocks are bad, and that AI stocks (unclear which ones those are, by the way) are the future. Also, find out more for $999 a year.

Let me give you an example:

It should have been clear all along that a single GPU cluster in North Dakota generating the output previously attributed to 10,000 white collar workers in midtown Manhattan is more economic pandemic than economic panacea.

The goal of a paragraph like this is for you to say “wow, that’s what GPUs are doing now!” It isn’t, of course. The majority of CEOs report little or no return on investment from AI, with a study of 6000 CEOs across the US, UK, Germany and Australia finding that “more than 80% [detected] no discernable impact from AI on either employment or productivity.” Nevertheless, you read “GPU” and “North Dakota” and you think “wow! That’s a place I know, and I know that GPUs power AI!”

I know a GPU cluster in North Dakota — CoreWeave’s one with Applied Digital that has debt so severe that it loses both companies money even if they have the capacity rented out 24/7. But let’s not let facts get in the way of a poorly-written story.

I don’t need to go line-by-line (mostly because I’ll end up writing a legally-actionable threat), but I need you to know that most of this piece’s arguments come down to magical thinking and utterly empty prose.

For example, how does AI take over the entire economy?

AI capabilities improved, companies needed fewer workers, white collar layoffs increased, displaced workers spent less, margin pressure pushed firms to invest more in AI, AI capabilities improved…

That’s right, they just get better. No need to discuss anything happening today. Even AI 2027 had the balls to start making stuff up about “OpenBrain” or whatever.

This piece literally just says stuff, including one particularly-egregious lie:

In late 2025, agentic coding tools took a step function jump in capability.

A competent developer working with Claude Code or Codex could now replicate the core functionality of a mid-market SaaS product in weeks. Not perfectly or with every edge case handled, but well enough that the CIO reviewing a $500k annual renewal started asking the question “what if we just built this ourselves?”

This is a complete and utter lie. A bald-faced lie. This is not something that Claude Code can do. The fact that we have major media outlets quoting this piece suggests that those responsible for explaining how things work don’t actually bother to do any of the work to find out, and it’s both a disgrace and embarrassment for the tech and business media that these lies continue to be peddled.

I’m now going to quote part of my upcoming premium (the Hater’s Guide To Private Equity, out Friday), because I think it’s time we talked about what Claude Code actually does.

It is not just a matter of “enough prompts until it does this.” Good (or even functional!) software engineering is technical, infrastructural and philosophical, and the thing you are “automating” is not just the code that makes a thing run.

Let's start with the simplest and least-technical way of putting it: even in the best-case scenario, you do not just type "Build Me A Salesforce Competitor" and it erupts, fully-formed, from your Terminal window. It is not capable of building it, but even if it were, it would need to actually be on a cloud hosting platform, and have all manner of actual customer data entered into it.

Building software is not writing code, hitting enter, and watching a website appear. It requires all manner of infrastructural things (such as "how does a customer access it in a consistent and reliable way," "how do I make sure that this can handle a lot of people at once," and "is it quick to access," with the more-complex database systems requiring entirely separate subscriptions just to keep them connecting).

Software is a tremendous pain in the ass. You write code, then you have to make sure the code actually runs, and that code needs to run in some cases on specific hardware, and that hardware needs to be set up right, and some things are written in different languages, and those languages sometimes use more memory or less memory and if you give them the wrong amounts or forget to close the door in your code on something everything breaks, sometimes costing you money or introducing security vulnerabilities.

In any case, even for experienced, well-versed software engineers, maintaining software that involves any kind of customer data requires significant investments in compliance, including things like SOC-2 audits if the customer itself ever has to interact with the system, as well as security.

And yet, the myth that LLMs are an existential threat to existing software companies has taken root in the market, sending the share prices of the legacy incumbents tumbling. A great example would be SAP, down 10% in the last month.

SAP makes ERP software (Enterprise Resource Planning, which I wrote about in the Hater's Guide To Oracle), and has been affected by the sell-off. SAP is also a massive, complex, resource-intensive database-driven system that involves things like accounting, provisioning, and HR, and is so heinously complex that you often have to pay SAP just to make it function (if you're lucky it might even do so). If you were to build this kind of system yourself, even with "the magic of Claude Code" (which I will get to shortly), it would be an incredible technological, infrastructural, and legal undertaking.

Most software is like this. I’d say all software that people rely on is like this. I am begging you, pleading with you, to think about how much you trust the software that’s on every single thing you use, and what you do when a piece of software stops working, and how you feel about the company that makes it. If your money or personal information touches it, they’ve had to go through all sorts of shit that doesn’t involve the code to bring you the software.

Sidenote: I want to be clear that there is nothing good about this. To quote a friend of mine — an editor at a large tech publication — “Oracle is a law firm with a software company attached.” SaaS companies regularly get by through scurrilous legal means and bullshit contracts, and their features are, in many cases, only as good as they need to be. Regardless, my point is that you will not just “make your own software.”