2026-02-01 18:40:38

A simple character-based heuristic outperforms fixed timers and requires zero configuration. The formula threshold = max(5, min(chars × 1.2, 120)) handles 80%+ of cases without any user tuning, while an adaptive variant using exponential moving averages can personalize detection after a brief warm-up period. For applications requiring maximum robustness, the Modified Z-Score method using Median Absolute Deviation provides statistically rigorous outlier detection that remains stable even with contaminated data.

The core problem with fixed AFK timers like the current 60-second approach is that they ignore text length entirely. A 4-character line like 「ああ...」 legitimately takes 2-3 seconds to read, so a 60-second threshold is absurdly generous: it could count up to 57 seconds of idle time toward reading statistics. Conversely, a 200-character passage might genuinely require 90+ seconds for a learner, yet would be incorrectly flagged as AFK.

Each approach below solves the same problem with increasing sophistication. Choose based on your implementation constraints and accuracy requirements.

Algorithm 1: Multi-Tier Character Heuristic requires no historical data and works immediately. Algorithm 2: EMA Adaptive Baseline learns individual reading speeds after 5-10 text boxes. Algorithm 3: Modified Z-Score with MAD provides the most statistically robust detection but requires maintaining a rolling history window.

| Approach | Lines of Code | Accuracy | Adapts to User | Cold Start |
|---|---|---|---|---|
| Character Heuristic | ~10 | Good (80%) | No | Instant |
| EMA Adaptive | ~40 | Very Good (90%) | Yes | 5-10 samples |
| Modified Z-Score | ~60 | Excellent (95%) | Yes | 10-20 samples |

This approach requires zero configuration and no warm-up period. It works by scaling the AFK threshold proportionally to text length, bounded by sensible minimum and maximum values.

```python
def is_afk(time_seconds: float, char_count: int) -> bool:
    """
    Simple heuristic that works without any learning.

    Returns True if the reading time indicates user was likely AFK.
    """
    # Minimum threshold: even "ああ" needs reaction time
    MIN_THRESHOLD = 5

    # Maximum threshold: beyond this is definitely AFK
    MAX_THRESHOLD = 120

    # Time allowance per character (accounts for reading + processing)
    # 1.2 sec/char = 50 char/min, which leaves room for lookups and thinking time
    SECONDS_PER_CHAR = 1.2

    threshold = max(MIN_THRESHOLD, min(char_count * SECONDS_PER_CHAR, MAX_THRESHOLD))
    return time_seconds > threshold
```

Why these specific values? Japanese reading speeds vary dramatically: native speakers read 500-1,200 characters per minute (8-20 char/sec), while intermediate learners read 150-300 char/min (2.5-5 char/sec). The 1.2 seconds per character corresponds to 50 char/min, which is about 3× slower than the low end of the intermediate range (150 char/min, or 0.4 sec/char); that 3× margin leaves room for dictionary lookups, re-reading, and processing time. The 5-second minimum handles reaction time for clicking through dialogue, while the 120-second cap prevents absurdly long thresholds for text walls.

Edge case behavior:
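A quick way to see how the clamped formula behaves at the extremes is to evaluate it for a few line lengths. This is a minimal sketch using the constants from the heuristic above; the helper name `afk_threshold` and the sample lengths are illustrative assumptions.

```python
def afk_threshold(char_count: int) -> float:
    """AFK threshold in seconds: 1.2 s per character, clamped to [5, 120]."""
    return max(5, min(char_count * 1.2, 120))


# Sample line lengths (illustrative): very short, medium, long, text wall
for chars in (4, 30, 100, 200):
    print(f"{chars:>3} chars -> AFK after {afk_threshold(chars):.0f} s")
```

Lines of 4 characters or fewer land on the 5-second floor, and anything from 100 characters upward lands on the 120-second cap.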

This approach learns the user's personal reading speed over time, providing increasingly accurate detection as more data accumulates. It falls back to the simple heuristic during the warm-up period.

```python
class AdaptiveAFKDetector:
    def __init__(self):
        self.alpha = 0.2  # EMA smoothing factor
        self.ema_time_per_char = None  # Learned baseline
        self.sample_count = 0

        # Warm-up settings
        self.MIN_SAMPLES = 5
        self.FALLBACK_TIME_PER_CHAR = 1.2

        # Detection settings
        self.ANOMALY_MULTIPLIER = 3.0
        self.ABSOLUTE_MIN = 5
        self.ABSOLUTE_MAX = 180

    def record_reading(self, time_seconds: float, char_count: int) -> None:
        """Call after user advances to next line (confirmed not AFK)."""
        if char_count < 2:  # Skip very short lines
            return

        time_per_char = time_seconds / char_count

        # Clamp extreme values to avoid polluting baseline
        time_per_char = max(0.1, min(time_per_char, 5.0))

        if self.ema_time_per_char is None:
            self.ema_time_per_char = time_per_char
        else:
            # EMA: new = α × current + (1-α) × old
            self.ema_time_per_char = (
                self.alpha * time_per_char +
                (1 - self.alpha) * self.ema_time_per_char
            )

        self.sample_count += 1

    def is_afk(self, time_seconds: float, char_count: int) -> bool:
        """Returns True if reading time indicates AFK."""
        if self.sample_count < self.MIN_SAMPLES:
            # Warm-up: use generous fallback
            base = self.FALLBACK_TIME_PER_CHAR
        else:
            base = self.ema_time_per_char

        threshold = char_count * base * self.ANOMALY_MULTIPLIER
        threshold = max(self.ABSOLUTE_MIN, min(threshold, self.ABSOLUTE_MAX))

        return time_seconds > threshold
```

Why EMA over simple moving average? EMA adapts faster to changes in reading speed (user improving over time or switching between easy/hard games), requires no fixed-size buffer, and uses a single recursive formula. With α=0.2, each new reading contributes 20% of the updated baseline while the accumulated history contributes 80%, providing stability while still responding to sustained speed changes.
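To get a feel for how quickly α=0.2 responds, the update rule can be simulated on a sudden, sustained speed change. This is a rough sketch, not part of the detector itself; the function names, the 10% tolerance, and the 0.5 to 1.0 sec/char scenario are assumptions chosen for illustration.

```python
def ema_update(old: float, new: float, alpha: float = 0.2) -> float:
    """One EMA step: alpha * new + (1 - alpha) * old."""
    return alpha * new + (1 - alpha) * old


def samples_to_adapt(start: float, target: float, alpha: float = 0.2,
                     tolerance: float = 0.10) -> int:
    """Count updates until the remaining gap to `target` falls below
    `tolerance` times the initial gap, feeding `target` at every step."""
    baseline, steps = start, 0
    initial_gap = abs(target - start)
    while abs(target - baseline) > tolerance * initial_gap:
        baseline = ema_update(baseline, target, alpha)
        steps += 1
    return steps


# Hypothetical change: 0.5 s/char on an easy game -> 1.0 s/char on a hard one
print(samples_to_adapt(0.5, 1.0))
```

Because the gap shrinks by a factor of 0.8 per reading, closing 90% of it takes 11 readings under these assumptions; a larger α would adapt faster at the cost of a noisier baseline.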

Batch calculation variant: For after-the-fact analysis where all readings are available, first filter out obvious outliers using the simple heuristic, then compute a baseline from the remaining "clean" readings. Because order no longer matters in a batch, the code uses a plain mean of time per character rather than an EMA:

```python
def batch_detect_afk(readings: list[tuple[float, int]]) -> list[bool]:
    """
    Batch AFK detection for after-the-fact analysis.

    readings: list of (time_seconds, char_count) tuples
    """
    # First pass: rough filter using simple heuristic
    def rough_filter(time, chars):
        return time <= max(5, min(chars * 2.0, 180))

    clean_readings = [(t, c) for t, c in readings if rough_filter(t, c) and c >= 2]

    if len(clean_readings) < 5:
        # Not enough clean data, use simple heuristic
        return [time > max(5, min(chars * 1.2, 120)) for time, chars in readings]

    # Compute baseline from clean readings
    time_per_char_values = [t / c for t, c in clean_readings]
    baseline = sum(time_per_char_values) / len(time_per_char_values)

    # Second pass: detect outliers
    results = []
    for time, chars in readings:
        threshold = max(5, min(chars * baseline * 3.0, 180))
        results.append(time > threshold)

    return results
```

This method provides the most statistically rigorous outlier detection. Unlike standard Z-scores (which assume normal distributions and are sensitive to outliers), the Modified Z-Score uses medians throughout, making it robust to the right-skewed distribution typical of reading times.

```python
from collections import deque
import statistics


class RobustAFKDetector:
    def __init__(self, window_size: int = 20):
        self.window_size = window_size
        self.time_per_char_history = deque(maxlen=window_size)

        # Modified Z-score threshold (Iglewicz & Hoaglin recommend 3.5)
        self.THRESHOLD = 3.5
        self.K = 0.6745  # Scaling constant for MAD

        self.ABSOLUTE_MIN = 5
        self.ABSOLUTE_MAX = 180
        self.FALLBACK_TIME_PER_CHAR = 1.2

    def record_reading(self, time_seconds: float, char_count: int) -> None:
        """Record a confirmed reading (not AFK)."""
        if char_count < 2:
            return
        time_per_char = max(0.1, min(time_seconds / char_count, 5.0))
        self.time_per_char_history.append(time_per_char)

    def is_afk(self, time_seconds: float, char_count: int) -> bool:
        """Detect if current reading time is anomalous."""
        if char_count < 1:
            return time_seconds > self.ABSOLUTE_MIN

        # Hard limit check
        if time_seconds > self.ABSOLUTE_MAX:
            return True

        # Need minimum samples for statistical detection
        if len(self.time_per_char_history) < 5:
            threshold = char_count * self.FALLBACK_TIME_PER_CHAR * 3
            return time_seconds > max(self.ABSOLUTE_MIN, threshold)

        # Calculate MAD-based detection
        data = list(self.time_per_char_history)
        median = statistics.median(data)
        abs_deviations = [abs(x - median) for x in data]
        mad = statistics.median(abs_deviations)

        # Handle edge case: MAD = 0 (all values nearly identical)
        if mad < 0.01:
            mad = 0.1

        # Modified Z-score: M = 0.6745 × (x - median) / MAD
        time_per_char = time_seconds / char_count
        modified_z = self.K * (time_per_char - median) / mad

        return modified_z > self.THRESHOLD
```

Why Modified Z-Score? Reading time distributions are right-skewed: most readings cluster near the normal speed, with a long tail of increasingly rare AFK events. Standard Z-scores using mean and standard deviation are pulled by these outliers, causing "masking" where extreme values inflate the baseline and prevent detection of moderate outliers. The Modified Z-Score using median and MAD has a 50% breakdown point, meaning its estimates stay bounded as long as fewer than half the data are outliers.

The 0.6745 constant makes the Modified Z-Score comparable to standard Z-scores under normal distributions (σ ≈ 1.4826 × MAD). The 3.5 threshold is the academic standard from Iglewicz and Hoaglin's 1993 research on robust outlier detection.
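The masking effect is easy to reproduce on a small contaminated sample. The sketch below compares both scores on made-up time-per-character values (seven normal readings plus two AFK events); the data, the helper names, and the cutoff of 3 for the standard Z-score are assumptions for illustration only.

```python
import statistics

K = 0.6745  # scaling constant from the text


def standard_z(x: float, data: list[float]) -> float:
    """Classic Z-score using mean and sample standard deviation."""
    return (x - statistics.mean(data)) / statistics.stdev(data)


def modified_z(x: float, data: list[float]) -> float:
    """Modified Z-score using median and MAD.
    Assumes MAD > 0; real code needs a guard like the detector above."""
    median = statistics.median(data)
    mad = statistics.median([abs(v - median) for v in data])
    return K * (x - median) / mad


# Made-up sec/char values: seven normal readings and two AFK events
data = [0.30, 0.32, 0.28, 0.31, 0.29, 0.33, 0.30, 4.0, 4.5]

print(f"standard z for 4.0: {standard_z(4.0, data):.2f}")
print(f"modified z for 4.0: {modified_z(4.0, data):.2f}")
```

On this sample the two AFK values inflate the mean and standard deviation enough that 4.0 sec/char scores well below 3 on the standard Z-score, while the Modified Z-Score for the same value is far above the 3.5 threshold.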

| Parameter | Recommended Value | Justification |
|---|---|---|
| MIN_THRESHOLD | 5 seconds | Reaction time floor; handles clicking through very short dialogue |
| MAX_THRESHOLD | 120-180 seconds | Beyond this is definitively AFK; 2-3 minutes is generous |
| SECONDS_PER_CHAR | 1.2 for heuristic | Equals 50 char/min, about 3× slower than a 150 char/min learner |
| EMA_ALPHA | 0.2 | 80/20 split between stability and responsiveness |
| ANOMALY_MULTIPLIER | 3.0 | Flags readings taking more than 3× the expected time (a ratio, not a standard-deviation count) |
| MODIFIED_Z_THRESHOLD | 3.5 | Academic standard for MAD-based outlier detection |
| WARM_UP_SAMPLES | 5-10 | Minimum for stable baseline estimation |
| WINDOW_SIZE | 20 | Rolling window captures recent reading patterns |

Very short sentences (「ああ...」「はい」「うん」): These 2-5 character lines legitimately take 1-3 seconds. The MIN_THRESHOLD of 5 seconds provides a generous floor while still being much better than a 60-second fixed timer. Consider flagging times under 0.3× expected as "skipped" rather than read.

Very long passages (200+ characters): The MAX_THRESHOLD cap prevents unreasonable thresholds. Even slow learners shouldn't need more than 2-3 minutes for a single text box. If they do, they're likely AFK or the game has unusually long passages that should be segmented.

Dialogue choices: When the game presents multiple options, users pause to consider choices. If detectable (multiple text options, menu state), multiply threshold by 1.5-2×.

Voice-over pacing: When audio is playing, the minimum reading time equals audio duration—users can't advance faster than the voice. If audio duration is available: threshold = max(audio_duration × 1.5, normal_threshold).
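The dialogue-choice and voice-over adjustments can be layered on top of the character heuristic. This is a minimal sketch under stated assumptions: the function name, the `has_choices` flag, and the default 1.5× choice multiplier (the low end of the suggested 1.5-2× range) are illustrative, and it assumes the game exposes audio duration and menu state at all.

```python
from typing import Optional


def adjusted_threshold(char_count: int,
                       audio_duration: Optional[float] = None,
                       has_choices: bool = False,
                       choice_multiplier: float = 1.5) -> float:
    """AFK threshold in seconds with optional choice and voice-over adjustments."""
    # Base character heuristic: 1.2 s per character, clamped to [5, 120]
    threshold = max(5.0, min(char_count * 1.2, 120.0))

    # Dialogue choices: allow extra time to consider the options
    if has_choices:
        threshold *= choice_multiplier

    # Voice-over: never flag AFK before 1.5x the audio duration has passed
    if audio_duration is not None:
        threshold = max(audio_duration * 1.5, threshold)

    return threshold


print(adjusted_threshold(10))                       # plain text line
print(adjusted_threshold(10, has_choices=True))     # choice menu
print(adjusted_threshold(10, audio_duration=20.0))  # voiced line, 20 s of audio
```

Applying the audio rule last means a long voiced line can exceed the 120-second cap, which matches the formula in the text; whether that is desirable is a design choice.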

Cold start / new game: During warm-up when adaptive methods lack data, the simple heuristic provides reasonable defaults. Store per-game baselines to accelerate future sessions with the same title.

"Too fast" detection: Times significantly below expected (less than 0.3× expected) indicate the user clicked through without reading. This is relevant for reading statistics accuracy but orthogonal to AFK detection.

```python
def classify_reading(time_seconds: float, char_count: int, baseline_per_char: float) -> str:
    expected = char_count * baseline_per_char
    ratio = time_seconds / expected if expected > 0 else 0

    if ratio < 0.3:
        return "skipped"
    elif ratio > 3.0:
        return "afk"
    else:
        return "normal"
```

Start with Algorithm 1 (character heuristic). It requires approximately 10 lines of code, zero configuration, no warm-up period, and handles the majority of cases correctly. The formula max(5, min(chars × 1.2, 120)) eliminates the fundamental problem of fixed timers ignoring text length.

Add Algorithm 2 (EMA adaptive) if users report inaccurate detection after extended use. This requires storing a single floating-point baseline per game and updating it after each valid reading. The warm-up period is brief (5-10 text boxes), and the improvement in accuracy is substantial for users whose reading speed differs significantly from the assumed default.

Consider Algorithm 3 (Modified Z-Score) only if you observe systematic accuracy problems with EMA—for instance, if users frequently have contaminated sessions where they were AFK multiple times, polluting the baseline. The MAD-based approach handles this gracefully but adds implementation complexity and requires maintaining a rolling window of historical readings.

For batch/after-the-fact calculation as specified in the requirements, the two-pass batch detection variant of Algorithm 2 is ideal: use the simple heuristic to identify clean readings, compute a baseline from those, then classify all readings against that baseline. This approach combines the robustness of having all data available with the simplicity of the character-based method.

2026-01-26 15:08:49

Do new cards on AnkiDroid.

Sync with Anki Desktop.

Anki Desktop says you have not done any new cards.

Usually the issue is caused by reorder plugins: if you reorder before syncing, it breaks. Reorder after syncing.

2026-01-26 14:50:09

For real-time Japanese visual novel translation requiring sub-3-second responses at minimal cost, Claude 3 Haiku emerges as the optimal choice, delivering the best balance of speed, price, and translation quality. Gemini 2.0 Flash offers an even cheaper alternative with faster responses but notably lower Japanese accuracy, while GPT-4o-mini provides superior translation quality at borderline acceptable latency. DeepSeek V3—despite excellent translation benchmarks—is unsuitable due to its 7-19 second time-to-first-token, far exceeding your latency requirement.

Based on your specific requirements (~1000 characters input, sub-3-second response, budget-focused, "good enough" quality), here are the optimal models:

| Rank | Model | Speed (300 tokens) | Cost (Input/Output per 1M) | JP Quality (VNTL) | Verdict |
|---|---|---|---|---|---|
| 1 | Claude 3 Haiku | ~2.8s ✅ | $0.25 / $1.25 | 68.9% | Best overall balance |
| 2 | Gemini 2.0 Flash | ~2.3s ✅ | $0.15 / $0.60 | ~66% | Cheapest reliable option |
| 3 | GPT-4o-mini | ~3.6-4.1s ⚠️ | $0.15 / $0.60 | 72.2% | Best quality, borderline speed |
| 4 | Gemini 2.5 Flash-Lite | ~1.1s ✅ | $0.10 / $0.40 | ~66% | Fastest, lower quality |
| 5 | Qwen 2.5 32B | ~2.5-3s ✅ | $0.20 / $0.60 | 70.7% | Best Asian language specialist |

Claude 3 Haiku achieves ~2.8 seconds for a typical 300-token translation response, just under your 3-second threshold. At $0.25 per million input tokens and $1.25 per million output tokens, a typical VN translation request (1000 characters ≈ 500 tokens input, ~150 tokens output) costs approximately $0.0003 per line, meaning you could translate 10,000 lines for roughly $3.

The Visual Novel Translation Leaderboard (VNTL) ranks Claude 3 Haiku at 68.9% accuracy, which significantly outperforms traditional machine translation tools like Sugoi Translator (60.9%) and Google Translate (53.9%). Community feedback indicates Claude models excel at capturing "tone, style, and nuance" in dialogue, which is critical for visual novel content with casual speech patterns, honorifics, and implied subjects.

If cost is your primary concern and you can tolerate slightly rougher translations, Gemini 2.0 Flash delivers responses in ~2.3 seconds at just $0.15/$0.60 per million tokens, roughly half the cost of Claude 3 Haiku. For extreme budget optimization, Gemini 2.0 Flash Experimental is currently free on OpenRouter with 1.05 million token context windows.

The tradeoff is meaningful: Gemini Flash models score around 66% on VNTL benchmarks versus Claude Haiku's 68.9%. For casual reading where you just need the gist, this difference is acceptable. For dialogue-heavy games with nuanced character interactions, you'll notice more awkward phrasing and occasional mishandled honorifics.

GPT-4o-mini achieves 72.2% VNTL accuracy, the highest among budget models and only 3 percentage points behind flagship GPT-4o (75.2%). This makes it the best "good enough" translator in terms of output quality. The catch: its 85-97 tokens/second generation speed produces total response times of 3.6-4.1 seconds, slightly exceeding your 3-second requirement.

If you can tolerate occasional 4-second responses, GPT-4o-mini at $0.15/$0.60 offers the best quality-per-dollar. Enabling streaming significantly improves perceived latency: text appears as it generates, so you'll see the translation building rather than waiting for the full response.

DeepSeek V3 scores an impressive 74.2% on VNTL, competitive with flagship models, but its 7.5-19 second time-to-first-token makes it completely unsuitable for real-time use. This latency occurs because DeepSeek's infrastructure prioritizes throughput over latency, and reasoning-focused models like DeepSeek R1 can take even longer.

Mistral models (including Mistral 7B and Mistral Small) receive mixed community feedback for Japanese translation, with reports of "old OPUS-MT-level issues" on nuance and honorifics. Llama models without Japanese-specific fine-tuning also underperform Asian-focused models like Qwen on this task.

For your use case (1000 characters input ≈ 500 tokens, ~150 tokens output per request):

| Model | Cost per Request | Cost per 1,000 Lines | Cost per Full VN (~50,000 lines) |
|---|---|---|---|
| Claude 3 Haiku | $0.0003 | $0.31 | ~$15 |
| Gemini 2.0 Flash | $0.0002 | $0.17 | ~$8 |
| GPT-4o-mini | $0.0002 | $0.17 | ~$8 |
| Gemini 2.0 Flash Exp | FREE | FREE | FREE (rate limited) |
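The figures in the table follow from the per-million-token prices and the assumed request size (500 input tokens, 150 output tokens). A small sketch of the arithmetic, with a hypothetical helper name, makes it easy to re-run for other models or request sizes:

```python
def cost_per_request(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in dollars of one request, given prices per 1M tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Claude 3 Haiku at $0.25 input / $1.25 output per 1M tokens
haiku = cost_per_request(500, 150, 0.25, 1.25)
print(f"per request: ${haiku:.4f}")
print(f"per 1,000 lines: ${haiku * 1_000:.2f}")
print(f"per 50,000 lines: ${haiku * 50_000:.2f}")
```

Note that output tokens dominate the Haiku cost here (about 60% of the total) even though there are far fewer of them, so shorter translations save more than shorter prompts.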

OpenRouter adds approximately 25ms gateway overhead with its edge-based architecture, which is negligible for your use case. Enable these optimizations for best results:

Use the :nitro suffix on model slugs, or sort providers by "latency", to prioritize fast providers.

Based on community best practices, use this configuration:

Temperature: 0.0 (for consistent translations)

System prompt: "You are translating a Japanese visual novel to English.

Preserve the original tone and speaking style. Translate naturally

without over-explaining. Keep honorifics where appropriate."

Context: Include 10-15 previous lines for dialogue continuity

For real-time visual novel translation prioritizing the speed-cost-quality balance, Claude 3 Haiku is the clear winner: fast enough (2.8s), affordable (~$0.0003/line), and good enough quality (68.9% VNTL). Choose Gemini 2.0 Flash if you need to minimize costs further and can accept rougher translations. Choose GPT-4o-mini if translation quality matters most and you can tolerate occasional 4-second delays with streaming enabled. All three models dramatically outperform traditional machine translation while remaining affordable for high-volume visual novel content.
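The recommended configuration can be sketched as a request body for OpenRouter's OpenAI-compatible chat completions endpoint. This only builds the payload and sends nothing; the model slug, the layout of the user message, and the helper name `build_request` are assumptions for illustration, so check the current OpenRouter model list before relying on them.

```python
import json

SYSTEM_PROMPT = (
    "You are translating a Japanese visual novel to English. "
    "Preserve the original tone and speaking style. Translate naturally "
    "without over-explaining. Keep honorifics where appropriate."
)


def build_request(line: str, previous_lines: list[str],
                  model: str = "anthropic/claude-3-haiku:nitro",  # assumed slug
                  max_context: int = 15) -> dict:
    """Build a chat-completions payload with recent lines as context."""
    # Keep only the most recent lines for dialogue continuity
    context = "\n".join(previous_lines[-max_context:])
    user_content = f"Previous lines:\n{context}\n\nTranslate this line:\n{line}"
    return {
        "model": model,
        "temperature": 0.0,  # consistent translations
        "stream": True,      # show text as it is generated
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_content},
        ],
    }


payload = build_request("おはよう、先輩。", ["昨日は遅かったね。"])
print(json.dumps(payload, ensure_ascii=False, indent=2))
```

Capping the context at 15 lines keeps the input near the ~500-token budget used in the cost estimates; longer context improves continuity but raises both cost and latency.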

2025-12-08 18:56:46

Welcome to the award show everyone! Hosted by your favourite bee... Bee! 🥳

I wanted to summarise the best tools etc out there in 2025, and what better way than to put on a fake award show!

And like all true award shows and Christmas themed events, let's get into the spirit of giving.

This category features 3 tools.

If I could only pick 3 to learn Japanese with, it would be these 3.

The best overall winner of the 2025 Japanese Learning Awards is....

Yomitan is the go-to dictionary application.

It works in all browsers (Chrome, Firefox, Edge) and even on mobile browsers.

You install it easily and just select your language and some dictionaries.

It supports:

Even if you don't use any of the fancy features, having a dictionary you can use at the click of a button is useful.

If you use Yomitan, you must use Anki too.

Anki is the premier flashcard software.

You see a word you don't know, and create a flashcard for it in Anki.

Anki solves the issue of forgetting, mostly. You will still forget things, but significantly less.

https://github.com/bpwhelan/GameSentenceMiner

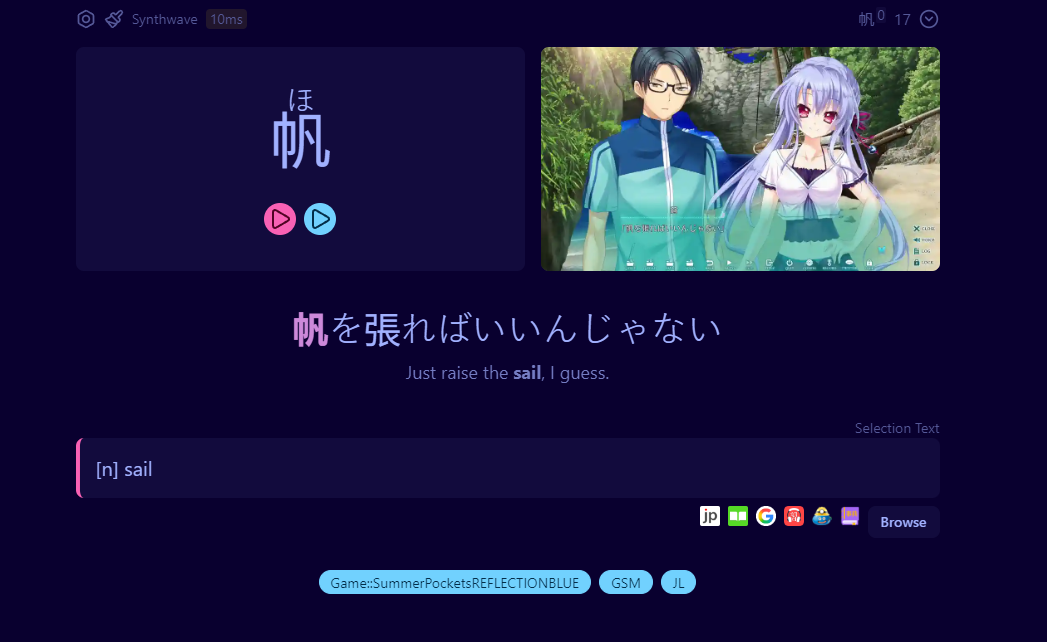

Game Sentence Miner (GSM) is an all-in-one toolkit to turn any visual media into Anki flashcards.

1. Look up words natively in game. 2. Click Add to Anki. 3. Anki card automatically made in the background with game audio + a gif of the game

Use the overlay to directly look words up (using Yomitan) in your game, anime, or manga without needing to go to another website to look it up.

Anki card created with GSM

Create flashcards in one click with the real audio used, and a gif of what happened on screen.

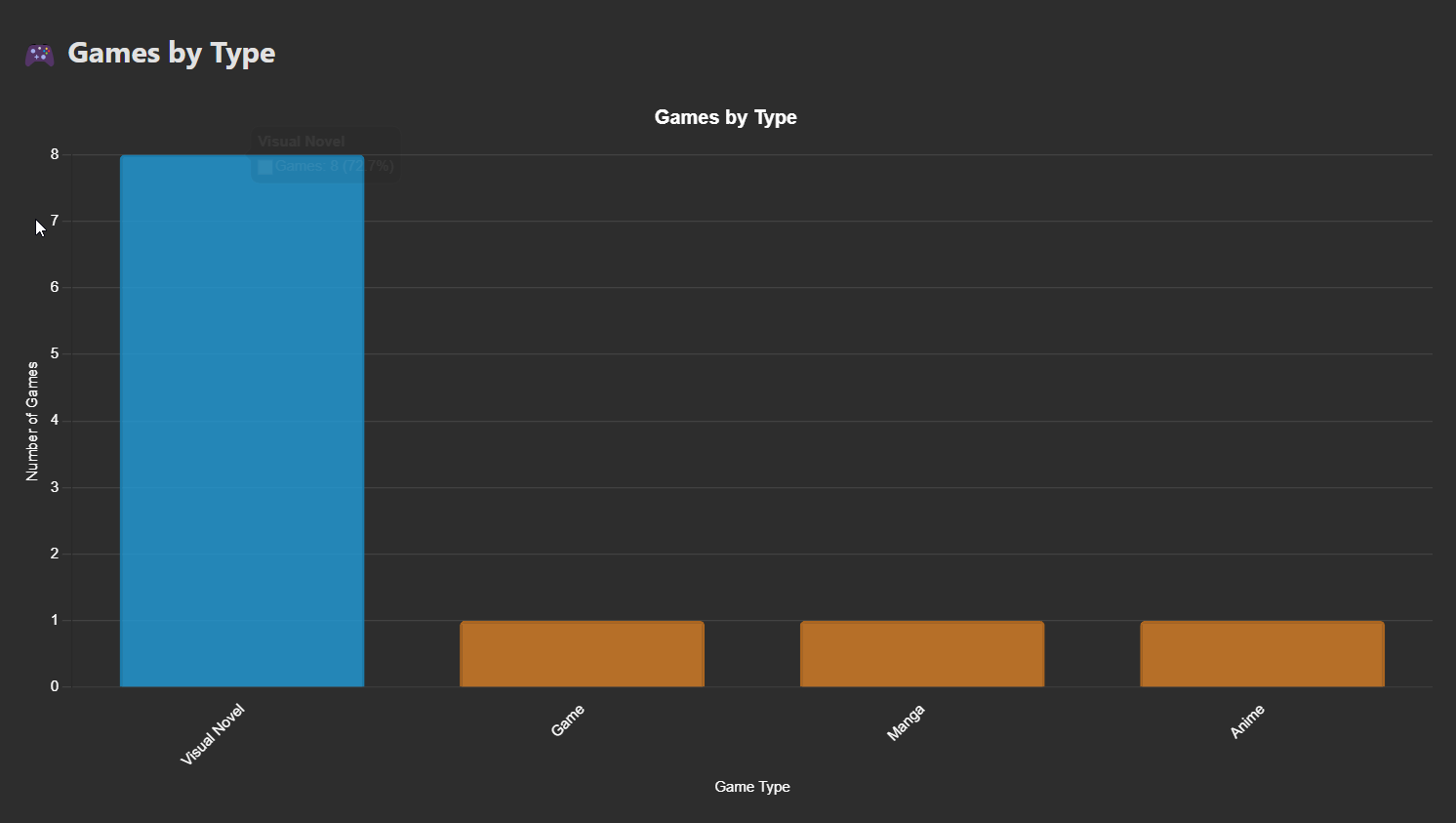

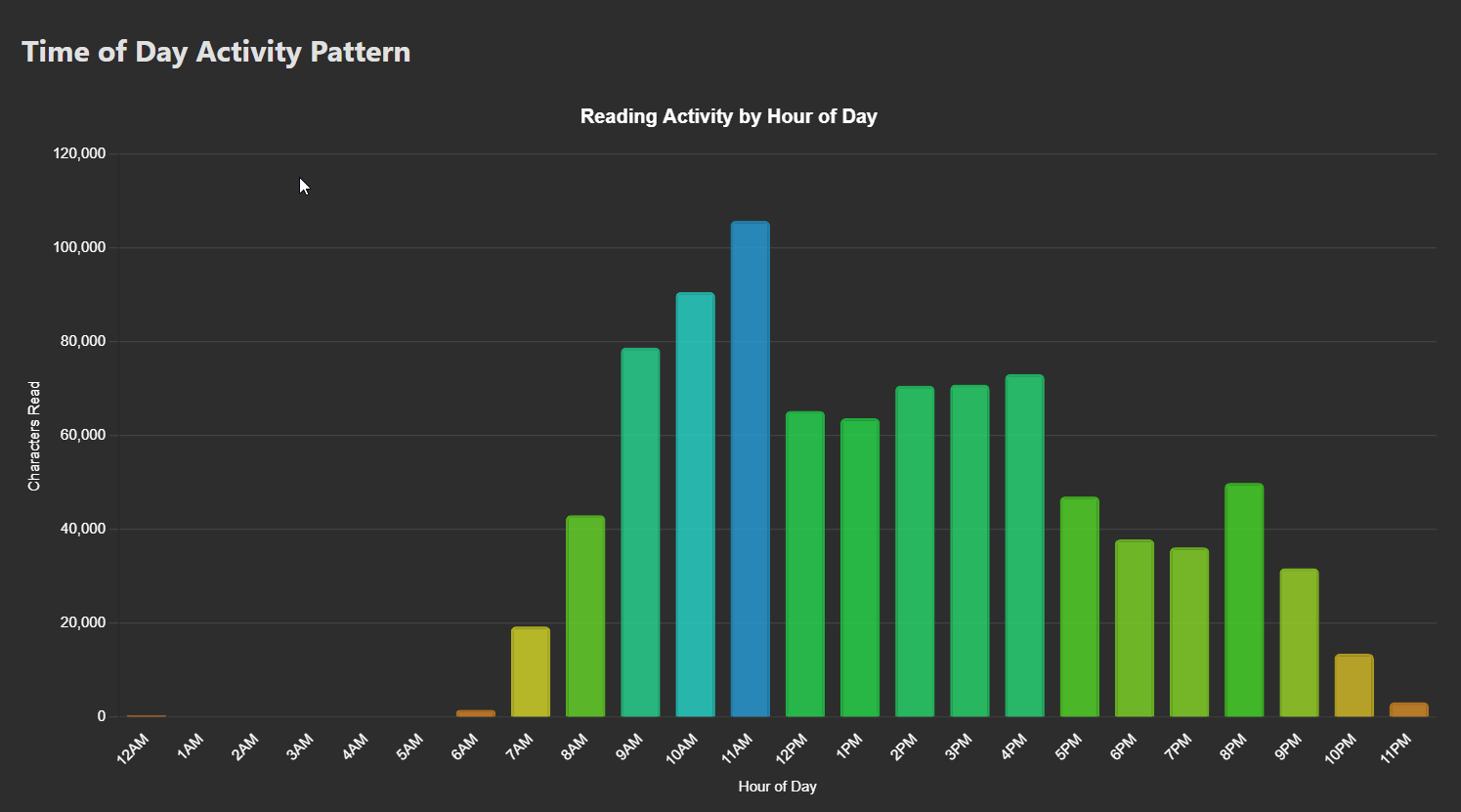

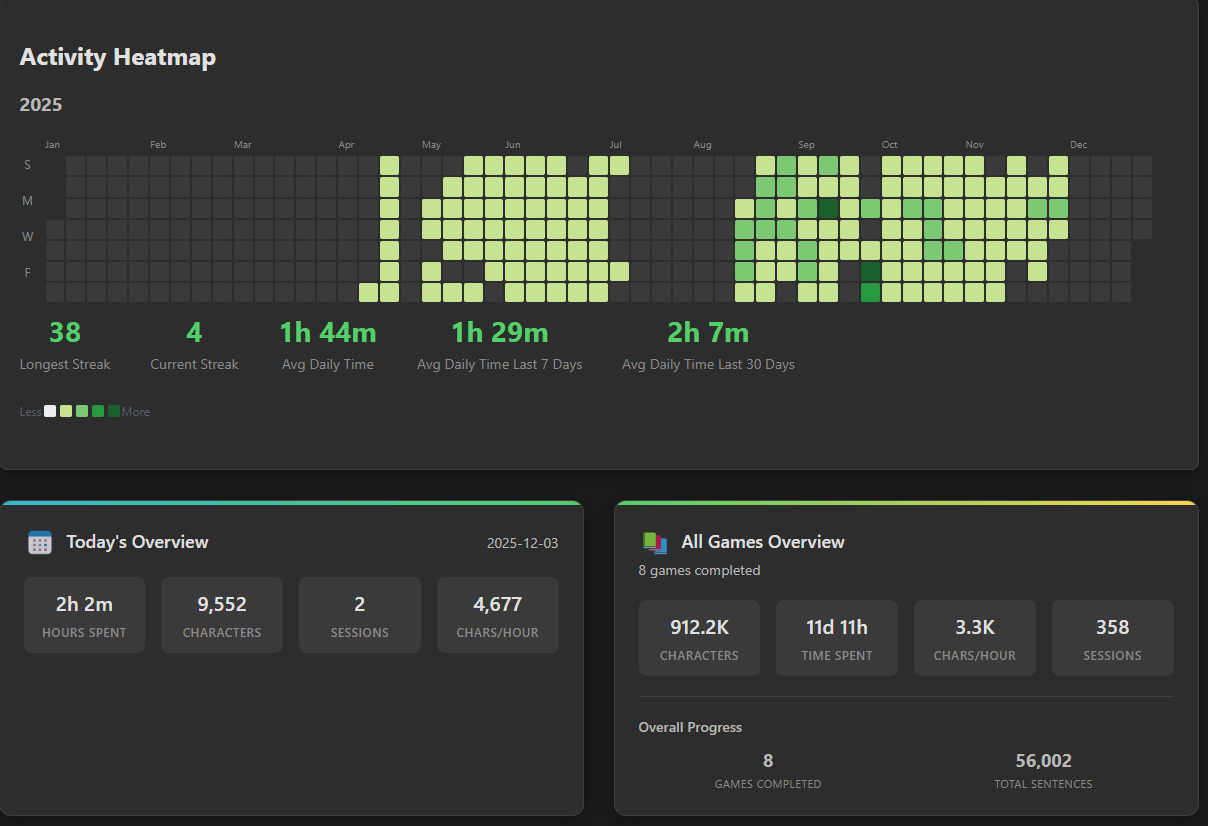

Analyse your statistics to help you learn to read better, with 30+ graphs and extensive goal planning.

Best of all? It's 100% free, works offline, and works for many other languages – not just Japanese!

GSM's main problem is that the barrier to entry can be high: it's got a lot of features and many settings. Thankfully the author has created many, many blog posts and YouTube videos on how to use it.

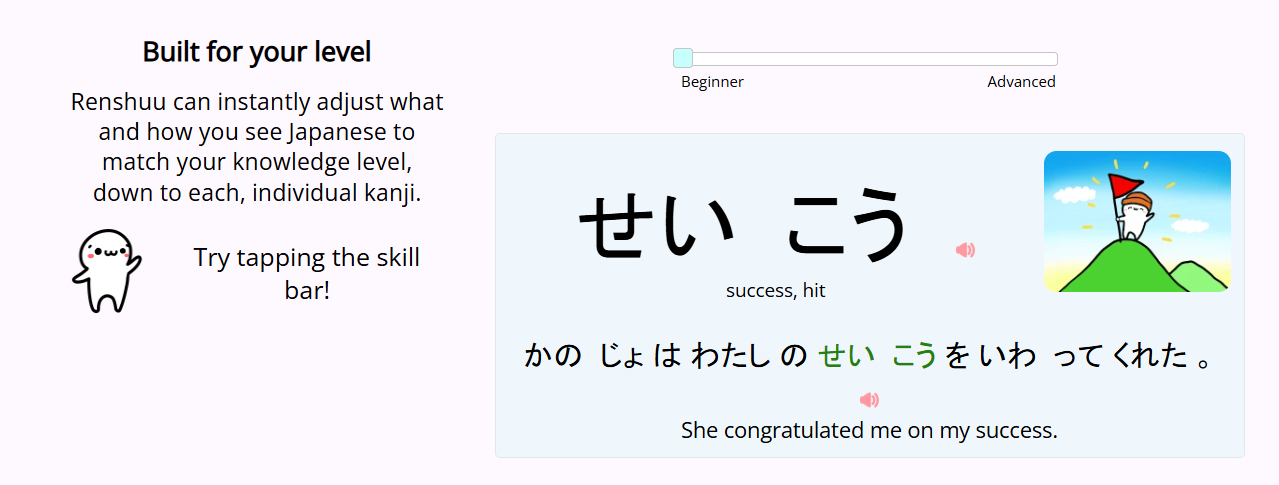

Renshuu wins the best app of 2025!

It's like Duolingo but better in every way.

It can work out your level and adjust the difficulty of words or sentences.

It gives you varied practice. Writing kanji, flipping flashcards, and fun games.

If you're looking for an easy app to replace the Green Owl™️ but actually be somewhat effective, this is it!

Let's split this up into two, iOS and Android.

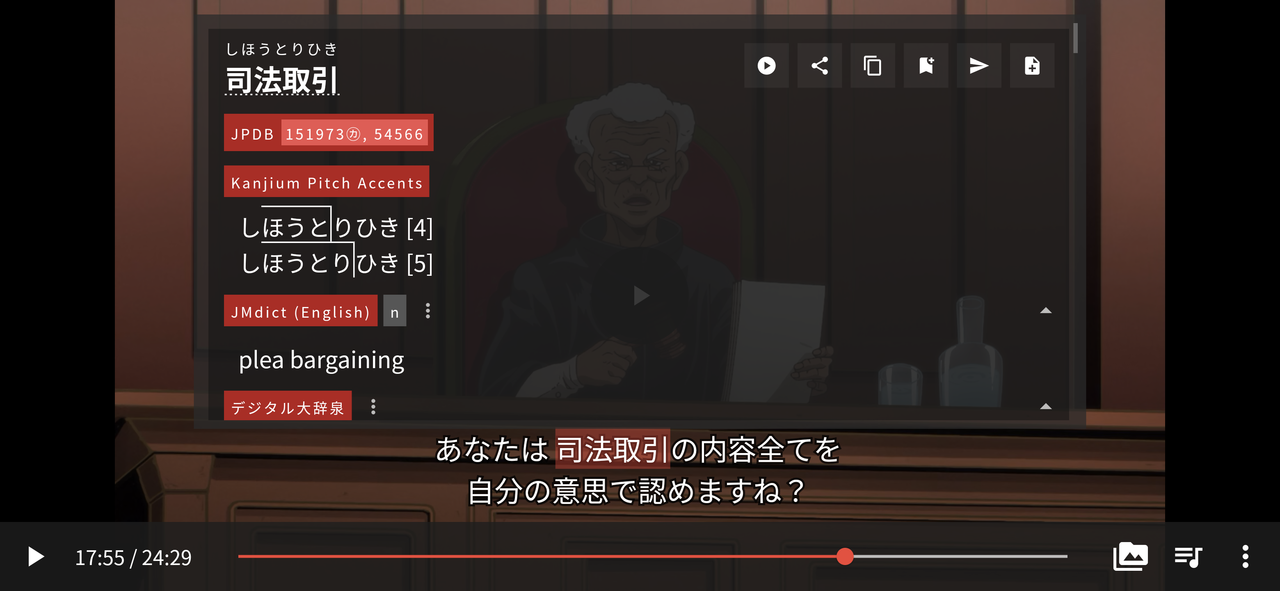

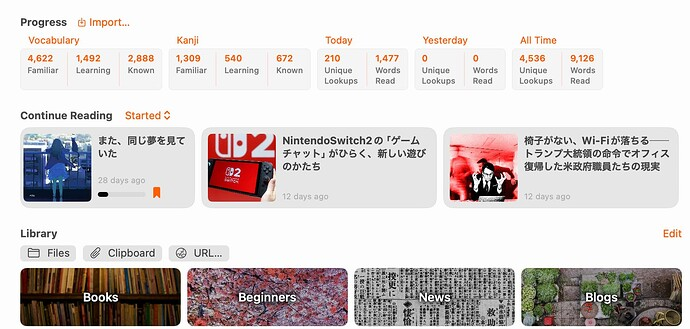

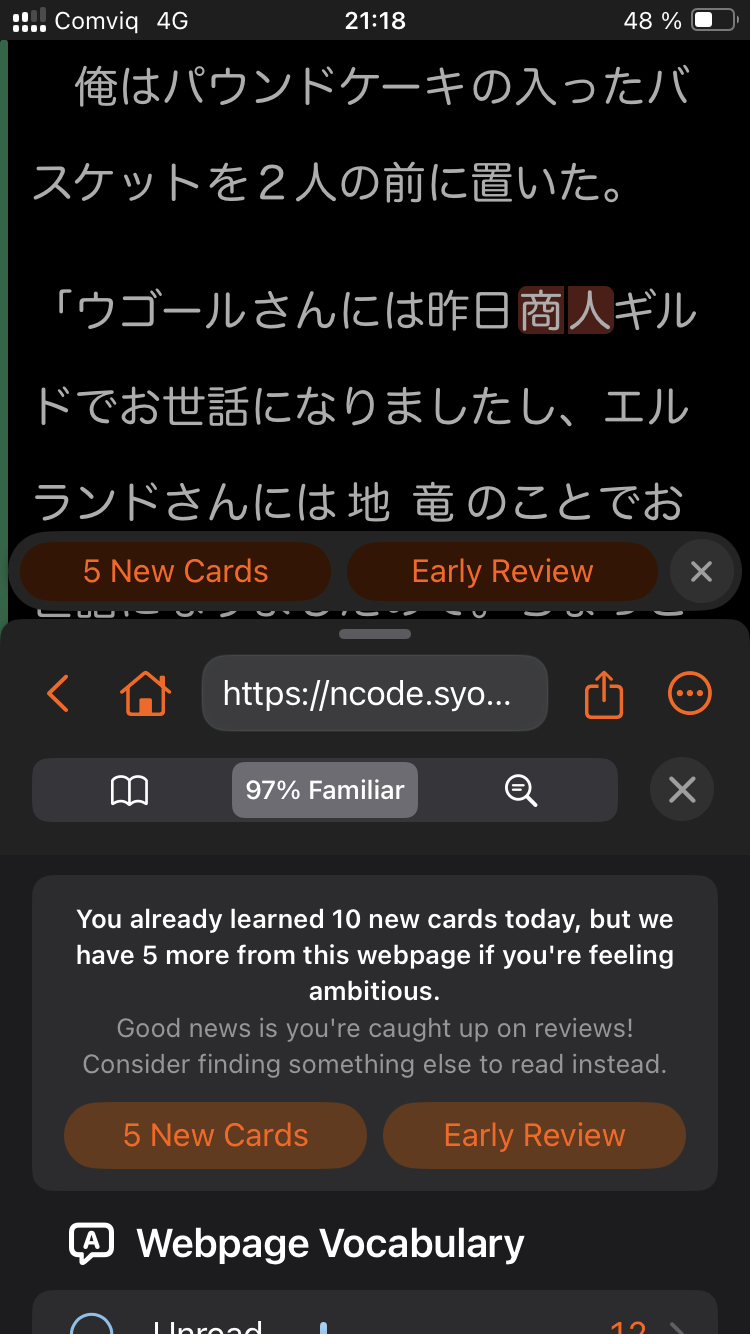

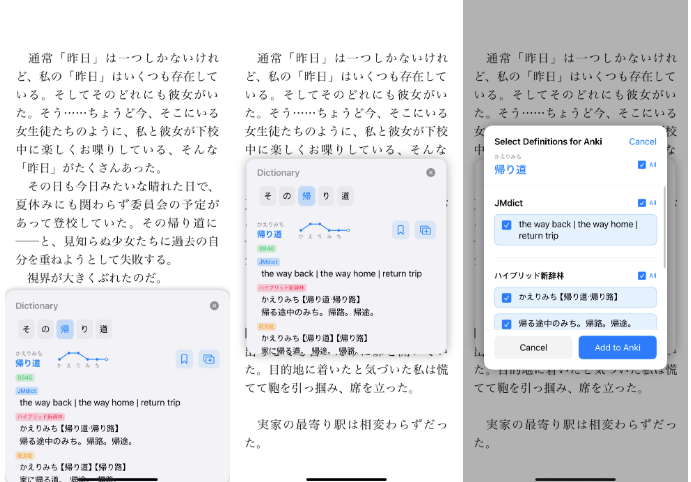

This is an everything-in-one kinda app.

If you do not have access to a computer, this is perhaps the best app to do everything on Android.

But! It does require some time to set up and learn how it all works.

Poe is Yomitan for Android.

It supports Anki, native audio and pitch accent.

I wrote more about this here:

Now let's look at the options on iOS, albeit limited options.

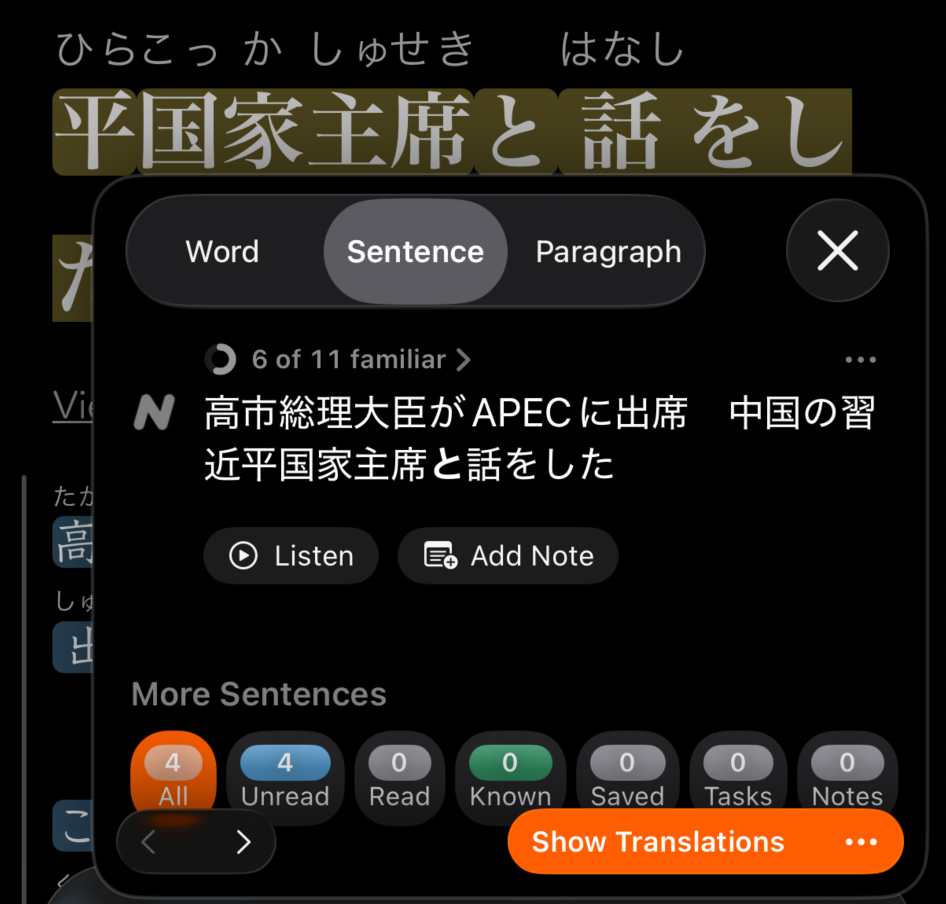

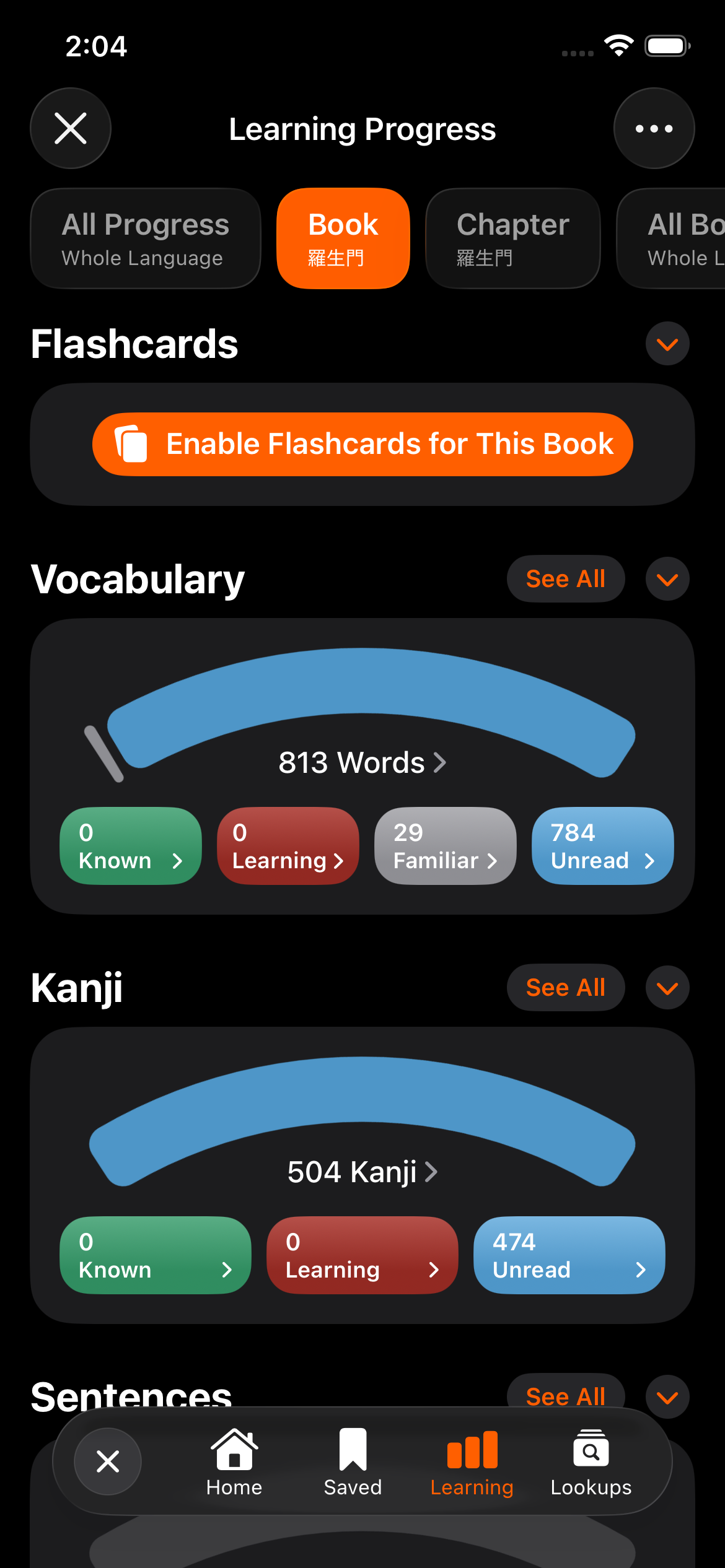

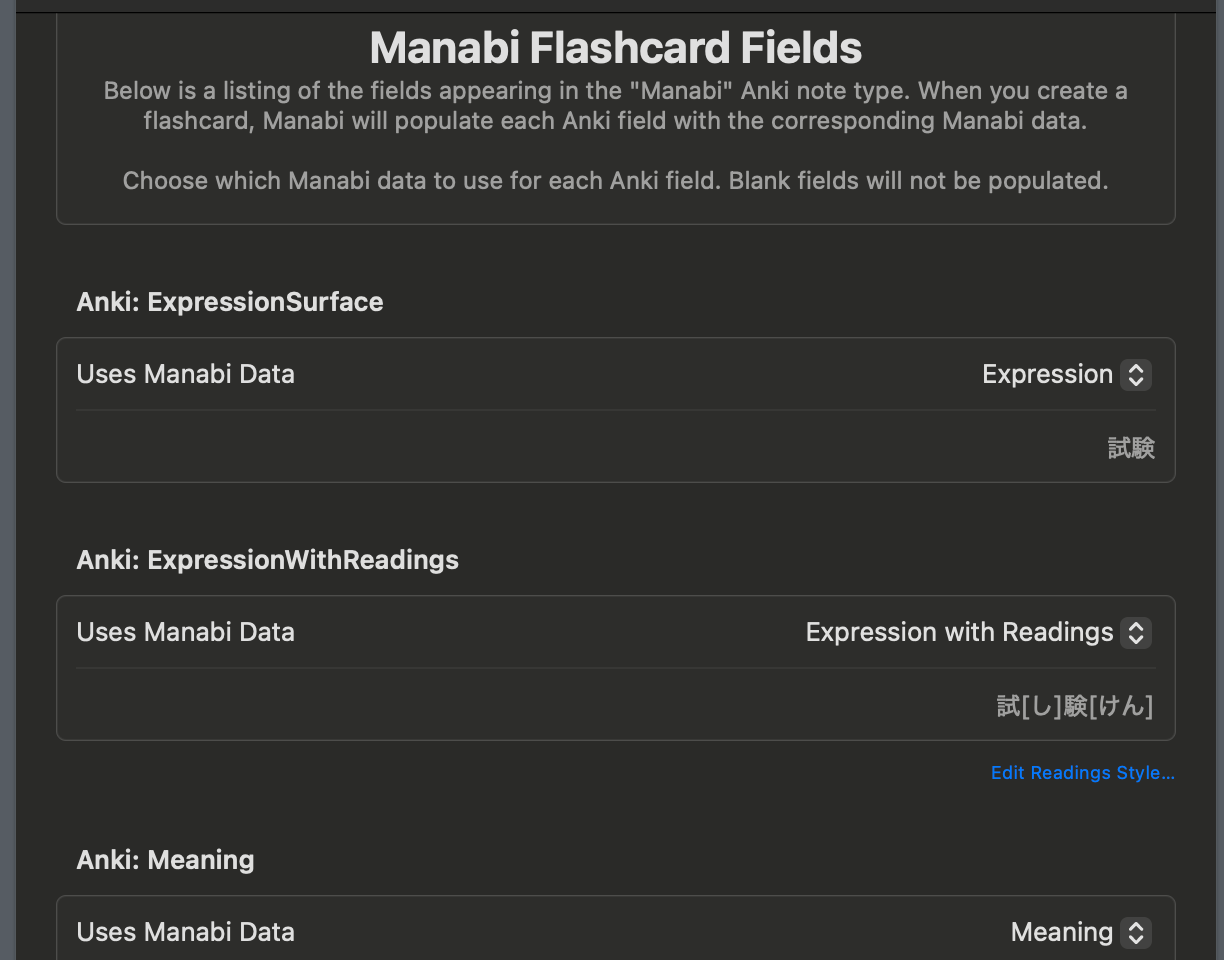

Manabi Reader is a way to read on iOS, similar to Jidoujisho but with fewer features. That's not their fault; iOS mostly just has a lot of walls.

You can look words up in dictionaries and send things to Anki.

See a breakdown of sentences: how many words in a sentence do you know?

You can read books and webpages and get full comprehension statistics about that page.

You can also look up words using OCR or by pasting the text.

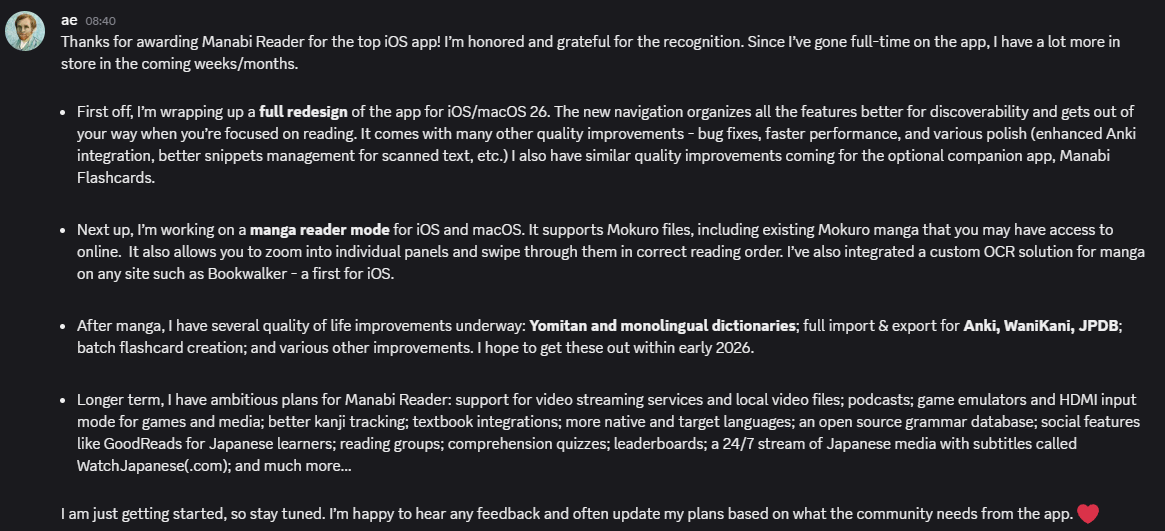

The author is working on a bunch of new features as they told me:

Here are some exclusive behind the scenes screenshots of the new Manabi Reader, coming soon!

This is another "look things up and make Anki cards" app, but this time it focusses on reading books.

Since we've talked so much about Anki, one of the big questions people who have begun using it ask is "what decks do I use?"

This is the definitive Anki deck for people just getting into learning Japanese with Anki.

The idea is that this teaches the most common words found in media, not necessarily the words you'll come across ordering food in Japan.

Once you finish this deck you then know enough Japanese to read books / immerse. You will still struggle, but it won't be as bad as starting from 0.

Do you have problems reading city names? What about names of people?

The proper nouns Anki deck is designed to teach you all the important proper nouns you'll encounter, and then pretty much every proper noun ever.

"okay bee, I finished Kaishi. I want to use Yomitan to make my own Anki deck but it wants a note type... what do I use?"

I hear you say! Probably...

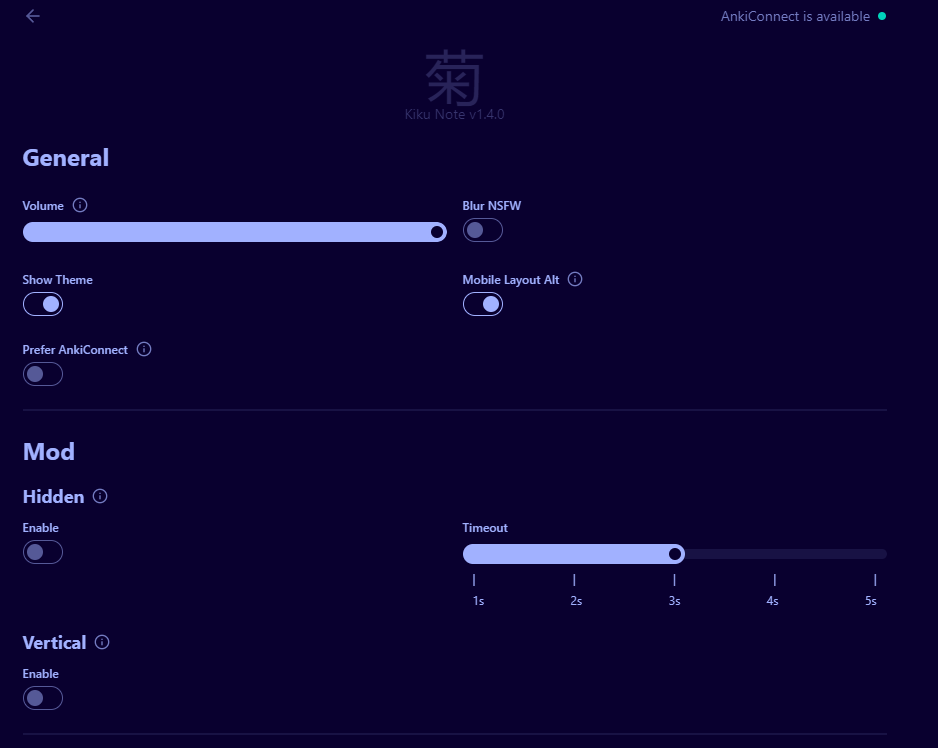

Kiku came out swinging towards the end of 2025 as the go-to Anki note type.

Other note types were static, but Kiku harnessed the power of JavaScript in Anki.

View similar Kanji, and view other flashcards that you made that use that kanji!

Sometimes you come across a word used in a really nice context, but you already have a flashcard for it!

You want to make another flashcard because you love this context, but it's just not possible without duplicating them or deleting your old card 🫠

Kiku solves this by allowing you to have multiple contexts in one card.

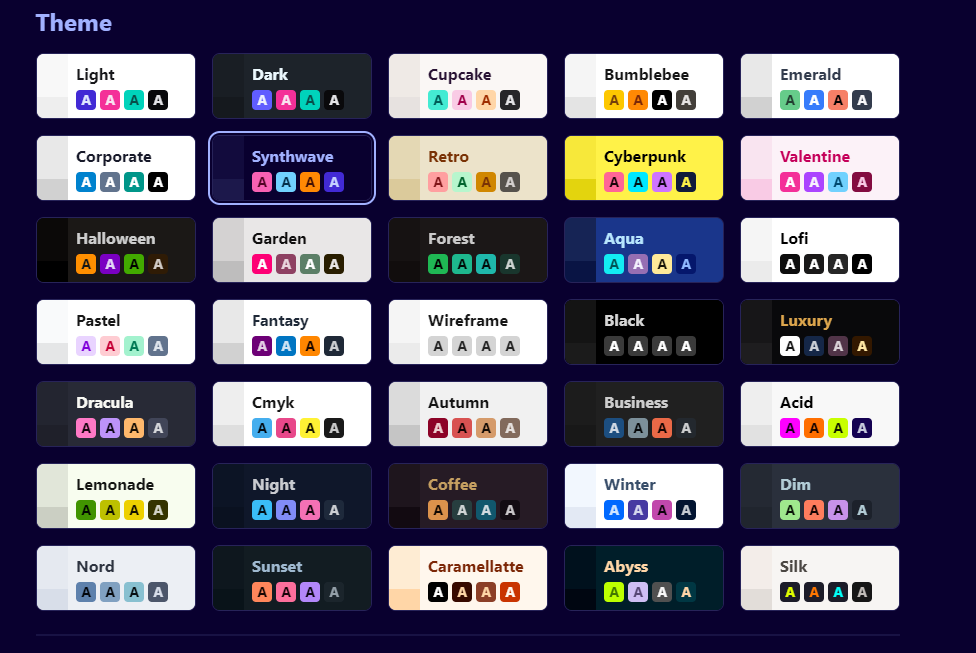

Most Anki card themes come in either light mode or dark mode.

Kiku has over 35 themes.

Kiku also has a settings page and a plugins system to really customise it for yourself.

Here's a bullet pointed list of my favourite features:

Lapis is made by the same person who made Kaishi.

It's very similar to Kiku but without all the fancy features (Kiku is based on Lapis).

If you want a less JavaScript-heavy card, this is great!

Now that you use Anki, another common question people have is:

What Anki addons can I use to maximise it?

When you make Anki cards, they kinda go into a semi random order.

Not every word in Japanese is equally important.

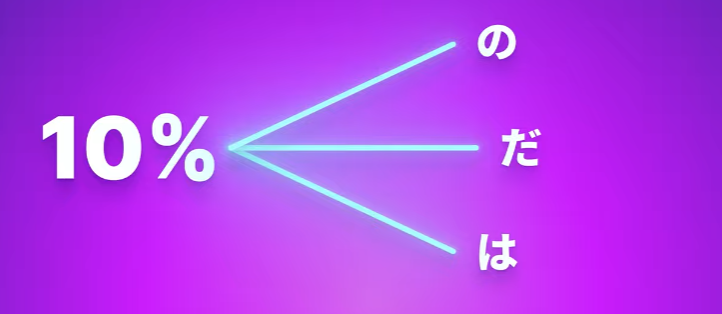

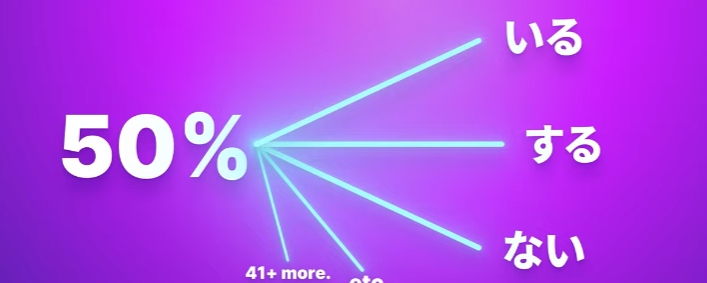

Migaku, who ran an analysis on Netflix, found these statistics.

If you select a word at random, there is a 10% chance that word is one of these three:

50% chance it will be one of 45 words:

Words are repeated, often. Just learning the top 1500 words or so means you can understand 80% of all words in a show.

Therefore it makes sense to learn your Anki cards in the order of most frequent first.
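If you want to check this kind of coverage claim on your own subtitles or scripts, the calculation is just "how many of all the word occurrences belong to the top N words". Here is a rough sketch on a toy word list; the tiny sample and the function name are made up for illustration, and real text would first need a Japanese tokenizer to split it into words.

```python
from collections import Counter


def coverage(counts: Counter, top_n: int) -> float:
    """Fraction of all word occurrences covered by the top_n most frequent words."""
    total = sum(counts.values())
    covered = sum(count for _, count in counts.most_common(top_n))
    return covered / total if total else 0.0


# Toy sample: 10 word occurrences, 5 distinct words
tokens = "の に は の は の 猫 犬 の に".split()
counts = Counter(tokens)

print(coverage(counts, 1))  # share covered by the single most frequent word
print(coverage(counts, 3))  # share covered by the top three words
```

In this toy sample one word already covers 40% of occurrences and three words cover 80%, which is the same shape of curve as the Netflix statistics, just at a much smaller scale.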

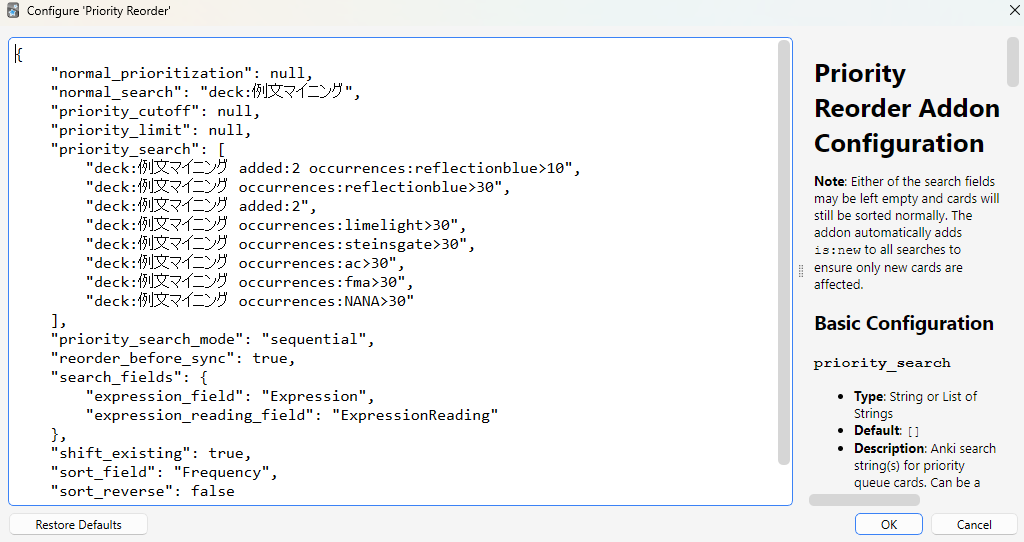

Priority Reorder does this.

But not all media is equal. One Piece has a lot of pirate talk, but you won't find that in other media.

Wouldn't it be cool to learn the most frequent words in One Piece if your goal is to watch it?

Priority Reorder does that.

Finally, you have a short term memory. Flashcards you made today will stick better than flashcards made 50 days ago.

Wouldn't it be cool to also prioritise recently made flashcards that appear frequently in One Piece?

Priority Reorder does this!
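To make the idea concrete, here is one way "frequent in my target media, and recently made" could be turned into a sort order. This is purely a sketch of the concept and not how Priority Reorder actually works internally; the `Card` fields, the scoring formula, and the `age_weight` value are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Card:
    word: str
    freq_rank: int  # 1 = most frequent word in the target media (e.g. One Piece)
    days_old: int   # days since the card was created


def priority_score(card: Card, age_weight: float = 1.0) -> float:
    """Lower is better: frequent words first, with older cards pushed back."""
    return card.freq_rank + age_weight * card.days_old


cards = [
    Card("宝", freq_rank=5000, days_old=0),
    Card("船", freq_rank=100, days_old=50),
    Card("海賊", freq_rank=120, days_old=1),
]

ordered = sorted(cards, key=priority_score)
print([c.word for c in ordered])
```

With these made-up numbers, 海賊 jumps ahead of 船 even though it is slightly less frequent, because it was mined yesterday rather than 50 days ago, while the rare word 宝 stays last despite being brand new.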

Wouldn't it be cool to mine words that have a high frequency?

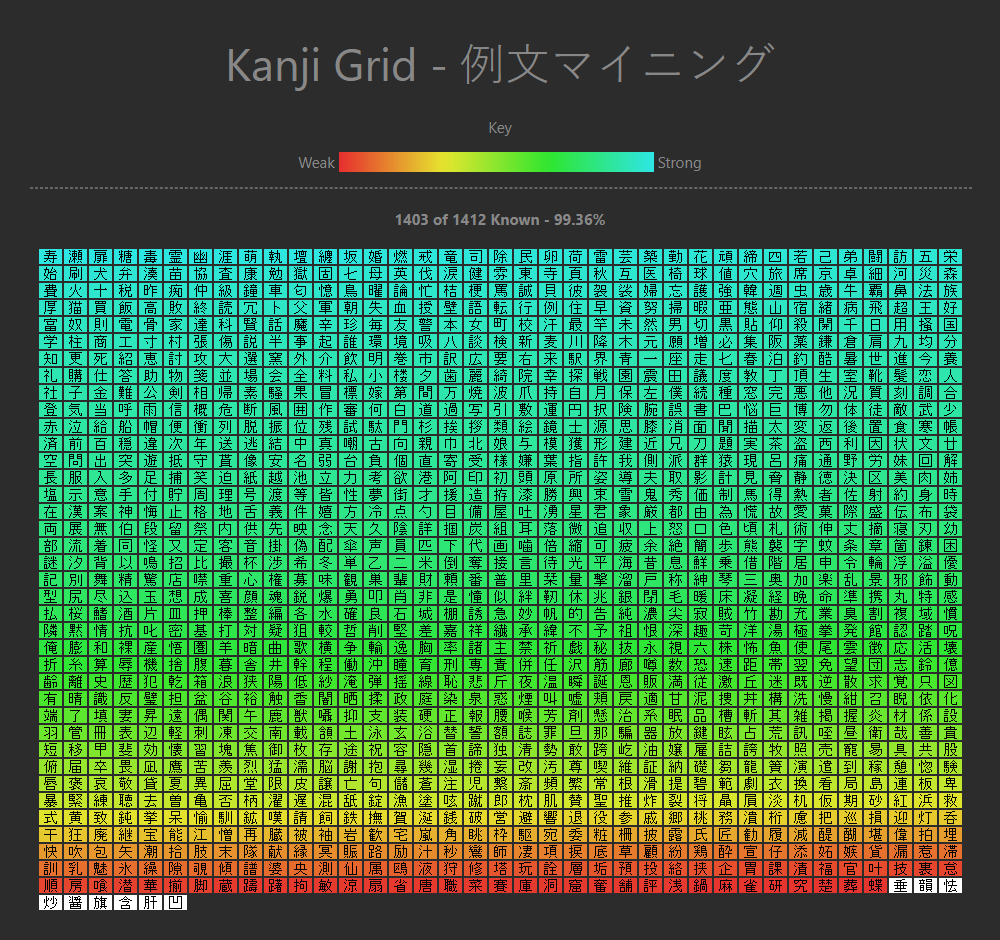

Looking to take the JLPT or similar and wondering "god, do I really know all the kanji in that exam?"

Or wanting to just see how you progress in terms of Kanji?

The Kanji Grid addon is for you!

https://ankiweb.net/shared/info/1610304449

This is an addon that works with Yomitan or similar tools.

It lets you listen to native audio in Yomitan, and even add that to your Anki cards.

It takes a bit to set up, but once you do you don't have to mess with it. You can now have native audio on all of your Anki cards!

This is all too much setup! I wish there was some sort of company I could pay to do this all for me.

Not to worry, there is!

Migaku is an all-in-one solution.

They aim to do everything mentioned here already, albeit imperfectly and for a price.

They have courses which teach you the top 1500 words, kanji and grammar, designed to help you immerse as soon as possible, similar to Kaishi.

They have their own SRS alternative to Anki, so you don't need addons etc to make anything work.

You can watch Netflix and look up all the words you want. They'll even highlight good words you should make flashcards out of.

They can tell you how many of the words in a specific video you know, and what your expected comprehension of it is:

You can:

If you are looking for an alright solution to learning Japanese and you don't mind spending money, in my opinion this is it.

For me personally, messing with tools is one of my little joys so I don't mind it.

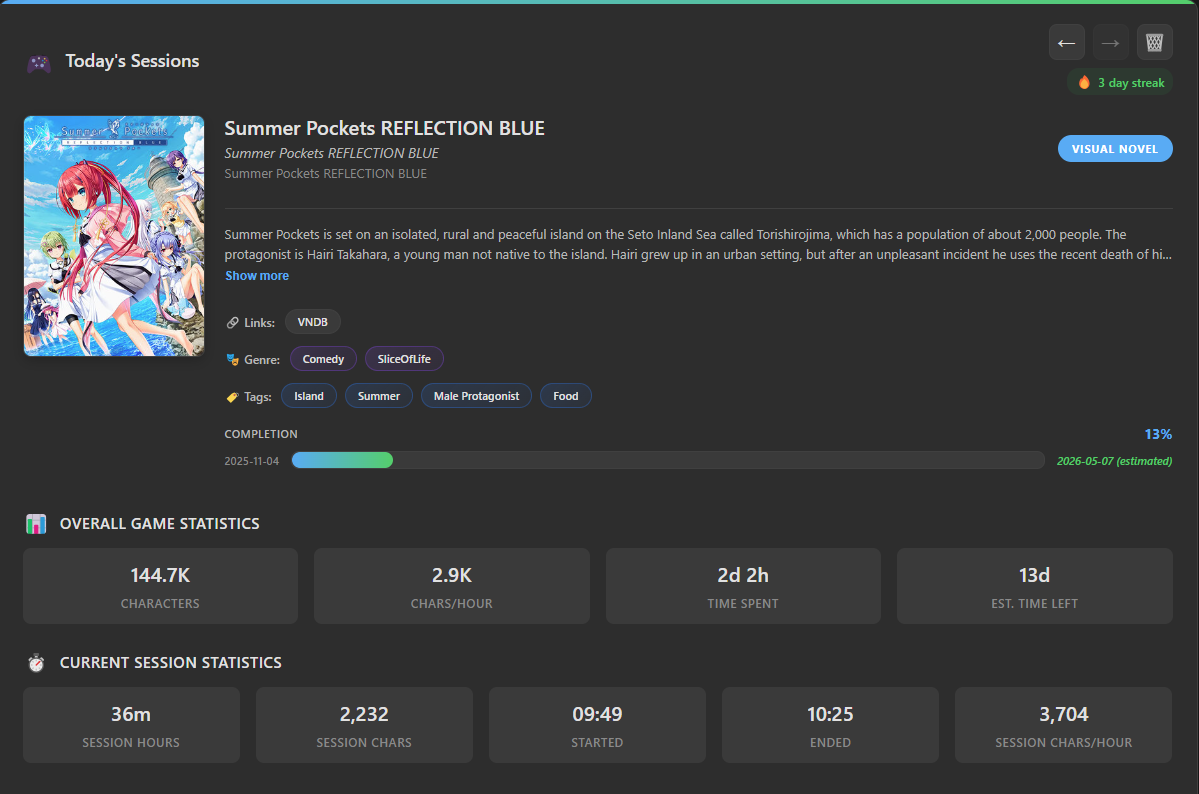

Japanese games are the greatest, let's look at options to learn Japanese from them.

The only real option is to use OCR, which is a fancy word to mean "the computer will read the text on the screen and give you the sentence so you can copy it / look it up".

After winning overall earlier, it does make sense that Game Sentence Miner is the best for games.

Once you set up OCR, you can then set up the overlay to be able to look words up directly in the game.

It takes around 1 second to go from "text appearing on screen" to "being able to look up the text".

If you have a GPU it could be even less time, around 0.5 seconds or so.

You can then click the plus icon to make a flashcard, and GSM will make it all in the background. You don't have to constantly switch between enjoying a game and making flashcards.

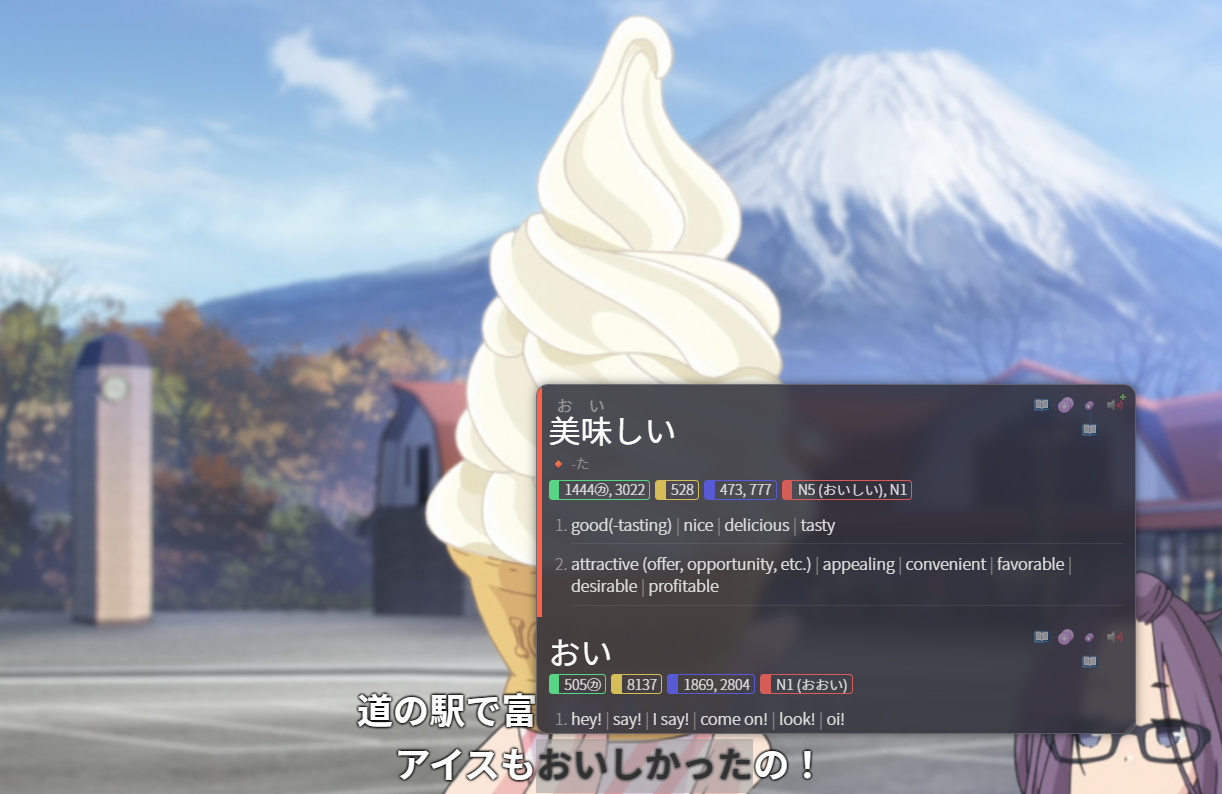

This is a really fast OCR that works anywhere on Windows, Linux or Mac.

It's super simple to set up and use, and it works similarly to GSM's "hover over the word to see the meaning".

The only downside is that you can't mine to Anki with it, however it is extremely simple to use, fast, and works on anything on your screen (even Windows settings) so for that reason it's winning second place.

Yomininja is another tool similar to GSM.

It uses OCR to scan the screen and lets you look things up:

It's a lot simpler than GSM, but in my opinion it's not as pretty.

GSM doesn't highlight boxes red by default, and you can hover over the words and see the definition above them as you read it.

With Yomininja there's this extra box on the side you have to read.

Not to mention the fact that GSM lets you easily make flashcards with the audio and a gif from the game itself.

Still, Yomininja is extremely easy to use and a fan favourite.

GSM is really, really good for visual media on a computer.

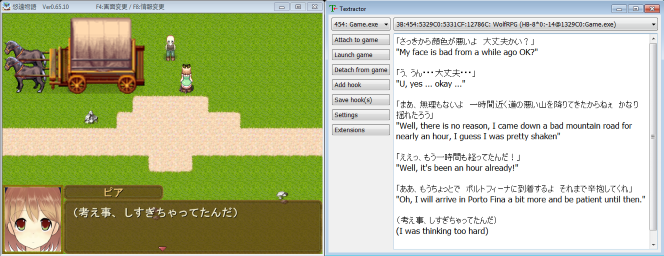

But when it comes to visual novels, we can use texthookers.

Texthookers work with the overlay just like OCR does with games.

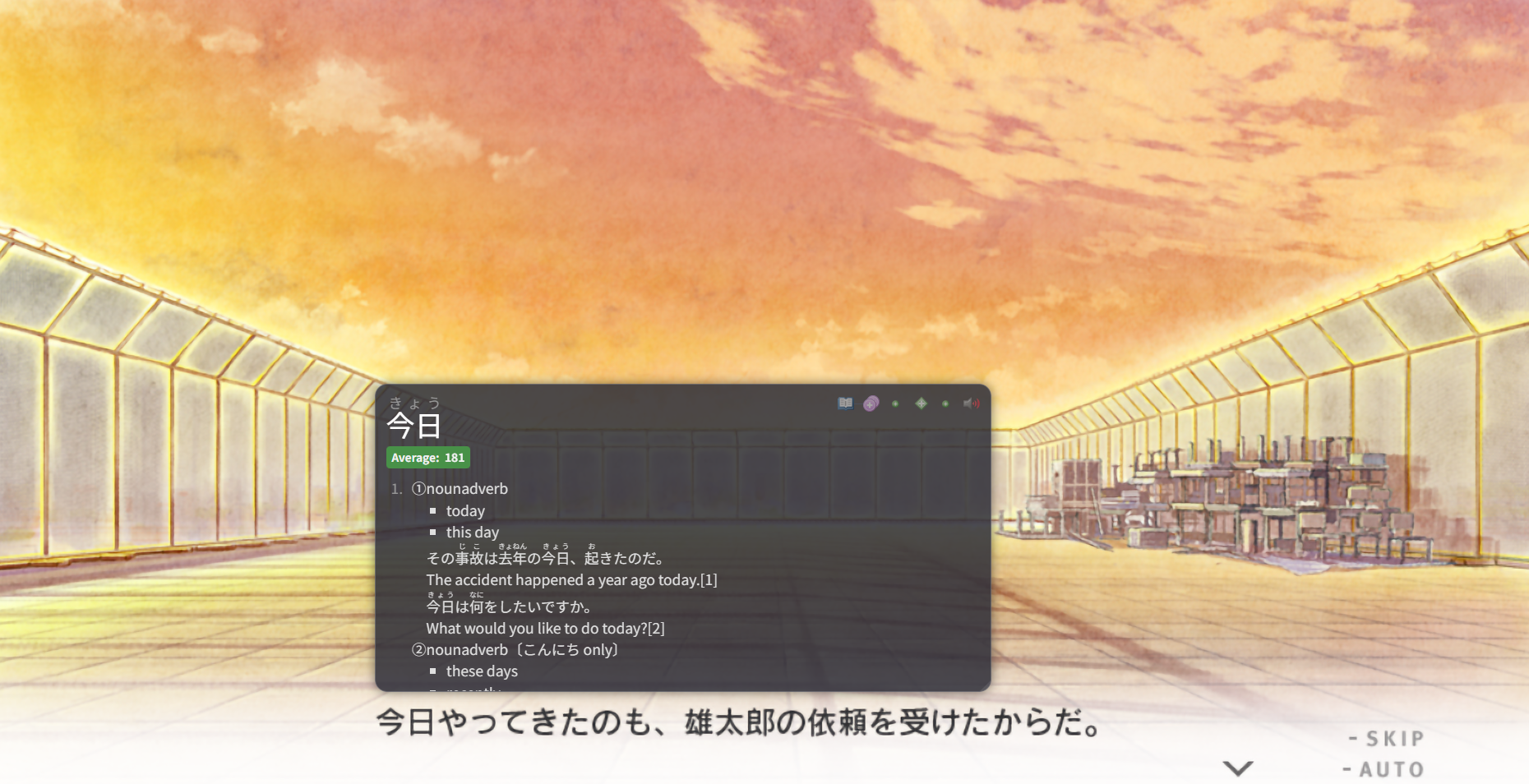

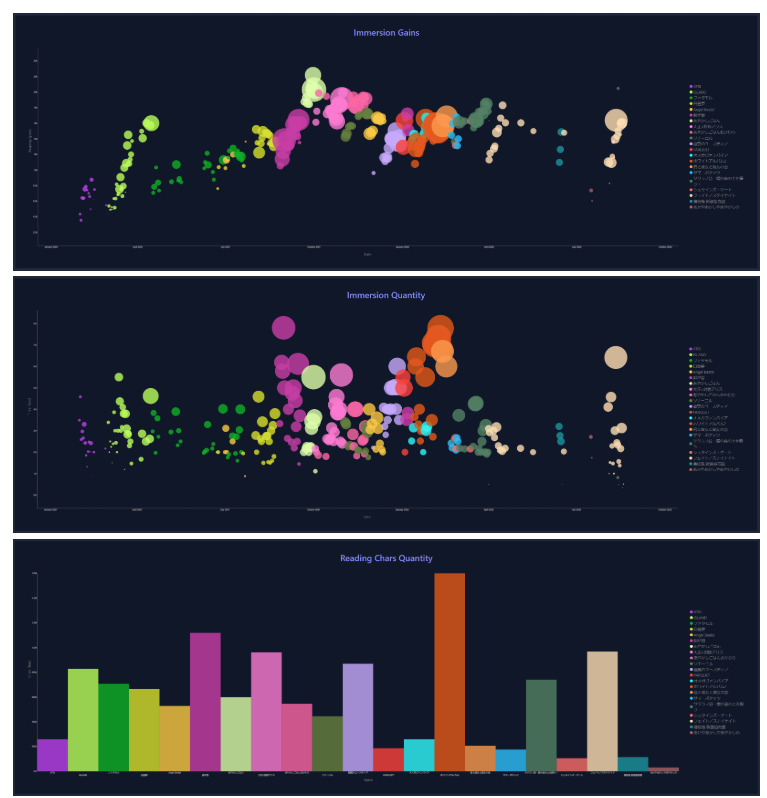

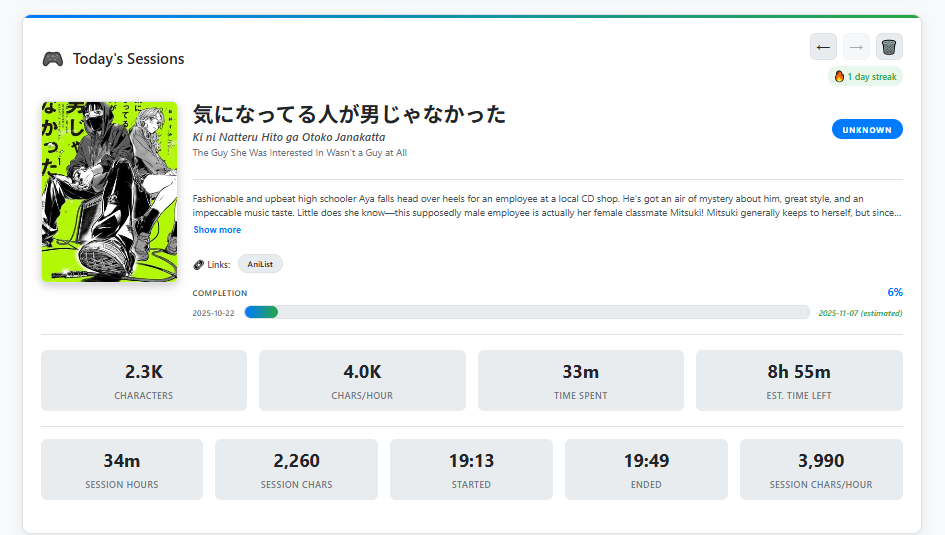

Let's explore an under-rated feature in GSM, as our next tool will have this too – stats.

GSM has over 35 charts related to statistics about everything you read, designed to help you answer questions such as:

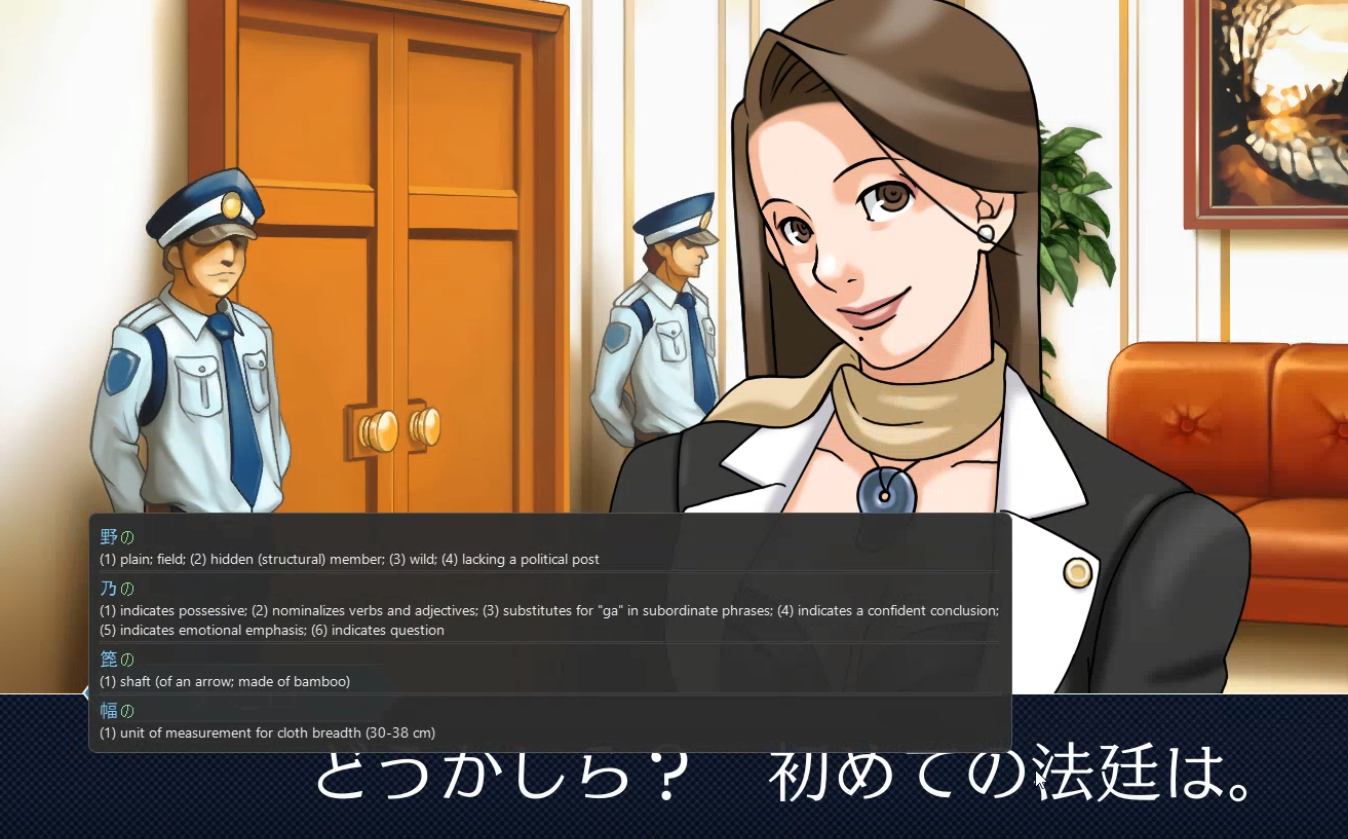

JL is an alternative Japanese dictionary program.

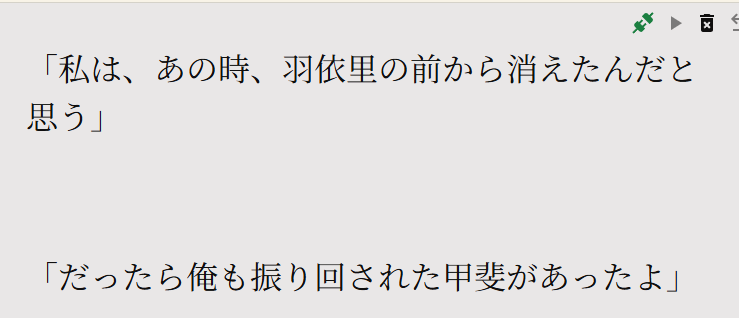

It works really, really well for visual novels.

I wrote extensively about it here:

But in short:

I like how you can put the textbox over the visual novel, which lets you do something similar to GSM's overlay.

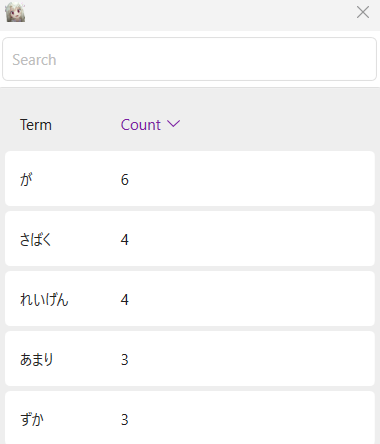

JL also has some stats:

And even more excitingly, they have stats on how many times you looked up a word, something that no other dictionary app has.

Texthookers are lil programs that "hook" into visual novels or some games, take the text on your screen and give it to you.

They are faster and more accurate than OCR, but sometimes awkward to use.

By far the best texthooker out there.

It works on everything I try in terms of visual novels.

It can hook emulated devices such as PSP, PS2, and the Nintendo Switch.

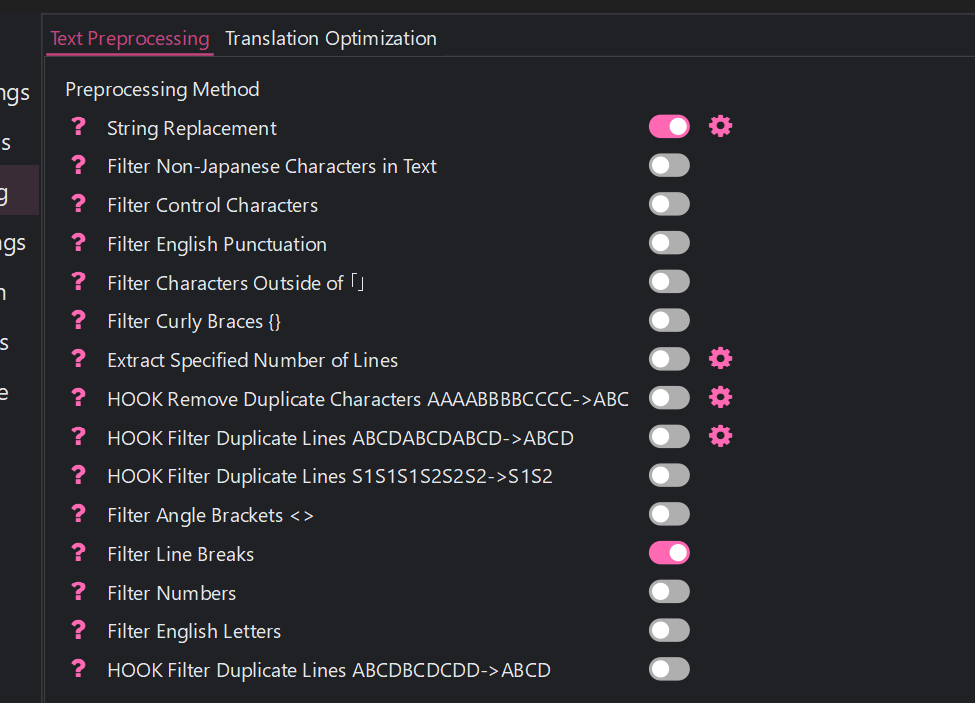

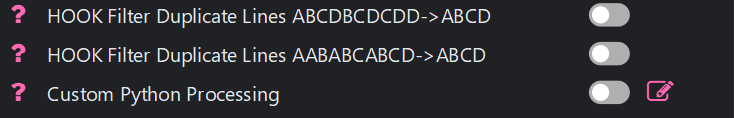

If you find a "bad" hook (one that has a lot of junk) there's a million things you can do to make it more normal.

Like filtering out curly braces, filtering out non-Japanese text, etc.

If this doesn't help you, you can even write a Python file to preprocess the text!
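For a flavour of what that kind of clean-up looks like, here is a generic Python sketch that strips curly-brace blocks and then drops anything that isn't Japanese. It is not Luna's actual preprocessing API (the function name and the exact Unicode ranges are my own assumptions), just the sort of logic you'd put in such a file.

```python
import re

# Anything wrapped in curly braces, e.g. engine control codes like {ruby}
BRACE_BLOCK = re.compile(r"\{[^{}]*\}")

# Everything outside these ranges is removed: CJK punctuation, hiragana,
# katakana, common kanji, and full-width forms
NON_JAPANESE = re.compile(
    r"[^\u3000-\u303F\u3040-\u309F\u30A0-\u30FF\u4E00-\u9FFF\uFF00-\uFFEF]"
)


def clean_hook_text(raw: str) -> str:
    """Remove brace-delimited junk, then any non-Japanese characters."""
    text = BRACE_BLOCK.sub("", raw)
    return NON_JAPANESE.sub("", text)


print(clean_hook_text("{ruby}こんにちは、world世界!"))
```

The order matters: removing the brace blocks first means Japanese text inside a control code is thrown away along with it instead of leaking into the output.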

On top of this, Luna supports much more than just hooking.

But there are some rumours that the author has stolen the code of other people and rebranded it as their own (I have not found evidence of this, please let me know in the comments if you have proof).

Also, when writing code people often tell you what has changed since the last release.

The Luna author does not do this, it's kind of confusing to figure out what's been added or removed.

This led to people not trusting them.

On top of this, it supports a lot, like translation, Yomitan, Japanese parsing, etc.

This led to someone forking the code and creating their own version, removing all of this and keeping just the hooking part.

Auora is a cracked dev; if you spend any time in Japanese Tool GitHub™️ you will see them.

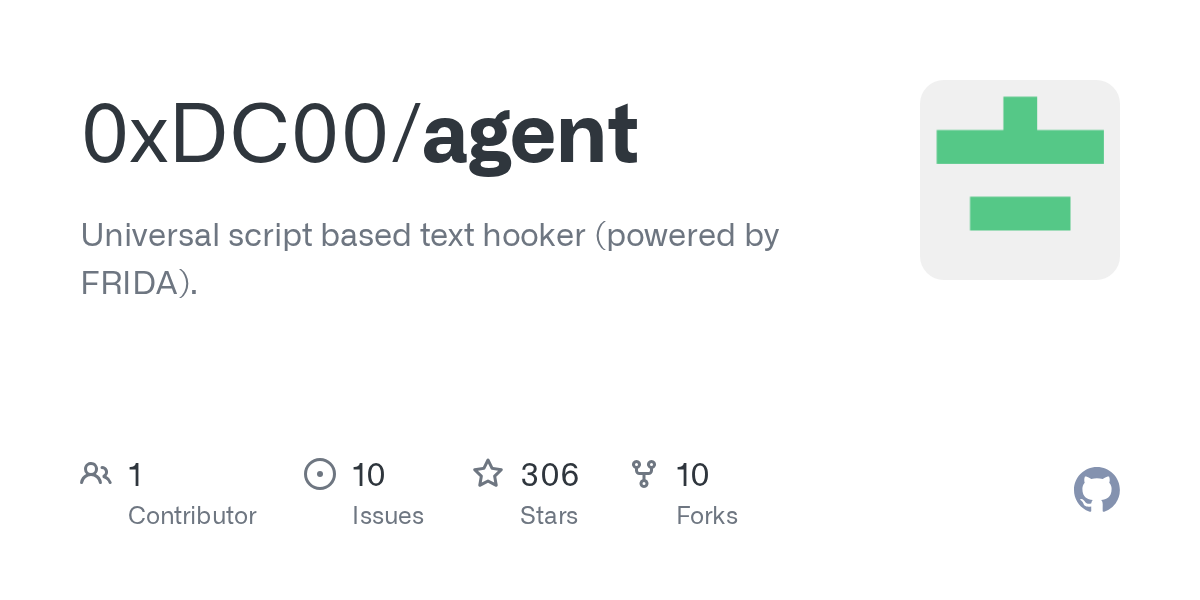

Agent is a much simpler texthooking program.

It has a database of hooks, and you click on the game or visual novel you want to play.

You then have a perfect hook, it's not dirty and works first time without much issue.

The problem is that it doesn't have hooks for all games and visual novels, yet.

Chen's Textractor works similarly to Luna and Agent, closer to Luna in the sense that you find the hook yourself.

It's most similar to Textractor (it's a fork), which is an older texthooking program, so many people love this as it's similar to what they already use and love.

Let's say you want to use Yomitan to mine from games / visual novels without GSM / JL or Yomininja etc.

Yomitan is browser only.

You need to take the text from OCR / Texthooking and place it onto a webpage to look up words.

There's some opinions about these, so let's list the most popular ones.

Kizuna is a texthooking page based on another one by Renji.

Every time your texthooker receives a line of text, it sends it to this page.

Here you can use Yomitan to look up words.

Kizuna is special because it's a social texthooking page.

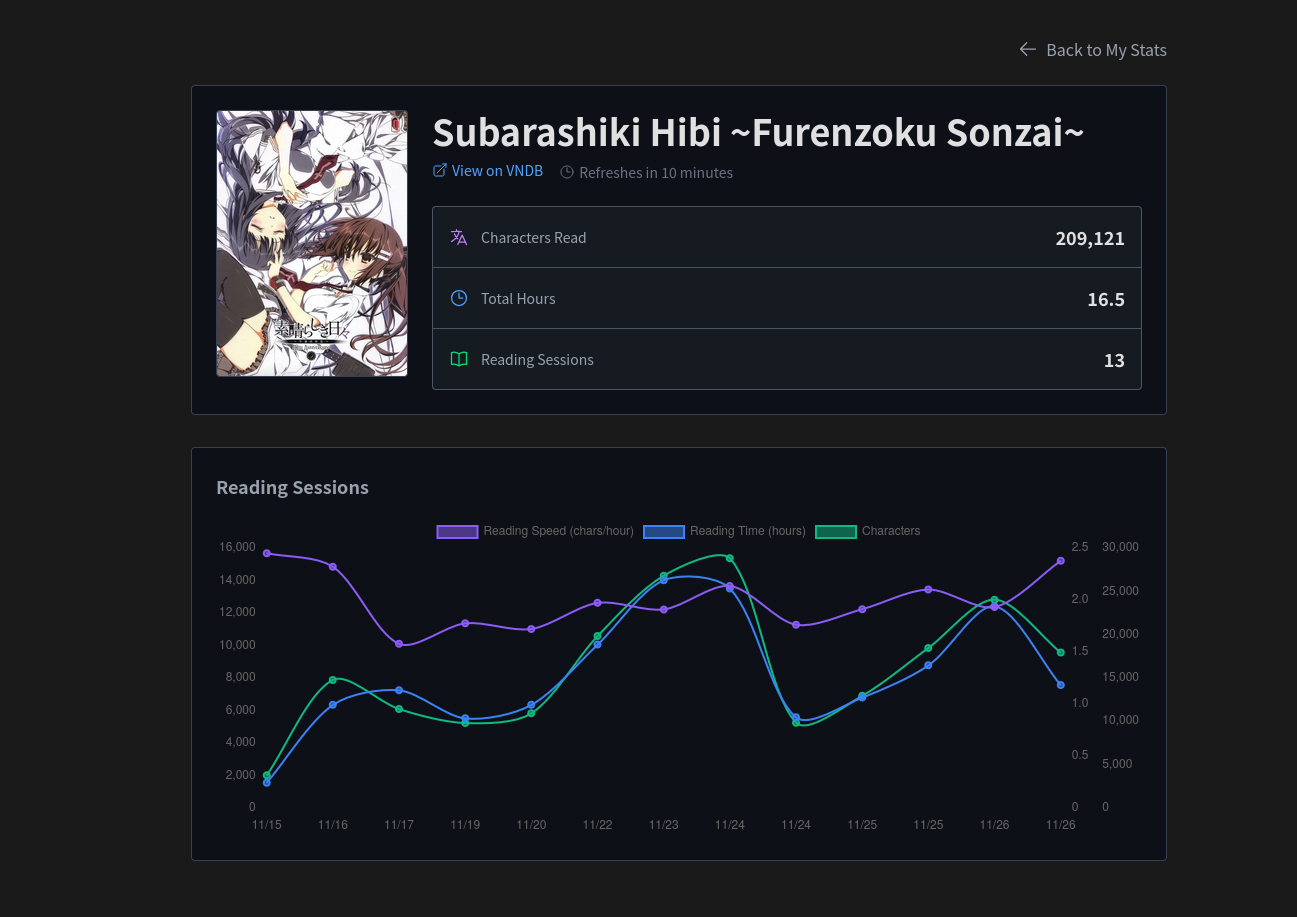

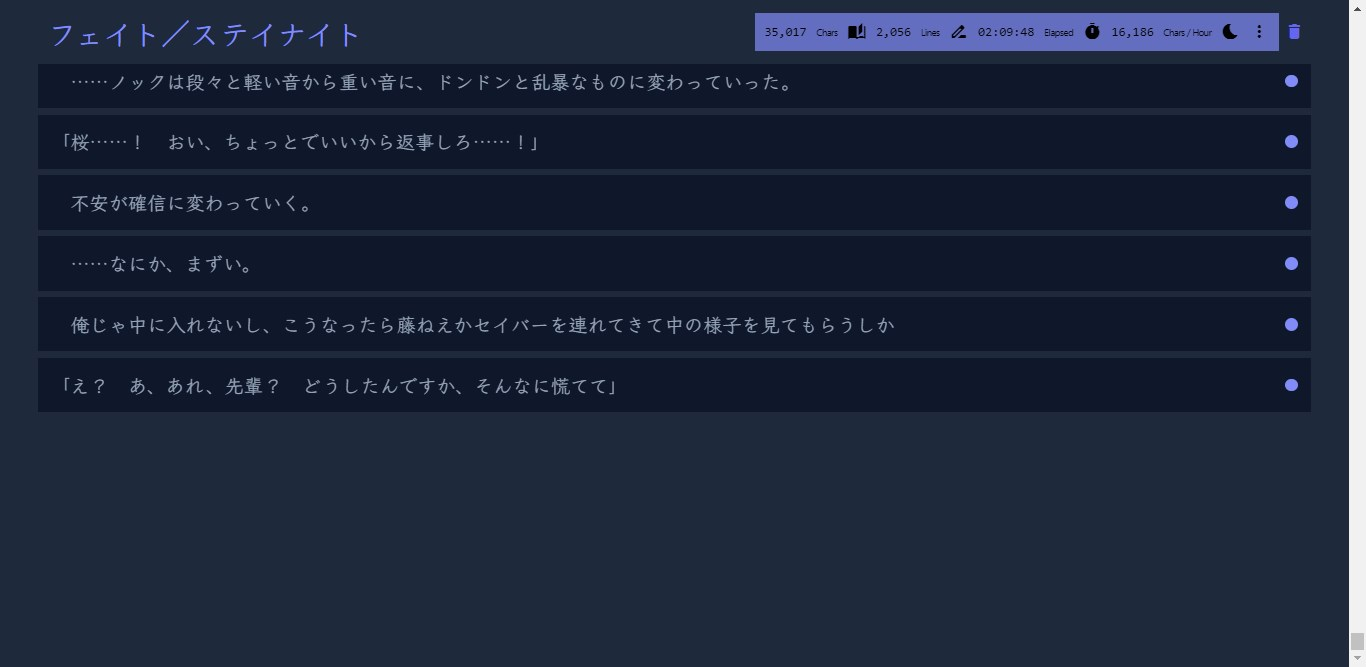

It records characters read and time spent per visual novel:

And you can create "rooms" with your friends to compare your stats together and motivate each other:

Its three main downsides are that it is focused primarily on Japanese visual novels, it's not open source, and it doesn't let you export your data.

Renji's page inspired Kizuna's.

It's a simple texthooking page that can be downloaded and run entirely locally, with its source code published online.

It looks pretty much exactly the same:

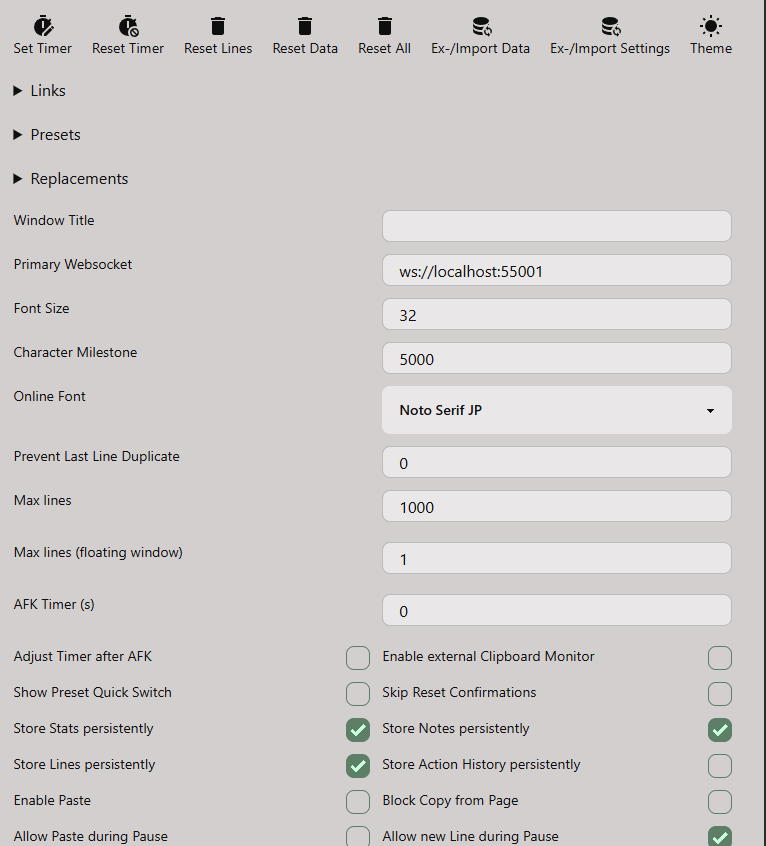

Many people use Renji's because it's lightweight and has many settings to allow you to configure things.

Maybe too many settings.

Because Renji's texthooker is open source it is often bundled into other software like Game Sentence Miner which has added a few specific features.

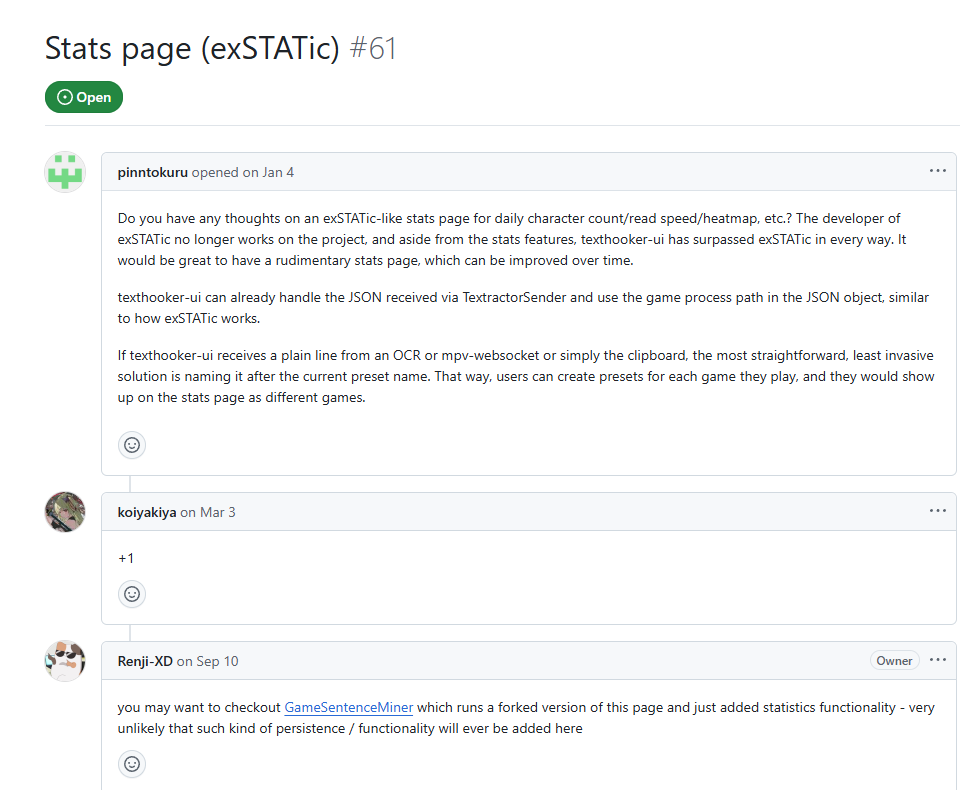

While Kizuna has stats, Renji's has none beyond characters read and time spent.

The author, Renji, actually suggests people use GameSentenceMiner for stats with their texthooker:

You may have noticed in the previous paragraph someone mentioned ExStatic.

This is another texthooking page but with a lot more stats.

But in terms of functionality of the page itself, it's lacking some things that Renji's has.

Still, many people use ExStatic as an easy way to get beautiful stats. It is open source and can be run entirely locally, without an internet connection.

Mokuro is a file format for manga. It's basically an HTML overlay over a bunch of manga images that lets you look up the words in each manga panel using Yomitan.

The overlay is generated by OCRing the manga.

Mokuro Reader is the app that lets you read these files:

The main problem with this is finding manga.

You have to find a way to buy the manga, get the raw images, and process it. There are less than legal ways to do this, but I won't talk about that here.

You can store all of your manga in the cloud along with progress etc and easily read from any device (and use Yomitan on Android if you want to mine):

When you read manga, since it is in the browser, you can use Yomitan to look things up:

For reading it has some cool features, like a night mode to block out blue light to make night reading easier:

And a million other minor settings to alter how you read.

Of course it also has Anki support. Make a word card using Yomitan and then double click the screen to grab an image.

There's even stats!

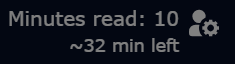

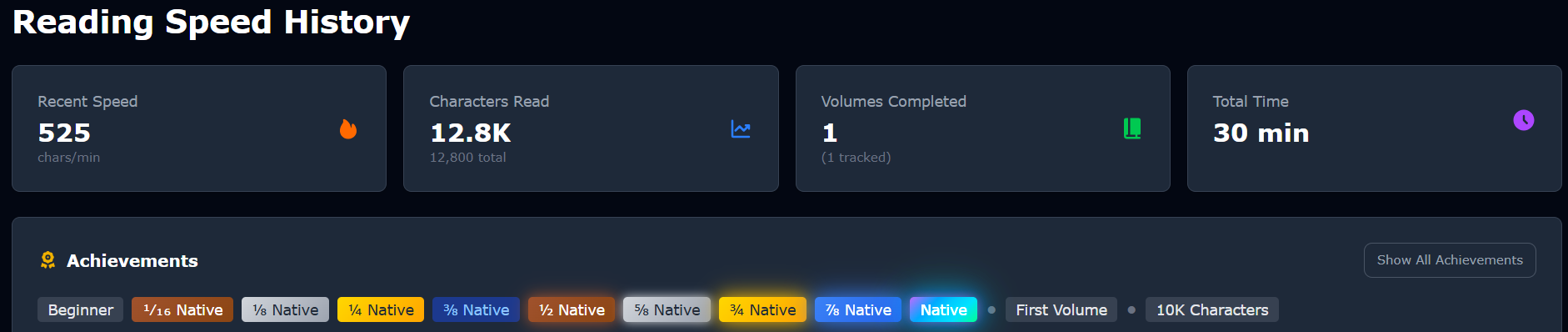

In the manga itself you can see how long it'd take to read your current volume:

The stats are visually appealing

With cool per volume / series data:

My main complaints are:

Mangatan was born out of anger.

Anger at Mokuro files being terrible to create.

Anger at current manga websites shoving 10 ads / second down your throat.

Hear it from the creator:

We can just drag and drop light novels into ttsu, hook into visual novels with ease, or load up anime with subtitles in ASB or Memento.

But manga? Manga has always been the exception.

The process often meant needing a high-end GPU just to run tools like Mokuro, and then waiting for it to finish

And don't get me started with those terrible websites that use the worst hosts in existence.

Who doesn't miss the old days of simply reading manga in bed? Before we were hardcore weebs, we didn't have to deal with our tenth ad on a limited 100kbit-speed-limited hoster while using Tachiyomi. But you can cast aside that

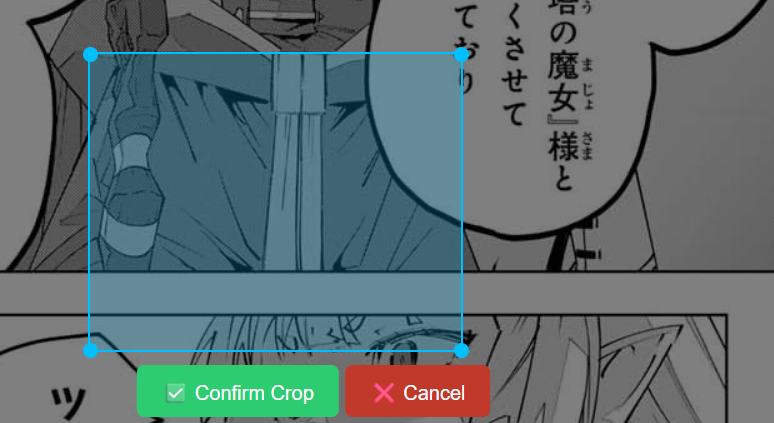

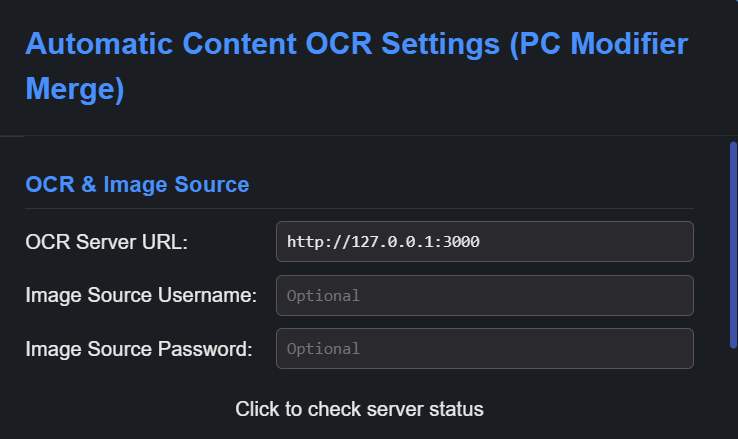

Mangatan aims to get rid of all the pain points of Mokuro:

The setup requires some computer skills, but once it's done, it works alright.

.exe, which would make Mangatan extremely easy to use..exe you can use!

Every time you load a page it will OCR it and let you look things up.

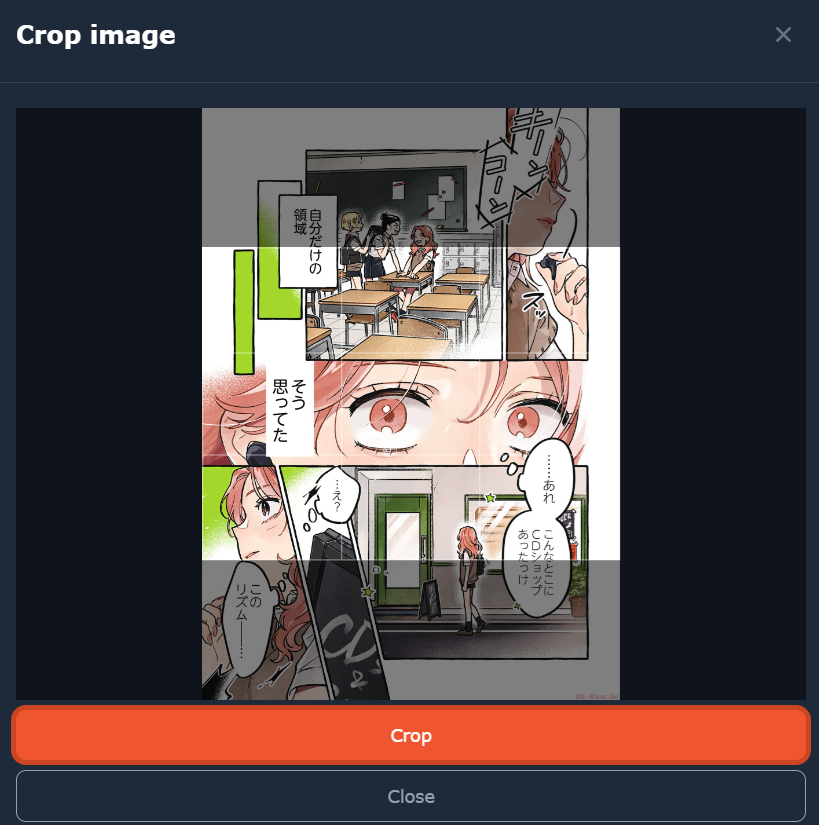

You can even crop images to send to Anki:

It's very lightweight once it's running. All it does is OCR the manga and crop images for Anki cards.

From the author themselves:

There's also a bunch of settings, for example you can use your own OCR server if you want:

I like setting the colour to purple and font colour to black, as it makes it look nicer to me:

There's no stats, but in this case it's a good thing. Mangatan is extremely lightweight.

It also works on Android devices.

You can also use GSM's OCR to read manga if you so wish.

Set up the OCR, and then open the overlay and you can create flashcards etc similar to how Mangatan does it.

GSM even has stats for manga:

But the downsides are that GSM isn't made for manga.

When it takes a screenshot, it can't crop the manga like Mokuro does (due to it being built for games / full screen things).

Look at this Anki card:

The image has black bars around it, whereas if it was cropped it would not.

If you already have a good GSM setup, it makes sense to use this. But if you want a really good manga setup, maybe this isn't so good.

It's also not as automatic as Mangatan: if you want nice stats, you need to tell GSM you're reading something new.

Mangatan can only use Suwayomi whereas GSM can OCR any type of manga. But, Suwayomi has all the manga already so it's not that much of an improvement.

Also, GSM does not work on Android unlike Mokuro Reader and Mangatan.

Most people get into learning Japanese because of anime, so now let's talk about the best video players out there!

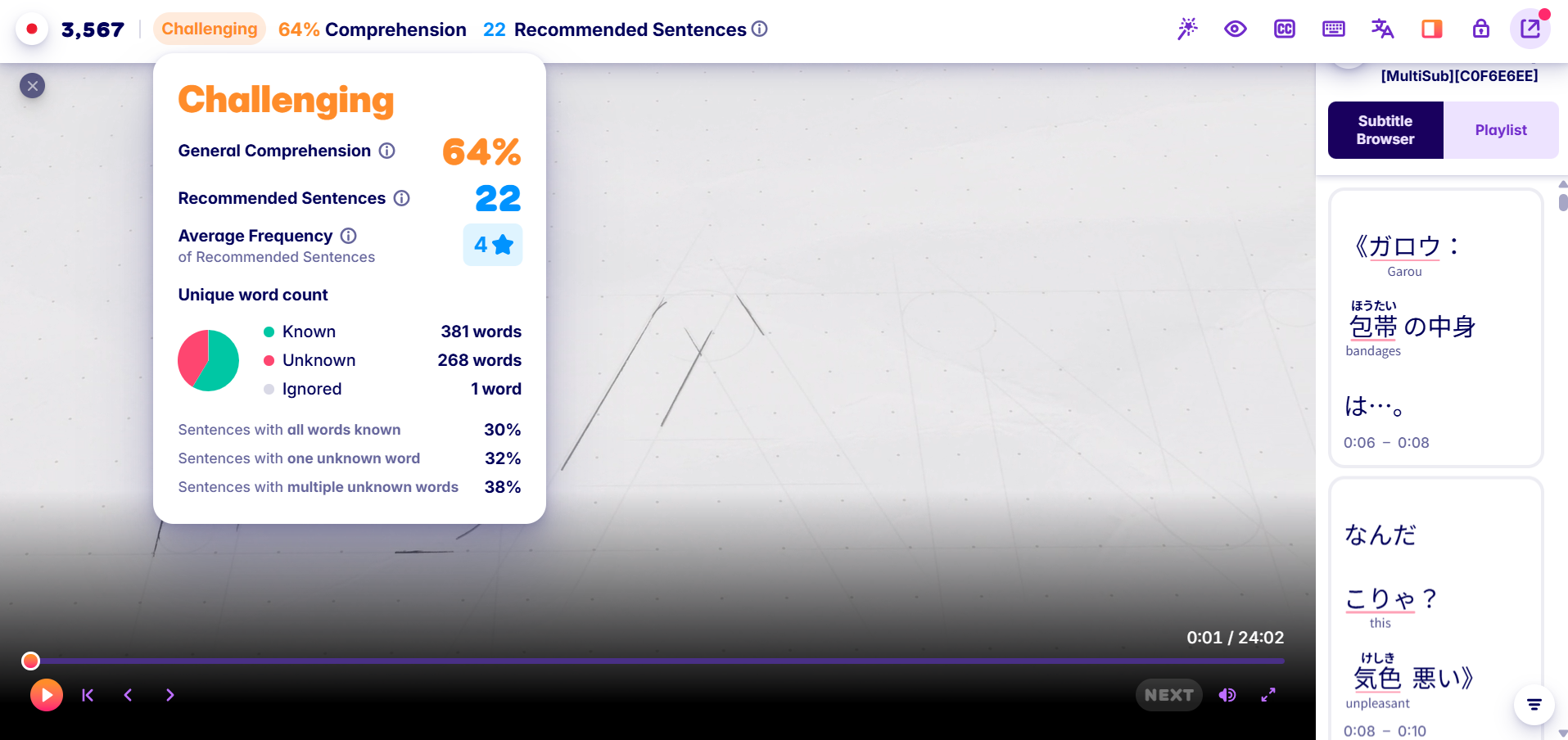

Despite being a paid product, I believe Migaku offers the best service for watching videos.

Firstly let's look at this.

Migaku shows you an estimated comprehension score for the video you want to watch. It uses the frequency of the words and your known words to calculate this.

It's not as simple as "you know these words, you don't know these" – it uses an algorithm to work out the average frequency of words you do and don't know and uses that to calculate how hard a piece of media is.

For example, if the words you don't know are very high frequency, it will be rated a lower difficulty than a video with a bunch of rare words you don't know.

If the show doesn't have subtitles, you can also generate them using the top bar.

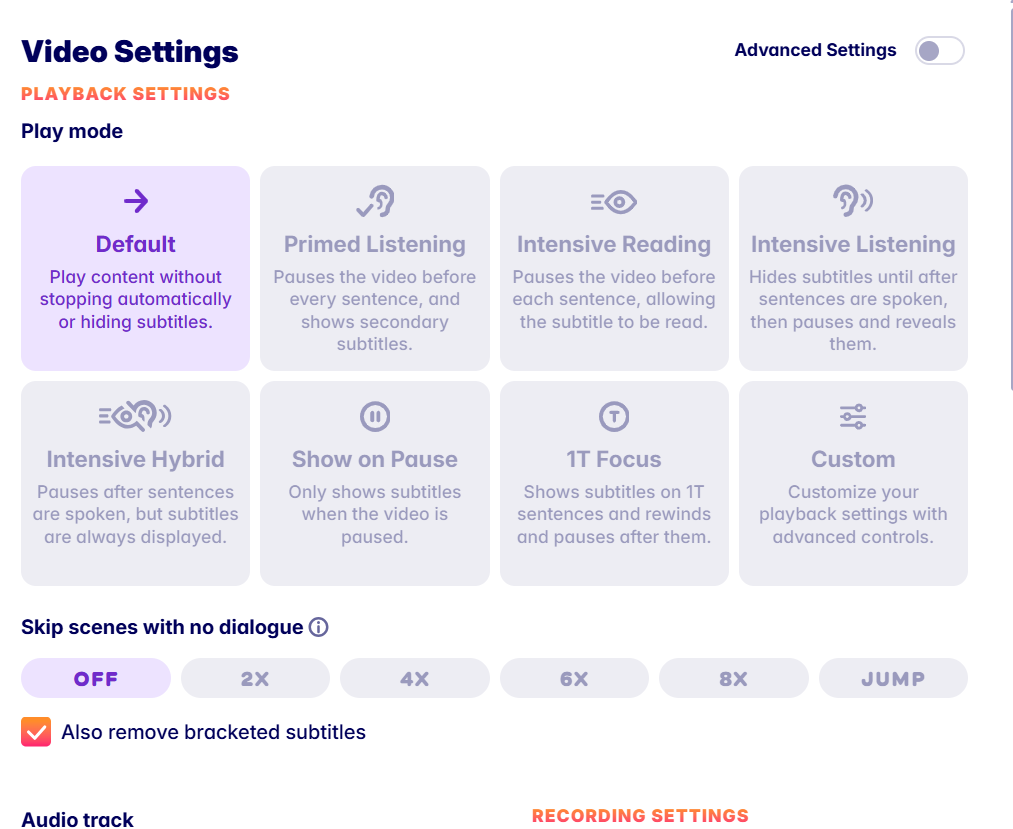

Migaku has about a million different presets for you to watch videos, or you can make your own:

As well as keyboard shortcuts so you don't even have to use a mouse:

If the UI is too cluttered you can just hide everything apart from this tab:

Migaku will highlight high-frequency words in sentences that are good to mine, and you can even tell it to include a lil single definition under the word to help you read the sentence:

Migaku works on Netflix and Disney+, but all of these screenshots use their local player. This is just a DVD I'm playing 😃

Its main downside is that it costs money, but I believe Migaku is easily the best video player out there in terms of features and ease of use.

But... It does cost money and do you really need those extra features? It's up to you.

ASB Player is the GOAT of free video players for Japanese.

It's offline.

It works with Netflix, YouTube etc.

It extracts subtitles from them.

You can mine from it just like Migaku.

There's really not much to it. It plays videos well, has great subtitle support and lets you mine from it.

Which is why it's so good and well loved, it does one thing and does it well.

However, compared to Migaku it is missing a few features some people may want:

But if you don't care for those features, ASB Player is great.

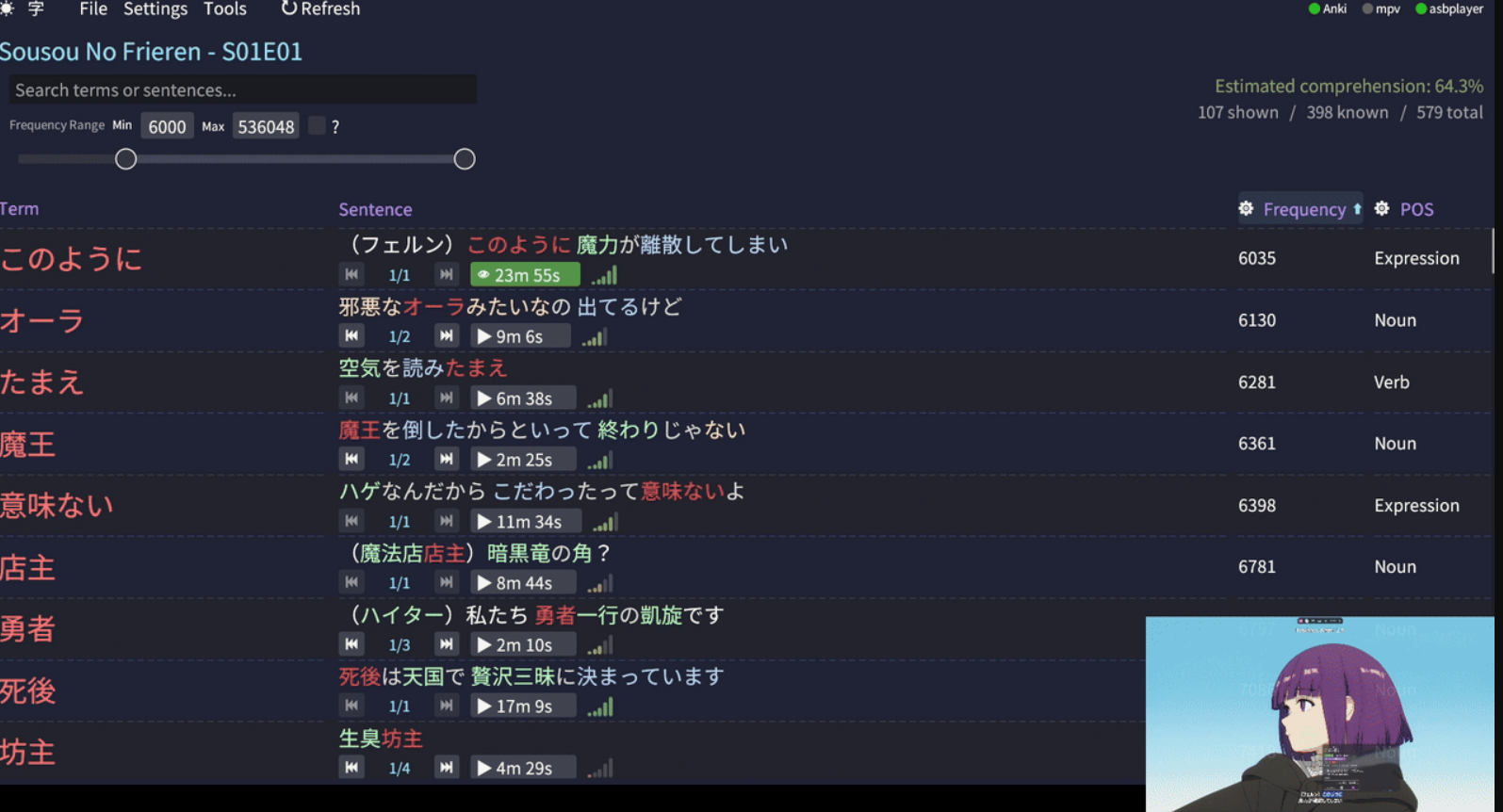

Yomine is a relatively new player in the field, and not actually a video player but something that supports video players.

To use their words:

A Japanese vocabulary mining tool designed to help language learners extract and study words from subtitle files. It integrates with ASBPlayer and MPV for timestamp navigation, ranks terms by frequency, and supports Anki integration to filter out known words.

So it's kinda like the Migaku "you should mine this word" feature, but for ASB Player and free.

It basically extracts all the words from a video, checks to see if you have them in Anki and sorts them by a frequency.

From this you can then click a button to mine that word.
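The "filter out what you know, sort by frequency" idea can be sketched in a few lines of Python. This is not Yomine's real code: the function name, the plain word lists and the `frequency_rank` dict are all assumptions for illustration, and the real tool also has to tokenize Japanese and talk to Anki.

```python
def rank_unknown_words(words, known_words, frequency_rank):
    """Hypothetical helper: unknown words, most common first.

    words          -- every word pulled out of the subtitle file
    known_words    -- set of words already in your Anki deck
    frequency_rank -- dict of word -> rank (1 = most common)
    """
    unknown = {w for w in words if w not in known_words}
    # Words missing from the frequency list sort to the end.
    return sorted(unknown, key=lambda w: (frequency_rank.get(w, float("inf")), w))


subtitle_words = ["食べる", "猫", "食べる", "曖昧", "走る"]
in_anki = {"猫"}
ranks = {"食べる": 300, "走る": 800, "曖昧": 9000}

print(rank_unknown_words(subtitle_words, in_anki, ranks))
```

With this toy data, 猫 is dropped because it's already known, and the rest come out in order of how common they are.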

Here are some interesting ways people use it, which may not be obvious:

mp4 recording of a game you're playing with a subtitles file), loading it into Yomine and mining all the words you didn't mine during that playthrough. Useful if you want to play now, mine later.

Now let's look at some of the best websites out there.

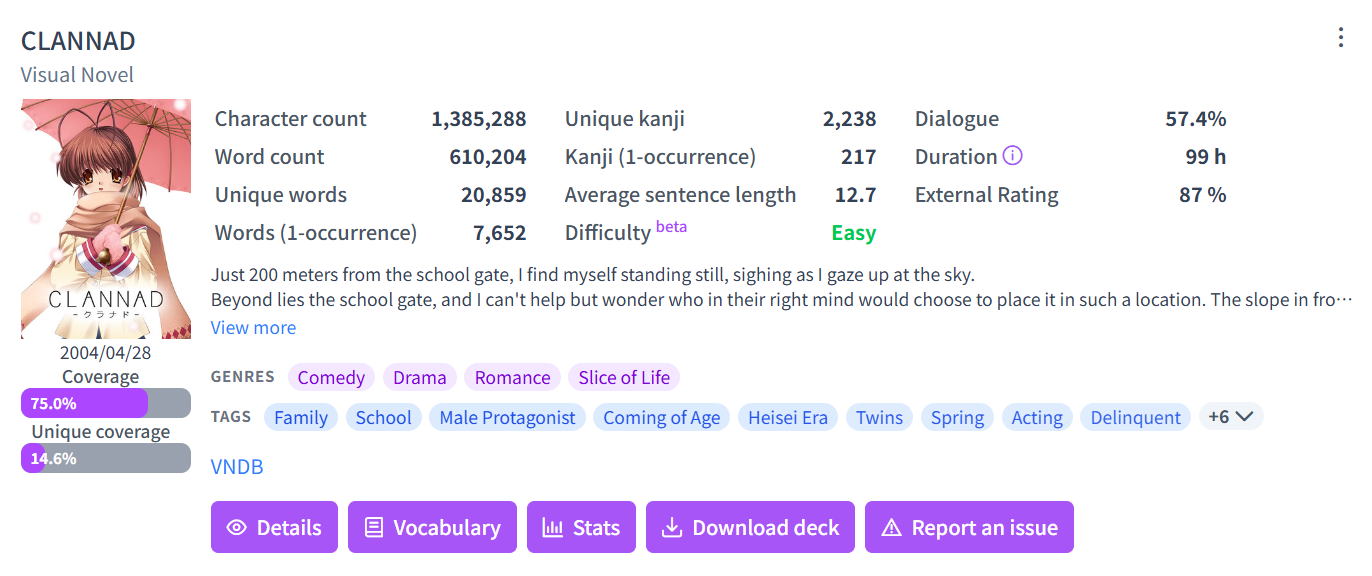

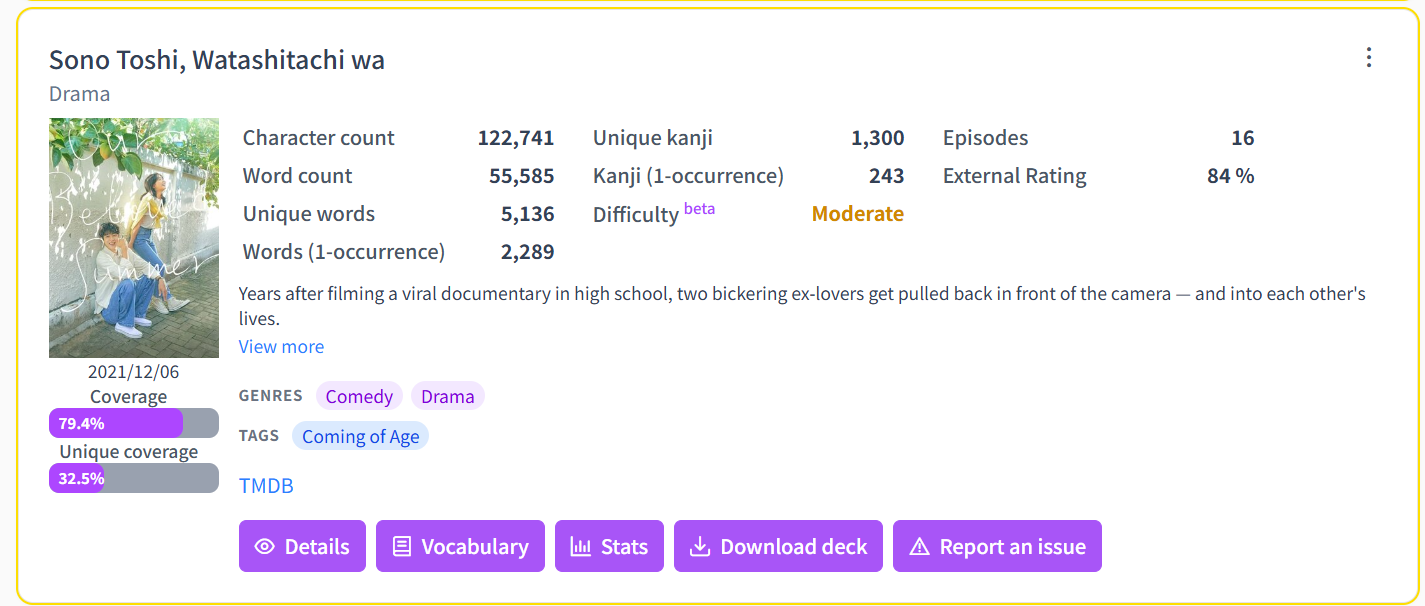

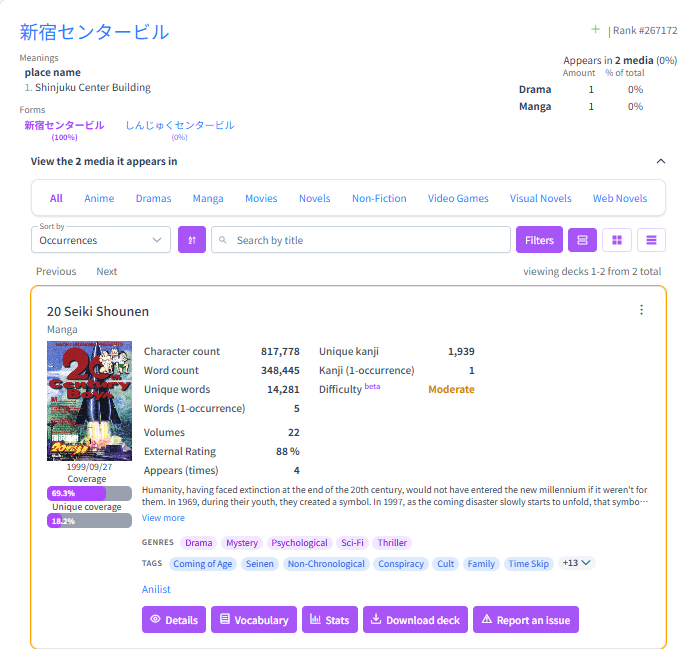

Jiten is a website that catalogues thousands of pieces of Japanese media and tells you a rough difficulty for them, among other things.

They support all sorts of media, not just visual novels or anime.

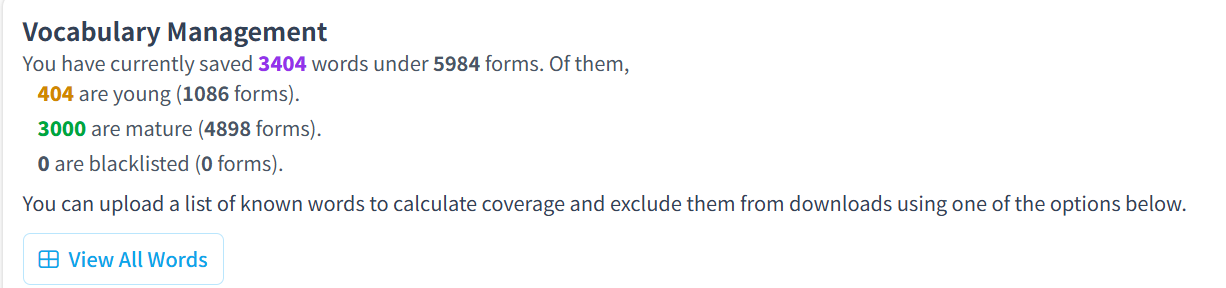

In Jiten you can upload a list of vocabulary you know (syncs with Anki and other tools):

Jiten can then rank how many words in the media you know, and tell you a personalised coverage score.

Something I like to do is find visual novels with >80% external rating (external rating == reviews on vndb, anilist etc) which I have >80% coverage for (meaning I will understand most of it).
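If you're wondering what a coverage score means in practice, here's a minimal sketch. I don't know the exact formula Jiten uses, so this assumes the simplest version: the share of all word occurrences in the media that are words you already know. The function name `coverage` is made up for this example.

```python
from collections import Counter


def coverage(tokens, known_words):
    """Assumed definition: known word occurrences / all word occurrences."""
    counts = Counter(tokens)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    known = sum(n for word, n in counts.items() if word in known_words)
    return known / total


script = ["私", "は", "猫", "が", "好き", "猫", "は", "可愛い"]
my_words = {"私", "は", "猫", "が"}

print(f"{coverage(script, my_words):.0%}")
```

Six of the eight words in this toy script are known, so it prints 75%. A site could also count unique words instead of occurrences, which would give a different number.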

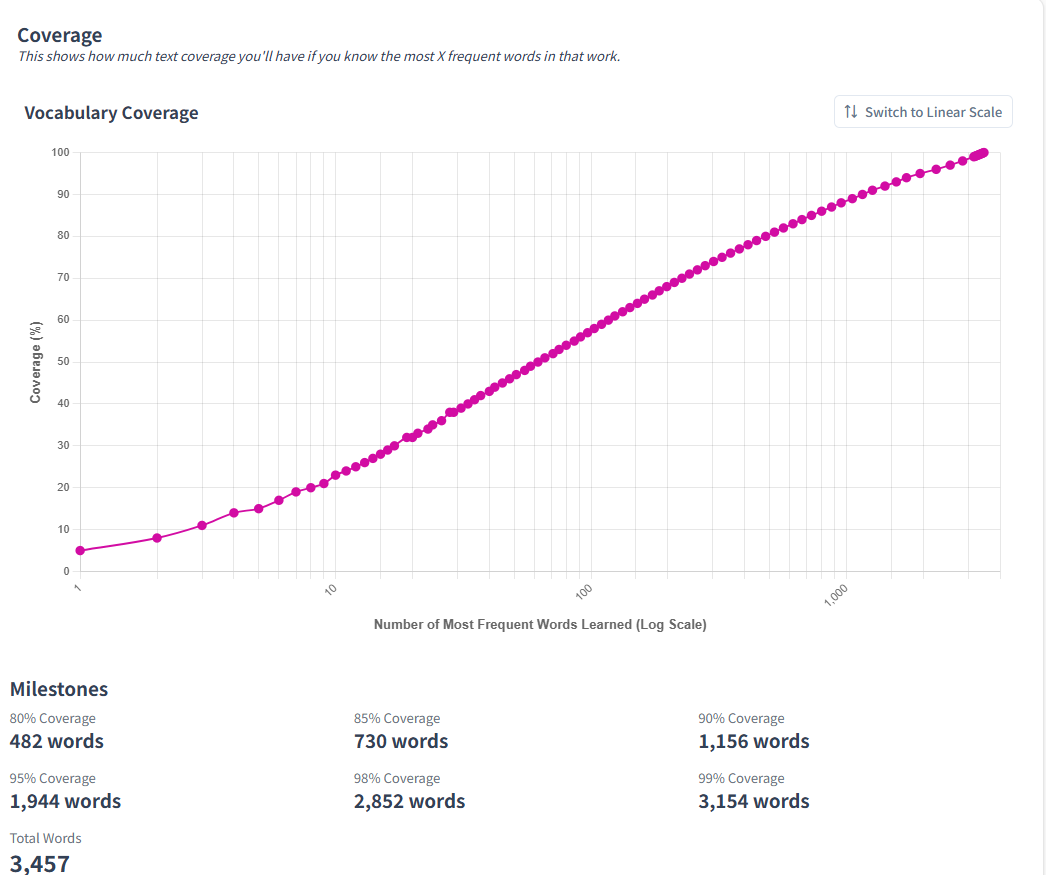

Once you've found a piece of media you like, hit "statistics" and see a cool graph.

For example, to understand 95% of this anime you just need to know the 1944 most frequent words that appear in it.

To understand 99%, you need to know an extra 3200ish.
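The numbers on that graph come from a cumulative count: sort the words by how often they appear, then keep adding words until you hit your target. Here's a rough sketch of that idea, assuming coverage is counted per word occurrence (the function name `words_needed` is hypothetical, and Jiten's own calculation may differ).

```python
from collections import Counter


def words_needed(tokens, target):
    """How many of the most frequent words reach `target` coverage (0 to 1)."""
    counts = Counter(tokens)
    total = sum(counts.values())
    covered = 0
    # Walk the words from most to least frequent, adding up occurrences.
    for needed, (_, n) in enumerate(counts.most_common(), start=1):
        covered += n
        if covered / total >= target:
            return needed
    return len(counts)


# Toy "anime script": a appears 5 times, b 3 times, c and d once each.
toy_tokens = ["a"] * 5 + ["b"] * 3 + ["c", "d"]

print(words_needed(toy_tokens, 0.75))
print(words_needed(toy_tokens, 0.95))
```

In the toy data, two words already cover 75% of the text, but you need all four to get past 95%. That's the same shape as the real graph: the last few percent cost a lot more words.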

Learning 1944 of the words in this anime seems great, but how do we actually learn them?

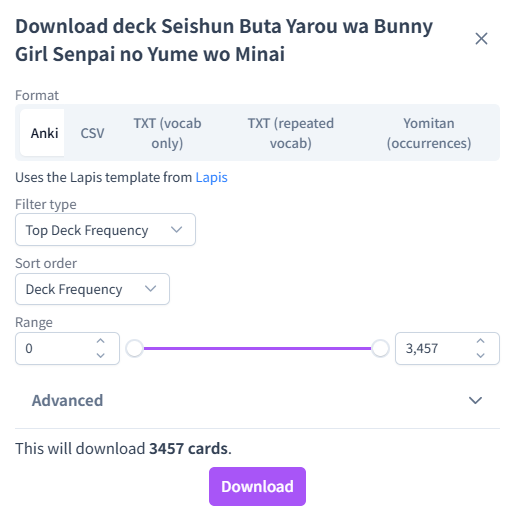

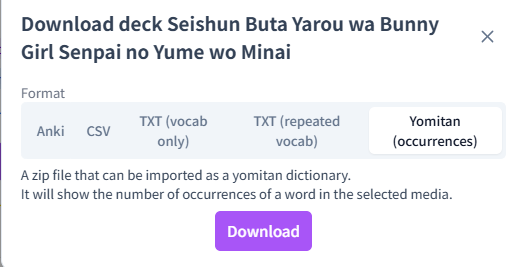

No worries, Jiten lets you download Anki decks with the exact freq order of that show. Learn the words you need to know for the media you want to watch.

If doing premade Anki decks isn't your thing, download the "occurrences" dictionary to get a frequency list you can use in Yomitan to tell you how often a word appears in the show.

That way I can mine high-frequency words in shows I want to watch, without watching them yet. Almost like I'm prepping for it.

Jiten also has a dictionary you can use. Search a word to see its frequency, and all the media that word is in:

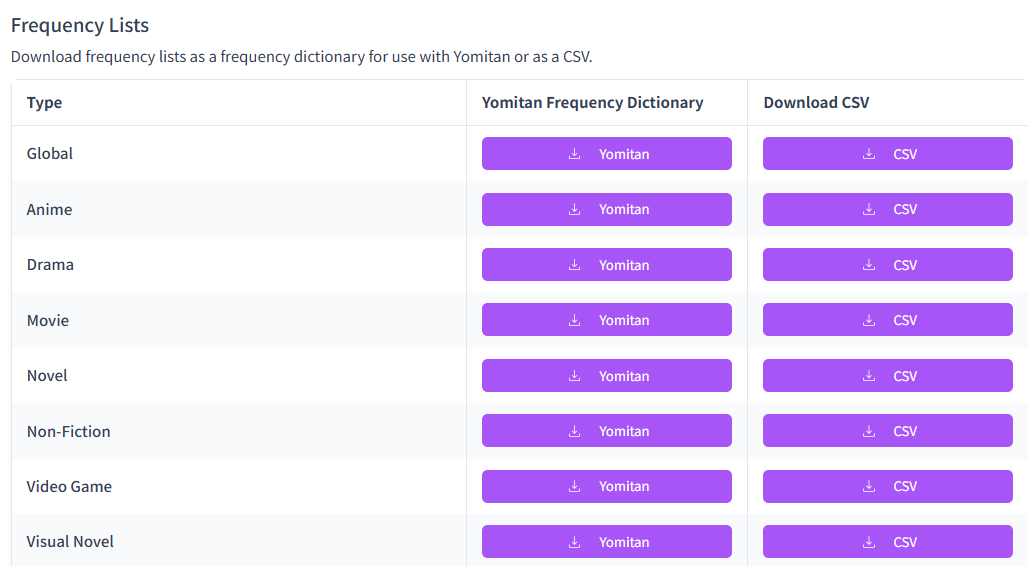

Speaking of dictionaries, you can download global frequency lists on Jiten too:

And finally, Jiten has a lot of data and every single week it is improving.

If you have spent any amount of time in Japanese spaces online you may have heard of "Morg". Especially on Discord or Reddit.

He's a really nice guy who knows a lot about grammar and wants to help you learn Japanese.

This year he took it upon himself to improve the Sakubi grammar guide, and ended up writing Yokubi.

It's a really succinct grammar guide designed to get you immersing ASAP.

Lumie Reader came out hitting this year with a single premise:

What if we made ttsu but good?

Another reading app for Japanese

It's entirely offline, very fast and supports a lot of features.

It has some features over other readers like:

You can tell I don't read books can't ya...

This year has been amazing for Japanese learning tools.

If you want to give back to the community but don't want to code, many of these devs have donation links listed.

What's your favourite tool? Tell me in the comments :) <3

2025-11-13 19:10:45

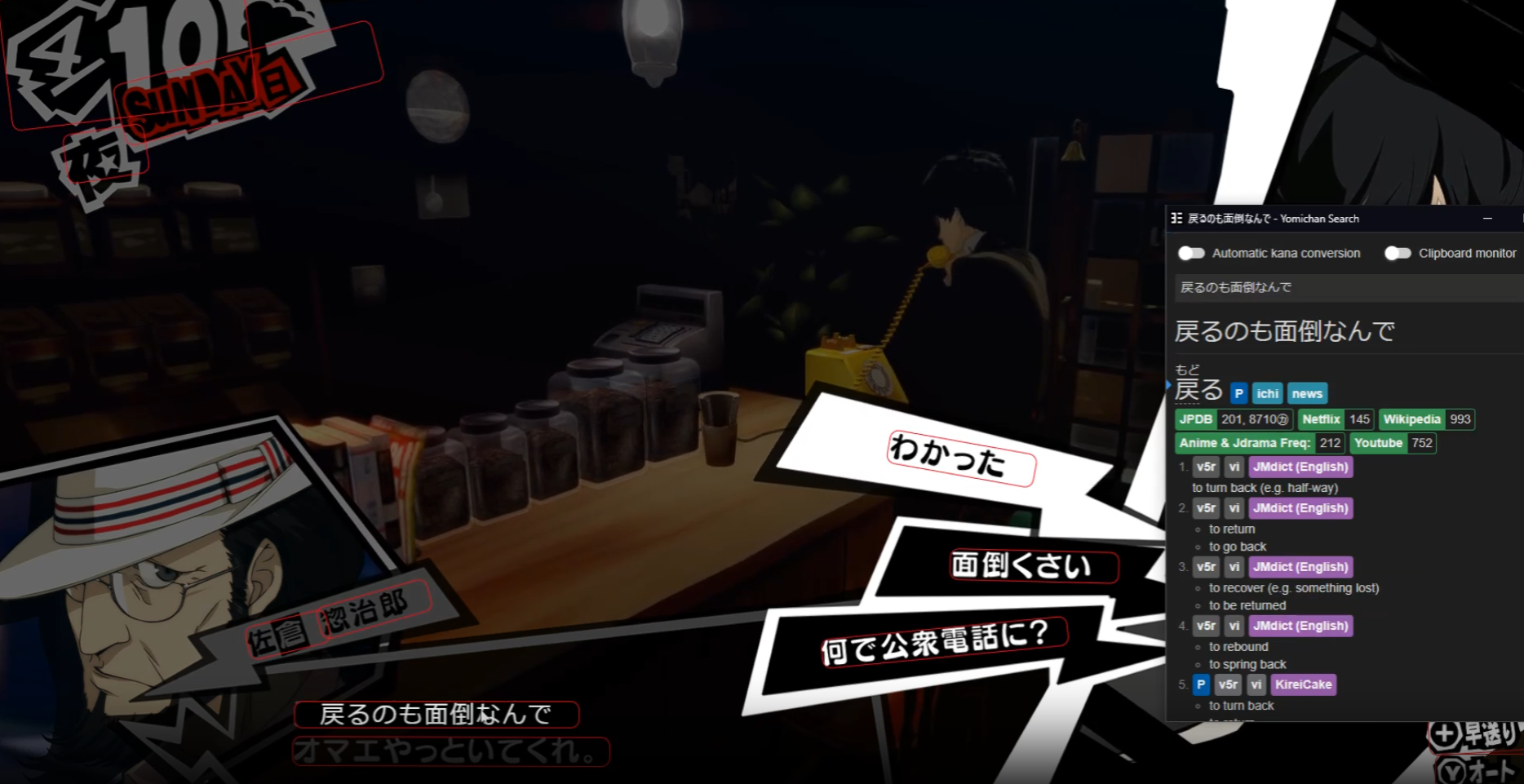

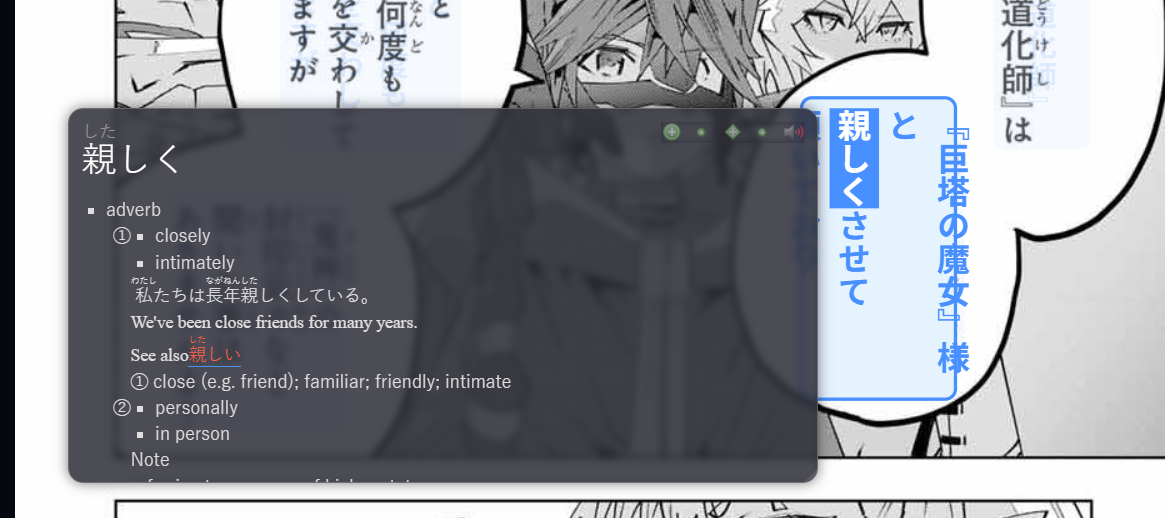

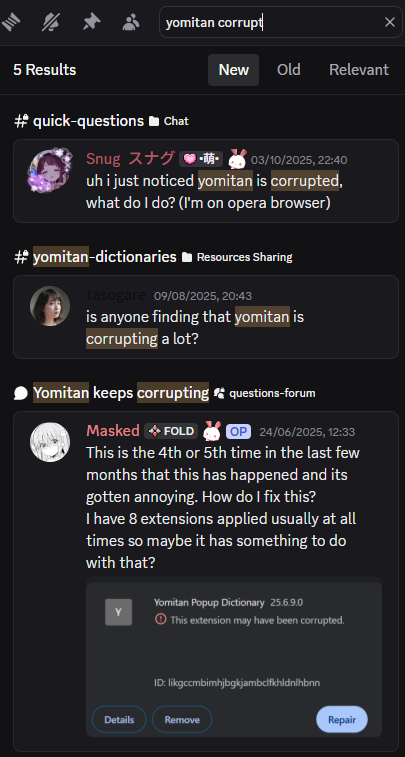

Yomitan is a famous dictionary app people use to learn Japanese.

JL is an alternative desktop dictionary app for Windows.

Let's jump right into it.

Yomitan takes around 6 seconds for me to check duplicates in my mining deck.

My mining deck is only 3500 cards, so if you have a larger deck I imagine it's terribly slow.

Secondly, if you take your Yomitan cursor and drag it across some Japanese text it tends to lag.

That's 6 whole seconds to load Yomitan and run a duplication check.

canAddNotes which is deck specific, Yomitan uses noteinfo which is not deck specific.

You can only use Yomitan with a browser.

Yomitan relies on local storage in the browser.

This corrupts – often.

When Yomitan corrupts you have to reinstall all of your dictionaries and settings.

It's good it's a browser extension as it can work on any platform, but it also comes with downsides.

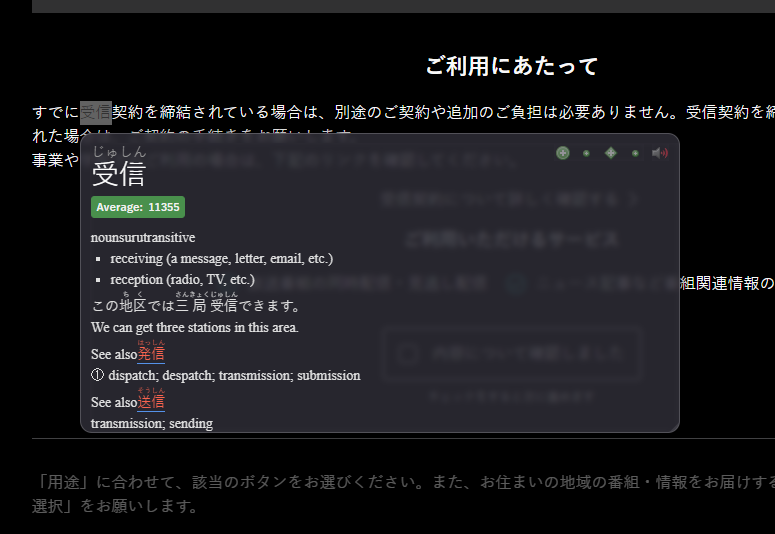

Firstly, let's talk about speed.

JL is really, really fast.

It's actually one of the main reasons you should use it.

Look at this gif. Absolutely no lag. Not to mention that it is technically showing more dictionaries and using a longer scan length (32) than my Yomitan does.

Also, duplicate detection is blazingly fast.

Do you see that small X next to the word? That indicates I can add it to Anki.

If it's red, it means it's a duplicate.

Rewatch the gif. Look at how fast that small red X appears again. Seriously.

This takes minimum 6 seconds in Yomitan for me btw.

It's so unbelievably fast I genuinely have no idea how they are doing this.

I was hesitant to believe they're using AnkiConnect at all, maybe they're talking directly to the DB which would explain why Yomitan is so slow?

But no, they're using AnkiConnect just like Yomitan does.

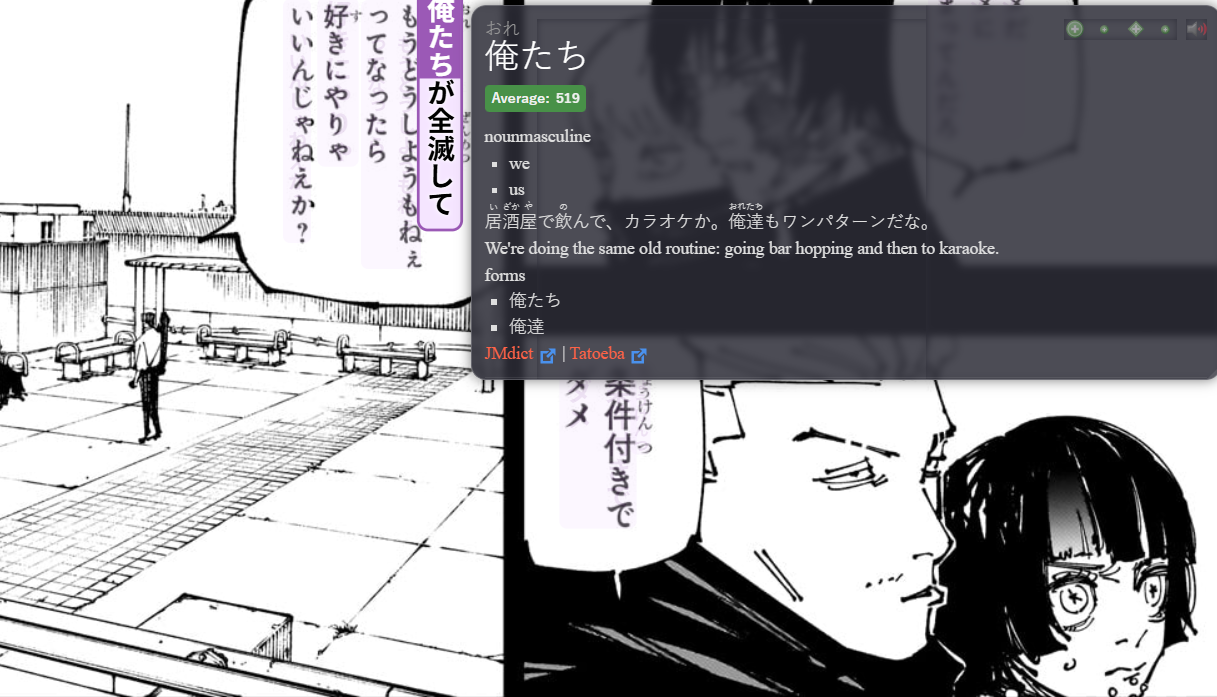

In my newbie stages where I read super slowly and I only have 3.5k Anki cards, I have to look up multiple words a sentence.

With Yomitan taking 10+ seconds every lookup and mine, this slows me down a lot.

Look at this:

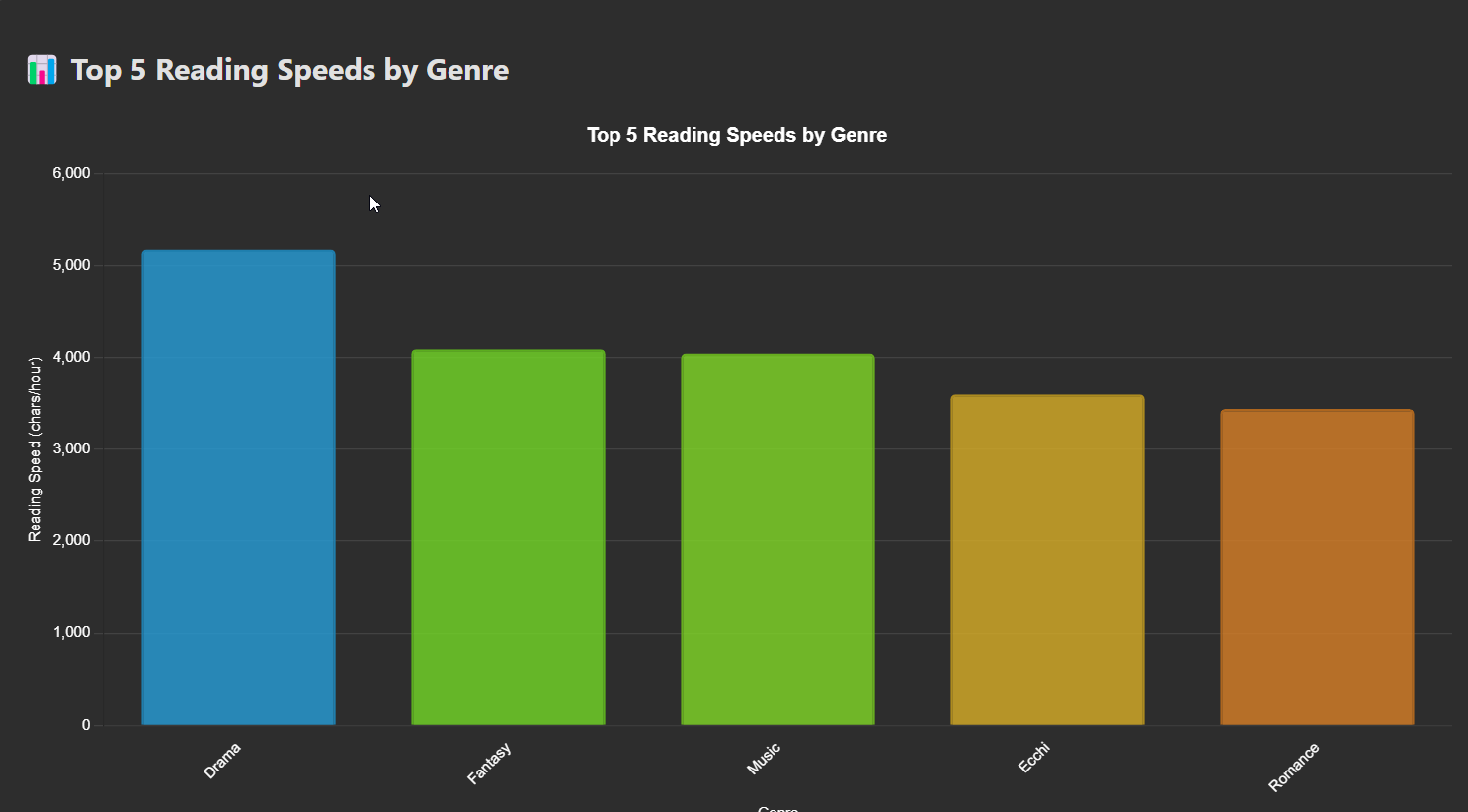

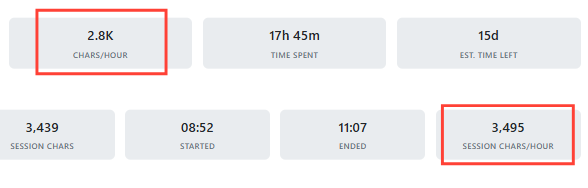

Top is my average chars / hour using Yomitan.

Bottom is with JL.

Unironically I have a +700 chars / hour buff by just using another program, simply because it's faster.

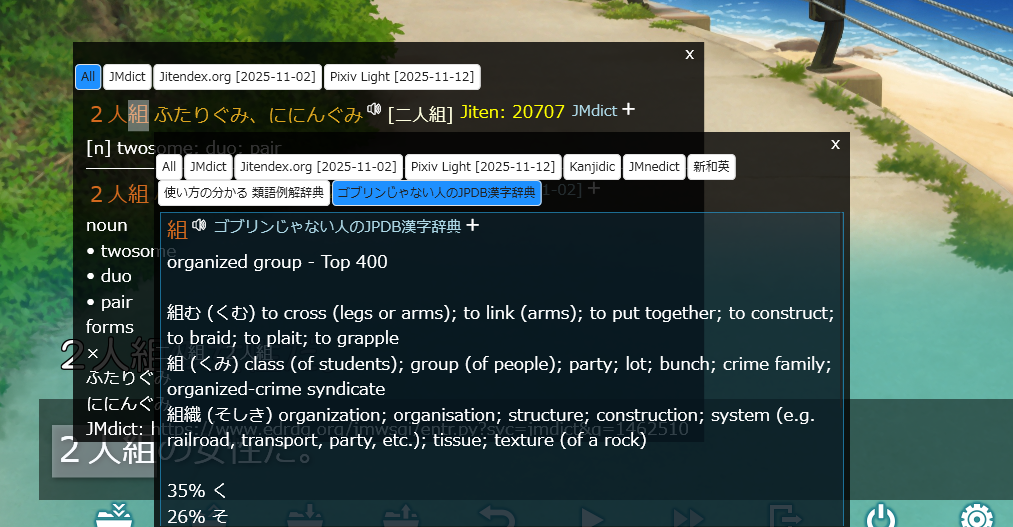

I really like this kanji dict:

But in Yomitan it kinda sucks.

It can't be an official Kanji dict, so it has to be a word dict. This is because of the format of Kanji dicts in Yomitan.

But because it's a word dict, it has to compete with all the other Yomitan dicts.

If your friend is inviting you to 飲み会 and you highlight this with Yomitan, you will have to scroll really far to find the Kanji dict for 飲.

To fix this, you have to use profiles and switch profiles per my blog post every time you want to look up a kanji with this dict.

But in JL, you don't have to do that!

In mining mode, simply highlight the kanji you want to look at and click the Kanji dict and you get to see it instantly.

You can even look up words with jmdict, and then highlight the kanji in that definition to get specific Kanji definitions.

Being able to easily click what dict you want to see is so powerful. I believe this will make it easier for monolingual transitions too.

Have JP -> JP dicts show up first, then just use child windows and switch to JP -> EN if you need to using the tabs.

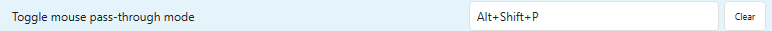

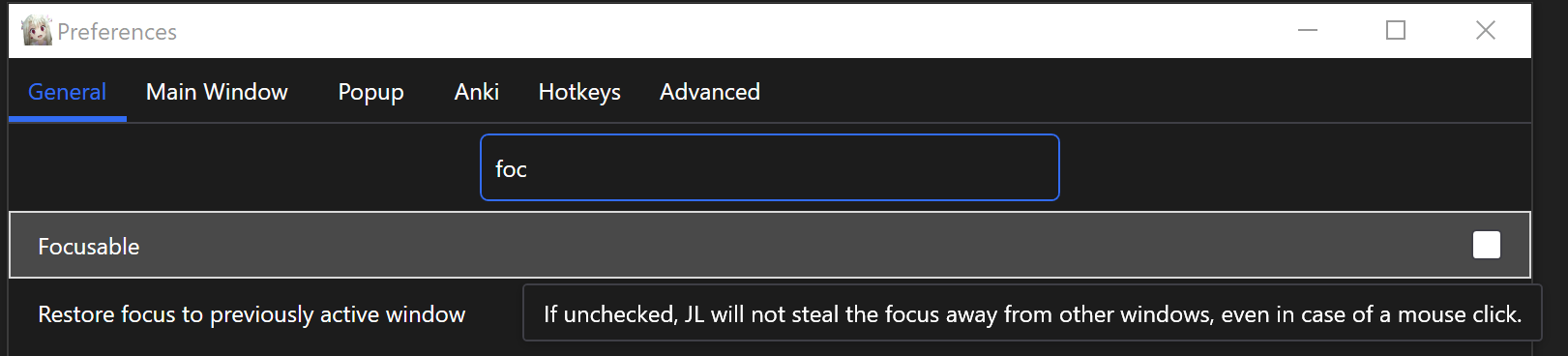

You can kinda make an overlay in JL.

With this mode, the text appears as if it were overlaid on the game, even though it isn't a true overlay. You can then "click through" the text itself and it will click the window behind it, allowing you to progress in the story.

You have to enable this with a hotkey:

This kinda breaks for me on some full screen visual novels.

JL works great with GSM.

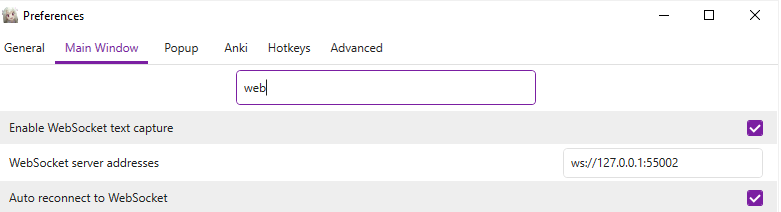

Set the addresses to use port 55002 and set it to auto reconnect to WebSocket.

Done 🥳

If you have a JL window over your visual novel, GSM uses OBS Window Capture so it won't show up in your screenshots.

Does this replace GSM overlay?

Nah, not really. Overlay overlays the text perfectly on top of the game. It's 100% natural. This is a black box over your visual novel.

You can also use GSM to OCR a game or visual novel, and display it in JL.

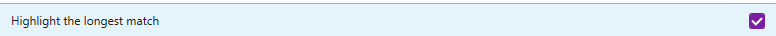

In the settings you can enable highlight longest match.

This just makes it easier to go through the text.

There is a custom search feature, allowing you to easily Google sentences or words.

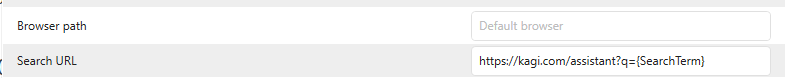

You can change it from Google to whatever you want.

I have it set to an AI prompt that just breaks down words for me. Sometimes I really struggle with accents / slang that aren't in dictionaries, so this helps a lot.

Sadly right now you can only search a specific word and not a whole sentence.

Names, places, spells, and more are very custom to the media you are consuming.

There are things like VNDB name dictionaries, but they're not perfect.

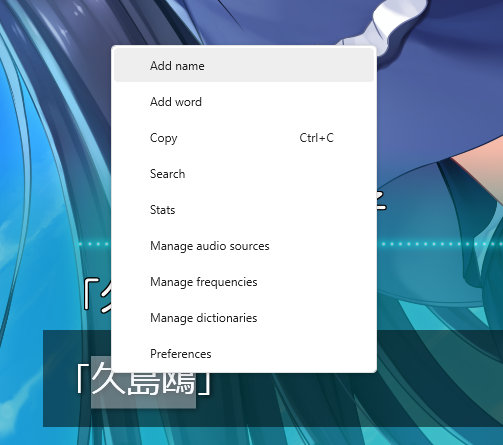

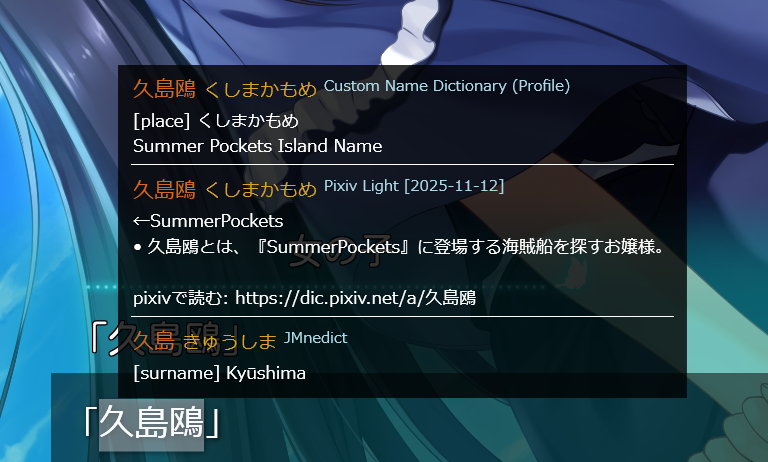

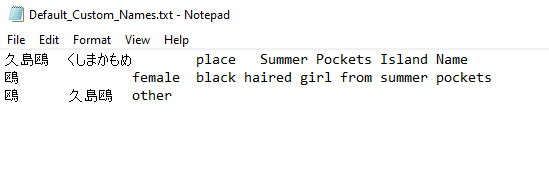

JL has custom dictionaries.

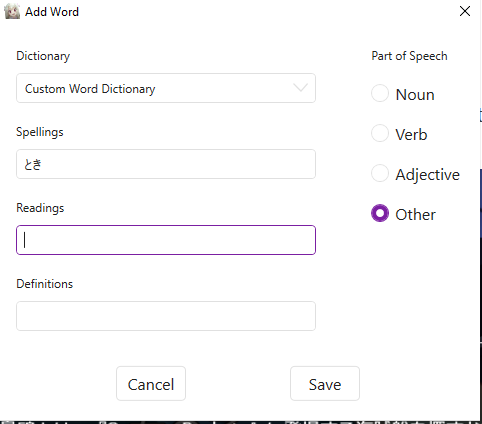

If the word you want to look up does not have a definition, right click it and add it:

Now every time you look up that word you'll see your custom definition:

This is super easy to edit later on. For example, I made a mistake here.

It's not the island name, but the name of a girl on the island.

JL stores these custom dicts in plaintext format. No JSON. Just open it and edit it!

You can even make profiles in JL and have custom dicts per profile!

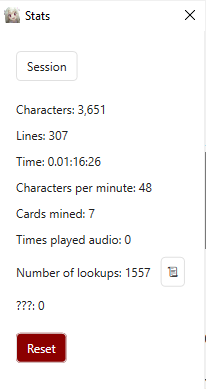

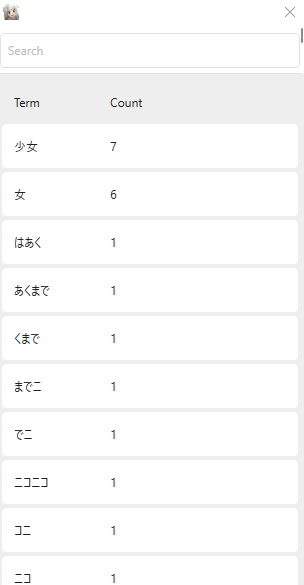

JL has stats.

Not as good as GSM if I may say so ;)

But what's cool is that you can see how many times you have looked up a word.

I wish this showed up in the popup window so I knew if I should mine a word that appears often or not.

You can also control a caret in the JL window and go keyboard only.

If you enable this setting, you can also just click enter on your keyboard to advance in a visual novel.

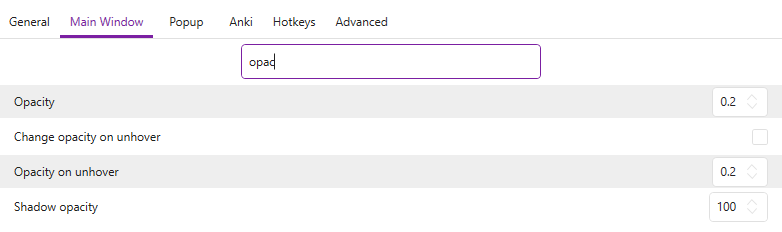

If you hate seeing the black box in your game, you can change these settings:

Now you'll only see it when you hover over it!

JL is a Windows only program. This is where Yomitan is still great.

You need some sort of text input event, like textractor / Lunahook / GSM OCR.

This is where GSM still works well, it acts as a middleman between getting the text and using dictionary software.

In my opinion JL is perfect for video games / visual novels, but for other things Yomitan still reigns supreme.

Download JL from here:

Extract it and run the .exe every time you want to start JL.

I pinned it to my toolbar.

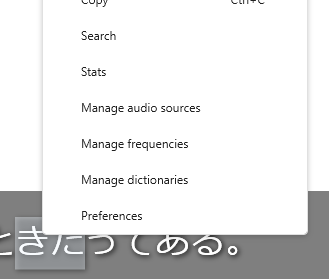

Right-click to open the settings menu etc.

When you first start JL it'll ask to download dictionaries.

Say yes, they're pretty good.

You can use Yomitan formatted dictionaries with JL.

I used some from Marv's starter pack:

Right click and click "manage audio sources"

If you are using Local Audio Server for Yomitan, enter this:

http://127.0.0.1:5050/?sources=jpod,jpod_alternate,nhk16,forvo&term={Term}&reading={Reading}

Otherwise it's the same as Yomitan.
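If you're curious what that URL turns into for a real word, here's a small sketch that fills in the `{Term}` and `{Reading}` placeholders. How JL actually does the substitution (and whether it percent-encodes the text) is an assumption on my part, and `build_audio_url` is a made-up helper, not part of JL or Local Audio Server.

```python
from urllib.parse import quote

AUDIO_URL_TEMPLATE = (
    "http://127.0.0.1:5050/?sources=jpod,jpod_alternate,nhk16,forvo"
    "&term={Term}&reading={Reading}"
)


def build_audio_url(term, reading, template=AUDIO_URL_TEMPLATE):
    """Hypothetical helper: swap the placeholders for URL-safe text."""
    return (
        template
        .replace("{Term}", quote(term))
        .replace("{Reading}", quote(reading))
    )


print(build_audio_url("猫", "ねこ"))
```

The Japanese text ends up percent-encoded in the final URL, which is what you'd see if you pasted it into a browser to check that your audio server responds.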

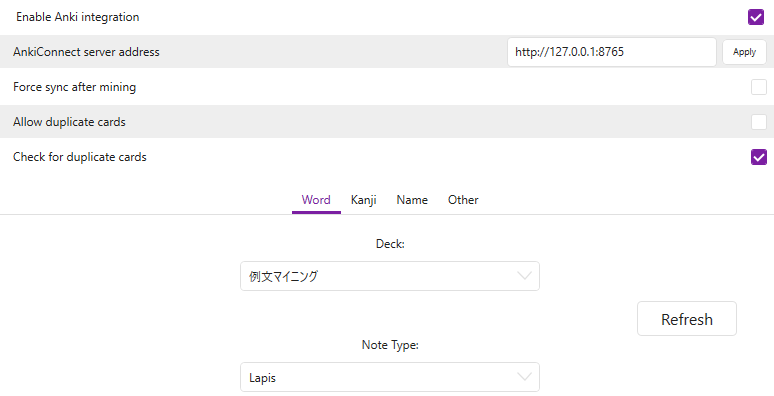

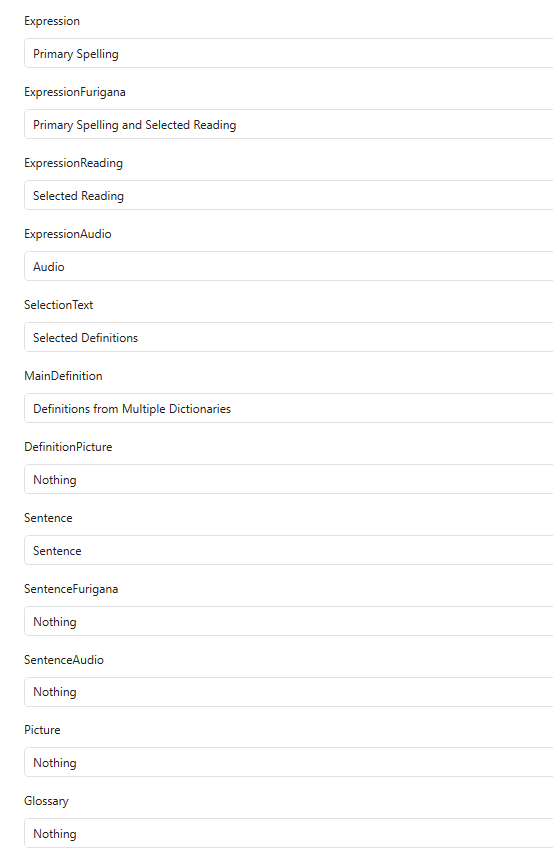

Go to the Anki tab and enable it.

Here's what I've got for Lapis card type.

That's it! Enjoy playing with JL!

2025-11-12 06:38:39

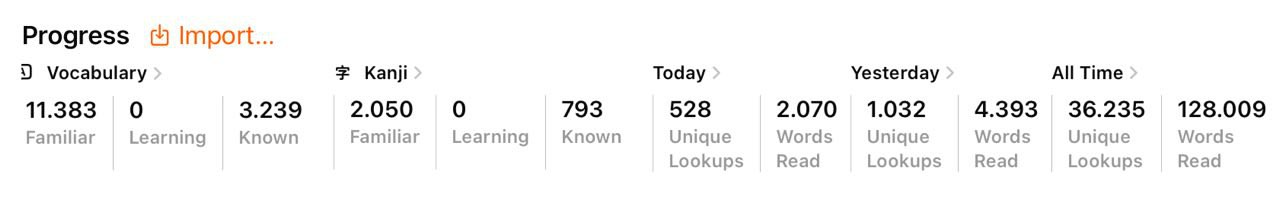

TLDR - It has the raw data

Other stat apps simply collect data such as how many characters you read and when.

They normally collect data like:

This allows them to calculate stats like:

etc

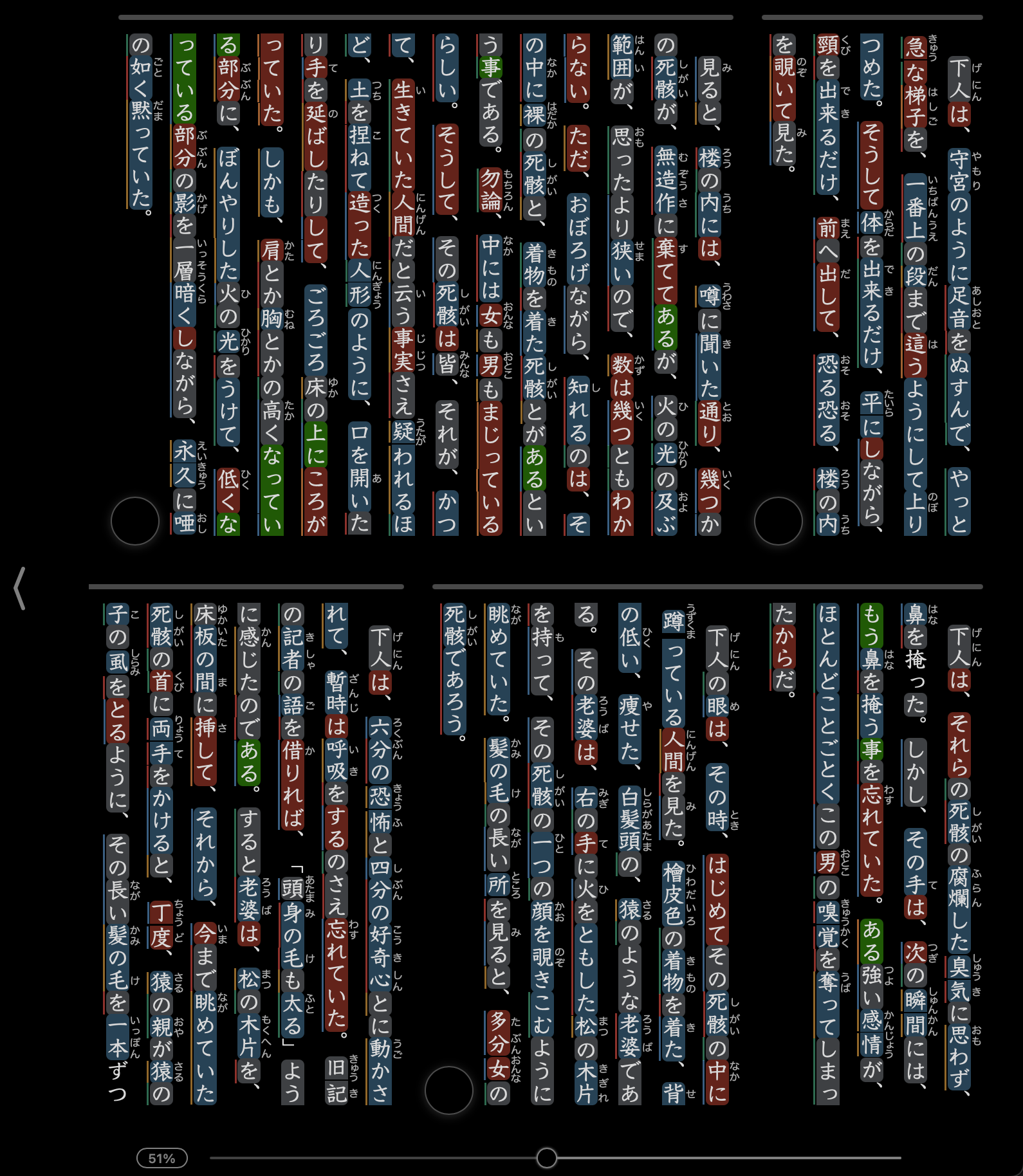

GSM collects the actual sentences you read. You don't tell GSM anything, GSM stores the actual sentences.

Specifically this data is stored:

This allows GSM to calculate all the same stats as before, but we can get some extra data like:

etc

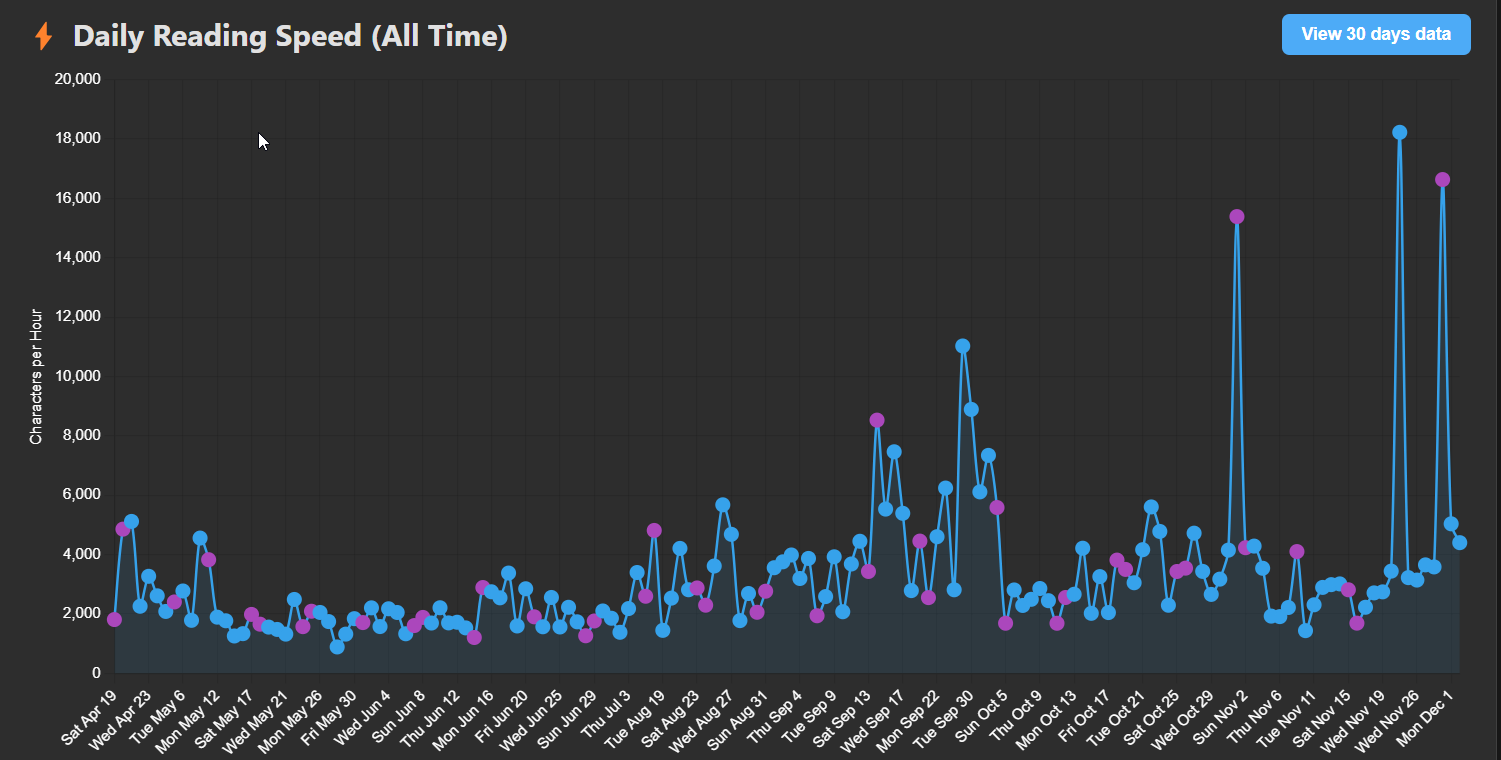

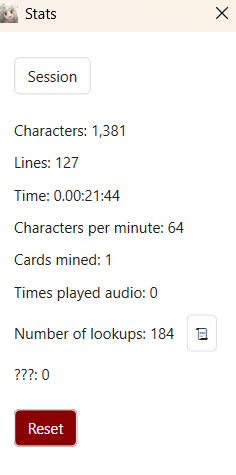

More importantly many statistic apps use an AFK timer to work out when you're AFK.

If you don't read for, say, 30 seconds, it considers you AFK.

Because they do not have the raw data, they calculate it once and that's it for life.

In GSM because we have the raw data, you can change your opinion about this anytime.
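Here's a minimal sketch of why raw data makes this possible. It assumes you have the timestamp (in seconds) of every line you read. The function name and the rule of dropping any gap longer than the threshold are my own simplifications, not GSM's actual code.

```python
def reading_seconds(timestamps, afk_threshold):
    """Total reading time, ignoring gaps longer than afk_threshold seconds."""
    total = 0.0
    for prev, cur in zip(timestamps, timestamps[1:]):
        gap = cur - prev
        # A gap longer than the threshold is treated as AFK and not counted.
        if gap <= afk_threshold:
            total += gap
    return total


# Seconds at which each line of text arrived.
line_times = [0, 10, 25, 70, 80, 200]

print(reading_seconds(line_times, afk_threshold=30))
print(reading_seconds(line_times, afk_threshold=60))
```

Same raw data, two different answers: 35 seconds with a 30 second timer and 80 seconds with a 60 second timer. An app that only stored the final number can't redo this calculation later.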

Other stat apps -> One time statistics, usually without the raw data, which cannot be changed and are inflexible

GSM Stats -> Has raw data, allows you to change your opinion whenever you want about your data