2026-03-03 21:47:00

I mentioned earlier that my next theme would be “quite the opposite when it comes to looks” compared to Bearful. I wasn’t exaggerating.

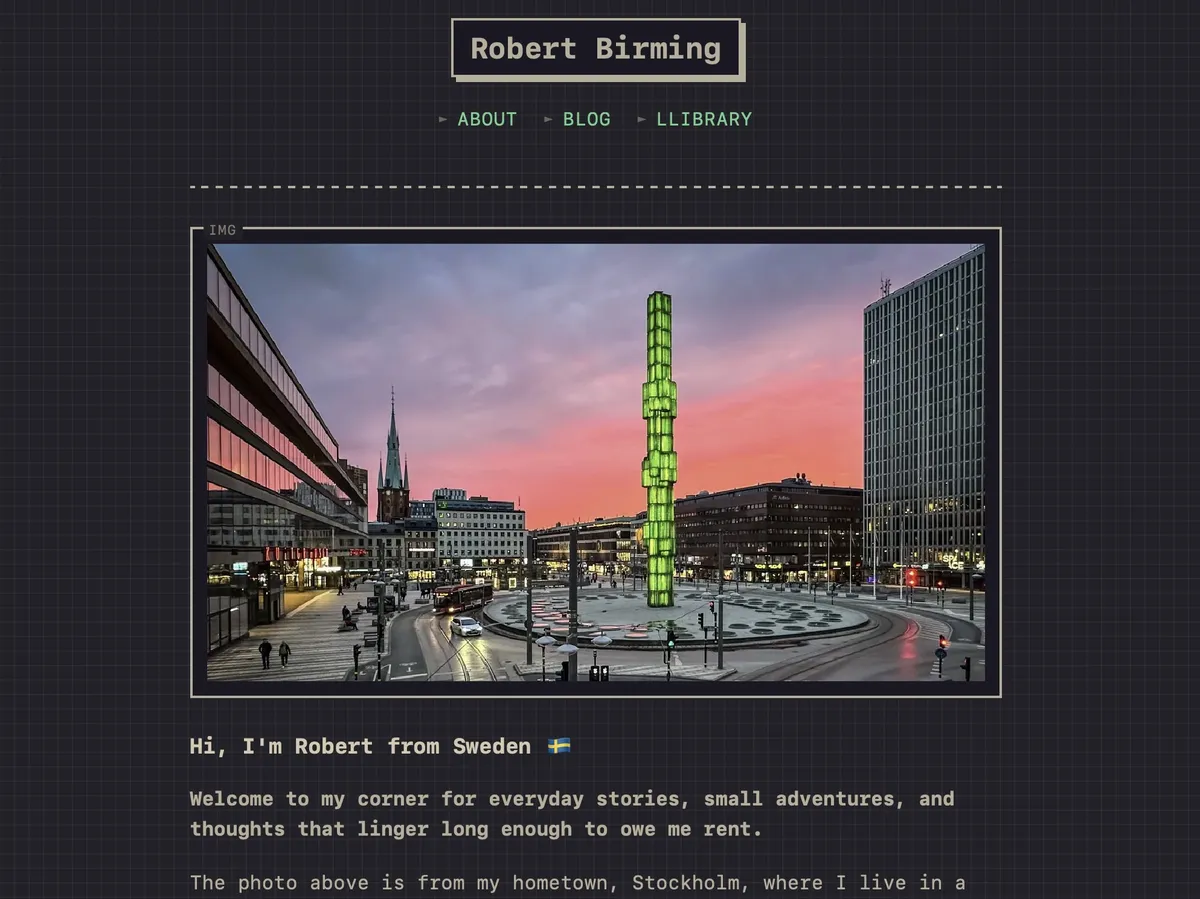

Say hello to Pixel Bear!

The theme is a celebration of Sylvia’s awesome pixel bears, which she created for the ongoing Bearblog Creation Festival. It comes with a pixelated, retro-inspired style and a bunch of geeky little details.

> The Grid. A digital frontier. I tried to picture clusters of information as they moved through the computer.
>
> Kevin Flynn

Pixel Bear is based on the Bearful theme, so most add-ons should work fine, even though I haven’t tested them all.

I’ve also included a new upvote button, updated looks for the guestbook and status log, plus side note (`.pixel-note`) and image frame (`.pixel-frame`) styles.

I’ve just touched down in Sweden after two months in Thailand, so there might be a few jet lag quirks.

👆 Even if that's true, it was mostly an excuse to show you how the side note looks.

Last, but definitely not least, there’s an optional style for showing a pixel bear, or any image you like, in the footer. This is what started it all, the very reason this theme exists. Thank you, Sylvia!

If you want to try the Pixel Bear theme on your blog, simply copy the styles below, head over to your Bear theme settings, paste them in, and you’re done.

Happy pixelated blogging!

```css
/*
 * Pixel Bear — a pixelated Bear theme
 * Version 0.3.0 | 2026-03-03
 * Robert Birming | robertbirming.com
 */

:root {
  color-scheme: light dark;
  --width: 72ch;
  --font-main: ui-monospace, monospace;
  --font-secondary: ui-monospace, monospace;
  --font-scale: 1em;
  --background-color: #f0e8d8;
  --heading-color: #3b2e20;
  --text-color: #4a3c2e;
  --link-color: #2e6e4e;
  --visited-color: #8a7e6e;
  --code-background-color: #e4dac6;
  --code-color: #4a3c2e;
  --blockquote-color: #5e4e3e;
  --border-color: #4a3c2e;
  --muted-color: #8a7e6e;
}

@media (prefers-color-scheme: dark) {
  :root {
    --background-color: #222129;
    --heading-color: #d4c8b0;
    --text-color: #b8ae9a;
    --link-color: #6ecf8e;
    --visited-color: #9a9a7a;
    --code-background-color: #1a1924;
    --code-color: #b8ae9a;
    --blockquote-color: #9a8e7a;
    --border-color: #b8ae9a;
    --muted-color: #847b6e;
  }
}

*,
*::before,
*::after {
  box-sizing: border-box;
}

html {
  -webkit-text-size-adjust: 100%;
}

body {
  max-width: var(--width);
  margin-inline: auto;
  padding: 20px;
  font-family: var(--font-secondary);
  font-size: var(--font-scale);
  line-height: 1.6;
  overflow-wrap: break-word;
  color: var(--text-color);
  background-color: var(--background-color);
  background-image:
    linear-gradient(
      to right,
      color-mix(in srgb, var(--border-color) 6%, transparent) 1px,
      transparent 1px
    ),
    linear-gradient(
      to bottom,
      color-mix(in srgb, var(--border-color) 6%, transparent) 1px,
      transparent 1px
    );
  background-size: 12px 12px;
}

p {
  margin-block: 1.2em;
}

hr,
img,
figure,
blockquote,
table,
.highlight, .code,
.statuslog,
.pixel-note,
.gallery,
.preview {
  margin-block: 1.8em;
}

h1, h2, h3, h4, h5, h6 {
  margin-block: 1.6em 0.5em;
  font-family: var(--font-main);
  line-height: 1.4;
  color: var(--heading-color);
}

h1 { font-size: 1.6em; }
h2 { font-size: 1.25em; }
h3 { font-size: 1.1em; }
h4 { font-size: 1em; }

a {
  color: var(--link-color);
  text-decoration: underline;
  text-decoration-thickness: 2px;
  text-decoration-style: dotted;
  text-underline-offset: 0.2em;
}

a:hover {
  text-decoration-style: solid;
}

a:focus-visible {
  outline: 2px solid var(--link-color);
  outline-offset: 2px;
}

main a:visited {
  color: var(--visited-color);
}

hr {
  border: 0;
  text-align: center;
  line-height: 1;
}

hr::after {
  content: "□ □ □ □ □";
  letter-spacing: 0.25em;
  color: var(--muted-color);
  font-size: 0.7em;
}

img {
  max-width: 100%;
  height: auto;
  display: block;
  image-rendering: pixelated;
}

figure {
  margin-inline: 0;
}

figure img,
figure p {
  margin-block: 0;
}

figcaption {
  margin-block-start: 0.8em;
  font-size: 0.85em;
  text-align: center;
  color: var(--muted-color);
}

.inline {
  width: auto !important;
}

blockquote {
  margin-inline: 0;
  border: 2px solid var(--border-color);
  border-inline-start: 6px solid var(--border-color);
  padding: 0.75em 1.25em;
  color: var(--blockquote-color);
  font-style: italic;
  position: relative;
}

blockquote::before {
  content: "QUOTE";
  position: absolute;
  top: -0.75em;
  left: 0.8em;
  padding: 0 0.4em;
  font-size: 0.7em;
  font-style: normal;
  letter-spacing: 0.08em;
  background: var(--background-color);
  color: var(--muted-color);
}

blockquote cite {
  display: table;
  margin-block-start: 1.6em;
  margin-inline-start: auto;
  padding: 0.2em 0.6em;
  font-size: 0.75em;
  font-style: normal;
  background-color: var(--border-color);
  color: var(--background-color);
  text-transform: uppercase;
  letter-spacing: 0.08em;
  box-shadow: 2px 2px 0 0 var(--border-color);
}

blockquote cite::before {
  content: "ref_id: ";
  text-transform: none;
  color: color-mix(in srgb, var(--background-color) 85%, transparent);
}

code {
  font-family: monospace;
  padding-inline: 0.3em;
  background-color: var(--code-background-color);
  color: var(--code-color);
}

pre {
  margin: 0;
}

pre code {
  padding: 0;
  background: none;
}

.highlight, .code {
  padding: 0.75em 1em;
  overflow-x: auto;
  background-color: var(--code-background-color);
  color: var(--code-color);
  border: 2px solid var(--border-color);
}

table {
  width: 100%;
  border-collapse: collapse;
  border: 2px solid var(--border-color);
}

th, td {
  padding: 0.5em 0.75em;
  text-align: start;
  border: 1px solid var(--border-color);
}

th {
  font-weight: bold;
  background-color: var(--border-color);
  color: var(--background-color);
}

time {
  font-style: normal;
  color: var(--muted-color);
}

button {
  margin: 0;
  cursor: pointer;
}

input, textarea, button {
  font: inherit;
  letter-spacing: inherit;
}

button:focus-visible,
input:focus-visible,
textarea:focus-visible {
  outline: 2px solid var(--link-color);
  outline-offset: 2px;
}

header {
  margin-block: 0 2em;
  padding-block-end: 1.5em;
  border-bottom: 2px dashed var(--border-color);
  text-align: center;
}

header a.title {
  display: inline-block;
  text-decoration: none;
}

header a.title:hover {
  background-color: transparent;
  text-decoration: none;
}

header a.title h1 {
  display: inline-block;
  margin-block: 0 0.75em;
  font-size: 1.5em;
  line-height: 1.2;
  background-color: var(--code-background-color);
  color: var(--text-color);
  border: 2px solid var(--border-color);
  padding: 0.35em 0.6em;
  box-shadow: 4px 4px 0 0 var(--border-color);
}

header a.title:hover h1 {
  transform: translate(-1px, -1px);
  box-shadow: 5px 5px 0 0 var(--border-color);
}

nav {
  display: flex;
  justify-content: center;
  gap: 0.25em;
  flex-wrap: wrap;
}

nav a {
  text-decoration: none;
  padding: 0.15em 0.4em;
  font-size: 0.95em;
  text-transform: uppercase;
  letter-spacing: 0.04em;
}

nav a:hover {
  background-color: var(--link-color);
  color: var(--background-color);
  text-decoration: none;
}

nav a::before {
  content: "► ";
  color: color-mix(in srgb, var(--muted-color) 75%, transparent);
  font-size: 0.75em;
  vertical-align: 0.15em;
}

nav a:hover::before,
nav a:focus-visible::before {
  color: var(--background-color);
}

/* Blog list */

ul.blog-posts {
  margin-block-end: 1.6em;
  list-style: none;
  padding: 0;
}

ul.blog-posts li {
  display: flex;
  align-items: center;
  gap: 0.75em;
  padding: 0.55rem 0.9rem 0.55rem 0;
  border-bottom: 1px solid color-mix(in srgb, var(--text-color) 12%, var(--background-color));
}

ul.blog-posts li:last-child {
  border-bottom: 0;
}

ul.blog-posts li a {
  order: 1;
  flex: 1;
  min-width: 0;
  font-weight: bold;
  text-decoration: none;
}

ul.blog-posts li a::before {
  content: "▸ ";
  color: color-mix(in srgb, var(--muted-color) 25%, transparent);
  font-size: 0.85em;
  vertical-align: 0.1em;
}

ul.blog-posts li:hover a::before,
ul.blog-posts li a:focus-visible::before {
  color: var(--link-color);
}

ul.blog-posts li a:hover {
  text-decoration: none;
}

ul.blog-posts li a:visited {
  color: var(--visited-color);
}

ul.blog-posts li span {
  order: 2;
  flex: 0 0 auto;
  margin-inline-start: auto;
  white-space: nowrap;
  padding: 0.1em 0.5em;
  font-size: 0.8em;
  border: 1px solid color-mix(in srgb, var(--border-color) 20%, transparent);
  background: color-mix(in srgb, var(--background-color) 75%, transparent);
  color: var(--muted-color);
}

ul.blog-posts li:hover span {
  border-color: var(--link-color);
  color: var(--link-color);
}

/* Blog posts */

header nav p,
.post main > h1 + p {
  margin-block-start: 0.25em;
}

.post main > h1 {
  margin-block-end: 0;
}

body main > h1:first-child {
  margin-block-start: 0;
}

/* Upvote button */

.upvote-count {
  font-size: 0.75em;
}

footer {
  margin-block-start: 4em;
  padding: 1.8em 1em;
  border: 2px solid var(--border-color);
  position: relative;
  text-align: center;
  font-size: 0.85em;
  word-spacing: -0.05em;
  background-color: color-mix(in srgb, var(--code-background-color) 40%, transparent);
}

footer::before {
  content: "SYSTEM.END";
  position: absolute;
  top: -0.8em;
  left: 50%;
  transform: translateX(-50%);
  padding: 0 0.6em;
  font-size: 0.7em;
  letter-spacing: 0.1em;
  background: var(--background-color);
  color: var(--muted-color);
  border: 2px solid var(--border-color);
}

footer p {
  margin-block: 0.5em;
  color: var(--text-color);
}

footer a {
  color: var(--link-color);
  margin-inline: 0.1em;
  text-transform: lowercase;
  font-weight: bold;
  text-decoration: none;
}

footer a::before {
  content: "[";
  color: var(--muted-color);
}

footer a::after {
  content: "]";
  color: var(--muted-color);
}

footer a:hover {
  background-color: var(--link-color);
  color: var(--background-color);
  text-decoration: none;
}

::selection {
  background-color: var(--link-color);
  color: var(--background-color);
}

mark {
  background-color: var(--code-background-color);
  color: var(--text-color);
  padding-inline: 0.3em;
}

@media only screen and (max-width: 767px) {
  ul.blog-posts li {
    flex-wrap: wrap;
    gap: 0.4em;
  }

  ul.blog-posts li span {
    flex: 0 0 100%;
    margin-inline-start: 0;
    font-size: 0.75em;
  }
}

/* Print styles */

@media print {
  body {
    max-width: 100%;
    padding: 0;
    background: none;
    color: #000;
  }

  a {
    color: #000;
    text-decoration: underline;
  }

  hr::after {
    color: #000;
  }

  .highlight, .code {
    border: 1px solid #ccc;
  }

  header,
  footer,
  #upvote-form,
  .upvote-button,
  .tags {
    display: none;
  }
}

/*
 * Want add-ons and style options?
 * Visit my growing Bear library:
 * https://robertbirming.com/bear-library/
 */

/* Footer image (uncomment and change URL to show a pixel bear or any image in the footer)

footer > span:last-child::before {
  content: "";
  display: block;
  width: 96px;
  height: 96px;
  margin: 1.8em auto;
  background: url("https://your-image-url.gif") no-repeat center / contain;
  image-rendering: pixelated;
}

*/

/* Upvote: Pixel toast */

#upvote-form > small {
  display: block;
  margin-block-start: 1.8rem;
  font-size: 1em;
}

#upvote-form .upvote-button {
  display: inline-block;
  padding: 0.25em 0.6em;
  border: 2px solid var(--border-color);
  background: var(--code-background-color);
  color: var(--text-color);
  font: inherit;
  font-size: 0.85em;
  letter-spacing: 0.05em;
  cursor: pointer;
  box-shadow: 2px 2px 0 0 var(--border-color);
}

#upvote-form .upvote-button svg,
#upvote-form .upvote-button .upvote-count {
  display: none;
}

#upvote-form .upvote-button::before {
  content: "▲ TOAST";
}

#upvote-form .upvote-button:focus-visible {
  outline: 2px solid var(--link-color);
  outline-offset: 2px;
}

@media (hover: hover) {
  #upvote-form .upvote-button:not([disabled]):hover {
    background-color: var(--link-color);
    color: var(--background-color);
    border-color: var(--link-color);
    transform: translate(-1px, -1px);
    box-shadow: 3px 3px 0 0 var(--border-color);
  }
}

#upvote-form .upvote-button.upvoted,
#upvote-form .upvote-button[disabled] {
  opacity: 0.6;
  cursor: default;
  box-shadow: none;
  color: var(--text-color) !important;
}

#upvote-form .upvote-button.upvoted::before,
#upvote-form .upvote-button[disabled]::before {
  content: "▲ TOASTED";
}

/* Status log */

.statuslog {
  display: grid;
  grid-template-columns: auto 1fr;
  gap: 0.3em 1em;
  max-width: 34rem;
  margin-inline: auto;
  padding: 1em 1.1em;
  color: var(--text-color);
  background: var(--code-background-color);
  border: 2px solid var(--border-color);
  position: relative;
}

.statuslog::before {
  content: "STATUS";
  position: absolute;
  top: -0.75em;
  left: 0.8em;
  padding: 0 0.4em;
  font-size: 0.7em;
  letter-spacing: 0.08em;
  background: var(--background-color);
  color: var(--muted-color);
}

.statuslog-emoji {
  font-size: 2.9rem;
  line-height: 1;
  align-self: start;
}

.statuslog-content {
  font-size: 1em;
  overflow-wrap: break-word;
}

.statuslog-content > :first-child {
  margin-block-start: 0;
}

.statuslog-content > :last-child {
  margin-block-end: 0;
}

footer .statuslog {
  text-align: start;
}

/* Image frame (add class="pixel-frame" to a <figure> or wrapping <div>) */

.pixel-frame {
  border: 2px solid var(--border-color);
  padding: 0.75em;
  position: relative;
  background: var(--code-background-color);
}

.pixel-frame::before {
  content: "IMG";
  position: absolute;
  top: -0.75em;
  left: 0.8em;
  padding: 0 0.4em;
  font-size: 0.7em;
  letter-spacing: 0.08em;
  background: var(--background-color);
  color: var(--muted-color);
}

.pixel-frame img {
  margin: 0;
}

.pixel-frame figcaption {
  margin-block: 0.6em 0;
}

/* Guestbook (guestbooks.meadow.cafe) */

#guestbooks___guestbook-form-container form {
  display: flex;
  flex-direction: column;
  gap: 0.8em;
  margin-block: 1.6em;
}

#guestbooks___guestbook-form-container :is(input, textarea, button) {
  font: inherit;
  letter-spacing: inherit;
  appearance: none;
}

#guestbooks___guestbook-form-container :is(input[type="text"], input[type="email"], input[type="url"], textarea) {
  width: 100%;
  padding: 0.65em 0.85em;
  color: var(--text-color);
  background: var(--background-color);
  border: 2px solid var(--border-color);
  transition: border-color 0.15s ease, background-color 0.15s ease;
}

#guestbooks___guestbook-form-container :is(input[type="text"], input[type="email"], input[type="url"], textarea):focus-visible {
  outline: 2px solid var(--link-color);
  outline-offset: 2px;
}

#guestbooks___guestbook-form-container textarea {
  min-height: 7.5em;
  resize: vertical;
}

#guestbooks___guestbook-form-container :is(button, input[type="submit"]) {
  align-self: flex-start;
  padding: 0.55em 0.9em;
  color: var(--text-color);
  background: var(--code-background-color);
  border: 2px solid var(--border-color);
  cursor: pointer;
  box-shadow: 2px 2px 0 0 var(--border-color);
  transition: background-color 0.15s ease, border-color 0.15s ease;
}

#guestbooks___guestbook-form-container :is(button, input[type="submit"]):focus-visible {
  outline: 2px solid var(--link-color);
  outline-offset: 2px;
}

@media (hover: hover) {
  #guestbooks___guestbook-form-container :is(button, input[type="submit"]):hover {
    background-color: var(--link-color);
    color: var(--background-color);
    border-color: var(--link-color);
    transform: translate(-1px, -1px);
    box-shadow: 3px 3px 0 0 var(--border-color);
  }
}

#guestbooks___guestbook-messages-header {
  display: flex;
  align-items: center;
  gap: 0.4em;
  margin-block: 2em 1em;
}

#guestbooks___guestbook-messages-header::before {
  content: "💬";
  translate: 0 1px;
}

#guestbooks___guestbook-messages-container > div {
  margin-block: 1.2em;
  padding: 1em 1.2em;
  font-size: 0.95em;
  border: 2px solid var(--border-color);
}

#guestbooks___guestbook-messages-container p {
  margin: 0.35em 0 0;
  padding: 0;
}

#guestbooks___guestbook-messages-container blockquote {
  margin: 0.35em 0 0;
  padding: 0;
  border: 0;
  background: none;
  font-style: normal;
}

#guestbooks___guestbook-messages-container blockquote::before {
  content: none;
}

#guestbooks___guestbook-messages-container time {
  font-size: 0.85em;
  opacity: 0.8;
  white-space: nowrap;
}

#guestbooks___guestbook-messages-container .guestbook-message-reply {
  position: relative;
  margin-block: 0.9em 0.2em;
  margin-inline-start: 1.6em;
  padding: 0.9em 1.1em;
  background: var(--code-background-color);
  border: 2px solid var(--border-color);
}

#guestbooks___guestbook-messages-container .guestbook-message-reply::before {
  content: "";
  position: absolute;
  top: -1em;
  left: -0.9em;
  width: 0.7em;
  height: 1.6em;
  border-left: 2px solid var(--border-color);
  border-bottom: 2px solid var(--border-color);
}

/* Side note (wrap content in <div class="pixel-note">) */

.pixel-note {
  padding: 1em 1.25em;
  border: 2px solid var(--border-color);
  border-inline-start: 6px solid var(--border-color);
  background: var(--code-background-color);
  position: relative;
  font-size: 0.9em;
}

.pixel-note::before {
  content: "NOTE";
  position: absolute;
  top: -0.75em;
  left: 0.8em;
  padding: 0 0.4em;
  font-size: 0.7em;
  letter-spacing: 0.08em;
  background: var(--background-color);
  color: var(--muted-color);
}

.pixel-note > :first-child {
  margin-block-start: 0;
}

.pixel-note > :last-child {
  margin-block-end: 0;
}
```

2026-03-03 06:17:00

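New year, fresh start: today I moved 305 blog posts from WordPress to Bear Blog.

It's a move, but also a chance to sort through and archive the past few years.

I spent four years with WordPress and have many good memories of it. I went through many versions of my theme and built quite a few plugins.

Looking back, the style I like best is still the one my blog had when I first started writing, back when I built it with Hugo.

After the migration, the blog uses Bear Blog's built-in Claritas theme: a similar style, but lighter.

I originally wanted to build an automated plugin, but I kept getting stuck on the Cloudflare Access protection in front of the Bear Blog login and never found a hassle-free approach.

So I changed tack: I wrote scripts to bulk-extract the WordPress data, normalize the formatting and metadata, and re-upload the images to a new image host, then finished publishing by hand. It's not as effortless as a fully automated pipeline, but it still took only two hours to migrate more than 300 posts.

Code: DayuGuo/WordPress2bearblog.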
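The actual scripts live in DayuGuo/WordPress2bearblog. Purely as an illustration of the extraction step, here is a minimal Python sketch that is not taken from that repository. It assumes a standard WordPress WXR export (the `wp` namespace version varies between WordPress releases), keeps only published posts, and formats each post's metadata as Bear-style header lines. The function names are hypothetical, and the header field names (`title`, `link`, `published_date`, `tags`) are an assumption about Bear Blog's post attributes.

```python
import xml.etree.ElementTree as ET

# XML namespaces in a WordPress WXR export. The wp: URI carries a version
# (1.1, 1.2, ...); check the <rss> tag of your own export and adjust if needed.
NS = {
    "content": "http://purl.org/rss/1.0/modules/content/",
    "wp": "http://wordpress.org/export/1.2/",
}


def extract_posts(wxr_xml: str) -> list[dict]:
    """Hypothetical helper: pull published posts out of a WXR export string."""
    root = ET.fromstring(wxr_xml)
    posts = []
    for item in root.iter("item"):
        # Skip pages, attachments, drafts and anything else that isn't a published post.
        if item.findtext("wp:post_type", namespaces=NS) != "post":
            continue
        if item.findtext("wp:status", namespaces=NS) != "publish":
            continue
        posts.append({
            "title": (item.findtext("title") or "").strip(),
            "slug": item.findtext("wp:post_name", default="", namespaces=NS),
            "date": item.findtext("wp:post_date", default="", namespaces=NS),
            # WXR stores both categories and tags as <category>; keep tags only.
            "tags": [c.text or "" for c in item.findall("category")
                     if c.get("domain") == "post_tag"],
            # Post body as WordPress stored it (HTML, not Markdown).
            "body": item.findtext("content:encoded", default="", namespaces=NS),
        })
    return posts


def to_bear_header(post: dict) -> str:
    """Hypothetical helper: format metadata as Bear-style attribute lines.

    The field names are an assumption about Bear Blog's post header.
    """
    return "\n".join([
        f"title: {post['title']}",
        f"link: {post['slug']}",
        # wp:post_date looks like "YYYY-MM-DD HH:MM:SS"; drop the seconds.
        f"published_date: {post['date'][:16]}",
        f"tags: {', '.join(post['tags'])}",
    ])
```

Read the export file as UTF-8 text and pass the string to `extract_posts`. The body stays as WordPress HTML here; the other steps mentioned above, normalizing the formatting and re-hosting images, are left out of this sketch.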

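I'm easily distracted. With WordPress I always had itchy fingers, tweaking this and fiddling with that.

Bear Blog is much more restrained. It's simple and direct, helps me focus on the content, and spares me a lot of maintenance work and effort.

I hope it stays with me for a long time.

I had planned to write my year-end review over Chinese New Year's Eve, but I caught norovirus and was laid up for a week. I've only just recovered, so here's a belated Happy New Year to everyone. May the new year bring you peace and smooth sailing!

The new blog has no comment section. If you'd like to get in touch, email me directly: [email protected]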

2026-03-03 02:16:19

My new hobby is refreshing the Bear Blog discover page every few hours.

The posts are interesting, and there are usually a few "RE:" posts replying to an original post, which show up on the discover feed a few days later.

I haven't had this much fun reading articles in years. Hacker News was fun to read a decade ago, but with the recent rise of AI, most of the topics are AI-related, and I've lost interest in browsing HN. It feels like these tech people have nothing to discuss aside from AI.

Thank you Herman and Bear Blog users!

2026-03-02 23:05:00

We were pruning the hedge in front of the kitchen window today.

It had started to block the light, growing a bit too enthusiastic for its position, so out came the shears.

Not long after we started, two long-tailed tits appeared in the cherry tree just beside us. They moved quickly through the branches, as they always do, light and restless. At first, we assumed they were just foraging.

But they stayed. And they watched.

The soft, constant chirping turned sharper, more insistent. Each cut we made seemed to irritate them a little more.

Then we noticed the lichen. Tiny white fragments, carried carefully in their beaks.

That was the moment it clicked.

We stepped back, looked more closely at the uncut section of the hedge, and there it was. A nest, still in progress. Not yet finished, but clearly underway.

If we had used a hedge trimmer, the job would have been done in minutes. Clean, straight, finished. We probably wouldn’t have noticed them at all.

A long-tailed tit’s nest is an extraordinary thing.

It’s not a simple cup, but a domed structure, almost oval, woven from moss, spider silk, and hair, which gives it a surprising elasticity. The outside is decorated with lichen and bits of bark, blending it almost perfectly into its surroundings. It’s disruptive camouflage. It breaks up the outline of the nest so it looks like a knot on a branch. Inside, it’s lined with feathers. Hundreds, sometimes thousands of them.

Both birds build it together.

A drawing from *Britain's Birds and Their Nests* (1910) shows what it will eventually become:

At that point, the decision was obvious.

We could finish the hedge, or we could stop.

So we stopped.

The hedge will remain half-pruned for a while. Long enough for the nest to be completed, for the eggs to be laid, and, if all goes well, for the young to fledge.

It means a slightly uneven view from the kitchen window. But in return, we get to watch a nest being built from the first pieces of lichen to the moment it finally empties again.

That seems like a fair trade.

To be continued...

2026-03-02 20:51:07

At the top of Bear's trending page this morning was Ginoz's *AI is NOT better than you* post, a response to Future Perfect's original post from last week.

And I gotta say, I 1000% agree with Ginoz's viewpoint.

And honestly, I think the big problem is looking at generative AI through this lens that sometimes feels like a big “What do you want me to do? I don’t know graphic design! I had to use AI.” These poor people, without time or skill but with a subscription to ChatGPT.

Brother, sister — try learning. Try “trying and failing” for once. Try asking that friend who knows a thing or two about Photoshop for a favor. I know it’s hard, but let’s not act like there’s no other choice but to use generative AI when we’ve always managed to cope and thrive without it.

Back in high school and college in the early '90s ('93-'95), I was friends with a few garage bands. After all, this was the time of grunge, alternative, college radio, *120 Minutes* on MTV, etc. They would distribute cassettes of recordings from their bedrooms and garages, all static and crunchy guitars and drums, with the vocals barely audible because they were recording on a boom box or something along those lines. One friend of mine even bought cassette albums from Goodwill, tossed the liners, popped the tabs off of the tapes to record over, and created his own liner notes out of dot-matrix printer paper, cut to fit the cases and with some hand-drawn scribbles as artwork. Was it perfect? Nope. Was it cool as hell to get a tape from them? YEP.

I get that AI makes things easy. I get that it can be considered inexpensive. I get that you can drop a description in a box, look up something on Canva, slap your logo on it, and print them all up within a day, so you can tape them up on walls and poles all over town. It's EFFICIENT! It's FAST!

Our world and our society demand perfection. Your posts must be filtered, adjusted, and tweaked to show your best side. Your music must be absolutely clean and every note perfected, as if you made it in Phineas' studio in one take. There are no imperfections, unless they were placed there for The Aesthetic. The problem is that this perfection removes all the soul. All the feeling. There's something so fun about the mess-ups, about the scratches and the paste-smeared collages with torn edges and misspellings. There's something so freeing about making the thing yourself, even if it's not perfect. Too many people today forget that the imperfections are how we learn and grow. The excuse "I don't want to suck because it's cringey" really bothers me. Those cringe moments MAKE us. Push us to be better. Push us to learn. We're so afraid of failure that we're using a tool to strip the soul out of everything: writing, music, art. That failure builds resistance in humans and teaches us what we need to know to survive in this dumpster fire of a world.

Play with art. Get your hands dirty. Mess up, make it again. If it's not perfect right out the gate? Fuck it, it will be better next time. Learn, fail, then learn better. Trust me, the sense of accomplishment you will have at the end is worth it.

Feel free to reply to this post on my guestbook, or you can drop me a line via email!

2026-03-02 08:10:00

I really wish you would stop talking about my evil job.

A job should not define me.

Even if I am doing evil things, I am only doing that for eight hours a day. And sometimes overtime.

I'm not even that responsible for most of the evil things we're doing.

Most of that is a completely different department.

And when I clock in at my evil job, know that I'm against the evil parts.

What do you expect me to do anyway, quit my evil job?