2026-03-03 22:03:26

Customs and Border Protection (CBP) bought data from the online advertising ecosystem to track people’s precise movements over time, in a process that often involves siphoning data from ordinary apps like video games, dating services, and fitness trackers, according to an internal Department of Homeland Security (DHS) document obtained by 404 Media.

The document shows in stark terms the power, and potential risk, of online advertising data and how it can be leveraged by government agencies for surveillance purposes. The news comes after Immigration and Customs Enforcement (ICE) purchased similar tools that can monitor the movements of phones in entire neighborhoods. ICE also recently said in public procurement documents it was interested in sourcing more “Ad Tech” data for its investigations. Following 404 Media’s revelation of that ICE purchase, on Tuesday a group of around 70 lawmakers urged the DHS oversight body to conduct a new investigation into ICE’s location data buying.

This sort of information is a “goldmine for tracking where every person is and what they read, watch, and listen to,” Johnny Ryan, director of the Irish Council for Civil Liberties (ICCL) Enforce, which has closely followed the sale of advertising data, told 404 Media in an email.

2026-03-03 01:26:08

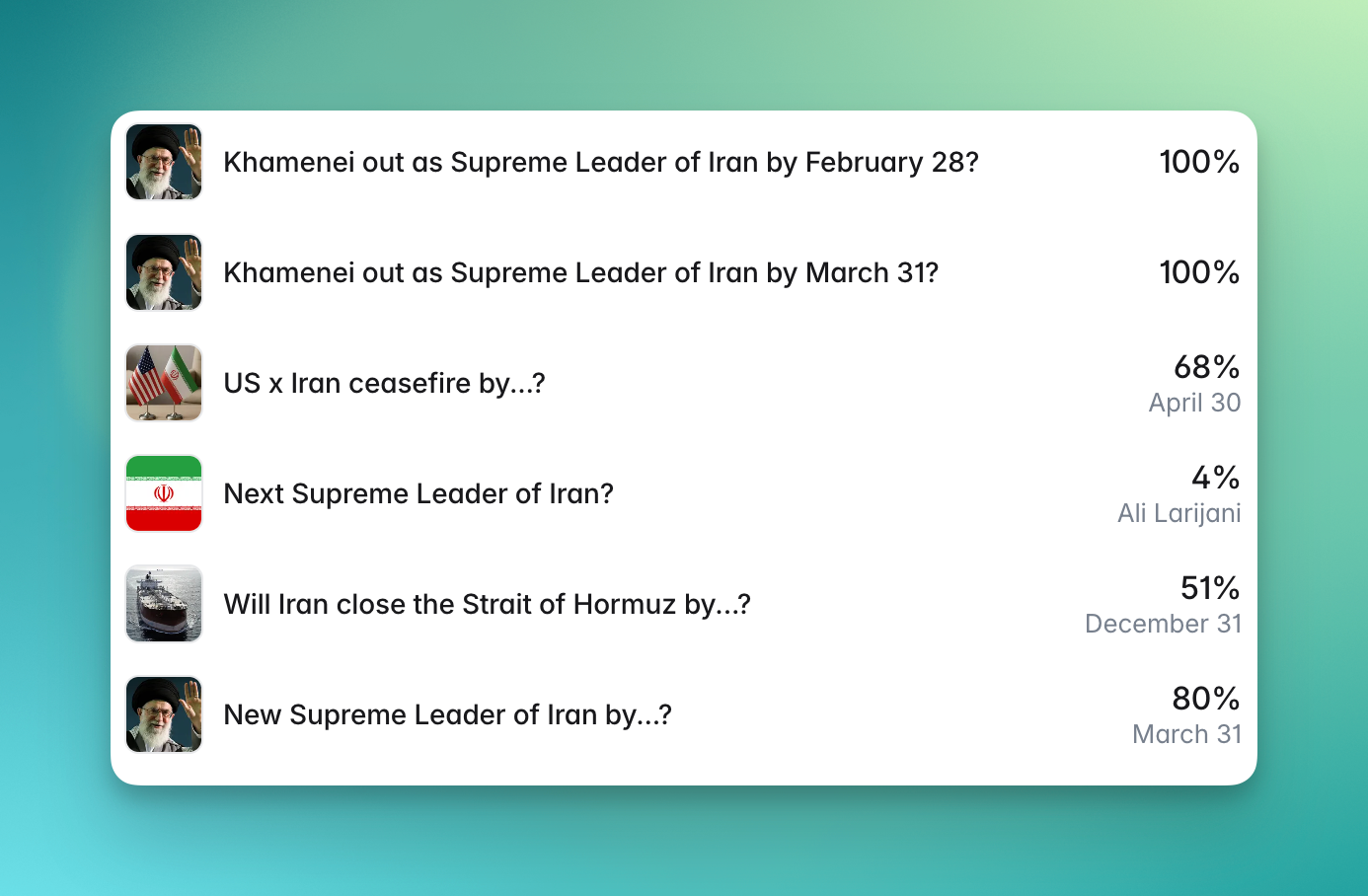

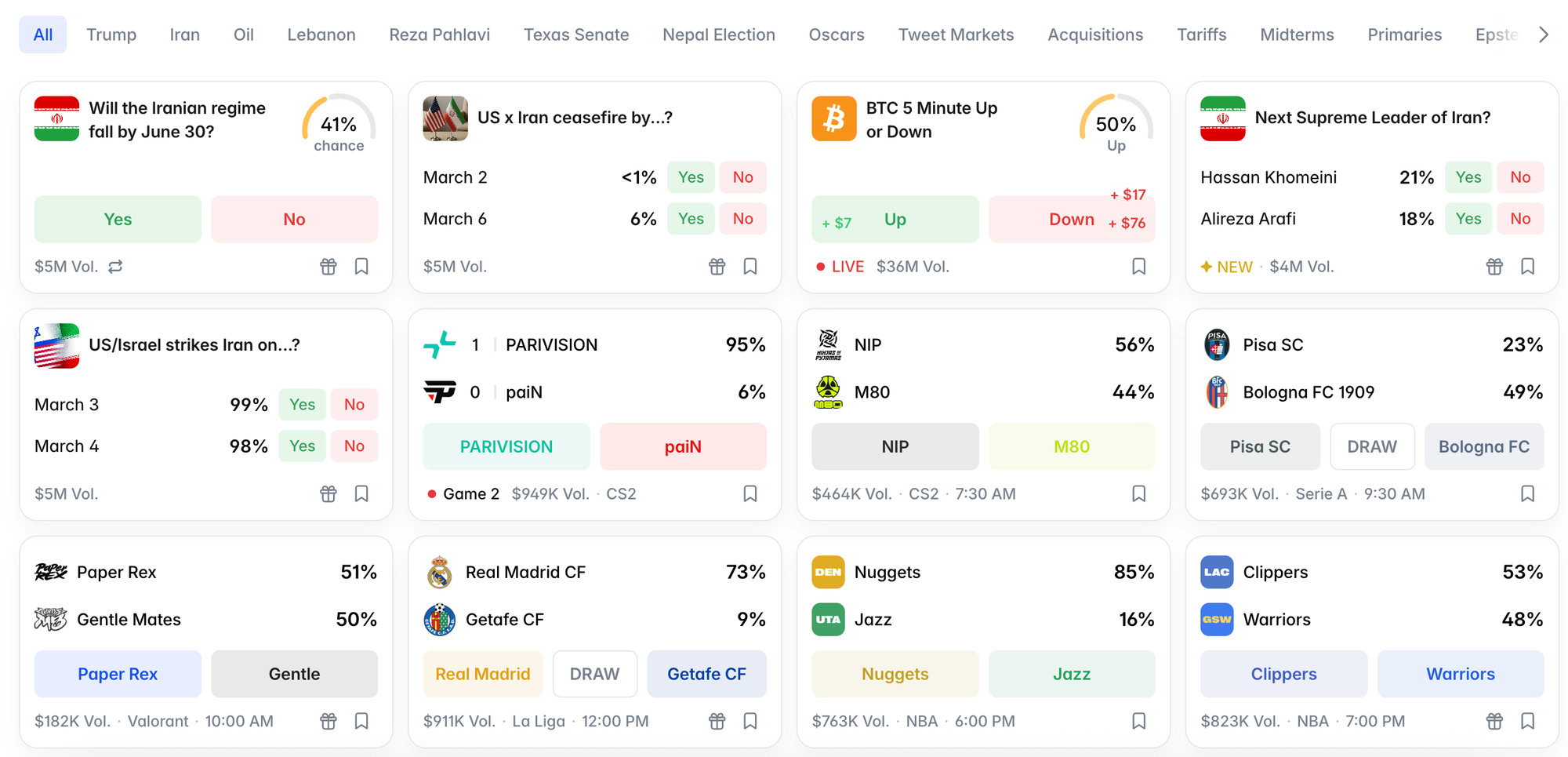

The main bet on the front page of Polymarket right now is “Will the Iranian regime fall by June 30?” The site has this at a 41 percent chance of happening as I write this.

On Polymarket, more than $5 million has been spent gambling on this question. On Kalshi, a competing prediction market where users can bet on almost anything, $54 million was spent on “Ali Khamenei out as Supreme Leader?,” a bet whose results somehow ended up ambiguous even after Khamenei’s assassination.

In a series of tweets over the weekend, Kalshi’s CEO and founder Tarek Mansour repeatedly twisted himself into pretzels attempting to explain how the absurd, grotesque exercise of allowing people to bet on politics, geopolitics, and world events is not supposed to allow people to profit from death.

“We don’t list markets directly tied to death. When there are markets where potential outcomes involve death, we design the rules to prevent people from profiting from death,” he wrote. He then posted the underlying rules of the bet, which read “If <leader> leaves solely because they have died, the associated market will resolve and the Exchange will determine the payouts to the holders of long and short positions based upon the last traded price (prior to the death).”

That we are discussing the ins and outs of which random gamblers get paid out during an illegal war in which hundreds of schoolchildren have already been bombed to death feels like the type of grotesque sideshow that is only possible because the U.S. government is only interested in regulating its perceived political enemies, and because much of the American economy feels held together by cope and the gobs of money being thrown into AI, data centers, and gambling. All of this is part of the perverse Silicon Valley, AI, crypto, and X-adjacent hustlebro gambling economy. That economy was legalized by companies like DraftKings and FanDuel, which spent eye-watering sums lobbying states to allow their gambling apps, and has been “legitimized” by sports leagues that wanted to print money and media companies desperate for the advertising dollars that came from gambling. Together they have turned this all into a massive industrial complex that is not-so-slowly bankrupting a generation of underemployed people addicted to gambling. Polymarket and Kalshi took the DraftKings and FanDuel model and let people bet on basically anything, so now you can bet on which countries Iran will launch missiles against on the same app you bet on the Nuggets/Jazz game or the winner of the Best Picture Academy Award. The new model is so good at parting people from their money that DraftKings and FanDuel themselves have been anxious to get into prediction markets.

2026-03-02 23:59:51

Amazon’s cloud services are down in some of the Middle East after “objects” hit data centers in the United Arab Emirates (UAE) causing “sparks and fire.” Around 60 services tied to AWS are down in the region, affecting web traffic in the UAE and Bahrain. The outage comes following Iranian attacks on the UAE as retaliation for US and Israeli strikes on Iran.

Customers in Bahrain and the UAE began to report outages tied to the mec1-az2 and mec1-az3 clusters in AWS’ ME-CENTRAL-1 Region on March 1 after Iranian ballistic missiles and drones struck targets in and around Dubai. Amazon did not confirm that AWS was down in the Middle East due to an Iranian attack and instead referred 404 Media to its online dashboard.

“At around 4:30 AM PST, one of our Availability Zones (mec1-az2) was impacted by objects that struck the data center, creating sparks and fire,” AWS said on its health dashboard. “The fire department shut off power to the facility and generators as they worked to put out the fire. We are still awaiting permission to turn the power back on, and once we have, we will ensure we restore power and connectivity safely. It will take several hours to restore connectivity to the impacted AZ.”

As of this morning at 9:22 AM ET, the damage had spread. “We are expecting recovery to take at least a day, as it requires repair of facilities, cooling and power systems, coordination with local authorities, and careful assessment to ensure the safety of our operators,” AWS said. “We recommend customers enact their disaster recovery plans and recover from remote backups into alternate AWS Regions, ideally in Europe.”

Amazon later shared more information about the attack and confirmed it was the result of drones. “Due to the ongoing conflict in the Middle East, both affected regions have experienced physical impacts to infrastructure as a result of drone strikes. In the UAE, two of our facilities were directly struck, while in Bahrain, a drone strike in close proximity to one of our facilities caused physical impacts to our infrastructure,” it said. “These strikes have caused structural damage, disrupted power delivery to our infrastructure, and in some cases required fire suppression activities that resulted in additional water damage. We are working closely with local authorities and prioritizing the safety of our personnel throughout our recovery efforts.”

On Saturday, the United States and Israel launched Operation Epic Fury and struck targets inside of Iran, killing several political and military leaders including Ayatollah Ali Khamenei, the country’s Supreme Leader. In retaliation, Iran launched drone and missile attacks against Israel and multiple US-allied targets in the Middle East.

According to the Emirati defense forces, Iran attacked the country with two cruise missiles, 165 ballistic missiles, and more than 540 drones. The UAE and its largest city, Dubai, are often seen as a safe and stable destination in the Middle East. The country hosts wealthy people from across the region and influencers from across the world. Footage shared on social media showed the neon towers of the UAE backlit by missiles and munitions.

It’s unclear how long it will take for Amazon to restore services to the region or how far the damage will spread. Amazon’s dashboard is promising to bring things back up in “at least a day” but the war is far from over. Iran continues to strike targets in the Middle East and it’s unclear what America’s plan of attack is or how long this war might grind on.

Update 3/2/26: This story has been updated with more specifics about the attack from Amazon.

2026-03-02 21:00:57

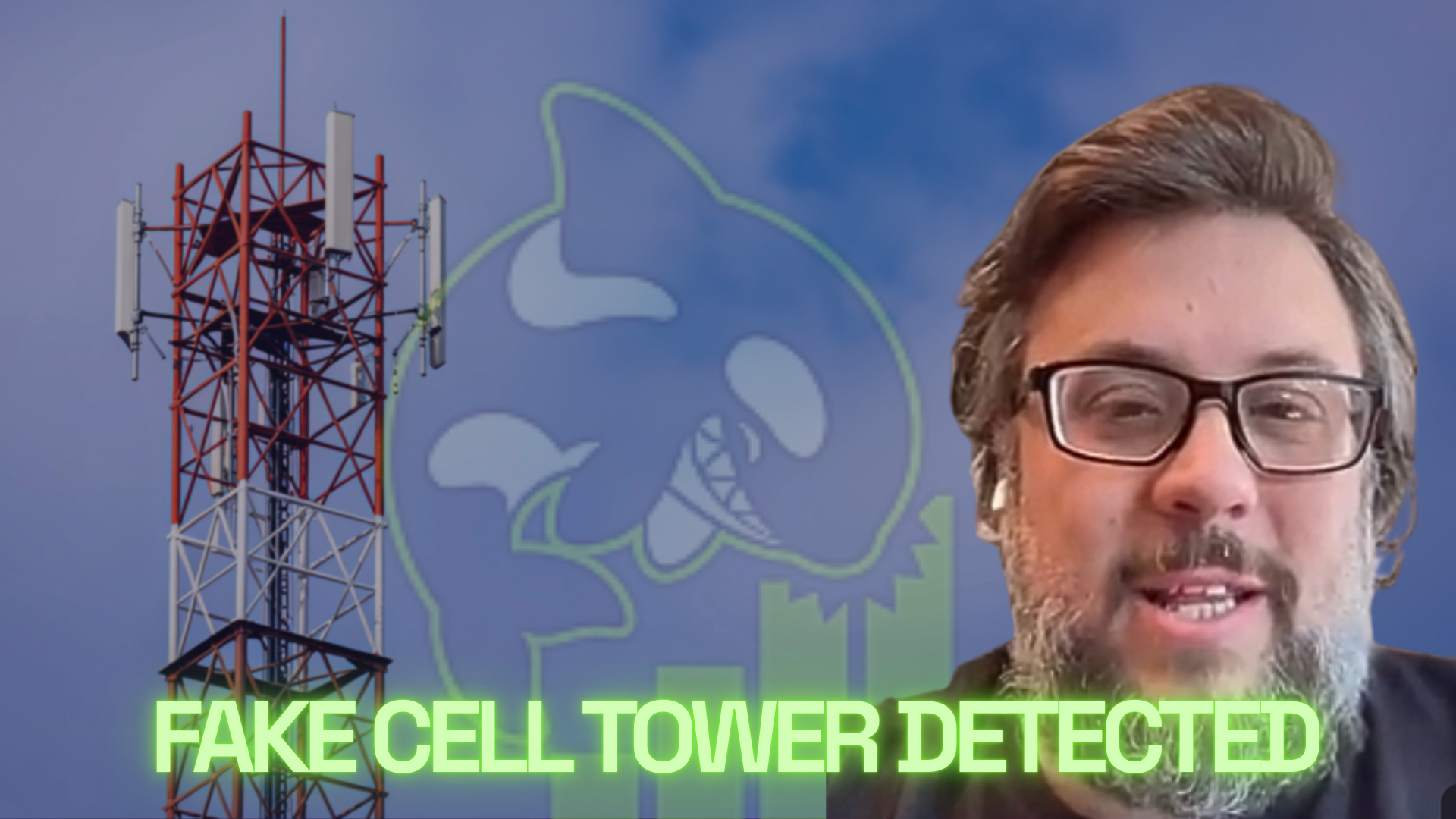

Joseph speaks to Cooper Quintin, a security researcher and senior public interest technologist with the Electronic Frontier Foundation (EFF). Quintin is one of the people behind Rayhunter, an easy-to-install tool that can detect nearby IMSI-catchers. This tech, sometimes known as Stingrays, poses as a cellphone tower to track a phone’s location and intercept calls and texts, and can sometimes even deliver malware.

Listen to the weekly podcast on Apple Podcasts, Spotify, or YouTube. Become a paid subscriber for access to this episode's bonus content and to power our journalism. If you become a paid subscriber, check your inbox for an email from our podcast host Transistor for a link to the subscribers-only version! You can also add that subscribers feed to your podcast app of choice and never miss an episode that way. The email should also contain the subscribers-only unlisted YouTube link for the extended video version. It will also be in the show notes in your podcast player.

2026-03-01 04:48:27

Welcome back to the Abstract! Here are the studies this week that exposed prehistoric hookups, marched toward death, feasted on their own bodies, and found a buried legend in the Sahara.

First, Neanderthal males had lots more babies with human females than human males had with Neanderthal females. What’s up with that?! Then, strap in for a stellar swan song, antlers for breakfast, and a timeless style icon from the Cretaceous.

As always, for more of my work, check out my book First Contact: The Story of Our Obsession with Aliens or subscribe to my personal newsletter the BeX Files.

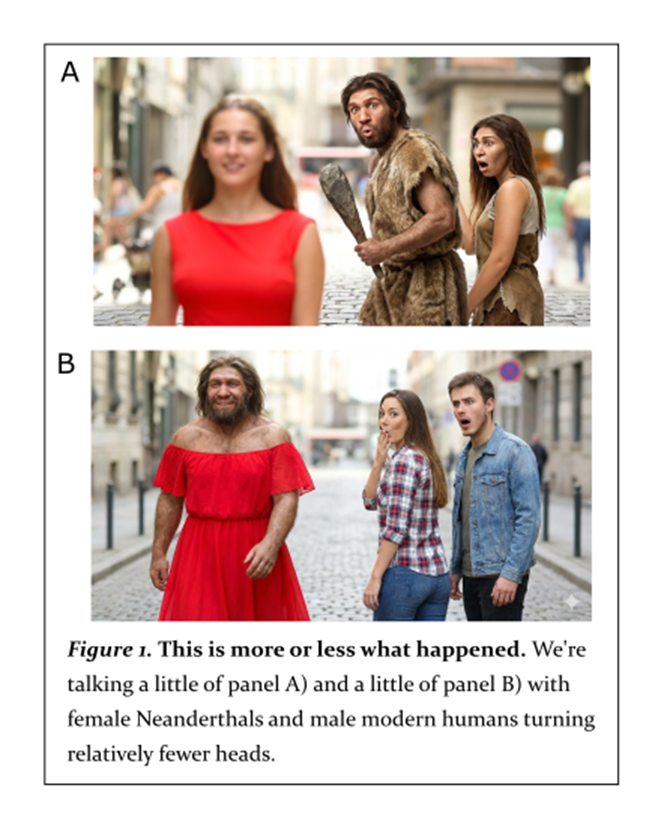

Humans and our close relatives, Neanderthals, produced children together many times before the latter went extinct about 40,000 years ago. As a result, the vast majority of people living today carry a pinch of Neanderthal DNA—the enduring proof of past copulations between our species.

Now, scientists have proposed that these prehistoric partnerships overwhelmingly occurred between Neanderthal males and females of our own species, Homo sapiens, with far fewer couplings between Neanderthal females and human males. This strong sexual bias provides the most “parsimonious” explanation for the uneven distribution of Neanderthal alleles (variants of specific genes) in modern human genomes, according to a new study.

“One of the notable features evident in alignments of Neanderthal genomes to those of modern humans is the presence of ‘Neanderthal deserts’ within modern human genomes: genomic regions where Neanderthal alleles are conspicuously rare in the modern human (and ancient modern human) gene pool,” said researchers led by Alexander Platt of the University of Pennsylvania.

In particular, the team noted that Neanderthal deserts show up on the human X chromosome, which they think hints at a strong sex bias toward breeding between Neanderthal males and human females.

The team compared Neanderthal genomes with genetic data from some sub-Saharan African populations that have no Neanderthal ancestry. This approach allowed them to track ancient gene flow from anatomically modern humans (AMHs)—in other words, our ancient Homo sapiens ancestors—into Neanderthal populations.

The results revealed that the Neanderthal X chromosomes had a 62 percent relative excess of DNA from AMHs. In other words, not only are there Neanderthal deserts on human X chromosomes, there are corollary “floods” or “oases” (whatever metaphor you like) of human DNA on Neanderthal X chromosomes.

This discovery is strong evidence that humans were contributing more alleles to the Neanderthal X chromosome, and Neanderthals were contributing less to the human X chromosome, due to an unexplained asymmetry in mate preference.

Overall, the genetic patterns the team observed “were likely colored by a persistent preference for pairings between males of predominantly Neanderthal ancestry and females of predominantly AMH ancestry over the reverse,” the researchers concluded. “The bias that we inferred seems to have remained consistent across admixture events separated by 200,000 years.”

Men prefer blondes; women prefer Neanderthals? I don’t know. This is just wildly interesting.

In other news…

We’ve all been there: One day, you’re an extreme red supergiant, and the next, you’re a yellow hypergiant. A new study reports that WOH G64, one of the biggest known stars in the sky, went through this “dramatic transition” sometime in 2014 (or at least, that’s when astronomers first captured this spectral shift in the star, which is located about 163,000 light years from Earth).

If the Sun were as big as WOH G64, it would stretch to the orbit of Saturn. This late-stage stellar titan offers an ultra-rare opportunity to see how red supergiants (RSGs) end their lives, a process that is shrouded in mystery—often literally, as these stars tend to be obscured by a lot of circumstellar gas.

“The apparent lack of luminous RSGs detected as supernova progenitors has sparked an ongoing debate over the fate of these stars,” said researchers led by Gonzalo Muñoz-Sanchez of the National Observatory of Athens. “WOH G64 thus provides critical insight into post-RSG evolution and the formation of dense circumstellar environments seen in core-collapse supernovae.”

It could be that WOH G64 does detonate. In fact, this may have already happened, but the light show hasn’t reached us yet. It may also collapse directly into a black hole with no supernova to show for it. We’ll just have to keep watching this space! This has been Big Star News.

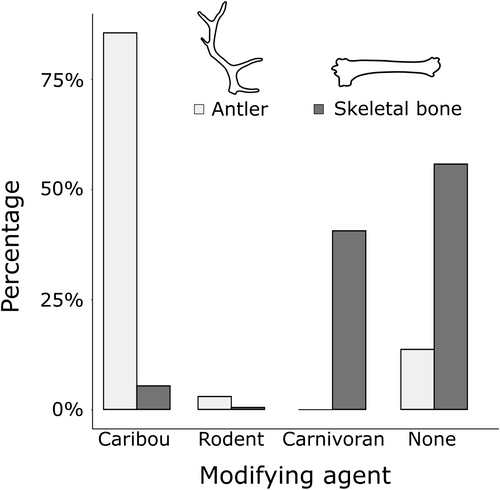

Antlers in deer are usually a male ornamentation that allows females to judge potential mates based on the quality of their head-bling. Caribou females, however, buck this trend as the only female deer with antlers. So, as a folktale might ask: How did the caribou get her antlers?

One answer is that antlers make a great post-partum snack, according to a new study. In migratory populations, female caribou shed their antlers when they reach calving grounds, usually just days before they give birth, which may give nursing mothers a much-needed nutrient boost.

“Pervasive antler consumption by caribou suggests that synchroneity between birthing and antler shedding evinces the importance of nutrient (calcium, phosphorus) transport for supporting calf survival,” said researchers led by Madison Gaetano of the University of Cincinnati. “Though intriguing, additional research will be important to more explicitly evaluate the dietary and fitness benefits (for both females and their calves) of antler-derived nutrients.”

Given that caribou also eat their placentas, it’s really impressive how these new mothers nourish themselves and their young with the fruits of their own bodies. Hardcore. Respect.

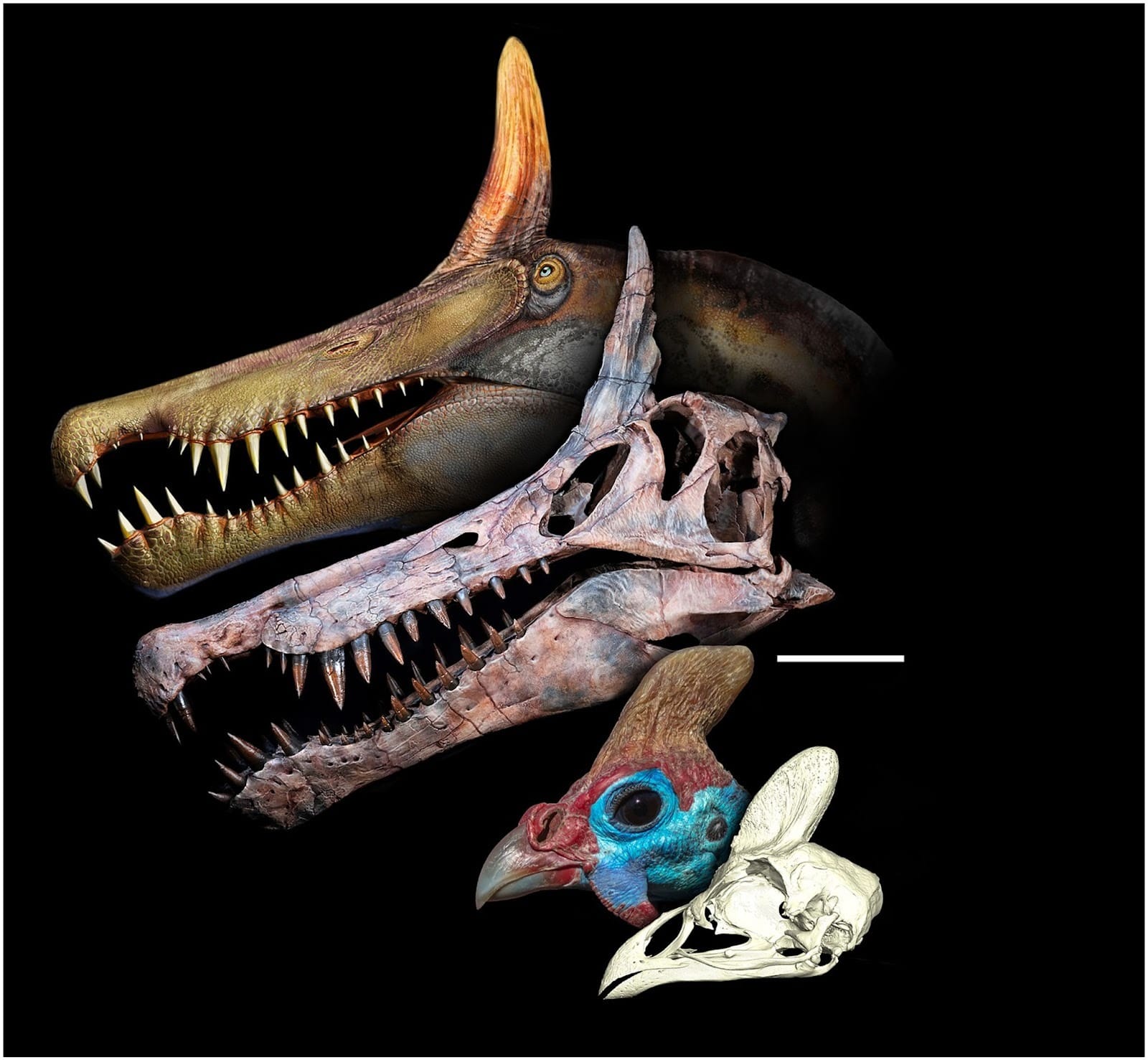

Speaking of animals with rad headgear, we’ll close with a shoutout to Spinosaurus mirabilis, a newly discovered species of giant carnivorous dinosaur that rocked an epic scimitar-shaped skull crest. Move over, rock band T. Rex—this killer is the new wave of dinosaurian glam.

“Spinosaurus mirabilis…discovered in the central Sahara alongside long-necked dinosaurs in a riparian habitat, is distinguished by a scimitar-shaped bony crest projecting far above its skull roof,” said researchers led by Paul C. Sereno of the University of Chicago.

Spinosaurus stock has gone through the roof in recent decades as new finds have confirmed that they were the biggest land predators of all time, dethroning T. rex from a tyrant king to a mere tyrant vassal. As the ultimate charismatic megafauna, spinosaurs are popular in dino-blockbusters. Indeed, one of my favorite gags in cinematic history is when a Spinosaurus swallows a satellite phone in Jurassic Park III, so you know it’s lurking when you hear the Nokia ring tone. Pure dinosaurian comedic gold.

In any case, the new study sheds new light on the semi-aquatic nature of this majestic hunter, suggesting that this particular species was “a wading, shoreline predator with visual display an important aspect of its biology.” While this animal was no doubt visually captivating, it’s best to view it from a safe distance of about 94 million years.

Thanks for reading! See you next week.

2026-02-28 00:27:13

This is Behind the Blog, where we share our behind-the-scenes thoughts about how a few of our top stories of the week came together. This week, we discuss wishes made, god complexes, and the point of it all.

SAM: This week I wrote about Amazon’s changing policy for wishlists. It’s allowing gifters to choose third-party sellers for items, which could expose recipients’ delivery addresses to the gifter. The notice Amazon sent wishlist holders is a basic example of CYA messaging: Amazon can’t guarantee what a third-party seller will do with your address once they have it, including giving it to a gifter for tracking purposes.

Sex workers first flagged this change on social media because many use wishlists as an easy way to accept gifts, tributes, tips, etc., instead of or in addition to actual funds. This is important because payment processors are wildly hostile and actively discriminatory toward the adult industry, and having alternative ways to get paid is crucial if you’re debanked or banned from the usual payment processors. I think most use it in a supplementary fashion, though.